
By Bajian and Bufeng, from TaoXi Technology Department

Alibaba's virtual venue is one of the most important elements of the annual Double 11 shopping festival, so its user experience is naturally a major focus for our teams every year. As business requirements grow more complex each year, the teams within Alibaba need to keep the user experience from degrading while further optimizing the systems.

This year, we adopted Server Side Rendering (SSR) for the virtual venue without changing the existing architecture or underlying business logic. With SSR, we raised the "one-second opening rate" to a record 82.6%. Besides the better user experience, business indicators such as the UV click-through rate (CTR) also increased slightly, by up to 5% depending on the business scenario, adding more business value for the team.

This article introduces our solutions and experience in adopting SSR from both the server-side and front-end perspectives, as outlined in the following figure:

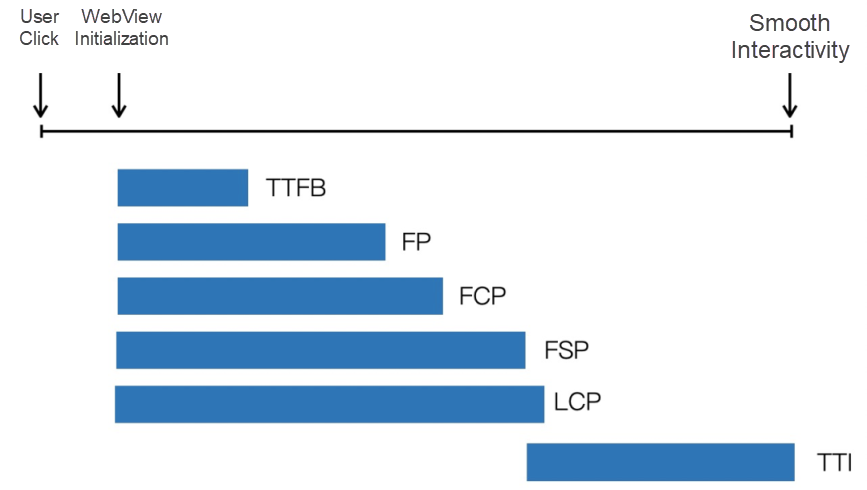

Before introducing our experience and solutions, we would like to introduce the relevant evaluation metrics. Many years ago, Yahoo's YSlow defined a fairly complete set of evaluation metrics for web user experience. Several years later, Google released Lighthouse as a new experience evaluation tool. Although these tools come from different companies, the standards for experience evaluation have clearly converged and become increasingly widely recognized.

Based on web.dev and other references, we defined our own simplified evaluation system as follows:

• TTFB (Time to First Byte): the time from clicking the link to receiving the first byte.

• FP (First Paint): the time when the user first sees any pixel content.

• FCP (First Contentful Paint): the time when the user sees the first valid content.

• FSP (First Screen Paint): the time when the user sees the content of the first screen.

• LCP (Largest Contentful Paint): the time when the user sees the largest content element.

• TTI (Time to Interactive): the time when the page becomes interactive, that is, able to respond to user events.

In general, FSP is approximately equal to FCP or LCP.

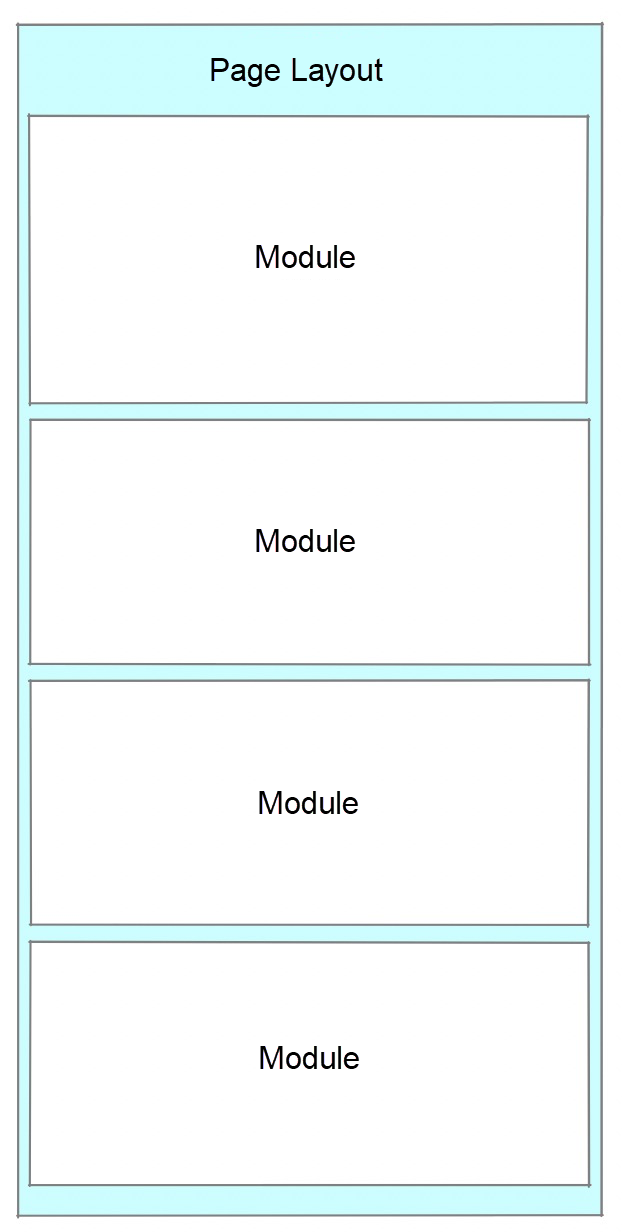

Our virtual venue pages are generated by a low-code page construction platform. Each venue page generated by the platform consists of two parts: the page layout and the floor modules.

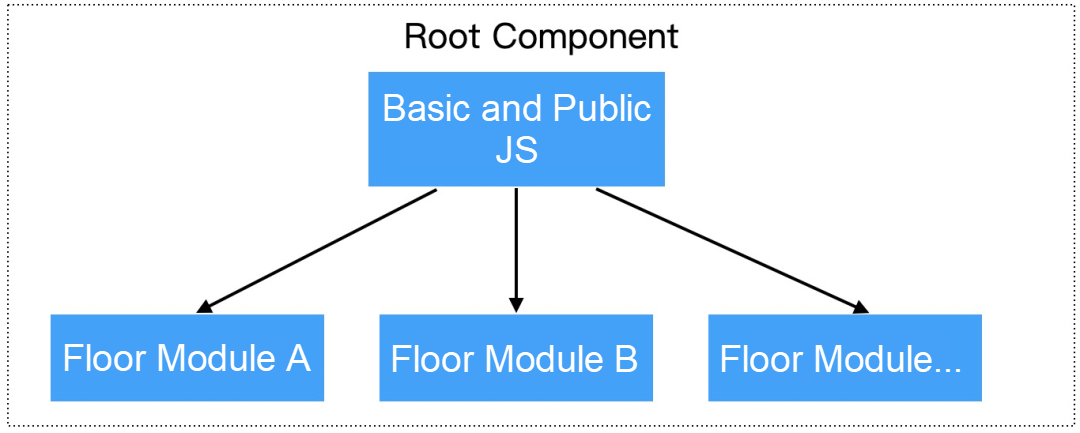

The page layout separately generates the layout HTML of the page and the basic and public asset resources, such as JS and CSS. Each floor module also separately generates its own JS, CSS, and other asset resources, along with version information about the basic and public JS it depends on.

The page layout has complex tasks: it lays out the page, loads specific floor modules according to the page data, and organizes the split-screen rendering of modules.

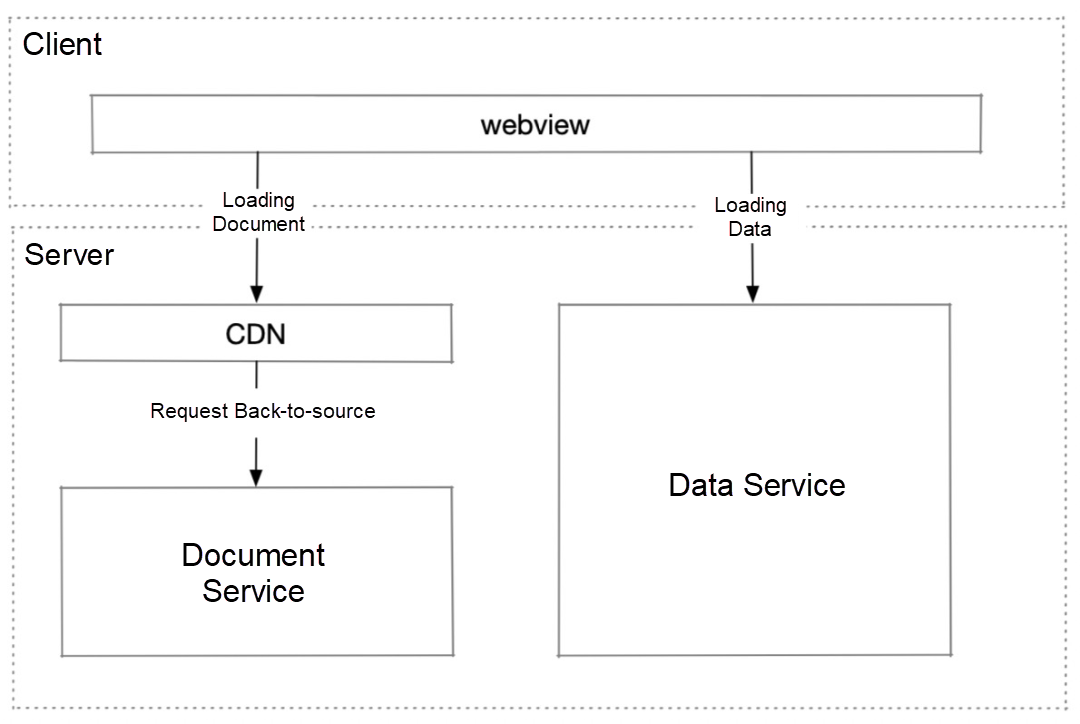

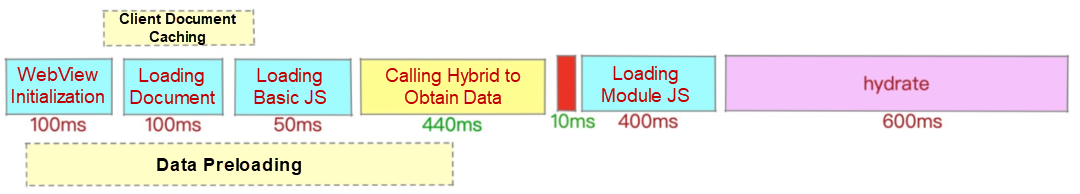

The original rendering architecture, based on Client Side Rendering (CSR), can be divided into three parts. The original CSR-based rendering process is shown in the following figure:

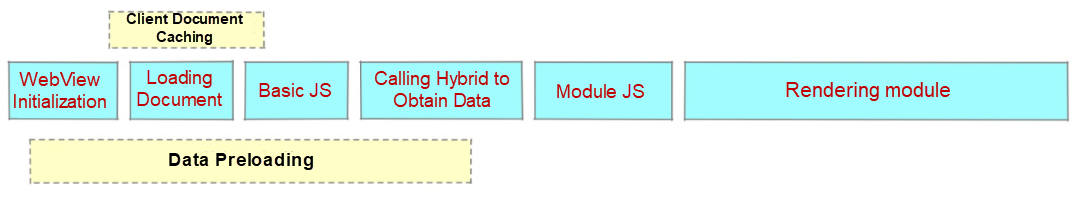

Apart from commonly used basic frontend optimization methods, we have also developed many venue optimization solutions based on the capabilities of the client. Among them, two typical solutions are:

1. Caching of main documents and assets on the client

On the client side, we use the static resource caching capability provided by the client. Resources such as HTML and JS are pushed to the user's client in advance and cached there. When the client WebView requests a resource, the client matches the request against the delivered cache packages according to predefined rules. On a match, the client reads the HTML and JS directly from the local cache instead of requesting them over the network each time, which greatly reduces the page initialization time.

2. Data preloading

After the user clicks the link, the page goes through several transitional stages before it starts to load data: client-side animation, WebView initialization, the HTML request for the main document, and the loading and execution of JS. Together, these stages take hundreds of milliseconds. With the data preloading capability provided by the client, however, the native layer can start loading the page data immediately after the click. Once the JS executes, it obtains the preloaded data from native directly through a jsbridge API call.
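The preloading idea can be sketched as follows. This is a minimal TypeScript illustration, not the client's real jsbridge API: `NativePreloader`, `onLinkClick`, and `getPreloadedData` are hypothetical names, and the injected fetcher stands in for the actual data request. The native side starts the request at click time and keeps the in-flight promise, so when the page JS finally runs, the data is either ready or already partly downloaded.

```typescript
// Hypothetical fetcher: stands in for the real page-data request.
type Fetcher<T> = (url: string) => Promise<T>;

// Hypothetical model of the client's data preloading capability.
class NativePreloader<T> {
  private readonly inflight = new Map<string, Promise<T>>();
  private readonly fetchPageData: Fetcher<T>;

  constructor(fetchPageData: Fetcher<T>) {
    this.fetchPageData = fetchPageData;
  }

  // Called by native code right after the click, before WebView init and JS loading.
  onLinkClick(dataUrl: string): void {
    if (!this.inflight.has(dataUrl)) {
      this.inflight.set(dataUrl, this.fetchPageData(dataUrl));
    }
  }

  // Called by page JS (through a jsbridge call in the real app). A preloaded
  // result is consumed once; on a miss, fall back to a normal network request.
  getPreloadedData(dataUrl: string): Promise<T> {
    const pending = this.inflight.get(dataUrl);
    if (pending) {
      this.inflight.delete(dataUrl);
      return pending;
    }
    return this.fetchPageData(dataUrl);
  }
}
```

Keeping the promise rather than the resolved value means the page benefits even when it asks for the data before the download has finished.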

Through these optimizations, the venue experience has been greatly improved.

However, these CSR-based optimizations have encountered some bottlenecks over time:

• In complex online network environments, such as low network speed and poor Wi-Fi, the page experience on medium- and low-end Android devices is still unsatisfactory. This is a major concern for the team: on these devices, loading and executing modules takes relatively long, and the number of users on such devices keeps increasing.

• Launching the Taobao or Tmall app externally is a critical way to attract new users. However, an externally launched app needs time to initialize functional components such as the network library, so the page experience cannot match that of opening the venue from within the app.

• The virtual venue serves a marketing campaign, so the page has a relatively low re-access rate, and its content is highly personalized. User-side caching methods, such as offline HTML snapshots, may cause repeated rendering (slower opening) and page element shifting (rendering flicker and reflow) when the cached data expires, which harms the user experience.

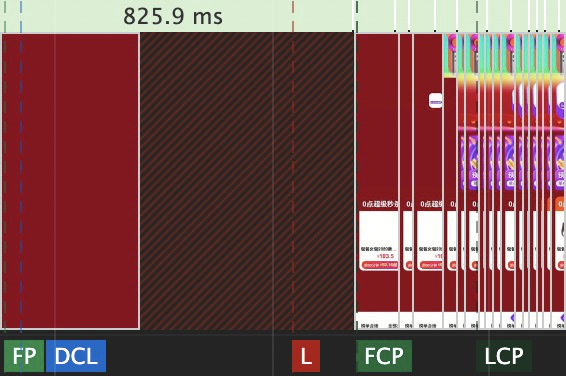

Is there an ideal optimization method? We analyzed the performance of a 2020 Double 11 venue page offline with Chrome DevTools on PC; the result is shown in the screenshot.

The screenshot above shows that the transition from FP to FCP takes a long time, during which only the background color is displayed. The time between FCP and LCP is spent loading images; there is little room to optimize it, and it is hard to evaluate.

Compared with this offline analysis, rendering problems online are even more severe because of more complex network environments and more diverse phone models. For example, the "background color only" stage has a great impact on the user experience and can more easily cause user attrition.

Our CSR rendering system relies on front-end and client-side capabilities, and its working mechanism leaves little room for further improvement. So how can we further improve the experience of the venue page? This is where SSR comes in: it allows us to shorten the time from FP to FCP.

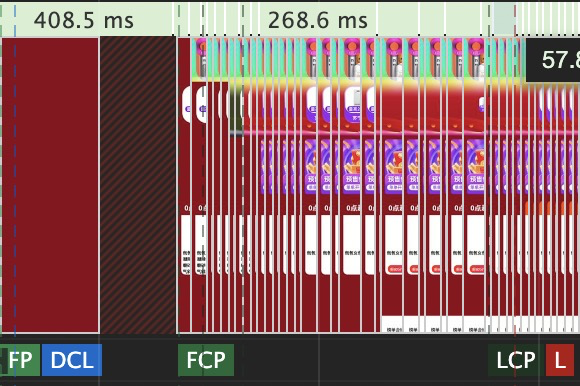

The figure above shows the offline test result of SSR. The time from FP to FCP is reduced from 825 ms to 408 ms.

SSR is short for Server Side Rendering. The "server side" can be located anywhere, for example, at a CDN edge node, in a cloud data center, or even on your local router.

Implementing an SSR demo is easy. The simplified process is as follows:

First, construct a Rax-based server renderer. Then, call its renderToString method on the Rax component. That's it: the SSR demo is finished.

There are already many practical cases in the industry. Like "putting an elephant into the fridge" (open the door, put the elephant in, close the door), implementing an SSR demo looks simple. Meeting the stability requirements of complex scenarios during Double 11, however, requires a sound and practical execution plan.

But how can SSR be used within our existing modular and mature rendering architecture? At first, we tried the conventional method: returning the server-rendered HTML directly in the HTML response of the document. Unfortunately, this approach has several problems:

• The reconstruction cost is high, and the existing server architecture would need to change significantly. This can break CDN caching and place higher demands on the document service.

• Existing performance optimization capabilities of the client, such as the caching of main documents and assets on the client and data preloading, cannot be reused. This delays the time to full interactivity.

• The CDN cache cannot be used, and TTFB increases, introducing a new "full white screen" stage.

• Many factors affect the stability of SSR. Automatic degradation to CSR is complicated, and there is no guarantee that every failure degrades successfully.

• The main document HTML has weak security protection, making it difficult to resist malicious crawling.

In view of these problems, we considered whether other solutions could achieve SSR with less risk and lower cost. After a short but heated discussion, we designed the "Data SSR" architecture, which is introduced below.

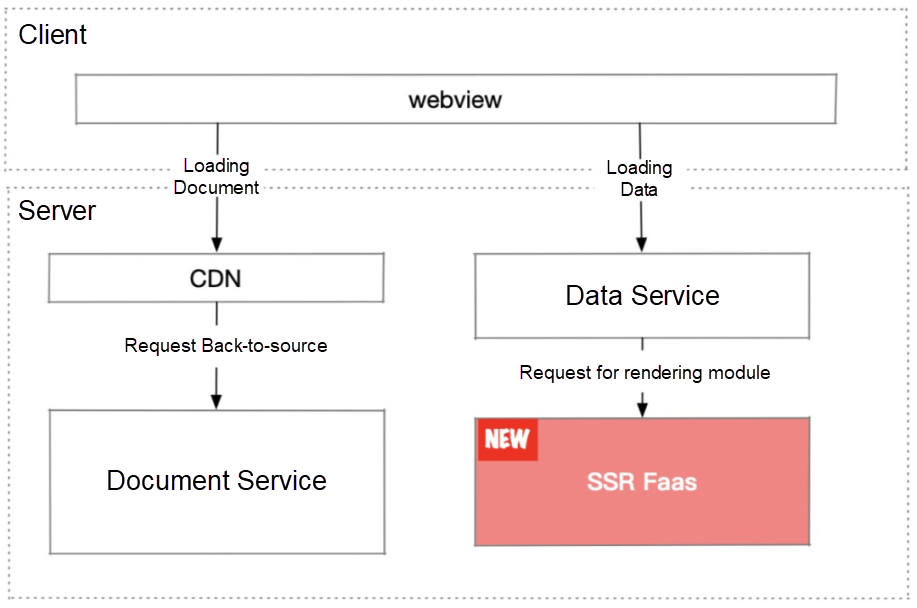

The Data SSR rendering architecture works as follows. The content returned by the document service remains static. The data service calls an independent SSR FaaS function; because the data contains the page's module list and the data required by each module, the SSR FaaS function can dynamically load the module code and render the HTML directly from it.

This solution effectively combines the capabilities of the frontend, client, and server in client scenarios.
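To make the contract concrete, here is a minimal TypeScript sketch of what the page does with the data response. The response shape (`modules`, `moduleData`, `ssrHtml`) is hypothetical; the point it illustrates is that the SSR HTML travels inside the data response, so the page can take the SSR branch when HTML is present and fall back to CSR when it is not.

```typescript
// Hypothetical shape of the data service response in the Data SSR design.
interface VenueDataResponse {
  modules: string[]; // floor modules on the page, in display order
  moduleData: Record<string, unknown>; // data required by each module
  ssrHtml?: string; // present only if the SSR FaaS call succeeded in time
}

type RenderBranch =
  | { kind: "ssr"; html: string }
  | { kind: "csr"; modules: string[] };

// Use the server-rendered HTML when present; otherwise render the module list on the client.
function chooseBranch(resp: VenueDataResponse): RenderBranch {
  if (typeof resp.ssrHtml === "string" && resp.ssrHtml.length > 0) {
    return { kind: "ssr", html: resp.ssrHtml };
  }
  return { kind: "csr", modules: resp.modules };
}
```

Making `ssrHtml` optional keeps degradation down to a single presence check on the client.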

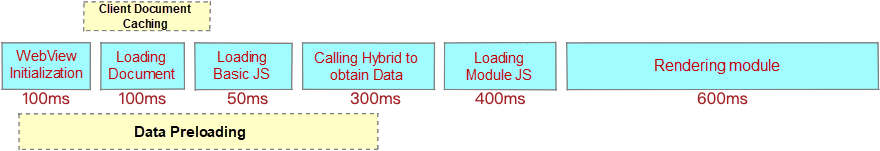

You may wonder why this solution improves performance, since it just moves the browser's work to the server. Here is an example to explain why it works. For normal CSR rendering, the time consumed in each stage is as follows, with an FSP of 1,500 ms.

Note: The data is for qualitative analysis only and does not represent actual values.

For SSR rendering, the time consumed before "Loading basic js" is the same as for CSR rendering. SSR greatly reduces the time after that point for the following reasons:

• Loading module files on the server is much faster than on the client, and the server-side cache of module resources is shared by all requests. Once one request has loaded a module, all subsequent requests use the cached copy directly.

• The performance of the server is much higher than that of mobile phones, so it takes less time to render the module on the server. According to the actual time consumption statistics online, the simple rendering at the server takes an average of about 40 ms.

Because the HTML is included in the data response, the response typically grows by about 10 KB after gzip, which increases the network response time by 30 ms to 100 ms. The final SSR rendering process and time consumption are shown in the figure below: the FSP of SSR rendering is 660 ms, 840 ms shorter than that of CSR rendering.

In short, the core idea of the "Data SSR" solution is to transfer the computing of the first screen content to the server with stronger computing power.

With the main idea settled, let's look at the core concerns of applying SSR in production, shown in the following figure.

Don't worry: we will go through their solutions one by one.

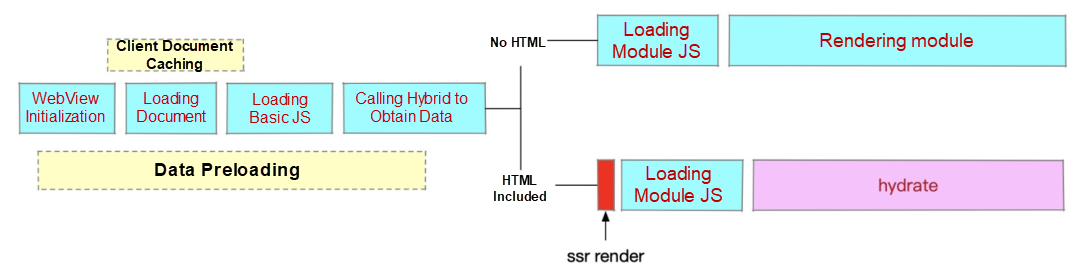

Our page rendering solution has two branches: if the response of the data interface contains HTML, the page displays it directly (SSR); otherwise, it falls back to CSR.

The advantages are obvious:

• It is low-risk and can seamlessly degrade to CSR. The page only needs to check whether HTML was successfully returned in the response of the data interface. By setting a reasonable timeout on the server (for example, 80 ms), a failed or timed-out SSR request (no HTML returned) does not affect the user experience.

• SSR rendering can use mature client-side performance optimization capabilities, such as client caching and data preloading. With client caching, the page's white-screen time is the same as with the original CSR rendering. With data preloading, the data service can be requested before the page is loaded.
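The server-side half of this degradation can be sketched as a race between the SSR call and a timer. This is a minimal sketch under stated assumptions: `renderWithBudget` is a hypothetical helper, the `render` argument stands in for the SSR FaaS invocation, and 80 ms is the example budget given above. On timeout or error the data service simply omits the HTML, and the client renders via CSR.

```typescript
// Resolve with the SSR HTML, or with undefined if rendering fails or exceeds the budget.
// The data response is then sent with or without HTML; the client degrades to CSR when it is absent.
async function renderWithBudget(
  render: () => Promise<string>,
  timeoutMs: number,
): Promise<string | undefined> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<undefined>((resolve) => {
    timer = setTimeout(() => resolve(undefined), timeoutMs);
  });
  try {
    // Whichever settles first wins; a rejected render counts as "no HTML".
    return await Promise.race([render().catch(() => undefined), timeout]);
  } finally {
    if (timer !== undefined) clearTimeout(timer);
  }
}
```

Treating a failed render exactly like a timeout keeps the client-side logic to one question: is there HTML in the response?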

When serving online, traffic can be split into hash buckets to roll out the SSR solution gradually. This ensures stability and also makes it convenient to evaluate the business effect.

We use DJBHash, a hash function that converts strings to numbers. Verification shows that it divides traffic into buckets fairly stably.
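A minimal sketch of DJBHash (the classic djb2 string hash: start at 5381, then multiply by 33 and add each character code) and of bucket-based rollout. Which string is hashed is not stated above; a user ID is assumed here, and the function names and the 10-bucket default are illustrative.

```typescript
// djb2: hash = hash * 33 + charCode, starting from 5381, kept as an unsigned 32-bit integer.
function djbHash(s: string): number {
  let hash = 5381;
  for (let i = 0; i < s.length; i++) {
    hash = (hash * 33 + s.charCodeAt(i)) >>> 0;
  }
  return hash;
}

// Map a (hypothetical) user ID to one of `bucketCount` buckets.
function bucketOf(userId: string, bucketCount = 10): number {
  return djbHash(userId) % bucketCount;
}

// Gradual rollout: enable SSR only for users whose bucket is in the enabled set.
function isSsrEnabled(userId: string, ssrBuckets: ReadonlySet<number>): boolean {
  return ssrBuckets.has(bucketOf(userId));
}
```

Because the hash is deterministic, a user always lands in the same bucket, so their experience stays consistent across visits and the buckets remain comparable for later A/B analysis. Enabling buckets `0` and `1`, for example, exposes roughly 20% of users to SSR.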

Many write-ups about SSR demos overlook an important factor: the developers.

In the Double 11 scenario at Alibaba, more than 100 developers work on over 300 floor modules. Our goal is to upgrade existing code while reducing developers' transformation and adaptation costs.

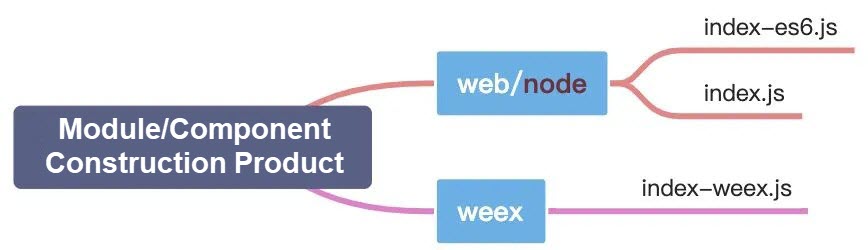

Our floor modules originally had three build targets: PC, H5, and Weex. The common industry practice for SSR is to generate a separate build artifact targeting Node. During our proof-of-concept (POC) verification, however, we found that most modules could be adapted to SSR directly without modification, while a new build artifact would involve more developers. So we looked for another solution.

One problem with reusing the existing Web build artifacts is that Webpack 4 injects some Node environment-related variables by default. This makes checks in common component libraries, such as `const isNode = typeof process !== 'undefined' && process && process.env`, return unexpected results. Fortunately, this injection can be turned off, as can other development-environment injections such as those for devServer. We therefore reused the Web build artifacts. The newer Webpack 5 weakens the differences between targets and allows for better customization, so we can move further toward the community approach in the future.
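For reference, here is a minimal Webpack 4 configuration fragment that turns off the Node.js injections. In Webpack 4, setting `node: false` disables all Node.js global and module polyfills and mocks, including the `process` shim (which has an `env` object and therefore makes the `isNode` check above evaluate as true). The entry and output values are placeholders; this is not the team's actual configuration.

```typescript
// webpack.config.ts: a sketch of a Web build that can also be reused for SSR (Webpack 4).
// Entry and output values are placeholders.
const config = {
  mode: "production",
  target: "web",
  entry: "./src/index.js",
  output: { filename: "index.js" },
  // Turn off Webpack 4's Node.js polyfills and mocks (process, Buffer, __dirname, ...),
  // so `typeof process !== 'undefined'` checks are not fooled by an injected shim.
  node: false,
};

export default config;
```

In Webpack 5, automatic Node.js core-module polyfills were removed, and the `node` option only controls `global`, `__filename`, and `__dirname`.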

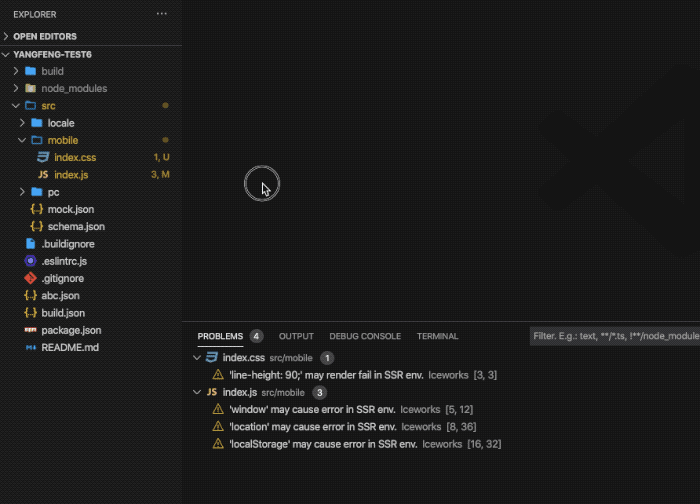

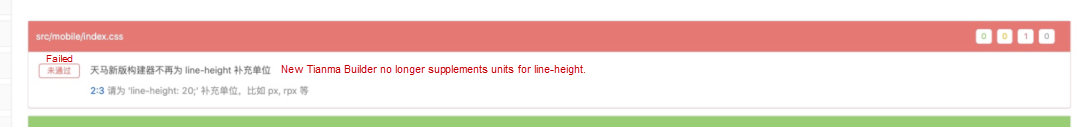

With the build artifact problems solved, the Rax team provides a VSCode plug-in for SSR development during local development. The plug-in integrates some best practices and lint rules, so SSR-related problems can be found and fixed while the code is being written.

We also provide a local Webpack plug-in for SSR preview and debugging that simulates the real online environment, so developers can view the SSR rendering result directly in the development environment.

In some scenarios, developers access variables such as window and location directly in their code. To handle this, we developed a unified class library that encapsulates and eliminates these differences. We also simulate commonly used browser host variables on the server, such as window, location, navigator, and document. Together with the reuse of the Web build artifacts, this means most modules can run on the server without modification.
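The host-variable simulation can be sketched as follows for a Node.js server. This is a minimal illustration under stated assumptions: `createShims` and `withBrowserShims` are hypothetical helpers, and the shim objects carry only a handful of properties, far fewer than a real implementation would need. The shims are installed on `globalThis` only for the duration of one synchronous render and then restored.

```typescript
// Hypothetical minimal stand-ins for browser host variables.
interface BrowserShims {
  window: Record<string, unknown>;
  location: { href: string; pathname: string; search: string };
  navigator: { userAgent: string };
  document: { cookie: string; referrer: string };
}

// Build shims for one request from its URL and User-Agent header.
function createShims(href: string, userAgent: string): BrowserShims {
  // Extract path and query from "scheme://host/path?query#hash" without DOM or Node URL types.
  const match = /^[a-z][a-z0-9+.-]*:\/\/[^/?#]*([^?#]*)(\?[^#]*)?/i.exec(href);
  const loc = { href, pathname: (match && match[1]) || "/", search: (match && match[2]) || "" };
  const nav = { userAgent };
  const doc = { cookie: "", referrer: "" };
  const win: Record<string, unknown> = { location: loc, navigator: nav, document: doc };
  return { window: win, location: loc, navigator: nav, document: doc };
}

const SHIM_KEYS = ["window", "location", "navigator", "document"] as const;

// renderToString is synchronous, so no other request can observe these globals
// between installation and restoration.
function withBrowserShims<T>(shims: BrowserShims, render: () => T): T {
  const g = globalThis as unknown as Record<string, unknown>;
  const saved = SHIM_KEYS.map((key) => ({ key, desc: Object.getOwnPropertyDescriptor(g, key) }));
  for (const key of SHIM_KEYS) {
    // defineProperty also works where a global is a getter-only accessor (e.g. navigator in newer Node.js).
    Object.defineProperty(g, key, { value: shims[key], configurable: true, writable: true });
  }
  try {
    return render();
  } finally {
    for (const { key, desc } of saved) {
      if (desc) Object.defineProperty(g, key, desc);
      else delete g[key];
    }
  }
}
```

Scoping the globals to one synchronous call avoids leaking one user's location or cookies into another request's render.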

For the module release stage, we added a release checkpoint on the engineering platform. If the static check finds code problems that affect SSR, the checkpoint blocks the module release and prompts the developer to fix them.

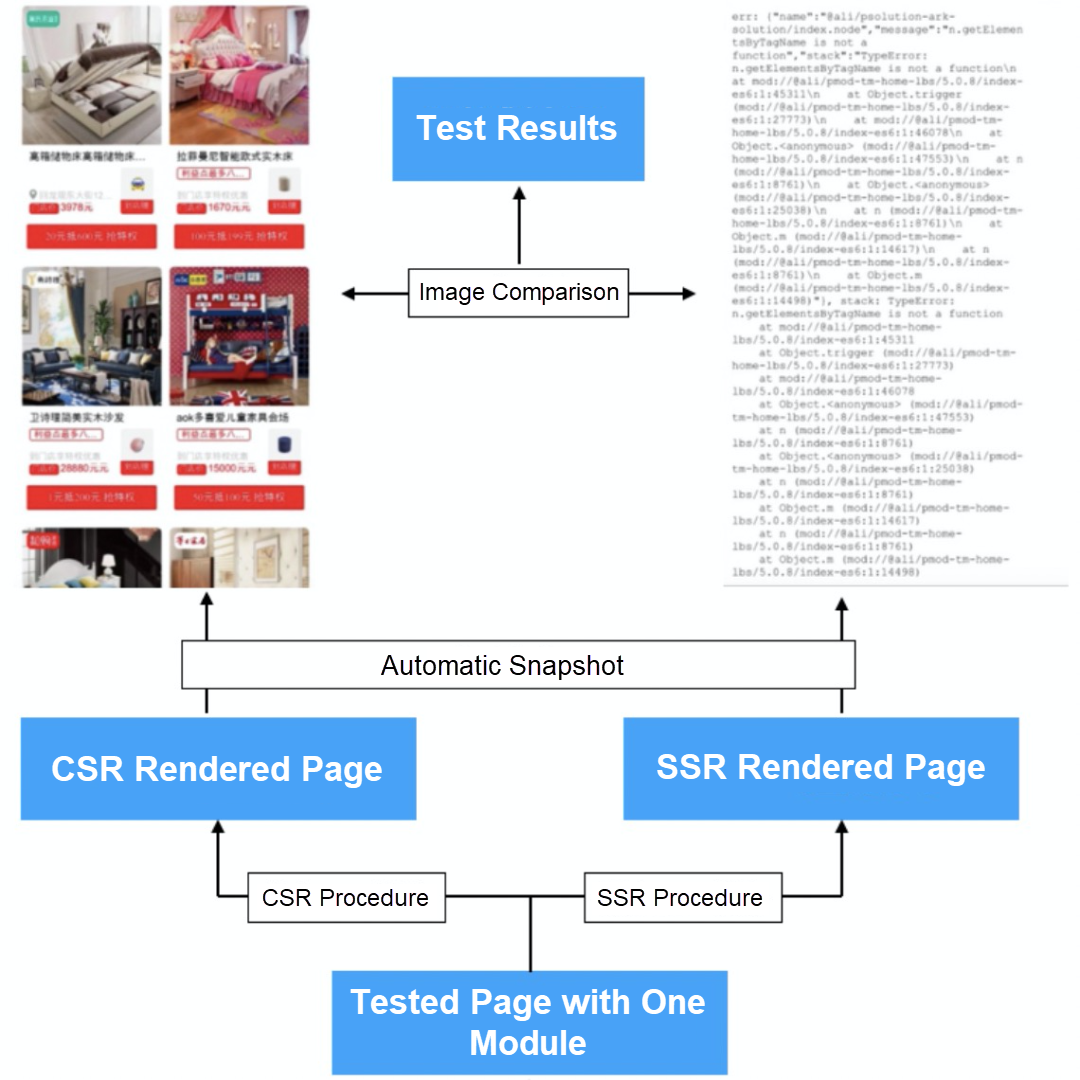

Because of the large number of business modules, the test team provided a batch testing solution to further narrow the scope of required changes. For each module, it constructs a mock page and checks whether the SSR rendering result is as expected by comparing it with the CSR rendering result.
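The comparison step of such a batch test can be sketched as a normalize-then-diff over the two HTML strings. The normalization rules below (dropping comments and inter-tag whitespace, collapsing whitespace runs) are illustrative assumptions, as are the function names; how the CSR result is captured (for example, reading the body's HTML from a headless browser) is outside this sketch.

```typescript
// Normalize rendered HTML so that differences that do not affect the visual result
// (comments, whitespace between tags, whitespace runs) are not reported as mismatches.
function normalizeHtml(html: string): string {
  return html
    .replace(/<!--[\s\S]*?-->/g, "") // drop comments such as framework placeholders
    .replace(/>\s+</g, "><") // drop whitespace between tags
    .replace(/\s+/g, " ") // collapse remaining whitespace runs
    .trim();
}

interface CompareResult {
  moduleName: string;
  equal: boolean;
  firstDiffAt: number; // index in the normalized strings, or -1 when equal
}

// Compare one module's SSR output against its CSR output.
function compareRenders(moduleName: string, ssrHtml: string, csrHtml: string): CompareResult {
  const a = normalizeHtml(ssrHtml);
  const b = normalizeHtml(csrHtml);
  let i = 0;
  while (i < a.length && i < b.length && a[i] === b[i]) i++;
  const equal = a === b;
  return { moduleName, equal, firstDiffAt: equal ? -1 : i };
}
```

Reporting the first differing offset makes it quick to locate the offending element in a large module. Note that dropping inter-tag whitespace can hide genuine spacing differences between inline elements; that is a deliberate trade-off in this sketch.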

Although we tried our best to avoid problems through static code checking in the development stage, some pain points remain in practice.

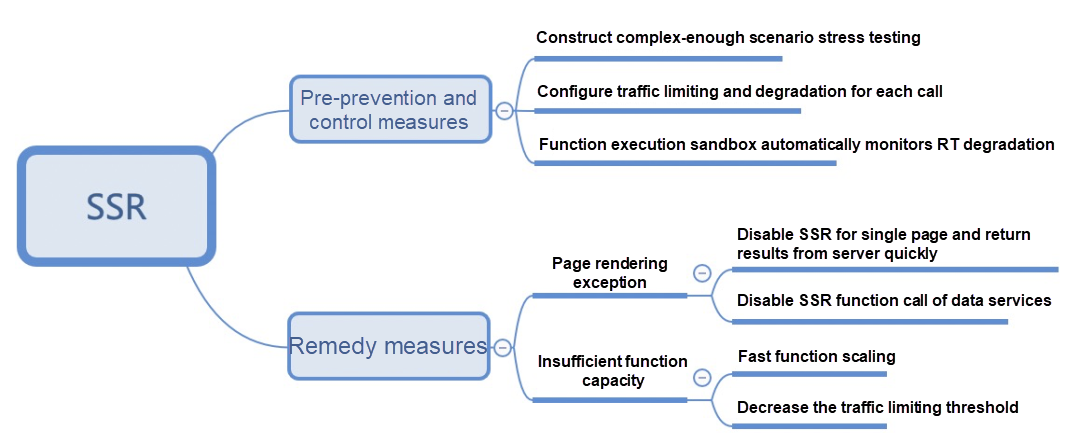

These issues are very difficult to solve within the SSR mechanism itself, so a complete automatic degradation solution is required to avoid affecting the user experience.

Because smooth switching between CSR and SSR had already been implemented in the front end, the server side does not need to worry about these issues day to day.

FaaS greatly reduces server O&M costs. Its design principle of "one function does one thing" also isolates SSR instability without adding O&M burden.

Most SSR scenarios in the industry are full-page or SPA-type scenarios, in which the bundle used for SSR exposes the page's Root Component once the page code is built, and the renderer then renders that Root Component. In our low-code construction scenarios, the pool of optional modules is large, so the floor modules of a page are dynamically selected, sorted, and loaded. With CSR in the front end this is simple and only requires a module loader. On the server side, however, it is rather complicated.

Fortunately, our module specification follows a special variant of the CMD specification with explicit dependency declarations. This allows us to calculate all asset dependencies of the first screen in one pass once we have the floor organization information of the page.

An example of a CMD-based explicit dependency declaration (seed):

```json
{
  "packages": {
    "@ali/pcom-alienv": {
      "path": "//g.alicdn.com/code/npm/@ali/pcom-alienv/1.0.2/",
      "version": "1.0.2"
    }
  },
  "modules": {
    "./index": [
      "@ali/pcom-alienv/index"
    ]
  }
}
```

After the code is loaded on the server, the Root Component can be assembled and passed to the renderer for rendering.
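Given such seeds, the first-screen dependency calculation can be sketched as a depth-first walk over the explicit dependency lists. This is an illustrative reading of the seed format shown above, not the team's loader: it assumes a module ID belongs to the package whose name is its longest prefix, and that the file URL is the package `path` plus the rest of the ID plus `.js`. Local IDs such as `./index` (the floor module's own entry) match no package and stay unresolved here.

```typescript
// Types mirroring the seed example above.
interface Seed {
  packages: Record<string, { path: string; version: string }>;
  modules: Record<string, string[]>;
}

// Resolve "@ali/pcom-alienv/index" to a URL via the longest matching package name.
// The ".js" suffix and the "index" default are assumptions.
function resolveUrl(seed: Seed, moduleId: string): string | undefined {
  let bestName = "";
  let bestPath: string | undefined;
  for (const name of Object.keys(seed.packages)) {
    const pkg = seed.packages[name];
    const matches = moduleId === name || moduleId.startsWith(name + "/");
    if (pkg && matches && name.length > bestName.length) {
      bestName = name;
      bestPath = pkg.path;
    }
  }
  if (bestPath === undefined) return undefined;
  const rest = moduleId === bestName ? "index" : moduleId.slice(bestName.length + 1);
  return bestPath + rest + ".js";
}

// Collect every module the first-screen entries need, dependencies before dependents.
// Each module is visited once, which also makes the walk safe against cycles.
function collectFirstScreenModules(seed: Seed, entries: string[]): string[] {
  const ordered: string[] = [];
  const visited = new Set<string>();
  const visit = (id: string): void => {
    if (visited.has(id)) return;
    visited.add(id);
    for (const dep of seed.modules[id] || []) visit(dep);
    ordered.push(id);
  };
  for (const id of entries) visit(id);
  return ordered;
}
```

With several floor modules on a page, their seeds would be merged before the walk, so shared dependencies are loaded only once.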

We encountered two major kinds of performance problems.

Because of the large number of floor modules, some mechanism-level problems arose during actual implementation.

The optimization solutions, shown in the following figure, are a little tricky.

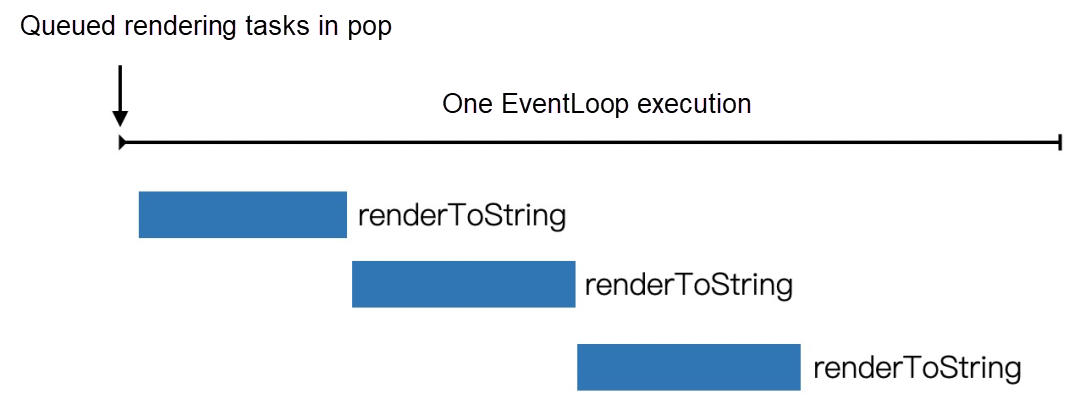

One of these optimizations is caching vm.Script instances to avoid repeated parsing.

During the inspection, we also found a small range of response time (RT) jitter. After analysis, we found that synchronous renderToString calls queue up and execute one after another at the micro level.

In the case shown in the figure above, the RT of some rendering tasks is actually the sum of the RTs of several queued tasks. This affects the RT of individual requests, although throughput is not affected, and it requires a more accurate estimate of the reserved resources. An effective mechanism-level solution is to execute renderToString through fibers, which mitigates the RT stacking caused by micro-level queuing.
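The vm.Script caching mentioned above can be sketched as a per-process compile cache keyed by module URL. The class and method names are hypothetical, and the compile step is injected to keep the sketch runtime-agnostic; on Node.js it could be `(code, filename) => new vm.Script(code, { filename })`, since a compiled `vm.Script` can be run many times without being parsed again.

```typescript
// Per-process cache of compiled module code, keyed by (versioned) module URL.
class CompiledScriptCache<T> {
  private readonly entries = new Map<string, T>();
  private readonly compile: (code: string, filename: string) => T;

  constructor(compile: (code: string, filename: string) => T) {
    this.compile = compile;
  }

  // Return the compiled script for `url`, loading and compiling the source only on a miss.
  // Versioned URLs (e.g. .../1.0.2/index.js) mean a new release is simply a new key,
  // so entries never need invalidation.
  getOrCompile(url: string, loadSource: () => string): T {
    if (this.entries.has(url)) return this.entries.get(url) as T;
    const compiled = this.compile(loadSource(), url);
    this.entries.set(url, compiled);
    return compiled;
  }

  get size(): number {
    return this.entries.size;
  }
}
```

Because every request on the same process shares the cache, only the first request for a given module version pays the parsing cost.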

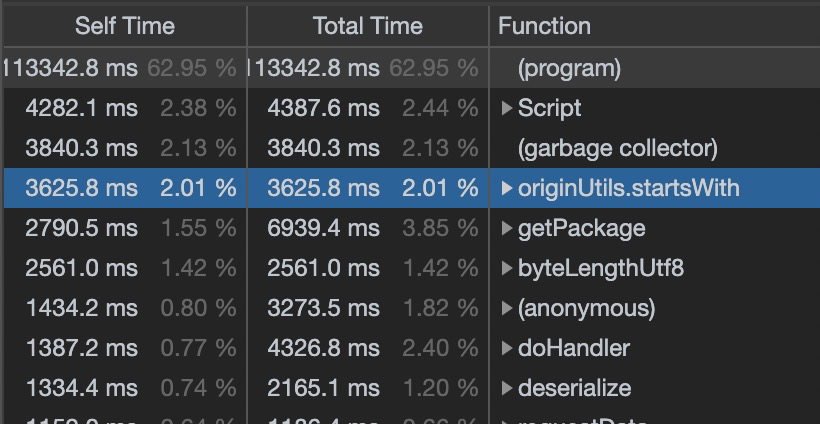

CPU profiling is a commonly used performance analysis tool. Taking the alinode platform as an example, hot spots can be found quickly for targeted optimization.

In the preceding figure, the CMD loader's dependency calculation shows up as a hot spot (marked in blue). After we cached the calculation results, the hot spot disappeared and overall performance improved by 80%.
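Caching the loader's calculation can be sketched as memoization keyed by the page's floor-module list. This assumes the calculation is pure (it depends only on the module list, with versions encoded in the module IDs), so cached results never go stale; `memoizeByModuleList` and the size bound are hypothetical.

```typescript
// Wrap an expensive, pure dependency calculation so that pages with the same
// floor-module list reuse the previous result instead of recomputing it.
function memoizeByModuleList<R>(
  compute: (moduleIds: readonly string[]) => R,
  maxEntries = 1000,
): (moduleIds: readonly string[]) => R {
  const cache = new Map<string, R>();
  return (moduleIds) => {
    const key = moduleIds.join("\n");
    if (cache.has(key)) return cache.get(key) as R;
    const result = compute(moduleIds);
    if (cache.size >= maxEntries) {
      // Evict the oldest entry (Map keeps insertion order) to bound memory use.
      const oldest = cache.keys().next().value;
      if (oldest !== undefined) cache.delete(oldest);
    }
    cache.set(key, result);
    return result;
  };
}
```

Since many visitors to the same venue page share one floor list, most requests hit the cache after the first.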

All the above-mentioned effort and investment require a sound evaluation system for optimization evaluation. In the following part, we will evaluate optimizations from the experience performance and business income aspects respectively.

Using the widely compatible PerformanceTiming API (deprecated in favor of PerformanceNavigationTiming), we can obtain some key front-end timestamps:

• navigationStart

• firstPaint

We use navigationStart as the front-end start time. Before the front end takes over, however, the real starting point of the user's interaction path is the moment the user clicks the link and the client jumps to the page, which we call jumpTime. We also implemented full-link tracking that connects the client's native timestamps with the front-end timestamps, so all the time points mentioned above are covered by our evaluation system.

The original evaluation system includes core metrics such as FCP and TTI. At present, there is no online measurement solution for them with good compatibility across Web implementations; tools such as DevTools or Lighthouse only support offline evaluation. We therefore approximate them online as follows:

| Metric | CSR | SSR |
| --- | --- | --- |
| FCP | componentDidMount | HTML written to body via innerHTML |
| TTI | componentDidMount | componentDidMount |

The data is collected online without sampling and reported through our tracker. This allows us to analyze it along the following dimensions:

• Device model

• Network conditions

• SSR hit or not

• Other front-end optimizations hit or not

The main evaluation metrics are:

• Time from user click to FCP (FCP - jumpTime)

• Time from navigationStart to FCP (FCP - navigationStart)
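Both metrics, and a one-second opening rate, can be computed from raw tracker records roughly as follows. The record fields are hypothetical, and defining the one-second opening rate as the share of page views whose click-to-FCP time is under 1,000 ms is an assumption that relies on FSP being approximately equal to FCP, as noted in the metric definitions.

```typescript
// One page view as reported by the tracker (hypothetical field names, epoch milliseconds).
interface PageViewTiming {
  jumpTime: number; // client-side click/jump time
  navigationStart: number; // from PerformanceTiming
  fcp: number; // approximated FCP time
}

// The two main metrics: click-to-FCP and navigationStart-to-FCP.
function metricsOf(t: PageViewTiming): { fcpFromClick: number; fcpFromNavigation: number } {
  return {
    fcpFromClick: t.fcp - t.jumpTime,
    fcpFromNavigation: t.fcp - t.navigationStart,
  };
}

// Share of page views whose click-to-FCP time is below the threshold (1 s by default).
function oneSecondOpeningRate(views: PageViewTiming[], thresholdMs = 1000): number {
  if (views.length === 0) return 0;
  const fast = views.filter((v) => metricsOf(v).fcpFromClick < thresholdMs).length;
  return fast / views.length;
}
```

The gap between the two metrics (navigationStart minus jumpTime) isolates the client-side stages, such as animation and WebView initialization, that happen before the front end starts.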

Does the experience optimization bring real business gains? To find out, we analyzed business data directly through A/A testing and A/B testing.

Based on the bucketing solution described earlier, we divide traffic into ten buckets, numbered 0 to 9, by taking the hash value modulo 10. We then use A/A testing to verify that the ten buckets receive equal traffic.

| Bucket No. | 0 | 1 | 2 | 3 | ... |
| --- | --- | --- | --- | --- | --- |
| PV | 100 | 101 | 99 | 98 | ... |
| UV | 20 | 21 | 22 | 20 | ... |

A/A testing aims to ensure that the bucket logic will not cause uneven data and then affect the confidence. Next, the A/B testing is conducted. The changes of business data are verified by gradually adding experimental buckets.
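One way to run the A/A balance check is Pearson's chi-square goodness-of-fit test against an even split across buckets. The text above does not say which statistical test was used, so treat this as a swapped-in, illustrative technique with hypothetical function names. For the example PV counts in the bucket table, the statistic is about 0.05, far below the 5% critical value of roughly 7.81 for four buckets (3 degrees of freedom).

```typescript
// Pearson chi-square statistic for "all buckets receive equal traffic".
function chiSquareUniform(counts: number[]): number {
  const total = counts.reduce((sum, c) => sum + c, 0);
  if (total === 0) return 0; // no traffic yet: nothing to compare
  const expected = total / counts.length;
  return counts.reduce((sum, observed) => sum + (observed - expected) ** 2 / expected, 0);
}

// Compare against the critical value for (buckets - 1) degrees of freedom,
// e.g. about 16.92 for 10 buckets at the 5% significance level.
function bucketsLookBalanced(counts: number[], criticalValue: number): boolean {
  return chiSquareUniform(counts) < criticalValue;
}
```

Because one user can produce many PVs, PV counts are not independent samples; running the same check on UV counts is the safer choice.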

So, what is the final effect of the optimization? During Double 11, we bucketed the traffic of several core pages. The following table shows the first-hand data we collected.

| Stylish Women's Wear Venue | CSR | SSR |
| --- | --- | --- |
| Share of visits with time from user click to FSP < 1 s | iOS 66%, Android 28% | iOS 86% (30%↑), Android 60% (114%↑) |
| UV click-through rate | Baseline | 5%↑ |


We can see that the improvement brought by SSR exceeds our expectations in the fragmented Android ecosystem. This stimulates us to combine capabilities of the frontend, client, and server more effectively in the future. Thus, we can bring better user experience and create greater business value.

Will we keep optimizing for a better user experience? Of course! Let's take a brief look at some short-term and long-term directions.

For a long time, services on the user side have been implemented with a variety of technologies, such as Web, Native, and Hybrid development. Each development system has its own closed set of user experience metrics, so these metrics cannot be compared under a common assessment standard. Moreover, the general FCP and LCP evaluation system defined on web.dev is not well suited to e-commerce scenarios, where a page is effectively ready once its core products and stores are displayed.

In the future, we can align the assessment standards for experience evaluation metrics. We can also align and implement the concepts, such as start time and paint completion time, among multiple systems. By doing so, the horizontal comparison and sound competition can be achieved.

In the Webpack 5 release notes, we can see that Webpack is reducing some special handling of targets and describing the Web as a browserlike environment. Webpack 5 also lets developers customize targets through browserslist, which makes cross-side compatibility issues easier to handle. This change will speed up our move toward the community approach and give us a better development experience.

Static code checks for SSR still have some problems. Is there a better engineering solution to prevent code risks (performance and security risks)? This is also one of our future development directions.

For pages with a high re-access rate and little change, ServiceWorker Cache and similar solutions can cache previous rendering results. If a user visit hits the cache, the cached result is used directly. If not, the SSR rendering result is cached for later use. These solutions reduce server-side pressure and enhance the user experience.
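The cache-first flow described above can be sketched independently of where the cache lives. The `HtmlStore` interface and `renderCacheFirst` are hypothetical; inside a Service Worker, the store role could be played by the Cache API (`caches.open`, `cache.match`, `cache.put`).

```typescript
// Minimal async key-value store (hypothetical interface).
interface HtmlStore {
  get(key: string): Promise<string | undefined>;
  set(key: string, html: string): Promise<void>;
}

// Cache-first rendering: reuse a previous rendering result when present;
// otherwise request SSR and keep its result for the next visit.
async function renderCacheFirst(
  key: string,
  store: HtmlStore,
  requestSsr: () => Promise<string>,
): Promise<{ html: string; fromCache: boolean }> {
  const cached = await store.get(key);
  if (cached !== undefined) return { html: cached, fromCache: true };
  const html = await requestSsr();
  await store.set(key, html);
  return { html, fromCache: false };
}
```

As the text notes, this only suits pages that change little; for highly personalized venue pages, serving stale HTML brings back the flicker and reflow problems of offline snapshots.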

Currently, Node.js and V8 do not provide adequate support for dynamic code loading and lack security-related protection for it. In terms of performance, SSR is a CPU-intensive workload, so pure asynchrony brings little advantage. Special solutions may therefore be required.

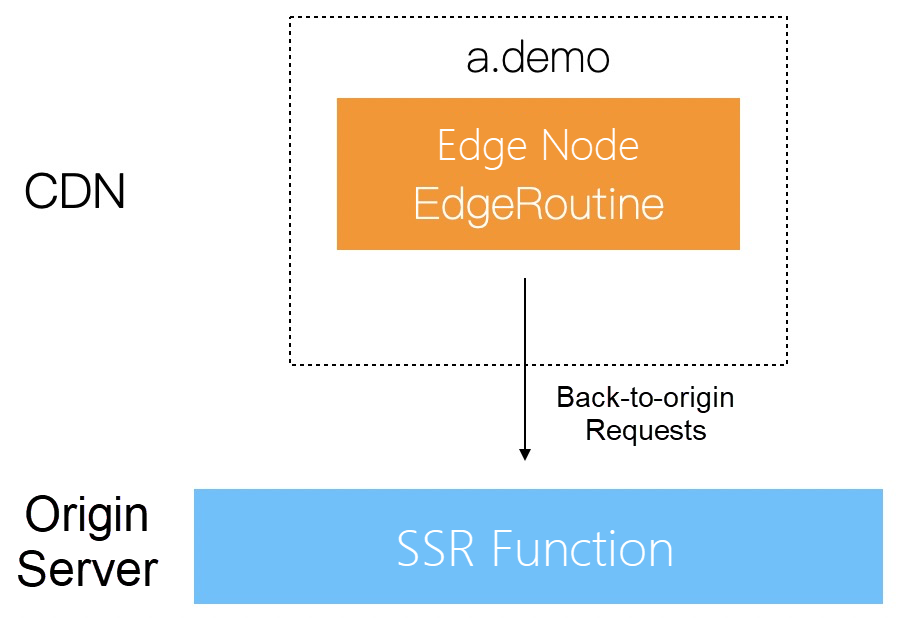

The "Data SSR" solution is the best solution inside the app, but the worst for external launch scenarios. In external launch scenarios, users reach the target pages from third-party applications, which lack the customization and optimization capabilities of our client. In addition, the SSR call increases the RT of the data service and delays FCP.

For these scenarios, the HTML direct-output solution can be applied.

The core advantages of this solution are shown in the following figure:

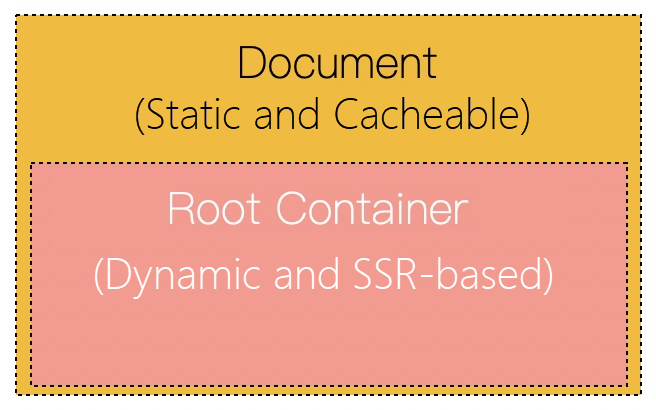

Near-side streaming is frequently mentioned. In actual practice, however, stream output errors can cause problems that cannot be recovered from on the user side, such as irreparably broken HTML. Through "static and dynamic data separation", the page can be divided into the Document and the Root Container.

By making only the Root Container dynamic, we can enjoy the earlier TTFB that stream output brings while still accounting for the instability of SSR disaster recovery. We can also work with the business team on how to better separate the dynamic and static data of pages from a business perspective, rather than a purely technical one.

The continuous improvements to the rendering architecture are essentially our own adaptation to limited and changing environments (such as device performance, complex networks, and changing services). Maybe one day the environment will no longer be a problem, and performance optimization will no longer be needed. Our team sometimes jokes that these optimizations will become unnecessary once all new mobile phones are 5G-enabled.

At present, this is still a far-fetched idea, but we achieved a lot during the 2020 Double 11. We hope the combination of technology and business will bring better experiences to users in the future!
