Backup and restoration are essential database capabilities for ensuring data security. For traditional standalone databases, backup and restoration technologies are relatively well established, including:

• Logical backup: backs up database objects, such as tables, indexes, and stored procedures. Tools like MySQL mysqldump and Oracle exp/imp are used for logical backup.

• Physical backup: backs up database files in operating systems. Tools like MySQL XtraBackup and Oracle RMAN are used for physical backup.

• Snapshot backup: creates a fully usable copy of datasets based on snapshot technology. You can choose to maintain snapshots locally or perform cross-machine backup. Tools like Veritas File System, Linux LVM, and NetApp NAS are used for snapshot backup.

However, for a distributed database like PolarDB-X, backing up and restoring data poses many challenges.

Point-in-time recovery (PITR) is the first challenge to be addressed. PITR refers to the ability to restore a database to any point in time in the past (accurate to one second) by relying on backup sets. Traditional databases usually implement PITR with full and incremental physical backups of a standalone server, for example, XtraBackup together with the binlog in MySQL.

For a distributed database, PITR is more complex due to the involvement of multiple data nodes and distributed transactions. In addition to ensuring data integrity on a single node, it is also necessary to ensure data consistency across multiple nodes during the PITR process.

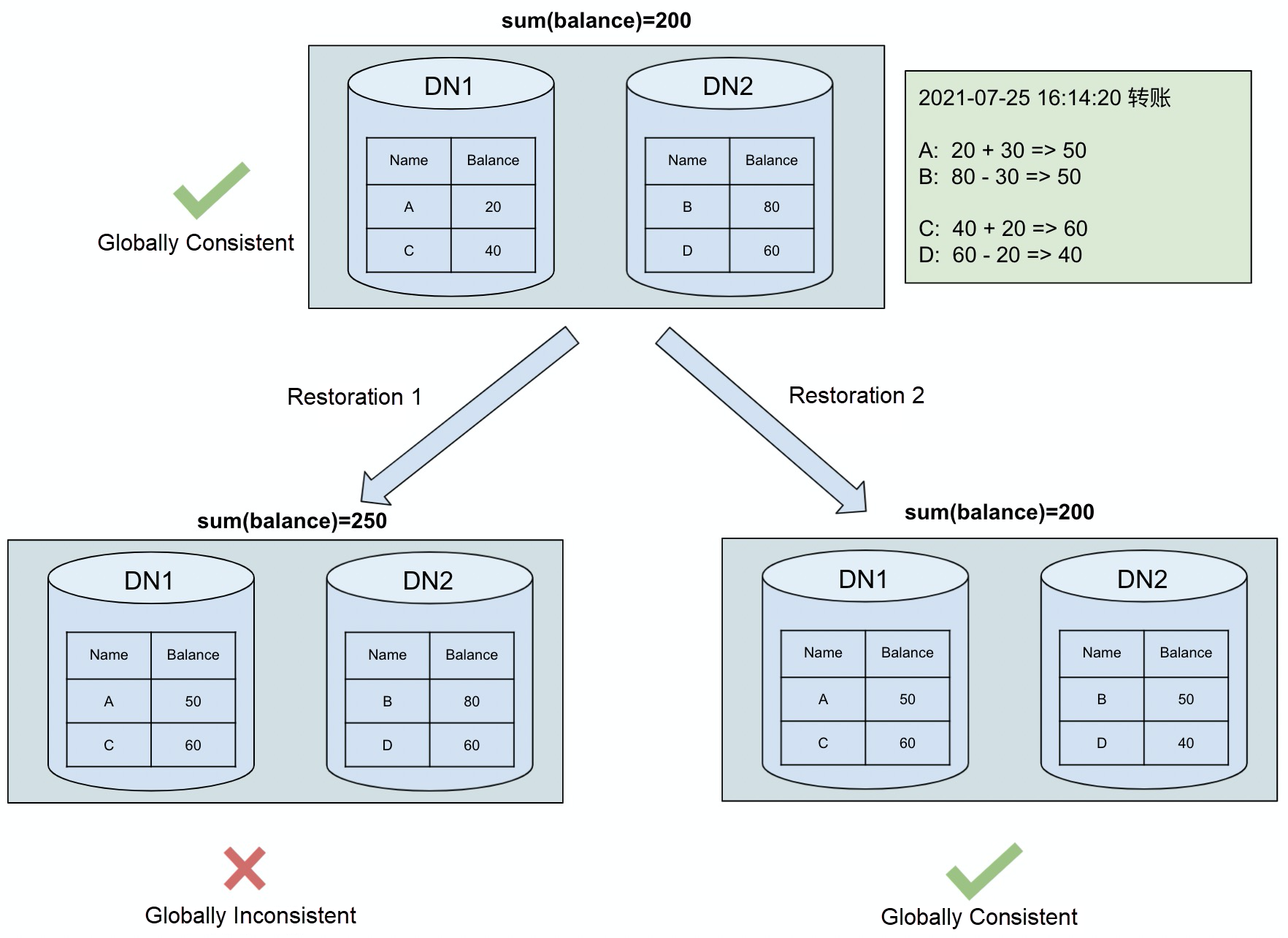

The preceding figure uses the classic transfer test to illustrate data consistency. A user's account balance table is distributed across two data nodes. At a certain moment, through a distributed transaction, account C transfers 30 yuan to account A, and account D transfers 20 yuan to account C. If the data nodes are restored to this point in time, the limited accuracy of PITR (one second) means that the restored data may be inconsistent. For example, accounts A and C may have completed the transfer operation while accounts B and D have not (case 1). This inconsistency is unacceptable to users. The restored data must therefore reflect the state either entirely before the transfer or entirely after it.

Backup is a high-frequency O&M operation for databases. We recommend performing daily backups to ensure data security. Because backup operations are so frequent, the backup process must have minimal impact on the business. How to back up a database without affecting the business while still ensuring data consistency is therefore also a challenge.

The amount of data stored in a distributed database is significantly larger than that of a standalone database, usually tens or even hundreds of terabytes. Faced with such a huge amount of data, how can we perform fast data backup and restoration? As the volume of business data increases, how can we ensure that the backup and restoration speed increases linearly, so that the backup and restoration duration remains relatively stable? These are all problems to be solved.

For example, when the data volume is 10 TB, it takes 1 hour to back up the database. If the data volume increases to 100 TB, the backup time should still be around 1 hour, rather than increasing to 10 hours.

This section describes the PolarDB-X backup and restoration schemes and how they address these challenges. First, let's review how a single data node is restored to any point in time (accurate to one second), and then introduce the overall backup and restoration scheme of PolarDB-X.

Currently, PolarDB-X 2.0 data nodes are built on MySQL and X-Paxos, providing a highly available distributed database across availability zones (AZs). Since the data nodes are built on MySQL, their PITR scheme is similar to that of MySQL.

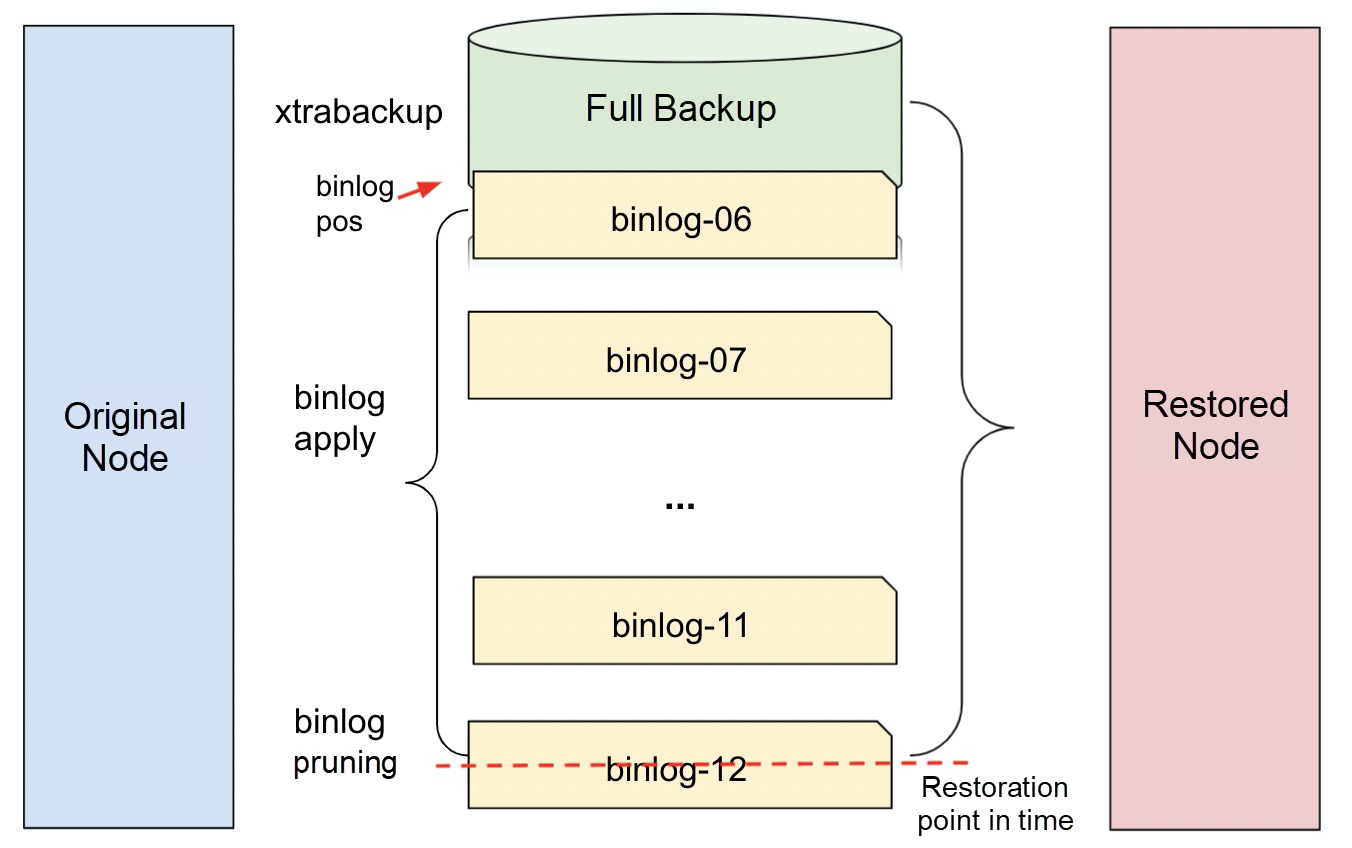

The preceding figure shows the point-in-time backup and restoration method for a single data node. First, XtraBackup performs scheduled full backups of the original instance, and the binlogs are backed up incrementally. To restore a node to a specific point in time (accurate to one second), we first find the latest full backup set taken before that point and restore the data from it. We then find all binlog files that cover the interval from the full backup to the restoration point in time, and apply the events in these binlogs through the MySQL crash recovery process. After these steps, the data is restored to the specified point in time.

In the preceding restoration process, the first and last binlog files need to be processed separately.

• binlog-06: business data is still being written during the full backup. Therefore, when the full backup completes, the binlog position (pos) corresponding to the backup time is recorded and stored in the backup set. When binlogs are applied, binlog-06 must be applied starting from the pos recorded in the full backup set, and the part before it must be discarded.

• binlog-12: because the restoration time is arbitrary, the last data change to be restored may be located anywhere in the binlog file. The last binlog file must therefore be pruned to remove binlog events that are later than the restoration point in time.
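The selection and trimming of binlog files described above can be sketched as follows. This is a minimal illustration, not the actual restoration tooling: the `BinlogFile` record, its `first_event_time` field, and the `plan_replay` function are hypothetical names, and times are plain Unix seconds.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# MySQL binlog events begin right after the 4-byte magic header.
FILE_START_POS = 4


@dataclass
class BinlogFile:
    name: str
    first_event_time: int  # Unix seconds of the first event (hypothetical field)


def plan_replay(files: List[BinlogFile], backup_file: str, backup_pos: int,
                restore_time: int) -> List[Tuple[str, int, Optional[int]]]:
    """Return (file name, start position, stop time) for each file to apply.

    `files` must be in binlog order. `backup_file` and `backup_pos` are the
    binlog coordinates stored in the full backup set.
    """
    plan = []
    started = False
    for f in files:
        if f.name == backup_file:
            started = True
        if not started:
            continue  # entirely covered by the full backup
        if f.first_event_time > restore_time:
            break  # starts after the restoration point, not needed
        # First file: discard everything before the recorded position.
        start = backup_pos if f.name == backup_file else FILE_START_POS
        plan.append([f.name, start, None])
    if plan:
        # Last file: events later than the restoration point must be pruned.
        plan[-1][2] = restore_time
    return [tuple(p) for p in plan]
```

Only the first and last entries of the returned plan carry special handling; every file in between is applied in full.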

Back to PolarDB-X data restoration, relying on the data restoration capability of a single data node, we can restore all data nodes and global meta services under the current instance to the same point in time and achieve PITR. However, due to the existence of distributed transactions, the global consistency of data between data nodes cannot be guaranteed.

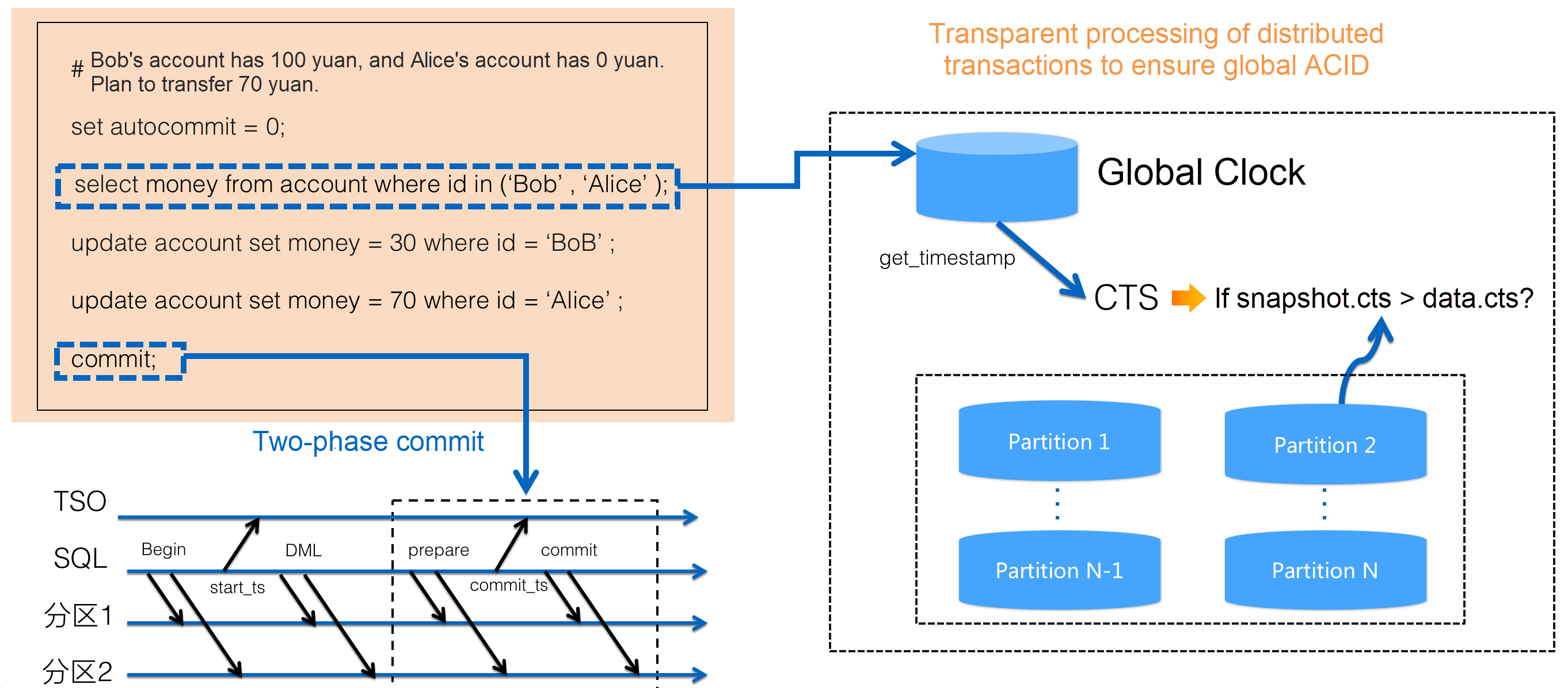

To provide external read consistency and distributed transaction capability at the snapshot isolation (SI) level, PolarDB-X distributed transactions use the classic Timestamp Oracle (TSO) solution, as shown in the following figure.

In the implementation of TSO distributed transactions, the Commit Timestamp (CTS) extension is added to the data node. Each distributed transaction has two sequence numbers: snapshot_seq, obtained at the start of the transaction and used for snapshot reads, and commit_seq, obtained when the transaction commits. Sequence numbers are allocated centrally by the Global Meta Service (GMS) to ensure that they are globally unique and monotonically increasing.

In addition, the preceding transaction sequence number is also written to the binlog. In addition to common XA Start Event, XA Prepare Event, and XA Commit Event, the binlog of each data node involved in a distributed transaction is also accompanied by a CTS Event to identify the start and commit time of the transaction. This CTS Event stores a specific TSO timestamp.

Since binlogs record the TSOs of transactions, to perform a globally consistent PITR we must first convert the time to be restored (for example, 2021-07-25 16:14:21) to the corresponding TSO timestamp. We can then use this TSO timestamp to prune the binlogs to be applied on each data node, removing the events of every transaction whose commit sequence number (commit_seq) is greater than the TSO. This ensures that the restored data does not contain partially committed distributed transactions.

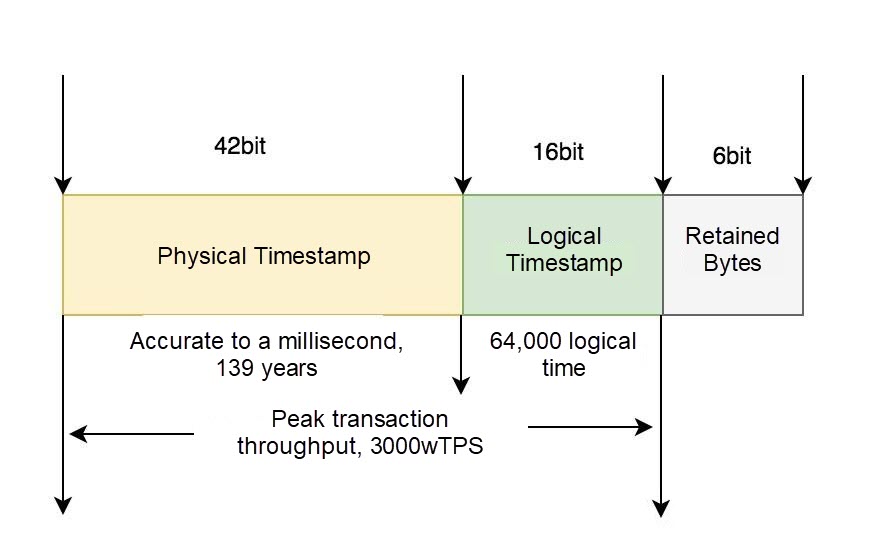

The following figure shows the format of TSO. The TSO timestamp is a 64-bit number that combines a physical timestamp and a logical timestamp. Since PITR only needs to be accurate to one second, we directly shift the timestamp of the point in time to be restored (for example, 2021-07-25 16:14:21) left by 22 bits and fill the low 22 bits with 0 to obtain the corresponding TSO timestamp.
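The conversion can be sketched in a few lines. The sketch assumes that the physical part of the TSO is a Unix timestamp in milliseconds and that the low 22 bits are left as zero, so that the result fits in 64 bits. The function name `time_to_tso` and the UTC+8 time zone in the usage example are illustrative assumptions, not part of PolarDB-X.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the low 22 bits of a TSO hold the logical part and are zeroed here.
LOW_BITS = 22


def time_to_tso(when: datetime) -> int:
    """Convert a timezone-aware datetime to a TSO with second-level accuracy."""
    # Truncate to whole seconds, then express in milliseconds (assumed unit).
    millis = int(when.timestamp()) * 1000
    return millis << LOW_BITS


# Usage: the example time from the text, interpreted as UTC+8 (an assumption).
restore_point = datetime(2021, 7, 25, 16, 14, 21, tzinfo=timezone(timedelta(hours=8)))
restore_tso = time_to_tso(restore_point)
```

Because the low bits are all zero, every TSO issued during or after that second compares as greater than or equal to `restore_tso`, which is what the pruning comparison relies on.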

After we have the corresponding TSO, we can prune the binlog. As for when to prune binlogs, we have also considered a variety of solutions:

Solution 1: pruning during binlog application

Solution 2: pruning before the data node applies binlogs

After a comprehensive comparison of the above two solutions, we finally adopt Solution 2.

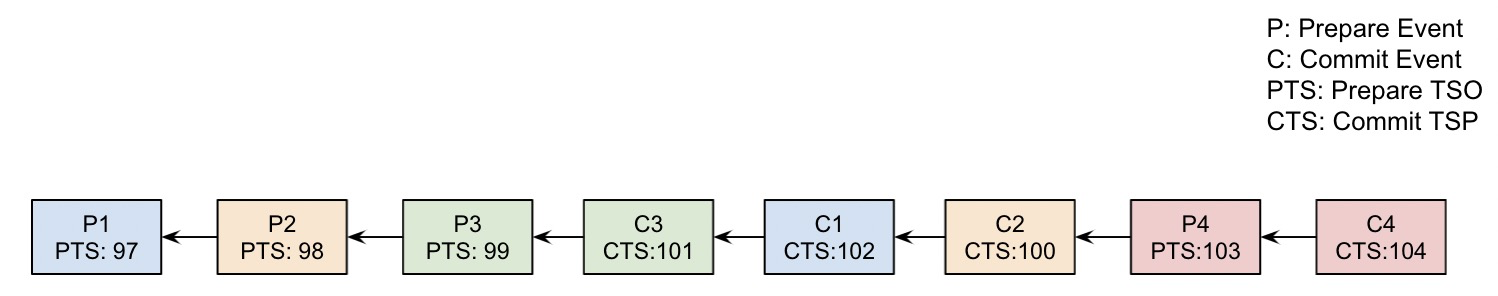

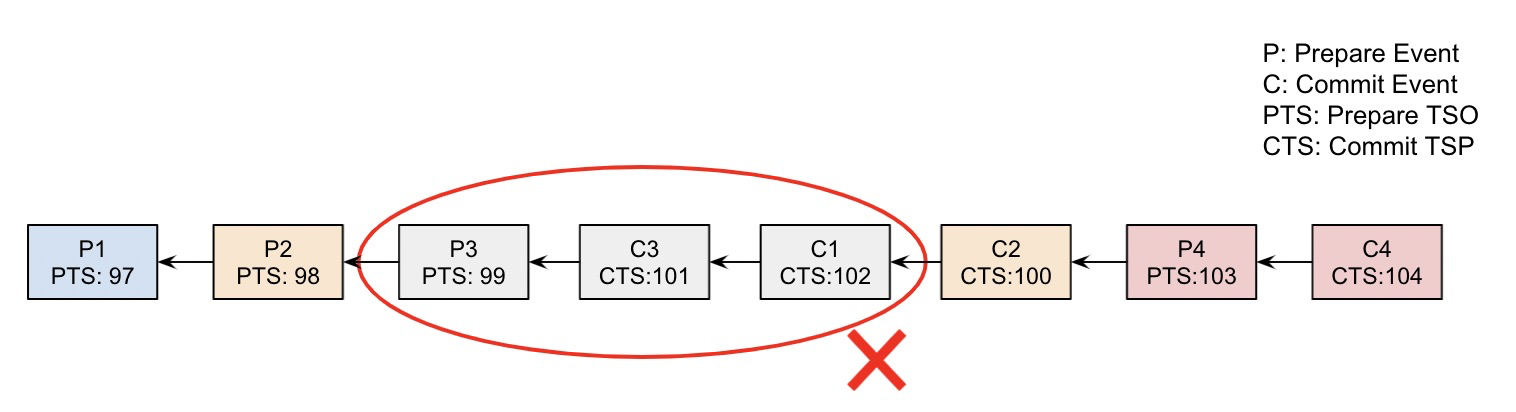

The pruning logic of binlogs seems simple: compare TSOs and remove events. However, although transactions obtain their TSOs in order, factors such as differing network delays between nodes mean that the TSOs written to each data node's binlog are not strictly ordered during the actual commit process. The following figure shows a possible sequence of binlog events.

As the above figure shows, although the commit TSO (100) of transaction 2 is smaller than that of transaction 3, the commit event of transaction 2 appears after the commit event of transaction 3 in the binlog. This disorder raises a question for pruning: when should the pruning process terminate?

The regular pruning logic is to traverse the events one by one and terminate as soon as an event with a TSO greater than the target TSO appears, as the following pseudo-code shows:

    while (true) {
        if (event.tso > restore_tso) {
            break;  // first event later than the recovery point: stop scanning
        }
        event = next event;  // otherwise keep the event and move on
    }

However, this method is no longer applicable to the above binlog order. For example, suppose the TSO of the point in time to restore is 100. When we scan event C3, its TSO is 101, which is greater than 100. If the pruning logic terminates here, event C2 (TSO 100), which must be kept, is missed.
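The failure can be reproduced with a small runnable sketch. The event list below is a hypothetical reduction of the example to commit events only, using the TSOs mentioned in the text for C3 (101) and C2 (100) and an assumed value of 102 for C1. The function name `naive_prune` is illustrative.

```python
from typing import List, Tuple

Event = Tuple[str, int]  # (label, commit TSO)


def naive_prune(events: List[Event], restore_tso: int) -> List[str]:
    """Keep events until the first one whose TSO exceeds restore_tso."""
    kept = []
    for label, tso in events:
        if tso > restore_tso:
            break  # terminates too early when TSOs are out of order
        kept.append(label)
    return kept


# Binlog order differs from TSO order: C3 (101) is written before C2 (100).
events = [("C3", 101), ("C2", 100), ("C1", 102)]
print(naive_prune(events, restore_tso=100))  # prints [] and C2 is lost
```

The scan stops at C3 and never reaches C2, even though C2 committed at or before the recovery point.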

So, how do we determine the pruning termination condition in such a disordered situation? The binlog disorder is only within a short time range, that is, when the transaction commit timeout period is not exceeded. If the transaction has not been committed after this timeout period, the transaction will be rolled back instead of being committed. Based on this condition, we introduce a new variable stop_tso:

stop_tso = (recovery point + transaction timeout period (60s by default) + delta) << 22

In the preceding formula, delta is an additional safety margin, which is 60 seconds by default.
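As a worked version of the formula, the sketch below computes stop_tso from a recovery point given in Unix seconds. It assumes that the physical part of the TSO is expressed in milliseconds and that the low 22 bits are zero, so that the result fits in a 64-bit number. The names `to_tso` and `compute_stop_tso` are illustrative.

```python
# Assumption: the low 22 bits of a TSO are reserved for the logical part.
LOW_BITS = 22


def to_tso(epoch_seconds: int) -> int:
    """TSO for a whole-second timestamp (physical part in ms, an assumption)."""
    return (epoch_seconds * 1000) << LOW_BITS


def compute_stop_tso(recovery_epoch_seconds: int,
                     txn_timeout_seconds: int = 60,
                     delta_seconds: int = 60) -> int:
    """stop_tso = (recovery point + transaction timeout + delta), as a TSO."""
    return to_tso(recovery_epoch_seconds + txn_timeout_seconds + delta_seconds)
```

With the defaults, stop_tso lies 120 seconds after the recovery point, so any commit event that could still belong before the recovery point has been written to the binlog by the time the scan reaches stop_tso.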

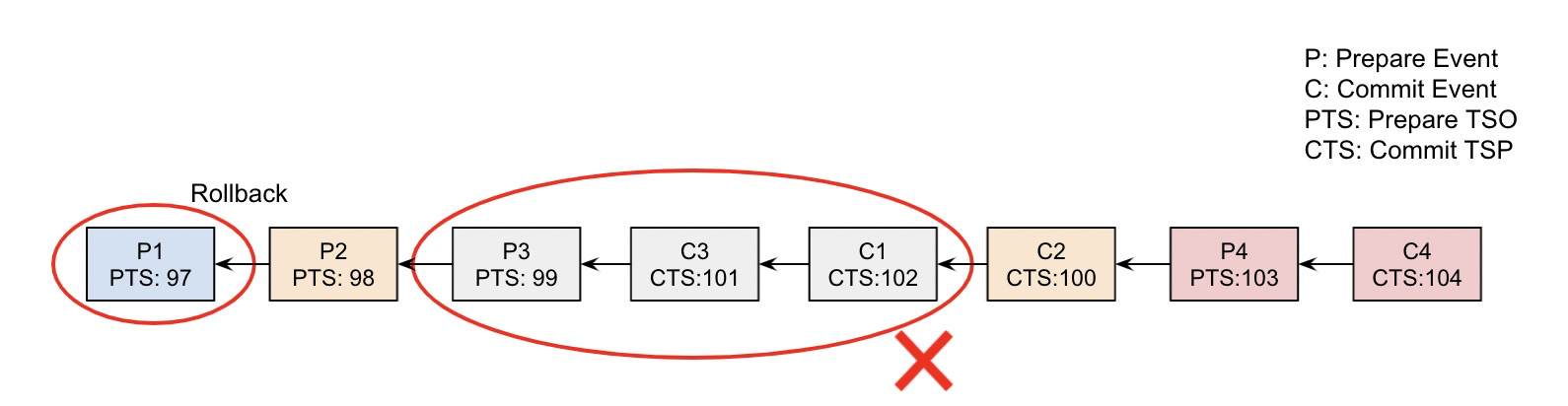

With stop_tso, we can terminate the pruning process when an event greater than this value appears for the first time. In the above example, the TSO of the recovery point in time is 100, and the calculated stop_tso is 103. Using this condition, we can remove the corresponding binlog events. The result after removal is shown in the following figure: the three events P3, C3, and C1 are removed.

Continuing with the above binlog event sequence: after binlog pruning is completed and the data node has applied the binlogs, the data is consistent. Some readers may notice that the C1 event of transaction 1 is removed, but the P1 event is still applied. Although the corresponding data changes are not visible in the restored data because the transaction is uncommitted, the transaction remains in the system as a hanging transaction and should be rolled back through XA Rollback. Otherwise, it will affect the execution of subsequent transactions.

The final result is shown in the following figure:

In summary, the TSO-based binlog pruning algorithm is described as follows:

Input: the binlog file to be pruned.

restore_tso: the TSO timestamp of the recovery point in time.

stop_tso: the TSO timestamp of the event that terminates the scanning.

Output: the pruned binlog file.

The process of the algorithm:

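1. Traverse the events in the binlog file in order.

2. If event.tso >= stop_tso, terminate the traversal. Otherwise, go to step 3.

3. If event.tso > restore_tso, skip the event so that it is not written to the output, and continue with the next event. Otherwise, go to step 4.

4. The event satisfies event.tso <= restore_tso: write it to the pruned binlog file.

5. After the traversal, for each Prepare Event in the set S (the Prepare Events whose transactions have no Commit Event in the pruned output), delete the Prepare Event and roll back the transaction.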
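The steps above can be sketched as a short runnable function. This is a minimal illustration under stated assumptions, not the PolarDB-X implementation: the `Event` record and the `prune` function are hypothetical, every event is assumed to carry a single TSO, and the TSO values given to the Prepare events in the usage example are invented so that the outcome matches the example in the text. Instead of deleting hanging Prepare Events itself, the sketch reports the affected transactions so that the caller can roll them back.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class Event:
    kind: str  # "prepare" or "commit"
    txn: int   # transaction id
    tso: int   # TSO taken from the accompanying CTS Event (assumed per event)


def prune(events: List[Event], restore_tso: int,
          stop_tso: int) -> Tuple[List[Event], Set[int]]:
    """Return the pruned event list and the ids of hanging transactions."""
    kept: List[Event] = []
    hanging: Set[int] = set()  # prepared in the output, but not committed
    for ev in events:
        if ev.tso >= stop_tso:
            break                # step 2: no earlier event can follow
        if ev.tso > restore_tso:
            continue             # step 3: later than the recovery point
        kept.append(ev)          # step 4: belongs to the restored state
        if ev.kind == "prepare":
            hanging.add(ev.txn)
        elif ev.kind == "commit":
            hanging.discard(ev.txn)
    return kept, hanging         # step 5 acts on the hanging set


# Usage with a hypothetical binlog order: P1 P2 P3 C3 C2 C1 P4.
binlog = [
    Event("prepare", 1, 98), Event("prepare", 2, 99), Event("prepare", 3, 101),
    Event("commit", 3, 101), Event("commit", 2, 100), Event("commit", 1, 102),
    Event("prepare", 4, 104),
]
kept, hanging = prune(binlog, restore_tso=100, stop_tso=103)
```

In this run P3, C3, and C1 are dropped, C2 is kept despite appearing after C3, and transaction 1 is reported as hanging because P1 is kept while C1 is not.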

Now that we've introduced PolarDB-X's globally consistent backup and restoration schemes, you may wonder: can backups run without affecting the business, and do these schemes scale?

Since backup operations are mainly performed on data nodes that use the MySQL three-node X-Paxos protocol, you only need to perform backup operations on Follower nodes. As Follower nodes don't accept business traffic, the impact of the backup process on the business can be neglected.

In terms of scalability, backup and restoration operations are performed separately for each data node, and data nodes don't need to perform many coordination operations (mainly the delivery and synchronization of the restoration point in time, which is very lightweight). Therefore, when your instance is expanded from 10 TB (10 data nodes) to 100 TB (100 data nodes), the overall backup and restoration time remains close to that of a single data node, without rapidly increasing with the growth of data.
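The scaling argument reduces to simple arithmetic, sketched below. The model is an assumption made for illustration: every data node backs up independently and in parallel at the same throughput, and coordination cost is ignored. The function names are hypothetical.

```python
from typing import List


def parallel_backup_hours(node_sizes_tb: List[float], tb_per_hour: float) -> float:
    """Nodes run in parallel, so the slowest node determines the duration."""
    return max(size / tb_per_hour for size in node_sizes_tb)


def serial_backup_hours(node_sizes_tb: List[float], tb_per_hour: float) -> float:
    """For comparison: one node after another, duration grows with the data."""
    return sum(size / tb_per_hour for size in node_sizes_tb)


# 10 TB on 10 nodes versus 100 TB on 100 nodes, 1 TB per node at 1 TB/hour.
small = [1.0] * 10
large = [1.0] * 100
print(parallel_backup_hours(small, 1.0), parallel_backup_hours(large, 1.0))
```

Under this model both instances take about one hour, whereas a serial backup of the larger instance would take 100 hours.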

This article focuses on PolarDB-X's backup and restoration schemes, detailing how they ensure global data consistency, keep the impact of backups on the business negligible, and scale with the data volume.

Of course, the existing backup and restoration schemes also have some unresolved issues, such as the inability to scale during backup and the limitation of only supporting homogeneous restoration, which will be our next optimization direction.
