By Boyan

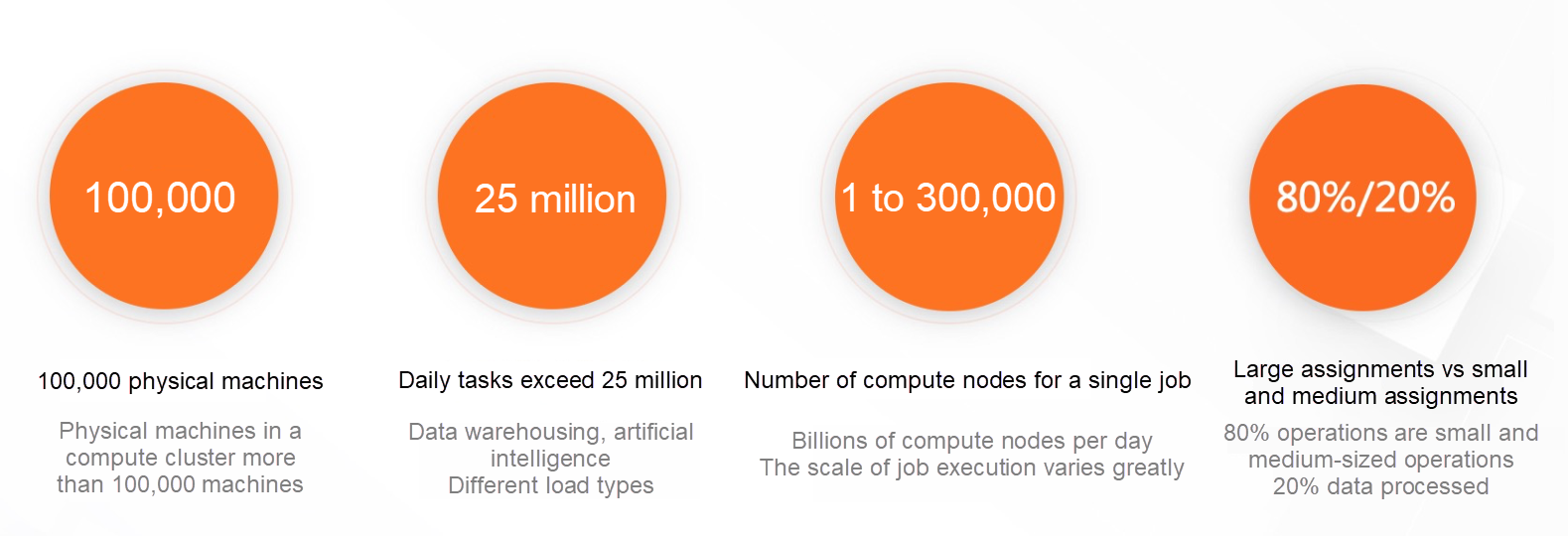

As one of the few EB-scale distributed data platforms in the industry, MaxCompute runs tens of millions of distributed jobs every day. At this volume, the platform has to support jobs with very different characteristics: from the super-large jobs with hundreds of thousands of compute nodes that are unique to Alibaba's big data ecosystem to the large body of small and medium-sized distributed jobs. At the same time, users have different expectations for jobs of different sizes and characteristics in terms of running time, resource utilization efficiency, and data throughput.

Fig. 1: MaxCompute Online Data Analysis

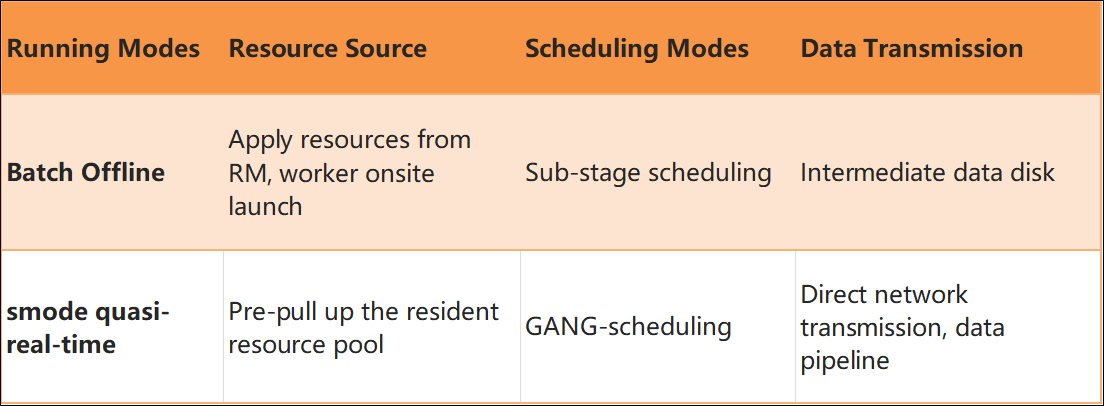

Based on the different sizes of jobs, the MaxCompute platform currently provides two different running modes. The following table summarizes and compares these two modes:

Fig. 2: Offline (Batch) Mode vs. Integrated Scheduling Quasi-Real-Time (SMODE) Mode

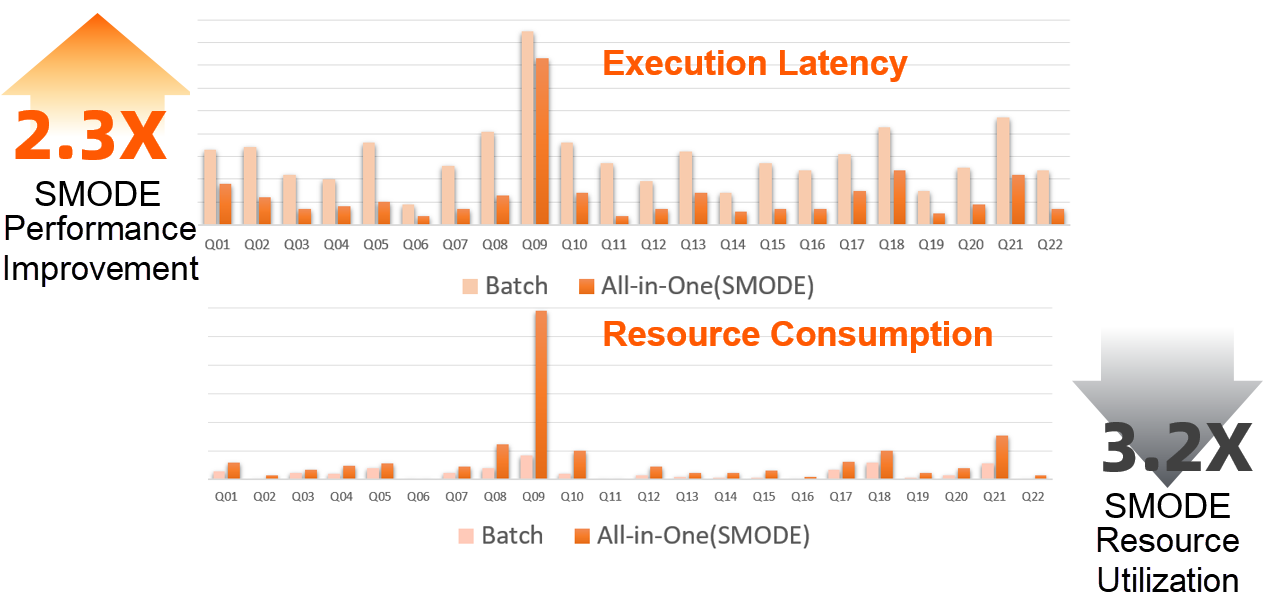

As shown in the preceding figure, offline jobs and quasi-real-time jobs with integrated scheduling differ significantly in scheduling method, data transmission, and resource source. The two modes represent two extremes: applying for resources on demand to optimize throughput and resource utilization when data volumes are large, and fully pre-launching compute nodes (with direct data transmission) to reduce execution latency when data volumes are medium or small. These differences eventually show up in execution time and job resource utilization. The offline mode, which optimizes mainly for high throughput, and the quasi-real-time mode, which optimizes for low latency, perform very differently. Take the standard 1TB TPCH benchmark as an example and compare execution time (performance) and resource consumption. The quasi-real-time (SMODE) mode is 2.3X faster, but this improvement is not free: in this TPCH scenario, the integrated SMODE execution achieves the 2.3X performance improvement while consuming 3.2X the system resources (cpu * time).

Fig. 3: Performance/Resource Consumption Comparison: Offline (Batch) Mode vs. Integrated Scheduling Quasi-Real-Time (SMODE) Mode

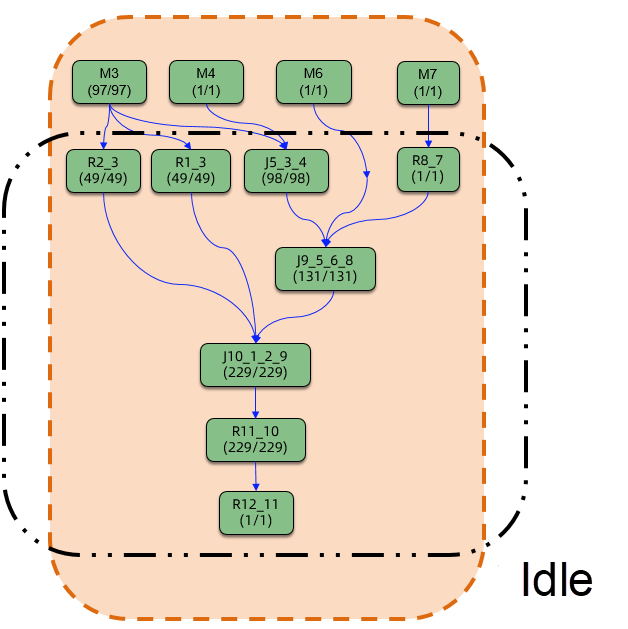
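To make the tradeoff concrete, the two ratios quoted above can be combined into a single figure. This is only back-of-the-envelope arithmetic on the reported 2.3X and 3.2X values; the variable names are illustrative and not part of MaxCompute.

```python
# Ratios quoted above for the 1TB TPCH run, SMODE relative to offline (batch) mode.
speedup = 2.3         # offline latency / SMODE latency
resource_ratio = 3.2  # SMODE (cpu * time) / offline (cpu * time)

# Speedup obtained per unit of relative resource cost.
# A value below 1.0 means latency improved less than resource usage grew.
speedup_per_resource = speedup / resource_ratio

print(f"speedup per unit of resource: {speedup_per_resource:.2f}")
```

A value of about 0.72 means that, in this benchmark, each unit of additional resource buys less than one unit of speedup.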

This observation is not surprising. Consider the DAG generated by a typical SQL job: in SMODE, all compute nodes are launched as soon as the job is submitted. This scheduling method allows data to be pipelined where possible, which may accelerate data processing. However, not every pair of upstream and downstream compute nodes in an execution plan has an ideal pipelined data flow. For many jobs, compute nodes other than the root nodes of the DAG (the M nodes in the figure below) sit idle to some extent while they wait for input.

Fig. 4: Possible Inefficient Resource Use in Integrated Scheduling Quasi-Real-Time (SMODE) Mode

The resource inefficiency caused by this idling is particularly evident when the data processing contains a barrier operator that cannot be pipelined and when the DAG is relatively deep. For scenarios that aim to minimize job running time, it is reasonable to spend more resources for extreme performance. In fact, in some business-critical online service systems, average CPU utilization in the single digits is not uncommon, because the services must always respond quickly and absorb peak load. However, can a distributed system at the scale of this computing platform achieve a better balance between extreme performance and efficient resource utilization?

Yes. This is the hybrid computing model we will introduce here: Bubble Execution.

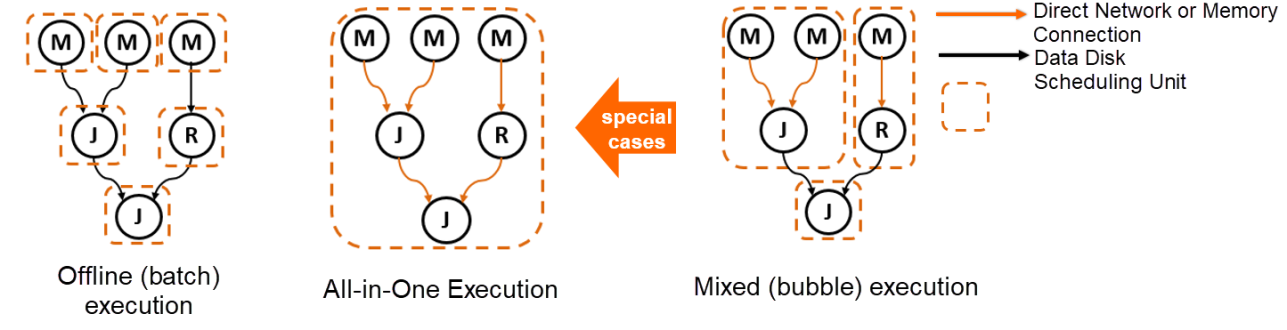

The core architectural idea of the DAG framework is a clear separation between the logical and physical layers of the execution plan. The physical execution graph is produced by materializing the physical characteristics (such as data transmission medium, scheduling timing, and resource characteristics) of the nodes and edges in the logical graph. For the batch mode and SMODE described in Fig. 2, the DAG framework implements both the offline mode and the quasi-real-time integrated execution mode on top of one flexible scheduling and execution framework. As shown in the following figure, by adjusting the physical characteristics of compute nodes and data connection edges, the two existing computing modes can be expressed clearly, and, after a more general extension, a new hybrid execution mode (Bubble Execution) can be explored.

Fig. 5: Multiple Computing Modes on the DAG Framework

Intuitively, a Bubble can be regarded as one large scheduling unit: the resources for the nodes inside a Bubble are requested and started together, and data between upstream and downstream nodes inside it is transmitted through the network or memory. In contrast, data on the edges that connect Bubbles is transmitted by writing it to disk. Offline and quasi-real-time job execution can be considered two extreme cases of Bubble execution. The offline mode is the special case in which each stage is a Bubble of its own, while the quasi-real-time framework places all compute nodes of a job into one large Bubble for integrated scheduling and execution. The DAG AM has unified the two computing modes on one scheduling and execution infrastructure, which makes it possible to combine the advantages of both modes and lays the foundation for Bubble Execution.
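The idea that offline mode and SMODE are two extreme bubble assignments can be sketched in a few lines. This is a toy model, not MaxCompute code: the function `edge_medium`, the stage names, and the bubble ids are all hypothetical, and the only rule encoded is the one stated above (same bubble means network/memory, different bubbles means disk).

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str]

def edge_medium(edges: List[Edge], bubble_of: Dict[str, int]) -> Dict[Edge, str]:
    """Edges inside one bubble use network/memory; edges between bubbles use disk."""
    return {
        (src, dst): "network/memory" if bubble_of[src] == bubble_of[dst] else "disk"
        for src, dst in edges
    }

# Hypothetical three-stage job: M1 -> J2 -> R3.
edges = [("M1", "J2"), ("J2", "R3")]

offline = {"M1": 0, "J2": 1, "R3": 2}  # every stage is its own bubble
smode = {"M1": 0, "J2": 0, "R3": 0}    # the whole job is one bubble
hybrid = {"M1": 0, "J2": 1, "R3": 1}   # one possible split in between

for name, assignment in [("offline", offline), ("smode", smode), ("hybrid", hybrid)]:
    print(name, edge_medium(edges, assignment))
```

In this model the scheduling mode is fully determined by the bubble assignment, which is why a single framework can express all three cases.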

Bubble Execution provides a finer-grained and more general scheduling and execution method that obtains a better tradeoff between job performance and resource utilization through flexible and adaptive sub-graph (Bubble) cutting. After analyzing information such as input data volume, operator characteristics, and job scale, the Bubble execution mode of DAG can split an offline job into multiple Bubbles and make full use of direct network/memory connections and pre-warmed compute nodes within each Bubble to improve performance. Under this splitting scheme, all compute nodes of a DAG may be cut into a single Bubble, nodes may be cut into different Bubbles according to their position in the DAG, and nodes that are not cut into any Bubble still run in the traditional offline mode. This highly flexible hybrid mode lets a job adapt to the characteristics of a wide variety of online jobs, which is of great significance in production:

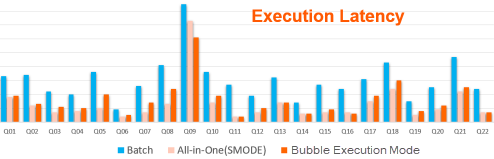

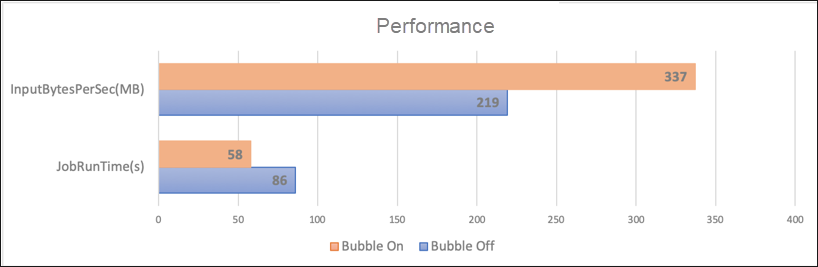

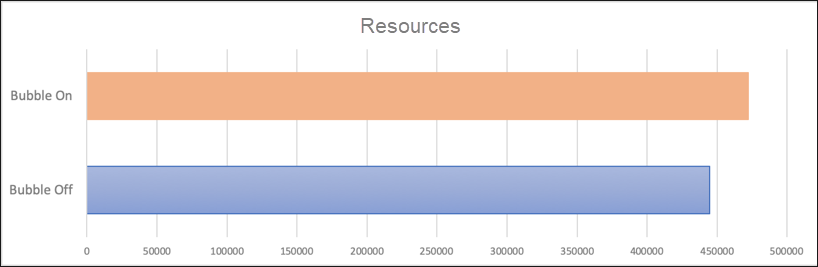

We can evaluate the effect of Bubble execution mode with the standard TPCH-1TB benchmark. With the upper-layer computing engine (MaxCompute optimizer, runtime, etc.) unchanged and the Bubble size limit set to 500 (the specific Bubble segmentation rules are described below), we compare the Bubble execution mode with the standard offline mode and the quasi-real-time mode in terms of performance (latency) and resource consumption (cpu * time):

Fig. 6a: Performance (Latency) Comparison: Bubble Mode vs. Batch Mode vs. Integrated Scheduling Quasi-Real-Time (SMODE) Mode

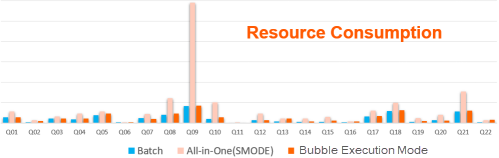

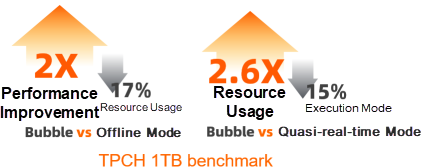

In terms of running time, Bubble mode is far better than offline mode (an overall 2X performance improvement), and its execution performance is not significantly lower than that of the quasi-real-time integrated scheduling mode. For some queries whose data can be processed in a pipeline (such as Q5 and Q8), quasi-real-time jobs still have a certain advantage. However, the execution time advantage of SMODE jobs is not free. If resource consumption is also considered, the following figure shows that the performance improvement of quasi-real-time jobs comes with resource consumption far greater than that of Bubble mode. Bubble mode performs far better than offline mode, while its overall resource consumption is similar.

Fig. 6b: Comparison of Resource Consumption (cpu * time): Bubble Mode vs. Offline (Batch) Mode vs. Integrated Scheduling Quasi-Real-Time (SMODE) Mode

Taken together, Bubble Execution can combine the advantages of batch mode and quasi-real-time mode:

Fig. 6c: Overall Comparison of Bubble Mode and Offline/Quasi-Real-Time Mode

It is worth noting that in the TPCH benchmark comparison above, we simplified the Bubble segmentation condition: the only limit was an overall Bubble size of 500, without fully considering conditions such as barriers. If the segmentation is optimized further when splitting bubbles (for example, by trying to keep nodes whose data can be effectively pipelined inside the same bubble), the execution performance and resource utilization of the job can be improved further. This is something we pay attention to as the feature is rolled out in the production system. For more information, please see Section 3.

After understanding the overall design idea and architecture of Bubble execution mode, the next step is to talk about the implementation details of the specific Bubble mode and the specific work required to push this new hybrid execution mode online.

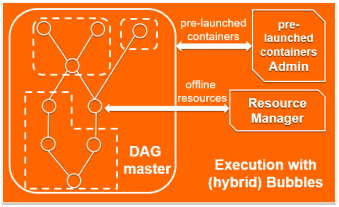

Jobs that use Bubble Execution (hereinafter referred to as Bubble jobs), like traditional offline jobs, use a DAG master (aka Application Master, AM) to manage the lifecycle of the entire DAG. The AM is responsible for splitting the DAG into bubbles reasonably and for the corresponding resource application and scheduling. In general, compute nodes inside a Bubble are accelerated, including the use of pre-launched compute nodes and data transmission that is pipelined through direct memory/network transfer. Compute nodes that are not in any bubble are executed in the classic offline mode, and data on edges that are not inside a bubble (including edges that cross a bubble boundary) is transmitted through disk.

Fig. 7: Hybrid Bubble Execution Mode

How a job is split into bubbles determines its execution time and resource utilization. The split needs to take into account the concurrency scale of each compute node, the attributes of the operators inside the node, and other information. Once the bubbles are split, Bubble execution also involves how node execution is combined with the pipeline/barrier shuffle of data. Each is described separately below.

The core idea of Bubble Execution is to split an offline job into multiple Bubbles for execution. Several factors need to be considered comprehensively to cut out the bubble that is conducive to the overall efficient operation of the job:

Among the factors above, the barrier attribute of an operator is determined by the upper-layer computing engine (e.g., the MaxCompute optimizer). Generally speaking, operators that rely on global sort operations (such as MergeJoin and SortedAggregate) are considered to cause data barriers, while hash-based operators are more pipeline-friendly. For the number of compute nodes allowed within a single Bubble, the default upper limit is 500, chosen based on our analysis of online quasi-real-time jobs and on grayscale experiments with Bubble jobs. This is a reasonable value in most scenarios: a bubble of this size can obtain all of its resources relatively quickly, and because the amount of data processed is positively correlated with the degree of parallelism (DoP), a bubble of this scale generally does not exceed memory limits. These parameters can be fine-tuned at the job level through configuration, and the Bubble execution framework will also provide the ability to adjust them dynamically while a job is running.

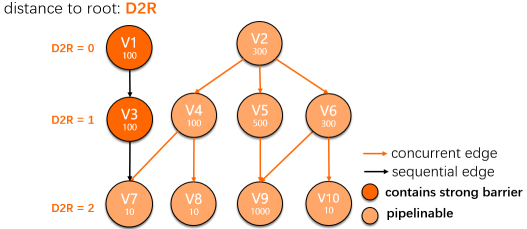

In the DAG system, one of the physical attributes of an edge is whether the upstream and downstream nodes it connects have a running dependency. In the traditional offline mode, upstream and downstream nodes run one after another, which corresponds to the sequential property; we call such an edge a sequential edge. Upstream and downstream nodes inside a bubble are scheduled and run at the same time; we call the edge connecting them a concurrent edge. Note: In the bubble scenario, the concurrent/sequential property coincides with the data transfer method (direct network/memory transmission vs. writing data to disk), but the two are still separate physical attributes. (For example, a concurrent edge can also transfer data through disk when necessary.)

Based on this layered abstraction, the Bubble segmentation algorithm is essentially a process of aggregating the nodes of the DAG and restoring every concurrent edge that does not meet the bubble admission conditions to a sequential edge. In the end, each subgraph connected by concurrent edges is a bubble. Here we show how the Bubble cutting algorithm works with a practical example. Assume the DAG shown in the following figure. Each circle represents a computing vertex, and the number in the circle is the actual compute node concurrency of that vertex. V1 and V3 are labeled as barrier vertices at job submission because they contain barrier operators. The lines between circles are the edges connecting upstream and downstream vertices. An orange line represents an (initial) concurrent edge, and a black line represents a sequential edge. In the initial state, the sequential edges are determined by the rule that all output edges of a barrier vertex are sequential edges. All other edges are initialized as concurrent edges by default.

Fig. 8: Example DAG (Initial Status)

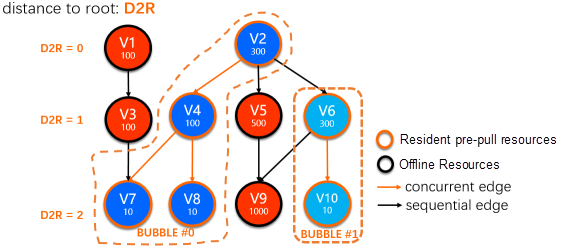
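The initialization rule (output edges of barrier vertices are sequential, everything else starts as concurrent) is simple enough to write down directly. The sketch below is illustrative only: `initial_edge_kinds` is a hypothetical helper, and the four-vertex DAG is made up for the example rather than taken from Fig. 8.

```python
def initial_edge_kinds(edges, barrier_vertices):
    """Label every edge of the DAG before any bubble is formed."""
    return {
        (src, dst): "sequential" if src in barrier_vertices else "concurrent"
        for src, dst in edges
    }

# Hypothetical four-vertex DAG (not the one in Fig. 8); V1 is a barrier vertex.
edges = [("V1", "V2"), ("V1", "V3"), ("V2", "V4"), ("V3", "V4")]
kinds = initial_edge_kinds(edges, barrier_vertices={"V1"})

for edge, kind in kinds.items():
    print(edge, kind)
```

Note that only the outputs of a barrier vertex are forced to be sequential; its input edges still start out as concurrent.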

Based on this initial DAG, according to the overall principles described above and some implementation details described at the end of this chapter, the initial state described in the preceding figure can go through multiple rounds of algorithm iteration and finally produce the following Bubble segmentation results. Two Bubbles were produced in this result: Bubble#0 [V2, V4, V7, V8] and Bubble#1 [V6, V10], while other nodes are judged to run in offline mode.

Fig. 9: Example DAG Graph Bubble Split Results

In the segmentation process shown in the figure above, the vertices are traversed from the bottom up, and the following principles are followed:

If the current vertex cannot be added to Bubble, restore its input edge to the sequential edge (for example, V9 in DAG).

If the current vertex can be added to the bubble, the breadth-first traversal algorithm is executed to aggregate to generate the bubble. First, retrieve the vertex of the input edge connection and then retrieve the vertex of the output edge connection. For vertices that cannot be connected, restore the edge to a sequential edge (for example, when traversing the output vertex V5 of V2 in the DAG graph, edge restore will be triggered because the total task count exceeds 500.)
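The two principles above can be sketched as a small greedy routine. This is a simplified reading of the description, not the production algorithm: it only enforces the total task count limit, it deliberately leaves out the circular-dependency check and the other admission conditions, and the function name `split_bubbles` and the example DAG are hypothetical.

```python
from collections import deque

def split_bubbles(parallelism, edges, barriers=frozenset(), limit=500):
    """parallelism maps vertex -> task count, with keys in topological order."""
    # Output edges of barrier vertices start as sequential, all others as concurrent.
    kind = {(u, v): "sequential" if u in barriers else "concurrent" for u, v in edges}
    assigned = set()
    bubbles = []

    for seed in reversed(list(parallelism)):      # bottom-up traversal
        if seed in assigned:
            continue
        if parallelism[seed] > limit:
            # The vertex cannot join any bubble: restore its input edges.
            for u, v in edges:
                if v == seed:
                    kind[(u, v)] = "sequential"
            continue

        members, total = [seed], parallelism[seed]
        assigned.add(seed)
        queue = deque([seed])
        while queue:                              # breadth-first aggregation
            cur = queue.popleft()
            inputs = [e for e in edges if e[1] == cur]
            outputs = [e for e in edges if e[0] == cur]
            for edge in inputs + outputs:         # input edges first, then output edges
                if kind[edge] != "concurrent":
                    continue
                other = edge[0] if edge[1] == cur else edge[1]
                if other in members:
                    continue
                if other in assigned or total + parallelism[other] > limit:
                    kind[edge] = "sequential"     # edge restore
                    continue
                members.append(other)
                assigned.add(other)
                total += parallelism[other]
                queue.append(other)
        if len(members) > 1:                      # a lone vertex simply runs offline
            bubbles.append(sorted(members))
    return bubbles, kind

# Hypothetical linear DAG A -> B -> C -> D with made-up task counts.
parallelism = {"A": 100, "B": 300, "C": 200, "D": 50}
edges = [("A", "B"), ("B", "C"), ("C", "D")]
bubbles, kind = split_bubbles(parallelism, edges)
print(bubbles)
print(kind)
```

In this example, D first absorbs C (250 tasks in total), adding B would exceed 500, so the edge B -> C is restored to sequential and A and B form a second bubble.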

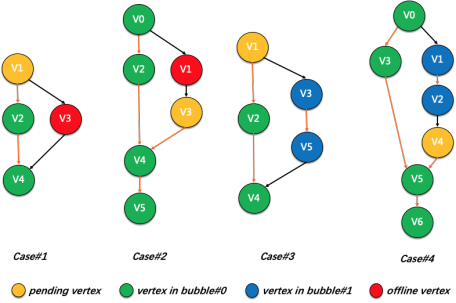

A vertex can only be added to a Bubble if the following conditions are met:

The vertex and the current bubble do not have circular dependencies, namely:

Note: Upstream/downstream here refers not only to the direct predecessors/successors of the current vertex but also to the indirect ones.

Fig. 10: Several Scenarios Where the Bubble Process May Have Circular Dependencies
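One way to read the circular-dependency condition is that, once the candidate vertex is merged into the bubble, no path may leave the merged set through an outside vertex and come back in. The sketch below checks exactly that, using indirect as well as direct successors. It is an assumption about how such a check could look, not the production rule: `creates_cycle` is a hypothetical helper, and it ignores the interaction with other, already formed bubbles.

```python
from collections import defaultdict

def creates_cycle(edges, bubble, candidate):
    """True if merging candidate into bubble lets a path exit and re-enter the bubble."""
    merged = set(bubble) | {candidate}
    successors = defaultdict(list)
    for src, dst in edges:
        successors[src].append(dst)

    # Start from the outside vertices that are directly downstream of the merged set.
    stack = [dst for src in merged for dst in successors[src] if dst not in merged]
    seen = set()
    while stack:
        vertex = stack.pop()
        if vertex in seen:
            continue
        seen.add(vertex)
        for nxt in successors[vertex]:
            if nxt in merged:
                return True  # the path left the bubble and came back
            stack.append(nxt)
    return False

# Hypothetical DAG: A -> B, A -> C, C -> B.
edges = [("A", "B"), ("A", "C"), ("C", "B")]
print(creates_cycle(edges, {"A"}, "B"))  # the bubble {A, B} would depend on C, which depends on A
print(creates_cycle(edges, {"A"}, "C"))  # the bubble {A, C} only feeds B
```

If A and B were gang-scheduled together while C ran offline between them, the bubble would both produce input for C and wait for output from C, which is the kind of deadlock the condition rules out.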

Bubble segmentation in production also takes into account information such as actual resources and expected running time: for example, whether the planned memory of a compute node exceeds a certain value, whether the compute node contains UDF operators, and whether the execution time of the compute node estimated from historical information (HBO) is too long. These details are not covered here.

By default, the compute nodes inside a Bubble come from the resident pre-warmed resource pool to accelerate computing, the same as in the quasi-real-time execution framework. At the same time, this is pluggable: if necessary, Bubble compute nodes can apply for resources from the Resource Manager on the spot (switchable through configuration).

In terms of scheduling timing, a Bubble internal node scheduling strategy is related to its corresponding input edge characteristics and can be divided into the following scenarios:

For example, Bubble#1 in Fig. 7 has only one external dependency, which is a sequential edge. When V2 is completed, V6 and V10 (connected through a concurrent edge) are scheduled together, which runs the entire Bubble#1.
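The example can be expressed as a readiness check: a bubble whose only external inputs are sequential edges becomes schedulable once every upstream vertex on those edges has finished. The sketch below covers only this one scenario; `bubble_ready` is a hypothetical helper, and the two edges are the ones implied by the example.

```python
def bubble_ready(bubble, edges, finished):
    """A bubble can be scheduled once all of its external upstream vertices are done."""
    external_upstream = {src for src, dst in edges if dst in bubble and src not in bubble}
    return external_upstream <= set(finished)

# Edges implied by the example: V2 -> V6 (sequential, external), V6 -> V10 (concurrent, internal).
edges = [("V2", "V6"), ("V6", "V10")]
bubble_1 = {"V6", "V10"}

print(bubble_ready(bubble_1, edges, finished=set()))    # V2 is still running
print(bubble_ready(bubble_1, edges, finished={"V2"}))   # V2 is done, so V6 and V10 start together
```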

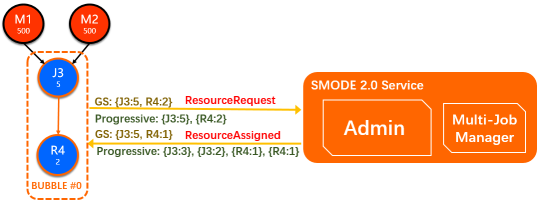

After a Bubble is triggered for scheduling, it applies for resources directly from the SMODE Admin. Integrated Gang-Scheduling (GS) is the default resource application mode: the entire Bubble builds one request and sends it to the Admin. When the Admin has enough resources to satisfy the request, it sends the scheduling result, which contains the pre-launched worker information, to the AM of the bubble job.

Fig. 11: Resource Interaction between Bubble and Admin

To support scenarios with tight resources and dynamic adjustment within a Bubble, Bubble also supports a Progressive resource application mode. This mode allows each vertex in a Bubble to apply for resources and be scheduled independently. For such a request, the Admin sends results to the AM whenever additional resources are scheduled, until the request of the corresponding vertex is fully satisfied. The specific uses of this mode are not expanded on here.
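The difference between the two application modes can be illustrated with a toy allocator. This is not the Admin protocol: `gang_allocate`, `progressive_allocate`, and the worker counts are hypothetical, and the sketch only contrasts all-or-nothing grants with incremental ones.

```python
def gang_allocate(free_workers, request):
    """Gang-Scheduling: grant the whole bubble request at once, or nothing."""
    if sum(request.values()) > free_workers:
        return None  # the bubble keeps waiting
    return dict(request)

def progressive_allocate(free_workers, request):
    """Progressive: grant each vertex whatever is available right now."""
    granted = {}
    for vertex, needed in request.items():
        granted[vertex] = min(needed, free_workers)
        free_workers -= granted[vertex]
    return granted

# Hypothetical bubble request with 350 free workers in the pool.
request = {"V6": 300, "V10": 100}
print(gang_allocate(350, request))         # not enough for the whole bubble
print(progressive_allocate(350, request))  # V6 is satisfied, V10 partially
```

In the progressive case the remaining workers for V10 would arrive in later increments until its request is fully satisfied.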

After the quasi-real-time execution framework is upgraded, the Resource Management (Admin) and multi-DAG job management logic (MultiJobManager) in the SMODE service have been decoupled. Therefore, the resource application logic in the bubble mode only needs to interact with the Admin without any impact on the DAG execution management logic of normal quasi-real-time jobs. In addition, each resident compute node in the resource pool managed by the Admin runs through the Agent + Multi-Labor mode to support the online grayscale hot upgrade capability. When scheduling specific resources, the worker version is also matched according to the AM version, and the labor that meets the conditions is scheduled for Bubble jobs.

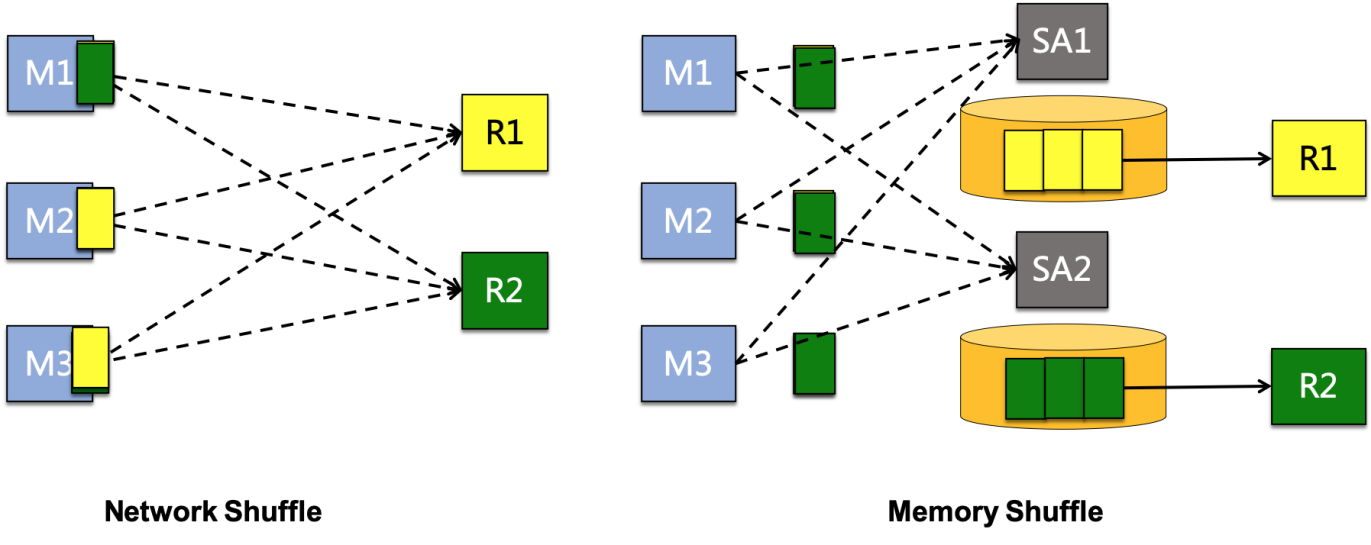

For a sequential edge that crosses the Bubble boundary, data is transmitted the same way as in ordinary offline jobs: by writing it to disk. Here, we mainly discuss data transmission within a Bubble. According to the bubble segmentation principles described earlier, data inside a bubble usually pipelines well and the data volume is not large. Therefore, data on the concurrent edges of a bubble is shuffled through direct network/memory transmission, the fastest method.

The network shuffle mode is the same as that of classic quasi-real-time jobs. TCP links are established between upstream and downstream nodes, and data is sent over direct network connections. This push-based method requires upstream and downstream nodes to be running at the same time. Because this requirement propagates along the chain of dependencies, the network push mode depends strongly on Gang-Scheduling. It also limits the flexibility of a bubble in fault tolerance, long-tail avoidance, and other areas.

The memory shuffle mode was explored in Bubble mode to solve the problems above. In this mode, the upstream node writes data directly to the memory of the cluster's ShuffleAgent (SA), and the downstream node reads data from the SA. Fault tolerance and scaling of the memory shuffle mode, including the ability to asynchronously spill some data to disk for higher availability when memory is insufficient, are provided independently by ShuffleService. This mode supports both the Gang-Scheduling and Progressive scheduling modes and is highly extensible. For example, more data can be read locally through SA locality-aware scheduling, and a more granular fault-tolerance mechanism can be implemented through lineage-based instance-level retry.

Fig. 12: Network Shuffle vs. Memory Shuffle
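The key property of memory shuffle is that the writer and the reader are decoupled by an intermediate agent, so the downstream node does not have to be running when the upstream node writes. The toy class below illustrates that decoupling and the spill-when-full behavior. It is purely hypothetical: `ShuffleAgentSketch` is not the ShuffleService API, the spill is a plain dictionary standing in for an asynchronous disk write, and ordering across the two tiers is not preserved.

```python
class ShuffleAgentSketch:
    """Toy stand-in for a shuffle agent: a memory buffer with a spill tier."""

    def __init__(self, memory_budget):
        self.memory_budget = memory_budget
        self.used = 0
        self.in_memory = {}  # partition -> list of payloads held in memory
        self.spilled = {}    # partition -> list of payloads that did not fit

    def write(self, partition, payload):
        """Called by an upstream task; no downstream task needs to be running."""
        if self.used + len(payload) <= self.memory_budget:
            self.in_memory.setdefault(partition, []).append(payload)
            self.used += len(payload)
        else:
            # Stand-in for asynchronously dropping data to disk.
            self.spilled.setdefault(partition, []).append(payload)

    def read(self, partition):
        """Called by a downstream task, possibly long after the write."""
        return self.in_memory.get(partition, []) + self.spilled.get(partition, [])

agent = ShuffleAgentSketch(memory_budget=8)
agent.write(0, b"abcd")
agent.write(0, b"efgh")
agent.write(0, b"ijkl")  # exceeds the budget, so it goes to the spill tier
print(agent.read(0))
```

Because the data lives in the agent rather than in a TCP stream between two live tasks, both Gang-Scheduling and Progressive scheduling can be layered on top of it.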

Given the many extensibility advantages of memory shuffle, it is the default shuffle mode for online Bubble jobs. Direct network transmission remains available as an alternative that can be enabled through configuration for very small jobs where the cost of fault tolerance is low.

As a new hybrid execution mode, Bubble execution explores various fine-grained balances between offline jobs and quasi-real-time jobs with integrated scheduling. In complex online clusters, various failures are inevitable during operation. In the new mode of bubble, its strategy for failure handling is also more diversified to minimize the impact of failure and achieve the best balance between reliability and job performance.

We have designed targeted fault-tolerance strategies, ranging from fine-grained to coarse-grained, for the different exceptions that may occur during execution, such as failure to obtain resources from the Admin, task execution failure inside a bubble, bubble renew after repeated execution failures in a bubble, and AM failover during execution.

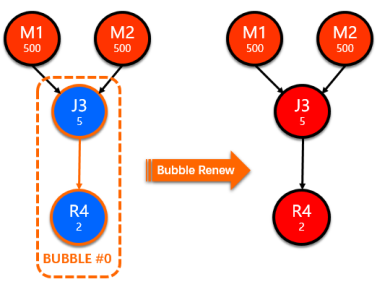

When a compute node inside a Bubble fails, the default retry policy is to rerun the bubble. That is, when the current execution (attempt) of a node in the bubble fails, the entire bubble is rerun immediately: the attempts of the same version that are still executing are canceled, and once their resources are returned, the bubble is triggered to execute again. This way, all compute nodes in the bubble have the same (retry) attempt version.

There are many scenarios in which bubble rerun is triggered. The most common ones are listed below:

taskAttempt Failed: A failed task attempt triggers a bubble rerun.

Input Read Error: A common error in which a running compute node cannot read its input data. For a bubble, there are three types of this error:

InputReadError in Bubble: Since the shuffle data source is also in the bubble, the corresponding upstream task is rerun as part of the bubble rerun. No targeted handling is needed.

InputReadError at the Bubble Boundary: The shuffle data source is generated by a task in an upstream offline vertex (or another bubble). The InputReadError triggers a rerun of the upstream task. The rerun of the current bubble is delayed, and scheduling is triggered only after the new version of the upstream lineage data is ready.

InputReadError Downstream of Bubble: If an InputReadError occurs on a task downstream of the bubble, the event triggers a rerun of a task in the bubble. In this case, the entire bubble is rerun because the memory shuffle data on which that task depends has been released.

When Admin resources are tight, a Bubble's resource application to the Admin may time out while waiting. The application can also fail in some abnormal situations (for example, when the online job service is restarting at the moment the bubble applies for resources). In these cases, all vertices in the bubble fall back to the pure offline vertex state for execution. In addition, if the number of reruns exceeds the upper limit, bubble renew is triggered. After bubble renew, all internal edges are restored to sequential edges, all vertices are reinitialized, and the internal state transitions of these vertices are triggered again in a purely offline manner by replaying all internal scheduling state machine events. This ensures that all vertices in the current bubble are executed in the classic offline mode after the rollback, so that the job can still terminate normally.

Fig. 13: Bubble Renew
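The escalation from rerun to renew can be summarized as a small decision function plus an edge rewrite. The sketch below is illustrative only: `next_action` and `renew` are hypothetical helpers, and the rerun limit of 3 is an assumed value, since the actual upper limit is not stated here.

```python
def next_action(reruns_so_far, resources_granted=True, max_reruns=3):
    """Decide how to react to a failure inside a bubble (max_reruns is an assumed value)."""
    if not resources_granted:
        return "fallback_offline"  # resource application timed out or failed
    if reruns_so_far >= max_reruns:
        return "renew"             # too many reruns: give up on bubble execution
    return "rerun"                 # cancel running attempts and rerun the whole bubble

def renew(bubble, edge_kinds):
    """Bubble renew: every edge inside the bubble becomes a sequential edge."""
    return {
        (src, dst): "sequential" if src in bubble and dst in bubble else kind
        for (src, dst), kind in edge_kinds.items()
    }

edge_kinds = {("V2", "V6"): "sequential", ("V6", "V10"): "concurrent"}
print(next_action(reruns_so_far=0))
print(next_action(reruns_so_far=3))
print(renew({"V6", "V10"}, edge_kinds))
```

After `renew`, no concurrent edge remains inside the former bubble, so its vertices are scheduled one after another like ordinary offline vertices.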

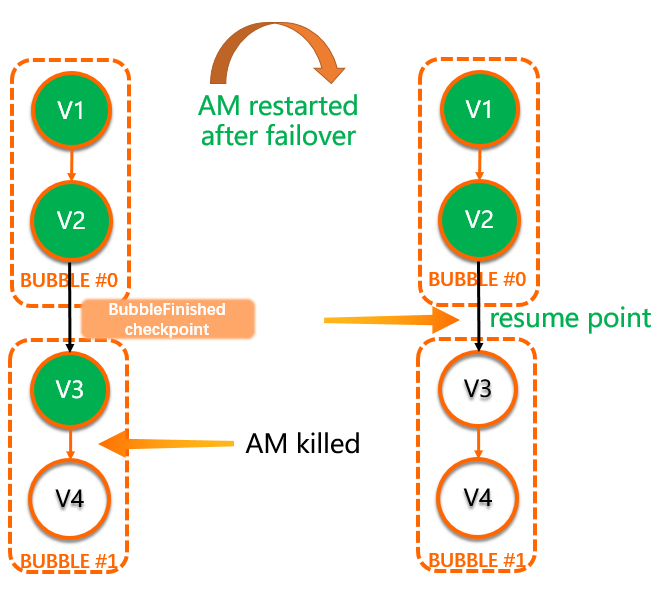

For normal offline jobs, the DAG framework persists the internal scheduling events of each compute node to enable incremental failover at the compute node level. For a bubble job, however, if an AM failover and restart occurs while a bubble is executing, replaying the stored events may restore the bubble to an intermediate running state. Since the internal shuffle data may have been stored in memory and lost, the unfinished compute nodes that are restored to this intermediate state fail immediately because they cannot read the upstream shuffle data.

This is essentially because, in Gang-Scheduled Bubble scenarios, the bubble as a whole is the minimum granularity of failover. Once an AM failover occurs, the recovery granularity should therefore also be the bubble. All scheduling events related to a bubble are treated as a unit, and a bubbleStartedEvent and a bubbleFinishedEvent are flushed when the bubble starts and ends, respectively. When a bubble is restored after failover, all of its events are considered together: only a bubbleFinishedEvent at the end indicates that the bubble has completely finished. Otherwise, the entire bubble is rerun.

In the following example, the DAG contains two Bubbles (Bubble#0: {V1, V2} and Bubble#1: {V3, V4}). When the AM restarts, Bubble#0 has terminated and its bubbleFinishedEvent has been written out. V3 in Bubble#1 has also terminated, but V4 is still running, so Bubble#1 as a whole has not reached its final state. After the AM recovers, V1 and V2 are restored to the Terminated state, and Bubble#1 starts executing again from the beginning.

Fig. 14: AM Failover with Bubbles
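The recovery rule in this example can be sketched as a replay over the persisted event log: a bubble counts as done only if its finished event is present, and otherwise every vertex in it is rerun regardless of its individual state. The event record layout and the `recover` function below are hypothetical; only the event names and the example DAG come from the text.

```python
def recover(events, bubbles):
    """Replay persisted events and decide, per vertex, what happens after AM failover."""
    finished = {e["bubble"] for e in events if e["type"] == "bubbleFinishedEvent"}
    plan = {}
    for name, vertices in bubbles.items():
        for vertex in vertices:
            # Recovery granularity is the whole bubble, not the single vertex.
            plan[vertex] = "TERMINATED" if name in finished else "RERUN"
    return plan

bubbles = {"Bubble#0": ["V1", "V2"], "Bubble#1": ["V3", "V4"]}
events = [
    {"type": "bubbleStartedEvent", "bubble": "Bubble#0"},
    {"type": "bubbleFinishedEvent", "bubble": "Bubble#0"},
    {"type": "bubbleStartedEvent", "bubble": "Bubble#1"},  # no finished event before the restart
]
print(recover(events, bubbles))
```

V3 is rerun even though it had terminated before the restart, because its in-memory shuffle output can no longer be assumed to exist.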

The Bubble mode has been fully launched in the public cloud. 34% of SQL jobs are executed in Bubble mode, and on average 176K Bubbles are executed per day.

We compared queries with the same signature with bubble execution turned off and turned on. Execution performance improved by 34%, and the amount of data processed per second increased by 54%, while overall resource consumption remained virtually unchanged.

Fig. 15: Execution Performance/Resource Consumption Comparison

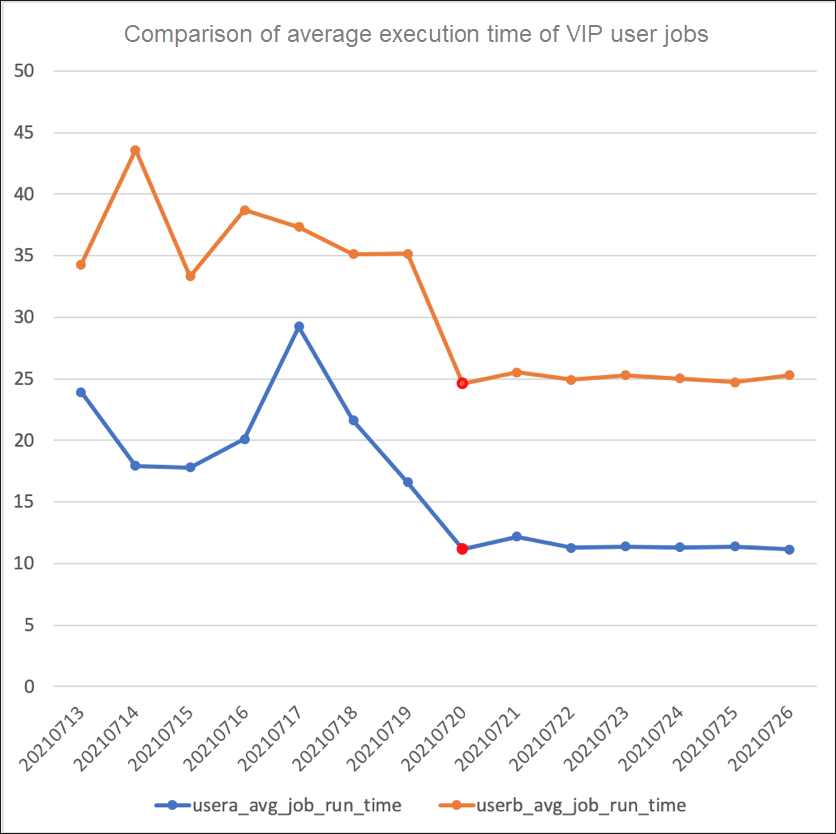

In addition to the overall comparison, we performed a targeted analysis for VIP users. After the Bubble switch was turned on for a user's Project (the red mark in the figure below is the point in time when Bubble was turned on), the average execution performance of the jobs improved significantly.

Fig. 16: Comparison of Average Execution Time after VIP Users Turn on Bubble
