By Zhang Xindong (Biexiang)

Currently, the Patch Recommendation by Empirically Clustering (PRECFIX) technology has been implemented and well received within Alibaba Group. Papers on the PRECFIX technology are also included in the International Conference on Software Engineering (ICSE).

Zhang Xindong (Biexiang), an Algorithm Engineer from the Alibaba Cloud R&D Division

Coding is an important component of DevOps. My department is mainly responsible for managing Alibaba Group's code. Behind the platform business, we have built a series of intelligent capabilities to empower frontline business teams. Building on the underlying code graph and the data capabilities of offline data warehouses, code intelligence delivers capabilities such as fault detection, code generation, code clone detection, and code security. Today, we mainly introduce our preliminary research and practice in the field of fault detection.

For decades, fault detection and patch recommendation have been a thorny problem in software engineering and one of the issues that most concern researchers and frontline developers. Here, a fault refers to neither a network vulnerability nor a system defect, but to a fault hidden in the code, which programmers call a "bug." Every developer wants intelligent fault detection technology to improve code quality and avoid errors. As the saying goes, "the biggest danger arises from the most charming situation." Naturally, this fascinating technology faces many obstacles.

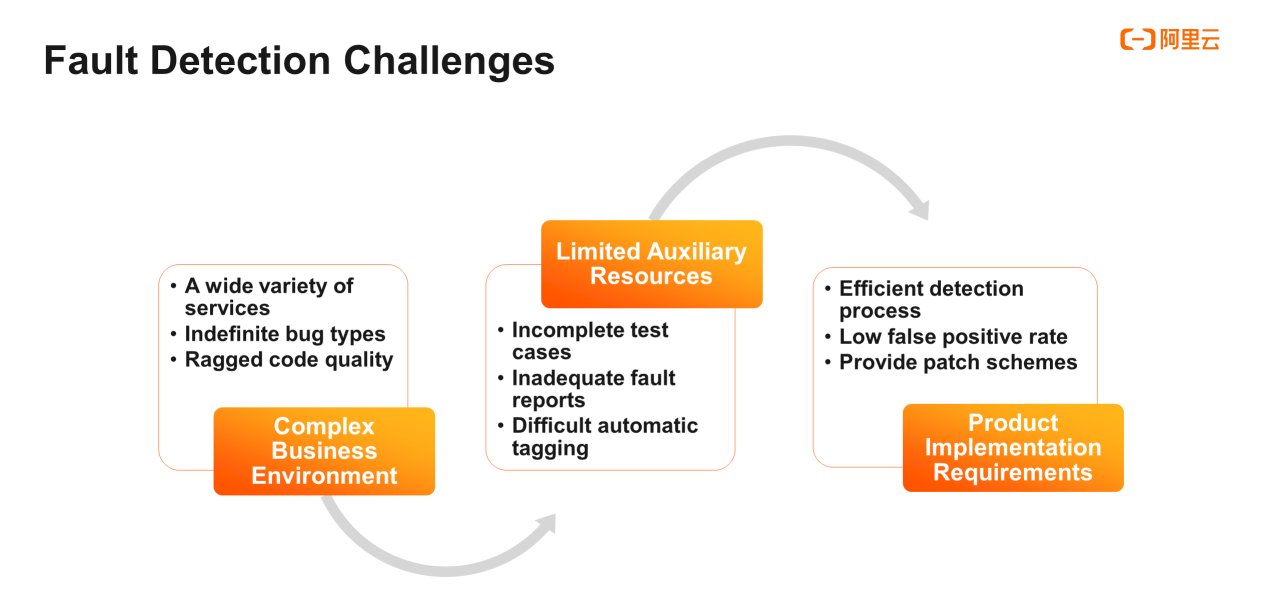

After studying some existing solutions and the characteristics of Alibaba datasets, we've summarized the challenges of implementing the fault detection technology in Alibaba products into three aspects:

Firstly, the Alibaba economy has a wide variety of services that require code for underlying system middleware, logistics, security, artificial intelligence (AI), and other fields. Bug types vary as the services change. Fault detection is difficult because the code volume keeps growing and the fault types are unpredictable.

Taking Java as an example, Defects4J is an open dataset of Java faults commonly used in academia. It contains 6 code libraries with nearly 400 manually confirmed bugs and patches. These code libraries are relatively basic and conventional, and the fault types are easy to categorize. Some researchers study particular fault types and achieve adequate detection results, but the performance is not guaranteed to carry over to other fault types.

It is difficult to define many of the fault types in Alibaba datasets. Therefore, we want a fault detection and patch recommendation approach that covers as many fault types as possible. It does not need to eliminate all faults, but it should adapt to different "diseases" and improve the immunity of the code.

Limited auxiliary resources pose the second challenge. This is also the reason why many academic achievements cannot be directly reproduced and utilized.

Test cases, fault reports, and fault tags are the three most commonly used auxiliary resources. Although most online code is tested, code quality varies and many code libraries lack test cases. We also lack enough fault reports to locate faults directly. We tried to learn fault patterns from fault tags, but automatic tagging has low accuracy and manually tagging a large dataset is not feasible.

The third and most difficult challenge is the product implementation.

Technology needs to be implemented in products, but implementation is very demanding. The main scenarios we envisioned are static fault scanning and patch recommendation in code reviews. As a product manager said, "The detection process must be efficient, with minimal false positives. Fault localization alone is not enough; a patch suggestion should be offered to users as well."

Undoubtedly, it is not wise to stand still or act blindly in terms of research and innovation. First, let me introduce some existing achievements in related fields. This section mainly describes fault detection, patch recommendation, and other related technologies.

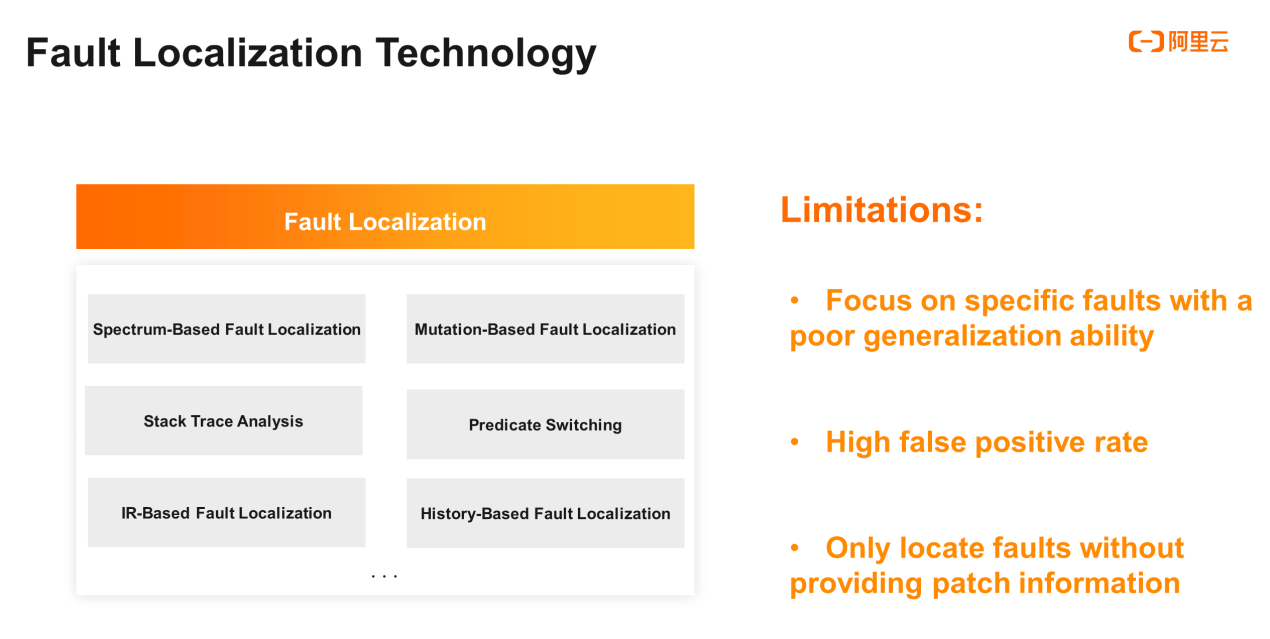

The following summary of fault localization technology is primarily based on the papers of researcher Xiong Yingfei (Associate Professor at Peking University). Major approaches include spectrum-based fault localization, mutation-based fault localization, stack trace analysis, and more.

Spectrum-based fault localization relies on test cases. Code lines executed by passing test cases receive positive scores, and code lines executed by failing test cases receive negative scores. The resulting scores form a spectrum, and the lines with the lowest scores are classified as potential fault lines.
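The scoring idea can be illustrated with a minimal sketch. It assumes the simplest possible scheme (+1 per passing test that executes a line, -1 per failing test); practical tools use more refined suspiciousness formulas. The names `rank_suspicious_lines`, `coverage`, and `outcomes` are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def rank_suspicious_lines(coverage, outcomes):
    """Rank lines from most to least suspicious.

    coverage: test name -> set of line numbers the test executed
    outcomes: test name -> True if the test passed, False if it failed
    """
    scores = defaultdict(int)
    for test, lines in coverage.items():
        delta = 1 if outcomes[test] else -1  # assumed scoring rule
        for line in lines:
            scores[line] += delta
    # Lowest score first; ties are broken by line number
    return sorted(scores.items(), key=lambda item: (item[1], item[0]))


coverage = {
    "test_a": {1, 2, 3},
    "test_b": {1, 2, 4},
    "test_c": {1, 4, 5},
}
outcomes = {"test_a": True, "test_b": False, "test_c": False}
ranking = rank_suspicious_lines(coverage, outcomes)
print(ranking[0])  # line 4 is executed only by failing tests
```

Note that the output is only a ranked list of lines, which is exactly the weakness discussed next: nothing in it says how to fix the code.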

These fault localization approaches have several limitations. The first is that they mainly target specific fault types and are more accurate on those types than on others. For example, Predicate Switching is mainly used to detect bugs in conditional statements.

The second limitation is the high rate of false positives. Taking test results on the Defects4J dataset as an example, spectrum-based fault localization has the highest accuracy among the preceding approaches, but its best hit rate is only about 37%. Some studies combine these approaches to exploit their respective strengths, yet the false positive rate still exceeds 50%.

Most importantly, the preceding fault localization approaches provide no patch information, which is fatal in practice. For example, when spectrum-based fault localization returns multiple potential fault lines without a specific fix, you are left unsure what to do.

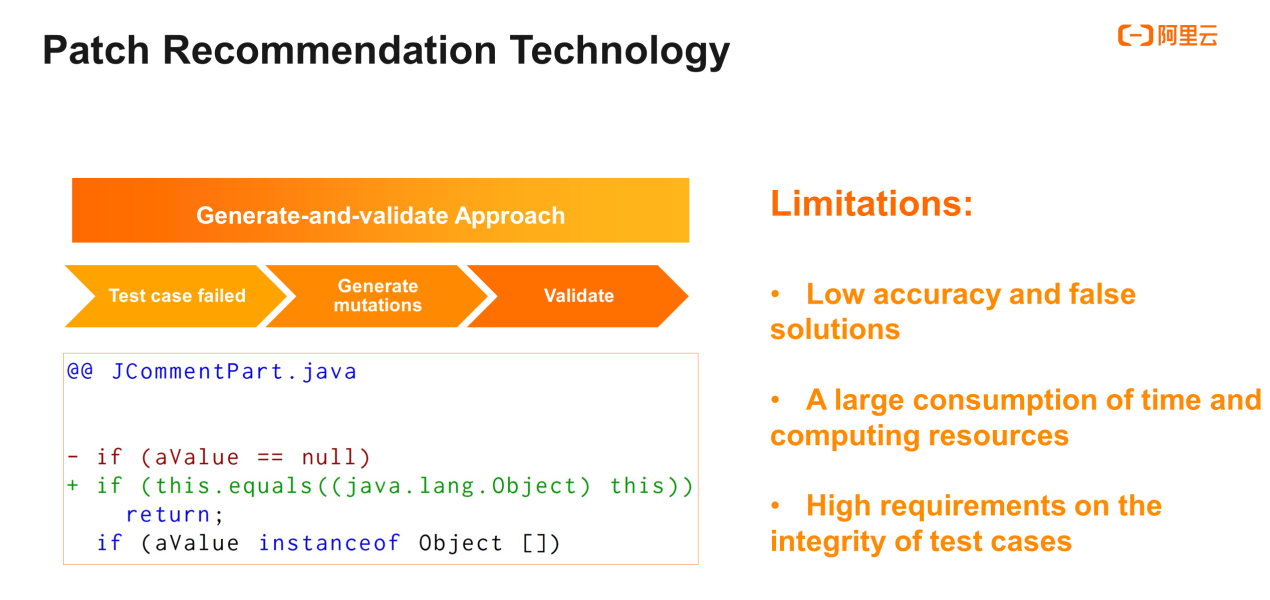

Generate-and-validate is a representative line of research on patch recommendation. The general idea is to locate the code context according to failed test cases, then repeatedly modify nodes in the abstract syntax tree, either guided by semantics or by randomly replacing code elements. Each modified result is validated against the test cases or other verifiable information until the test cases pass or the other checks succeed.

These patch generation approaches have three limitations. The first is low accuracy, most often caused by overfitting. The generated fix segments differ from the way engineers would write them, and some fixes satisfy the test cases rather than repair the real fault. The figure on the left shows an example of overfitting from a paper. The generate-and-validate approach changes the if condition into a meaningless condition that is always true, so the method returns on every run. Although these changes make the test cases pass, they do not fix the real bug.

The second limitation is heavy consumption of time and computing resources. A fix often takes hours to complete. Moreover, the approach depends on compilation and requires repeated test runs, which makes it inefficient. The third limitation is that the approach depends heavily on the completeness of the test cases: it relies on both the test coverage and the soundness of the test case design.

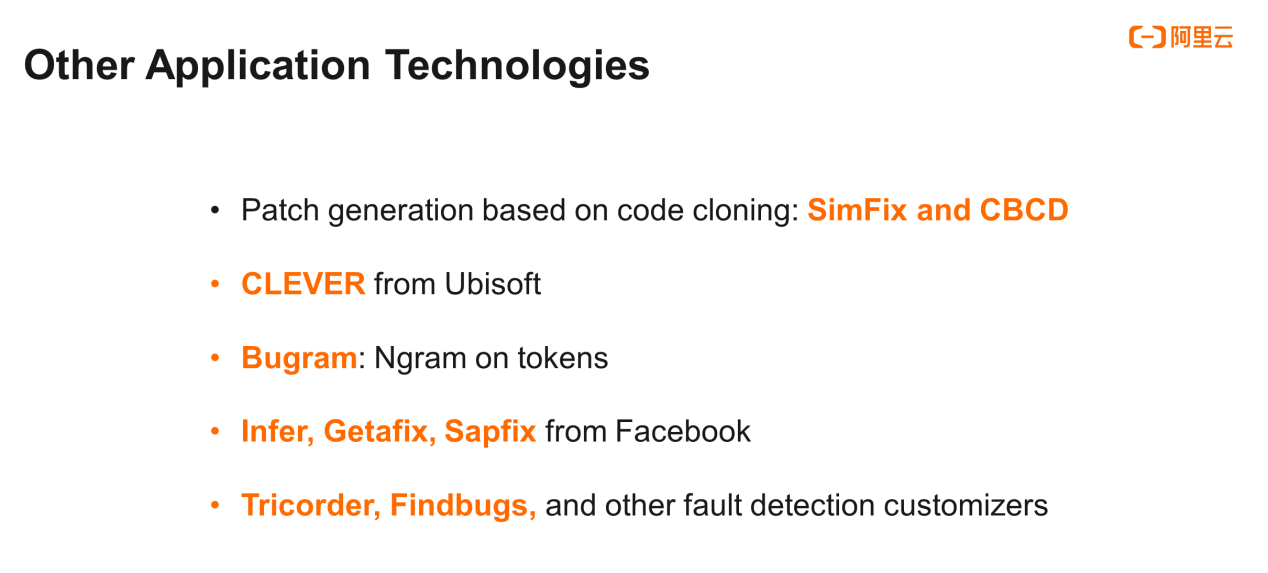

You may know about some other fault detection or patch recommendation technologies, especially from Facebook and Google. Let's take a look at them.

After investigating, we found that the existing external technologies were not able to completely solve the challenges and problems faced by Alibaba, so we put forward the PRECFIX approach.

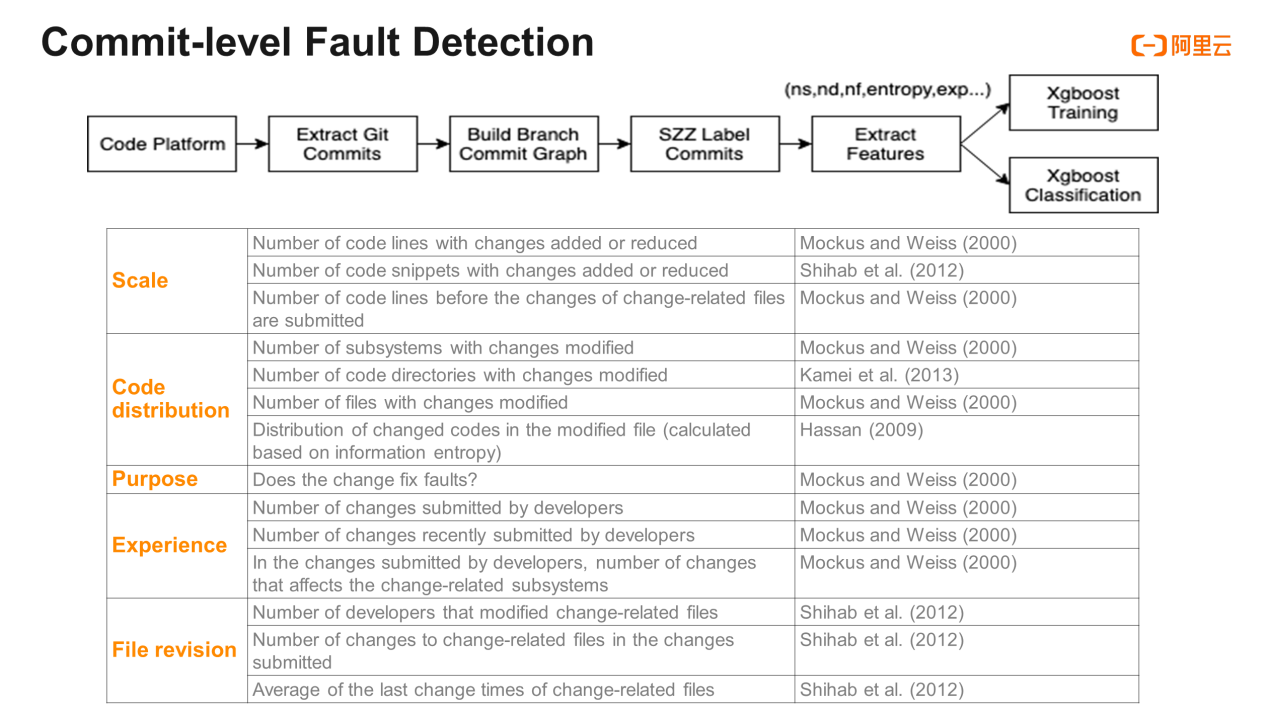

First, we reproduced a feature-based, commit-level fault detection approach on Alibaba datasets. This was mentioned when we talked about Ubisoft's Clever. Specifically, this approach builds the parent-child relationships between commits from code hosted in Git, uses an improved SZZ approach to tag the commits automatically, and extracts features from them. Finally, it trains an XGBoost or Random Forest model on the features and tags, and uses the model to detect risky commits. The 14 sub-features fall into five categories: scale, code distribution, purpose, developer experience, and file revision.

The model that uses SZZ output as tag data has an accuracy bottleneck. Although the SZZ algorithm is logical and performs better on public datasets, it has low accuracy on our code datasets. We reviewed hundreds of bug commits marked by the SZZ algorithm, and only 53% were real fixes. The noise comes from the following scenarios: business-level fixes that are unrelated to the code, changes to comments and logs, adjustments to test classes, code style optimizations, and commits whose messages contain "debug" because the code changes were made for debugging. Furthermore, only 37% of the fix-type code changes can be transferred to new code. Some code changes are real fixes, but they are too complex or tied to a specific environment or context, so they lack generality.

After carefully analyzing the tag data, we concluded that automated tags do not meet our requirements, and that tagging faults demands strong technical skills from the annotators. Manually tagging large amounts of code changes is not feasible.

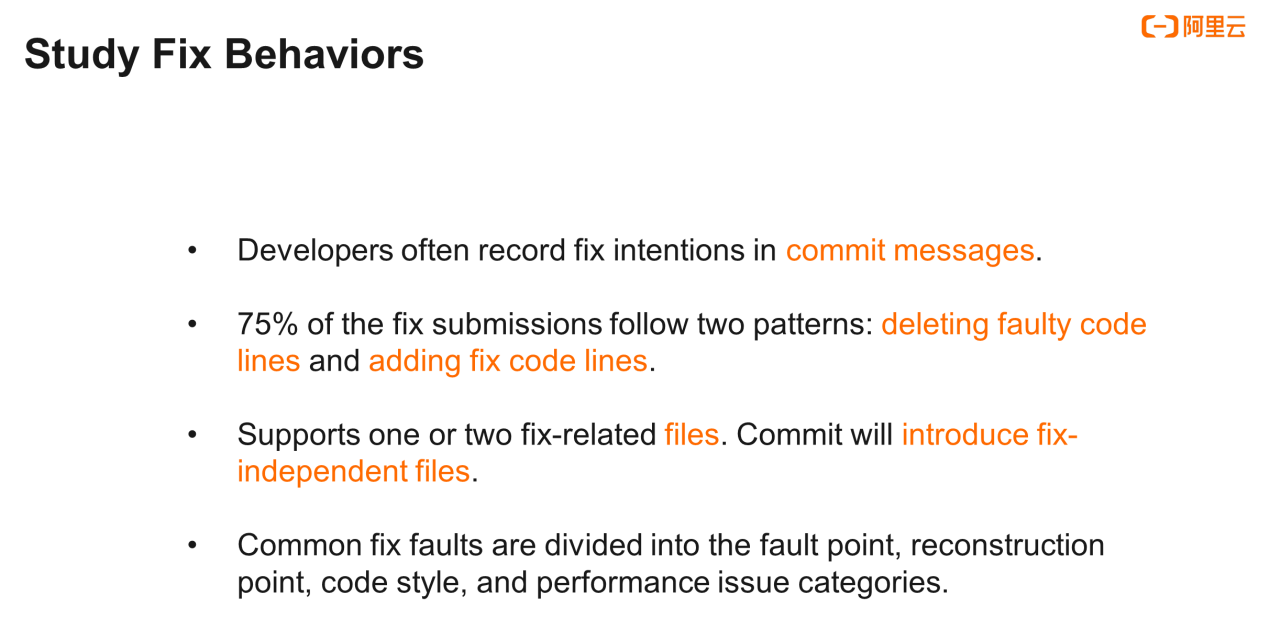

In this context, we stopped blindly looking for approaches to reproduce and instead carefully studied how developers fix faults in their daily work. As with the SZZ algorithm, we observed that a commit message usually states the fix intention, so some data can be filtered based on the commit message. When we investigated the SZZ data, we found that 75% of the fix submissions followed this pattern: some faulty code lines are deleted and some other code lines are added. For example, when you modify a parameter, the diff shows one deleted line and one added line.

We also found that a fix usually involves no more than two files, while some nonstandard commits contain a large number of files. Even if such commits include fixes, the fixes are diluted and unwanted noise is introduced.

At the same time, we also investigated the issues that draw the most attention in the code review phase, such as failure points, refactoring points, code style, and performance. The records showed that many issues recurred and were fixed repeatedly. This gave us the idea of extracting common, recurring faults from large numbers of submissions to prevent developers from making the same mistakes again.

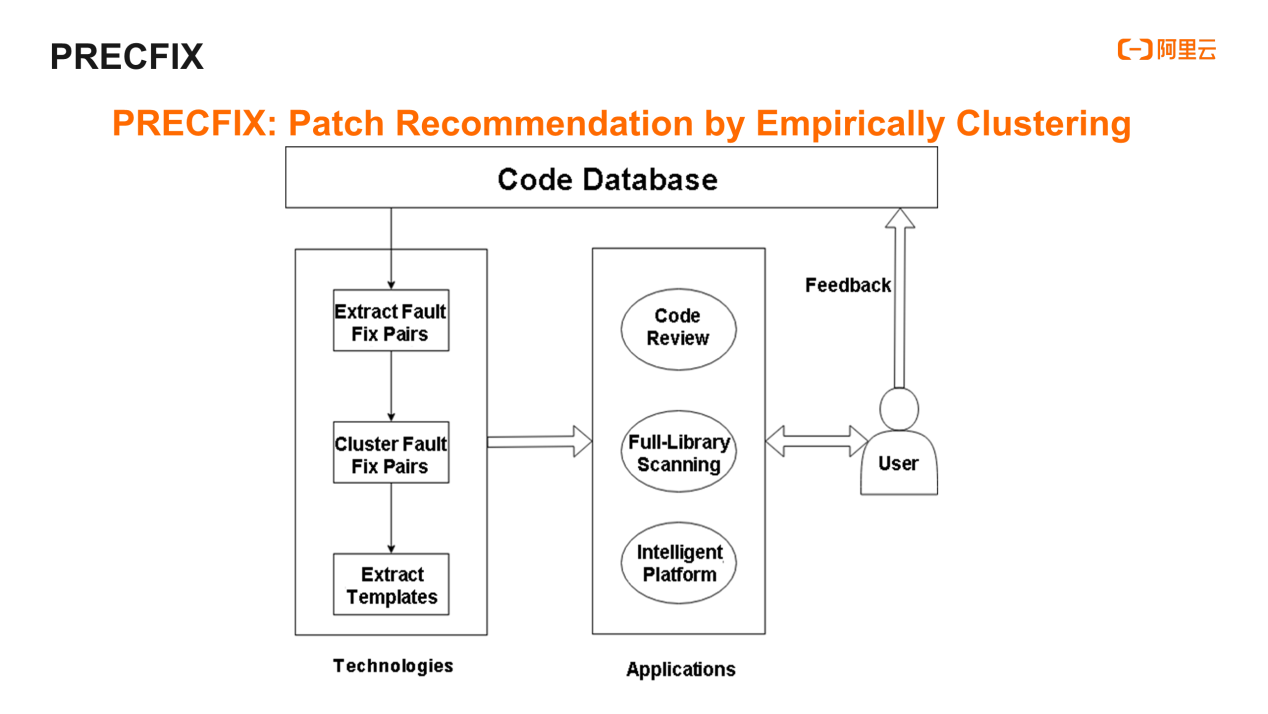

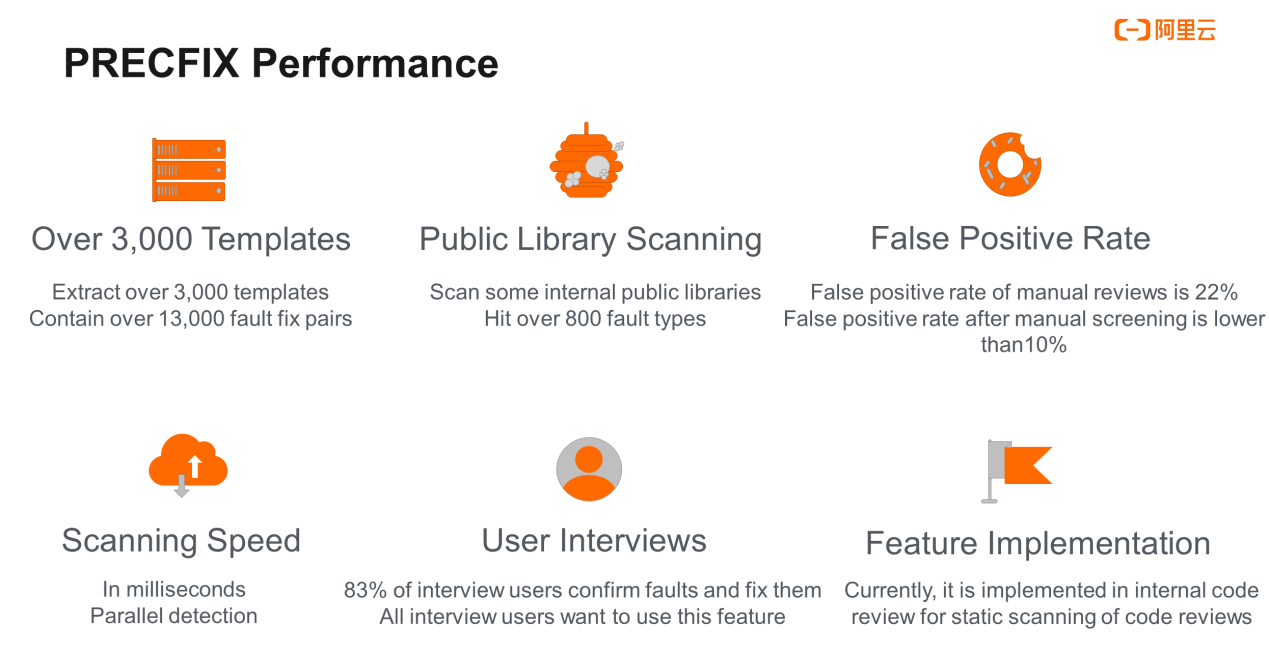

So, we proposed the PRECFIX technology, the approach described in the papers included in ICSE. It will be used in Apsara DevOps products later. The idea is direct and concise, and it has three steps. First, extract "fault fix pairs" from the code submission data. Second, cluster similar fault fix pairs. Third, extract the clustering results into templates. This fault detection and patch recommendation technology can be used for code review, offline scanning of the full code library, and similar scenarios. User feedback and manual review can further improve the quality of the recommendations.

Next, let's talk about the technical details of implementing the PRECFIX approach. The first step is to "extract fault fix pairs", but some of you may ask, "what is a fault fix pair?" and "how can we extract the fault fix pairs?"

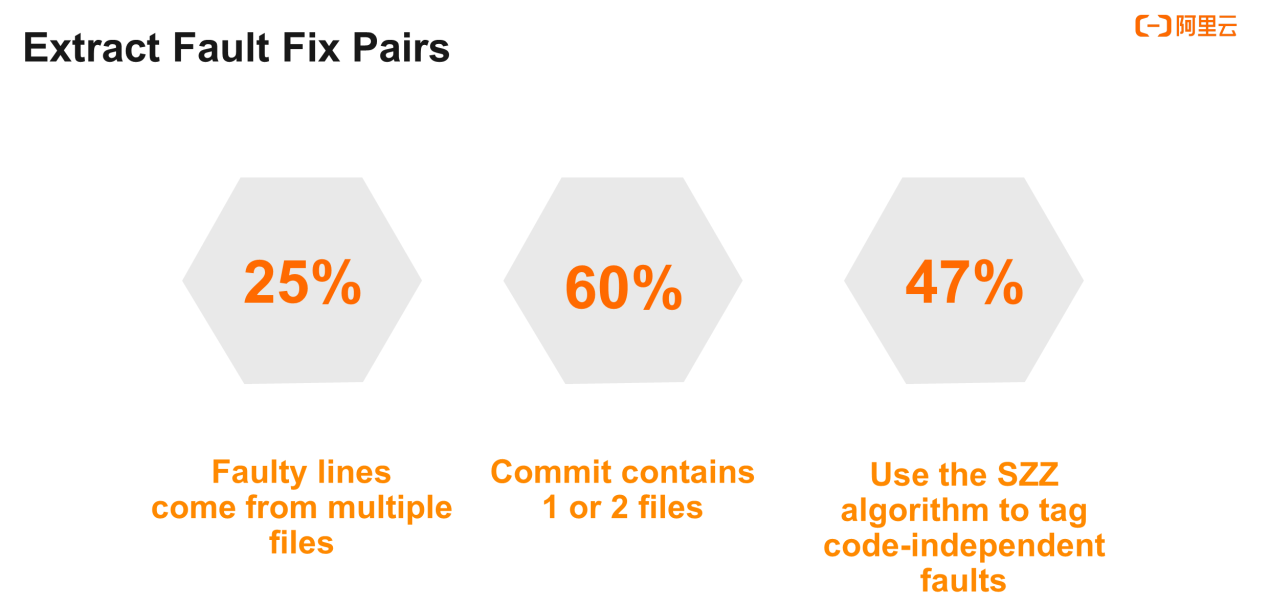

Let's start with a few figures. The SZZ algorithm uses Blame information to trace the fault lines and the commits that introduced them. We found that fault lines for 25% of files originated from multiple files. This means that Blame information may trace a single fault back to two origins. However, splitting one faulty code segment across two origins makes no sense.

Tracing fault code lines through Blame information therefore has low accuracy. The fault-related code context we want is the last version of the file before the fix-type commit. We can restore that version from the submitted file and the corresponding diff.

We found that most fixes are made at the method level. Therefore, we proposed a method-based way of extracting fault fix pairs: the diff chunks inside one method body are merged to generate a fault fix pair. Of course, fault fix pairs can also be extracted directly per diff chunk rather than per method body. Both options have pros and cons. If diff chunks are used for extraction, you can parse the diff directly without needing the source code.
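To make the chunk-level variant concrete, here is a minimal sketch that reads a unified diff and emits one (fault segment, fix segment) pair per chunk. It is an illustration under assumptions, not the PRECFIX implementation: the function name `extract_pairs` is hypothetical, each `@@` hunk is treated as one diff chunk, and only chunks that both delete and add lines are kept, following the pattern observed earlier.

```python
def extract_pairs(diff_text):
    """Return (fault, fix) string pairs, one per hunk of a unified diff."""
    pairs = []
    fault, fix = [], []
    in_hunk = False

    def flush():
        # Keep only chunks that both delete and add lines
        if fault and fix:
            pairs.append(("\n".join(fault), "\n".join(fix)))
        fault.clear()
        fix.clear()

    for line in diff_text.splitlines():
        if line.startswith("@@"):
            flush()
            in_hunk = True
        elif line.startswith("diff --git"):
            flush()
            in_hunk = False  # the ---/+++ file headers that follow are skipped
        elif in_hunk and line.startswith("-"):
            fault.append(line[1:])
        elif in_hunk and line.startswith("+"):
            fix.append(line[1:])
    flush()
    return pairs


example_diff = "\n".join([
    "diff --git a/Foo.java b/Foo.java",
    "--- a/Foo.java",
    "+++ b/Foo.java",
    "@@ -10,3 +10,3 @@",
    " String name = user.getName();",
    "-if (name.length() > 0) {",
    "+if (name != null && name.length() > 0) {",
    "     greet(name);",
])
pairs = extract_pairs(example_diff)
print(pairs)
```

The method-level variant would additionally need the source file to find method boundaries, which is why it cannot work from the diff alone.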

In the extraction process, we need to normalize the fault segments and fix segments. For example, we remove spaces and line breaks, compare the two segments, and filter out the fault fix pairs whose two segments are identical. This way, we filter out changes that only affect code formatting.
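The normalization step can be sketched in a few lines. This is an illustrative assumption of how such a filter might look; the helper names `normalize` and `drop_format_only_pairs` are hypothetical.

```python
import re


def normalize(segment):
    """Remove all whitespace (spaces, tabs, line breaks) from a code segment."""
    return re.sub(r"\s+", "", segment)


def drop_format_only_pairs(pairs):
    """Keep only pairs whose fault and fix differ after normalization."""
    return [(fault, fix) for fault, fix in pairs
            if normalize(fault) != normalize(fix)]


candidate_pairs = [
    ("int a=1;", "int a = 1;"),             # formatting change only
    ("foo(x);", "foo(x, y);"),              # real change
    ("if (ok)\n  run();", "if (ok) run();"),  # line break removed only
]
kept = drop_format_only_pairs(candidate_pairs)
print(kept)
```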

We found that 60% of commits contained only 1 or 2 files. However, a small number of nonstandard commits contained dozens or hundreds of files. As previously mentioned, we believe a fix is usually associated with 1 or 2 files. To reduce noise, we apply a filter on the number of files per commit when extracting fault fix pairs. The threshold is a trade-off between accuracy and recall. In practice, we set the threshold to 5 to limit the loss of recall.
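A sketch of such a file-count filter is shown below. The threshold of 5 comes from the text; whether a commit with exactly 5 files is kept is an assumption here, as are the commit dictionary layout and the name `filter_commits`.

```python
MAX_FILES_PER_COMMIT = 5  # threshold from the text; inclusive bound is an assumption


def filter_commits(commits, max_files=MAX_FILES_PER_COMMIT):
    """Drop commits that touch more files than the threshold allows."""
    return [c for c in commits if len(c["files"]) <= max_files]


commits = [
    {"id": "c1", "files": ["A.java"]},
    {"id": "c2", "files": ["A.java", "B.java"]},
    {"id": "c3", "files": [f"F{i}.java" for i in range(40)]},  # nonstandard bulk commit
]
kept_ids = [c["id"] for c in filter_commits(commits)]
print(kept_ids)
```

Raising `max_files` keeps more genuine fixes at the cost of more noise, and lowering it does the opposite.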

47% of the code faults labeled by the SZZ algorithm are inaccurate. Because we follow the data collection steps of SZZ and rely on commit messages, a large amount of noise remains in the extracted fault fix pairs. We therefore cluster common faults and patches into templates. With these templates, noise and fault fixes that have no reference value can be filtered out.

After extracting the fault fix pairs, we clustered similar fault fix pairs to identify some common faults, minimizing noises and extracting fix records of common faults.

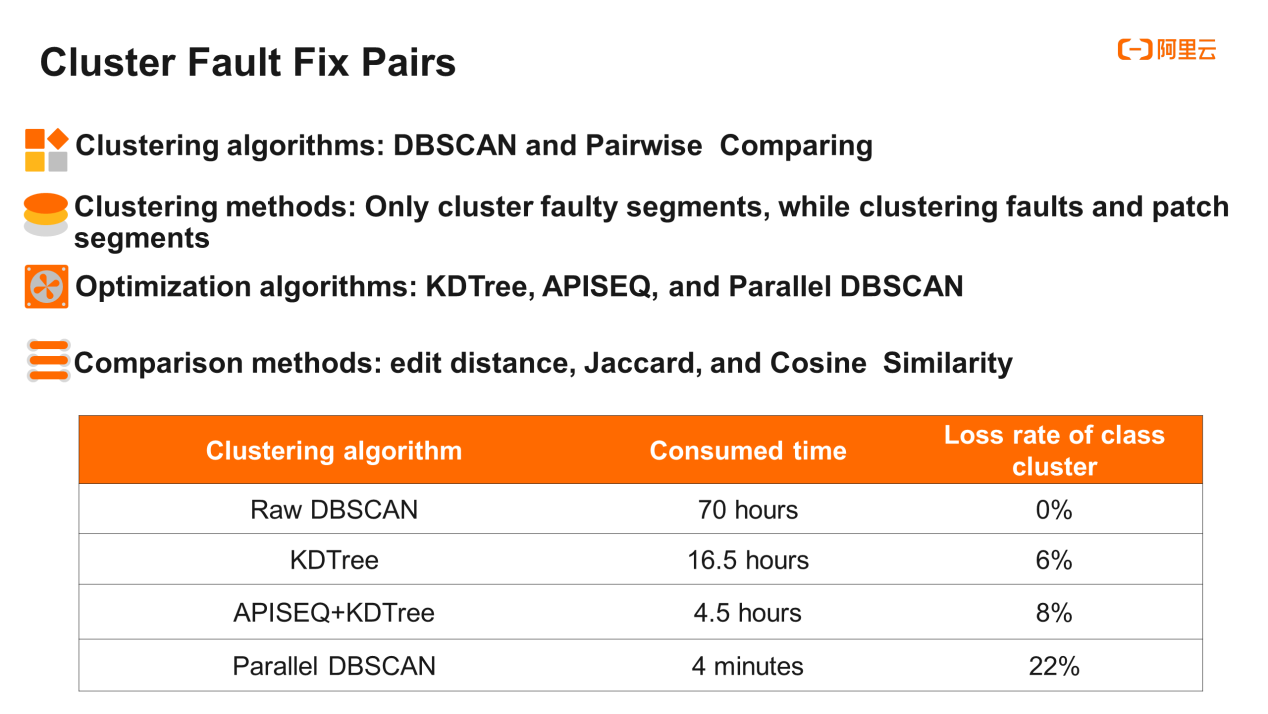

Since the number of clusters cannot be predicted in advance, we did not use the K-means algorithm and adopted the relatively mature density-based DBSCAN algorithm instead. Of course, pairwise comparison and merging is also possible, and any of the available comparison methods can be used.
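The following is a small, unoptimized sketch of DBSCAN with a pluggable distance function, to show why it suits this task: it needs no cluster count, and isolated items fall out as noise. The token-level Jaccard distance, the `eps` and `min_pts` values, and the sample strings (each standing in for one normalized fault fix pair) are assumptions for illustration only.

```python
def dbscan(items, distance, eps, min_pts):
    """Return one cluster label per item; -1 marks noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(items)

    def neighbors(i):
        # Brute-force neighborhood query (includes i itself)
        return [j for j in range(len(items))
                if distance(items[i], items[j]) <= eps]

    cluster = -1
    for i in range(len(items)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # former noise becomes a border point
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:  # j is a core point: keep expanding
                queue.extend(j_neighbors)
    return labels


def jaccard_distance(a, b):
    ta, tb = set(a.split()), set(b.split())
    if not (ta | tb):
        return 0.0
    return 1 - len(ta & tb) / len(ta | tb)


segments = [
    "if ( a == null ) return ;",
    "if ( b == null ) return ;",
    "if ( c == null ) return ;",
    "for ( int i = 0 ; i < n ; i ++ )",
]
labels = dbscan(segments, jaccard_distance, eps=0.3, min_pts=2)
print(labels)
```

The brute-force neighborhood query compares every pair of items, which hints at why running DBSCAN directly on a large dataset is slow.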

We tried many clustering methods. For example, clustering the fault segments alone gives good results, but the same fault segment can have different fix schemes, so analyzing the fix segments becomes a thorny problem that is hard to automate. Finally, we tried clustering the fault segments and fix segments together. This way, once a fault segment is matched, the fix segment can be provided directly, with no separate step to choose the fix recommendation.

Running DBSCAN directly on our experimental data took about 70 hours. Therefore, we made some optimizations on top of DBSCAN, including the approaches shown in the figure. We implemented clustering on MapReduce, preprocessing the fault fix pairs in the Mapper phase and running DBSCAN in the Reducer phase. First, we applied the KDTree or SimHash algorithm in the Mapper phase to route similar fault fix pairs to the same Reducer for parallel clustering, which made clustering about four times faster. The cost was a cluster loss rate of about 6% relative to the basic DBSCAN algorithm.

Most fault fix pairs are unrelated to each other, and comparing two segments with code clone detection is the most time-consuming step. Therefore, we used the APISEQ approach in the figure to skip unnecessary comparisons. After examining this batch of fault fix pairs, we found that almost all segments contain at least some method calls, and those that call no methods are probably meaningless noise. So, before comparing two segments during clustering, we check whether they share any similar APIs. Only if they do is the full comparison performed. This approach makes clustering about four times faster.
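A rough sketch of such an API-based pre-filter follows. How APISEQ actually extracts and matches APIs is not described in the text, so the regular expression, the keyword list, the "share at least one call name" rule, and the names `api_sequence` and `worth_comparing` are all assumptions.

```python
import re

# Control-flow keywords that look like calls ("if (", "for (") but are not APIs
JAVA_KEYWORDS = {"if", "for", "while", "switch", "catch", "return", "synchronized"}
CALL_PATTERN = re.compile(r"\b([A-Za-z_]\w*)\s*\(")


def api_sequence(segment):
    """Return the names of the methods a code segment appears to call, in order."""
    return [name for name in CALL_PATTERN.findall(segment)
            if name not in JAVA_KEYWORDS]


def worth_comparing(seg_a, seg_b):
    """Cheap check run before the expensive clone comparison."""
    return bool(set(api_sequence(seg_a)) & set(api_sequence(seg_b)))


seg_a = "if (list.size() > 0) { process(list.get(0)); }"
seg_b = "String s = map.get(key);"
seg_c = "int x = y + 1;"
print(api_sequence(seg_a))
print(worth_comparing(seg_a, seg_b), worth_comparing(seg_a, seg_c))
```

Because the check is a set intersection over short lists, it costs far less than a token-level comparison, and segments with an empty call list never reach the expensive step.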

We also tried the newer parallel DBSCAN algorithm, which is very fast but loses a relatively high proportion of clusters. Because this data processing is a regular, low-frequency offline computation, we finally chose KDTree or APISEQ+KDTree, which offer lower time consumption and a lower loss rate.

For the code clone comparison between two segments during clustering, we found that combining two complementary measures works much better than a single measure. Our best practice is a weighted average of edit distance and Jaccard similarity: edit distance captures the order of tokens, and Jaccard measures the overlap ratio of tokens.
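The combination can be sketched as follows. The whitespace tokenization, the normalization of edit distance into a similarity in [0, 1], and the default weight of 0.5 are assumptions; the text only states that a weighted average of the two measures worked best.

```python
def edit_distance(a, b):
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1,              # delete x
                           cur[j - 1] + 1,           # insert y
                           prev[j - 1] + (x != y)))  # substitute or match
        prev = cur
    return prev[-1]


def edit_similarity(a, b):
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - edit_distance(a, b) / longest


def jaccard_similarity(a, b):
    sa, sb = set(a), set(b)
    return 1.0 if not (sa | sb) else len(sa & sb) / len(sa | sb)


def similarity(seg_a, seg_b, weight=0.5):
    """Weighted average of order-aware and order-blind token similarity."""
    ta, tb = seg_a.split(), seg_b.split()
    return (weight * edit_similarity(ta, tb)
            + (1 - weight) * jaccard_similarity(ta, tb))


same = similarity("a b c", "a b c")
reordered = similarity("a b c", "c b a")
print(same, reordered)
```

The reordered example shows why the two measures complement each other: Jaccard alone rates the segments as identical because the token sets match, while edit distance penalizes the changed order.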

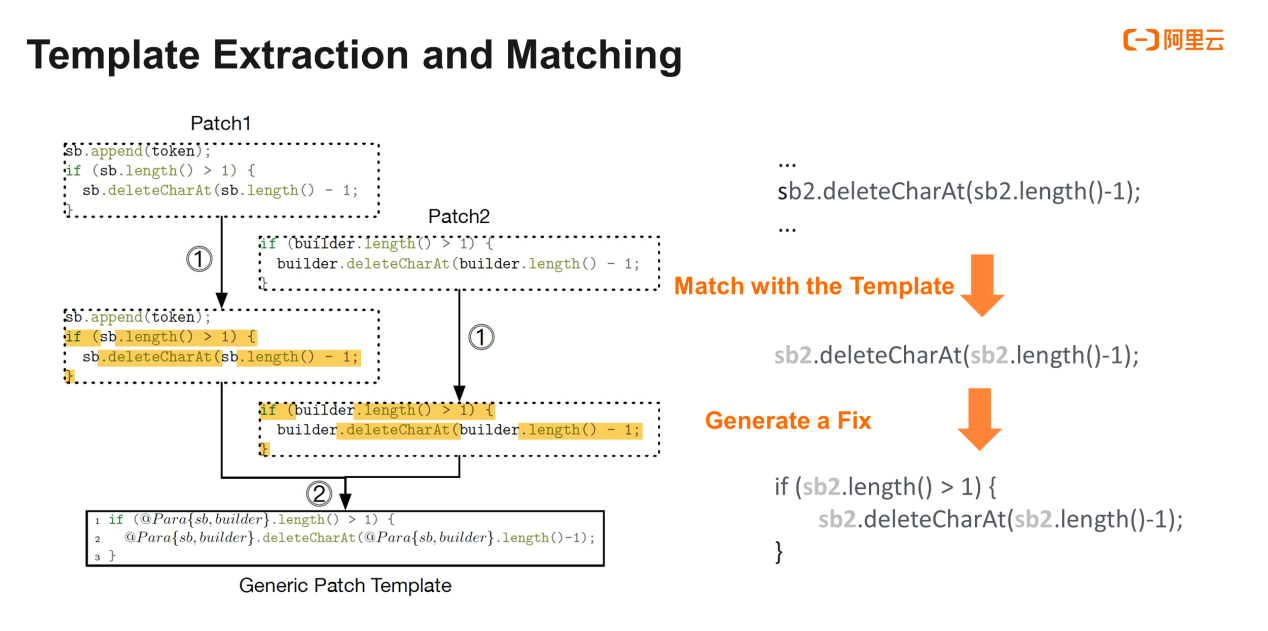

The last step is "template extraction." To improve the user experience, we extract a template from the similar segments in a cluster, which reduces the cost of understanding.

As shown in the preceding figure, the two segments of a cluster are very similar. We recursively apply the longest common subsequence algorithm, and the yellow parts are the matched contents. Apart from one extra statement in the left segment, the mismatched parts differ only in variable names. We extract the differing variable names and save them in "@Para" format for later matching.

When a new fault segment is scanned, we can identify its variable name based on the template and replace "@Para" in the patch template with the new variable name. Then, the system automatically recommends fix solutions with new parameters.
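Template extraction and patch instantiation can be sketched together. This example uses Python's `difflib.SequenceMatcher` as a stand-in for the recursive longest common subsequence matching, handles only segments that differ by renamed tokens, and numbers the placeholders as `@Para0`, `@Para1`, and so on. These choices and all function names are assumptions for illustration.

```python
from difflib import SequenceMatcher


def extract_template(tokens_a, tokens_b):
    """Replace tokens that differ between two aligned segments with @Para placeholders."""
    template, params = [], {}
    matcher = SequenceMatcher(a=tokens_a, b=tokens_b, autojunk=False)
    for tag, a0, a1, b0, b1 in matcher.get_opcodes():
        if tag == "equal":
            template.extend(tokens_a[a0:a1])
        elif tag == "replace" and a1 - a0 == b1 - b0:
            for tok in tokens_a[a0:a1]:
                params.setdefault(tok, f"@Para{len(params)}")
                template.append(params[tok])
        else:
            raise ValueError("segments differ by more than renamed tokens")
    return template, params


def bind(template, tokens):
    """Match a new segment against a template; return placeholder -> name, or None."""
    if len(template) != len(tokens):
        return None
    binding = {}
    for t, tok in zip(template, tokens):
        if t.startswith("@Para"):
            if binding.setdefault(t, tok) != tok:
                return None  # same placeholder bound to two different names
        elif t != tok:
            return None
    return binding


def instantiate(fix_template, binding):
    return [binding.get(t, t) for t in fix_template]


fault_a = "if ( list . size ( ) > 0 )".split()
fault_b = "if ( items . size ( ) > 0 )".split()
fix_a = "if ( list != null && list . size ( ) > 0 )".split()

fault_template, params = extract_template(fault_a, fault_b)
fix_template = [params.get(t, t) for t in fix_a]

binding = bind(fault_template, "if ( orders . size ( ) > 0 )".split())
patch = " ".join(instantiate(fix_template, binding))
print(patch)
```

Here the new segment uses the variable `orders`, which appears in neither training segment, and the recommended patch carries that name through.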

Let's look at a few templates obtained by PRECFIX clustering. The first category is "rationality check." In this example, the fix segment adds a length check; null checks also belong to this category.

The second category is "API change." In this example, a GPU ID is added to the API parameters and the source of the first parameter is changed accordingly. API changes also include changes to the method name and the addition, deletion, and modification of parameters. These are all common changes.

The third category is "API encapsulation." We had not thought of this category before viewing the clustering results. Engineers often encapsulate code segments that are functionally independent and frequently reused, to reduce code repetition and maintenance costs. In addition, a well-written method in a utility class reduces unnecessary mistakes that developers might make when writing the logic themselves.

Here, note that PRECFIX is not a specific answer to code faults, but an idea of mining faults from the code library. The number of templates and the false positive rate need to be monitored and maintained continuously.

The PRECFIX approach has been implemented within Alibaba Group. It has scanned more than 30,000 faults of over 800 types in the internal public code library. We shared the results with the users we interviewed and received favorable feedback. Later, this approach will be applied in Apsara DevOps products so that more developers can use it.

Apsara DevOps is an enterprise-level all-in-one DevOps platform based on Alibaba's advanced management concepts and engineering practices. It aims to be the R&D efficiency engine for digital enterprises. Apsara DevOps provides end-to-end online collaboration services and development tools that cover the entire lifecycle from requirement collection to product development, testing, release, maintenance, and operations. By using AI and cloud-native technologies, developers can improve their development efficiency and continue to deliver valuable products to customers.

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
