A real-time system processes input data within milliseconds, so that the results are available almost immediately for feedback. In the video recommendation system, real-time behavior shows up mainly in three layers:

Reflecting user profiles in the interest model is common practice. By building long-term and short-term interest models for each user, a business can respond to users' interests and demands. Recommendations can be generated in many ways, such as synergy and a variety of small tricks. However, recommendations based on user profiles and video profiles are difficult to deliver in the initial phase. In the long run, these user profiles help the team understand users' video consumption habits and support businesses other than recommendations.

A user's refresh action triggers the recommendation calculation. On each refresh, the user information is sent to Kafka asynchronously, and the Spark Streaming program analyzes the data and matches candidate sets with users. The calculation result is written to the user's private queue in Redis. The interface service is responsible only for fetching the recommendation data and reporting the user's refresh actions. The private queue of a new user, or of a user who has not accessed the service for a long time, may have expired. In that case, the asynchronous approach causes problems. When the front-end interface detects this, it takes one of the following actions:

Asynchronous calculation covers most of the calculations, except for new users.
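The asynchronous flow described above can be sketched as follows. This is a minimal, in-memory illustration and not the production code: `InMemoryBus` stands in for the Kafka topic, `PrivateQueues` stands in for the per-user queues in Redis, and all class, function, and parameter names are assumptions made for this example.

```python
from collections import deque


class InMemoryBus:
    """Hypothetical stand-in for a Kafka topic: the interface service only appends events."""

    def __init__(self):
        self.events = deque()

    def publish(self, event):
        self.events.append(event)


class PrivateQueues:
    """Hypothetical stand-in for the per-user recommendation queues kept in Redis."""

    def __init__(self):
        self.queues = {}

    def push(self, user_id, video_ids):
        self.queues.setdefault(user_id, deque()).extend(video_ids)

    def pop(self, user_id, count):
        queue = self.queues.get(user_id, deque())
        return [queue.popleft() for _ in range(min(count, len(queue)))]


def handle_refresh(user_id, bus, queues, page_size=3):
    """Interface service: report the refresh, then return whatever is already queued."""
    bus.publish({"user_id": user_id, "action": "refresh"})
    return queues.pop(user_id, page_size)


def run_streaming_batch(bus, queues, candidates):
    """Streaming job: consume refresh events and refill each user's private queue."""
    while bus.events:
        event = bus.events.popleft()
        queues.push(event["user_id"], candidates)
```

In this sketch, the first `handle_refresh` call for a user returns an empty list because nothing has been computed yet. That is the situation the text describes for new users and for users whose queue has expired.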

In 2014, the concept of stream computing did not exist, and existing technical systems could not be reused. As a result, our recommendation system was overly complicated and difficult to productize. To make matters worse, the effects of a recommendation change were only visible the next day, which prolonged each improvement cycle. At that time, the entire development cycle exceeded one month.

By contrast, today's system, which is based on StreamingPro, needs only two or three developers, each investing two to three hours a day, and they can complete the entire development within two weeks. Stream computing has had a large impact on the approach to and implementation of the recommendation system.

The recommendation system includes all computing-related features other than interface services. These include, but are not limited to:

All these processes are completed using Spark Streaming. For long-term synergy (data covering more than one day), the user's long-term interest models, and similar tasks, Spark batch processing is adopted. Thanks to the StreamingPro project, all the calculation processes can be configured: a list of description files constitutes the core computing processes of the entire recommendation system.
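To illustrate what "configurable" calculation processes can mean in general, here is a small sketch of a pipeline driven by a description. It does not reproduce StreamingPro's actual description-file format, which is not shown in this article. The step names (`filter_watched`, `top_n`), the `description` layout, and the `run_pipeline` function are all hypothetical.

```python
# Registry of named processing steps. The step names are hypothetical examples.
STEPS = {
    # Drop videos the user has already watched.
    "filter_watched": lambda rows, p: [r for r in rows if r["video"] not in p["watched"]],
    # Keep the n highest-scoring rows.
    "top_n": lambda rows, p: sorted(rows, key=lambda r: r["score"], reverse=True)[: p["n"]],
}


def run_pipeline(description, rows):
    """Apply each configured step in order, looking it up by name in the registry."""
    for step in description["steps"]:
        rows = STEPS[step["name"]](rows, step.get("params", {}))
    return rows


# A hypothetical description: changing the process means editing this data, not the code.
description = {
    "steps": [
        {"name": "filter_watched", "params": {"watched": ["v2"]}},
        {"name": "top_n", "params": {"n": 2}},
    ]
}
```

The design point is that the processing logic lives in reusable named steps, while the order and parameters of those steps live in a description that can be changed without touching the code.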

We would like to mention three points here:

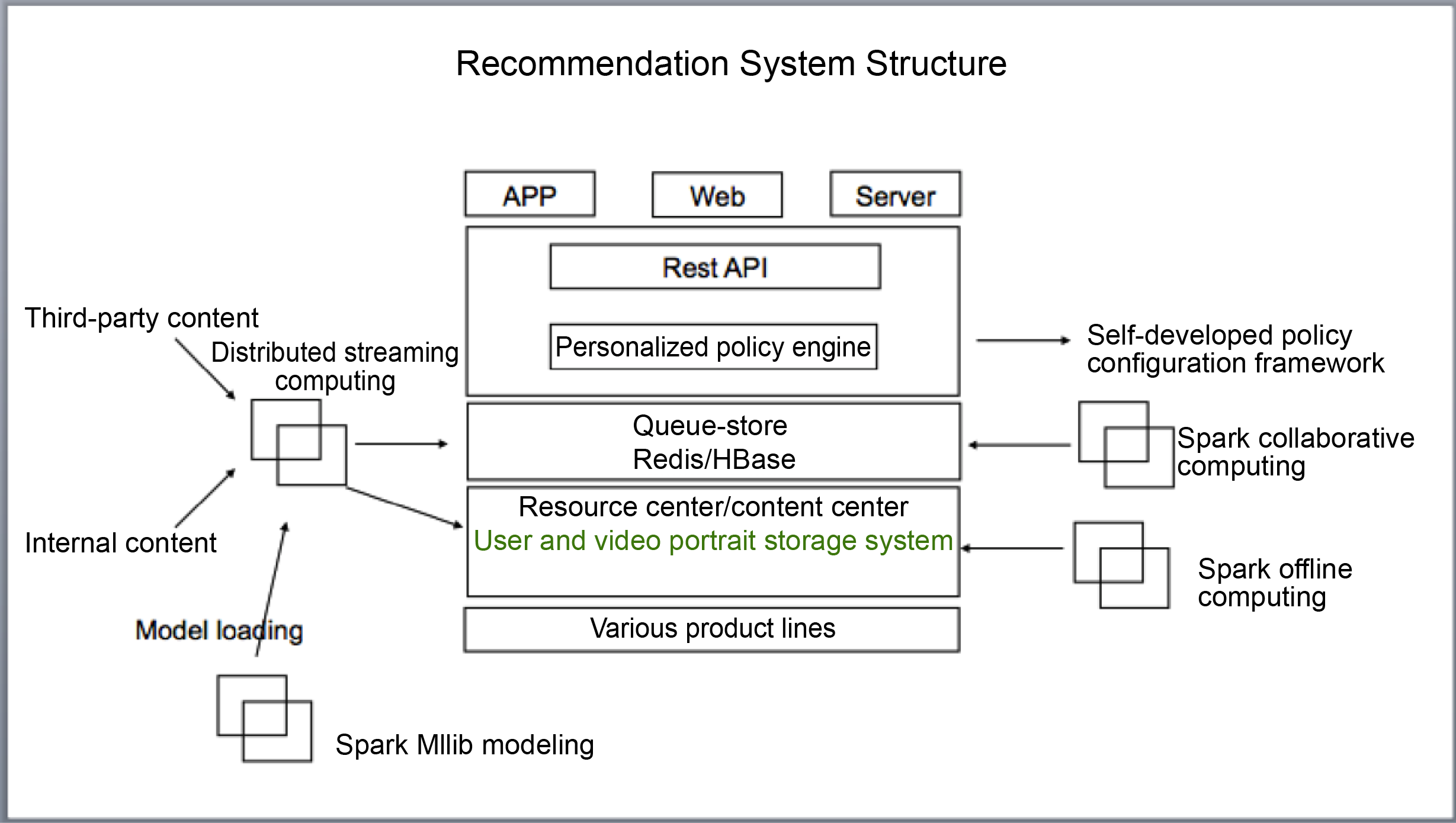

The figure below shows the structure of the entire recommendation system:

Figure 1. Recommendation System Structure

Distributed streaming computing is mainly responsible for five sections:

The storage solutions include:

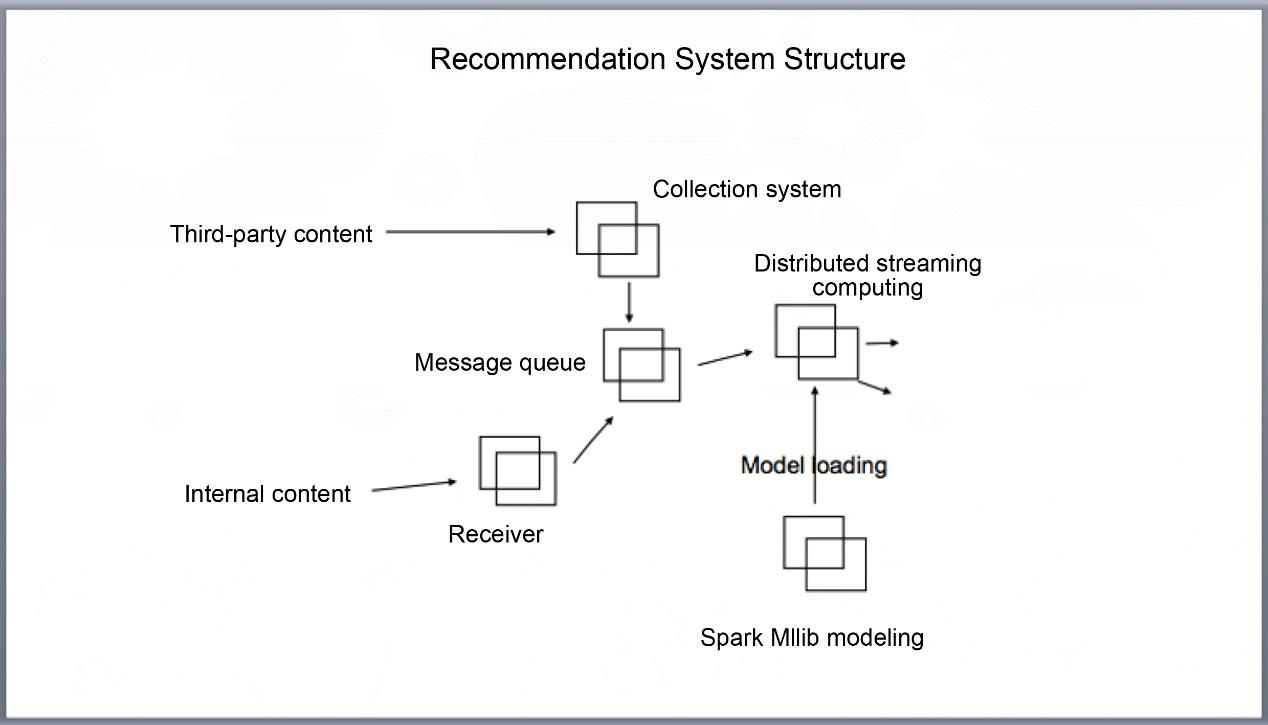

The following figure shows more details about the streaming computing section:

Figure 2. Detailed Recommendation System Structure

Technical solutions adopted for user reporting:

For third-party content (full-site), we developed our own collection system.

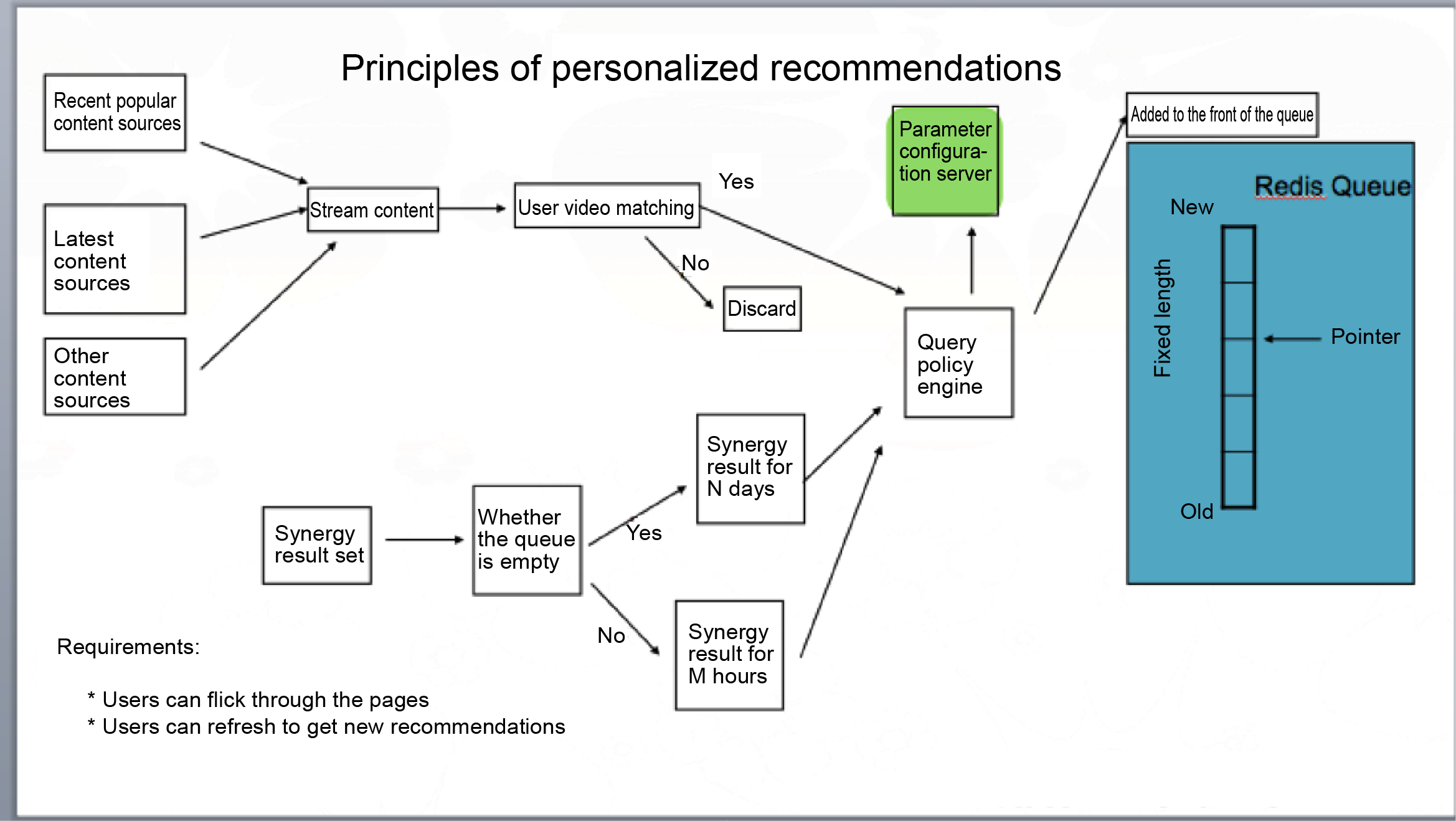

Figure 3. Principles of Personalized Recommendations

The recommendation system updates all candidate sets in real time.

The concept of parameter configuration servers can be understood as follows.

Suppose we have two algorithms, A and B, each implemented by an independent streaming program that calculates its own result set. The size of the content and the frequency of calculation vary across candidate sets and algorithms. Let us assume that the result set from A is too large, while that from B is small but of excellent quality. In this case, before A and B write to a user's recommendation queue, each submits its own situation to the parameter configuration server, which determines how much each algorithm may finally send to the queue. The parameter server can likewise control the corresponding frequency. For example, if algorithm A generates a new recommendation just 10 seconds after its last recommendation result, the parameter server can refuse to write that content to the user's recommendation queue.
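The quota and frequency control described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the system's actual implementation: the `ParameterServer` class, its `configure` and `authorize` methods, and the per-algorithm rule of "maximum items plus minimum interval" are all hypothetical. Timestamps are passed in explicitly so the behavior is easy to follow.

```python
class ParameterServer:
    """Hypothetical parameter configuration server with per-algorithm limits."""

    def __init__(self):
        self.rules = {}       # algorithm -> (max_items, min_interval_seconds)
        self.last_write = {}  # (algorithm, user_id) -> time of last accepted write

    def configure(self, algorithm, max_items, min_interval_seconds):
        self.rules[algorithm] = (max_items, min_interval_seconds)

    def authorize(self, algorithm, user_id, results, now):
        """Return the part of `results` the algorithm may write, or [] if refused."""
        max_items, min_interval = self.rules[algorithm]
        last = self.last_write.get((algorithm, user_id))
        if last is not None and now - last < min_interval:
            return []  # too soon after the previous accepted write: refuse
        self.last_write[(algorithm, user_id)] = now
        return results[:max_items]  # cap how much reaches the user's queue


server = ParameterServer()
server.configure("A", max_items=2, min_interval_seconds=60)  # large result set, throttled
server.configure("B", max_items=5, min_interval_seconds=0)   # small, high-quality set
```

With these example rules, a write from A that arrives 10 seconds after A's previous accepted write for the same user is refused, while B is limited only by its item cap.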

The case above is multi-algorithm process control. There is an alternative approach: we can introduce a new algorithm, K, that blends the results from A and B. Since every algorithm is a configurable module in StreamingPro, A, B, and K can be placed in a single Spark Streaming application. K periodically calls A and B for calculation, mixes the results, and finally writes the mixed result to the user's recommendation queue as authorized by the parameter configuration server.
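One possible way for K to mix the two result sets is a simple interleave with de-duplication, sketched below. The article does not say how K actually blends results, so the interleaving strategy, the choice to place B's items first (because B is assumed to be the higher-quality set), and the `blend` function name are all assumptions for illustration. The final write to the user's queue is omitted.

```python
from itertools import zip_longest


def blend(results_a, results_b, limit):
    """Hypothetical algorithm K: interleave B and A, drop duplicates, keep `limit` items."""
    mixed, seen = [], set()
    # B's item comes first in each pair because B is assumed to be higher quality.
    for pair in zip_longest(results_b, results_a):
        for video_id in pair:
            if video_id is not None and video_id not in seen:
                seen.add(video_id)
                mixed.append(video_id)
    return mixed[:limit]
```

For example, `blend(["a1", "a2", "b1", "a3"], ["b1", "b2"], limit=4)` alternates between the two sets and skips the second occurrence of `b1`.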

This article explores the use of stream computing for a personalized video recommendation system. In this approach, a tag system is designed and then applied to users and videos. Multiple algorithms, including LDA and Bayesian methods, are combined to deliver a well-rounded and useful experience.
