By digoal

PostgreSQL 10.0 features quorum-based synchronous replication. You can use it to configure several standby nodes and specify how many copies of the Write Ahead Log (WAL) need to be sent before the end of a transaction message is returned to the client.

ANY num_sync ( standby_name [, ...] ) For more information, see:

Example:

s1,s2,s3为standby recovery.conf配置的application_name, 即standby的唯一标示

下面配置表示WAL需要复制到s1,s2,s3中的任意2个副本。

synchronous_standby_names = 'ANY 2 (s1, s2, s3)'

下面配置表示WAL需要复制到任意standby的任意2个副本。

synchronous_standby_names = 'ANY 2 (*)' Using the flexible quorum-based configuration of PostgreSQL, you can configure the synchronous_standby_names based on the region, latency, and protection level.

Example:

The master has four standby applications: s1, s2, s3, s4. s1 is in the same data center. s2 and s3 are in a data center within 45 kilometers of a city, and s4 in other cities.

Now, you can configure it as below.

下面配置表示WAL至少在一个其他机房有一份拷贝,防止整个机房的故障。

synchronous_standby_names = 'ANY 2 (s1,s2,s4)' More flexible configurations may be available in the future.

下面配置表示s2,s3只算一次,但是它们任意一个feedback都算数。 不失可靠性的情况下,提高可用性。

synchronous_standby_names = 'ANY 2 (s1,[s2,s3],s4)' One issue arises here: When the master fails, how to achieve failover or switchover?

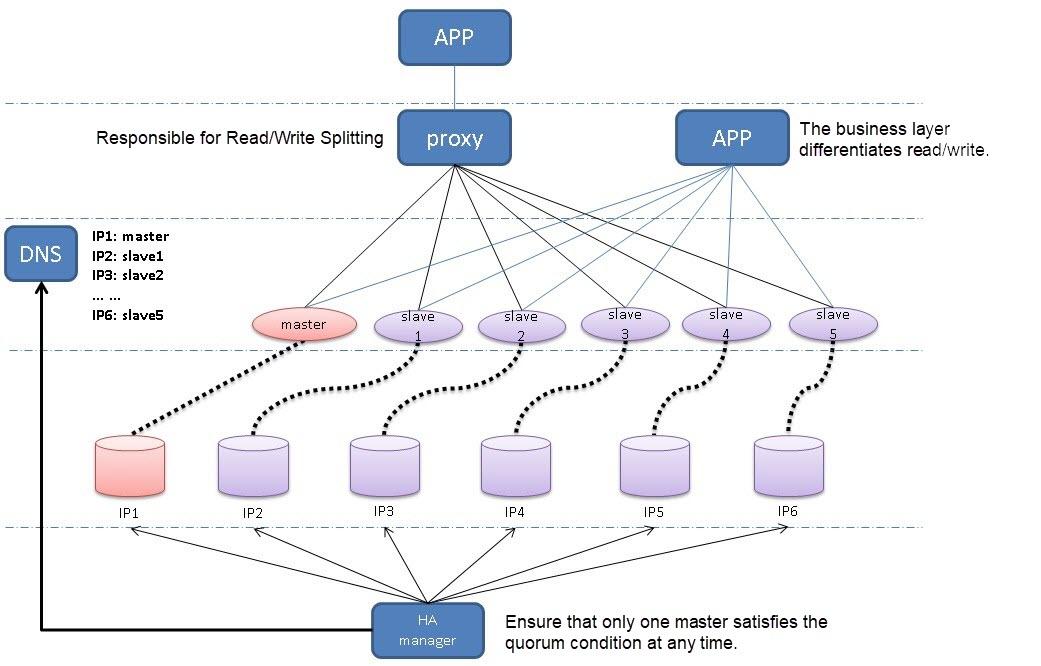

1) Each database instance corresponds to a static IP, which is fixed when the PostgreSQL master-slave is built.

2) Each database instance corresponds to a role, master or slave.

3) Each role corresponds to a domain name. If the instance is master, the corresponding domain name is master. Similarly, if the role is slave, the corresponding domain name is the slave.

4) After you create the cluster for the first time, write the corresponding relationship between the IP and domain name into Domain Name System (DNS).

5) The High Availability (HA) management software uses the master domain name to obtain static IP from DNS, connect to static IP, and detect whether the master is normal. When an exception occurs, it enters the failover process. (The failover process will be discussed later.)

6) The final application and proxy connect to the database through the domain name.

For example, this is an open source PostgreSQL proxy of Crunchy Data, where Tom Lane works. It is written in the Go Programming Language (Golang) and does not do Structured Query Language (SQL) parsing. But it can do simple routing through the SQL HINT with relatively high efficiency.

If you use a client to connect multiple instances, you can refer to the following article (in Chinese): PostgreSQL 10.0 Preview: Libpq Supports Multi-Host Connection (Failover, LB) to Make Database HA and Applications More Compatible

7) The master role changes when the failover ends, and the HA management software notifies DNS to modify the resolution information.

8) Even if the applications and proxy may temporarily connect to the wrong master before DNS Time-To-Live (TTL) fails due to DNS cache, it does not matter because the master is configured with synchronous replication. Therefore, after the failover ends (even if the old master suddenly gets better), the write requests will not be responded to.

The read requests may be affected and may read the data of the old master (short-term impact).

For the initial configuration, you configure the master and the slaves according to the previous figure and copy the master configuration to three copies.

For the slave configuration, you need to connect the master domain name and configure the application_name as master ID + slave ID.

Example (key configuration):

DNS:

N1 : 192.168.1.100 : master

N2 : 192.168.1.101 : slave1

N3 : 192.168.1.102 : slave2

N4 : 192.168.1.103 : slave3

N5 : 192.168.1.104 : slave4

N6 : 192.168.1.105 : slave5 master(N1) postgresql.conf

synchronous_standby_names = 'ANY 3 (N1_N2, N1_N3, N1_N4, N1_N5, N1_N6)' slave recovery.conf

N2:

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N1_N2'

N3:

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N1_N3'

N4:

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N1_N4'

N5:

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N1_N5'

N6:

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N1_N6' TTL setting must try to shorten the DNS cache time of the client — for example, 10 seconds — and configure the domain name mapping.

192.168.1.100 : master

192.168.1.101 : slave1

192.168.1.102 : slave2

192.168.1.103 : slave3

192.168.1.104 : slave4

192.168.1.105 : slave5 You can use a separate database to store or use file configuration.

N1 : 192.168.1.100 , 1921

N2 : 192.168.1.101 , 1921

N3 : 192.168.1.102 , 1921

N4 : 192.168.1.103 , 1921

N5 : 192.168.1.104 , 1921

N6 : 192.168.1.105 , 1921 master : master

slave : slave1

slave : slave2

slave : slave3

slave : slave4

slave : slave5 user : xx

pwd : xx 1) Request resolution and get IP from the domain name "master" of the master role.

2) Detect IP and database listening port connectivity. (If an exception occurs, pay attention to the number of retries and the length of timeout.)

If an exception occurs, the system retries several times (set the retry interval and the number of retries). If the number of retries is unavailable, it enters the failover process. Once the database is available during the restart period, it exits the failover process.

3) Detect whether you can log on to the database normally. (If an exception occurs, pay attention to the number of retries and the length of timeout.)

If an exception occurs, the system retries several times (set the retry interval and the number of retries). If the number of retries is unavailable, it enters the failover process. Once the database is available during the restart period, it exits the failover process.

4) Log on to the database and detect the availability of the database. To check if the database is alive, encapsulate the codes into a database function. The function returns true or false to indicate the database availability. (If an exception occurs, pay attention to the number of retries and the length of timeout.)

If it returns false or returns an exception, the database is unavailable.

If an exception occurs, the system retries several times (set the retry interval and the number of retries). If the number of retries is unavailable, it enters the failover process. Once the database is available during the restart period, it exits the failover process.

Slave isolation means that the slave is isolated. It can receive read requests, but the WAL receiver process does not work and does not send feedback to the master, which is not in a relationship with the master.

The isolation must be persistent. For example, it remains isolated after a restart.

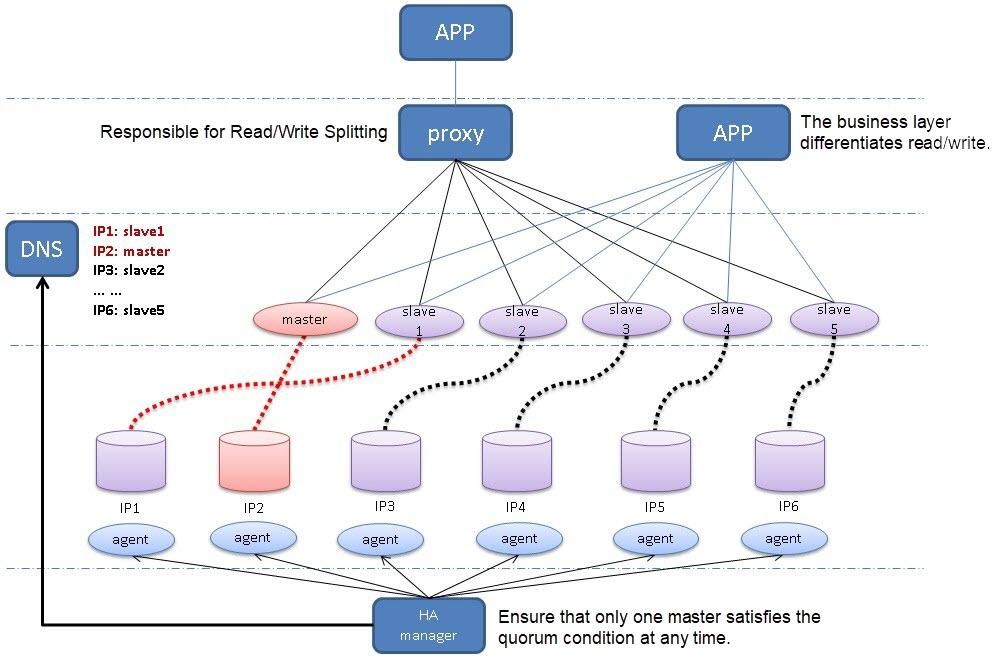

The failover process involves instance operations, which you can implement using various methods.

1) Implement operations such as files and scripts of the database instance through database User-Defined Function (UDF).

2) Implement operations such as files and scripts of the database instance by deploying agent software on the database host.

We recommend using an agent.

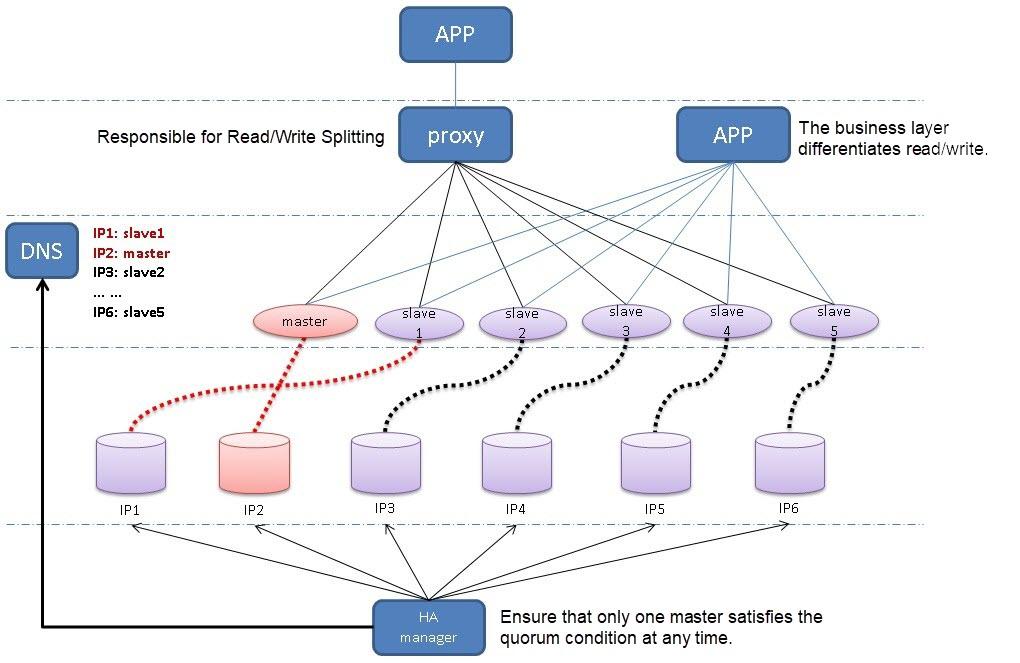

1) Use the configuration to obtain the domain name corresponding to the slave role and the IP address corresponding to the domain name. The following operations use IP to connect the slave.

IP and the node name already have a mapping relationship.

2) Isolate several slave nodes and calculate the number of the nodes using the following formula.

Formula:

若干 = 总节点数(包括master) - quorum数 = 6-3 = 3 3) After you isolate several nodes, the non-isolated slave nodes will continue to be isolated.

The second step continues even if the isolation of slave nodes is unsuccessful (assuming N2 is also abnormal).

4) From the isolated slave nodes, select a slave node with the largest Log Sequence Number (LSN) (assuming N3) as the new master and modify postgresql.conf.

synchronous_standby_names = 'ANY 3 (N3_N1, N3_N2, N3_N4, N3_N5, N3_N6)' 5) Rename the recovery.conf of the new master.

rename recovery.conf to recovery.done 6) Modify other isolated slave nodes — recovery.conf

N4:

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N3_N4'

N5:

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N3_N5'

N6:

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N3_N6' 7) Notify DNS to modify the domain name mapping.

-- 对调old master和new master

192.168.1.102 : master

192.168.1.101 : slave1

192.168.1.102 : slave2

192.168.1.100 : slave3

192.168.1.104 : slave4

192.168.1.105 : slave5 8) Restart the isolated instance.

9) Lift isolation.

10) Activate the new master.

11) End the failover process.

If any step fails, lift the isolation and start all over again.

Usually, no repair is required because data loss is impossible. For transactions that have been completed from the user's perspective, the WAL content of the new master must be the same as that of the old master.

However, some transactions that have not ended (or have not received the transaction of ending Alibaba Cloud Container Service for Kubernetes (ACK) from the user's perspective, although these transactions may have been persisted locally in the old master), maybe in the old master. As a result, the old master has more WALs (from a business perspective, there is no difference because the transaction ACK is not returned to the customer.), so the old master may need to rewind. Refer to the pg_rewind method.

1) Configure the recovery.conf

old master:

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N3_N1' N2 (abnormal slave nodes):

primary_conninfo = 'host=master port=xx user=xx password=xx application_name=N3_N2' 2) Configure the old master postgresql.conf

注释

# synchronous_standby_names = .......... Restart or start to repair the instance

If you don’t use the quorum nodes but have let the database elect the new leader by itself, you can consider using the Raft protocol.

Among more than half of the nodes locked (that does not receive the WAL from the leader, the primary database), the new leader's WAL is the largest.

The purpose of locking is to prevent fluctuations in the election process and ensure that the elected WAL is the largest.

The purpose of locking most of the nodes is to ensure that no transaction from the client's perspective is lost. (Because for the leader, it is necessary to wait for WAL to be copied to most replicas before returning the ACK of a successful client transaction commit. Before the client receives the ACK, the transaction status from the client perspective is unknown.)

1) A Follower that does not receive voting or heartbeat packets from any other node within a random timeout (150~300ms) will convert into a Candidate. Then, the Candidate puts itself into the WAL stable state (that is, WAL that does not receive any source), then current TermID +, and then votes for itself. It sends an election request to all Followers (carrying WAL's maximum location information, log Term ID, current Term ID) and then resets the random timeout period.

2) After receiving the request for election voting, other Followers will enter the WAL stable state (not receiving WAL from any source) and then judge if "(requester log Term ID> own log Term ID) or (requester current TermID> own current TermID and requester WAL >= own WAL and requester log Term ID = own log Term ID)" vote for it (otherwise don't vote). After voting, set its current Term ID to the current Term ID of the requester. It resets its own selection timeout after voting. (If the Follower does not vote, it continues to wait for the previous random timeout.)

3) When the Candidate gets a majority vote (including itself), it will be converted to a new leader. Then, it changes the log Term ID to the current Term ID and changes the log leader to its own ID. Reset the random timeout period, and send a message announcing the message package (content current Term ID) to all roles to inform them of the persistent log Term ID and re-elect when the timeout occurs.

If the winner hangs up before all nodes of the fourth step complete the adjustment, it will not affect the continuation of the selection.

4) Before receiving the announced heartbeat packet, Followers can also vote for others as long as Followers meet the requirements in the second step.

The Follower receives the announcement (heartbeat) nodes and determines whether (current Term ID = = its own current Term ID in the received heartbeat packet) is true. If so, it changes the log Term ID to the current Term ID of the new leader and changes the log leader to the new leader ID. Then, as the secondary database of the new leader, it contacts the new leader to execute pg_rewind to configure recovery.conf to start the database.

Note that the pg_rewind here must be an atomic operation, either as the new leader or as it is, and cannot have an intermediate state.

In this way, the Raft protocol meets the zero data loss requirement.

5) The new leader sends the ruling heartbeat after every heart timeout.

6) After the Follower receives the ruling heartbeat, the Follower resets the random election timeout time.

7) Repeat the above steps.

Note:

1) The current Term ID will increase every time an election is initiated.

2) The log Term ID is the persistent Term ID in the log, which is used to determine the leader’s election.

3) The log leader is the persistent leader ID in the log.

4) Member ID: Each member has a unique ID, and each member ID corresponds to its own attributes (role, IP, port, user name, and other connection string information).

5) The block-level backtracking commands of pg_rewind and PostgreSQL can achieve a consistent state according to the leader.

6) The heart timeout is to maintain the ruling heartbeat after the successful election.

7) The random election timeout time is 150 to 300 milliseconds. If no heartbeat or election request is received during this period, then the election begins. Otherwise, reset the time after the heartbeat or vote.

The resurrected nodes also follow the new leader according to the above rules.

It is because a follower has only one leader. Only one leader can obtain most WAL copies and normally provide write services when a network splits and more leaders and more writes occur. Even if the user submits the transaction, the other leader cannot succeed. It will hang, and no ACK will be returned to the client.

According to the rules of electing the leader, after the restoration, the old leader cannot be elected as the new leader before keeping up with the new leader.

An unknown transaction is a transaction in which the client does not receive a commit ACK and does not know whether it is a success or failure.

The unknown transactions may occur in multi-nodes and single nodes, with the same effect and shape.

How to handle unknown transactions?

The unknown transactions are divided into the following situations.

The processing methods for unknown transactions of rollback, commit, rollback ps, and commit ps:

1) Solve the unknown state issues in two phases

In the prepare phase, after switching the leader, the client checks the prepare status. If there is no ps, the entire transaction is re-initiated because the database has the transaction atomicity. If ps exists, submit or roll back ps directly.

In the commit or rollback phase, after switching the leader, the client checks the ps status. Retry commit or rollback ps if the unknown transaction exists. If it does not exist, it is already submitted successfully — no need to deal.

2) For non-two-phase transactions, you don’t need to process the rollback unknown because it will be rolled back anyway. Either it didn't happen at all or successfully rolls back, or the database handles the transaction's abnormal rollback. This guarantees the atomicity of the database.

3) As to the unknown commit processing of non-two-phase transactions, you can design transaction status backtracking in extremely rigorous scenarios, such as recording the transaction number or the unique receipt ID. The transaction number is a unique receipt ID in the database, and you can query its status according to the transaction number, such as postgresql. However, not all databases have this interface. For example, in the non-physical streaming replication databases, you can add a globally unique serial number to the transaction to check whether the transaction is committed. Here, the atomicity of transactions is utilized, either all success or all failure. We can give an example.

Although we select the largest WAL node in the majority as the new leader, WAL is generated and copied first and then returned to the client ACK, so the dead or isolated nodes in the WAL may be more than those in the new leader. (These transactions do not return to the client ACK, but they will still be generated, which can be considered useless or unknown transactions.)

Therefore, it is necessary to correct the block level to follow the new leader through pg_rewind.

1) We recommended using the Virtual IP (VIP). Otherwise, the management software also needs to maintain a set of IP change methods.

2) When the number of nodes is not 6 or the quorum number is not equal to 3, you can support it using the isolation formula mentioned earlier.

3) The freeze function is added to the leader. (When it is disconnected from more than sync standbys — ANY N +1 sync standbys for more than N seconds, the write operation of the leader is frozen and becomes a read-only node.)

4) The load balancing policy for a proxy that only reads requests identifies the host where the VIP is currently located. It performs load balancing on the host as a unit instead of VIP. This will not cause multiple VIPs to run on the same host (for example, if some hosts are hung up, numerous VIPs may run on one host) and not cause the load imbalance.

PostgreSQL: Financial Accounting Data Snapshot Analysis Use Cases

How to Enable dblink and postgres_fdw Permissions in ApsaraDB RDS for PostgreSQL 11

ApsaraDB - May 29, 2025

ApsaraDB - January 16, 2026

tianyin - November 8, 2019

Alibaba Cloud Native Community - October 26, 2023

oceanbaseworld - November 29, 2019

Alibaba Clouder - February 4, 2019

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by digoal