By digoal

A large amount of data is produced in the financial industry or business payment scenarios concerning reconciliation, virtual currency, and transaction flow in accounting systems.

An account’s final state is not enough, and the flow is required owing to log data that records every account movement of users. You can either use it for auditing or analysis. Additionally, you can use it for data tracing — for example, you can request evidence in the form of videos or pictures if the traffic police personnel accuses that you violated traffic rules.

You can use multiple methods to generate flow data, such as logging and WAL. Logging generates business data, whereas WAL restores historical logs of user account changes, such as old value, new value, and transaction number.

The following examples show how to manage flow data.

Write flow data of business.

Schema of tables:

1) Account table

create table tbl

(

xid_num int8, -- 产生、变更这笔记录的作业号,如果一个作业中包含多个账号的变更(例如转账),可以通过作业号关联起来。

uid int primary key, -- 用户账号ID

balance float8 check (balance >=0 ), -- 余额

crt_time timestamp default now(), -- 记录创建时间

mod_time timestamp -- 记录修改时间

); 2) Flow table

create table tbl_history (

xid_num int8, -- 作业号

uid int,

crt_time timestamp default now(),

audit_old hstore, -- 变更前的记录,删除前的记录

audit_new hstore, -- 变更后的记录,新增的记录

tag text -- insert,update,delete标记

);

create index idx_tbl_history_xid on tbl_history (xid_num);

create index idx_tbl_history_uid on tbl_history (uid); Considering the table’s time sequence, it is best to use Block Range Indexes (BRIN).

create index idx_tbl_history_time on tbl_history using brin(crt_time); 3) (Optional) The flow table can use the form of the partition table for its time sequence.

create table tbl_history (

xid_num int8, -- 作业号

uid int,

crt_time timestamp default now(),

audit_old hstore,

audit_new hstore,

tag text

)

partition by range(crt_time);

do language plpgsql $$

declare

s1 date;

s2 date;

suffix text;

begin

for i in 1..60 loop

s1 := date '2017-06-01'+(i||' month ')::interval;

s2 := date '2017-06-01'+((i+1)||' month ')::interval;

suffix := to_char(s1,'yyyymm');

execute 'create table tbl_history_ptr_'||suffix||' partition of tbl_history for values from ('''||s1||''') to ('''||s2||''')';

end loop;

end;

$$; 4) Control the writing of flow data in business, or use built-in database rules or triggers to generate flow records automatically.

An example of automatic generation by using rules:

After creating rules, insert on conflict syntax is not supported.

create or replace function ftg1() returns trigger as $$

declare

begin

insert into tbl_history (xid_num,uid,audit_new,tag) values (txid_current(),NEW.uid,hstore(NEW),'insert');

return null;

end;

$$ language plpgsql strict;

create or replace function ftg2() returns trigger as $$

declare

begin

insert into tbl_history (xid_num,uid,audit_old,tag) values (txid_current(),OLD.uid,hstore(OLD),'delete');

return null;

end;

$$ language plpgsql strict;

create or replace function ftg3() returns trigger as $$

declare

begin

insert into tbl_history (xid_num,uid,audit_old,audit_new,tag) values (txid_current(),OLD.uid,hstore(OLD),hstore(NEW),'update');

return null;

end;

$$ language plpgsql strict;

create trigger tg1 after insert on tbl for each row execute procedure ftg1();

create trigger tg2 after delete on tbl for each row execute procedure ftg2();

create trigger tg3 after update on tbl for each row execute procedure ftg3(); 5) Pressure testing, account generation, and capital deduction:

\set uid random(1,10000000)

insert into tbl (uid,balance,crt_time) values (:uid, 100000, now()) on conflict (uid) do update set balance=tbl.balance+(random()*100)::int-50,mod_time=now(); Pressure testing:

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 64 -j 64 -T 120 6) Query the status of an account at a certain time point in the past.

postgres=# select * from tbl_history limit 10;

xid_num | uid | crt_time | audit_old | audit_new | tag

------------+---------+----------------------------+-----------+--------------------------------------------------------------------------------------------------------------------+--------

2833936976 | 6301000 | 2017-07-05 18:58:33.014571 | | "uid"=>"6301000", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.014571", "mod_time"=>NULL | insert

2833936980 | 6082888 | 2017-07-05 18:58:33.015117 | | "uid"=>"6082888", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.015117", "mod_time"=>NULL | insert

2833936981 | 941218 | 2017-07-05 18:58:33.015222 | | "uid"=>"941218", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.015222", "mod_time"=>NULL | insert

2833936977 | 1400395 | 2017-07-05 18:58:33.014793 | | "uid"=>"1400395", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.014793", "mod_time"=>NULL | insert

2833936979 | 1298648 | 2017-07-05 18:58:33.014791 | | "uid"=>"1298648", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.014791", "mod_time"=>NULL | insert

2833936985 | 5278098 | 2017-07-05 18:58:33.017009 | | "uid"=>"5278098", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.017009", "mod_time"=>NULL | insert

2833936978 | 9522366 | 2017-07-05 18:58:33.014795 | | "uid"=>"9522366", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.014795", "mod_time"=>NULL | insert

2833936986 | 9902071 | 2017-07-05 18:58:33.017085 | | "uid"=>"9902071", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.017085", "mod_time"=>NULL | insert

2833936982 | 5473115 | 2017-07-05 18:58:33.015527 | | "uid"=>"5473115", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.015527", "mod_time"=>NULL | insert

2833936988 | 8698002 | 2017-07-05 18:58:33.017249 | | "uid"=>"8698002", "balance"=>"100000", "xid_num"=>NULL, "crt_time"=>"2017-07-05 18:58:33.017249", "mod_time"=>NULL | insert

(10 rows)

select * from tbl_history where xid_num in (

select xid_num from tbl_history where uid=? and crt_time between ? and ?

); As the flow data is historical, it becomes cold data as time progresses, and the query probability reduces.

The cost of putting all data in a database is relatively high, so you can only do so if the cost is not a concern.

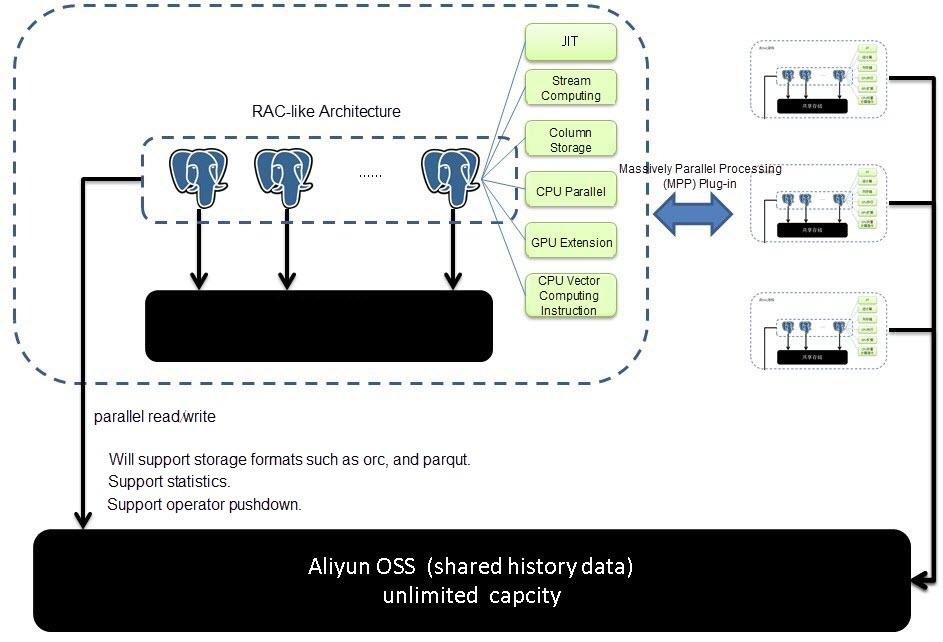

You can deeply integrate the Alibaba Cloud RDS for PostgreSQL with Object Storage Service (OSS). Using the RDS for PostgreSQL’s OSS_FDW external table, you can store flow data in OSS, while RDS for PostgreSQL enables seamless querying.

For example, RDS for PostgreSQL can define data generated a year ago as cold data and write the data into OSS through the OSS_FDW external table interface. Then, RDS for PostgreSQL deletes the local data to free up space.

You can use the external table defined by OSS_FDW to query cold data from a year ago. The usage is the same as the SQL query table.

You can learn more about the usage of OSS_FDW at this link: https://www.alibabacloud.com/help/doc-detail/44461.htm

A simple demo:

# 创建插件

create extension oss_fdw;

# 创建 server

CREATE SERVER ossserver FOREIGN DATA WRAPPER oss_fdw OPTIONS

(host 'oss-cn-hangzhou.aliyuncs.com' , id 'xxx', key 'xxx',bucket 'mybucket');

# 创建 oss 外部表的定义

CREATE FOREIGN TABLE ossexample

(date text, time text, open float,

high float, low float, volume int)

SERVER ossserver

OPTIONS ( filepath 'osstest/example.csv', delimiter ',' ,

format 'csv', encoding 'utf8', PARSE_ERRORS '100');

# 查询外部表

select * from ossexample where .....;

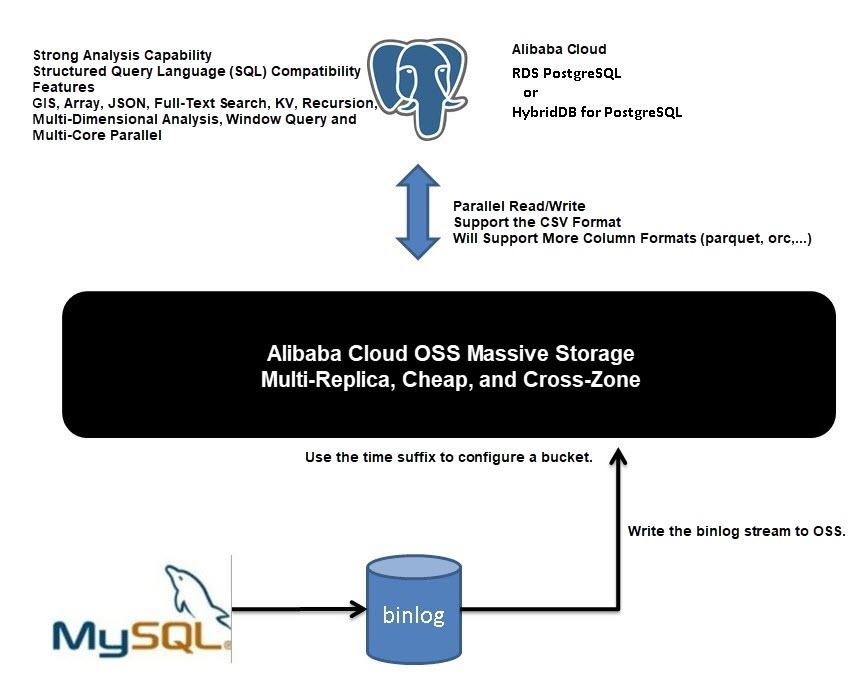

The flow data of a database, such as the binary log (binlog) of a MySQL database and the WAL log of a PostgreSQL database, stores records before and after data changes, insertions, and deletions.

You only need to store the final status of accounts in the database if you’re a MySQL user. Then, you can use binlog to extract the log data generated by data insertion, update, or deletion as flow data.

When you write log data into OSS, you can connect the flow data from MySQL to RDS for PostgreSQL through the RDS for PostgreSQL and OSS integration.

With an instance of RDS for PostgreSQL as the SQL query interface, you can easily query data at any point of time.

The advantages of using RDS for PostgreSQL are that it is compatible with SQL syntax and has robust data analysis capabilities. For example:

1) It is supported by SQL standard and most compatible with Oracle.

2) It supports multi-dimensional analysis syntax, including grouping sets, cube, and rollup. It also supports recursive query syntax, scientific computing function library, multi-core parallel, vector computing, Just in Time (JIT), Hash JOIN, MERGE JOIN, etc.

3) It supports parallel reading and writing of OSS.

4) It supports extended data types such as array, JavaScript Object Notation (JSON), Key-Value (KV), geographical location, and full-text search.

5) It supports nine types of indexes and can accelerate the queries of most data types.

RDS for PostgreSQL can help businesses meet the requirements of more scenarios.

By connecting to OSS, you can store the binlog of databases at lower costs.

The integration of OSS and RDS for PostgreSQL integration enables you to use general SQL syntax for analyzing flow data.

Besides, you can leverage the other features of RDS for PostgreSQL, including OLAP analysis capabilities, better syntax support, and robust computing capabilities.

PostgreSQL: How to Predict Stock Price Using Univariate Linear Regression

PostgreSQL: Multi-node (Quorum-based), Zero Data Loss, and HA (Failover, Switchover) Solution

Hernan Pardo - March 31, 2020

Hernan Pardo - March 31, 2020

Apache Flink Community - March 14, 2025

Alibaba Clouder - April 10, 2018

Alipay Technology - December 26, 2019

Alibaba Cloud Project Hub - August 19, 2025

Hybrid Cloud Distributed Storage

Hybrid Cloud Distributed Storage

Provides scalable, distributed, and high-performance block storage and object storage services in a software-defined manner.

Learn More OSS(Object Storage Service)

OSS(Object Storage Service)

An encrypted and secure cloud storage service which stores, processes and accesses massive amounts of data from anywhere in the world

Learn More Storage Capacity Unit

Storage Capacity Unit

Plan and optimize your storage budget with flexible storage services

Learn More Hybrid Cloud Storage

Hybrid Cloud Storage

A cost-effective, efficient and easy-to-manage hybrid cloud storage solution.

Learn MoreMore Posts by digoal