By Yixiu

Large events such as Alibaba's Double 11 Global Shopping Festival and the Spring Festival travel rush in China involve huge numbers of people and require large amounts of computing resources for a short time. We refer to this kind of sudden, urgent demand for computing resources as pulse computation. On Alibaba Cloud, both ECS servers and databases need to cope with such pulse fluctuations to keep the entire system running smoothly and stably.

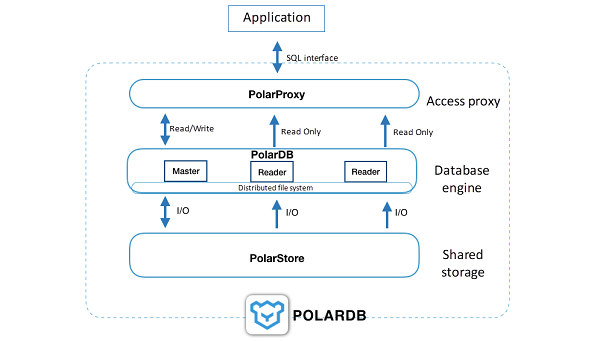

The greatest feature of PolarDB is the separation of storage and compute. Specifically, the compute node (DB Engine) and the storage node (DB Store) are on different physical servers, so every I/O operation that goes to the storage device is a network I/O operation. Some may ask about the network latency and performance. In the previous article of this series, I briefly compared the latency of using PolarFS to write three data block replicas to PolarStore over the network with the latency of writing one data block replica to a local SSD. The results are very close.

PolarDB's storage and compute separation architecture reduces storage costs, ensures high data consistency between the master and backup data, and prevents data loss. It also has another major advantage: it makes "auto scaling" of the database extremely simple and convenient.

Auto scaling is a major feature of the cloud that attracts many people to migrate their IT systems to the cloud. However, auto scaling of the database has always been an industry pain point. Unlike ECS instances that purely provide computing services, database elastic scaling has the following difficulties.

First, horizontal expansion is difficult. Databases are usually the core of business systems. Data must flow and be shared to create value. When the scale is not very large, databases are generally deployed centrally for ease of use. For example, we can use a single SQL statement to complete a query across multiple business databases. As a result, it is almost impossible to achieve linear scaling simply by adding more database servers.

Second, the database must have zero downtime. Because the database sits at the core of the system, the entire business is paralyzed if it fails. Therefore, the database must be highly available and protected from hardware failures to keep the business running without interruption. Implementing auto scaling while ensuring high availability is like changing the engine of a plane in flight. As you can imagine, it is not easy.

In addition, data is "heavy". The essential task of a database is to store data, and that data ultimately lives on a storage device. When you find that the I/O performance of your storage device is insufficient, upgrading the storage device is never an easy task. If data storage and computing are on the same physical server, the CPU core count and clock speed of that server determine the upper limit of its computing power, which makes it difficult to scale up.

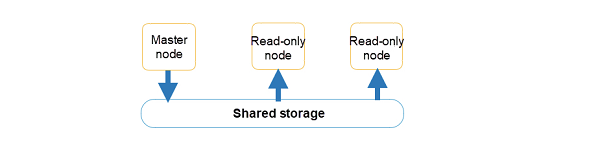

Now, when the bottleneck is gone as a result of storage and computing separation, we can finally make new progress in the field of database auto scaling by combining the architecture design of multiple nodes sharing the same data.

As shown above, PolarDB has a layered architecture. In the top layer, PolarProxy provides read-write splitting and SQL acceleration. In the middle layer, the PolarDB database engine nodes form a multiple-read single-write (MRSW) database cluster. In the bottom layer, the distributed storage PolarStore provides multi-node shared storage for the layers above it. Each of these three layers has a different role. Together, they make up a PolarDB cloud database cluster.

From the product definition of PolarDB, we can see that the node count and specification (such as 4-core, 16 GB) that a user pays for refer to the configuration of PolarDB in the middle layer. PolarProxy in the top layer is adjusted adaptively according to the PolarDB configuration. Users do not need to pay for it or be concerned about its performance or capacity. The storage of PolarStore in the bottom layer is resized automatically. Users only need to pay for the actual volume used.

Generally speaking, there are two types of database scalability - vertical scaling (also known as scaling up) and horizontal scaling (also known as scaling out). Vertical scaling is the process of upgrading the configuration, and horizontal scaling is the process of adding nodes without changing the configuration. For a database, we first use vertical scaling. For example, upgrading from 4 cores to 8 cores. However, there will be bottlenecks. On the one hand, the performance improvement is nonlinear, which is related to the database engine's own design and application access model (for example, the multi-threaded design of MySQL does not show the multi-core advantage if there is only one session). On the other hand, there are upper limits for the configuration of a physical computing server. Therefore, the ultimate means is to scale out by adding more nodes.

In short, PolarDB can be scaled out to a maximum of 16 nodes, and be scaled up to a maximum of 88 cores. The storage capacity is dynamically resized without additional configuration.
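The "scale up first, then scale out" order described above can be sketched as a small planning function. This is only an illustration, not PolarDB's actual scheduling logic: the limits of 16 nodes and 88 cores come from the text, while `ClusterSpec`, `plan_scaling`, the `CORE_STEPS` ladder, and the idea of expressing demand as a total core count are assumptions made for the example. It also ignores the nonlinear performance gain mentioned earlier.

```python
import math
from dataclasses import dataclass

MAX_NODES = 16  # scale-out limit quoted in the article
MAX_CORES = 88  # scale-up limit quoted in the article

# Hypothetical ladder of per-node core counts (only 4, 8 and 88 appear in the article).
CORE_STEPS = [4, 8, 16, 32, 64, 88]


@dataclass(frozen=True)
class ClusterSpec:
    nodes: int
    cores_per_node: int


def plan_scaling(required_cores: int, current: ClusterSpec) -> ClusterSpec:
    """Return a cluster spec whose total cores cover required_cores."""
    if required_cores <= 0:
        raise ValueError("required_cores must be positive")

    # Step 1: resize vertically. Keep the node count and pick the
    # smallest per-node specification that covers the demand.
    for cores in CORE_STEPS:
        if current.nodes * cores >= required_cores:
            return ClusterSpec(current.nodes, cores)

    # Step 2: the largest specification is still not enough, so scale out
    # by adding nodes at the maximum specification.
    nodes = math.ceil(required_cores / MAX_CORES)
    if nodes > MAX_NODES:
        raise ValueError("demand exceeds the 16-node, 88-core cluster limit")
    return ClusterSpec(nodes, MAX_CORES)
```

For example, with a two-node 4-core cluster, a demand of 16 cores is met by upgrading both nodes to 8 cores, while a demand of 400 cores forces the plan to five 88-core nodes.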

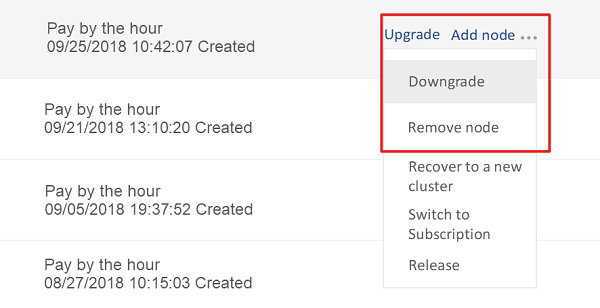

Thanks to the separation of storage and computation, we can scale the configuration of a PolarDB database node up or down independently of storage. If no data migration is involved, the whole process takes only 5-10 minutes (and is constantly being optimized). If the current server does not have enough resources, we can also quickly migrate to another server. However, when cross-server data migration is involved, there may be tens of seconds of transient disconnection (in the future, PolarProxy can eliminate this effect, and the upgrade will have no impact at all on the business application).

Because all nodes in the same cluster must be upgraded together, we adopt a gentle rolling upgrade method that further reduces the unavailable time by controlling the pace of the upgrade and adjusting when the master and backup nodes are switched.
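One way to picture such a rolling upgrade is as an ordered list of steps. The ordering below (upgrade the read nodes one at a time, switch the write role to an already upgraded node, then upgrade the old master) is an assumption about how the pace and the master/backup switch could be arranged; the article does not spell out the actual procedure, and `rolling_upgrade_plan` and the node names are hypothetical.

```python
def rolling_upgrade_plan(primary, read_nodes):
    """Return an ordered list of (action, node) steps for a rolling upgrade.

    Assumed ordering, not PolarDB's documented procedure:
    1. upgrade read nodes one by one, so read capacity never drops to zero;
    2. switch the write role to an already upgraded read node;
    3. upgrade the old primary last, when it no longer serves writes.
    """
    if not read_nodes:
        raise ValueError("a rolling upgrade needs at least one read node")

    steps = [("upgrade", node) for node in read_nodes]
    steps.append(("switchover", read_nodes[0]))
    steps.append(("upgrade", primary))
    return steps
```

Under this ordering, the only moment that affects writes is the single switchover step, which is what keeps the unavailable time short.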

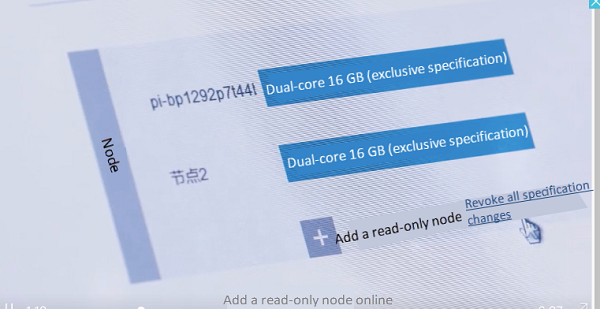

Because of shared storage, we can quickly add nodes without copying any data. The whole process only takes 5-10 minutes (being constantly optimized). Adding a node does not affect the business application. Removing a node only affects connections to the removed node, and the connections can be re-established later.

PolarProxy can dynamically detect the newly added node and automatically add it to the read nodes of the read/write splitting backend. This allows applications that connect to PolarDB through the cluster access address (read/write splitting address) to immediately enjoy better performance and throughput.
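The effect of adding a read node behind a read/write splitting address can be illustrated with a toy router. This is a minimal sketch and not PolarProxy's implementation: the `ReadWriteSplitter` class, the round-robin policy, and the node names are all assumptions, and the statement check is deliberately naive (for instance, it would send `SELECT ... FOR UPDATE` to a read node).

```python
class ReadWriteSplitter:
    """Toy read/write splitter: writes go to the primary, reads rotate."""

    def __init__(self, primary, read_nodes):
        self.primary = primary
        self.read_nodes = list(read_nodes)
        self._counter = 0  # round-robin position

    def add_read_node(self, node):
        # A newly added node starts receiving reads on the next request.
        self.read_nodes.append(node)

    def remove_read_node(self, node):
        # Only traffic that targeted this node is affected.
        self.read_nodes.remove(node)

    def route(self, sql):
        words = sql.split()
        is_read = bool(words) and words[0].upper() == "SELECT"
        if is_read and self.read_nodes:
            node = self.read_nodes[self._counter % len(self.read_nodes)]
            self._counter += 1
            return node
        # Writes, and reads when no read node exists, go to the primary.
        return self.primary
```

Because the application only talks to the splitter, calling `add_read_node` is enough for subsequent `SELECT` statements to spread across the larger pool without any change on the application side.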

You don't need to be concerned about the storage space of PolarDB. It is charged based on the actual volume used, which is automatically settled on an hourly basis.
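As a rough sketch of what hourly settlement on actual usage means, the function below sums one charge per hour based on the volume used in that hour. The unit price and the usage figures are made-up values for illustration only and are not PolarDB's real prices; `storage_cost` is a hypothetical helper.

```python
from decimal import Decimal


def storage_cost(hourly_usage_gb, price_per_gb_hour):
    """Sum the hourly charges for the storage volume actually used.

    hourly_usage_gb: one usage sample (in GB) per billed hour.
    price_per_gb_hour: hypothetical unit price as a Decimal.
    """
    return sum(
        (Decimal(str(gb)) * price_per_gb_hour for gb in hourly_usage_gb),
        Decimal("0"),
    )


# Three hours of usage at a made-up price of 0.001 per GB per hour.
usage = [100, 100, 120]
price = Decimal("0.001")
pay_as_you_go = storage_cost(usage, price)

# For comparison: paying for a fixed 500 GB volume over the same hours.
provisioned = storage_cost([500, 500, 500], price)
```

With these illustrative numbers, the usage-based charge is 0.32 against 1.5 for the fixed 500 GB volume, which is why storage does not need to be sized for the peak in advance.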

In the current design, the I/O performance is related to the specification of the database nodes. The larger the specification, the higher the IOPS and I/O throughput. I/O operations are isolated and restricted on the nodes to avoid I/O contention among multiple database clusters.

Data is stored in a storage pool that is made up of a large number of servers. For reliability, each data block has three replicas, which are stored on different servers mounted in different racks. The storage pool manages itself: it dynamically resizes the storage, balances the load, and avoids storage fragmentation and data hotspots.
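The placement rule (three replicas on different servers in different racks) can be sketched as follows. This is only an illustration of the constraint, not PolarStore's placement algorithm: `place_replicas`, the server-to-rack mapping, and the simple first-fit choice are assumptions, and a real storage pool would also weigh load and free capacity.

```python
def place_replicas(server_racks, replicas=3):
    """Pick `replicas` servers so that no two share a rack.

    server_racks: dict mapping a server name to its rack name.
    Uses a simple first-fit pass in sorted server order.
    """
    chosen = []
    used_racks = set()
    for server, rack in sorted(server_racks.items()):
        if rack not in used_racks:
            chosen.append(server)
            used_racks.add(rack)
        if len(chosen) == replicas:
            return chosen
    raise ValueError("not enough distinct racks for the requested replicas")


servers = {"s1": "rack-a", "s2": "rack-a", "s3": "rack-b", "s4": "rack-c"}
placement = place_replicas(servers)
```

Here `s2` is skipped because it shares a rack with `s1`, so losing any single server or rack leaves at least two replicas of the block available.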

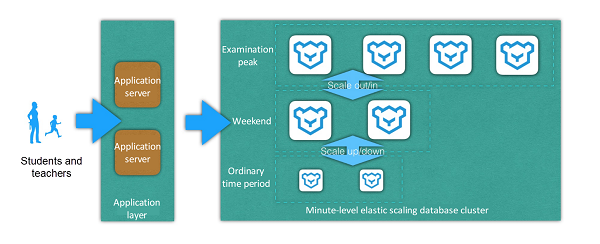

An online education company in Beijing deployed an online examination system for primary school students. The system normally has 50,000 to 100,000 users online at the same time on weekdays, 200,000 on weekends, and 500,000 to 1 million during the peak examination period. The data size is less than 500 GB. The major difficulties are highly concurrent user access, read-write contention, and high I/O. Always buying the highest configuration would cost too much. The elastic scaling capability of PolarDB allows the company to temporarily increase the database configuration and cluster scale during peak days. This reduces the overall cost by 70% compared to the previous solution.
