By Fanrui

After reading this article, you will learn:

Apache Flink supports multiple types of state backends. When the state is large, RocksDBStateBackend is the only available option.

RocksDB is a key-value (KV) database based on the Log-Structured Merge-tree (LSM-tree). Due to the severe read amplification of the LSM-tree, RocksDB requires high disk performance. We recommend using solid-state drives (SSDs) as the storage medium for RocksDB in the production environment. However, some clusters may be configured with ordinary hard disk drives (HDDs) instead of SSDs. When Flink processes large jobs with frequent state accesses, the limited I/O capacity of HDDs may become the performance bottleneck. How can we resolve this issue?

RocksDB stores data both in memory and on disk. When the state is large, disk usage is high. If RocksDB is read frequently, disk I/O restricts the performance of Flink jobs.

We recommend that you configure the state.backend.rocksdb.localdir parameter in the flink-conf.yaml file to specify the directory where RocksDB stores data on disk. When a TaskManager has three slots and only one directory is configured, the three parallel instances of a task running on a single server perform frequent read and write operations on the same disk and contend with each other for its I/O. As a result, the throughput of each parallel instance decreases.

Fortunately, the state.backend.rocksdb.localdir parameter provided by Flink allows you to specify multiple directories. In most cases, many disks are mounted to one big data server. To reduce resource contention, we want the three task slots of the same TaskManager to use different disks. The following configuration shows an example.

state.backend.rocksdb.localdir: /data1/flink/rocksdb,/data2/flink/rocksdb,/data3/flink/rocksdb,/data4/flink/rocksdb,/data5/flink/rocksdb,/data6/flink/rocksdb,/data7/flink/rocksdb,/data8/flink/rocksdb,/data9/flink/rocksdb,/data10/flink/rocksdb,/data11/flink/rocksdb,/data12/flink/rocksdb

Note: Point the directories to different disks. Do not point multiple directories to a single disk. The directories are configured to enable multiple disks to share the workload.

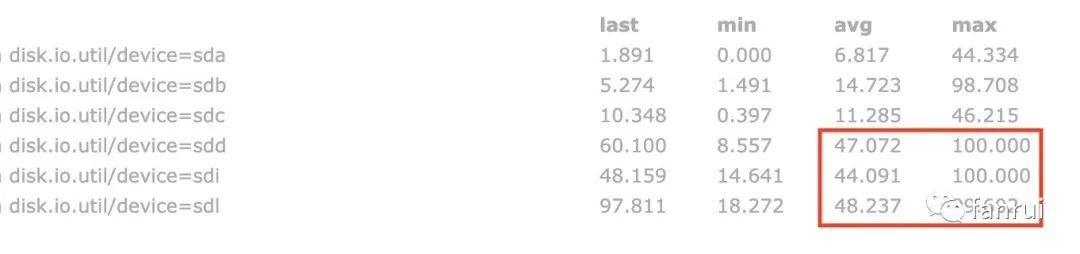

The figure above shows the disk I/O usage during a test. The three parallel instances of the large state operator correspond to three different disks. The average I/O usage of each of these disks stays at approximately 45%, and the maximum I/O usage of each is almost 100%. In contrast, the average I/O usage of the other disks is approximately 10%, which is much lower. This indicates that disks come under heavy I/O load when RocksDB is used as the state backend and holds a large state that is frequently read and written.

The preceding figure shows an ideal case. When multiple local disk directories are configured for RocksDB, Flink randomly selects a directory to use. In this case, the three parallel instances may share the same directory.

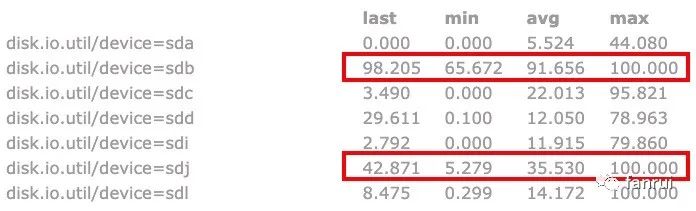

As shown in the figure above, two of the parallel instances share the disk sdb, and one uses the disk sdj. The average I/O usage of the disk sdb has reached 91.6%. In this case, the I/O of disk sdb restricts the performance of the Flink job. The throughput of the two parallel instances on disk sdb drops significantly, which lowers the overall throughput of the Flink job.

This problem is less likely to occur if a large number of hard disks are mounted to the server. However, if the throughput is low after a restart, check whether multiple parallel instances share the same disk.

In Flink, multiple parallel instances may share the same disk. If this happens, what can we do?

Assume that 12 disks are allocated to RocksDB and only three parallel instances need to use them, ideally with each instance using its own disk. If every instance picks a disk uniformly at random, the probability that at least two instances share a disk is about 24% (1 - 12/12 × 11/12 × 10/12), and the probability that all three share one disk is about 0.7% (1/144). When such a collision happens, the performance of the Flink job is easily restricted by disk I/O.
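The likelihood of such collisions can be estimated with a short calculation. The following is a minimal sketch, assuming that each parallel instance picks one of the directories uniformly and independently; the class and method names are illustrative and are not part of Flink.

```java
import java.util.Locale;

final class DiskCollisionProbability {

    /** Probability that all instances pick different disks (uniform, independent picks). */
    static double allDistinct(int disks, int instances) {
        if (instances > disks) {
            return 0.0; // more instances than disks: a collision is unavoidable
        }
        double p = 1.0;
        for (int i = 0; i < instances; i++) {
            p *= (double) (disks - i) / disks;
        }
        return p;
    }

    /** Probability that every instance picks the same disk. */
    static double allSame(int disks, int instances) {
        return Math.pow(1.0 / disks, instances - 1);
    }

    public static void main(String[] args) {
        int disks = 12;
        int instances = 3;
        double distinct = allDistinct(disks, instances);
        System.out.println(String.format(Locale.ROOT, "all distinct:       %.4f", distinct));      // 0.7639
        System.out.println(String.format(Locale.ROOT, "at least two share: %.4f", 1 - distinct));  // 0.2361
        System.out.println(String.format(Locale.ROOT, "all three share:    %.4f", allSame(disks, instances))); // 0.0069
    }
}
```

With 12 disks and three instances, roughly one start in four places two instances on the same disk, which matches the observation that the problem shows up regularly but not every time.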

Allocating disks in this way is a load balancing problem. Common load balancing policies in the industry include the hash, random, and round robin policies, as well as the minimum load and weighted policies.

With a hash policy, the system hashes each job and uses the result to distribute the workload to multiple workers. In the preceding scenario, this means that the RocksDB directories used by multiple slots are spread across multiple disks to share the workload. However, a hash collision may occur. Different parallel instances may produce the same hashCode, or they may produce different hashCode values that leave the same remainder when divided by the number of disks. In both cases, the instances are assigned to the same disk.
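Both kinds of hash collision can be reproduced in a few lines. This is an illustrative sketch only: the class name, the use of hashCode on an arbitrary key, and the sample keys are assumptions made for the example and do not reflect how Flink assigns directories.

```java
final class HashDiskAssignment {

    /** Maps a key to a disk index; floorMod keeps the index non-negative for negative hash codes. */
    static int diskFor(Object key, int diskCount) {
        return Math.floorMod(key.hashCode(), diskCount);
    }

    public static void main(String[] args) {
        int diskCount = 12;

        // Case 1: different keys, identical hashCode ("Aa" and "BB" both hash to 2112).
        System.out.println("Aa".hashCode() == "BB".hashCode());                      // true
        System.out.println(diskFor("Aa", diskCount) == diskFor("BB", diskCount));    // true

        // Case 2: different hashCode values, same remainder (1 % 12 == 13 % 12).
        System.out.println(diskFor(1, diskCount));   // 1
        System.out.println(diskFor(13, diskCount));  // 1
    }
}
```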

The random policy generates a random number for each job and uses it to pick a worker. In the preceding scenario, each parallel instance is randomly assigned to a disk. However, two instances may draw the same random number and end up on the same disk.

The round robin policy is easy to understand: workers receive jobs in turn. In the preceding scenario, directory 1 is allocated the first time a RocksDB directory is requested, directory 2 is allocated the second time, and so on. This policy allocates jobs most evenly. If this policy is used, the maximum difference between the number of jobs allocated to different disks is 1.

The minimum load policy allocates jobs based on the response time of workers. If the response time of a worker is short, the worker has a light load, and you can allocate more jobs to it. In the preceding scenario, this policy checks the I/O usage of each disk. A low I/O usage indicates that the disk is relatively idle, and you can allocate more jobs to the disk.

The weighted policy assigns different weights to workers. A worker with a higher weight is allocated more jobs. The number of jobs allocated to a worker is in direct proportion to the weight of the worker.

For example, if Worker0 is allocated a weight of 2 and Worker1 is allocated a weight of 1, the system will try to allocate jobs so that the number of jobs allocated to Worker0 is twice that of Worker1. This policy may not be suitable for the current business scenario. Generally, the load capacity of the disks on the same server does not vary a lot, unless the local directories of RocksDB include both SSD and HDD directories.

When Flink 1.8.1 is used in production, some disks may be allocated multiple parallel instances, while other disks may not be allocated any. This usually happens when the source code uses a hash or random policy. In most cases, each disk is allocated only one job, and multiple jobs are allocated to one disk only occasionally. Therefore, the problem to be resolved is this occasional case in which multiple jobs are allocated to one disk.

If you use a round robin policy, the system ensures that each disk is allocated one parallel instance before a second one is allocated to any disk. In addition, the round robin policy ensures that the indexes of the allocated disks are consecutive.

Let's look at some source code of the RocksDBStateBackend class:

/**
 * Base paths for RocksDB directory, as initialized.
 * These are the 12 local RocksDB directories that we specified in the preceding section.
 */
private transient File[] initializedDbBasePaths;

/**
 * The index of the next directory to be used from {@link #initializedDbBasePaths}.
 * If nextDirectory is 2, the directory at index 2 of initializedDbBasePaths is used as the storage directory of RocksDB.
 */
private transient int nextDirectory;

// In the lazyInitializeForJob method, this line of code determines the index of the directory to be used next time.
// It generates a random number bounded by initializedDbBasePaths.length.
// If initializedDbBasePaths.length is 12, the random number ranges from 0 to 11.
nextDirectory = new Random().nextInt(initializedDbBasePaths.length);

This source code tells us that the random policy is used to allocate directories, which explains the issues we witnessed. Conflicts do not occur on every run, but random allocation makes them possible. When I wrote this article, the latest master branch code in Flink still used the preceding policy and had not been modified.

When a large number of jobs exist, the random and hash policies give each worker roughly the same number of jobs. However, when only a small number of jobs exist, for example, when 20 jobs are allocated to 10 workers using the random algorithm, some workers may not be allocated any jobs, and some workers may be allocated three or four jobs. In this case, the random and hash policies cannot resolve the problem of uneven job allocation to disks in RocksDB. What about the round robin policy or the minimum load policy?
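The difference between the two approaches for a small number of jobs can be seen in a quick simulation. The sketch below is an assumption-laden illustration (hypothetical class name, a fixed seed chosen only for repeatability) that counts how many of 20 jobs land on each of 10 workers under random allocation and under round robin.

```java
import java.util.Arrays;
import java.util.Random;

final class AllocationSimulation {

    /** Assigns each job to a uniformly random worker and returns the job count per worker. */
    static int[] randomAllocation(int jobs, int workers, Random random) {
        int[] counts = new int[workers];
        for (int j = 0; j < jobs; j++) {
            counts[random.nextInt(workers)]++;
        }
        return counts;
    }

    /** Assigns jobs to workers in turn and returns the job count per worker. */
    static int[] roundRobinAllocation(int jobs, int workers) {
        int[] counts = new int[workers];
        for (int j = 0; j < jobs; j++) {
            counts[j % workers]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        // Random allocation: the counts vary from run to run and are typically uneven.
        System.out.println("random:      " + Arrays.toString(randomAllocation(20, 10, new Random(42))));
        // Round robin: every worker receives exactly 2 of the 20 jobs.
        System.out.println("round robin: " + Arrays.toString(roundRobinAllocation(20, 10)));
    }
}
```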

The round robin policy can resolve the preceding problem in the following way:

// Define the following code in the RocksDBStateBackend class:
private static final AtomicInteger DIR_INDEX = new AtomicInteger(0);

// Change the policy for allocating nextDirectory to the following code. Each time a directory is allocated, the current value of DIR_INDEX is read and then incremented by 1, and the remainder of the value read divided by the total number of directories is used as the index.
nextDirectory = DIR_INDEX.getAndIncrement() % initializedDbBasePaths.length;

The preceding code implements the round robin policy. This way, disk 0 is allocated the first time a parallel instance applies for a disk, disk 1 is allocated next, and so on.
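To experiment with this idea outside of Flink, the counter can be wrapped in a small standalone class. The following is a sketch under stated assumptions: RoundRobinDirectorySelector is a hypothetical name, not a Flink class, and Math.floorMod replaces the % operator as a defensive choice so that the index stays non-negative even if the int counter ever overflows.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class RoundRobinDirectorySelector {

    private final AtomicInteger counter;
    private final int directoryCount;

    RoundRobinDirectorySelector(int directoryCount, int start) {
        if (directoryCount <= 0) {
            throw new IllegalArgumentException("directoryCount must be positive");
        }
        this.directoryCount = directoryCount;
        this.counter = new AtomicInteger(start);
    }

    /** Returns the next directory index in round robin order; safe to call from multiple threads. */
    int next() {
        return Math.floorMod(counter.getAndIncrement(), directoryCount);
    }

    public static void main(String[] args) {
        RoundRobinDirectorySelector selector = new RoundRobinDirectorySelector(12, 0);
        // Three slots of one TaskManager receive directories 0, 1, and 2.
        System.out.println(selector.next() + ", " + selector.next() + ", " + selector.next());
    }
}
```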

In Java, static variables are scoped to the Java Virtual Machine (JVM), and each TaskManager runs in a separate JVM. Therefore, the round robin policy is implemented within each TaskManager. If multiple TaskManagers run on the same server, they all start writing data from the disk with an index of 0. As a result, disks with lower indexes are used more frequently, while disks with higher indexes may be used infrequently.

When you initialize DIR_INDEX, do not initialize it to 0 each time. Instead, you can initialize DIR_INDEX to a random number. This ensures that disks with smaller indexes are not used first each time. The following sample code shows the implementation:

// Define the following code in the RocksDBStateBackend class:
private static final AtomicInteger DIR_INDEX = new AtomicInteger(new Random().nextInt(100));

However, the preceding solution cannot completely resolve disk conflicts. When a server contains 12 disks, TaskManager0 may use disks with the indexes of 0, 1, and 2, and TaskManager1 may use disks with the indexes of 1, 2, and 3. In this case, although the round robin policy is implemented within a TaskManager to ensure load balancing, the load is not globally balanced.
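The overlap between two TaskManagers can be made concrete by counting the parallel instances per disk. This is a minimal sketch with hypothetical names; the starting offsets 0 and 1 are simply the values from the example above, standing in for whatever random start each TaskManager happens to draw.

```java
import java.util.Arrays;

final class TaskManagerOverlap {

    /**
     * Counts parallel instances per disk when several TaskManagers on one server each run
     * a local round robin over the same disks, beginning at their own start offset.
     */
    static int[] load(int diskCount, int slotsPerTaskManager, int... starts) {
        int[] instancesPerDisk = new int[diskCount];
        for (int start : starts) {
            for (int slot = 0; slot < slotsPerTaskManager; slot++) {
                instancesPerDisk[Math.floorMod(start + slot, diskCount)]++;
            }
        }
        return instancesPerDisk;
    }

    public static void main(String[] args) {
        // TaskManager0 starts at disk 0, TaskManager1 starts at disk 1, three slots each.
        System.out.println(Arrays.toString(load(12, 3, 0, 1)));
        // prints [1, 2, 2, 1, 0, 0, 0, 0, 0, 0, 0, 0]: disks 1 and 2 are shared, eight disks are idle
    }
}
```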

To achieve global load balancing, the TaskManagers on the same server must coordinate with each other. You can use third-party storage for this coordination. For example, in Zookeeper, you can create one znode for each server and name it after the host name or IP address of the server. You can then use Curator's DistributedAtomicInteger to maintain the DIR_INDEX variable and store it in the znode of the current server. When any TaskManager applies for a disk, it uses DistributedAtomicInteger to increase the DIR_INDEX value of the current server by 1. This way, a global round robin policy is implemented.

How DistributedAtomicInteger increments values: it first attempts an optimistic update through the withVersion API, which applies the compare-and-set (CAS) concept to Zookeeper, to increment the value by 1. If the attempt succeeds, the value increases by 1. If the attempt fails, a distributed mutex is used to increment the value by 1.
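The effect of a counter shared by all TaskManagers on a server can be sketched without a Zookeeper cluster. In the example below, which is an illustration and not the production implementation, a local AtomicInteger stands in for the per-server counter; in a real deployment the supplier would be backed by the Zookeeper counter described above. GlobalRoundRobin and DirectorySelector are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntSupplier;

final class GlobalRoundRobin {

    /** One selector per TaskManager; all selectors on the same server share one counter. */
    static final class DirectorySelector {
        private final IntSupplier sharedCounter; // must return the value before the increment
        private final int directoryCount;

        DirectorySelector(IntSupplier sharedCounter, int directoryCount) {
            this.sharedCounter = sharedCounter;
            this.directoryCount = directoryCount;
        }

        int next() {
            return Math.floorMod(sharedCounter.getAsInt(), directoryCount);
        }
    }

    public static void main(String[] args) {
        // Stand-in for the per-server counter that would live in Zookeeper (assumption).
        AtomicInteger serverCounter = new AtomicInteger(0);
        DirectorySelector taskManager0 = new DirectorySelector(serverCounter::getAndIncrement, 12);
        DirectorySelector taskManager1 = new DirectorySelector(serverCounter::getAndIncrement, 12);

        // Because the counter is shared, the two TaskManagers never pick the same disk
        // until all 12 disks have been handed out.
        System.out.println("TM0: " + taskManager0.next() + ", " + taskManager0.next() + ", " + taskManager0.next()); // TM0: 0, 1, 2
        System.out.println("TM1: " + taskManager1.next() + ", " + taskManager1.next() + ", " + taskManager1.next()); // TM1: 3, 4, 5
    }
}
```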

This tells us that we can use two policies to implement round robin. AtomicInteger can only ensure round robin within a TaskManager, but cannot ensure global round robin. To implement global round robin, you must use Zookeeper or another component. If a global round robin policy is absolutely necessary, you can use a Zookeeper-based round robin policy. If you do not want to depend on external components, you can use AtomicInteger.

The minimum load policy monitors the average I/O usage of all disks corresponding to the local directories of RocksDB for the previous one to five minutes when a TaskManager starts, filters out disks with high I/O usage, and preferentially selects disks with low average I/O usage. In this scenario, a round robin policy is still implemented for the disks with low average I/O usage.
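The filtering step followed by round robin can be sketched as follows. This is only an outline of the idea, since the policy was not implemented for this article: the class name, the threshold parameter, and the fallback to all disks when every disk is busy are assumptions, and the utilization figures are assumed to come from an external monitor.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

final class LowLoadDirectorySelector {

    private final List<Integer> candidates = new ArrayList<>();
    private final AtomicInteger counter = new AtomicInteger(0);

    /**
     * @param avgIoUtil average I/O utilization per disk (0.0 to 1.0) over the recent window
     * @param threshold disks above this utilization are filtered out (hypothetical parameter)
     */
    LowLoadDirectorySelector(double[] avgIoUtil, double threshold) {
        if (avgIoUtil.length == 0) {
            throw new IllegalArgumentException("at least one disk is required");
        }
        for (int disk = 0; disk < avgIoUtil.length; disk++) {
            if (avgIoUtil[disk] <= threshold) {
                candidates.add(disk);
            }
        }
        if (candidates.isEmpty()) {
            // Every disk is busy: fall back to plain round robin over all disks.
            for (int disk = 0; disk < avgIoUtil.length; disk++) {
                candidates.add(disk);
            }
        }
    }

    /** Round robin over the lightly loaded disks only. */
    int next() {
        return candidates.get(Math.floorMod(counter.getAndIncrement(), candidates.size()));
    }

    public static void main(String[] args) {
        double[] avgIoUtil = {0.90, 0.10, 0.95, 0.20}; // disks 0 and 2 are busy
        LowLoadDirectorySelector selector = new LowLoadDirectorySelector(avgIoUtil, 0.5);
        System.out.println(selector.next() + ", " + selector.next() + ", " + selector.next()); // 1, 3, 1
    }
}
```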

Alternatively, do not measure the load of disks during startup, and rely on DistributedAtomicInteger to balance the load between disks. If the average I/O usage of a disk rises relative to other disks some time after a Flink job starts, you can choose to migrate data from the heavily loaded disk to a lightly loaded disk.

According to our analysis, the minimum load policy is difficult to use, and I have not implemented this policy.

This article analyzes problems that occur in scenarios where Flink uses large states. It also provides several solutions.

Currently, I have implemented the random policy, the round robin policy within a TaskManager, and the Zookeeper-based global round robin policy, and I have applied them to the production environment. Any of these policies can be selected in the flink-conf.yaml file as needed. So far, the Zookeeper-based global round robin policy has worked well. In the future, I will continue to share my experiences with the community.
