By Zhang Cheng (Yuanyi), Alibaba Cloud Storage Service Technical Expert

In recent years, more and more users have asked us how to build a log system for Kubernetes or how to solve the problems they run into along the way. This article distills our years of experience in building log systems to give you a shortcut to building one for Kubernetes. It is the third article in a Kubernetes-related series that focuses on our practical experience. The content is subject to updates as the technology involved evolves.

In the previous article, Design and Practices of Kubernetes Log Systems, I introduced how to build a Kubernetes log system from a global perspective. This article focuses on practice and describes, step by step, how to build a log monitoring system for Kubernetes.

The first step in building a log system is to generate logs. This is often the most complicated and difficult step.

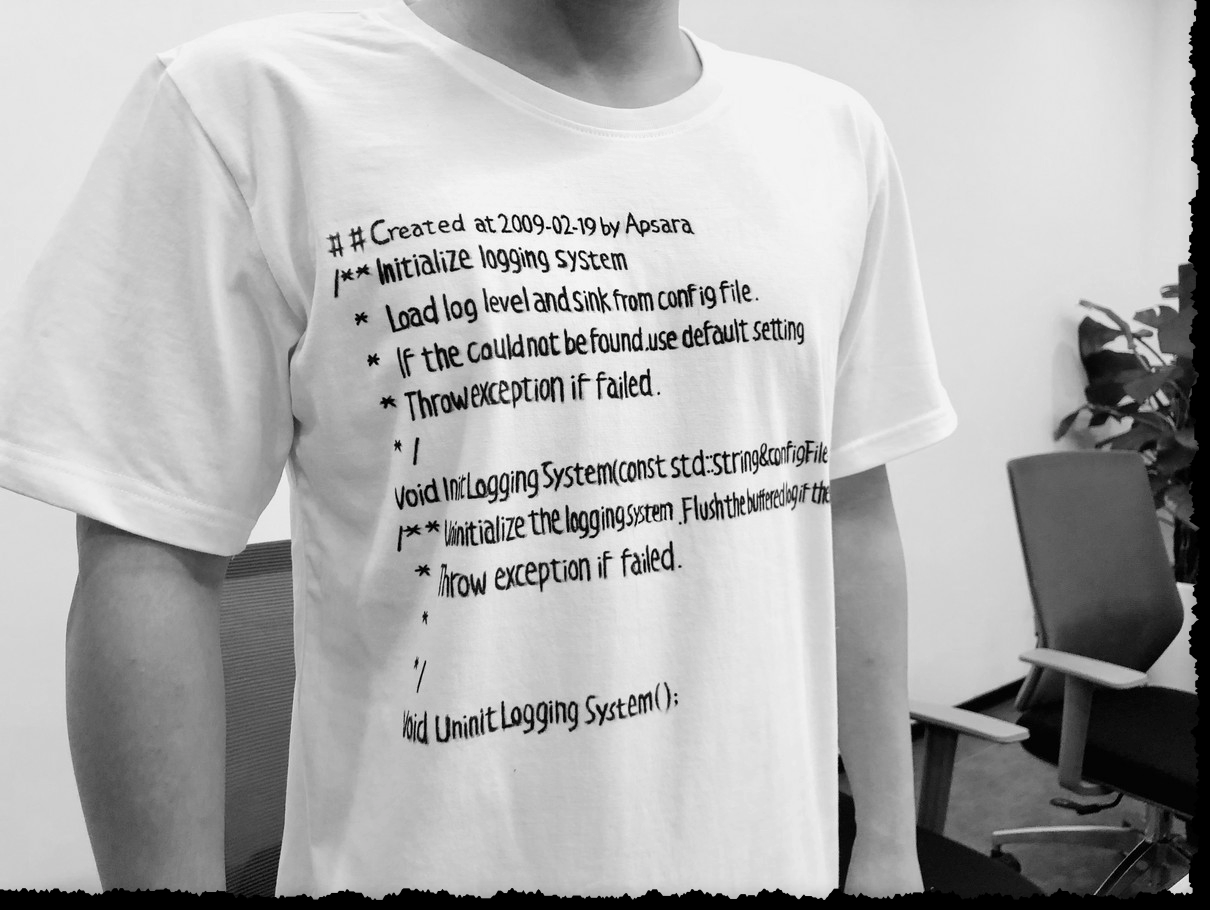

On the first working day after the Chinese New Year Festival in 2009, in a freezing office in Beijing, a group of engineers wrote the first line of code for "Apsara." The Apsara platform is the core technical platform of Alibaba Cloud. It was named after an Angkor mythological deity.

The Alibaba Cloud Apsara system was originally designed as a log system. Now, Logstores of the Apsara logging system are applied in all Apsara systems including Apsara Distributed File System, Apsara Name Service and Distributed Lock Synchronization System, Job Scheduler, and Cloud Network Management.

Logs are essentially records about how an application runs. Many functions are derived from logs, such as online monitoring, alerting, operation analysis, and security analysis. In turn, these functions have certain requirements for logs. The logs must be standardized to reduce the costs of log collection, parsing, and analysis.

In Kubernetes, the environment is highly dynamic and logs are volatile, so logs must be collected to centralized storage in real time. To facilitate log collection, log output must meet a series of requirements.

The following describes the common considerations for log output in Kubernetes. Items marked with asterisks (*) are Kubernetes-specific.

Every log must have a log level, which is used to determine the severity of log events. Logs are usually classified into six levels:
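As a minimal sketch of how a six-level scheme can be wired into a log library, the example below uses Python's standard logging module. The six names used here (FATAL, ERROR, WARN, INFO, DEBUG, TRACE) follow a widely used convention and are an assumption of this sketch. Python has no built-in TRACE level, so the sketch registers one; the numeric value 5 and the logger name are illustrative choices.

```python
import logging

# Assumption: a common six-level scheme. Python ships five of these levels;
# TRACE is registered here as a custom level below DEBUG (10).
TRACE = 5
logging.addLevelName(TRACE, "TRACE")

LEVELS = {
    "FATAL": logging.CRITICAL,  # 50
    "ERROR": logging.ERROR,     # 40
    "WARN": logging.WARNING,    # 30
    "INFO": logging.INFO,       # 20
    "DEBUG": logging.DEBUG,     # 10
    "TRACE": TRACE,             # 5
}


def make_logger(name: str, level_name: str) -> logging.Logger:
    """Return a logger that drops every event below the named level."""
    logger = logging.getLogger(name)
    logger.setLevel(LEVELS[level_name])
    return logger


# Hypothetical logger name; INFO is a typical threshold for online services.
log = make_logger("demo.levels", "INFO")
```

With the threshold at INFO, calls such as log.debug(...) are discarded before any formatting work is done, which is one reason the level of each statement matters.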

Programmers must set the log level properly. Here is some of my in-practice experience from development:

Programmers generally write code in unconstrained styles, resulting in a hodge-podge of log content. These logs can be understood only by the developers themselves, making analysis and alerting difficult. Therefore, you need a set of top-down log specifications to constrain developers, so that all logs appear to be output by one person and can be analyzed easily.

Generally, a log contains the required Time, Level, and Location fields. Specific modules, processes, or businesses may also require some common fields. For example:

Log field specifications are best enforced top-down by the O&M platform or middleware platform, so that the programmers of every module or process output logs according to the same specifications.
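One possible way to enforce such a specification is to have the platform team ship a single logger factory that fixes the layout and injects the common fields. The sketch below uses Python's standard logging module; the field names module_name and request_id, and the factory name get_standard_logger, are hypothetical and only stand in for whatever common fields a given business requires.

```python
import io
import logging

# Required fields: Time, Level, Location. The two common fields
# (module_name, request_id) are hypothetical examples.
STANDARD_FORMAT = (
    "[%(asctime)s] [%(levelname)s] [%(filename)s:%(lineno)d] "
    "module:%(module_name)s request_id:%(request_id)s msg:%(message)s"
)


def get_standard_logger(name, stream, module_name, request_id="-"):
    """Build a logger whose every record carries the agreed fields."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(STANDARD_FORMAT))
    base = logging.getLogger(name)
    base.handlers.clear()
    base.addHandler(handler)
    base.setLevel(logging.INFO)
    base.propagate = False
    # LoggerAdapter attaches the common fields to each record via `extra`.
    return logging.LoggerAdapter(
        base, {"module_name": module_name, "request_id": request_id}
    )


buf = io.StringIO()
log = get_standard_logger("demo.fields", buf, "billing", "req-42")
log.info("order created")
output = buf.getvalue()
```

Because developers only call the factory, the field set and ordering stay identical across modules without each team having to remember the rules.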

Generally, we recommend that you use the key-value pair format. Alibaba Cloud's Apsara Logstore uses this format:

[2019-12-30 21:45:30.611992] [WARNING] [958] [block_writer.cpp:671] path:pangu://localcluster/index/3/prom/7/1577711464522767696_0_1577711517 min_time:1577712000000000 max_time:1577715600000000 normal_count:27595 config:prom start_line:57315569 end_line:57343195 latency(ms):42 type:AddBlock

Logs in the key-value pair format are completely self-parsable and easy to understand. They are automatically parsed during log collection.
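To illustrate why this format is easy to parse, here is a minimal Python sketch that splits the sample line into fields. It is not the parser used by any particular collection agent. It assumes four bracketed header fields followed by space-separated key:value tokens whose values contain no spaces, and the name "id" for the third header field is a guess, since the article does not say what 958 represents.

```python
import re

# Four bracketed header fields, then the key:value body.
HEADER = re.compile(
    r"\[([^\]]*)\] \[([^\]]*)\] \[([^\]]*)\] \[([^\]]*)\]\s*(.*)"
)


def parse_kv_line(line: str) -> dict:
    """Parse one log line into a flat dict of strings."""
    match = HEADER.match(line)
    if match is None:
        raise ValueError("line does not match the expected header layout")
    time, level, ident, location, body = match.groups()
    record = {"time": time, "level": level, "id": ident, "location": location}
    for token in body.split():
        # Split on the first colon only, so values such as pangu://... survive.
        key, sep, value = token.partition(":")
        if sep:
            record[key] = value
    return record


SAMPLE = (
    "[2019-12-30 21:45:30.611992] [WARNING] [958] [block_writer.cpp:671] "
    "path:pangu://localcluster/index/3/prom/7/1577711464522767696_0_1577711517 "
    "min_time:1577712000000000 max_time:1577715600000000 normal_count:27595 "
    "config:prom start_line:57315569 end_line:57343195 latency(ms):42 "
    "type:AddBlock"
)
record = parse_kv_line(SAMPLE)
```

No per-message parsing rule is needed: any new key that a developer adds shows up as a new field automatically.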

Another recommended log format is JSON. It is widely supported by many log libraries and most log collection agents.

{"addr":"tcp://0.0.0.0:10010","caller":"main.go:98","err":"listen tcp: address tcp://0.0.0.0:10010: too many colons in address","level":"error","msg":"Failed to listen","ts":"2019-03-08T10:02:47.469421Z"}

Note: In most scenarios, do not use non-readable log formats such as ProtoBuf and Binlog.

Do not output a log into multiple lines unless necessary. This increases the costs of log collection, parsing, and indexing.
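A JSON formatter is one way to satisfy both recommendations at once, because JSON string escaping keeps even a multi-line stack trace on a single line. The following is a minimal sketch using only Python's standard library, not a replacement for a mature JSON log library. The field names ts, level, caller, msg, and err mirror the sample entry above; the class name JsonFormatter is hypothetical.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object on one line."""

    def format(self, record):
        entry = {
            "ts": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname.lower(),
            "caller": f"{record.filename}:{record.lineno}",
            "msg": record.getMessage(),
        }
        if record.exc_info:
            # The traceback contains newlines; json.dumps escapes them as \n.
            entry["err"] = self.formatException(record.exc_info)
        return json.dumps(entry, sort_keys=True)


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("demo.json")
logger.handlers.clear()
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False

try:
    1 / 0
except ZeroDivisionError:
    logger.exception("Failed to divide")

output = stream.getvalue()
```

The collector can then treat every newline as a record boundary, with no multi-line merging rules to maintain.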

The output volume of logs directly affects disk utilization and application performance. An excessive output hinders log viewing, collection, and analysis. By contrast, an excessively low output hinders monitoring and can make troubleshooting impossible.

The log data volume must be properly controlled for online applications:
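One common technique for capping volume, shown here as a sketch rather than a prescribed method, is to rate-limit each log statement so that a hot loop cannot flood the disk. The filter below uses Python's standard logging module; the class name RateLimitFilter, its parameters, and the limits in the demo are all hypothetical.

```python
import logging
import time


class RateLimitFilter(logging.Filter):
    """Allow at most `max_per_interval` records per call site per interval."""

    def __init__(self, max_per_interval=10, interval_seconds=1.0,
                 clock=time.monotonic):
        super().__init__()
        self.max_per_interval = max_per_interval
        self.interval_seconds = interval_seconds
        self.clock = clock
        self._windows = {}  # (pathname, lineno) -> (window_start, count)

    def filter(self, record):
        key = (record.pathname, record.lineno)
        now = self.clock()
        start, count = self._windows.get(key, (now, 0))
        if now - start >= self.interval_seconds:
            start, count = now, 0  # the window expired, start a new one
        count += 1
        self._windows[key] = (start, count)
        return count <= self.max_per_interval


# Demo with a fake clock so the behavior is deterministic.
fake_now = [0.0]
limiter = RateLimitFilter(2, 1.0, clock=lambda: fake_now[0])
record = logging.LogRecord("demo", logging.INFO, "app.py", 10,
                           "hot loop", None, None)
decisions = [limiter.filter(record) for _ in range(5)]
```

In an application, the filter would be attached with handler.addFilter(limiter). Records that exceed the limit are dropped silently, so a production version might also count the drops and report them periodically.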

We recommend that you output different types of logs of one application to different targets or files to facilitate collection, viewing, and monitoring by category. For example:
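As a sketch of this separation in Python's standard logging module, each category can get its own logger and its own file. The category names access, error, and audit, the app.* logger names, and the function build_loggers are hypothetical.

```python
import logging
import os
import tempfile


def build_loggers(log_dir, categories=("access", "error", "audit")):
    """Create one logger per category, each writing to <category>.log."""
    os.makedirs(log_dir, exist_ok=True)
    fmt = logging.Formatter("[%(asctime)s] [%(levelname)s] %(message)s")
    loggers = {}
    for category in categories:
        path = os.path.join(log_dir, f"{category}.log")
        handler = logging.FileHandler(path, encoding="utf-8")
        handler.setFormatter(fmt)
        logger = logging.getLogger(f"app.{category}")
        logger.handlers.clear()
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
        logger.propagate = False  # keep categories from mixing via the root
        loggers[category] = logger
    return loggers


# Demo in a temporary directory so nothing is left behind.
with tempfile.TemporaryDirectory() as tmp:
    loggers = build_loggers(tmp)
    loggers["access"].info("GET /orders 200")
    loggers["error"].error("payment gateway timeout")
    for logger in loggers.values():
        for handler in logger.handlers:
            handler.close()
    with open(os.path.join(tmp, "access.log"), encoding="utf-8") as f:
        access_text = f.read()
    with open(os.path.join(tmp, "error.log"), encoding="utf-8") as f:
        error_text = f.read()
```

Each file can then be collected with its own parsing rule and retention policy, and an alert on the error log does not have to scan access traffic.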

In a business system, logs are an auxiliary module and must not affect the normal operation of business. You must pay special attention to the resource consumption of the log module. When you select or develop a log library, carry out a performance test on it. Make sure the resource consumption of logs accounts for less than 5% of the overall CPU utilization under normal circumstances.
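A rough way to run such a test is to time the same workload with and without log calls and compare CPU time. The sketch below is only an approximation: the function names, the workload, and the iteration count are hypothetical, the NullHandler should be replaced with the handler and formatter used in production, and a serious benchmark would repeat the measurement several times.

```python
import logging
import time


def cpu_seconds(fn, iterations):
    """CPU time (not wall time) consumed by calling fn(i) repeatedly."""
    start = time.process_time()
    for i in range(iterations):
        fn(i)
    return time.process_time() - start


def logging_cpu_share(work, log_call, iterations=20000):
    """Estimate the fraction of CPU time attributable to logging."""
    def combined(i):
        work(i)
        log_call(i)

    baseline = cpu_seconds(work, iterations)
    with_logs = cpu_seconds(combined, iterations)
    if with_logs <= 0:
        return 0.0
    # Clamp at zero: measurement noise can make the difference negative.
    return max(0.0, (with_logs - baseline) / with_logs)


logger = logging.getLogger("demo.perf")
logger.handlers.clear()
logger.addHandler(logging.NullHandler())  # swap in the real handler to test it
logger.setLevel(logging.INFO)
logger.propagate = False

share = logging_cpu_share(
    lambda i: sum(range(200)),                      # stand-in business work
    lambda i: logger.info("processed item %d", i),  # one log call per item
    iterations=5000,
)
```

The returned share can be compared against the 5% budget; the result depends heavily on how much real work each request does per log line.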

Note: Log output must be asynchronous and must not jam the business system.
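In Python, for example, asynchronous output can be sketched with the standard library's QueueHandler and QueueListener: the business thread only enqueues the record, and a background thread does the slow I/O. The helper name start_async_logging and the queue size are hypothetical.

```python
import io
import logging
import logging.handlers
import queue


def start_async_logging(logger, target_handler, max_queued=10000):
    """Route the logger's records through a bounded in-memory queue."""
    log_queue = queue.Queue(maxsize=max_queued)
    # QueueHandler enqueues without blocking. If the queue is full, the
    # record is dropped and reported through the handler's handleError().
    logger.addHandler(logging.handlers.QueueHandler(log_queue))
    listener = logging.handlers.QueueListener(log_queue, target_handler)
    listener.start()  # background thread that writes to target_handler
    return listener


sink = io.StringIO()
logger = logging.getLogger("demo.async")
logger.handlers.clear()
logger.setLevel(logging.INFO)
logger.propagate = False

listener = start_async_logging(logger, logging.StreamHandler(sink))
logger.info("request handled")
listener.stop()  # drains the queue and joins the background thread
written = sink.getvalue()
```

The bounded queue is a deliberate trade-off: under extreme load, the application loses some log entries instead of stalling request handling.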

There are dozens of open-source log libraries in each programming language. When you select a log library for your company or business, use a stable version of a mainstream log library whenever possible. For example:

In a virtual machine or physical machine scenario, most applications write logs to files, and only certain system applications write logs to syslog or the journal. Containers add one more option: standard output. In this mode, applications write logs to stdout or stderr, and the logs are passed to the Docker log module. You can view the logs by running docker logs or kubectl logs.

The standard output mode of containers applies only to relatively simple applications and some system components of Kubernetes. By contrast, online service applications usually involve multiple levels (middleware) and interact with a variety of services. As a result, an online service application generates multiple types of logs. If all these logs are output in container standard output mode, it is difficult to differentiate and process them.

The container standard output mode greatly reduces the performance of DockerEngine. In one test, every additional 100,000 log entries per second consumed one more DockerEngine CPU core (100% of a single core).

In Kubernetes, you can directly integrate a log library with a log system. Logs then go straight to the backend of the log system without being flushed to disk. Because logs are neither stored on disk nor collected by agents, overall performance improves greatly.

However, we recommend that you use this method only in scenarios with very large log volumes. In general, flushing logs to disk improves overall reliability because the files act as an additional cache: in the event of a network failure, data is still cached locally, and developers and O&M engineers can check the log files when the log system is unavailable.
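The trade-off can be illustrated with a handler that sends each entry directly to the backend and falls back to a local file only when sending fails. This is a sketch of the idea, not a feature of any particular log service: the class name BackendWithDiskFallback is hypothetical, and send stands for whatever client call a real log backend would provide.

```python
import logging
import os
import tempfile


class BackendWithDiskFallback(logging.Handler):
    """Send records to a backend; cache them on disk if sending fails."""

    def __init__(self, send, fallback_path):
        super().__init__()
        self.send = send  # hypothetical callable: send(line), raises OSError
        self.fallback_path = fallback_path

    def emit(self, record):
        line = self.format(record)
        try:
            self.send(line)
        except OSError:
            # Network failure: keep the entry locally so it is not lost.
            with open(self.fallback_path, "a", encoding="utf-8") as f:
                f.write(line + "\n")


def unreachable_backend(line):
    # Simulated outage; ConnectionError is a subclass of OSError.
    raise ConnectionError("log backend unreachable")


with tempfile.TemporaryDirectory() as tmp:
    fallback = os.path.join(tmp, "fallback.log")
    logger = logging.getLogger("demo.fallback")
    logger.handlers.clear()
    logger.addHandler(BackendWithDiskFallback(unreachable_backend, fallback))
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.warning("disk almost full")
    with open(fallback, encoding="utf-8") as f:
        cached = f.read()
```

A production version would also need to replay the cached file once the backend recovers, and should send asynchronously so that a slow backend cannot block the application.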

Kubernetes provides multiple storage methods. For an off-premises application, you can choose local storage, remote file storage, or object storage. Log writing involves a high number of queries per second (QPS) and directly affects application performance, and remote storage requires two or three additional network interactions. Therefore, we recommend that you use local storage, such as hostPath and emptyDir volumes, to minimize the impact on data writing and collection performance.
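As a sketch of the local-storage option, the following Python snippet builds a Pod manifest that mounts an emptyDir volume at the directory where the application writes its logs. Kubernetes accepts manifests in JSON as well as YAML, so the dictionary can be serialized directly. The pod name, image, volume name, and log directory are all hypothetical.

```python
import json


def pod_with_log_volume(name, image, log_dir="/var/log/app"):
    """Return a Pod manifest whose log directory is a node-local emptyDir."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # The application writes its log files under log_dir.
                    "volumeMounts": [
                        {"name": "app-logs", "mountPath": log_dir}
                    ],
                }
            ],
            # emptyDir lives on the node's local disk for the Pod's lifetime.
            "volumes": [{"name": "app-logs", "emptyDir": {}}],
        },
    }


manifest = pod_with_log_volume("demo-app", "example.com/demo-app:1.0")
manifest_json = json.dumps(manifest, indent=2)
```

An emptyDir volume is deleted together with the Pod, which is why local storage has to be paired with real-time collection to a centralized system.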

Compared with conventional virtual machine or physical machine scenarios, Kubernetes provides robust fault tolerance and powerful scheduling and scaling capabilities for the application layer and nodes. This gives you high reliability and elasticity out of the box, but the dynamic creation and deletion of nodes and containers also destroys their logs. Consequently, the log retention period may be unable to meet DevOps, audit, or other requirements.

Long-term log storage in a dynamic environment can only be implemented with centralized log storage. Through real-time log collection, logs of every node or container are collected to the centralized log system within seconds. In this way, logs can be used for backtracking when a node or container is down.
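The core of real-time collection is incremental reading: a collector remembers how far it has read in each file and ships only the lines appended since then. The sketch below shows that single step in Python. It is not how any specific agent is implemented, the function name read_new_lines is hypothetical, and file rotation and truncation are deliberately left out.

```python
import os
import tempfile


def read_new_lines(path, offset):
    """Return the complete lines appended after `offset`, plus the new offset."""
    with open(path, "rb") as f:
        f.seek(offset)
        chunk = f.read()
    end = chunk.rfind(b"\n")
    if end == -1:
        return [], offset  # no complete line yet, try again later
    complete = chunk[: end + 1]
    # Drop the final newline, then split; a partial last line is left unread.
    lines = [raw.decode("utf-8", errors="replace")
             for raw in complete[:-1].split(b"\n")]
    return lines, offset + len(complete)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "app.log")
    with open(path, "wb") as f:
        f.write(b"first\nsecond\npart")   # the last line is still being written
    batch1, checkpoint1 = read_new_lines(path, 0)
    with open(path, "ab") as f:
        f.write(b"ial\n")                 # the application finishes the line
    batch2, checkpoint2 = read_new_lines(path, checkpoint1)
```

A collector would call this in a loop every second or so, send each batch to centralized storage, and persist the checkpoint so that a restart neither loses nor duplicates entries.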

Log output is an essential part of log system construction. Enterprises and product lines must follow unified log specifications to ensure smooth log collection, analysis, monitoring, and visualization.

In the next article, I will talk about the best practices for planning log collection and storage for Kubernetes.

The first article of this blog series is available by clicking here.
