Catch the replay of the Apsara Conference 2020 at this link!

By ELK Geek, with special guest Zhang Di, Senior Technical Expert at Alibaba Group

Today's topic is deep learning algorithm engineering. Drawing on best practices from search, recommendation, and advertising on Taobao, we will explain how Alibaba Cloud builds an efficient end-to-end platform for AI algorithms.

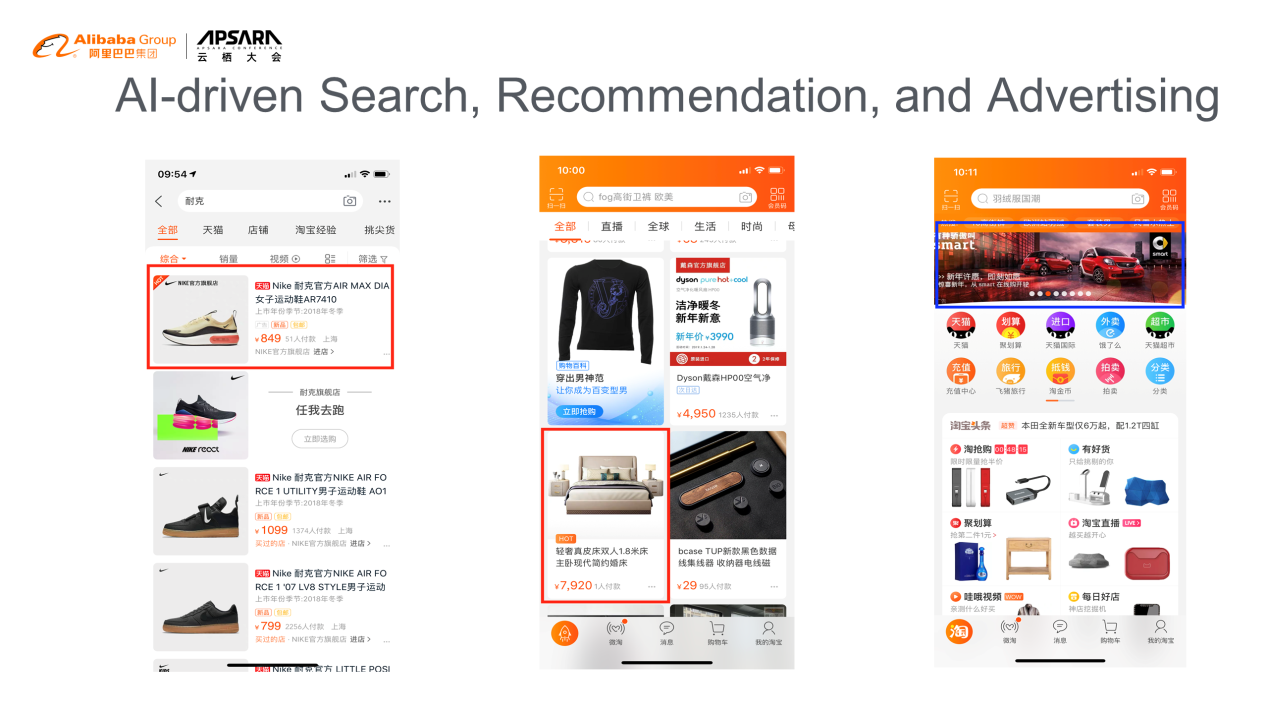

Much of the content presented on Taobao today is personalized for each of its many users. Content delivery, with search, recommendation, and advertising at its core, plays an important role in this personalization. Over the past five years, AI technologies such as deep learning have become the core driving force behind improvements in search, recommendation, and advertising on Taobao. The key elements of deep learning are computing power, algorithms, and data, so how efficiently an end-to-end AI platform is built directly determines the scale of the business and the speed of iteration.

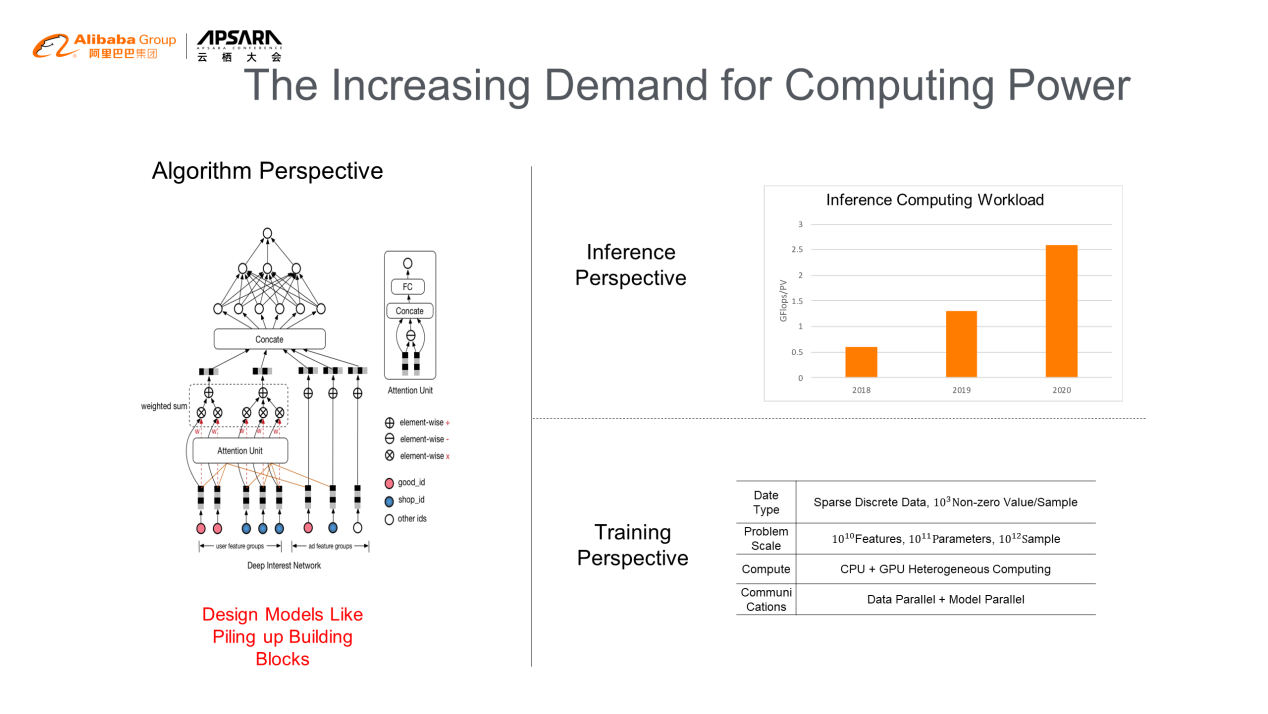

As AI algorithms become more intelligent, their demand for computing power continues to increase.

Algorithms are also becoming more diverse. In addition to standard Deep Neural Network (DNN) models, technologies such as graph neural networks, reinforcement learning, and tree-based deep learning are widely used in Taobao's business.

The increasing complexity and diversity of algorithms require an efficient end-to-end algorithm platform that meets the following three needs:

1. Unlimited Demand for AI Computing Power

The platform must continuously unlock more computing power for deep learning to improve algorithm performance.

2. Acceleration of Iteration Efficiency

The platform provides a consistent end-to-end experience and ensures efficient iteration across the entire algorithm workflow.

3. Support for Algorithm Innovation

The platform must be flexible enough to support continuous algorithm innovation.

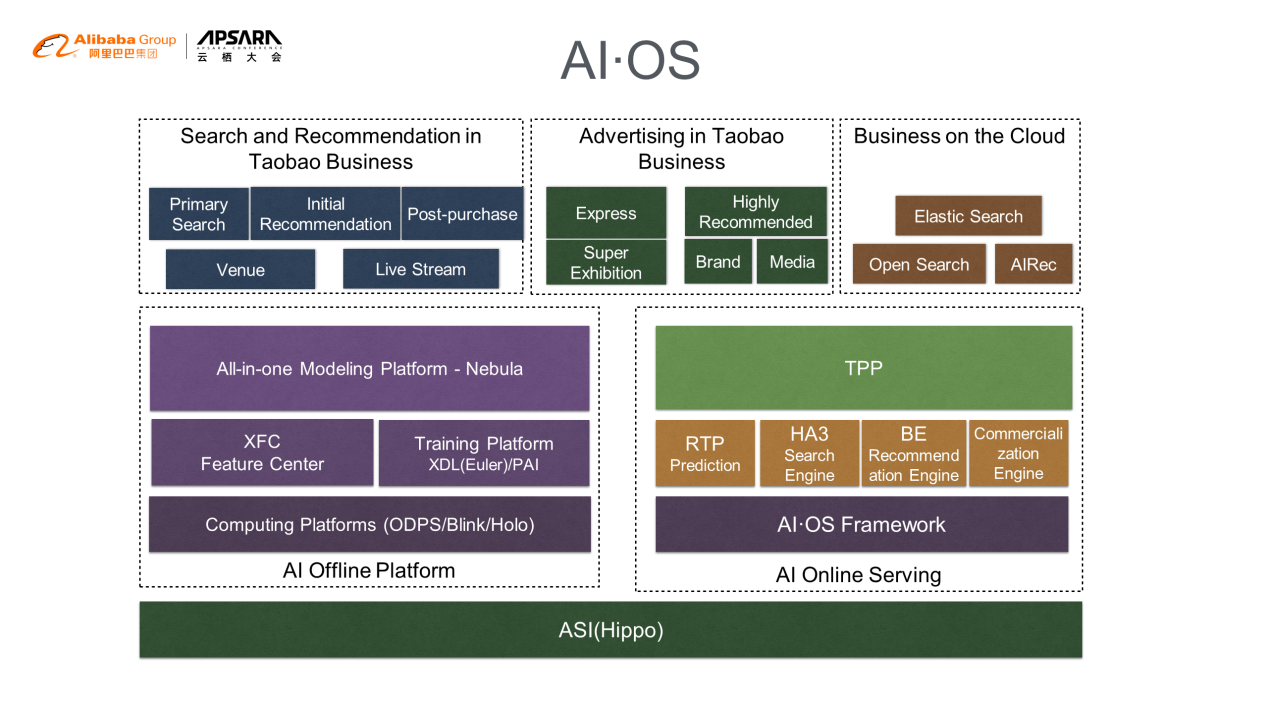

As an engineering system for big data deep learning, AI-OS covers AIOfflinePlatform (a comprehensive modeling platform) and AIOnlineServing (an online AI service system). Together, they form an end-to-end big data AI engine that seamlessly connects offline and online systems. AI-OS continuously processes big data to support the search, recommendation, and advertising business on Alibaba.com, and the transactions it guides account for most of Alibaba Group's e-commerce transactions. As a backbone of Alibaba's middle platform technology, AI-OS has also become infrastructure for Alibaba Group, Alibaba.com, Alibaba Cloud, Youku, Cainiao, Freshippo, and DingTalk. More importantly, AI-OS is offered to developers around the world as a matrix of cloud products through Alibaba Cloud.

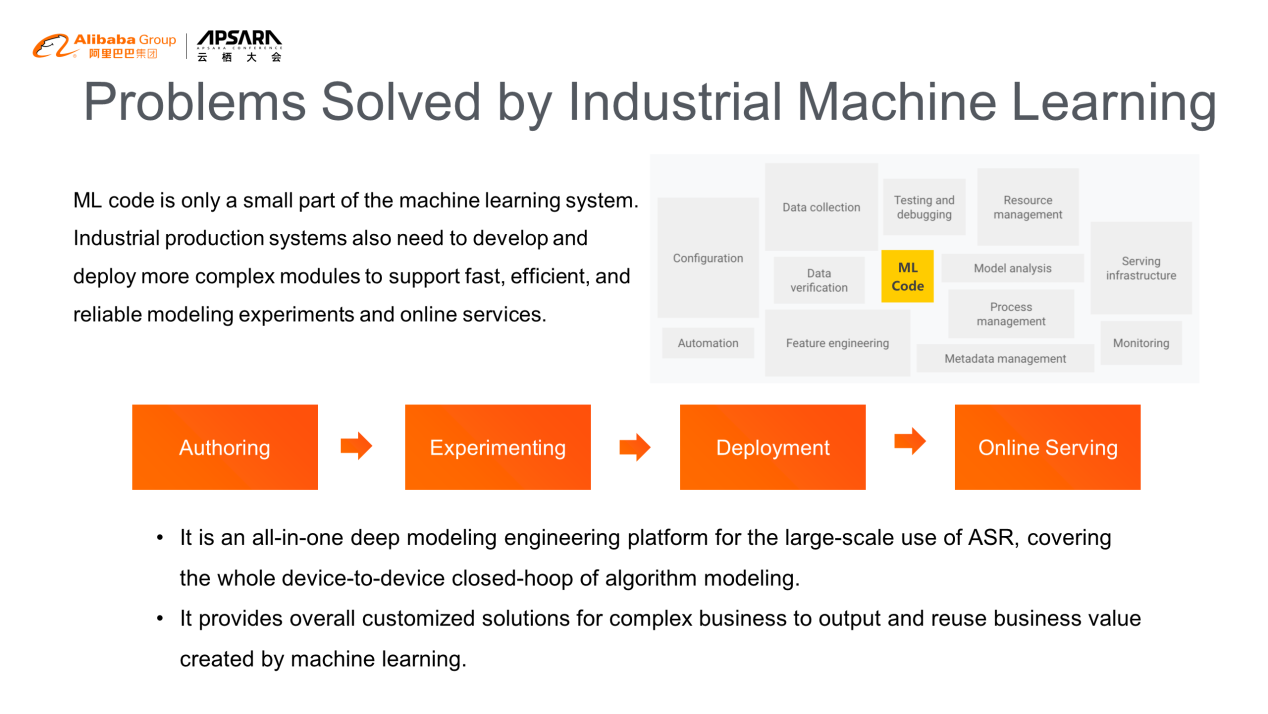

Industrial machine learning involves more than writing code for algorithm models. It also covers problems across the full workflow, including features, samples, and models, in a closed loop that spans offline and online environments.

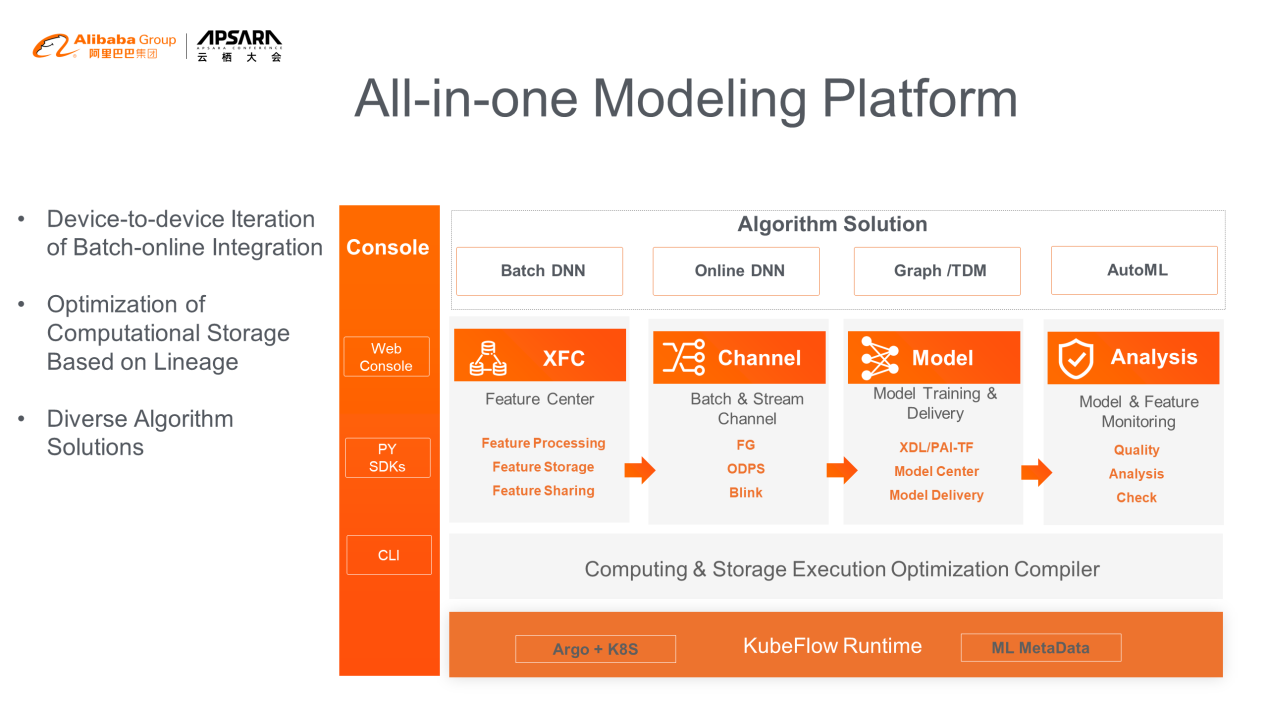

For scenarios such as search, recommendation, and advertising, Alibaba Cloud has developed an all-in-one modeling platform. The platform provides end-to-end services across the full workflow, including feature management, sample assembly, model training and evaluation, and model delivery.

Built on a cloud-native Kubeflow foundation at the bottom layer, the all-in-one modeling platform supports both batch learning and online learning.

XFC provides standardized feature management and tracks feature trends. Channel is an abstraction for sample computation, while the Model Center, backed by model factories, handles model training, sharing, and delivery.
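To make sample computation more concrete, here is a minimal sketch of sample assembly, assuming samples are built by joining logged impressions with click labels and a per-user feature snapshot. The names `Sample` and `assemble_samples` and the record layout are hypothetical illustrations, not the XFC or Channel APIs.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class Sample:
    user_id: str
    item_id: str
    features: Dict[str, float]
    label: int  # 1 if the impression led to a click, else 0


def assemble_samples(
    impressions: List[Tuple[str, str]],          # (user_id, item_id) pairs that were shown
    clicks: Set[Tuple[str, str]],                # (user_id, item_id) pairs that were clicked
    user_features: Dict[str, Dict[str, float]],  # hypothetical feature snapshot per user
) -> List[Sample]:
    """Join impressions, labels, and features into training samples."""
    samples: List[Sample] = []
    for user_id, item_id in impressions:
        feats = user_features.get(user_id)
        if feats is None:
            # Skip users without a feature snapshot instead of emitting incomplete samples.
            continue
        label = 1 if (user_id, item_id) in clicks else 0
        samples.append(Sample(user_id, item_id, dict(feats), label))
    return samples
```

In a real platform, the join keys, time windows, and label definitions would be configurable per scenario; the sketch fixes them only to keep the example short.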

The model analysis system provides multi-dimensional visual analysis of models and verifies model security, without requiring users to worry about how the underlying systems operate. On this basis, algorithm engineers can orchestrate the logic of an algorithm workflow and complete its development, deployment, and online O&M.

The platform has built-in, unified lineage management for computation and storage. Based on these lineage relationships and an analysis of the algorithm logic descriptions, the platform provides optimization layers for computation, storage, and orchestration. These layers automatically optimize the sharing of features, samples, and model data, as well as their computation and storage. For example, when two algorithm experiment workflows overlap heavily, the system automatically merges their features for computation and storage, improving storage efficiency across the entire platform.
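The merging example above can be sketched as follows, assuming each experiment workflow is described by a set of feature definitions (a name plus a computation spec) and that overlap is measured with the Jaccard ratio against a fixed threshold. The threshold value, the definition format, and the functions `overlap_ratio` and `plan_feature_jobs` are hypothetical; the source does not say how the platform measures overlap.

```python
from typing import FrozenSet, List, Tuple

# Hypothetical feature definition: (feature_name, computation_spec).
FeatureDef = Tuple[str, str]


def overlap_ratio(a: FrozenSet[FeatureDef], b: FrozenSet[FeatureDef]) -> float:
    """Jaccard overlap between two experiments' feature definitions."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def plan_feature_jobs(
    exp_a: FrozenSet[FeatureDef],
    exp_b: FrozenSet[FeatureDef],
    threshold: float = 0.5,  # assumed cut-off for a "high" overlap
) -> List[FrozenSet[FeatureDef]]:
    """Return the feature-computation jobs to schedule.

    If the experiments overlap enough, compute and store the union once and
    let both experiments read from it; otherwise keep two separate jobs.
    """
    if overlap_ratio(exp_a, exp_b) >= threshold:
        return [exp_a | exp_b]
    return [exp_a, exp_b]
```

For instance, two experiments that share `ctr_7d` and `price` but differ in `brand` versus `category` have an overlap of 2/4 = 0.5, so they are merged into one job that computes and stores four features instead of six.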

With the all-in-one modeling platform, teams can pursue more business innovations and verify projects and their effects at lower cost, iterating rapidly from product ideas and algorithms to deployed projects.

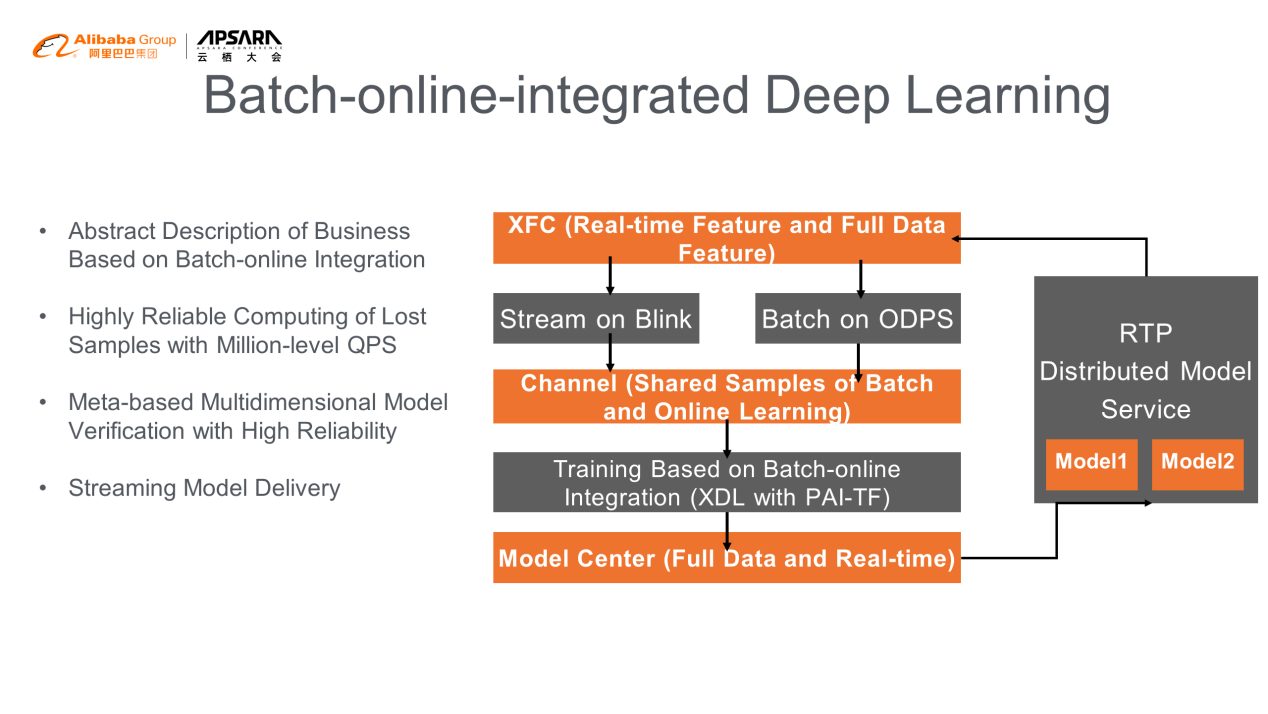

As services demand ever greater timeliness, online deep learning is becoming increasingly important. Alibaba Cloud therefore provides a batch-online integrated deep learning solution, which updates models in real time so that users can capture changes in their customers' behavior as they happen.

Batch-online integration means that users can complete both daily batch learning and real-time online learning with a single set of algorithm logic. This reduces the complexity of algorithm development and keeps the full-data model consistent with the real-time model.
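The core idea of batch-online integration, one piece of algorithm logic shared by daily batch training and real-time online updates, can be sketched with a toy logistic-regression model. This is only an illustration under assumed simplifications (a single hypothetical `sgd_step` function, plain Python lists, a fixed learning rate); it is not the solution's actual API.

```python
import math
from typing import Iterable, List, Tuple

Example = Tuple[List[float], int]  # (feature vector, 0/1 label)


def predict(weights: List[float], x: List[float]) -> float:
    """Logistic-regression click probability."""
    z = sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


def sgd_step(weights: List[float], example: Example, lr: float = 0.1) -> List[float]:
    """The single piece of algorithm logic shared by batch and online learning."""
    x, y = example
    error = predict(weights, x) - y  # gradient of the log loss w.r.t. the logit
    return [w - lr * error * xi for w, xi in zip(weights, x)]


def batch_train(weights: List[float], daily_data: List[Example], epochs: int = 5) -> List[float]:
    """Daily batch learning: several passes over the full day's data."""
    for _ in range(epochs):
        for ex in daily_data:
            weights = sgd_step(weights, ex)
    return weights


def online_train(weights: List[float], stream: Iterable[Example]) -> List[float]:
    """Real-time learning: apply the same step to each event as it arrives."""
    for ex in stream:
        weights = sgd_step(weights, ex)
    return weights
```

A typical flow would start the day from `batch_train(...)` and then keep calling `online_train(...)` on the event stream. Because both paths call `sgd_step`, any change to the model logic applies to both, which is what keeps the full-data and real-time models consistent.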

Improving the computing power available to deep learning involves two key aspects:

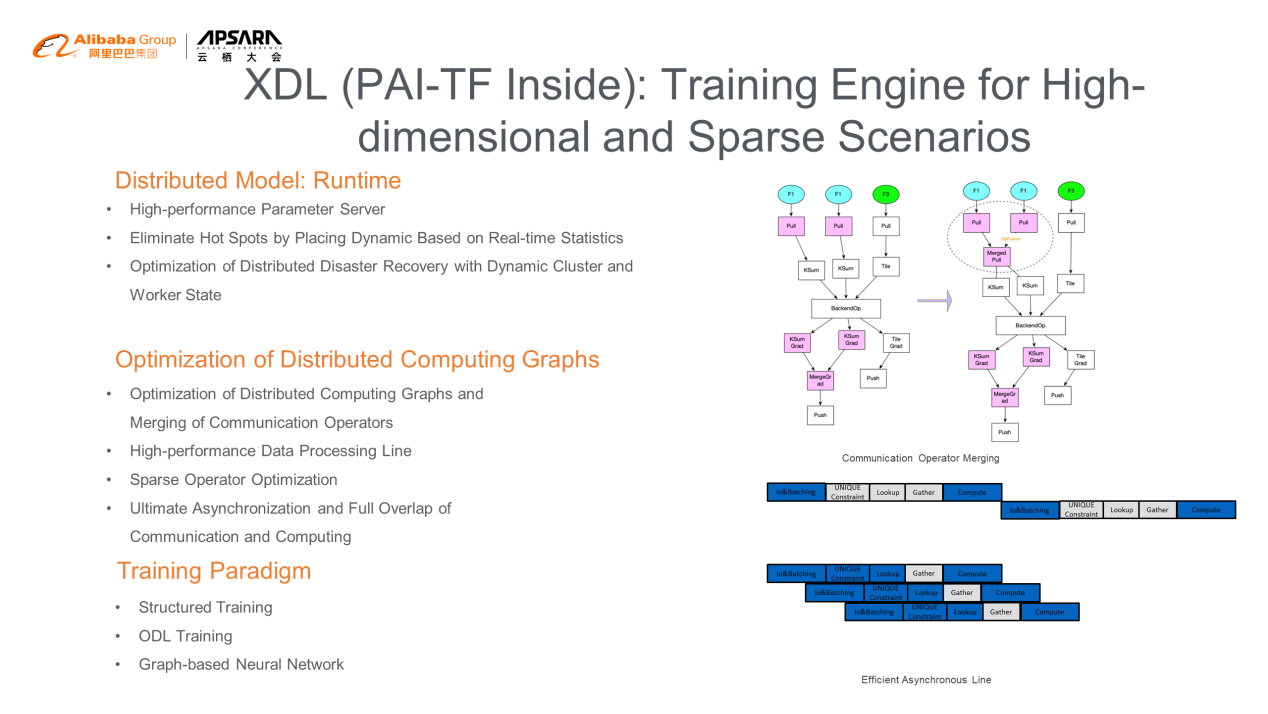

Search, recommendation, and advertising are high-dimensional, sparse scenarios, with tens of billions of features and hundreds of billions of parameters. Models in these scenarios are both wide and deep, so they require computing optimizations for width and depth at the same time.
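To make the "wide and deep" distinction concrete, here is a minimal scoring sketch in the style of the Wide & Deep model family, used here as a stand-in since the source does not name a specific architecture. The wide part is a sparse lookup over only the feature IDs present in a request, while the deep part pools their embeddings and runs dense arithmetic. The class name, the tiny dimensions, and the random initialization are assumptions for illustration only.

```python
import math
import random
from typing import Dict, List

EMB_DIM = 4  # assumed toy embedding size


class WideAndDeep:
    def __init__(self, seed: int = 0):
        self.rng = random.Random(seed)
        self.wide: Dict[int, float] = {}       # sparse linear weights keyed by feature ID (learned in practice)
        self.emb: Dict[int, List[float]] = {}  # sparse embedding table keyed by feature ID
        self.hidden = [[self.rng.uniform(-0.5, 0.5) for _ in range(EMB_DIM)] for _ in range(EMB_DIM)]
        self.out = [self.rng.uniform(-0.5, 0.5) for _ in range(EMB_DIM)]

    def _embedding(self, fid: int) -> List[float]:
        # Rows are created lazily, so only IDs that actually appear take up memory.
        if fid not in self.emb:
            self.emb[fid] = [self.rng.uniform(-0.1, 0.1) for _ in range(EMB_DIM)]
        return self.emb[fid]

    def score(self, feature_ids: List[int]) -> float:
        # Wide part: sparse sum over the active feature IDs only.
        wide = sum(self.wide.get(fid, 0.0) for fid in feature_ids)
        # Deep part: mean-pool the embeddings, then one dense ReLU layer.
        pooled = [0.0] * EMB_DIM
        for fid in feature_ids:
            for i, v in enumerate(self._embedding(fid)):
                pooled[i] += v / len(feature_ids)
        hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in self.hidden]
        deep = sum(w * h for w, h in zip(self.out, hidden))
        return 1.0 / (1.0 + math.exp(-(wide + deep)))
```

Scoring a request with IDs such as `[12, 98765432101, 7]` stores only three embedding rows even though the ID space is enormous. The width problem is therefore about sparse lookups and memory, while the depth problem is about dense compute.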

XDL is a distributed training framework for deep learning designed for high-dimensional and sparse scenarios.
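A common way to train high-dimensional sparse models in a distributed fashion is to shard embedding rows across parameter servers by feature ID, so that workers pull and push only the rows a mini-batch touches. The sketch below simulates that generic parameter-server pattern in one process. The names `ParameterServer` and `ShardedEmbedding`, the modulo sharding rule, and the plain SGD update are assumptions; the source does not describe XDL's internals, and this is not XDL's API.

```python
from collections import defaultdict
from typing import Dict, List

DIM = 2  # assumed embedding size


class ParameterServer:
    """Holds one shard of the embedding table; a row starts at zero on first use."""

    def __init__(self) -> None:
        self.rows: Dict[int, List[float]] = defaultdict(lambda: [0.0] * DIM)

    def pull(self, ids: List[int]) -> Dict[int, List[float]]:
        return {i: list(self.rows[i]) for i in ids}

    def push(self, grads: Dict[int, List[float]], lr: float) -> None:
        for i, g in grads.items():
            row = self.rows[i]
            for k in range(DIM):
                row[k] -= lr * g[k]  # plain SGD update on this shard


class ShardedEmbedding:
    """Worker-side view that routes each feature ID to a shard by modulo."""

    def __init__(self, num_servers: int) -> None:
        self.servers = [ParameterServer() for _ in range(num_servers)]

    def _shard(self, fid: int) -> int:
        return fid % len(self.servers)

    def pull(self, ids: List[int]) -> Dict[int, List[float]]:
        out: Dict[int, List[float]] = {}
        for s, server in enumerate(self.servers):
            mine = [i for i in set(ids) if self._shard(i) == s]
            if mine:
                out.update(server.pull(mine))
        return out

    def push(self, grads: Dict[int, List[float]], lr: float = 0.1) -> None:
        by_shard: Dict[int, Dict[int, List[float]]] = defaultdict(dict)
        for fid, g in grads.items():
            by_shard[self._shard(fid)][fid] = g
        for s, shard_grads in by_shard.items():
            self.servers[s].push(shard_grads, lr)
```

Because each server only materializes rows for IDs it has seen, total memory grows with the active features rather than with the full ID space, which is what makes tens of billions of possible features tractable.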

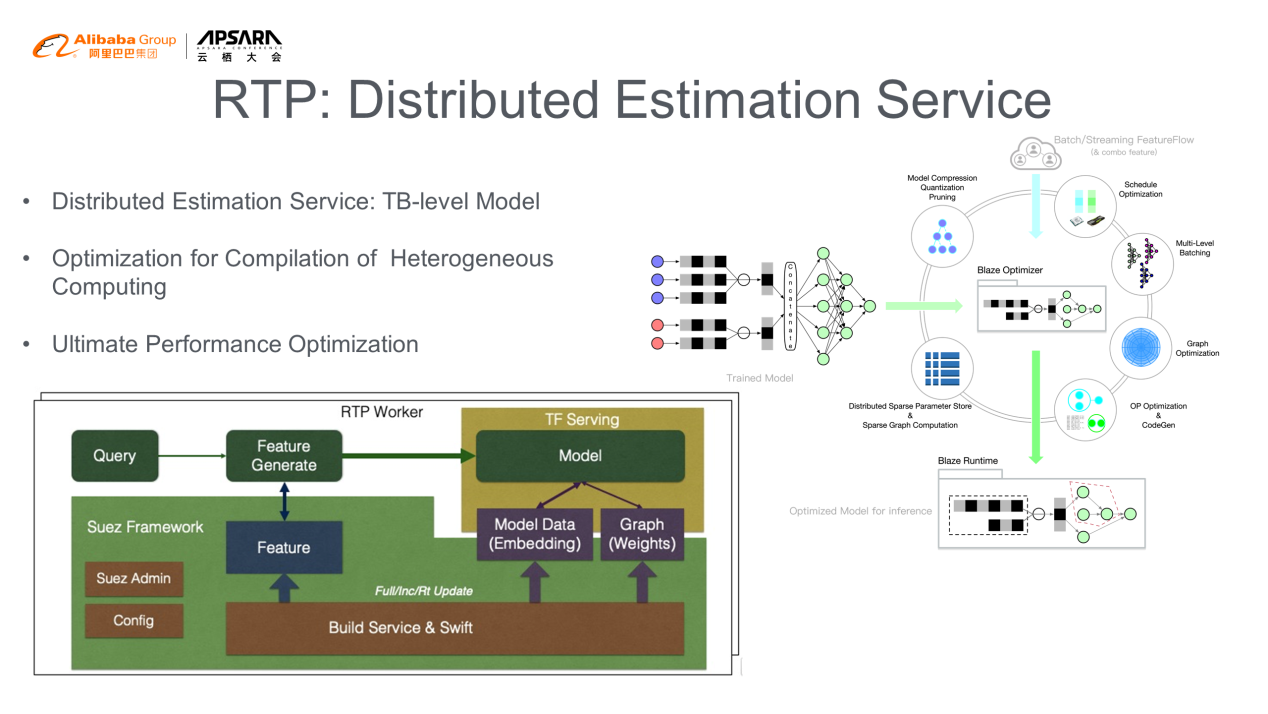

RTP is the distributed deep learning estimation service of AI-OS. By modularizing online machine learning estimation, it provides powerful orchestration capabilities for model applications. As a result, machine learning can be applied throughout online services such as search, recommendation, and advertising, including recall, fine-grained ranking, shuffling, and summary selection.
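The idea of modularizing online estimation into composable stages can be sketched as a simple pipeline in which each stage maps a candidate list to a candidate list, and a scenario is just an ordered list of stages. The stage names follow the recall, ranking, and shuffling steps mentioned above, but the functions, the fixed candidates, and the "no two items from the same shop in a row" shuffling rule are hypothetical; this is not RTP's configuration format.

```python
from typing import Callable, Dict, List

Candidate = Dict[str, object]
Stage = Callable[[List[Candidate]], List[Candidate]]


def recall(_: List[Candidate]) -> List[Candidate]:
    # Hypothetical recall stage: returns a fixed candidate set with model scores.
    return [
        {"item": "a", "shop": "s1", "score": 0.9},
        {"item": "b", "shop": "s1", "score": 0.8},
        {"item": "c", "shop": "s2", "score": 0.7},
        {"item": "d", "shop": "s3", "score": 0.1},
    ]


def rank(cands: List[Candidate]) -> List[Candidate]:
    # Fine-grained ranking by score (stands in for a real model call).
    return sorted(cands, key=lambda c: c["score"], reverse=True)


def shuffle_by_shop(cands: List[Candidate]) -> List[Candidate]:
    # Shuffling: greedily avoid placing two items from the same shop back to back.
    remaining = list(cands)
    result: List[Candidate] = []
    while remaining:
        last_shop = result[-1]["shop"] if result else None
        pick = next((c for c in remaining if c["shop"] != last_shop), remaining[0])
        remaining.remove(pick)
        result.append(pick)
    return result


def run_pipeline(stages: List[Stage]) -> List[Candidate]:
    """Run the stages in order, feeding each stage's output into the next."""
    cands: List[Candidate] = []
    for stage in stages:
        cands = stage(cands)
    return cands
```

Running `run_pipeline([recall, rank, shuffle_by_shop])` yields items in the order a, c, b, d. Another scenario could reuse `recall` and `rank` but swap in a different final stage, which is the kind of reuse that modular orchestration is meant to enable.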

RTP also supports consistency semantics, full-data switching for distributed models, and distributed features, and it can serve TB-scale models online.
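One way to read "consistency semantics" when switching full model data across distributed shards is that a single request must never mix parameters from two model versions. Here is a minimal sketch under that assumption. Each shard stages the new version alongside the old one, and the router flips the serving version only after every shard has the new version loaded. The names `shard_of`, `Shard`, and `Router` and the CRC32 routing are hypothetical; the source does not describe how RTP implements this.

```python
import zlib
from typing import Dict, List


def shard_of(key: str, num_shards: int) -> int:
    """Deterministic routing of a parameter key to a shard."""
    return zlib.crc32(key.encode("utf-8")) % num_shards


class Shard:
    """One partition of a large model that can hold several versions at once."""

    def __init__(self, shard_id: int, num_shards: int) -> None:
        self.shard_id = shard_id
        self.num_shards = num_shards
        self.versions: Dict[int, Dict[str, float]] = {}

    def load(self, version: int, full_params: Dict[str, float]) -> None:
        # Stage a version next to the one being served; keep only this shard's keys.
        self.versions[version] = {
            k: v for k, v in full_params.items()
            if shard_of(k, self.num_shards) == self.shard_id
        }


class Router:
    """Routes every request to one fully loaded model version."""

    def __init__(self, shards: List[Shard], version: int) -> None:
        self.shards = shards
        self.serving_version = version

    def try_switch(self, new_version: int) -> bool:
        """Flip to new_version only when every shard has it loaded."""
        if not all(new_version in s.versions for s in self.shards):
            return False
        old_version = self.serving_version
        self.serving_version = new_version
        if old_version != new_version:
            for s in self.shards:
                s.versions.pop(old_version, None)  # free memory held by the old version
        return True

    def lookup(self, keys: List[str]) -> List[float]:
        version = self.serving_version  # pin one version for the whole request
        n = len(self.shards)
        return [self.shards[shard_of(k, n)].versions[version].get(k, 0.0) for k in keys]
```

The sketch is single-threaded. A concurrent server would also need to wait for in-flight requests pinned to the old version before freeing it.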

To support fast algorithm iteration, Taobao built an end-to-end algorithm platform with a closed loop spanning offline and online environments. The platform allows algorithm solutions to be quickly replicated and migrated across scenarios. Built around the core training engine and estimation engine, it is deeply optimized for the high-dimensional, sparse scenarios of search, recommendation, and advertising, so that AI algorithms can make full use of computing power to maximize algorithm performance.
