In today's database ecosystem, most database systems support data synchronization among multiple node instances, for example MySQL Master/Slave synchronization and Redis AOF master/slave synchronization. MongoDB goes further and supports replica sets of three or more nodes, which provides data redundancy and high availability within a single logical unit.

Data synchronization across logical units, business units, or data centers is sometimes very important at the business layer. It makes it possible to balance load among multiple data centers in one city, to run a multi-master architecture across data centers, to provide disaster tolerance across regions, and to keep multiple active masters in different regions. Because MongoDB's built-in replica set master/slave synchronization has many limitations in these scenarios, we developed MongoShake to replicate data among multiple instances and data centers and to meet the needs for disaster tolerance and multiple active masters.

Note that data backup is MongoShake's core function, but data backup is not its only function. Using MongoShake as a platform service, you can meet your needs in different business scenarios by means of data subscription and consumption.

MongoShake is a general platform service written in Golang. It reads a MongoDB cluster's oplog, replicates MongoDB data, and then meets specific needs based on the operation log. Because the log can be used in many scenarios, MongoShake was designed as a general platform service. Using the operation log, it provides a PUB/SUB function for log data subscription and consumption, with flexible connection to an SDK, Kafka, or MetaQ, to serve scenarios such as log subscription, data center synchronization, and asynchronous cache elimination. One of the core applications is cluster data synchronization: MongoShake crawls the oplog and replays it to support disaster tolerance and multiple-active-master scenarios.

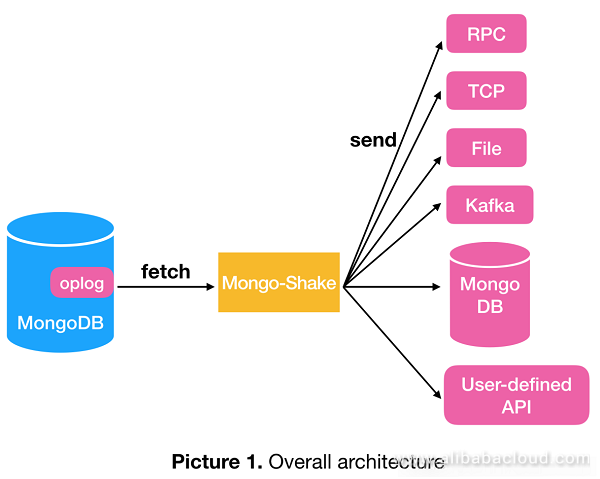

MongoShake crawls oplog data from the source database, and sends it to different tunnels. It currently supports the following tunnel types:

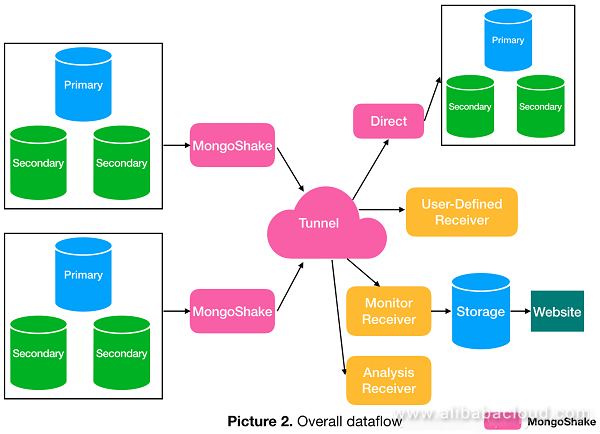

Consumers can get the data that they have followed by connecting to a tunnel, for example connecting to the Direct tunnel to directly write data into the target MongoDB, or connecting to the RPC tunnel for synchronized data transmission. You can also create your own APIs for flexible connection. The basic architecture and data flow are shown in the following two pictures.

MongoShake's source database supports three different modes: mongod, replica set, and sharding. The target database supports mongod and mongos. If the source database is a replica set, we suggest reading from a standby node to reduce pressure on the master. If the source uses sharding, each shard is connected to MongoShake for parallel crawling. The target database can be reached through multiple mongos instances, and data is hashed so that different data is written through different mongos instances.

MongoShake supports parallel replication. The replication granularity option (shard_key) can be id, collection, or auto. Different documents or collections may be sent to different hash queues for parallel execution. The id option hashes by document, the collection option hashes by collection (table), and auto chooses automatically: it is equivalent to collection if a collection has a unique key, and to id otherwise.
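The three shard_key options can be sketched as a small queue-selection function. This is only an illustration, not MongoShake's code: the function name `queue_index`, the `has_unique_index` flag, and the use of CRC32 as the hash are all assumptions made for the example.

```python
import zlib


def queue_index(oplog, shard_key, num_queues, has_unique_index=False):
    """Pick a hash queue for one oplog entry (hypothetical helper)."""
    if shard_key == "auto":
        # auto behaves like collection when a unique key exists, else like id
        shard_key = "collection" if has_unique_index else "id"
    if shard_key == "collection":
        key = oplog["ns"]            # hash by namespace (db.collection)
    elif shard_key == "id":
        key = repr(oplog["_id"])     # hash by document id
    else:
        raise ValueError("shard_key must be id, collection, or auto")
    # CRC32 is an assumption; it is used here only because it is deterministic
    return zlib.crc32(key.encode("utf-8")) % num_queues


first = {"ns": "benchmark.sbtest10", "_id": 1}
second = {"ns": "benchmark.sbtest10", "_id": 2}
same_queue = queue_index(first, "collection", 8) == queue_index(second, "collection", 8)
```

With the collection option both entries land in the same queue, so their relative order is preserved; with the id option they may be spread over different queues.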

MongoShake periodically persists its synchronization context. The context can be stored through a third-party API (a registration center) or in the source database. Currently the context is the timestamp of the last successfully synchronized oplog. If the service is switched over or restarted, the new service connects to this API or database to read the context so that it can run properly.

MongoShake also provides the Hypervisor mechanism to pull up a service when it is down.

MongoShake provides both blacklist and whitelist mechanisms to allow optional db and collection synchronization.

MongoShake supports oplog compression before sending it out, and the currently supported compression formats are: gzip, zlib, and deflate.

Data in the same database may come from different sources: self-generated or replicated from somewhere else. Without corresponding control measures, there will be an infinite data replication loop. For example, data may be replicated from A to B, and then from B to A, and so forth. As a result, the service will crash. It's also possible that when data is replicated back from B to A, the write operation will fail due to the unique-key restriction, and cause unstable service.

Alibaba Cloud MongoDB can prevent infinite data replication loops. The mechanism is to modify MongoDB's kernel, insert a gid that identifies the current database into the oplog, and use the op_command command to ensure that data carries the gid during replication. In this way, each data entry carries its source information. If we only need data generated by the current database, we only need to crawl oplogs whose gid equals the current database's id. Therefore, to break the replication loop, MongoShake crawls data with a gid equal to id_A (the gid of database A) from database A, and crawls data with a gid equal to id_B (the gid of database B) from database B.
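The gid filter can be illustrated with a few in-memory oplog entries. This is a minimal sketch: the entries are plain dictionaries invented for the example, and the helper name `crawl_local` is hypothetical. Only the idea of keeping entries whose gid matches the local database comes from the description above.

```python
def crawl_local(oplog, local_gid):
    """Keep only the entries that were generated by the local database."""
    return [entry for entry in oplog if entry.get("gid") == local_gid]


# Oplog of database A: two local writes and one write replicated from B.
oplog_a = [
    {"gid": "id_A", "op": "i", "o": {"_id": 1}},
    {"gid": "id_B", "op": "i", "o": {"_id": 2}},   # arrived from B, must not go back
    {"gid": "id_A", "op": "u", "o": {"_id": 1, "x": 5}},
]

# Only the entries with gid == id_A are sent on to database B.
to_b = crawl_local(oplog_a, "id_A")
```

Because the entry that originated in B is never sent back to B, the A to B to A loop cannot form.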

Note: Modification to MongoDB kernel's gid is currently not available in the open-source version. However, it is supported in Alibaba Cloud ApsaraDB for MongoDB. That's why I mentioned that the "Mirror backup for data among MongoDB clusters" for open source is currently restricted.

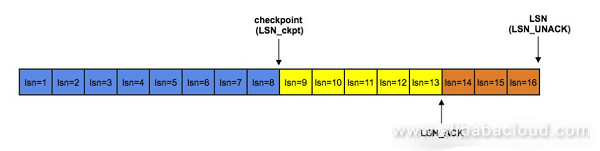

MongoShake uses the ACK mechanism to ensure successful playback of oplogs, and triggers retransmission if the playback fails. The retransmission process is similar to TCP's sliding window mechanism. This is designed to ensure reliability at the application layer against events such as decompression failure. To better describe this function, let's define several terms first:

LSN: Log Sequence Number; this refers to the sequence number of the last transmitted oplog.

LSN_ACK: Acked Log Sequence Number; this refers to the greatest LSN that has been Acked (acknowledged), or the LSN of data that has been successfully written into the tunnel.

LSN_CKPT: Checkpoint Log Sequence Number; this refers to an LSN that has gone through the checkpoint, or a persistence LSN.

All values of LSN, LSN_ACK, and LSN_CKPT are from an Oplog's ts (timestamp) field, and the implicit restriction is: LSN_CKPT <= LSN_ACK <= LSN.

As shown in the above picture, LSN=16 indicates that 16 oplogs have already been transmitted, and the next transmission will be LSN=17 if no retransmission occurs. LSN_ACK=13 indicates that the first 13 oplogs have already been acknowledged. If retransmission is required, it starts from LSN=14. LSN_CKPT=8 indicates that checkpoint=8 has been persisted. The purpose of persistence is that if MongoShake crashes and restarts, it reads oplogs from the source database starting from the position of LSN_CKPT, rather than from LSN=1. Because DML oplogs are idempotent, transmitting the same data multiple times does not cause any problems. However, for DDL, retransmission may cause errors.
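The three sequence numbers and their ordering constraint can be modeled in a few lines. This is a sketch under stated assumptions: the class `ReplicationWindow` and its method names are hypothetical, and small integers stand in for the oplog timestamps that the real values are taken from.

```python
class ReplicationWindow:
    """Tracks LSN, LSN_ACK and LSN_CKPT as plain integers (hypothetical helper)."""

    def __init__(self):
        self.lsn = 0        # sequence number of the last transmitted oplog
        self.lsn_ack = 0    # greatest acknowledged sequence number
        self.lsn_ckpt = 0   # last persisted checkpoint

    def send(self, count=1):
        self.lsn += count

    def ack(self, upto):
        # Enforces LSN_ACK <= LSN
        if not self.lsn_ack <= upto <= self.lsn:
            raise ValueError("ack must lie between LSN_ACK and LSN")
        self.lsn_ack = upto

    def checkpoint(self, upto):
        # Enforces LSN_CKPT <= LSN_ACK
        if not self.lsn_ckpt <= upto <= self.lsn_ack:
            raise ValueError("checkpoint must lie between LSN_CKPT and LSN_ACK")
        self.lsn_ckpt = upto

    def retransmit_from(self):
        # Retransmission starts right after the last acknowledged oplog
        return self.lsn_ack + 1

    def restart_from(self):
        # After a crash, reading resumes at the persisted checkpoint
        return self.lsn_ckpt


window = ReplicationWindow()
window.send(16)
window.ack(13)
window.checkpoint(8)
print(window.retransmit_from(), window.restart_from())  # prints "14 8"
```

The numbers reproduce the example above: retransmission would start at 14, and a restart would resume from the checkpoint at 8.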

MongoShake provides external users with a RESTful API that lets them view the data synchronization status of each internal queue in real time; this makes troubleshooting easier. MongoShake also provides a traffic restriction function to help users reduce database pressure in real time.

Currently, MongoShake supports collection-level (table) and document-level (id) concurrency. Document-level concurrency requires that the database have no unique-index restrictions, and collection-level concurrency performs poorly when there are few collections or when data is distributed very unevenly. Therefore, for collection-level concurrency, the data must be evenly distributed across collections, and unique key conflicts inside a collection must be resolved. Conflict detection is available for the direct tunnel type.

We currently only support unique index and do not support any other indexes such as prefix index, sparse index, and TTL index.

There are two preconditions for the conflict detection function:

Additionally, operations on indexes during MongoShake's synchronization process may cause exceptions:

To support the conflict detection function, we modified MongoDB's kernel to ensure that the oplogs contain the uk field to indicate the unique index information involved. For example:

    {
        "ts" : Timestamp(1484805725, 2),
        "t" : NumberLong(3),
        "h" : NumberLong("-6270930433887838315"),
        "v" : 2,
        "op" : "u",
        "ns" : "benchmark.sbtest10",
        "o" : { "_id" : 1, "uid" : 1111, "other.sid" : "22222", "mid" : 8907298448, "bid" : 123 },
        "o2" : { "_id" : 1 },
        "uk" : {
            "uid" : "1110",
            "mid^bid" : [8907298448, 123],
            "other.sid_1" : "22221"
        }
    }

Each key under uk is the column name of a unique key; keys joined with "^" denote a composite index. The log above contains three unique indexes: uid, the composite index on mid and bid, and other.sid_1. The value has different meanings for insert, delete, and update operations. For an insert, the value is null; for a delete or update, the value records the value before the deletion or modification.
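To make the "^" convention concrete, the following sketch splits the keys of a uk object into their column names. The helper `parse_uk` is hypothetical and the dictionary simply mirrors the uk field of the sample log; it is not how MongoShake itself parses the field.

```python
def parse_uk(uk):
    """Map each unique index (as a tuple of column names) to its recorded value."""
    return {tuple(name.split("^")): value for name, value in uk.items()}


uk = {
    "uid": "1110",
    "mid^bid": [8907298448, 123],   # composite index on mid and bid
    "other.sid_1": "22221",
}

indexes = parse_uk(uk)
```

Single-column indexes become one-element tuples such as `("uid",)`, while the composite index becomes `("mid", "bid")` with a list of values.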

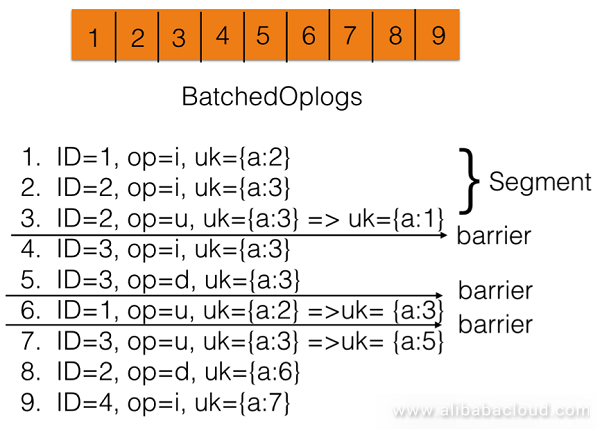

The specific process is as follows: MongoShake packs k consecutive oplogs into a batch, analyzes dependencies within each batch one by one, and splits them into segments based on the dependencies. If a conflict exists, MongoShake splits the batch into multiple segments based on the dependencies and time sequence. If there is no conflict in a batch, then this batch is taken as one segment. Then MongoShake conducts concurrent write operations within the same segment, and sequential write operations among different segments. Concurrency within the same segment means multiple concurrent threads perform write operations on data within the same segment simultaneously, and the same id within the same segment must have an order. Different segments are executed in a sequential order: only when the previous segment has been completely written can the subsequent segment start the write operation.
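The execution rule (concurrent inside a segment, ordered per id, sequential across segments) can be sketched with a thread pool. This is an illustration only: `apply_segments`, the `write` callback, and the dictionary shape of an oplog are assumptions made for the example.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def apply_segments(segments, write, max_workers=4):
    """Write segments one after another; inside a segment, run ids concurrently."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for segment in segments:
            # Group by document id so that each id keeps its original order.
            by_id = defaultdict(list)
            for op in segment:
                by_id[op["id"]].append(op)

            def run(ops):
                for op in ops:
                    write(op)

            # Consuming the map result waits for every task, which acts as
            # the barrier before the next segment starts.
            list(pool.map(run, by_id.values()))


log = []
segments = [
    [{"id": 1, "seq": 1}, {"id": 2, "seq": 2}, {"id": 2, "seq": 3}],
    [{"id": 3, "seq": 4}],
]
apply_segments(segments, lambda op: log.append(op["seq"]))
```

In this run the first three writes may interleave across ids, but seq 2 always precedes seq 3 (same id), and seq 4 is written only after the first segment has finished.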

If oplogs with different ids simultaneously operate on the same unique key, then these oplogs are deemed to be in a sequential relationship; this is also called the dependency relationship. We must divide oplogs that are in a dependency relationship into two segments.

MongoShake has two ways to handle oplogs that are in a dependency relationship:

(1) Insert barriers

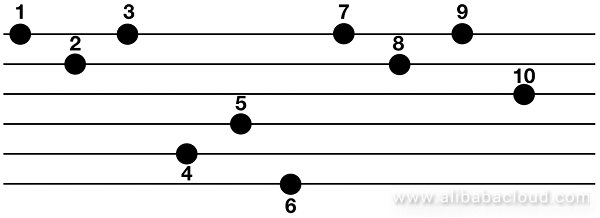

MongoShake inserts barriers to split a batch into different segments and then conducts concurrent operations within each segment, as shown in the picture.

ID stands for a document id; op stands for the operation, where i stands for insert, u stands for update, and d stands for delete; uk stands for all unique keys within this document; uk={a:3} => uk={a:1} means to modify the value of the unique key from a=3 into a=1, where a stands for the unique key.

At the beginning, there are 9 oplogs in this batch. MongoShake analyzes the uk relations and splits them into different segments. For example, items 3 and 4 operate on the same uk={a:3} while their ids are different, so MongoShake inserts a barrier between items 3 and 4 (it is deemed a conflict if their uks are the same before or after the modification). The same applies to items 5 and 6, and to items 6 and 7. Operations with the same id on the same uk are allowed within the same segment, so items 2 and 3 can be placed in the same segment. After the split, operations are performed concurrently within the same segment based on their ids. For example, in the first segment, item 1 can be performed concurrently with items 2 and 3, but items 2 and 3 must be performed in sequential order.
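A barrier split of this kind can be sketched as a single pass over the batch. The batch below is a made-up five-entry example, not the nine entries from the picture, and the function name and the `uk_before`/`uk_after` fields are hypothetical. The rule implemented is the one stated above: a barrier is inserted when an oplog touches a unique key value that a different id has already touched in the current segment.

```python
def split_by_barriers(batch):
    """Split a batch into segments wherever two different ids share a uk value."""
    segments, current, owners = [], [], {}
    for op in batch:
        keys = op["uk_before"] | op["uk_after"]
        conflict = any(k in owners and owners[k] != op["id"] for k in keys)
        if conflict:
            # Barrier: close the current segment and start a new one.
            segments.append(current)
            current, owners = [], {}
        current.append(op)
        for k in keys:
            owners[k] = op["id"]
    if current:
        segments.append(current)
    return segments


batch = [
    {"seq": 1, "id": 1, "uk_before": set(), "uk_after": {("a", 1)}},
    {"seq": 2, "id": 2, "uk_before": set(), "uk_after": {("a", 3)}},
    {"seq": 3, "id": 2, "uk_before": {("a", 3)}, "uk_after": {("a", 4)}},
    {"seq": 4, "id": 3, "uk_before": set(), "uk_after": {("a", 3)}},  # conflicts with id 2
    {"seq": 5, "id": 4, "uk_before": set(), "uk_after": {("a", 5)}},
]

segments = split_by_barriers(batch)
layout = [[op["seq"] for op in segment] for segment in segments]
```

Here entries 2 and 3 share an id and stay together, while entry 4 reuses a=3 under a different id and therefore opens a new segment.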

(2) Split based on the dependency graph

Each oplog is assigned a time order number N. For each N, there may be a time order number M such that:

Therefore, this graph is a directed acyclic graph. Following the topological sorting algorithm, MongoShake concurrently writes the points whose indegree is 0 (points with no incoming edges). A point whose indegree is not 0 is not written until its indegree becomes 0. That is, points with greater time order numbers are not written before the points with smaller time order numbers that they depend on complete the write operation.

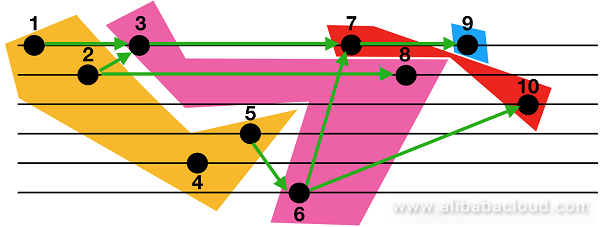

For example, in the pictures there are 10 oplog points, and points on the same horizontal line share the same document id. Arrows in the second picture indicate dependency relationships caused by conflicting unique keys. Concurrent write operations on these 10 points are then performed in the following order: points 1, 2, 4, and 5; points 3, 6, and 8; points 7 and 10; and then point 9.

Note: Conflict detection based on the uk field relies on the modified MongoDB kernel and is currently not available in the open-source version. However, it is supported in Alibaba Cloud ApsaraDB for MongoDB.
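Writing in topological layers can be sketched with Kahn's algorithm. The edge list below is hypothetical: it was chosen only so that the resulting layers match the order quoted above, and the actual edges in the picture may differ. The function name `write_layers` is also an assumption.

```python
from collections import defaultdict


def write_layers(nodes, edges):
    """Return groups of points that can be written concurrently, in order."""
    indegree = {n: 0 for n in nodes}
    children = defaultdict(list)
    for src, dst in edges:
        children[src].append(dst)
        indegree[dst] += 1

    ready = sorted(n for n in nodes if indegree[n] == 0)
    layers = []
    while ready:
        layers.append(ready)          # everything in `ready` is written concurrently
        next_ready = []
        for n in ready:
            for child in children[n]:
                indegree[child] -= 1  # the dependency on n is now satisfied
                if indegree[child] == 0:
                    next_ready.append(child)
        ready = sorted(next_ready)
    return layers


# Hypothetical dependencies; every edge points from an earlier to a later oplog.
edges = [(1, 3), (5, 6), (4, 8), (3, 7), (8, 10), (7, 9)]
layers = write_layers(range(1, 11), edges)
print(layers)  # prints "[[1, 2, 4, 5], [3, 6, 8], [7, 10], [9]]"
```

Each inner list is one round of concurrent writes; a point only appears once all the points it depends on have been written in earlier rounds.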

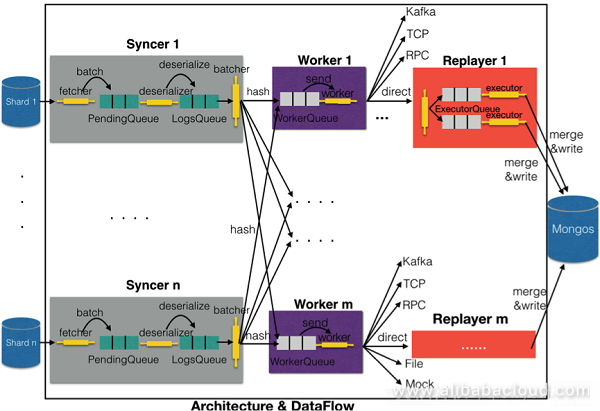

The above picture shows MongoShake's internal architecture and dataflow. Generally, the entire MongoShake system can be divided into three major parts: Syncer, Worker, and Replayer. Replayer is only applicable to the direct tunnel type.

Syncer is responsible for crawling data from the source database. If the source database is Mongod or ReplicaSet, then it requires only one Syncer. If the source database uses the Sharding mode, then it requires multiple Syncers to match the shards. Inside a Syncer, a fetcher uses the mgo.v2 library to crawl data from the source database, batch compresses the data, and places it into a PendingQueue. Then a deserializer thread deserializes data in the PendingQueue and places it into the LogsQueue. A batcher reorganizes data from the LogsQueue, consolidates data to be sent to the same Worker, and hashes the data before sending it to the WorkerQueue.

The main function of Worker is to take data from the WorkerQueue and send it out. Because the ACK mechanism is used, two queues are maintained internally: the to-send queue and the sent queue. The former stores data that has not been sent, and the latter stores data that has been sent but not yet acknowledged. After a piece of data is sent, it is moved from the to-send queue to the sent queue. Upon receiving an acknowledgment, the Worker deletes data with a seq smaller than ack from the sent queue; keeping unacknowledged data until then is what ensures reliability.
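The two-queue bookkeeping of a Worker can be sketched as follows. The class and method names are hypothetical, and the comparison follows the wording above (entries with a seq smaller than ack are dropped); whether the real implementation treats the bound as inclusive is not stated here.

```python
from collections import deque


class WorkerQueues:
    """To-send and sent queues of one Worker (hypothetical sketch)."""

    def __init__(self):
        self.to_send = deque()   # data that has not been sent yet
        self.sent = deque()      # data sent but not yet acknowledged

    def enqueue(self, seq, payload):
        self.to_send.append((seq, payload))

    def send_next(self):
        # Move one item from the to-send queue to the sent queue.
        item = self.to_send.popleft()
        self.sent.append(item)
        return item

    def on_ack(self, ack):
        # Drop entries whose seq is smaller than the acknowledged value.
        while self.sent and self.sent[0][0] < ack:
            self.sent.popleft()


queues = WorkerQueues()
for seq in range(1, 6):
    queues.enqueue(seq, f"oplog-{seq}")
for _ in range(4):
    queues.send_next()
queues.on_ack(3)
remaining = [seq for seq, _ in queues.sent]
```

After the acknowledgment, the entries still held in the sent queue are the candidates for retransmission, and the single entry left in the to-send queue has not been transmitted at all.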

Workers can be connected to different tunnels to meet different user needs. For direct tunnels, Replayers are connected to write data directly into the target MongoDB database. Workers and Replayers are matched one to one. First, the Replayer distributes data to different ExecutorQueues based on the conflict detection rule, and then an executor takes data from the queue to conduct concurrent write operations. To improve write efficiency, MongoShake consolidates adjacent oplogs that have the same operation and namespace before performing the write operation.
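Consolidating adjacent oplogs with the same operation and namespace can be sketched with `itertools.groupby`, which only merges neighbors and therefore preserves the overall order. The helper `merge_adjacent` and the output shape are assumptions made for this example.

```python
from itertools import groupby


def merge_adjacent(oplogs):
    """Group consecutive oplogs that share the same op and namespace."""
    merged = []
    for (op, ns), group in groupby(oplogs, key=lambda e: (e["op"], e["ns"])):
        merged.append({"op": op, "ns": ns, "docs": [e["o"] for e in group]})
    return merged


oplogs = [
    {"op": "i", "ns": "db.a", "o": {"_id": 1}},
    {"op": "i", "ns": "db.a", "o": {"_id": 2}},
    {"op": "u", "ns": "db.a", "o": {"_id": 1, "x": 1}},
    {"op": "i", "ns": "db.a", "o": {"_id": 3}},
]

batches = merge_adjacent(oplogs)
```

The first two inserts become one batch, while the last insert stays separate because an update sits between them; merging it with the earlier inserts would change the order of operations.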

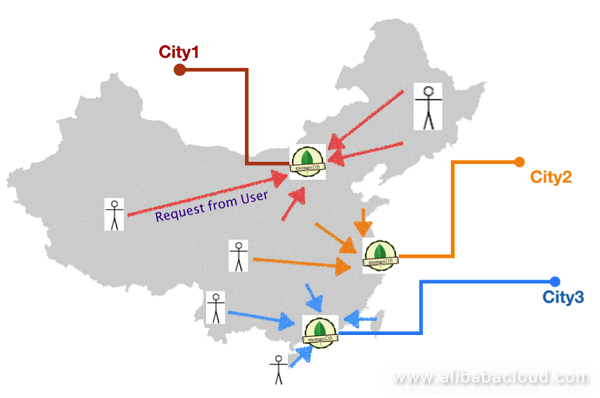

Amap is one of China's first-class map and navigation applications. Alibaba Cloud MongoDB provides storage support for some of Amap's features, and billions of data records are stored. Amap currently uses a domestic dual-center policy, which routes requests to the nearest data center based on geolocation and other information to enhance service quality. Amap routes user requests to data centers in three cities as shown in the following picture; there is no dependency computation among these three data centers.

These three cities are geographically arranged from north to south throughout China. This imposes challenges on data replication and disaster tolerance. If the data center or network in one region fails, how can we smoothly switch traffic to another data center without affecting user experience?

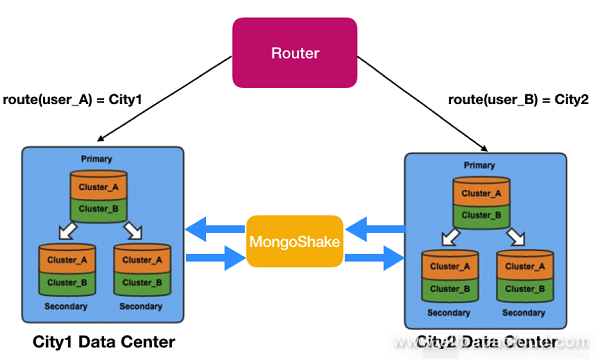

Our current policy is to topologically connect each data center to each of the other two data centers. As a result, data in each data center is synchronized to the other two. Amap's router layer routes user requests to different data centers, and read and write operations are conducted in the same data center to ensure transmission reliability. MongoShake then asynchronously replicates data from and to the other two data centers so that each data center holds the full data (eventual consistency). In this case, when one data center fails, either of the other two data centers can take its place and provide the read and write services. The following picture shows the synchronization between City 1's and City 2's data centers.

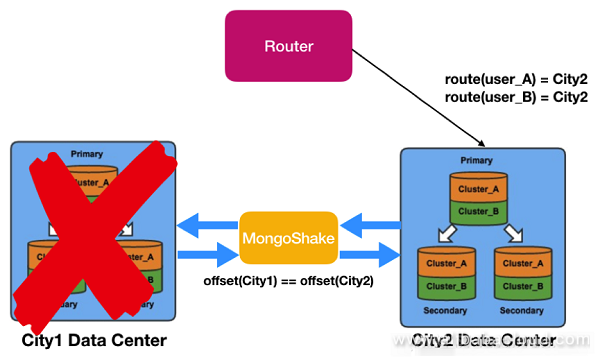

When a unit cannot be accessed, MongoShake's external RESTful management interface can obtain the synchronization offset values and timestamps of each data center and, by analyzing the collected and written values, determine whether asynchronous replication has completed at a given point in time. Then MongoShake collaborates with Amap's DNS in traffic diversion, diverting the traffic from the failed unit to a new unit, and ensures that all requests can be read and written at the new unit.

MongoShake will be maintained over the long term. The complete and lite versions will be subject to continuous iteration. Your questions and comments are highly appreciated. You are also welcome to join us in the open source development.

Visit the official product page to learn more about Alibaba Cloud ApsaraDB for MongoDB.
