Recently, Alibaba officially published the open source code for its distributed scientific computing engine – Mars. Developers can download and install Mars from PyPI or obtain the source code from GitHub and participate in the development.

Alibaba announced this open source project as early as September 2018 at the Computing Conference held in Yunqi, Hangzhou. Unlike existing big data computing engines, whose computing models are mainly based on relational algebra, Mars brings distributed technologies to scientific computing (numerical computation) and significantly improves its scale and efficiency. Mars is already used in business and production scenarios at Alibaba and by its customers on the cloud. This article describes the design objectives and technical architecture of Mars.

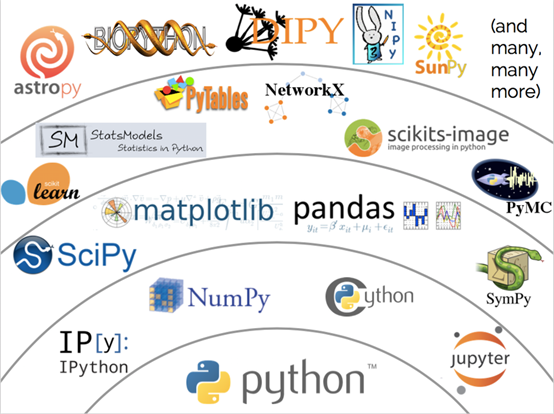

Scientific computing, or numerical computation, is a rapidly growing field that uses computers to solve numerical problems in scientific research and engineering. It is applied in a variety of fields such as image processing, machine learning, and deep learning. Many scientific computing tools are available across different languages and libraries. Among these tools, NumPy stands out for its simple, easy-to-use syntax and strong performance, and a large technology stack has been built on top of it (as shown in the following picture).

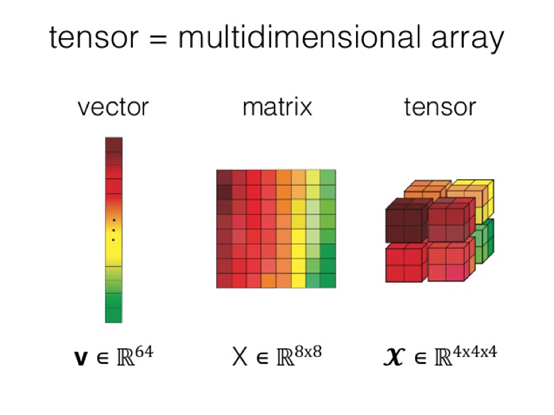

Multidimensional arrays are a core concept of NumPy, and are the foundation for various upper-layer tools. A multidimensional array is also called a tensor. Compared with two-dimensional tables or matrices, tensors have more powerful expressiveness. Therefore, popular deep learning frameworks usually adopt data structures that are based on tensors.

With the rise of machine learning and deep learning, the tensor concept is becoming ubiquitous and the need for general-purpose computing on tensors is growing. However, as powerful as NumPy is, it is still limited to a single machine and cannot break through that performance bottleneck. The currently popular distributed computing engines are not designed specifically for scientific computing. Their upper-layer interfaces do not match, which makes it hard to write scientific computing tasks in traditional SQL/MapReduce, and because these engines are not optimized for scientific computing, their computing efficiency is unsatisfactory.

Understanding the aforementioned problems currently encountered in the scientific computing field, the Alibaba Cloud MaxCompute R&D team finally broke the boundary between big data and scientific computing, built the first version of Mars and published its open source code after over one year of research and development. Mars is a universal distributed computing framework based on tensors. Mars makes it possible to perform large-scale scientific computing tasks using only several lines of code, whereas using MapReduce requires hundreds of lines of code.

In addition, Mars can significantly improve computing performance. Mars currently implements tensors, that is, a distributed version of NumPy, with around 70% of the common NumPy interfaces already available. Mars 0.2 is working on a distributed version of Pandas and will soon provide fully compatible Pandas interfaces to build out the whole ecosystem.

As a new-generation ultra-large scientific computing engine, Mars not only accelerates scientific computing into the "distributed" era but also makes it possible to perform efficient scientific computing on big data.

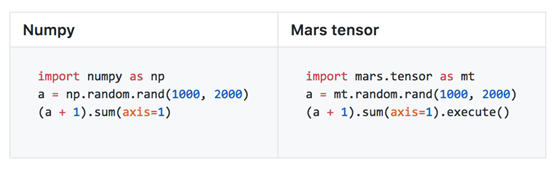

Mars provides NumPy-compatible interfaces through its tensor module. Users can port existing NumPy code to Mars simply by changing the import from numpy to mars.tensor. By doing so, they can work at a scale tens of thousands of times larger than before and increase processing capacity by several tens of times. Mars has implemented around 70% of the common NumPy interfaces.

Mars also extends NumPy and takes full advantage of existing GPU work in the scientific computing field. When creating a tensor, simply specify gpu=True to have subsequent computing tasks run on GPUs. For example:

    import mars.tensor as mt

    a = mt.random.rand(1000, 2000, gpu=True)  # create the tensor on the GPU
    (a + 1).sum(axis=1).execute()

Mars also supports two-dimensional sparse matrices. To create a sparse matrix, simply specify sparse=True. Take the eye interface as an example. This interface creates an identity matrix, in which the diagonal entries are all 1 and all entries outside the main diagonal are 0, so it is well suited to sparse storage.

    a = mt.eye(1000, sparse=True)  # create a sparse matrix
    (a + 1).sum(axis=1).execute()

This section describes the system design of Mars to show how Mars parallelizes scientific computing tasks automatically and delivers strong performance.

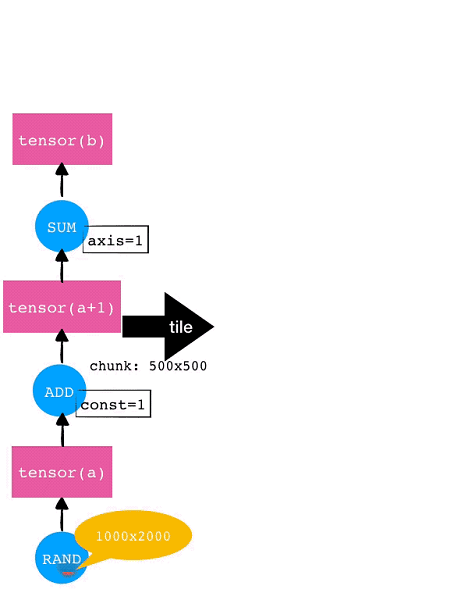

Mars typically splits scientific computing tasks. Given a tensor, Mars automatically splits it along each dimension into small chunks and then processes these chunks separately. Automatic splitting and task parallelism are supported for all operators implemented in Mars. This automatic splitting is called tiling in Mars.

For example, consider a 1000×2000 tensor. If the chunk size is 500 along each dimension, so that each chunk is 500×500, this tensor is tiled into 8 (2×4) chunks. The tile operation is also performed automatically for subsequent operators such as ADD and SUM. The operation tiling for this example tensor is shown in the following diagram.
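The chunk arithmetic in this example can be sketched in plain Python. This is only an illustration of the idea, not Mars's actual tiling code; the `tile` helper and its signature are hypothetical.

```python
from itertools import product

def tile(shape, chunk_size):
    """Hypothetical helper: split `shape` into chunks of at most
    `chunk_size` along each dimension and return one tuple of slices
    per chunk."""
    per_dim = []
    for dim, size in zip(shape, chunk_size):
        # Start offsets 0, size, 2*size, ... along this dimension.
        per_dim.append([slice(start, min(start + size, dim))
                        for start in range(0, dim, size)])
    # The chunk grid is the Cartesian product of the per-dimension splits.
    return list(product(*per_dim))

chunks = tile((1000, 2000), (500, 500))
print(len(chunks))  # 8
```

Each tuple of slices identifies the region of the original tensor that one chunk covers, so an element-wise operator can be applied to every chunk independently.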

Currently, code written in Mars runs only when "execute" is explicitly invoked. This is Mars's delayed (lazy) execution mechanism. No data is actually computed while the intermediate code is being written, which leaves room to optimize the intermediate steps and results in more efficient task execution. The main optimization in Mars at present is fusion, that is, merging multiple operations into one operation and then executing that operation.
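Delayed execution can be illustrated with a toy expression object. The sketch below is an assumption-laden model of the idea rather than Mars's implementation; `LazyTensor` and `load` are hypothetical names, and the data is a short Python list instead of a real tensor.

```python
class LazyTensor:
    """Toy lazy value: operations only record work; execute() runs it."""

    def __init__(self, compute, description):
        self._compute = compute          # zero-argument callable, run on execute()
        self.description = description   # human-readable form of the expression

    def __add__(self, scalar):
        return LazyTensor(lambda: [v + scalar for v in self._compute()],
                          f"({self.description} + {scalar})")

    def sum(self):
        return LazyTensor(lambda: sum(self._compute()),
                          f"sum{self.description}")

    def execute(self):
        return self._compute()

evaluated = []  # records when the data is actually touched

def load():
    evaluated.append("load")
    return [1.0, 2.0, 3.0]

a = LazyTensor(load, "a")
expr = (a + 1).sum()         # builds the expression, computes nothing
before = list(evaluated)     # still empty at this point
result = expr.execute()      # the whole chain runs here
print(before, expr.description, result)
```

Because the full expression is known before anything runs, an engine built this way is free to rewrite it, for example by fusing steps, before execution.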

In the preceding example, once tiling is complete, Mars applies fusion optimization to the fine-grained chunk-level graph. For each of the eight chunks, the RAND, ADD, and SUM operations can be fused into a single node. On the one hand, this makes it possible to generate accelerated code by invoking libraries such as NumExpr; on the other hand, having fewer nodes to run significantly reduces the overhead of scheduling the execution graph.
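As a rough illustration of what fusion buys, the sketch below composes a per-chunk RAND, ADD, SUM chain into a single callable and compares node counts. It is a simplified model in plain Python, not Mars's optimizer: `fuse`, `rand_chunk`, `add_one`, and `total` are hypothetical names, and the chunks are tiny lists.

```python
import random

def rand_chunk(n, seed):
    """Stand-in for RAND on one chunk: n pseudo-random values in [0, 1)."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]

def add_one(chunk):
    """Stand-in for ADD on one chunk."""
    return [v + 1 for v in chunk]

def total(chunk):
    """Stand-in for SUM on one chunk."""
    return sum(chunk)

def fuse(*ops):
    """Compose a chain of single-input operations into one callable."""
    def fused(value):
        for op in ops:
            value = op(value)
        return value
    return fused

n_chunks = 8
unfused_nodes = n_chunks * 3  # RAND, ADD, and SUM scheduled separately
fused_nodes = n_chunks        # one fused node per chunk

fused_op = fuse(lambda seed: rand_chunk(4, seed), add_one, total)
partial_sums = [fused_op(seed) for seed in range(n_chunks)]
print(unfused_nodes, fused_nodes)  # 24 8
```

The fused callable produces the same per-chunk result as running the three steps one by one, but the scheduler only has to track a third as many nodes.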

Mars supports a variety of scheduling methods:

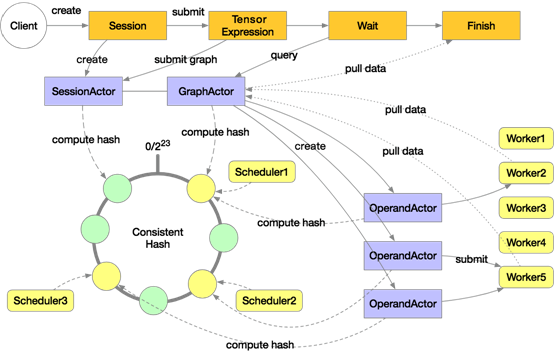

The distributed execution architecture of Mars is shown in the following diagram:

A distributed execution in Mars starts multiple schedulers and workers. The diagram shows three schedulers and five workers. The schedulers form a consistent hash ring. When a user explicitly or implicitly creates a session on the client, a SessionActor is assigned to a scheduler according to the consistent hash. When the user then submits a tensor computing task by calling "execute", a GraphActor is created to manage the execution of this tensor, and the tensor is tiled into a chunk-level graph in the GraphActor.
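The assignment of a session to a scheduler by consistent hashing can be sketched as follows. This is a generic consistent hash ring, not Mars's actual code: the `HashRing` class, the use of SHA-256, the number of virtual points per node, and the scheduler and session names are all assumptions made for illustration.

```python
import bisect
import hashlib

class HashRing:
    """Minimal consistent hash ring with virtual points per node."""

    def __init__(self, nodes, replicas=32):
        ring = []
        for node in nodes:
            for i in range(replicas):
                ring.append((self._hash(f"{node}#{i}"), node))
        ring.sort()
        self._ring = ring
        self._keys = [point for point, _ in ring]

    @staticmethod
    def _hash(key):
        return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16)

    def lookup(self, key):
        # First point clockwise from the key's hash, wrapping around the ring.
        idx = bisect.bisect_right(self._keys, self._hash(key)) % len(self._keys)
        return self._ring[idx][1]

schedulers = ["scheduler-0", "scheduler-1", "scheduler-2"]
ring = HashRing(schedulers)
owner = ring.lookup("session-42")
print(owner)
```

A useful property of this scheme is that when a scheduler leaves the ring, only the keys it owned are reassigned; every other key keeps its scheduler.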

Take three chunks as an example. Three OperandActors, one per chunk, are created on the scheduler. Each OperandActor is submitted to a worker for execution once its dependencies are complete and cluster resources are sufficient. When all OperandActors have finished, the GraphActor is notified that the task is complete, and the client can then pull the data to display it or draw graphs.
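The rule that an operand runs only when its dependencies are complete and resources are available can be modeled with a small round-based dispatcher. This is a simplified sketch, not the actor-based scheduler Mars uses; the `dispatch` function, the operand names, and the notion of one operand per worker per round are assumptions.

```python
def dispatch(dependencies, n_workers):
    """Return (round, worker, operand) tuples in dispatch order.

    `dependencies` maps each operand to the set of operands it waits for.
    At most `n_workers` operands run in one round.
    """
    pending = {op: set(deps) for op, deps in dependencies.items()}
    order = []
    step = 0
    while pending:
        ready = sorted(op for op, deps in pending.items() if not deps)
        if not ready:
            raise ValueError("cycle detected in chunk graph")
        batch = ready[:n_workers]  # resource limit for this round
        for slot, op in enumerate(batch):
            order.append((step, f"worker-{slot}", op))
            del pending[op]
        # Finished operands unblock their successors.
        for deps in pending.values():
            deps.difference_update(batch)
        step += 1
    return order

graph = {
    "rand_0": set(),
    "rand_1": set(),
    "add_0": {"rand_0"},
    "add_1": {"rand_1"},
    "sum": {"add_0", "add_1"},
}
schedule = dispatch(graph, n_workers=2)
for entry in schedule:
    print(entry)
```

With two workers the five operands finish in three rounds; with a single worker the same graph needs five rounds, which shows how available resources gate dispatch.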

The flexible execution graphs and multiple scheduling modes allow Mars code to scale in and out flexibly: scale in to a single machine and use multiple cores for scientific computing tasks, or scale out to a distributed cluster where hundreds of workers complete tasks that could never be done on a single machine.

In one real scenario, we needed to multiply two large matrices. Each matrix contained about a hundred billion elements and was around 2.25 TB. Mars completed this computing task within 2.5 hours using only 5 lines of code and 1600 CUs (two hundred 8-core workers, each with 32 GB memory). Before Mars, a task like this would have needed more than one thousand lines of MapReduce code and taken 10 hours and 9,000 CUs.

Let's make a comparison. Consider a matrix containing 3.6 billion elements. For each element in this matrix, we need to add 1 and multiply by 2. In the following chart, the red cross represents the computation time for NumPy, the green solid line represents the computation time for Mars, and the blue dotted line represents the theoretical computation time. As the chart shows, Mars is much faster than NumPy on a single machine, and as the number of workers increases, Mars achieves nearly linear speedup.

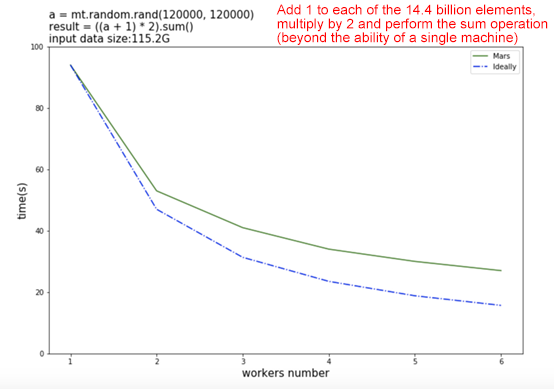

Let's increase the computing scale. Consider a matrix containing 14.4 billion elements, and for each element in this matrix, add 1, multiply by 2, and then sum the results. In this case, the input data is up to 115 GB, and NumPy on a single machine cannot complete the operation. Mars can still perform the operation and provides good speedup as the number of machines increases.
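The 115 GB figure is consistent with a quick back-of-the-envelope calculation, assuming each element is an 8-byte float64 and using decimal gigabytes. Both of those are assumptions, since the article does not state the element type; the `footprint_gb` helper is hypothetical.

```python
def footprint_gb(n_elements, bytes_per_element=8):
    """Size in decimal gigabytes, assuming 8-byte (float64) elements."""
    return n_elements * bytes_per_element / 1e9

large = footprint_gb(14_400_000_000)  # the 14.4-billion-element matrix
small = footprint_gb(3_600_000_000)   # the 3.6-billion-element matrix
print(large)  # 115.2
print(small)  # 28.8
```

Under the same assumptions, the smaller matrix from the first comparison is about 28.8 GB, which explains why NumPy could still handle it on one machine while the larger input could not be processed there.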

The open source code of Mars is available on GitHub: https://github.com/mars-project/mars. Future development of Mars will take place on GitHub following standard open-source practices. You are welcome to use Mars and become a contributor.
