Join us at the Alibaba Cloud ACtivate Online Conference on March 5-6 to challenge assumptions, exchange ideas, and explore what is possible through digital transformation.

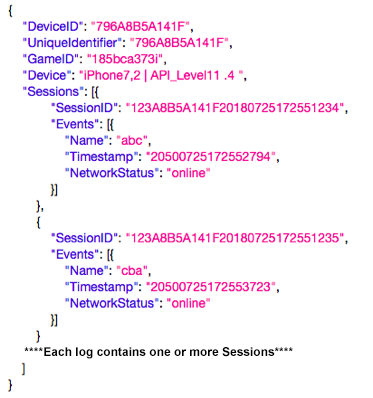

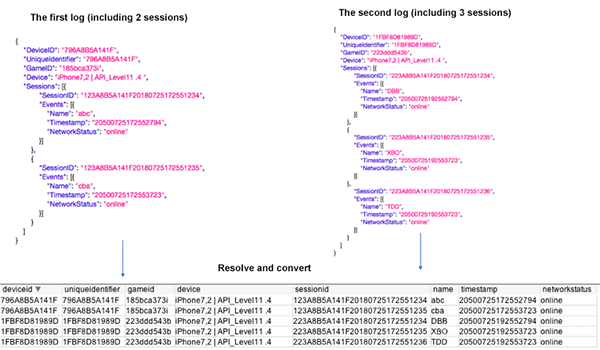

Due to the complexity of the business, data developers need to handle different types of data from different sources. They need to extract this data to the data platform and extract key business fields according to the designed data model, to form a two-dimensional table for subsequent statistical analysis and contextual computing in the big data platform or data warehouse.

This article introduces how to use MaxCompute to convert JSON-formatted log data with a specific case.

In the solution presented in this article, the Alibaba Cloud's Log Service + MaxCompute product portfolio is selected to meet the business requirements above. The log service only performs the functions of log collection and delivery, and does not perform data resolution and conversion.

Log data is obtained from the log service to logstore (for this part, see the Log Service Help Document)s

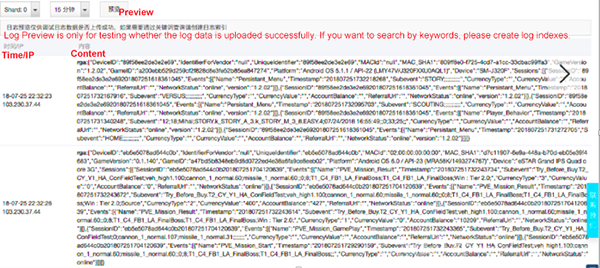

Using the delivery function of Log Service, the log is regularly delivered and archived to one raw log table of MaxCompute, in which all information of each log is written into the "content" field of the raw log table.

Use MaxCompute to perform field resolution and extraction of the raw data.

Use the built-in function get_json_object to extract data

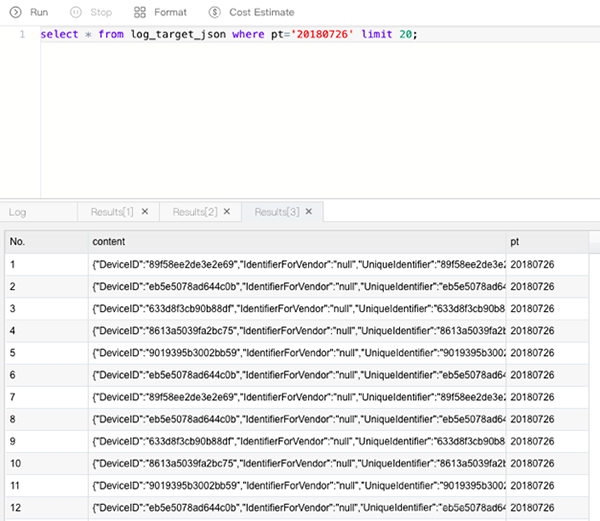

select

get_json_object(content,'$. DeviceID') as DeviceID,

get_json_object(content,'$. UniqueIdentifier') as UniqueIdentifier,

get_json_object(content,'$. GameID') as GameID,

get_json_object(content,'$. Device') as Device,

get_json_object(content,'$. Sessions\[0]. SessionID') as Session1_ID,

get_json_object(content,'$. Sessions\[0]. Events\[0]. Name') as Session1_EventName,

get_json_object(content,'$. Sessions\[1]. SessionID') as Session2_ID,

get_json_object(content,'$. Sessions\[1]. Events\[0]. Name') as Session2_EventName

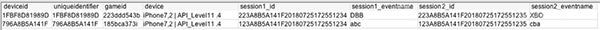

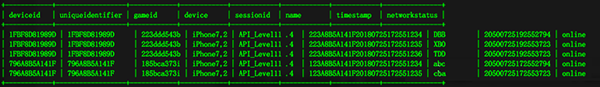

from log_target_json where pt='20180725' limit 10The extraction results are as follows:

Summary of the Solution:

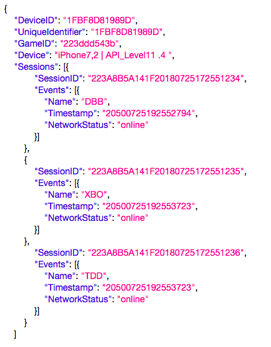

The processing logic above extracts the business fields of a log into row fields respectively, which is suitable for JSON records that have fixed information and can be mapped into table fields. For example, in the example above, after extracting the information of session1 and session2, they are treated as different column fields respectively. However, if the number of sessions contained in each log record is dynamic rather than fixed, this processing logic has difficulty meeting the requirements. For example, the next log contains 3 sessions. If the information of each session is to be extracted, the resolved SQL is required to add Session3_ID and Session3_EventName logic. What if the next log contains 100 sessions? This extraction method has difficulty in dealing with this situation.

In this case, the UDTF custom function can be used.

According to the characteristics of the data, 1 log contains multiple sessions, which has a 1:N relationship. When the log is converted into a two-dimensional table of the data warehouse, the session information needs to be resolved to the minimum granularity, and 1 row needs to be converted into N rows to extract all session information. The business objectives are as follows:

In MaxCompute, UDTF is needed to convert a row of records into multiple rows of records.

Taking JAVA UDTF as an example, each JSON record in the "content" field is resolved to obtain and return the business field to be extracted The UDTF processing logic here goes as far as JSON level 3, cyclically resolving the data with the smallest granularity and returning multiple records.

package com.aliyun.odps;

import com.aliyun.odps.udf.UDFException;

import com.aliyun.odps.udf.UDTF;

import com.aliyun.odps.udf.annotation.Resolve;

import com.google.gson.Gson;

import java.io.IOException;

import java.util.List;

import java.util.Map;

@Resolve("string->string,string,string,string,string,string,string,string")

public class get_json_udtf extends UDTF {

@Override

public void process(Object[] objects) throws UDFException, IOException {

String input = (String) objects[0];

Map map = new Gson().fromJson(input, Map.class);

Object deviceID = map.get("DeviceID");

Object uniqueIdentifier = map.get("UniqueIdentifier");

Object gameID = map.get("GameID");

Object device = map.get("Device");

List sessions = (List) map.get("Sessions");

for (Object session : sessions) {

Map sMap = (Map) session;

Object sessionID = sMap.get("SessionID");

List events = (List) sMap.get("Events");

for (Object event : events) {

String name = (String) ((Map) event).get("Name");

String timestamp = (String) ((Map) event).get("Timestamp");

String networkStatus = (String) ((Map) event).get("NetworkStatus");

forward(deviceID, uniqueIdentifier,gameID,device,

sessionID,name,timestamp,networkStatus);

}

}

}

}Note: For the details of writing, packaging and uploading, and creating functions for UDF, see the official documentation

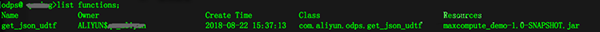

After the program is written, you need to package UDTF, upload it and create a UDF function:

Package the compiled program, generate a jar package, and upload this resource in the MaxCompute client (odpscmd):

add jar maxcompute_demo-1.0-SNAPSHOT.jar -f;Then, create a function from the command line:

create function get_json_udtf as com.aliyun.odps.get_json_udtf using maxcompute_demo-1.0-SNAPSHOT.jar';View the function after creation:

Test and verification:

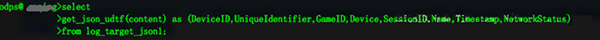

Query the table containing the raw log and query the "content" field with the created get_json_udtf:

The query results are as follows: UDFT function processes each JSON record and generates multiple records as expected:

And if the processing logic needs to be fixed, you can also use the "insert into" syntax to query the resolved results to a new table, and realize periodic data conversion through task scheduling.

Based on a big data analysis scenario of log analysis, and taking a common JSON log processing requirement as an example, this article introduces how to collect logs for MaxCompute through the log service, and how to use MaxCompute built-in functions and UDF to resolve and convert JSON-formatted log data, extract key business fields and generate log tables that can be used for subsequent analysis.

Mars – Alibaba's Open Source Distributed Scientific Computing Engine

137 posts | 21 followers

FollowAlibaba Cloud MaxCompute - April 26, 2020

Alibaba Cloud MaxCompute - January 29, 2024

Alibaba Clouder - March 31, 2021

Alibaba Cloud MaxCompute - February 4, 2024

ApsaraDB - November 21, 2023

JDP - May 20, 2021

137 posts | 21 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Simple Log Service

Simple Log Service

An all-in-one service for log-type data

Learn MoreMore Posts by Alibaba Cloud MaxCompute