By Xingfeng, a Senior Development Engineer, Alibaba Cloud Elasticsearch Team

Released by ELK Geek

We helped an Elasticsearch user migrate their user-created clusters to Alibaba Cloud Elasticsearch. During the migration, we found that after a user-created cluster was upgraded from Elasticsearch 6.3.2 to Elasticsearch 7.4.0, the master node responded slowly, and creating or deleting an index took more than 1 minute. The cluster contained 3 dedicated master nodes, 10 hot nodes, 2 warm nodes, and more than 50,000 shards, most of which belonged to closed indexes. When an index expired and was moved to a warm node, it was closed. To query the index again, it first had to be reopened with the open command. After testing multiple versions from 6.x to 7.x, we found that the issue occurs in 7.2.0 and later versions, even when the dedicated master nodes are upgraded to 32 cores and 64 GB of memory.

Logging on to a user's online production cluster to inspect its status is inconvenient and risky. Therefore, we worked from the Elasticsearch source code to find the pull request most likely to have changed master node scheduling in 7.2. We soon identified the replication of closed indexes, introduced by PR #39499, as the most likely cause.

Before PR #39499, when an index was closed, the data nodes shut down the engines of its shards, so those shards no longer served search or write requests. As a result, shards of closed indexes could not be relocated or replicated. When a data node was decommissioned, the shard data of any closed indexes on that node was lost.

After PR #39499, the master node keeps closed indexes in the cluster state and allocates their shards like those of any other index. Data nodes open these shards with NoOpEngine, which serves no search or write requests and has much lower overhead than a regular engine. However, the shards of closed indexes now count toward the shards the master node must schedule, so when the cluster state contains a very large number of shards, master node scheduling becomes very slow.

We built a minimal test environment with the following specifications:

We created 5,000 indexes, each with 5 primary shards and 0 replica shards, for a total of 25,000 shards. During the test, creating a single index took 58s, and the CPU utilization of the master node stayed at 100%. We ran the `top -Hp $ES_PID` command to get the IDs of the busiest threads and used jstack to dump the thread stacks of the master node. The dump showed that the problem occurs because the masterService#updateTask thread keeps calling shardsWithState.
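`top -Hp` prints thread IDs in decimal, whereas jstack prints the same native thread ID as a hexadecimal `nid`, so the busy thread ID has to be converted before searching the dump. The minimal Java sketch below shows that conversion; the `ThreadIds` class is a hypothetical helper written for illustration, not part of Elasticsearch or the JDK tools. For example, thread 12294 in `top` corresponds to `nid=0x3006`, the ID of the masterService thread in the stack trace that follows.

```java
// Hypothetical helper (not part of Elasticsearch or the JDK tools): converts between
// the decimal thread IDs shown by `top -Hp <pid>` and the hex `nid` values in jstack output.
final class ThreadIds {
    private ThreadIds() {}

    /** Decimal thread ID from top -> the "nid=0x..." string to search for in a jstack dump. */
    static String toJstackNid(long topThreadId) {
        return "nid=0x" + Long.toHexString(topThreadId);
    }

    /** Hex nid from a jstack dump (with or without the 0x prefix) -> decimal ID as shown by top. */
    static long fromJstackNid(String nid) {
        String hex = nid.startsWith("0x") ? nid.substring(2) : nid;
        return Long.parseLong(hex, 16);
    }

    public static void main(String[] args) {
        long tid = args.length > 0 ? Long.parseLong(args[0]) : 12294L;
        System.out.println(toJstackNid(tid)); // prints nid=0x3006 for the default 12294
    }
}
```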

```text
"elasticsearch[iZ2ze1ymtwjqspsn3jco0tZ][masterService#updateTask][T#1]" #39 daemon prio=5 os_prio=0 cpu=150732651.74ms elapsed=258053.43s tid=0x00007f7c98012000 nid=0x3006 runnable [0x00007f7ca28f8000]
   java.lang.Thread.State: RUNNABLE
    at java.util.Collections$UnmodifiableCollection$1.hasNext(java.base@13/Collections.java:1046)
    at org.elasticsearch.cluster.routing.RoutingNode.shardsWithState(RoutingNode.java:148)
    at org.elasticsearch.cluster.routing.allocation.decider.DiskThresholdDecider.sizeOfRelocatingShards(DiskThresholdDecider.java:111)
    at org.elasticsearch.cluster.routing.allocation.decider.DiskThresholdDecider.getDiskUsage(DiskThresholdDecider.java:345)
    at org.elasticsearch.cluster.routing.allocation.decider.DiskThresholdDecider.canRemain(DiskThresholdDecider.java:290)
    at org.elasticsearch.cluster.routing.allocation.decider.AllocationDeciders.canRemain(AllocationDeciders.java:108)
    at org.elasticsearch.cluster.routing.allocation.allocator.BalancedShardsAllocator$Balancer.decideMove(BalancedShardsAllocator.java:668)
    at org.elasticsearch.cluster.routing.allocation.allocator.BalancedShardsAllocator$Balancer.moveShards(BalancedShardsAllocator.java:628)
    at org.elasticsearch.cluster.routing.allocation.allocator.BalancedShardsAllocator.allocate(BalancedShardsAllocator.java:123)
    at org.elasticsearch.cluster.routing.allocation.AllocationService.reroute(AllocationService.java:405)
    at org.elasticsearch.cluster.routing.allocation.AllocationService.reroute(AllocationService.java:370)
    at org.elasticsearch.cluster.metadata.MetaDataIndexStateService$1$1.execute(MetaDataIndexStateService.java:168)
    at org.elasticsearch.cluster.ClusterStateUpdateTask.execute(ClusterStateUpdateTask.java:47)
    at org.elasticsearch.cluster.service.MasterService.executeTasks(MasterService.java:702)
    at org.elasticsearch.cluster.service.MasterService.calculateTaskOutputs(MasterService.java:324)
    at org.elasticsearch.cluster.service.MasterService.runTasks(MasterService.java:219)
    at org.elasticsearch.cluster.service.MasterService.access$000(MasterService.java:73)
    at org.elasticsearch.cluster.service.MasterService$Batcher.run(MasterService.java:151)
    at org.elasticsearch.cluster.service.TaskBatcher.runIfNotProcessed(TaskBatcher.java:150)
    at org.elasticsearch.cluster.service.TaskBatcher$BatchedTask.run(TaskBatcher.java:188)
    at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:703)
    at org.elasticsearch.common.util.concurrent.PrioritizedEsThreadPoolExecutor$TieBreakingPrioritizedRunnable.runAndClean(PrioritizedEsThreadPoolExecutor.java:252)
    at org.elasticsearch.common.util.concurrent.PrioritizedEsThreadPoolExecutor$TieBreakingPrioritizedRunnable.run(PrioritizedEsThreadPoolExecutor.java:215)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(java.base@13/ThreadPoolExecutor.java:1128)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(java.base@13/ThreadPoolExecutor.java:628)
    at java.lang.Thread.run(java.base@13/Thread.java:830)
```

Reviewing the corresponding code showed that every request that triggers a reroute, such as index creation, index deletion, and cluster state updates, calls BalancedShardsAllocator, which traverses all started shards in the cluster. For each of these shards, DiskThresholdDecider calculates the disk space taken up by relocating shards on the node where the shard resides. To do so, it must traverse every shard on that node to find the ones that are initializing or relocating.
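The scan pattern can be modeled with a small, self-contained Java sketch. The classes and methods here (`NaiveRerouteModel`, `evenCluster`, `shardVisitsPerReroute`) are hypothetical simplifications, not the Elasticsearch classes: the outer loop stands in for `moveShards`/`decideMove`, and the inner loop stands in for `shardsWithState` on the shard's node.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of the reroute scan; these are NOT the
// Elasticsearch classes, only stand-ins for the loops in the stack trace.
final class NaiveRerouteModel {
    enum State { STARTED, INITIALIZING, RELOCATING }

    static final class Shard {
        final int node;
        final State state;

        Shard(int node, State state) {
            this.node = node;
            this.state = state;
        }
    }

    /** Builds m nodes that each hold s STARTED shards, so n = m * s. */
    static List<List<Shard>> evenCluster(int m, int s) {
        List<List<Shard>> nodes = new ArrayList<>();
        for (int node = 0; node < m; node++) {
            List<Shard> shards = new ArrayList<>();
            for (int i = 0; i < s; i++) {
                shards.add(new Shard(node, State.STARTED));
            }
            nodes.add(shards);
        }
        return nodes;
    }

    /** Counts the shard entries one reroute-like pass inspects. */
    static long shardVisitsPerReroute(List<List<Shard>> nodes) {
        long visits = 0;
        for (List<Shard> nodeShards : nodes) {
            // Outer loop over every started shard in the cluster: O(n).
            for (Shard shard : nodeShards) {
                if (shard.state != State.STARTED) {
                    continue;
                }
                // Inner loop over ALL shards on that shard's node, just to pick out the
                // INITIALIZING/RELOCATING ones (the shardsWithState role): O(n/m).
                List<Shard> moving = new ArrayList<>();
                for (Shard other : nodes.get(shard.node)) {
                    visits++;
                    if (other.state == State.INITIALIZING || other.state == State.RELOCATING) {
                        moving.add(other);
                    }
                }
                // A real decider would now sum the expected sizes of `moving`.
            }
        }
        return visits;
    }
}
```

With 4 nodes of 100 shards each, one pass inspects 4 × 100 × 100 = 40,000 entries; doubling the shards per node to 200 quadruples that to 160,000, which is the quadratic growth analyzed next.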

The outer traversal over all shards is O(n), and the inner loop over the shards on each node is O(n/m), where n is the total number of shards in the cluster and m is the number of nodes. The overall complexity is therefore O(n^2/m). For a cluster with a fixed number of nodes, m is effectively a constant, so the scheduling cost of each reroute operation is O(n^2).
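As a rough worked example (an estimate under simplifying assumptions, not a measurement), take n = 50,000 for the user's cluster, assume the shards are spread evenly across its m = 12 data nodes (10 hot + 2 warm), and assume nearly all of them are started. One reroute then inspects about:

```latex
\text{visits per reroute} \approx \underbrace{n}_{\text{started shards}} \times \underbrace{\frac{n}{m}}_{\text{shards per node}} = \frac{n^{2}}{m},
\qquad
\frac{(5 \times 10^{4})^{2}}{12} = \frac{2.5 \times 10^{9}}{12} \approx 2.1 \times 10^{8}
```

An O(n) pass over the same cluster would touch on the order of 5 × 10^4 entries instead.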

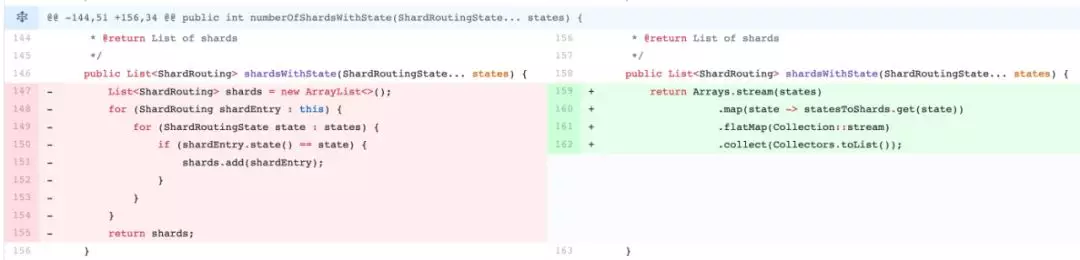

Every reroute traverses all shards just to find the initializing and relocating ones. Instead, this set can be computed once during initialization and then updated incrementally whenever a shard changes state, which reduces the overall complexity to O(n). We made a simple modification along these lines to the Elasticsearch 7.4.0 code and packaged it for testing. The response time of requests that trigger a reroute, such as index creation requests, dropped from 58s to 1.2s. Neither the Elasticsearch hot threads API nor jstack showed shardsWithState as a hot spot any longer, confirming a significant performance improvement.
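The "compute once, update on every state change" idea can be sketched as a per-node secondary index. This is a minimal illustration under stated assumptions: `NodeShardIndex` and its methods are hypothetical and are neither the authors' actual patch nor the Elasticsearch `RoutingNode` API.

```java
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch: a node's shards plus secondary indexes of the
// INITIALIZING and RELOCATING shards, kept in sync on every mutation.
final class NodeShardIndex {
    enum State { STARTED, INITIALIZING, RELOCATING }

    private final Map<String, State> shards = new HashMap<>();
    private final Set<String> initializing = new LinkedHashSet<>();
    private final Set<String> relocating = new LinkedHashSet<>();

    void add(String shardId, State state) {
        State previous = shards.put(shardId, state);
        if (previous != null) {
            unindex(shardId, previous); // re-adding a shard replaces its old state
        }
        index(shardId, state);
    }

    void updateState(String shardId, State newState) {
        State previous = shards.get(shardId);
        if (previous == null) {
            throw new IllegalArgumentException("unknown shard " + shardId);
        }
        unindex(shardId, previous);
        shards.put(shardId, newState);
        index(shardId, newState);
    }

    void remove(String shardId) {
        State previous = shards.remove(shardId);
        if (previous != null) {
            unindex(shardId, previous);
        }
    }

    /** Cost is proportional to the number of moving shards, not to all shards on the node. */
    Set<String> initializingOrRelocating() {
        Set<String> result = new LinkedHashSet<>(initializing);
        result.addAll(relocating);
        return result;
    }

    int size() {
        return shards.size();
    }

    private void index(String shardId, State state) {
        if (state == State.INITIALIZING) {
            initializing.add(shardId);
        } else if (state == State.RELOCATING) {
            relocating.add(shardId);
        }
    }

    private void unindex(String shardId, State state) {
        if (state == State.INITIALIZING) {
            initializing.remove(shardId);
        } else if (state == State.RELOCATING) {
            relocating.remove(shardId);
        }
    }
}
```

Each mutation does O(1) extra work to keep the sets current, so a disk-usage check no longer has to scan the whole node; in a mostly idle cluster with thousands of started shards, the lookup returns an empty or tiny set.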

A master node uses the MasterService class to process cluster state update tasks. To keep the cluster state consistent, these tasks are executed sequentially on a single thread. Therefore, upgrading the specifications of the master nodes cannot solve this problem: additional CPU cores do not speed up a computation that runs on one thread.
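Why extra cores do not help can be illustrated with a plain single-thread executor standing in for the master's update queue. This is an analogy only: `SingleThreadedUpdates` is a hypothetical class, and MasterService actually runs on Elasticsearch's own prioritized executor (visible in the stack trace above), not on `Executors.newSingleThreadExecutor`.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Illustration only: a single-thread executor as a stand-in for the
// master's cluster-state update queue. Not Elasticsearch's MasterService.
final class SingleThreadedUpdates {
    /** Submits `tasks` update tasks and returns the name of the thread that ran each one. */
    static List<String> runUpdates(int tasks) {
        List<String> threads = Collections.synchronizedList(new ArrayList<>());
        ExecutorService master = Executors.newSingleThreadExecutor();
        try {
            for (int i = 0; i < tasks; i++) {
                // Each task would compute a new cluster state, e.g. run a reroute.
                master.submit(() -> threads.add(Thread.currentThread().getName()));
            }
        } finally {
            master.shutdown();
        }
        try {
            if (!master.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("update tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return threads;
    }
}
```

However many processors `Runtime.getRuntime().availableProcessors()` reports, every task runs on the same worker thread one after another, so a slow reroute delays every task queued behind it.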

If you encounter this problem in your Elasticsearch cluster, you can work around it by setting cluster.routing.allocation.disk.include_relocations to false, so that the master node ignores the disk usage of relocating shards when making allocation decisions. However, this may produce inaccurate disk usage estimates and risks triggering relocations repeatedly.
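The workaround is a dynamic cluster setting, so it can be applied through the cluster update settings API. The following sketch uses the JDK's `java.net.http.HttpClient` (Java 11+); the class name `DisableIncludeRelocations`, the endpoint `http://localhost:9200`, the absence of authentication, and the choice of a `persistent` rather than `transient` setting are all assumptions to adapt to your cluster.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Sketch: sends PUT _cluster/settings to disable include_relocations.
// Assumed: cluster reachable at the given base URL without authentication.
final class DisableIncludeRelocations {
    static final String BODY =
        "{\"persistent\":{\"cluster.routing.allocation.disk.include_relocations\":false}}";

    /** Builds the settings update request for the given base URL, e.g. http://localhost:9200. */
    static HttpRequest buildRequest(String baseUrl) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/_cluster/settings"))
            .header("Content-Type", "application/json")
            .PUT(HttpRequest.BodyPublishers.ofString(BODY))
            .build();
    }

    public static void main(String[] args) throws Exception {
        String baseUrl = args.length > 0 ? args[0] : "http://localhost:9200";
        HttpResponse<String> response = HttpClient.newHttpClient()
            .send(buildRequest(baseUrl), HttpResponse.BodyHandlers.ofString());
        // A successful update echoes the applied settings in the response body.
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

To restore the default later, send the same request with the value set to `null` instead of `false`.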
