By Yu Lei (Liangxi)

The Java platform provides a large number of class libraries and frameworks that help developers build applications quickly. Most Java frameworks and class libraries achieve concurrency through thread pools and blocking calls, mainly for the following reasons:

1) The Java core class library provides powerful concurrency support, and multi-threaded applications can achieve good performance.

2) Some Java EE standards, such as Java Database Connectivity (JDBC), are defined in terms of thread-level blocking.

3) Applications can be developed quickly in the blocking style.

However, with the emergence of many new asynchronous frameworks and of languages such as Go that support coroutines, operating system (OS) thread scheduling has become a performance bottleneck in many scenarios, and people now wonder whether Java is still suitable for the latest cloud scenarios. Four years ago, the Java Virtual Machine (JVM) team at Alibaba independently developed WISP 2, bringing Go-style coroutines to Java. With WISP 2, users keep the rich resources of the Java ecosystem while also gaining the benefits of asynchronous programs, which keeps the Java platform current.

WISP 2 is designed mainly for I/O-intensive server workloads, which covers the online services of most companies (offline applications are mostly compute-bound, so WISP does not apply to them). WISP 2 sets the benchmark for Java coroutine functionality and is now mature in product form, performance, and stability. To date, hundreds of applications and tens of thousands of containers have been deployed on WISP 1 or WISP 2. WISP coroutines are fully compatible with code written for multi-threaded blocking: you only need to add JVM parameters to enable them. Core Alibaba e-commerce applications running on the coroutine model have been put to the test during two Double 11 Shopping Festivals.

WISP 2 focuses on performance and on compatibility with existing code. In short, existing multi-threaded, I/O-intensive Java applications may gain asynchronous-level performance simply by adding the WISP 2 JVM parameters.

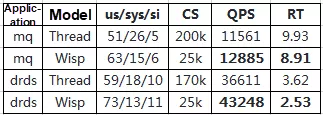

The following example compares stress test results for the message middleware proxy, Message Queue (MQ), and for Distributed Relational Database Service (DRDS) after the JVM parameters are added, without modifying any code.

According to the table, context switches and sys CPU usage drop significantly, response time (RT) decreases by 11.45%, and queries per second (QPS) increases by 18.13%.

WISP 2 is completely compatible with existing Java code and is therefore easy to use.

For any standard online application that configures its parameters in /home/admin/$APP_NAME/setenv.sh, run the following command as the admin user to enable WISP 2:

```shell
curl https://gosling.alibaba-inc.com/sh/enable-wisp2.sh | sh
```

Otherwise, manually update the JDK and Java parameters using the commands below.

ajdk 8.7.12_fp2 rpm

```shell
sudo yum install ajdk -b current # Install the latest JDK with yum.
```

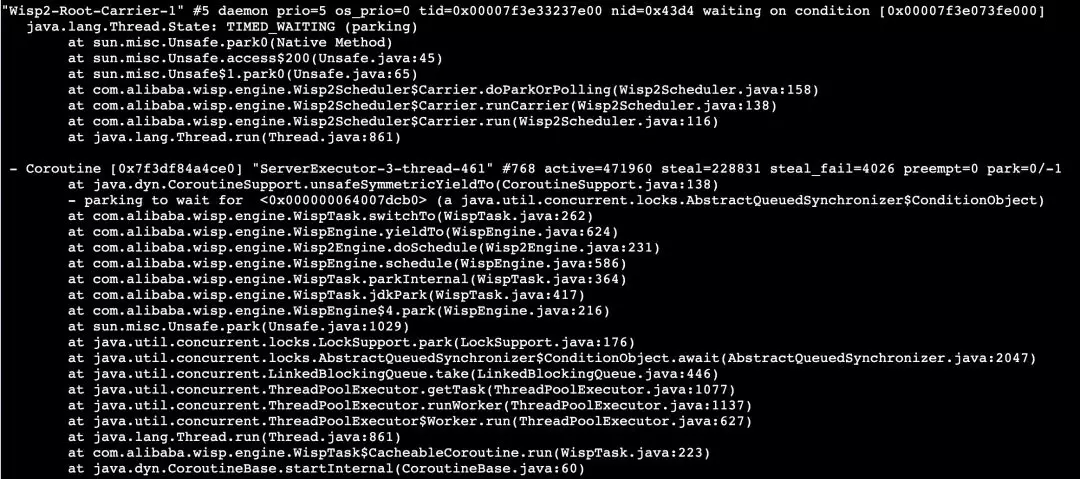

```shell
java -XX:+UseWisp2 .... # Start the Java application with the WISP parameters.
```

Then, run jstack to check whether coroutines are enabled. Carrier threads schedule the coroutines, as shown in the following figure:

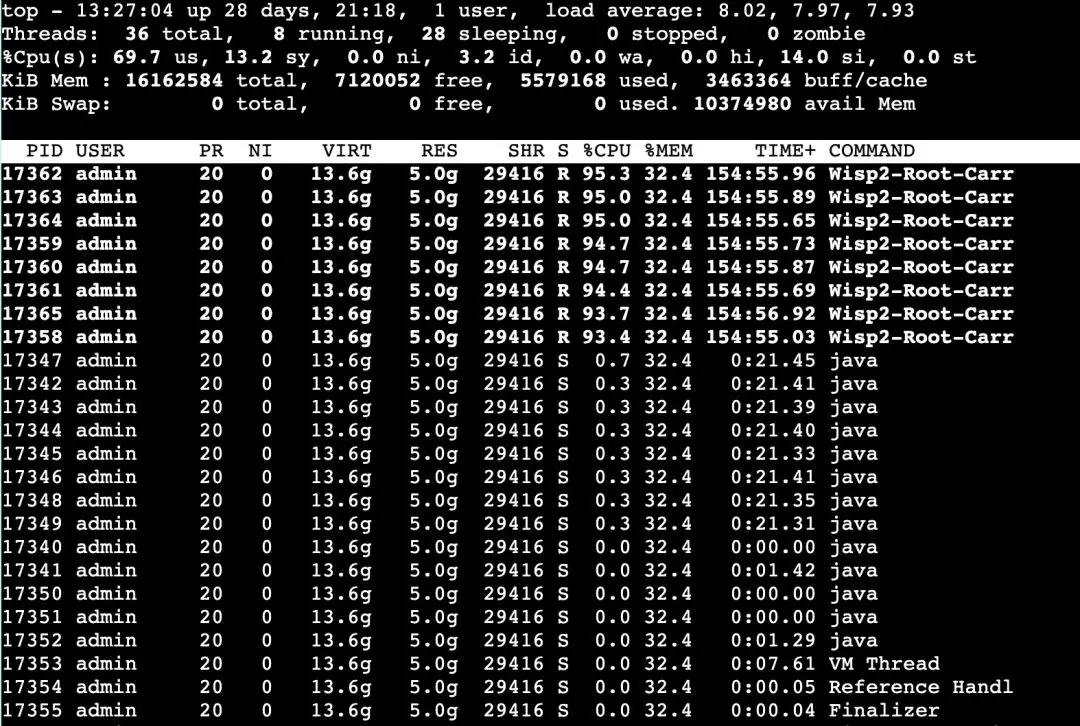

The following figure shows top -H output from the DRDS stress test on Elastic Compute Service (ECS). Hundreds of application threads are hosted by eight Carrier threads, which are spread evenly across the CPU cores. Some of the Java threads shown are GC threads.

The following snippet shows a test program.

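```c
#include <unistd.h>

int main(void) {
    int a[2];            /* a[0]: read end, a[1]: write end */
    char c = 0;
    unsigned long n = 0; /* number of system calls issued */

    pipe(a);
    while (1) {
        write(a[1], &c, 1); /* the pipe buffer has room, so this never blocks */
        read(a[0], &c, 1);  /* one byte is always ready, so this never blocks */
        n += 2;
    }
}
```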

This program rarely triggers a context switch. Because none of these I/O system calls blocks, the kernel never needs to suspend the thread or switch contexts; the only switch that occurs is between user mode and kernel mode.

When this program runs on an ECS Bare Metal Instance server, each pipe operation takes only about 334 ns.
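For readers who want to repeat the experiment from Java, the sketch below is a rough analogue of the C loop using java.nio.channels.Pipe (on Linux the JDK typically backs it with an OS pipe). The class name PipeLoop and the iteration counts are our own choices, and the timing includes NIO overhead, so it should not be expected to reproduce the 334 ns figure.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;

// Rough Java analogue of the C pipe loop (hypothetical helper class).
// Each write/read pair completes without blocking, so the thread only crosses
// the user/kernel boundary and is never descheduled by a blocking wait.
class PipeLoop {
    /** Runs `iterations` write/read pairs; returns average nanoseconds per pipe call. */
    static double nanosPerCall(int iterations) {
        try {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel sink = pipe.sink(); Pipe.SourceChannel source = pipe.source()) {
                ByteBuffer buf = ByteBuffer.allocateDirect(1);
                long start = System.nanoTime();
                for (int i = 0; i < iterations; i++) {
                    buf.clear();
                    sink.write(buf);   // the pipe buffer has room, so this returns at once
                    buf.clear();
                    source.read(buf);  // a byte is always waiting, so this returns at once
                }
                return (double) (System.nanoTime() - start) / (2L * iterations);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        nanosPerCall(100_000); // warm-up so the JIT compiles the loop first
        System.out.printf("%.1f ns per pipe call%n", nanosPerCall(1_000_000));
    }
}
```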

Essentially, both user/kernel mode switches and context switches are lightweight operations with hardware support. For example, the x86 PUSHA instruction saves all general-purpose registers at once. Threads in the same process share a page table, so a context switch between them generally costs only saving the registers and switching the stack pointer (SP). The CALL instruction automatically pushes the program counter (PC) onto the stack, and the whole switch completes in a few dozen instructions.

Since mode switches and context switches are both fast, what actually produces multithreading overhead?

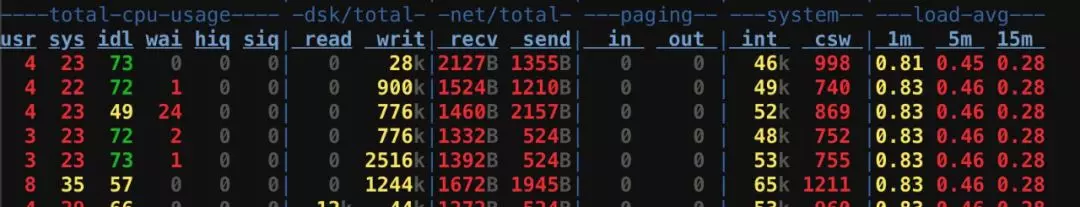

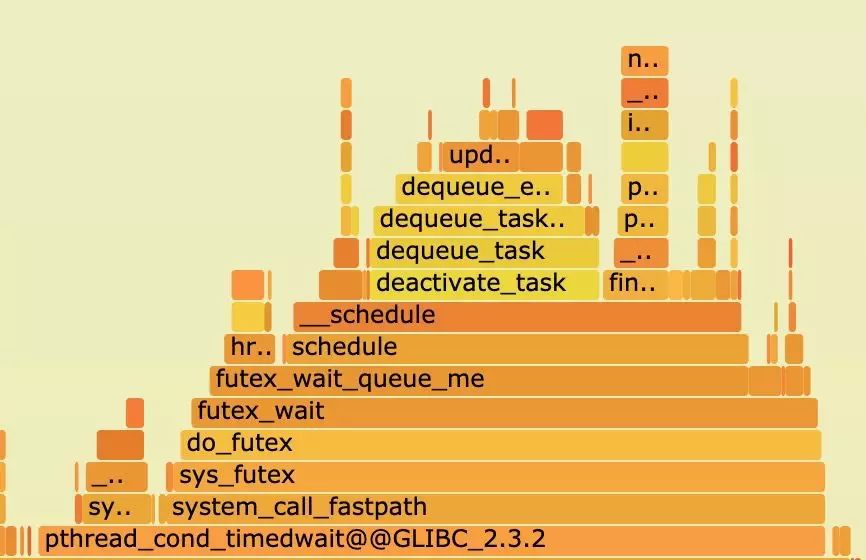

Let's look at the hotspot distribution of the blocking system call futex.

As the figure above shows, the hotspots are dominated by scheduling overhead. The process is as follows:

1) The thread makes a system call that may block.

2) The system call does block, so the kernel must determine the next thread to run (scheduling).

3) The kernel switches the context.

Therefore, the two common misconceptions (that user/kernel mode switches and context switches are expensive) are only indirectly related to multithreading overhead; the actual overhead comes from blocking threads and scheduling their wake-ups.
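To see this expensive path in action, the following sketch (our own illustration, not part of the original measurements) makes two threads hand a token back and forth through SynchronousQueue, so the receiving thread repeatedly parks and is woken up. On Linux, HotSpot usually implements thread parking on top of futex, although SynchronousQueue may spin briefly before parking, so not every hand-off necessarily reaches the kernel. Running it alongside vmstat 1 should show the context-switch count (the cs column) rising sharply, unlike the pipe loop.

```java
import java.util.concurrent.SynchronousQueue;

// Hypothetical ping-pong benchmark: every hand-off may park the receiver and later
// unpark it, i.e. block -> schedule -> context switch.
class PingPong {
    /** Returns the average nanoseconds per hand-off over `rounds` round trips. */
    static double nanosPerHandoff(int rounds) {
        SynchronousQueue<Integer> ping = new SynchronousQueue<>();
        SynchronousQueue<Integer> pong = new SynchronousQueue<>();
        Thread echo = new Thread(() -> {
            try {
                for (int i = 0; i < rounds; i++) {
                    pong.put(ping.take()); // waits for the main thread, then replies
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "echo");
        echo.start();
        long start = System.nanoTime();
        try {
            for (int i = 0; i < rounds; i++) {
                ping.put(i);  // hands the token to the echo thread
                pong.take();  // waits (possibly parked) for the reply
            }
            echo.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return (double) (System.nanoTime() - start) / (2L * rounds);
    }

    public static void main(String[] args) {
        nanosPerHandoff(10_000); // warm-up
        System.out.printf("%.1f ns per hand-off%n", nanosPerHandoff(100_000));
    }
}
```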

In conclusion, to improve web server performance with a thread model, follow these principles:

1) The number of active threads is approximately equal to the number of CPUs.

2) Threads never need to block.

The following sections discuss how to meet these two principles.

To meet both conditions, the ideal approach is an event loop plus asynchronous callbacks.

For simplicity, let's take a Netty write operation on an asynchronous server as an example (write operations can also block).
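A minimal sketch of the event loop + callback idea, assuming a single-threaded loop over an in-memory queue. MiniEventLoop and its asyncRead method are hypothetical, and the simulated "I/O" completes instantly. The point is that a slow operation never blocks the thread: its result simply arrives later as another event on the same loop, so both requests are issued before either completes.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.Consumer;

// Hypothetical single-threaded event loop: tasks run to completion and never block.
class MiniEventLoop {
    private final Queue<Runnable> events = new ArrayDeque<>();

    void execute(Runnable task) {
        events.add(task);
    }

    /** Simulated non-blocking read: returns immediately; the callback runs as a later event. */
    void asyncRead(String key, Consumer<String> callback) {
        execute(() -> callback.accept("value-of-" + key));
    }

    /** Runs events on the calling thread until none are left. */
    void run() {
        Runnable event;
        while ((event = events.poll()) != null) {
            event.run();
        }
    }

    /** Two requests share one thread; both are sent before either result arrives. */
    static List<String> demo() {
        MiniEventLoop loop = new MiniEventLoop();
        List<String> log = new ArrayList<>();
        for (String key : new String[] {"a", "b"}) {
            loop.execute(() -> {
                log.add("send " + key);
                loop.asyncRead(key, value -> log.add(key + "=" + value));
            });
        }
        loop.run();
        return log;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // [send a, send b, a=value-of-a, b=value-of-b]
    }
}
```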

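```java
// Blocking style: sync() parks the calling thread until the write completes.
// writeAndFlush() is used because write() alone only queues the data, so its
// future would not complete until the channel is flushed.
private void writeQuery(Channel ch) throws InterruptedException {
    ch.writeAndFlush(Unpooled.wrappedBuffer("query".getBytes())).sync();
    logger.info("write finish");
}
```

Here, sync() blocks the thread, which violates the second principle. Netty itself is an asynchronous framework, so let's use a callback instead.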

```java
// Callback style: writeQuery returns immediately; the listener runs once the write completes.
private void writeQuery(Channel ch) {
    ch.writeAndFlush(Unpooled.wrappedBuffer("query".getBytes()))
        .addListener(f -> {
            logger.info("write finish");
        });
}
```

Note that writeQuery returns as soon as the asynchronous write has been issued. Therefore, any code that must logically run after the write has to be placed inside the callback, which means the write must be the last statement of the function. This is the simplest case. If the function has other callers, they must be converted as well, which is a continuation-passing style (CPS) transformation.
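To make CPS conversion concrete, here is a small Java illustration with hypothetical fetchPrice functions (they compute a fake price from the item name and do no real I/O). Converting one function to callback style forces its caller, total, to be converted as well, because the caller can no longer receive a return value:

```java
import java.util.function.IntConsumer;

// Illustrative CPS conversion; fetchPrice and total are hypothetical examples.
class CpsDemo {
    // Direct style: each function returns its result to the caller.
    static int fetchPrice(String item) {
        return item.length() * 10;
    }

    static int total(String a, String b) {
        return fetchPrice(a) + fetchPrice(b);
    }

    // CPS: nothing returns a value; each function receives "the rest of the program" as k.
    static void fetchPriceAsync(String item, IntConsumer k) {
        k.accept(item.length() * 10); // a real async API would call k later, from an I/O thread
    }

    // total() had to be converted too, because its caller can no longer get a return value.
    static void totalAsync(String a, String b, IntConsumer k) {
        fetchPriceAsync(a, pa ->          // continuation of the first call
            fetchPriceAsync(b, pb ->      // continuation of the second call
                k.accept(pa + pb)));      // hand the result to the caller's continuation
    }

    public static void main(String[] args) {
        System.out.println(total("tea", "coffee"));        // 90
        totalAsync("tea", "coffee", System.out::println);  // 90
    }
}
```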

Extracting the rest of the program (its continuation) again and again takes real effort, so let's use Kotlin coroutines to simplify the program.

```kotlin
import io.netty.buffer.Unpooled
import io.netty.channel.Channel
import io.netty.channel.ChannelFutureListener
import kotlin.coroutines.resume
import kotlin.coroutines.resumeWithException
import kotlin.coroutines.suspendCoroutine

// Suspends the calling coroutine until Netty reports that the write has finished.
suspend fun Channel.aWrite(msg: Any): Int =
    suspendCoroutine { cont ->
        writeAndFlush(msg).addListener(ChannelFutureListener { f ->
            if (f.isSuccess) cont.resume(0) else cont.resumeWithException(f.cause())
        })
    }

// Reads like blocking code, but the thread is free while the write is pending.
suspend fun writeQuery(ch: Channel) {
    ch.aWrite(Unpooled.wrappedBuffer("query".toByteArray()))
    logger.info("write finish")
}
```

In this example, suspendCoroutine obtains a reference to the current Continuation, runs the given block, and then suspends the current coroutine. A Continuation represents the rest of the current computation, and Continuation.resume() resumes execution from that point. So the callback only needs to call cont.resume(0) when the write completes: execution returns to the point where suspendCoroutine was called, with its callers (including writeQuery) intact, and the program continues and logs the message. writeQuery thus performs an asynchronous operation while being written in synchronous style. While a coroutine is suspended in suspendCoroutine, the thread runs other ready coroutines, so the thread itself never blocks, and this is where the performance gain comes from.

Seen this way, only two things are needed: a mechanism to save and restore the execution context, and blocking library functions reimplemented with non-blocking calls plus callbacks that suspend and resume the coroutine. With both in place, programs written in synchronous style run asynchronously.
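The second ingredient can be sketched in plain Java. The hypothetical asyncRead below stands in for a non-blocking library call that completes on a separate I/O thread, and read wraps it in an ordinary blocking signature. On a normal JVM, join() parks a real OS thread; the idea of a coroutine runtime is that the same wait would suspend only the current coroutine and free the thread. This illustrates the idea only and is not WISP's actual implementation.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Blocking facade over a callback API (all names here are hypothetical).
class BlockingFacade {
    // Stand-in for an I/O poller thread that completes requests later.
    private static final ScheduledExecutorService IO =
        Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "io-poller");
            t.setDaemon(true); // do not keep the JVM alive
            return t;
        });

    /** Non-blocking call: returns immediately and invokes the callback when "I/O" completes. */
    static void asyncRead(String key, Consumer<String> callback) {
        IO.schedule(() -> callback.accept("value-of-" + key), 10, TimeUnit.MILLISECONDS);
    }

    /** Blocking-style API: the caller sees an ordinary synchronous method. */
    static String read(String key) {
        CompletableFuture<String> done = new CompletableFuture<>();
        asyncRead(key, done::complete); // issue the I/O without blocking
        return done.join();             // wait until the callback resumes us
    }

    public static void main(String[] args) {
        System.out.println(read("a")); // value-of-a
    }
}
```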

In theory, once a library wraps all JDK blocking methods this way, writing asynchronous programs becomes easy. However, the rewritten blocking functions must then be adopted widely by existing programs. The Kotlin support in vert.x, for example, already wraps the JDK blocking methods.

Although vert.x is widely used, it cannot handle legacy code or the lock-based blocking logic in existing code, so it is not the most general solution. The one library every Java program does use is the JDK itself. WISP implements every blocking call in the JDK with non-blocking methods and resumes coroutines on events, which enables coroutine scheduling. This gives users the greatest convenience while preserving compatibility with existing code.

With this approach, threads never need to block, and WISP converts each thread into a coroutine at Thread.start(), which keeps the number of active threads approximately equal to the number of CPUs. Therefore, simply enabling WISP coroutines gives all existing multi-threaded Java code asynchronous-level performance.

For applications built on the traditional programming model, converting them to run asynchronously is costly once you account for logical integrity, convenient exception handling, and compatibility with existing libraries. Here WISP has a clear advantage over asynchronous programming.

Next, let's discuss how to choose a technology for a new application, considering performance only.

A new application usually relies on common protocols and components such as JDBC, Dubbo, and Jedis. If a library uses the blocking mode and does not expose a callback interface, you cannot write an asynchronous application on top of it (short of wrapping it in a thread pool, which puts the cart before the horse). So assume that every library we depend on, such as Dubbo, supports callbacks.

(1) Netty accepts the requests; we call this the entry eventLoop. A received request might be processed in the Netty handler or in a service thread pool for real-time I/O.

(2) Dubbo must be called while the request is processed. We did not write Dubbo, and it has its own Netty eventLoop, so the I/O request is processed in Dubbo's internal eventLoop, which waits for the backend response and then triggers a callback.

(3) After receiving the response, the Dubbo eventLoop invokes the callback either in the eventLoop itself or in a callback thread pool.

(4) The remaining logic continues in the callback thread pool or in the original service thread pool.

(5) The response to the client is ultimately written back by the entry eventLoop.

Because the eventLoops are separate in this encapsulation model, each request is passed between several eventLoops or thread pools even when callbacks are used throughout, and no thread has enough work to stay busy, which causes frequent OS scheduling. This violates the principle that threads never need to block. So although this approach reduces the number of threads and saves memory, its performance benefits are very limited.
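The hand-offs in steps (1) to (5) can be made visible with CompletableFuture. In this sketch, each single-threaded executor is a hypothetical stand-in for the entry eventLoop, the service thread pool, Dubbo's eventLoop, and the callback pool; no real Netty or Dubbo code is involved. Even though nothing blocks, one request visits four different threads, and each hop is a queue hand-off that may require waking a sleeping thread.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical model of one request crossing several separate event loops / pools.
class HopDemo {
    private static ExecutorService named(String name) {
        return Executors.newSingleThreadExecutor(r -> new Thread(r, name));
    }

    /** Runs one request through stages (1)-(5); returns the thread that ran each stage. */
    static List<String> handleOneRequest() {
        ExecutorService entryLoop = named("entry-eventloop");
        ExecutorService bizPool = named("biz-pool");
        ExecutorService dubboLoop = named("dubbo-eventloop");
        ExecutorService callbackPool = named("callback-pool");
        List<String> trace = Collections.synchronizedList(new ArrayList<>());
        Runnable record = () -> trace.add(Thread.currentThread().getName());
        try {
            CompletableFuture.runAsync(record, entryLoop) // (1) accept the request
                .thenRunAsync(record, bizPool)            // (1) service logic
                .thenRunAsync(record, dubboLoop)          // (2)-(3) RPC I/O and response
                .thenRunAsync(record, callbackPool)       // (4) continue in the callback pool
                .thenRunAsync(record, entryLoop)          // (5) write back on the entry loop
                .join();
        } finally {
            for (ExecutorService e : List.of(entryLoop, bizPool, dubboLoop, callbackPool)) {
                e.shutdown();
            }
        }
        return trace;
    }

    public static void main(String[] args) {
        // [entry-eventloop, biz-pool, dubbo-eventloop, callback-pool, entry-eventloop]
        System.out.println(handleOneRequest());
    }
}
```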

For a new application with limited functions (for example, something like NGINX, which handles a small set of protocols such as HTTP and mail), you can write the application from scratch without relying on existing components. For example, a database proxy server written on Netty can let the client connections and the real backend database connections share the same eventLoop. Applications that control their thread model this precisely generally perform well.

Generally, their performance is superior to that of coroutines converted from non-asynchronous programs.

However, coroutines still have an advantage: WISP correctly switches coroutines when code blocks on the synchronized blocks that are ubiquitous in the JDK.

Given this background, note that WISP and other coroutine implementations suit I/O-intensive Java programs. In compute-bound programs, threads rarely switch: they simply run on the CPU with little intervention from the OS, which is typical of offline or scientific computing.

Online applications usually make blocking calls to Remote Procedure Call (RPC) services, databases, caches, and message queues, so WISP can improve their performance.

The earliest version, WISP 1, was deeply customized for these scenarios. For example, requests received by HSF were automatically processed in coroutines instead of a thread pool, and the number of I/O threads was set to 1, with epoll_wait(1ms) used in place of selector.wakeup(). As a result, a common question was whether WISP suits only Alibaba workloads.

To answer it, we used the TechEmpower benchmark suite, the most authoritative benchmark in the web field. We chose common blocking tests such as com.sun.net.httpserver and Servlet (their performance is not the best, but they are closest to what ordinary users run and leave some room for improvement) to verify the performance of WISP 2 with common open-source components. The results show that under high load, QPS and RT improve by 10% to 20%.
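For reference, this is the style of blocking code that a com.sun.net.httpserver test exercises: a handler that reads and writes synchronously on a pooled thread. The /plaintext path, the pool size of 4, and the class name are our own choices, not the TechEmpower implementation. Given the compatibility claim above, code like this should need no changes to run with -XX:+UseWisp2; on a standard JDK it simply runs on ordinary threads.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Plain blocking server on the JDK's built-in com.sun.net.httpserver:
// each request occupies a pool thread while it writes the response.
class BlockingHello {
    static HttpServer start(ExecutorService pool) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/plaintext", exchange -> {
            byte[] body = "Hello, World!".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "text/plain");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body); // blocking write on a pooled thread
            }
        });
        server.setExecutor(pool);
        server.start();
        return server;
    }

    /** Starts the server, performs one blocking GET, stops the server, and returns the body. */
    static String roundTrip() {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        HttpServer server = null;
        try {
            server = start(pool);
            int port = server.getAddress().getPort(); // ephemeral port chosen at bind time
            HttpURLConnection conn = (HttpURLConnection)
                URI.create("http://127.0.0.1:" + port + "/plaintext").toURL().openConnection();
            try (InputStream in = conn.getInputStream()) {
                return new String(in.readAllBytes(), StandardCharsets.UTF_8);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            if (server != null) {
                server.stop(0);
            }
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip()); // Hello, World!
    }
}
```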

Project Loom is a standard coroutine implementation on OpenJDK. So should Java developers use Project Loom? Let's first compare WISP and Project Loom.

(1) Project Loom serializes the context and then saves it, which saves memory but reduces switching efficiency.

(2) Like Go, WISP uses an independent stack for each coroutine, so switching only requires switching registers. This is highly efficient but consumes more memory.

(3) Project Loom does not support ObjectMonitor, but WISP does. Under Loom, synchronized/Object.wait() occupies the thread, so the CPU cannot be fully utilized.

(4) WISP supports switching when a native frame (for example, from reflection) is on the stack, but Project Loom does not.

In our view, it will take at least two years for Project Loom to become stable and feature-complete. WISP performs well, provides more comprehensive functions, and is a much more mature product. As an Oracle project, Project Loom may be included in the Java standard, and we are actively contributing some WISP features to the community.

In addition, WISP is currently fully compatible with the Fiber API of Project Loom. If your program is based on the Fiber API, we ensure that the code behaves exactly the same on Project Loom and WISP.

Coroutines suit I/O-intensive scenarios, in which a task typically runs for a short time, blocks on I/O, and is then rescheduled. In this case, as long as the system's CPUs are not saturated, a first-in-first-out (FIFO) scheduling policy basically ensures fair scheduling. In addition, a lock-free scheduler implementation greatly reduces scheduling overhead compared with the kernel.
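A toy version of such a FIFO run queue, assuming each task runs one short burst and then waits for I/O that, for simplicity, completes immediately. FifoScheduler and Task are hypothetical names. ConcurrentLinkedQueue is a non-blocking, CAS-based queue, which hints at how a lock-free scheduler avoids kernel involvement; the sketch itself runs on a single thread so its output is deterministic. The round-robin order shows why FIFO is fair enough for short bursts.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical FIFO run queue for short, I/O-bound tasks.
class FifoScheduler {
    static final class Task {
        final String name;
        int burstsLeft; // how many more bursts the task runs before finishing

        Task(String name, int burstsLeft) {
            this.name = name;
            this.burstsLeft = burstsLeft;
        }
    }

    /** Runs every task to completion; returns the order in which bursts ran. */
    static List<String> run(int tasks, int burstsPerTask) {
        // Lock-free queue: several carrier threads could poll it without taking a lock.
        ConcurrentLinkedQueue<Task> runQueue = new ConcurrentLinkedQueue<>();
        for (int i = 0; i < tasks; i++) {
            runQueue.offer(new Task("T" + i, burstsPerTask));
        }
        List<String> order = new ArrayList<>();
        Task task;
        while ((task = runQueue.poll()) != null) {
            order.add(task.name);     // run one short burst, then the task "blocks" on I/O
            if (--task.burstsLeft > 0) {
                runQueue.offer(task); // I/O done: the task rejoins at the tail
            }
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(run(3, 2)); // [T0, T1, T2, T0, T1, T2]
    }
}
```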

ForkJoinPool is excellent, but it does not suit WISP 2. To see why, think of waking up a coroutine as an Executor.execute() call. ForkJoinPool supports task stealing, but execute() places the task on a random thread or on a thread in the thread queue (depending on whether asynchronous mode is used), so the thread on which a woken coroutine runs is also random.

At WISP's lower layer, stealing is expensive, so we need affinity: a coroutine should stay bound to the same thread as far as possible, and work-stealing should occur only when a thread is busy. We implemented our own workStealingPool to support this. Its scheduling overhead and latency are basically comparable to those of ForkJoinPool.
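The affinity idea can be sketched as a scheduler with one queue per carrier thread: a woken coroutine returns to the queue of the carrier that last ran it, and a carrier steals only when its own queue is empty and another carrier has a backlog. AffinityScheduler, its stealing threshold, and the per-carrier deques are assumptions made for illustration; this is not WISP's workStealingPool.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical affinity-aware scheduler: one run queue per carrier thread.
class AffinityScheduler {
    static final class Coroutine {
        final String name;
        int home; // carrier that last ran this coroutine

        Coroutine(String name, int home) {
            this.name = name;
            this.home = home;
        }
    }

    private final Deque<Coroutine>[] queues;

    @SuppressWarnings("unchecked")
    AffinityScheduler(int carriers) {
        queues = new Deque[carriers];
        for (int i = 0; i < carriers; i++) {
            queues[i] = new ArrayDeque<>();
        }
    }

    /** Wake-up goes to the coroutine's home carrier instead of a random thread. */
    void wakeUp(Coroutine c) {
        queues[c.home].addLast(c);
    }

    /** Next coroutine for a carrier: its own queue first, stealing only as a fallback. */
    Coroutine next(int carrier) {
        Coroutine c = queues[carrier].pollFirst();
        if (c != null) {
            return c;
        }
        for (int victim = 0; victim < queues.length; victim++) {
            // Steal only from a carrier with a backlog, so a lightly loaded one keeps its work.
            if (victim != carrier && queues[victim].size() > 1) {
                c = queues[victim].pollLast();
                c.home = carrier; // affinity follows the stolen coroutine
                return c;
            }
        }
        return null; // nothing to run: the carrier would park
    }

    public static void main(String[] args) {
        AffinityScheduler s = new AffinityScheduler(2);
        Coroutine a = new Coroutine("a", 0);
        Coroutine b = new Coroutine("b", 0);
        Coroutine c = new Coroutine("c", 1);
        s.wakeUp(a);
        s.wakeUp(b);
        s.wakeUp(c);
        System.out.println(s.next(1).name); // c (own queue)
        System.out.println(s.next(1).name); // b (stolen from busy carrier 0)
        System.out.println(s.next(0).name); // a (stayed on its home carrier)
    }
}
```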

To support a mechanism similar to Go's M and P, we need to force a thread that is blocked inside a coroutine out of the scheduler, and such features cannot be added to ForkJoinPool.

The Reactive programming model has been widely accepted and is an important technology trend, but it is difficult to avoid blocking in Java code completely. We believe coroutines can serve as the underlying worker mechanism for Reactive programming. This retains the Reactive programming model while preventing blocking user code from blocking the entire system.

This idea comes from a recent talk by Ron Pressler, the developer of Quasar and Project Loom, in which he clearly pointed out that the callback model poses many challenges to current programming models.

The four-year R&D process of WISP is divided into the following phases:

(1) WISP 1 did not support objectMonitor or parallel class loading, but could run some simple applications.

(2) WISP 1 then supported objectMonitor and was deployed with core e-commerce services, but it did not support workStealing. Therefore, only some short tasks could be converted to coroutines (otherwise the load would be uneven). Netty threads remained threads and required complex, tricky configuration.

(3) WISP 2 supports workStealing and therefore converts all threads into coroutines, which also solves the Netty problems above.

Currently, blocking JNI calls are not supported. WISP inserts hooks into the JDK so that it can schedule before a call blocks, but hooks cannot be inserted into custom JNI calls.

The most common such case is Netty's EpollEventLoop, which uses JNI epoll.

(1) This feature is enabled by default in the bolt components of Ant Financial and can be disabled with -Dbolt.netty.epoll.switch=false. Disabling it has little impact on performance.

(2) Use -Dio.netty.noUnsafe=true to disable this feature, though other Unsafe-based functions may be affected.

(3) For Netty 4.1.25 and later, use -Dio.netty.transport.noNative=true to disable only JNI epoll. We recommend this method.
