By Umesh Kumhar, Alibaba Cloud Community Blog author.

Argo Workflows is an open-source, container-native workflow engine for orchestrating sequential and parallel jobs on a Kubernetes cluster. Container-native means that each step of your workflow runs as a container. Argo supports multi-step workflows that run as a sequence of tasks, and it can also capture the dependencies between tasks, along with the artifacts passed between them, as a directed acyclic graph (DAG).

In this tutorial, you will learn how to install and set up the Argo Workflow engine in your Kubernetes cluster. You will install and configure Argo Workflow, set up an artifact repository, configure the controller, and get to know the Argo Workflow user interface.

Before you begin, make sure that you have a Kubernetes cluster and that kubectl is configured to access it through ~/.kube/config.

Argo comes with three main interfaces that you can use to interact with it: the Argo CLI, kubectl, and the Argo UI.

Below I'll show you how you can install each of these interfaces so that you can use any of them to interact with Argo.

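You can download the Argo command-line interface (CLI) from the Argo releases page on GitHub. The CLI is available for Linux, Windows, and macOS (Darwin), and on macOS you can also install it with Homebrew. If your system runs Linux, as mine does, you can download version 2.2.1, the version used throughout this tutorial, with the following commands (run them as root or with sudo, because they write to /usr/bin):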

curl -sSL -o /usr/bin/argo https://github.com/argoproj/argo/releases/download/v2.2.1/argo-linux-amd64

chmod +x /usr/bin/argo

To set up the Argo controller in a Kubernetes cluster, create a separate namespace for the Argo components and install them into it with the following commands:

kubectl create namespace argo

kubectl apply -n argo -f https://raw.githubusercontent.com/argoproj/argo/v2.2.1/manifests/install.yaml

Then, to verify that these components were installed successfully, check the status of the controller and UI pods and services with the commands below:

kubectl get pods -n argo

kubectl get services -n argo

Now that all of these interfaces are installed, let's continue with the rest of the setup process, which mainly consists of some basic configuration.
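The get commands above show only a snapshot, so the pods may still be starting when you run them. If you want to wait until the installation is actually ready, a small shell helper like the one below can block on both deployments. This is a sketch under assumptions: it assumes the v2.2.1 install manifest names the deployments workflow-controller and argo-ui (argo-ui matches the port-forward command used later in this tutorial), and the 120-second timeout and the function name wait_for_argo are arbitrary choices.

```shell
# Hypothetical helper: block until the Argo deployments finish rolling out.
# Assumes deployments named "workflow-controller" and "argo-ui" in the
# namespace given by ARGO_NAMESPACE (defaults to "argo").
wait_for_argo() {
  for deployment in workflow-controller argo-ui; do
    # "kubectl rollout status" exits non-zero if the rollout does not
    # complete within the timeout; stop at the first failure.
    kubectl rollout status "deployment/${deployment}" \
      -n "${ARGO_NAMESPACE:-argo}" --timeout=120s || return 1
  done
}
```

After pasting the function into your shell, run wait_for_argo; it returns a non-zero status if either rollout does not finish in time.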

By default, the default service account of a namespace has only minimal access. For this tutorial, however, we want the default account to have more privileges so that we can demonstrate Argo Workflow features such as artifacts, outputs, and access to secrets.

For the purposes of this tutorial, grant admin privileges to the default service account of the default namespace by running the following command:

kubectl create rolebinding namespace-admin --clusterrole=admin --serviceaccount=default:default

Next, let's look at how to manage Argo workflows. There are two methods, and we will look at both below.
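Before moving on, you can confirm that the role binding took effect by asking the API server what the default service account is allowed to do. The helper below is a hypothetical convenience wrapper (the name check_default_sa is made up for this sketch) around kubectl auth can-i, which impersonates the service account through the --as flag. It assumes your own user is allowed to impersonate service accounts, as a cluster admin is.

```shell
# Hypothetical helper: ask whether default:default may perform an action.
# $1 = verb (for example "get"), $2 = resource (for example "secrets").
# kubectl prints "yes" or "no".
check_default_sa() {
  kubectl auth can-i "$1" "$2" \
    --as=system:serviceaccount:default:default -n default
}
```

For example, check_default_sa get secrets should print yes once the binding is in place.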

Argo Workflow is implemented as a Kubernetes CRD (Custom Resource Definition), which means that it integrates natively with other Kubernetes resources such as ConfigMaps, Secrets, persistent volumes, and role-based access control (RBAC). It also means that Argo workflows can be managed with kubectl. Run the following kubectl commands to submit the hello-world Argo workflow and inspect it, replacing hello-world-xxx and hello-world-zzz with the generated workflow and pod names shown in the output of the previous commands:

kubectl create -f https://raw.githubusercontent.com/argoproj/argo/master/examples/hello-world.yaml

kubectl get wf

kubectl get wf hello-world-xxx

kubectl get pods --selector=workflows.argoproj.io/workflow=hello-world-xxx

kubectl logs hello-world-zzz -c main

After you run the hello-world example, you can see its output in the logs of the workflow pod.
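kubectl get wf only shows a snapshot of the workflow's status. To block until a workflow finishes, you can poll the phase recorded in the workflow's status, as in the sketch below. It assumes the workflow resource reports its state in status.phase, with Succeeded, Failed, and Error as terminal values; the function name wait_for_workflow and the 5-second default interval are illustrative choices, not part of Argo.

```shell
# Hypothetical helper: poll a workflow until it reaches a terminal phase.
# $1 = workflow name (for example hello-world-xxx)
# $2 = poll interval in seconds (default 5)
# Prints the final phase; returns 0 on Succeeded, 1 on Failed/Error,
# and 2 if kubectl cannot read the workflow at all.
wait_for_workflow() {
  local name="$1" interval="${2:-5}" phase
  while :; do
    phase=$(kubectl get wf "$name" -o jsonpath='{.status.phase}') || return 2
    case "$phase" in
      Succeeded) echo "$phase"; return 0 ;;
      Failed|Error) echo "$phase"; return 1 ;;
    esac
    # Empty, Pending, or Running: the workflow has not finished yet.
    sleep "$interval"
  done
}
```

For example, wait_for_workflow hello-world-xxx prints the final phase once the workflow completes and exits with a non-zero status unless it succeeded.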

The Argo CLI offers several features that kubectl does not provide directly, such as YAML validation, parameter passing, retries and resubmits, suspend and resume, and workflow visualization. Run the following Argo commands to submit the hello-world workflow and get its details and logs:

argo submit --watch https://raw.githubusercontent.com/argoproj/argo/master/examples/hello-world.yaml

argo list

argo get xxx-workflow-name-xxx

argo logs xxx-pod-name-xxx

Again, the output of the hello-world example appears in the logs of the workflow pod. To get a better idea of how Argo works, you can also run the following examples:

argo submit --watch https://raw.githubusercontent.com/argoproj/argo/master/examples/coinflip.yaml

argo submit --watch https://raw.githubusercontent.com/argoproj/argo/master/examples/loops-maps.yaml

Argo supports both Artifactory and MinIO as artifact repositories. In this tutorial, we use MinIO because it is open-source object storage and is portable. Artifact repositories are useful for storing logs and final exports, and for reusing them in later stages of an Argo workflow.

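Install MinIO into the Kubernetes cluster using the following Helm command. It uses Helm 2 syntax; with Helm 3, drop --name and pass the release name as the first argument instead (helm install argo-artifacts stable/minio ...).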

helm install stable/minio \
  --name argo-artifacts \
  --set service.type=LoadBalancer \
  --set defaultBucket.enabled=true \
  --set defaultBucket.name=my-bucket \
  --set persistence.enabled=false

When MinIO is installed using the Helm chart, it uses the following hard-coded default credentials, which you use to log in to the MinIO user interface:

AccessKey: AKIAIOSFODNN7EXAMPLE

SecretKey: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
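Because these are chart defaults, you may prefer to read the values the chart actually stored in the argo-artifacts secret, which is the same secret the workflow controller is configured to use later in this tutorial. The sketch below assumes the release was installed into the default namespace and that the secret keys are accesskey and secretkey, as in the ConfigMap shown later; minio_credential is a hypothetical helper name.

```shell
# Hypothetical helper: print one credential from the MinIO chart's secret.
# $1 = key name, "accesskey" or "secretkey".
# Secret values are stored base64-encoded, so decode before printing.
minio_credential() {
  kubectl get secret argo-artifacts -n default \
    -o jsonpath="{.data.$1}" | base64 --decode
}
```

Run minio_credential accesskey and minio_credential secretkey to print the two values.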

To get the external IP address of the MinIO user interface, run the following command:

kubectl get service argo-artifacts -o wide

Next, log in to the MinIO user interface in a web browser, using the external IP address from the command above and port 9000 (the MinIO service port that the ConfigMap below also uses). After you log in, make sure that a bucket named my-bucket exists. The defaultBucket settings above should already have created it; if not, create it from the MinIO interface.
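If you prefer not to copy the address by hand, the following sketch builds the MinIO UI URL from the service's load balancer status. It assumes the service is in the default namespace and listens on port 9000, and it falls back to the hostname field because some cloud load balancers publish a DNS name instead of an IP address; minio_url is a hypothetical helper name.

```shell
# Hypothetical helper: print the MinIO UI URL for the argo-artifacts service.
# Returns 1 if no external address has been assigned yet.
minio_url() {
  local host
  host=$(kubectl get service argo-artifacts -n default \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  if [ -z "$host" ]; then
    # Some providers publish a hostname instead of an IP address.
    host=$(kubectl get service argo-artifacts -n default \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
  fi
  [ -n "$host" ] || return 1
  echo "http://${host}:9000"
}
```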

Now you have a MinIO artifact repository in which you can store your workflow artifacts. Next, configure MinIO as the artifact repository for Argo Workflow, as outlined in the following steps.

We need to modify the Argo workflow controller ConfigMap so that it points to the MinIO service (argo-artifacts) and its secret (argo-artifacts) as the artifact repository. Keep in mind that the MinIO secret is read from the namespace in which the workflow pods run. After you save the changes to the ConfigMap, you can run workflows that save artifacts to the MinIO bucket.

To make the change, run the command below and add the artifactRepository settings under data.config:

kubectl edit cm -n argo workflow-controller-configmap

...
data:
  config: |
    artifactRepository:
      s3:
        bucket: my-bucket
        endpoint: argo-artifacts.default:9000
        insecure: true
        # accessKeySecret and secretKeySecret are secret selectors.
        # They reference the k8s secret named 'argo-artifacts',
        # which was created during the MinIO Helm install. The keys
        # 'accesskey' and 'secretkey' inside that secret are where the
        # actual MinIO credentials are stored.
        accessKeySecret:
          name: argo-artifacts
          key: accesskey
        secretKeySecret:
          name: argo-artifacts
          key: secretkey

Run the command below to run the demo workflow that uses the MinIO bucket to store artifacts:

argo submit https://raw.githubusercontent.com/argoproj/argo/master/examples/artifact-passing.yaml

or

kubectl create -f https://raw.githubusercontent.com/argoproj/argo/master/examples/artifact-passing.yaml

Afterward, you can log in to the MinIO UI and check the artifacts generated in my-bucket.
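If no artifacts appear in my-bucket, one thing to check is whether the controller ConfigMap actually contains the artifact repository settings, for example because the edit was not saved. The sketch below prints the config key of workflow-controller-configmap and reports whether it contains an artifactRepository section. It only checks that the section is present, not that the S3 settings are valid, and check_artifact_repo_config is a hypothetical name.

```shell
# Hypothetical helper: show the controller config and check for the
# artifactRepository section added with "kubectl edit" above.
# Returns 0 if present, 1 if missing, 2 if the ConfigMap cannot be read.
check_artifact_repo_config() {
  local config
  config=$(kubectl get configmap workflow-controller-configmap -n argo \
    -o jsonpath='{.data.config}') || return 2
  printf '%s\n' "$config"
  case "$config" in
    *artifactRepository:*) return 0 ;;
    *) echo "artifactRepository is not configured" >&2; return 1 ;;
  esac
}
```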

The Argo user interface is designed to display all of your executed workflows. It presents each workflow as a flow diagram so that you can visualize it more easily. From there, you can monitor every step of your workflow and fetch its logs, which makes troubleshooting and understanding the workflow easier.

Note: The Argo user interface does not provide a way to create workflows on its own. Rather, it is used to visualize workflows that have already been executed.

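We also installed the Argo UI along with the controller. By default, the Argo UI service is not exposed outside the cluster; it uses the ClusterIP service type. To access the Argo UI, you can use one of the following three methods: run kubectl proxy, port-forward to the argo-ui deployment, or expose the service as a LoadBalancer.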

kubectl proxy

After running the command, you can access the Argo UI in your browser at http://127.0.0.1:8001/api/v1/namespaces/argo/services/argo-ui/proxy/

Alternatively, forward a local port to the Argo UI deployment:

kubectl -n argo port-forward deployment/argo-ui 8001:8001

You can then access the Argo UI in your browser at http://127.0.0.1:8001

As a third option, expose the service through a load balancer:

kubectl patch svc argo-ui -n argo -p '{"spec": {"type": "LoadBalancer"}}'

It may take a while for an external endpoint to be assigned to the Argo UI service. Once it has been assigned, run the following command to get the endpoint:

kubectl get svc argo-ui -n argo

Note: If you are running an on-premises Kubernetes cluster, the LoadBalancer type will not work unless your cluster provides a load balancer implementation. In that case, use the NodePort service type instead.
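On such a cluster, the NodePort approach can be sketched as below. It patches the service type in the same way as the LoadBalancer command above and then reads the node port that Kubernetes allocated. The node IP address is an input you have to supply yourself (for example from kubectl get nodes -o wide), the function name expose_argo_ui_nodeport is hypothetical, and it assumes the argo-ui service exposes a single port.

```shell
# Hypothetical helper: switch argo-ui to NodePort and print the UI URL.
# $1 = IP address of a cluster node that your browser can reach.
expose_argo_ui_nodeport() {
  local node_ip="$1" node_port
  kubectl patch svc argo-ui -n argo \
    -p '{"spec": {"type": "NodePort"}}' >/dev/null || return 1
  # Kubernetes allocates the node port; read it back from the service.
  node_port=$(kubectl get svc argo-ui -n argo \
    -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  [ -n "$node_port" ] || return 1
  echo "http://${node_ip}:${node_port}"
}
```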

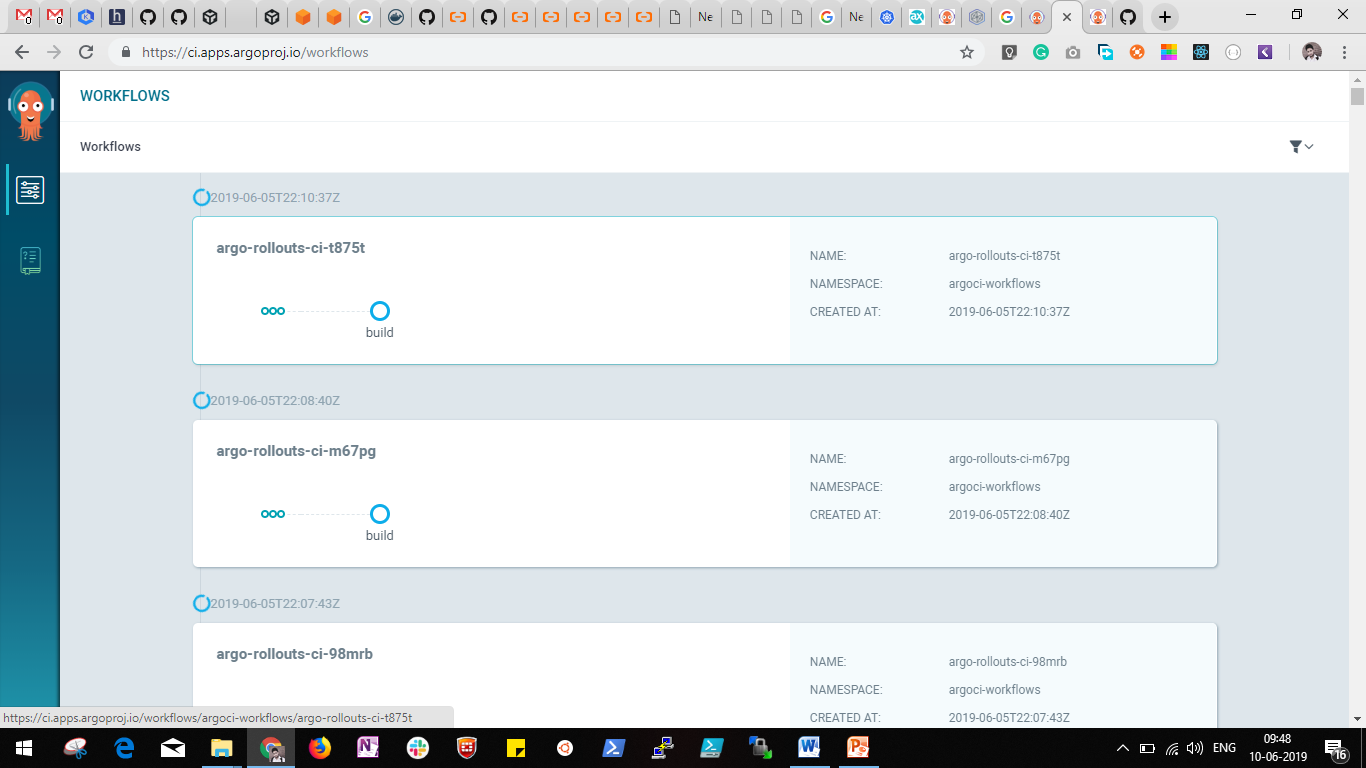

The Argo user interface lists all of the workflows that have been executed.

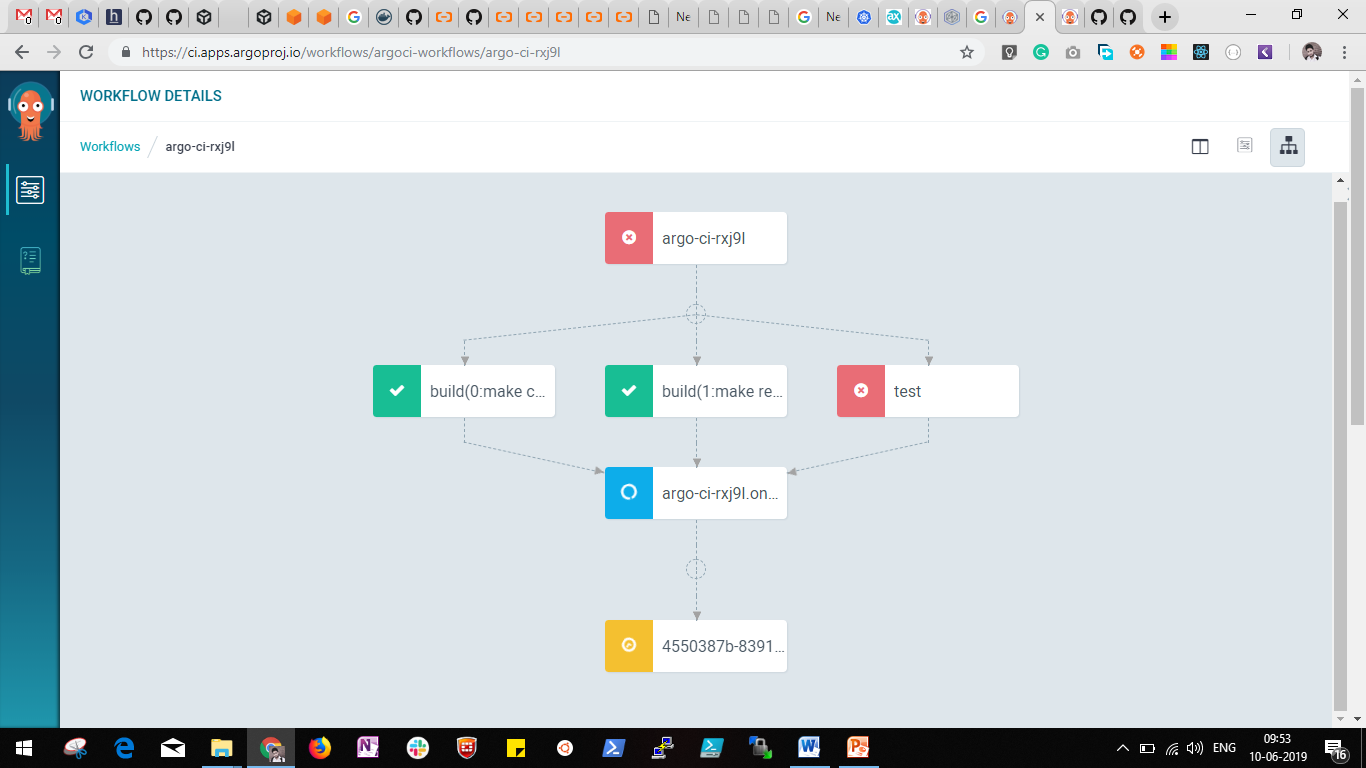

Next, select any executed workflow. If it has multiple steps, you will see its flow graph.

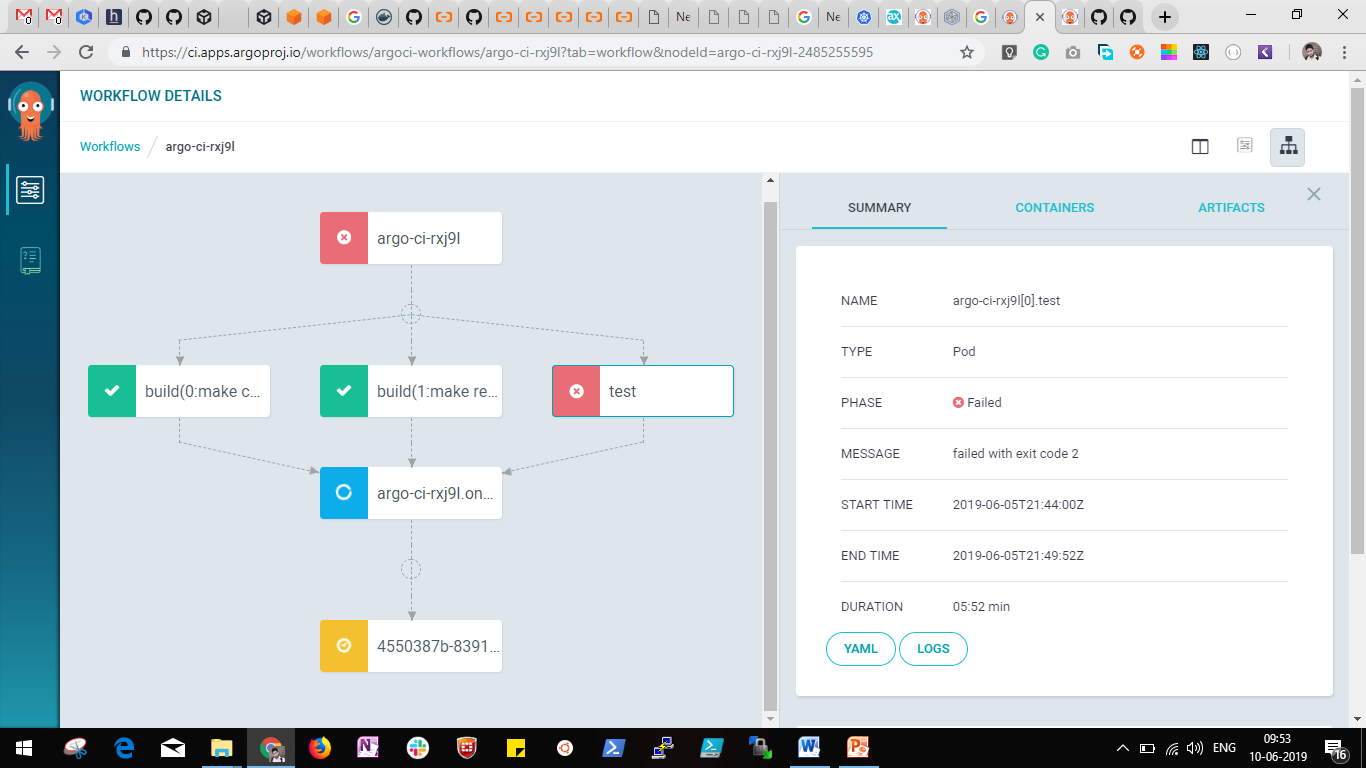

Here you can visualize the dependencies and relationships between steps. You can then select any step to explore its details or fetch its logs.

The Argo user interface has many other features that you can explore on your own.

In this tutorial, you saw how to set up the Argo Workflow engine in your Kubernetes cluster, configure it, and use its main interfaces. Note that this setup is intended for learning and development purposes. For production use, I recommend using role-based access control (RBAC) and RoleBindings to limit the access granted to the default service account, for security reasons.
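As a first step in that direction, once you are done experimenting you can remove the broad admin binding created at the beginning of this tutorial. The sketch below assumes the binding lives in the default namespace (the rolebinding command did not pass -n, so it used your current namespace) and then re-checks one permission through impersonation; revoke_tutorial_admin is a hypothetical name.

```shell
# Hypothetical helper: delete the tutorial's admin binding for
# default:default and re-check one permission afterwards.
revoke_tutorial_admin() {
  kubectl delete rolebinding namespace-admin -n default || return 1
  # Prints "yes" or "no". kubectl may exit non-zero when the answer is
  # "no", which is the expected result here, so that status is ignored.
  kubectl auth can-i delete secrets \
    --as=system:serviceaccount:default:default -n default || true
}
```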
