By Mingyi

Image generated by DALL-E

From GPT-3 to ChatGPT and from GPT-4 to GitHub Copilot, fine-tuning plays a key role in these developments. But what exactly is fine-tuning? What problems can it solve? What is low-rank adaptation (LoRA)? How is fine-tuning performed?

This article answers these questions and provides code examples demonstrating how to use LoRA for fine-tuning. The technical barrier for fine-tuning is relatively low. When working with models containing 10 billion parameters or fewer, the hardware requirements remain manageable, allowing even non-specialists to experiment with their own fine-tuned models.

Beyond the aforementioned products such as ChatGPT and GitHub Copilot, fine-tuning offers many other possibilities. It can be used to orchestrate APIs for specific tasks (as described in the paper GPT4Tools: Teaching Large Language Models to Use Tools via Self-Instruction), mimic specific speaking styles, or enable a model to support new languages.

Fine-tuning involves further training a pre-trained model (typically a large-scale model) on new datasets to tailor it for specific application scenarios. This article introduces the concepts and processes of fine-tuning, followed by an analysis of the fine-tuning code.

GPT-3 was trained on large amounts of web data, but it is not well-suited for conversational tasks. For example, if you ask GPT-3 the question "What is the capital of China?", it might respond with "What is the capital of the United States?".

This issue arises because both sentences frequently appear together in the training data, and similar associations may be repeated throughout the dataset. However, such outputs are unsuitable for ChatGPT. A multi-stage optimization process is required to make ChatGPT proficient in handling conversations and better equipped to meet users' needs.

The fine-tuning process for GPT-3 involves several key steps:

In the following sections, we will explore the benefits of fine-tuning in detail.

1. Enhancing domain-specific capabilities: Fine-tuning sharpens a pre-trained model's capabilities within a specific domain. For example, while a general-purpose model may have some sentiment analysis capabilities, fine-tuning can enhance its performance in this area.

2. Incorporating new knowledge: Fine-tuning allows the model to learn new information. For instance, the model can be trained to answer questions such as "Who are you?" or "Who created you?" accurately, providing consistent and predictable answers.

1. Reducing hallucinations: Fine-tuning reduces or eliminates instances where the model generates incorrect or unrelated information.

2. Increasing consistency: Fine-tuning enhances the stability of the model's responses. Even at a higher temperature, which allows for more creativity and diversity in the responses, the quality of the varied outputs remains consistently high rather than fluctuating between good and bad.

3. Filtering out unnecessary information: For example, the model can be fine-tuned to politely decline responses to religious questions, which is useful for safety and regulatory compliance.

4. Reducing latency: Fine-tuning optimizes smaller models to achieve the desired performance, reducing response times and improving user experience.

1. Local or virtual private cloud deployment: Models can be deployed on local servers or virtual private clouds (VPCs) to retain full control over data and operations.

2. Preventing data leakage: This is critical for companies whose competitive edge relies on proprietary data accumulated over time.

3. Controlling security risks: For highly confidential data, fine-tuning allows companies to build custom security environments instead of relying on third-party inference services.

1. High costs of building large models from scratch: For most companies, training a large model from scratch is too expensive. For instance, Meta's LLaMA 3.1, a 405-billion-parameter model, required 24,000 H100 GPUs running for 54 days. Fine-tuning open source models using quantization (precision reduction) can significantly lower these barriers while delivering satisfactory results.

2. Lowering per-request costs: Fine-tuned models typically contain fewer parameters, making them cheaper to operate while maintaining comparable performance to larger models.

3. Greater control: By adjusting the number of parameters and resource usage, fine-tuning provides flexibility in balancing model performance, response times, and throughput, offering opportunities for cost optimization.

According to public information from OpenAI, the primary improvements in ChatGPT were achieved through fine-tuning and reinforcement learning from human feedback (RLHF). The general development process from GPT-3 to ChatGPT is as follows: pre-training → supervised fine-tuning (SFT) → RLHF → model pruning and optimization. What distinguishes reinforcement learning (RL) from fine-tuning?

In simple terms, fine-tuning during ChatGPT's development enables the model to generate more natural and relevant conversations. RL, on the other hand, leverages human feedback to improve the quality of these conversations.

RLHF is a specific application of RL, which is a branch of machine learning.

In RL, a model learns decision-making strategies by interacting with its environment. The model receives rewards or penalties based on its choices, with the goal of maximizing cumulative rewards over time. In natural language processing (NLP), RL can be used to optimize model outputs, ensuring they align more closely with the desired goals.

SFT (supervised fine-tuning) is a type of fine-tuning that trains on labeled examples. Depending on whether labeled data is available, fine-tuning can also take the form of unsupervised fine-tuning, where the model is trained further on new data without explicit labels, or self-supervised fine-tuning, where pseudo-labels are created from the input data (for example, by masking parts of the data or predicting the context) and used for fine-tuning.
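To make the idea of pseudo-labels concrete, here is a minimal sketch of masking in plain Python. The function name `make_masked_example`, the `[MASK]` placeholder, and the masking probability are illustrative assumptions, not part of any specific training pipeline.

```python
import random

MASK = "[MASK]"

def make_masked_example(tokens, mask_prob=0.15, seed=0):
    """Return (inputs, labels) where masked positions keep the original token as label."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)   # hide the token from the model
            labels.append(tok)    # the hidden token becomes the pseudo-label
        else:
            inputs.append(tok)
            labels.append(None)   # no loss is computed at unmasked positions
    return inputs, labels

tokens = "fine tuning adapts a pre trained model to a new task".split()
inputs, labels = make_masked_example(tokens, mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```

No human annotation is involved: the labels come entirely from the text itself, which is what distinguishes this setup from SFT.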

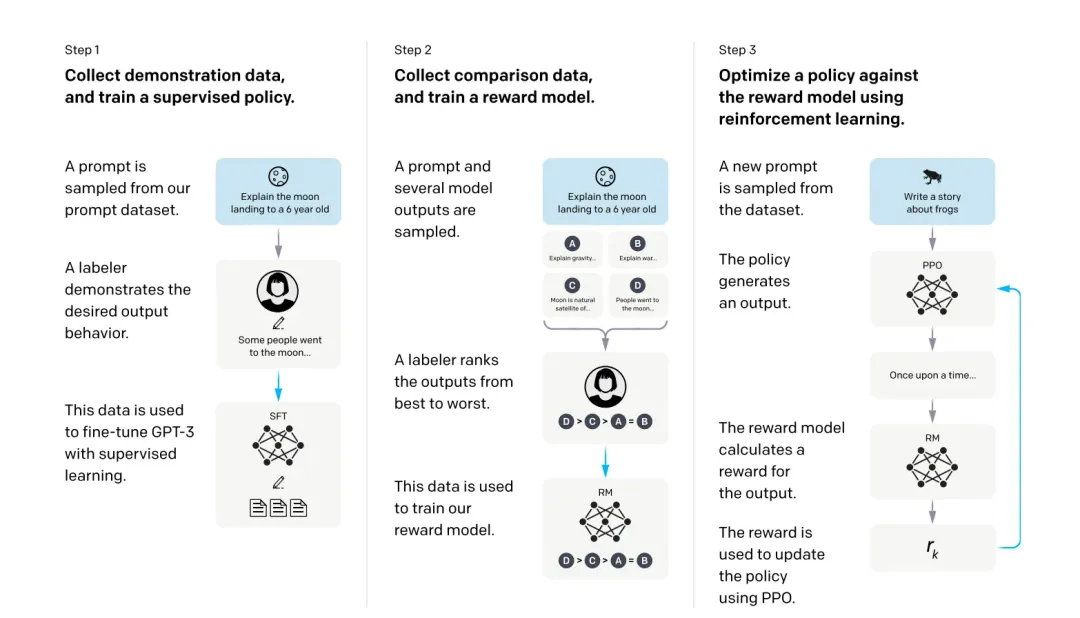

Image from the OpenAI paper: Training Language Models to Follow Instructions with Human Feedback

OpenAI utilized RLHF to train ChatGPT. The typical RLHF process includes the following steps:

1. Initial Model Generation: The base model is trained using supervised learning (Step 1 as shown in the image above) to generate reasonable conversational content.

2. Human Feedback: Human reviewers interact with the model, evaluate its responses, and rank them based on quality. This corresponds to the "A labeler ranks the outputs" step in Step 2 as shown in the image above.

3. Training the Reward Model: A reward model (as mentioned in Step 2 in the image above) is trained using human feedback data to score the model's outputs based on their quality.

4. Policy Optimization: RL is used to optimize the model to produce higher-scoring outputs (Step 3 as shown in the image above).
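The reward model in step 3 is commonly trained on pairs of ranked responses. The sketch below shows a pairwise ranking loss of the form described in the OpenAI paper, -log(sigmoid(score_preferred - score_rejected)), in plain Python. The scores are hypothetical numbers standing in for reward model outputs.

```python
import math

def pairwise_ranking_loss(score_preferred, score_rejected):
    """-log(sigmoid(diff)): small when the preferred response scores higher."""
    diff = score_preferred - score_rejected
    # -log(sigmoid(diff)) is algebraically equal to log(1 + exp(-diff))
    return math.log(1.0 + math.exp(-diff))

good_order = pairwise_ranking_loss(2.0, -1.0)   # reward model agrees with the labeler
bad_order = pairwise_ranking_loss(-1.0, 2.0)    # reward model disagrees with the labeler
tie = pairwise_ranking_loss(0.5, 0.5)           # reward model cannot tell them apart

print(good_order, tie, bad_order)
```

Minimizing this loss pushes the reward model to score the response the labeler preferred above the one the labeler rejected.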

Compared to fine-tuning, RL has a higher technical barrier and requires more effort in data preparation, training costs, and time. Additionally, RL is characterized by a high degree of uncertainty regarding the final performance. RL often requires extensive data labeling to train the reward model, followed by numerous trials and iterations to optimize the language model.

In practice, although reinforcement learning can improve the model's performance on specific tasks, SFT is often more effective for specialized tasks. RL is expensive and highly dependent on labeled data, which limits its widespread use compared to SFT.

ChatGPT is designed as a general-purpose conversational product. However, for specific industries or fields, similar products need to be more focused. For example, we often hear about large medical models, legal models, or financial security models. Many of these specialized models are developed by continued pre-training of the base model.

Continued pre-training further trains a pre-trained model on domain-specific data to improve its understanding and performance in that field. The data used for continued pre-training is typically unlabeled and relatively large in scale.

In contrast, fine-tuning focuses on optimizing the model for specific tasks. It is generally applied to smaller datasets and aims to achieve the best possible performance on a given task.

Both approaches can be combined. For example, in the security domain, a model needs to tag specific fraud patterns. In this case, the process will be like this:

1. General pre-training: Train the base model on large-scale web data (at the company level).

2. Continued pre-training: Use domain-specific data for further pre-training (at the industry or departmental level).

3. Fine-tuning: Use task-specific data to fine-tune the model for specific needs (handled by individual teams or departments within the organization).

Fine-tuning improves a model's performance on specific tasks. Compared to pre-training and RL, fine-tuning is used more frequently in production environments. With a basic understanding of these concepts, even non-technical personnel can perform fine-tuning. The next chapter will focus on the steps involved in the fine-tuning process.

Fine-tuning is based on an already-trained neural network model, adjusting its parameters slightly to better adapt it to specific tasks or data. By continuing to train some or all layers of the model on new, smaller datasets, the model can optimize itself for new tasks while retaining its original knowledge, improving its performance in specialized fields.

Fine-tuning can be categorized based on the scope of the adjustments:

Full model fine-tuning: This approach updates all parameters of the model. It is suitable when the target task differs significantly from the pre-training task or when maximizing model performance is crucial. Although it provides the best performance, full model fine-tuning requires substantial computational resources and storage. Moreover, when data is limited, this approach carries a higher risk of overfitting.

Partial fine-tuning: This approach updates only part of the model's parameters, while the rest remain frozen. Partial fine-tuning reduces computational and storage costs and lowers the risk of overfitting, making it suitable for tasks with limited data. However, in highly complex tasks, partial fine-tuning might not fully unleash the model's potential.

In practice, partial fine-tuning is more common. LLMs contain a vast number of parameters, and even partial adjustments require significant computational resources. One widely used method is parameter-efficient fine-tuning (PEFT). PEFT reduces resource demands by introducing additional low-rank matrices (such as LoRA) or adapters.

LoRA is an efficient fine-tuning technique that significantly reduces the number of parameters to be fine-tuned and the required computational resources. LoRA allows the model to maintain its original capabilities while efficiently adapting to specific tasks. This makes LoRA particularly well-suited for fine-tuning large models. The following section focuses on how LoRA works.

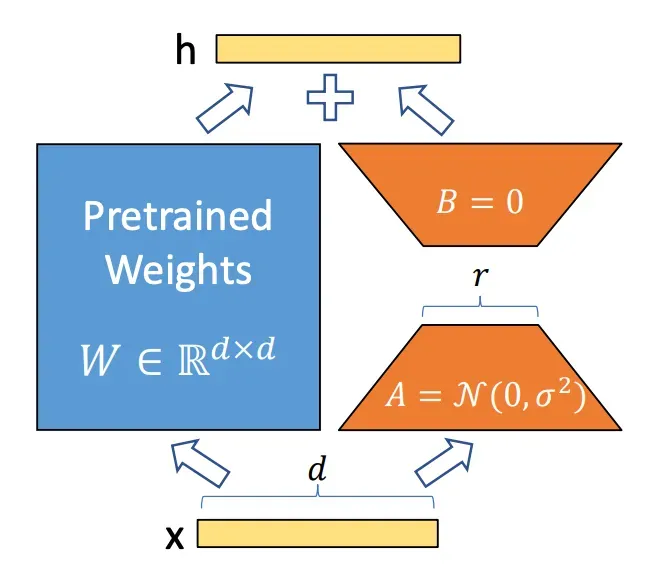

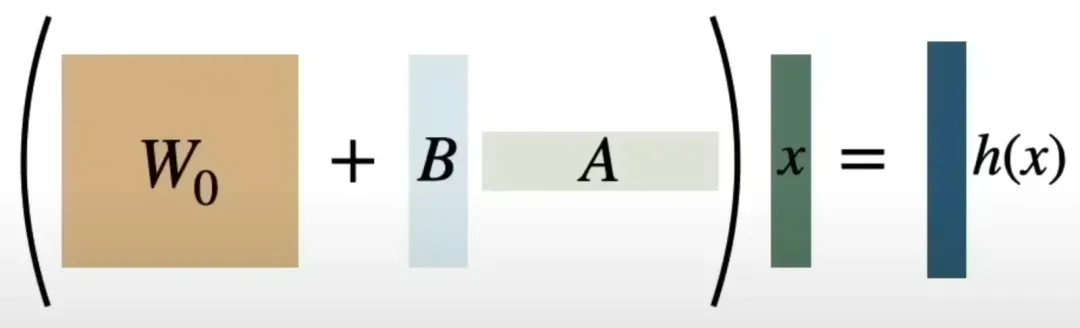

LoRA principle (illustration from the LoRA paper, LoRA: Low-Rank Adaptation of Large Language Models)

LoRA reduces the number of parameters to be updated during fine-tuning by introducing low-rank matrices (matrix A and matrix B). This technique dramatically decreases computational resource requirements: for GPT-3 175B, the paper reports a reduction in GPU memory requirements by about a factor of three.

Another essential feature of LoRA is reusability. Since LoRA does not modify the original model's parameters, the same base model can be reused across multiple tasks or scenarios. Different tasks can have their own low-rank matrices, which can be stored and loaded independently, enabling flexible adaptation.

For example, on a mobile device, a single application might use a large model to handle various tasks. Each task can have its own LoRA parameters, and during runtime, the application can dynamically load the required LoRA parameters for the current task while reusing the same base model. This approach reduces the need for storage and operational space compared to deploying a separate model for each task.
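A minimal sketch of this reuse pattern, using tiny hypothetical matrices in plain Python. The names `base_weights`, `adapters`, and `forward` are illustrative and not part of any library; a real application would load adapter weights from disk.

```python
# Shared base weight: loaded once and never modified.
base_weights = {"W0": [[1.0, 0.0], [0.0, 1.0]]}

# One small (B, A) pair per task; these are the only task-specific parameters.
adapters = {
    "sentiment": {"B": [[0.5], [0.0]], "A": [[1.0, 1.0]]},
    "topic": {"B": [[0.0], [0.25]], "A": [[2.0, 0.0]]},
}

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def forward(x, task):
    """Compute W0 x + B (A x) with the adapter selected for the given task."""
    base_out = matvec(base_weights["W0"], x)
    adapter = adapters[task]
    delta = matvec(adapter["B"], matvec(adapter["A"], x))
    return [b + d for b, d in zip(base_out, delta)]

x = [1.0, 2.0]
print(forward(x, "sentiment"))
print(forward(x, "topic"))
```

Switching tasks only changes which adapter entry is read; the base matrix stays in memory untouched.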

In machine learning, models often rely on complex matrices to process data. These matrices are highly generalized, capable of handling various types of information. However, studies have shown that models do not need to utilize all their complex capabilities for specific tasks. Instead, they only need a subset of those capabilities to perform effectively.

This concept can be compared to a Swiss army knife. Although the knife contains many tools—like scissors and screwdrivers—you only need a few tools to complete most tasks. Similarly, a model's matrices are complex, but for specific tasks, only a portion of those matrices (low-rank matrices) is required.

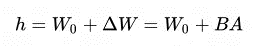

During fine-tuning, only the parameters relevant to the specific task are adjusted. Let's assume the original matrix $W_0$ is a $d \times k$ matrix. To modify the matrix while preserving the original data for reuse, the simplest method is to add another $d \times k$ matrix $\Delta W$. However, this approach would still involve a large number of parameters. LoRA reduces the parameter count by expressing the added matrix as a low-rank decomposition:

$$W_0 + \Delta W = W_0 + BA$$

where $B \in \mathbb{R}^{d \times r}$, $A \in \mathbb{R}^{r \times k}$, and the rank $r \ll \min(d, k)$.

The diagram above shows the decomposed matrix, allowing you to intuitively understand the number of parameters by looking at the area of the matrix. W0 is the original weight matrix. For full model fine-tuning, the entire area corresponding to W0 would need to be adjusted. In contrast, LoRA adjusts only the areas corresponding to matrix A and matrix B, which are much smaller than that of W0.

For example, let d be 1,000 and k be 1,000. Normally, modifying ΔW would involve 1,000,000 parameters. However, with LoRA, if r = 4, the number of parameters drops to 1,000 × 4 + 4 × 1,000 = 8,000 parameters.
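The arithmetic above can be checked with a few lines of Python. The helper names are illustrative; the only assumption is that B has shape d × r and A has shape r × k.

```python
def full_update_params(d, k):
    """Parameters in a dense d x k update matrix."""
    return d * k

def lora_update_params(d, k, r):
    """Parameters in B (d x r) plus A (r x k)."""
    return d * r + r * k

d, k, r = 1000, 1000, 4
full = full_update_params(d, k)
lora = lora_update_params(d, k, r)
ratio = lora / full

print(full, lora, ratio)
```

With these values, the low-rank update uses 0.8% of the parameters of a dense update.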

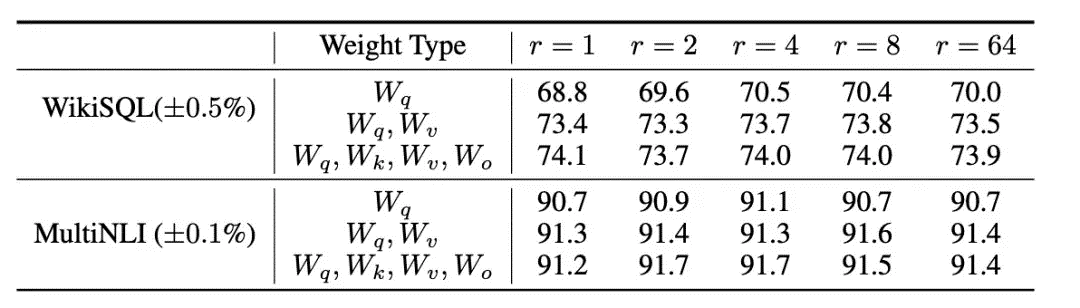

The choice of r = 4 is not an arbitrary value picked only to reduce the parameter count; it is a typical value used in practice. According to experimental data from the paper, when adjusting the weight matrices in the Transformer model, even a rank of 1 (r = 1) can yield excellent results for specific tasks.

The table above demonstrates the accuracy of using LoRA with different rank values on the WikiSQL and MultiNLI datasets. When adapting the Wq and Wv matrices, a rank of 1 is sufficient. However, when training only Wq, a larger rank value is required. The Wq, Wk, Wv, and Wo matrices are components of the Transformer architecture's self-attention module.

The basic process of fine-tuning consists of the following steps:

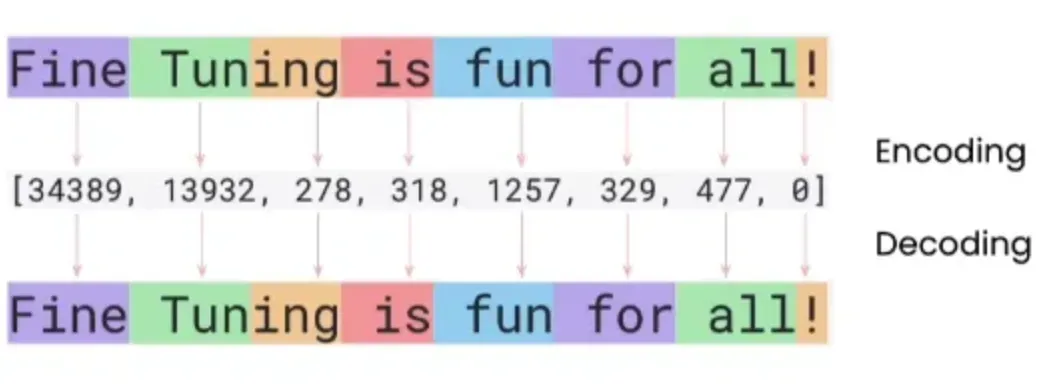

1. Preparing data: Collect labeled data relevant to the target task. Split the data into training and validation sets and process it with tokenization.

2. Setting fine-tuning parameters: Configure LoRA parameters and other fine-tuning parameters, such as the learning rate, to ensure that the model converges.

3. Training the model: Train the model using the training dataset and adjust hyperparameters to prevent overfitting.

4. Evaluating the model: Assess the model's performance on the validation dataset.
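As a small illustration of the data preparation step, the following sketch splits labeled examples into training and validation sets in plain Python. The function name, the 20% validation fraction, and the toy examples are assumptions for illustration only.

```python
import random

def train_validation_split(examples, validation_fraction=0.2, seed=42):
    """Shuffle a copy of the examples and hold out a fraction for validation."""
    rng = random.Random(seed)
    shuffled = examples[:]
    rng.shuffle(shuffled)
    n_val = int(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]

examples = [{"text": f"review {i}", "label": i % 2} for i in range(10)]
train_set, validation_set = train_validation_split(examples)

print(len(train_set), len(validation_set))
```

Keeping the validation examples out of training is what makes the evaluation in step 4 a fair estimate of how the model generalizes.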

When fine-tuning, the following requirements for the data should be observed:

1. High quality: Data quality is paramount. Garbage in, garbage out. The paper, Textbooks Are All You Need, highlights the importance of high-quality data.

2. Diversity: Similar to test cases for coding, it is essential to use varied data that covers as many scenarios as possible.

3. Manual generation: Text generated by language models often follows specific "patterns" that can be easily identified. Wherever possible, use manually crafted data to avoid such issues.

4. Adequate quantity: According to the LoRA paper, improvement becomes noticeable with at least 100 examples. Around 1,000 examples yield satisfactory results.
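Some of these requirements can be checked automatically before training. The sketch below, in plain Python, removes empty and duplicate examples and reports the label distribution. The helper names and the toy examples are hypothetical.

```python
from collections import Counter

def clean_examples(examples):
    """Drop empty texts and case-insensitive duplicates, normalizing whitespace."""
    seen = set()
    cleaned = []
    for ex in examples:
        text = " ".join(ex["text"].split())
        key = text.lower()
        if not text or key in seen:
            continue
        seen.add(key)
        cleaned.append({"text": text, "label": ex["label"]})
    return cleaned

def label_distribution(examples):
    """Return the fraction of examples carrying each label."""
    counts = Counter(ex["label"] for ex in examples)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}

raw = [
    {"text": "It was good.", "label": 1},
    {"text": "it was  good.", "label": 1},      # duplicate after normalization
    {"text": "   ", "label": 0},                # empty
    {"text": "Not worth watching.", "label": 0},
]
cleaned = clean_examples(raw)
balance = label_distribution(cleaned)

print(len(cleaned), balance)
```

Checks like these cover only the mechanical side of quality; whether the examples are accurate and diverse still needs human review.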

Regarding the data size, OpenAI recommends a minimum of 10 examples for fine-tuning. However, practical experience suggests that 50 to 100 examples usually yield significant improvement. It is recommended to start with 50 examples and gradually increase the amount if improvement is observed.

Example count recommendations

To fine-tune a model, you are required to provide at least 10 examples. We typically see clear improvements from fine-tuning on 50 to 100 training examples with gpt-3.5-turbo but the right number varies greatly based on the exact use case.

We recommend starting with 50 well-crafted demonstrations and seeing if the model shows signs of improvement after fine-tuning. In some cases that may be sufficient, but even if the model is not yet production quality, clear improvements are a good sign that providing more data will continue to improve the model. No improvement suggests that you may need to rethink how to set up the task for the model or restructure the data before scaling beyond a limited example set.

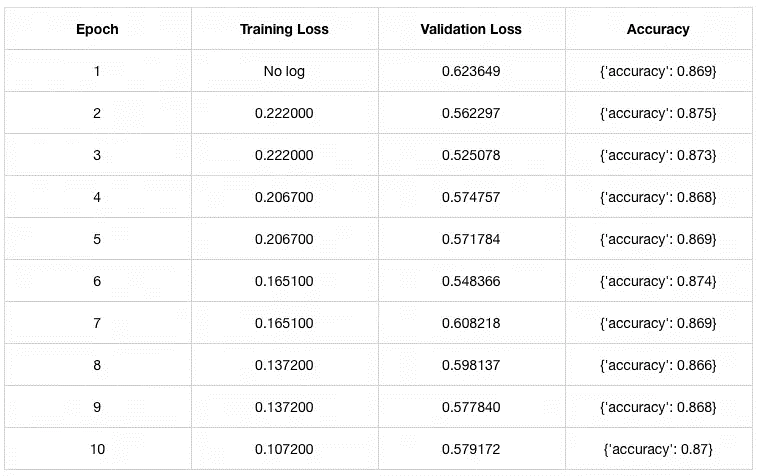

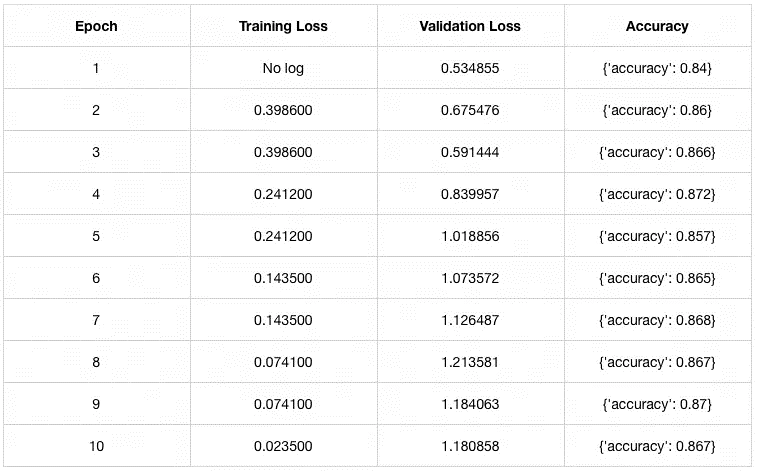

This section demonstrates how to fine-tune a 67M DistilBERT model (distilbert/distilbert-base-uncased) using LoRA to classify movie reviews as positive or negative. The dataset used is stanfordnlp/imdb.

Using Colab's free T4 GPU (note: running the code on a CPU is more than ten times slower), the fine-tuning process took approximately 6 minutes with 1,000 examples over 10 epochs. Larger models can also be fine-tuned for free, though fine-tuning models with 10 billion parameters may require purchasing additional computational resources.

The result improved the accuracy from 50% (random guessing) before fine-tuning to 87% after fine-tuning, with only the Wq weight matrix being fine-tuned.

Results for r = 4:

Results for r = 1:

In this experiment, a rank of 1 (r = 1) performs about as well as a rank of 4 even though only the Wq matrix is fine-tuned (the differences are below 0.01 and can be ignored). This is in line with the LoRA paper's observation that very low ranks can be sufficient.

The following section focuses on code analysis.

    !pip install datasets
    !pip install transformers
    !pip install evaluate
    !pip install torch
    !pip install peft

    from datasets import load_dataset, DatasetDict, Dataset
    from transformers import (
        AutoTokenizer,
        AutoConfig,
        AutoModelForSequenceClassification,
        DataCollatorWithPadding,
        TrainingArguments,
        Trainer)
    from peft import PeftModel, PeftConfig, get_peft_model, LoraConfig
    import evaluate
    import torch
    import numpy as np

    # load imdb data
    imdb_dataset = load_dataset("stanfordnlp/imdb")

    # define subsample size
    N = 1000

    # generate indexes for random subsample
    # (sampled with replacement, so a few reviews may repeat)
    rand_idx = np.random.randint(24999, size=N)

    # extract train and test data
    x_train = imdb_dataset['train'][rand_idx]['text']
    y_train = imdb_dataset['train'][rand_idx]['label']

    x_test = imdb_dataset['test'][rand_idx]['text']
    y_test = imdb_dataset['test'][rand_idx]['label']

    # create new dataset
    dataset = DatasetDict({'train':Dataset.from_dict({'label':y_train,'text':x_train}),
                           'validation':Dataset.from_dict({'label':y_test,'text':x_test})})

    # fraction of positive labels in the training subsample
    np.array(dataset['train']['label']).sum()/len(dataset['train']['label']) # 0.508

Example data from the IMDB dataset:

    {
        "label": 0,
        "text": "Not a fan, don't recommed."
    }

The dataset uses 1,000 examples each for training and validation. Positive and negative reviews are roughly evenly distributed, with each representing about 50% of the data.

    from transformers import AutoModelForSequenceClassification

    model_checkpoint = 'distilbert-base-uncased'
    # model_checkpoint = 'roberta-base' # you can alternatively use roberta-base but this model is bigger thus training will take longer

    # define label maps
    id2label = {0: "Negative", 1: "Positive"}
    label2id = {"Negative":0, "Positive":1}

    # generate classification model from model_checkpoint
    model = AutoModelForSequenceClassification.from_pretrained(
        model_checkpoint, num_labels=2, id2label=id2label, label2id=label2id)

    # display architecture
    model

Model architecture:

    DistilBertForSequenceClassification(
      (distilbert): DistilBertModel(
        (embeddings): Embeddings(
          (word_embeddings): Embedding(30522, 768, padding_idx=0)
          (position_embeddings): Embedding(512, 768)
          (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (dropout): Dropout(p=0.1, inplace=False)
        )
        (transformer): Transformer(
          (layer): ModuleList(
            (0-5): 6 x TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
          )
        )
      )
      (pre_classifier): Linear(in_features=768, out_features=768, bias=True)
      (classifier): Linear(in_features=768, out_features=2, bias=True)
      (dropout): Dropout(p=0.2, inplace=False)
    )

In this 6-layer Transformer model, LoRA is applied to the q_lin layer in each block: (q_lin): Linear(in_features=768, out_features=768, bias=True). The weights of this layer form a 768×768 matrix.

    # create tokenizer
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_checkpoint, add_prefix_space=True)

    # add pad token if none exists
    if tokenizer.pad_token is None:
        tokenizer.add_special_tokens({'pad_token': '[PAD]'})
        model.resize_token_embeddings(len(tokenizer))

    # create tokenize function
    def tokenize_function(examples):
        # extract text
        text = examples["text"]

        # tokenize and truncate text
        tokenizer.truncation_side = "left"
        tokenized_inputs = tokenizer(
            text,
            return_tensors="np",
            truncation=True,
            max_length=512,  # match the model's maximum input length
            padding='max_length'  # pad shorter sequences to the maximum length
        )

        return tokenized_inputs

    # tokenize training and validation datasets
    tokenized_dataset = dataset.map(tokenize_function, batched=True)

    # create the data collator used to assemble batches
    from transformers import DataCollatorWithPadding

    data_collator = DataCollatorWithPadding(tokenizer=tokenizer)

    tokenized_dataset

Regarding tokenization and padding, here are a few key points:

1. Digital representation aligned with the model: Language models cannot directly understand raw text data. They process numerical representations. Tokenization converts text into sequences of integers that the model can handle, with each integer corresponding to a specific word or subword in the vocabulary. Since different models use different tokenization methods, it is crucial to match the model's tokenization approach during fine-tuning.

2. Reducing vocabulary size: Tokenization splits text into the smallest units the model can recognize—such as words, subwords, or characters—based on the vocabulary. This reduces the overall vocabulary size, lowers the model's complexity, and enhances its ability to handle rare or new words.

3. Facilitating parallel computation: Tokenization standardizes input texts to the fixed length expected by the model. For longer texts, the process can truncate them. For shorter texts, padding is used to reach the required length. This consistency in input lengths makes parallel computation more efficient.

In the tokenization example above, the word "Tuning" is broken into two smaller tokens. This approach allows all words to be represented using a limited set of tokens. It is also why LLMs sometimes "invent words": due to generation errors, the decoded tokens may form words that do not actually exist.
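The splitting, truncation, and padding behavior can be illustrated with a toy tokenizer in plain Python. This is a simplified sketch of greedy longest-match subword splitting, not the actual DistilBERT tokenizer; the vocabulary `TOY_VOCAB` is hypothetical and chosen so that "tuning" splits into two pieces.

```python
TOY_VOCAB = {"[PAD]", "[UNK]", "fine", "tun", "##ing", "is", "fun"}

def wordpiece(word, vocab):
    """Greedy longest-match-first split of one lowercase word into subword pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a continuation piece
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]  # no piece matched: the whole word is unknown
        pieces.append(piece)
        start = end
    return pieces

def encode(text, vocab, max_length):
    """Tokenize, then truncate or pad to exactly max_length tokens."""
    tokens = []
    for word in text.lower().split():
        tokens.extend(wordpiece(word, vocab))
    tokens = tokens[:max_length]
    tokens += ["[PAD]"] * (max_length - len(tokens))
    return tokens

tokens = encode("Tuning is fun", TOY_VOCAB, max_length=6)
print(tokens)
```

A real tokenizer would additionally map each piece to its integer ID in the vocabulary and add special tokens such as [CLS] and [SEP].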

Before fine-tuning:

    import torch

    model_untrained = AutoModelForSequenceClassification.from_pretrained(
        model_checkpoint, num_labels=2, id2label=id2label, label2id=label2id)

    # define list of examples
    text_list = ["It was good.", "Not a fan, don't recommed.", "Better than the first one.", "This is not worth watching even once.", "This one is a pass."]

    print("Untrained model predictions:")
    print("----------------------------")
    for text in text_list:
        # tokenize text
        inputs = tokenizer.encode(text, return_tensors="pt")
        # compute logits
        logits = model_untrained(inputs).logits
        # convert logits to label
        predictions = torch.argmax(logits)

        print(text + " - " + id2label[predictions.tolist()])

Because the classification head is randomly initialized, the untrained model's predictions are essentially arbitrary. In this run, it labels every review as Positive:

Untrained model predictions:

It was good. - Positive

Not a fan, don't recommed. - Positive

Better than the first one. - Positive

This is not worth watching even once. - Positive

This one is a pass. - Positive

    import evaluate

    # import accuracy evaluation metric
    accuracy = evaluate.load("accuracy")

    # define an evaluation function to pass into trainer later
    def compute_metrics(p):
        predictions, labels = p
        # pick the class with the highest logit for each example
        predictions = np.argmax(predictions, axis=1)

        # accuracy.compute already returns a dict of the form {"accuracy": value}
        return accuracy.compute(predictions=predictions, references=labels)

    from peft import LoraConfig, get_peft_model

    peft_config = LoraConfig(task_type="SEQ_CLS",
                             r=1,
                             lora_alpha=32,
                             lora_dropout=0.01,
                             target_modules = ['q_lin'])

    peft_config

Output:

    LoraConfig(peft_type=<PeftType.LORA: 'LORA'>, auto_mapping=None, base_model_name_or_path=None, revision=None, task_type='SEQ_CLS', inference_mode=False, r=1, target_modules={'q_lin'}, lora_alpha=32, lora_dropout=0.01, fan_in_fan_out=False, bias='none', use_rslora=False, modules_to_save=None, init_lora_weights=True, layers_to_transform=None, layers_pattern=None, rank_pattern={}, alpha_pattern={}, megatron_config=None, megatron_core='megatron.core', loftq_config={}, use_dora=False, layer_replication=None, runtime_config=LoraRuntimeConfig(ephemeral_gpu_offload=False))

Notes:

1. task_type="SEQ_CLS": Specifies that the task is sequence classification.

2. r=1: This rank value is discussed in the LoRA paper under section 7.2 "What is the Optimal Rank r for LoRA?" The typical range is 1 to 8, with 4 being a common setting.

3. lora_alpha=32: This scaling factor controls the influence of the LoRA matrices on the original weight matrix. The LoRA update B × A is scaled by lora_alpha / r before being added to the output of the original weights: h = W0 × x + (lora_alpha / r) × B × A × x. It is recommended to start with a larger value (such as 32).

4. lora_dropout=0.01: This parameter helps prevent overfitting by randomly dropping part of the input to the LoRA layers during training. A small value (such as 0.01) is typically used.

5. target_modules = ['q_lin']: This parameter specifies that LoRA is applied only to the q_lin layers in the model (the query projection in each Transformer block).
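To see how r and lora_alpha interact, the following plain-Python sketch computes the scaling factor applied to the LoRA update, assuming the standard LoRA scaling of lora_alpha / r (the rank-stabilized variant, use_rslora, is not covered). The function names are illustrative.

```python
def lora_scaling(lora_alpha, r):
    """Factor applied to the low-rank update before it is added to the base output."""
    return lora_alpha / r

def lora_output(base_out, lora_out, lora_alpha, r):
    """Combine the frozen layer's output with the scaled LoRA output (scalars here)."""
    return base_out + lora_scaling(lora_alpha, r) * lora_out

print(lora_scaling(32, 1))   # configuration used in this article
print(lora_scaling(32, 4))   # same alpha with a larger rank
```

For r = 1 the factor equals lora_alpha itself. With a fixed lora_alpha, increasing r reduces the factor, so the two values are usually tuned together.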

    model = get_peft_model(model, peft_config)
    model.print_trainable_parameters()

Output:

    trainable params: 601,346 || all params: 67,556,356 || trainable%: 0.8901

The number of trainable parameters is less than 1% of the total parameters. As the model size increases, the percentage of parameters that need fine-tuning becomes even smaller.
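The reported count can be reproduced by hand. The breakdown below is a sketch under one assumption: besides the LoRA matrices on the six q_lin layers, the pre_classifier and classifier layers of the classification head remain trainable for a SEQ_CLS task. Under that assumption the numbers add up exactly.

```python
hidden = 768        # width of each q_lin layer (768 x 768)
num_layers = 6      # Transformer blocks in DistilBERT
r = 1               # LoRA rank
num_labels = 2

# LoRA matrices: A is r x hidden and B is hidden x r, for each q_lin layer
lora_per_layer = hidden * r + r * hidden
lora_total = num_layers * lora_per_layer

# Classification head: weights plus biases
pre_classifier = hidden * hidden + hidden
classifier = hidden * num_labels + num_labels

trainable = lora_total + pre_classifier + classifier
trainable_percent = 100 * trainable / 67_556_356

print(lora_total, pre_classifier + classifier, trainable)
```

Only 9,216 of the trainable parameters belong to LoRA itself; the rest come from the small classification head.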

    # hyperparameters
    lr = 1e-3
    batch_size = 4
    num_epochs = 10

    from transformers import TrainingArguments

    # define training arguments
    training_args = TrainingArguments(
        output_dir= model_checkpoint + "-lora-text-classification",
        learning_rate=lr,
        per_device_train_batch_size=batch_size,
        per_device_eval_batch_size=batch_size,
        num_train_epochs=num_epochs,
        weight_decay=0.01,
        evaluation_strategy="epoch",  # newer transformers releases name this argument eval_strategy
        save_strategy="epoch",
        load_best_model_at_end=True,
    )
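These hyperparameters determine how many optimizer steps the run performs. A quick estimate in plain Python, assuming a single device and no gradient accumulation (the function name is illustrative):

```python
import math

def total_optimizer_steps(num_examples, batch_size, num_epochs):
    """Batches per epoch (rounded up) times the number of epochs."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * num_epochs

steps = total_optimizer_steps(num_examples=1000, batch_size=4, num_epochs=10)
print(steps)
```

With 1,000 training examples, a batch size of 4, and 10 epochs, this gives 250 steps per epoch and 2,500 steps in total.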

    from transformers import Trainer

    # create trainer object
    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=tokenized_dataset["train"],
        eval_dataset=tokenized_dataset["validation"],
        tokenizer=tokenizer,
        data_collator=data_collator, # this will dynamically pad examples in each batch to be equal length
        compute_metrics=compute_metrics,
    )

    # train model
    trainer.train()

The prediction code is the same as the code used for the untrained model predictions, with the fine-tuned model in place of the untrained one. Run the test examples again and the output is as follows:

Trained model predictions:

It was good. - Positive

Not a fan, don't recommed. - Negative

Better than the first one. - Positive

This is not worth watching even once. - Negative

This one is a pass. - Positive

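After fine-tuning, the model classifies four of the five movie reviews correctly. The last example, "This one is a pass.", is still labeled Positive, although the phrase usually signals a negative opinion.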

This section demonstrates how to fine-tune a model using LoRA. Due to space limitations, not all parameters were explained in detail. Google Colab has now integrated the free Gemini model. If you are unsure about the meaning of any parameters, feel free to ask Colab. After trying it out, I found its performance is comparable to GPT-4o. Moreover, if you encounter an error, you can report it with a single click, and Gemini will provide improvement suggestions that usually resolve the problem immediately.

This article introduces the basic concepts of fine-tuning and explains how to fine-tune LLMs. Although fine-tuning is less expensive than pre-training large models, it still incurs significant costs when dealing with models with a large number of parameters. Fortunately, thanks to Moore's Law, the cost of fine-tuning is expected to decrease over time, enabling broader applications.

The paper, Textbooks Are All You Need, emphasizes the importance of data quality in pre-training. Similarly, deep learning courses highlight the critical role of high-quality training data. As Naval Ravikant, founder of AngelList, once said: "Read the best 100 books over and over again." Fine-tuning a model is akin to humans learning new skills or mastering specific knowledge. High-quality input is essential, just as mastering a subject requires repeated study of classic texts.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
