By Hua Xiang

This article is based on the speech delivered by Hua Xiang at "Kubernetes & Cloud Native Meetup - Guangzhou". It introduces some tools and steps for Alibaba to build a CI/CD pipeline.

Hello everyone, I am Hua Xiang from the Alibaba Cloud Container Service Team. First, let me briefly explain what Kubernetes is. Kubernetes is a production-grade container orchestration system. It turns all the Node resources in a cluster into a resource pool, and its scheduling unit is the Pod, which can contain multiple containers. Think of a person holding ECS or other computing resources in the left hand and containers in the right hand, and matching the two: that person is playing the role of a container orchestration system.

However, the Cloud Native concept comes up quite frequently lately, and many people are confused about the connection between Cloud Native and Kubernetes. How can we determine whether an application is a Cloud Native application? In my opinion, three criteria are available:

First, the application can make resources a pool;

Second, the application can quickly access the network of the pool. Kubernetes has its own independent network layer, so I only need to specify the name of the service I want to access, and the application reaches it through service discovery;

Third, the application has the failover function. If a host or node in the pool goes down and the entire application becomes unavailable as a result, then it is definitely not a Cloud Native application.

From these three points, we can see that Kubernetes does very well. First, let's look at the concept of a resource pool. A large Kubernetes cluster is a resource pool. We no longer have to worry about which host an application runs on. All we have to do is submit the deployment YAML file to Kubernetes. It schedules the workloads automatically, and the application can quickly access the network of the entire cluster. In addition, failover is also automatic. Next, I will share with you how to implement an elastic CI/CD system based on Kubernetes.

First, let's take a look at the current status of CI/CD. The concept of CI/CD was put forward many years ago. However, with the evolution of technology and the continuous introduction of new tools, the process and implementation methods of CI/CD have gradually been enriched. Initially, we typically used CI/CD to submit code, trigger an event, and then perform automatic builds on a CI/CD system.

The following figure shows the current status of CI/CD:

It involves many stages. The pipeline starts with an event triggered by the code submission. Then, the CI/CD system runs a build stage through Maven, performs unit tests, performs code specification scanning, and then deploys the service. Next, it performs an automated end-to-end test of the UI.

Then, it performs a stress test (a performance stress test), which is done only in the development and testing environments. After that, the build can progress to a QA environment, and eventually to a UAT environment. The pipeline is a very long process, and CI/CD is widely used. Writing code and submitting it to the code repository can be taken as the starting point of the entire IT infrastructure, and every step from that point on can be included in the scope of CI/CD. However, as its scope grows, the entire process becomes increasingly complex and occupies more resources.

If you know C++, you may know that it is notorious for very long build times. The authors of the Go language, one of whom also created B, the predecessor of the C language, have talked about creating Go as an alternative to code that took so long to compile. Therefore, one of the major advantages of Go is its very short compilation time.

I used a laptop with a Core i5 processor to run a complete build of the Kubernetes source code. It took about 45 minutes, which is a very long time. So even when compilation has been greatly optimized, the build phase is long as soon as the project is large, not to mention the subsequent automated tests. Furthermore, the CI/CD process consumes a very large amount of resources.

Next, let's take a look at the selection of CI/CD tools and their development. First, Jenkins is the most established option. In fact, CI/CD was almost equivalent to Jenkins before the emergence of container technology. However, after the emergence of container technology, many new CI/CD tools have also emerged, such as Drone, which is a fully container-based CI/CD tool. It works well with containers, and its building process is fully implemented in containers.

In addition, GitLab CI is another of these tools. Its main feature is that it integrates well with GitLab code management. Jenkins 2.0 introduced the Pipeline as Code feature, which lets the pipeline be defined in a Jenkinsfile.

In Jenkins 1.0, if we wanted to configure a pipeline, we had to log on to Jenkins, create a project, and then write some shell scripts in it. Although the same effect can be achieved, the biggest drawback is that the pipeline is hard to reproduce and migrate. Moreover, this approach runs against DevOps. For example, the Jenkins system is generally managed by O&M personnel, and developers write code. But how the code is built and where it is published is completely unknown to developers. This leads to the separation of development and O&M. After the Pipeline as Code method was introduced, however, a Jenkinsfile and the source code can be placed in the same repository.

A major advantage of this is that the release process can also be incorporated into version management, so that errors can be traced. This is a huge change, but we have found through communication with users that, although many of them have upgraded Jenkins to 2.0, their usage is still stuck in the 1.0 style. Many users have not adopted the Jenkinsfile. Another advantage is the support for containers. Around 2016, Jenkins support for containers was weak, and running Jenkins and its builds in Docker containers was troublesome.

Drone, however, provides excellent container support. It runs completely in Docker mode, that is, the build environment is also a container. Building a Docker image and pushing it also happen inside a container, which requires privileged mode. This method has several advantages. First, it does not leave any temporary files on the host. When the container is destroyed, the intermediate files generated in the build are completely removed. With Jenkins, in contrast, a lot of temporary files accumulate, and Jenkins occupies more and more space over time. As a result, you need to clean up these files regularly, and you cannot clear them with one click, which is very troublesome.

When it comes to managing plug-ins, Jenkins has a serious problem: plug-in upgrades. To upgrade a plug-in, you first have to log on to Jenkins. If you want to temporarily start Jenkins for testing, or do some debugging in a new environment, these plug-ins may need to be upgraded every time a new environment is created. In addition, all the configurations made in Jenkins have to be redone, which is very tedious.

However, Drone has a big advantage in that all its plug-ins are Docker containers. For example, if you use a plug-in in a pipeline, all you have to do is declare this plug-in, instead of having to manage where to download the plug-in and how to install it. It is fully automated, as long as your network can access the plug-in container image, which is very convenient.

Regarding the ecosystem, the biggest advantage of Jenkins is that it has many plug-ins. Almost everything you want to use is available, and because the base is strong, plug-ins can be very powerful. The pipeline is an example: although it is available in 2.0, it is implemented entirely through plug-ins. Jenkins development now seems to have reached a second peak, and its support for Kubernetes has increased significantly. Starting with Jenkins X, it integrates tools from the Kubernetes ecosystem, such as Harbor and Helm. This makes it very convenient to run builds on a Kubernetes cluster and to package the orchestration files of a service with Helm.

In addition, it now has a new sub-project named Configuration as Code, which allows the configuration made in Jenkins to be expressed in the form of code. This improvement facilitates the migration or replication of Jenkins.

Despite the shortcomings of Jenkins mentioned above, we chose Jenkins in the end, because the ecosystem matters most, and Jenkins is already very good in this respect. For the elastic CI/CD system discussed below, Jenkins already provides the relevant plug-ins. In the Drone community, this has not yet been implemented, although some people have raised the idea.

Let's take a look at the business scenarios of the CI/CD system. The typical scenario and characteristics: first, it is oriented to developers, which is relatively rare, because developers are generally a bit picky. If the system is not robust enough, or the response time is slow, it will often be criticized.

Second, it is time-sensitive. After the code is written and submitted, we do not want the build to sit in a queue. We want it to start immediately with sufficient resources. Third, its resource usage has very pronounced peaks and valleys. This is because developers do not submit code all the time. Some may submit code just a few times a day, and some may submit code many times.

I've seen someone share a curve that reflects the build tasks of their company. The amount of code submitted is highest around 15:00 to 16:00 every day, and the curve is relatively flat at other times. This indicates that in that company, programmers all submit code between 15:00 and 16:00 before starting something else. As the demand for CI/CD resources increases, building a cluster is a must. This improves the load capacity and shortens task waiting times. One downside of a Jenkins cluster is that it has only one Master, although this can also be improved through plug-ins.

This is not the focus of our discussion today, because in many cases it is acceptable for the Master to be temporarily unavailable as long as it can be recovered quickly. In addition, the CI/CD system needs to support various build scenarios. A company may use many development environments: even if everyone uses Java, the versions may still differ, such as 1.5, 1.6, and 1.7. Beyond Java, other languages such as Python, Go, and NodeJS each need their own build environment. If we do not introduce containers, these build environments cannot be reused, and a host set up for PHP, for example, can be used only for PHP builds.

Containers can inject a new capability into the CI/CD system, namely environment isolation. We can use Kubernetes to inject more capabilities into the CI/CD system, but then a conflict arises. Developers always hope that the CI/CD system responds quickly to a code submission event, but companies don't have unlimited resources. As mentioned above, if code submissions peak at 15:00 or 16:00 every day, then 30 or 40 machines may be required to meet the build task requirements. However, it is impractical to keep 30 or 40 machines running all day just to build for an hour or two in the afternoon.
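To make this trade-off concrete, here is a rough back-of-the-envelope sketch in Python. The numbers (40 machines at peak, a two-hour peak window, and a baseline of 3 machines for the rest of the day) are illustrative assumptions, not figures from the talk, and the function names are made up for this example.

```python
HOURS_PER_DAY = 24


def static_machine_hours(peak_machines: int) -> int:
    """Machine-hours per day if the peak-sized fleet runs around the clock."""
    return peak_machines * HOURS_PER_DAY


def elastic_machine_hours(peak_machines: int, peak_hours: int,
                          baseline_machines: int) -> int:
    """Machine-hours per day if the fleet shrinks to a baseline off-peak."""
    off_peak_hours = HOURS_PER_DAY - peak_hours
    return peak_machines * peak_hours + baseline_machines * off_peak_hours


# Assumed scenario: 40 machines for a 2-hour peak, 3 machines otherwise.
static = static_machine_hours(40)
elastic = elastic_machine_hours(40, peak_hours=2, baseline_machines=3)
print("always-on fleet:", static, "machine-hours/day")
print("elastic fleet:  ", elastic, "machine-hours/day")
print("difference:     ", static - elastic, "machine-hours/day")
```

Under these assumed numbers, the always-on fleet uses 960 machine-hours per day and the elastic one 146, which is the gap an elastic CI/CD system tries to close.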

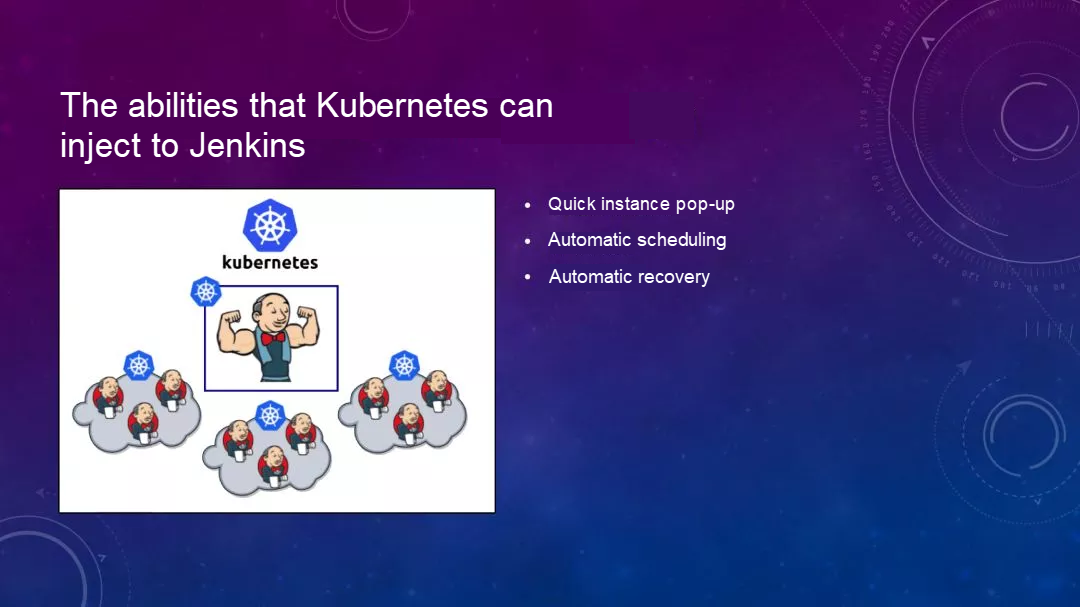

Kubernetes can inject new capabilities into Jenkins to enable the CI/CD system to achieve elasticity. What are our expected goals? When we have build tasks, new machines can be automatically added for the resources, or more computation capabilities can be added. Either way, when needed, a resource expansion can be automatically carried out, and when not needed, these resources can be automatically released.

This is what we expect, and Kubernetes can provide this capability for Jenkins. As a container orchestration system, Kubernetes can quickly create new instances, automatically schedule them to idle machines in the resource pool, and run a task there. After the task is complete, it reclaims the resources. If the Jenkins Master is also deployed on Kubernetes, Kubernetes can perform a failover for the Master. That is to say, if the system can tolerate a short interruption, then even if the Master dies, Kubernetes can quickly reschedule it to another machine, so the response time does not suffer for long.

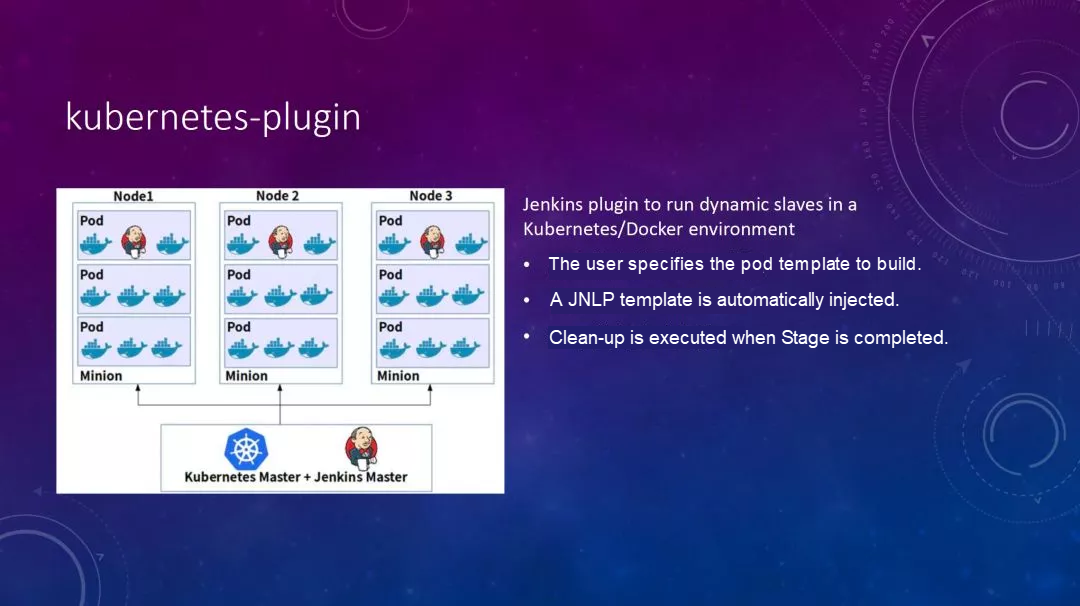

This is also implemented using a plug-in, called Kubernetes-plugin. The plug-in provides the ability to directly manage a Kubernetes cluster. After the plug-in is installed in Jenkins, it can listen to the build tasks of Jenkins. When a build task is waiting for resources, the plug-in requests a new Pod from Kubernetes to perform the build. After the build is completed, the Pod is automatically cleaned up.
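The lifecycle described above can be pictured with a toy model. The Python sketch below is not the plug-in's API. The class `ToyElasticAgents` and its methods are hypothetical names invented for this illustration, and the model only captures the idea of one short-lived agent Pod per waiting build.

```python
from collections import deque


class ToyElasticAgents:
    """Toy model: one short-lived agent Pod per queued build."""

    def __init__(self) -> None:
        self.queue = deque()   # builds waiting for an agent
        self.running = {}      # pod name -> build name
        self._next_id = 1

    def submit(self, build: str) -> None:
        """A build enters the queue and waits for resources."""
        self.queue.append(build)

    def provision(self) -> list:
        """Request a new Pod for every build that is waiting."""
        created = []
        while self.queue:
            build = self.queue.popleft()
            pod = f"agent-{self._next_id}"
            self._next_id += 1
            self.running[pod] = build
            created.append(pod)
        return created

    def finish(self, pod: str) -> str:
        """The build is done: the Pod is removed and its resources released."""
        return self.running.pop(pod)


agents = ToyElasticAgents()
agents.submit("webdemo #1")
agents.submit("webdemo #2")
pods = agents.provision()
print("pods created:", pods)
for pod in pods:
    print("finished", agents.finish(pod), "on", pod)
print("pods left:", len(agents.running))
```

The point of the model is that no agent exists before a build arrives and none remains after it finishes, so idle time costs nothing.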

The above briefly introduces its capability. In addition, installing the plug-in also extends the pipeline syntax. We will look at an example later. But even at this point, the solution is still not complete. First, the planning of the Kubernetes cluster is still a problem. For example, suppose a cluster has 30 nodes, the Jenkins Master is deployed on it, and the plug-in is installed and configured. When a new task arrives, the cluster starts a new Pod to complete the build task.

After the execution, the Pod is automatically destroyed so that it does not occupy cluster resources. When no build tasks are running, the cluster can be used for other tasks. But a disadvantage remains: we still have to decide how large the cluster should be. Moreover, if other tasks are running when build tasks suddenly arrive, resource conflicts may occur.

In general, some imperfections still remain. We can use some less common features of Kubernetes to solve the problems mentioned above. These two features are called Autoscaler and the Virtual node, respectively. Let's look at Autoscaler first. Autoscaler is an official component of Kubernetes. Three features are supported under the Kubernetes group:

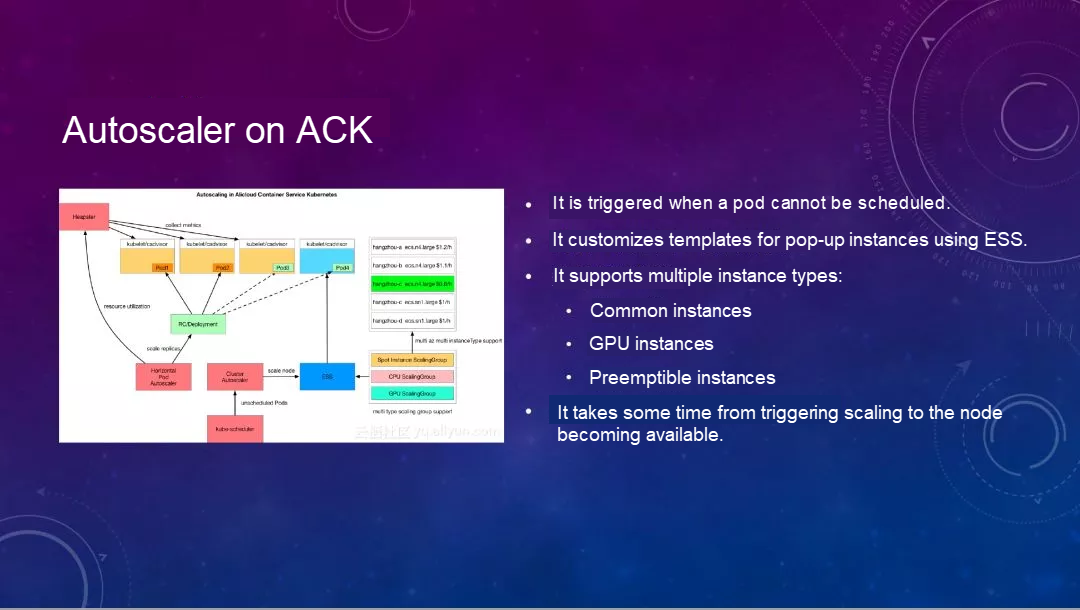

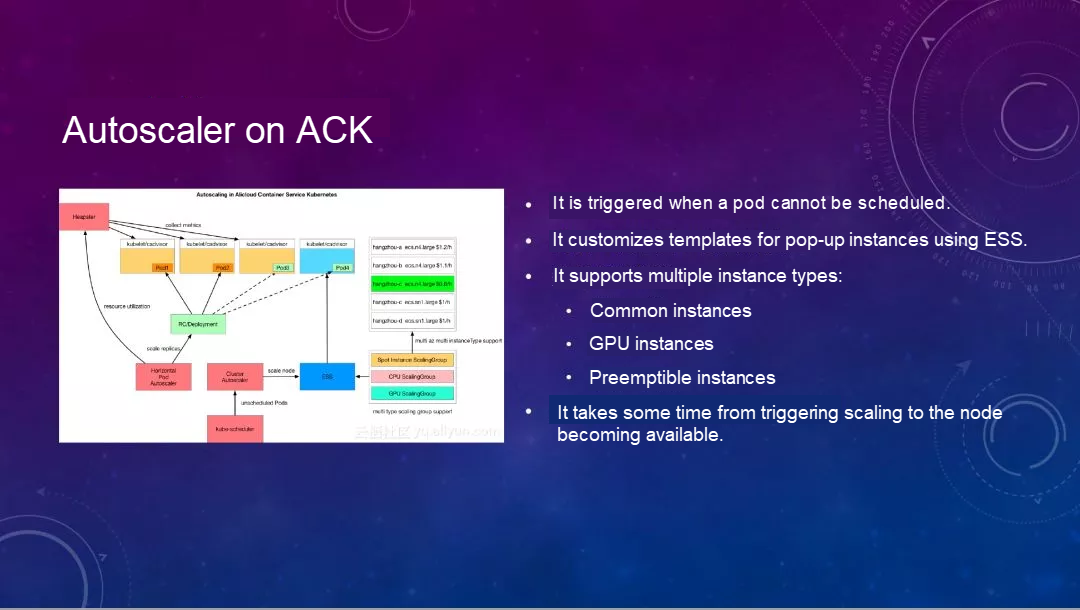

I want to talk about Cluster Autoscaler, which scales the number of nodes in the cluster up or down. First, the following figure shows one way to implement Autoscaler in Alibaba Cloud Container Service. Let's take a look at this figure. This is a scenario where HPA and Autoscaler are used in combination.

When HPA finds through the monitored metrics that resource usage has reached a certain level, it automatically scales the workload out by bringing up new Pods. The cluster resources may be insufficient at this time, so a new Pod may stay pending. This triggers Autoscaler, which creates a new node based on the configured ESS template and automatically adds the node to the cluster. It relies on the customization capability of the ESS template and supports multiple node instance types, such as common instances, GPU instances, and preemptible instances.
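The scale-up decision can be approximated with a small sketch. The real Cluster Autoscaler simulates scheduling with many more constraints (memory, labels, taints, node groups). The Python function below is a simplified assumption that considers only CPU requests and uses a first-fit-decreasing packing. The name `nodes_to_add` and the millicore figures are hypothetical.

```python
def nodes_to_add(pending_cpu_millicores, node_cpu_millicores):
    """Estimate how many new nodes are needed to place all pending Pods.

    Packs Pods onto new, identical nodes with first-fit decreasing,
    looking at CPU requests only.
    """
    free = []  # remaining CPU on each node we decide to add
    for request in sorted(pending_cpu_millicores, reverse=True):
        if request > node_cpu_millicores:
            raise ValueError("a Pod requests more CPU than one node offers")
        for i, remaining in enumerate(free):
            if request <= remaining:
                free[i] = remaining - request  # fits on an already added node
                break
        else:
            free.append(node_cpu_millicores - request)  # add another node
    return len(free)


# Assumed example: four pending Pods, new nodes with 4 CPU cores each.
pending = [2000, 2000, 1000, 500]
print("nodes to add:", nodes_to_add(pending, node_cpu_millicores=4000))
```

If nothing is pending, the function returns 0, which mirrors the fact that scale-up is driven only by Pods that cannot be scheduled.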

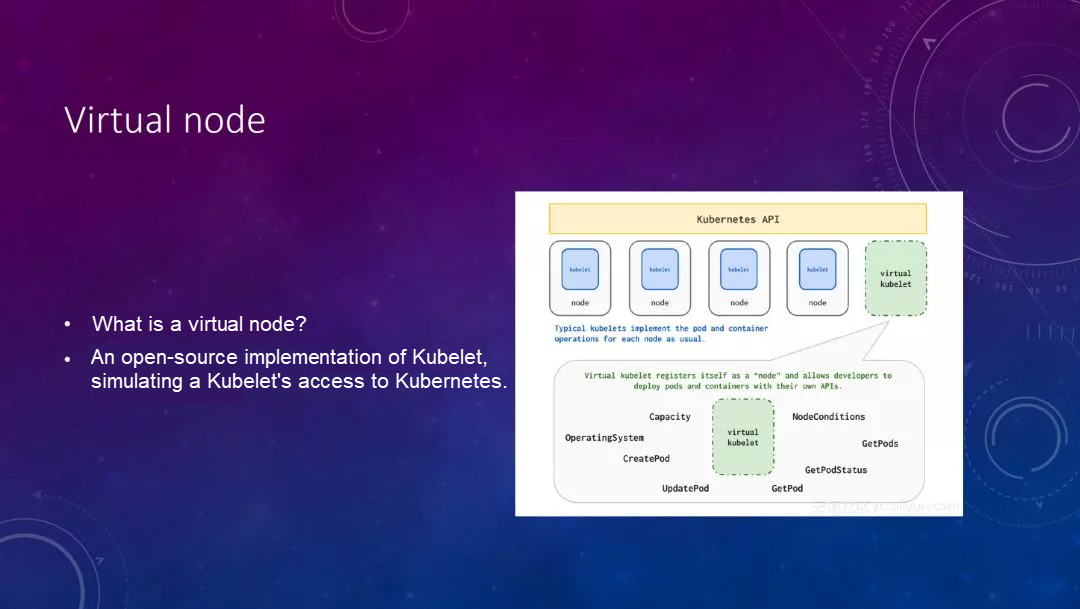

The second is the Virtual node, which is implemented based on the open-source Virtual Kubelet project by Microsoft. It implements a virtual Kubelet, and registers it with the Kubernetes cluster. For the convenience of understanding, imagine a MySQL proxy disguises itself as a MySQL server. The backend may manage a lot of MySQL servers, and can automatically route or splice SQL queries.

Virtual Kubelet does similar work: it registers itself with Kubernetes as a node, but on the backend it may manage a large pool of public cloud resources, and may interface with services such as ECI or VPC on the public cloud. The following shows a general schematic.

On Alibaba Cloud, it interfaces with ECI to create elastic container instances. This responds very quickly because no node needs to be added to the cluster, and it can reach about 100 Pods per minute. In addition, a Pod can declare that it wants to use such resources.

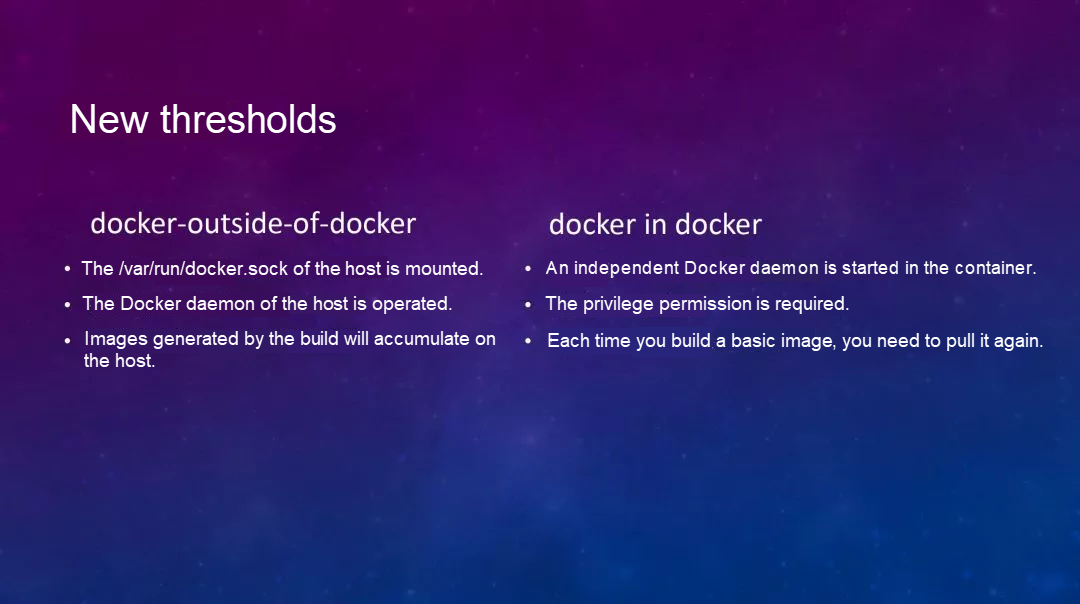

Then, we can use these two methods to improve our elastic CI/CD system. We do not have to plan the scale of the cluster very early, and we can let the cluster scale automatically as needed. However, after all the above operations, some new hurdles appear: docker-outside-of-docker and Docker-in-Docker.

We usually run Jenkins in Docker in one of two ways: One is to mount the docker.sock of the host into the container, to allow Jenkins to communicate with the local docker daemon through this file. Then, docker build images are created, or these images are pushed to a remote repository, so all the images generated in the middle will be stacked on the local machine, which is a problem.

This approach has limitations in some Serverless scenarios, because Serverless environments do not allow socket files to be mounted. The other is the Docker-in-Docker method. Its advantage is that it starts a new Docker daemon inside the container, so all the intermediate and build-related artifacts are removed along with the container. However, it requires privileged mode.

Most of the time, we try to avoid privileged mode. In addition, when images are built on the host and a required image already exists locally, it is used directly. With the Docker-in-Docker method, the image is pulled again every time, which takes extra time. How much this matters depends on the usage scenario.

At this point, Kaniko, a new open-source tool from Google, came onto the scene. Like Docker-in-Docker, it builds images inside a container, but it does not rely on a Docker daemon. It executes the commands in the Dockerfile entirely in user mode, so it can build a complete Docker image in the container without privileged mode.
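As a rough illustration of what such a build looks like on Kubernetes, the Python sketch below assembles a Pod manifest that runs the Kaniko executor image with its `--context`, `--dockerfile`, and `--destination` arguments. The Pod name, the context path, and the registry address are placeholders assumed for this example, and registry credentials, which a real push needs, are left out.

```python
import json


def kaniko_build_pod(name, context, dockerfile, destination):
    """Build a Pod manifest (as a dict) that runs one Kaniko image build."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "kaniko",
                    "image": "gcr.io/kaniko-project/executor:latest",
                    "args": [
                        f"--context={context}",
                        f"--dockerfile={dockerfile}",
                        f"--destination={destination}",
                    ],
                    # Note what is absent: no privileged securityContext
                    # and no mount of the host's docker.sock.
                }
            ],
        },
    }


# Placeholder values, assumed for illustration only.
pod = kaniko_build_pod(
    name="webdemo-image-build",
    context="dir:///workspace",
    dockerfile="Dockerfile",
    destination="registry.example.com/demo/webdemo:latest",
)
print(json.dumps(pod, indent=2))
```

The resulting manifest could be submitted like any other Pod. The design point is that the container spec needs neither of the two things the earlier methods depend on.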

This is an expected elastic CI/CD system. In this case, the computing resources from the real node to the underlying layer are scaled elastically, and meet the delivery requirements, so our resources can be managed in a very refined manner.

Let's take a look at the Demo: https://github.com/hymian/webdemo

Here is an example I have prepared. The focus is on this Jenkinsfile file, which defines the pod template of the agent, and contains two containers, one for the Golang build and the other for the image build.

Then, we start the build. At the beginning, this environment has only the Master, so no build node exists. As we can see, Jenkins now launches a new Pod, which is registered as a build node. However, the Pod template specifies a node label, and no existing node in the cluster carries that label, so the Pod status is pending. Therefore, the agent node shown in the build log is offline.

However, we define an Autoscaler in this cluster. When no node exists, it automatically allocates a new node and adds it. We can see that a node is being added. We need to wait a moment for this to finish. That is to say, this may take one or two minutes.

When there aren't enough nodes, the cluster can automatically scale up and add nodes, which takes a little time because the scaling is triggered by polling. The entire process may take about three minutes. Now, look at the server list. There were just three servers, and now this one has been added. It is a preemptible instance, the one that was just added.

The console shows an exception here, because the node is still being added to the cluster and should not be shown yet. From the command line, we can see that the cluster now has four nodes. At this time, the agent Pod is being created. Note a small detail: 0/3 shows that the Pod has three containers, but the Pod template I defined above contains only two. This is what we saw on the slide just now.

The third one, the JNLP container, is added automatically by the plug-in. The plug-in uses this container to report the intermediate status of the build to the Master in real time and to send the logs to the Master. This is the agent node initialization process. At this time, the agent node is already running. The output has finished, and the build is complete.
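The container count seen in the demo can be reproduced with a small sketch. The Python snippet below models a Pod template with two user-defined containers and adds a `jnlp` container when the template does not define one itself, which is how the 0/3 count arises. The container names and images in `template` are assumptions for illustration and are not taken from the demo repository.

```python
def agent_pod_containers(user_containers):
    """Return the container names of the agent Pod as it is scheduled.

    The user's containers are kept as-is, and a "jnlp" container is
    added unless the template already defines one with that name.
    """
    names = [container["name"] for container in user_containers]
    if "jnlp" not in names:
        names.append("jnlp")
    return names


# Hypothetical template: one container for the Go build, one for the image build.
template = [
    {"name": "golang", "image": "golang"},
    {"name": "kaniko", "image": "gcr.io/kaniko-project/executor"},
]

containers = agent_pod_containers(template)
print(containers)
print(f"ready column while starting: 0/{len(containers)}")
```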

Hua Xiang, a solution architect at Alibaba, focuses on business containerization, Kubernetes management, DevOps practice and other fields.
