By Huafeng Wang (Jiru), Hologres R&D

In recent years, with the popularity of mobile applications, new scenarios such as application tracking and user tag computing have emerged. To better support these scenarios, more and more big data systems are using a semi-structured JSON format to store such data, enabling more flexible development and processing. Hologres, developed by Alibaba Cloud, is a unified cloud-native real-time data warehousing service. It supports online analytical processing (OLAP) of petabytes of data and provides high-concurrency and low-latency online data services. Hologres constantly optimizes its technical capabilities for semi-structured data analysis. In version 1.3, Hologres introduces support for the JSONB type, the JSONB GIN index, and the JSONB column-oriented storage. This improves the write and query performance of JSONB data and reduces storage costs without sacrificing flexibility.

This article discusses the technical principles of Hologres' JSONB semi-structured data and highlights the exceptional analysis performance of JSON semi-structured data.

Before diving into semi-structured data, let's first clarify what structured data is. Structured data can be visualized as a table in a relational database management system (RDBMS). Each table has a clear and strict structural definition, including the columns it contains and the data type of each column. The stored data must strictly adhere to the table's structural definition.

On the other hand, semi-structured data is non-fixed, subject to frequent changes, and generally self-descriptive. Its structure and content are intertwined, and JSON format data is a perfect example of such data. JSON follows a standard format definition, primarily consisting of objects and arrays. Objects store key-value pairs, where keys are strings and values can be strings, arrays, Boolean values, null values, objects, or arrays. Arrays can store any number of values.

Here's a simple JSON example that I'm sure you're all familiar with.

{"user_name": "Adam", "age": 18, "phone_number": [123456, 567890]}Hologres provides the ability to handle semi-structured data through its support for the JSON data type. To ensure compatibility with the Postgres ecosystem, Hologres supports two native types: JSON and JSONB. The JSON type is stored as TEXT format, while the JSONB type is stored in a parsed binary format. Since there is no need for parsing during querying, JSONB provides faster processing. Many of the internal optimizations in Hologres' semi-structured data solution are based on the JSONB type.

Semi-structured data is widely used due to its ease of use and powerful expression capabilities.

In a data warehouse, whenever there are changes in the upstream data format, such as changing data types or adding/deleting fields, the tables in the data warehouse with a rigid schema format need to undergo schema evolution to adapt to the changes. This often involves executing DDL statements to add or delete columns, and even modifying and relaunching intermediate real-time data ETL jobs.

In scenarios where there are frequent schema evolutions, ensuring data quality becomes a major challenge. Additionally, maintaining and managing table schemas becomes a tedious and time-consuming task for data developers.

Semi-structured data naturally supports schema evolution. When there are changes in the upstream business, you only need to add or delete corresponding fields in the JSON column data without executing any DDL statements on the warehouse tables. This change can also be transparent to intermediate ETL jobs, significantly reducing the cost of maintaining and managing table schemas.

When processing semi-structured data in a data warehouse, there are two primary considerations for evaluating a solution:

However, traditional solutions often struggle to strike a balance between these considerations. There are two common methods for processing JSON data:

The following scenarios use JSON data as an example. Let's assume we have the following JSON data:

| {"user_id":1001, "user_name": "Adam", "gender": "Male", "age": 16} |

| {"user_id":1002, "user_name": "Bob", "gender": "Male", "age": 41} |

| {"user_id":1003, "user_name": "Clair", "gender": "Female", "age": 21} |

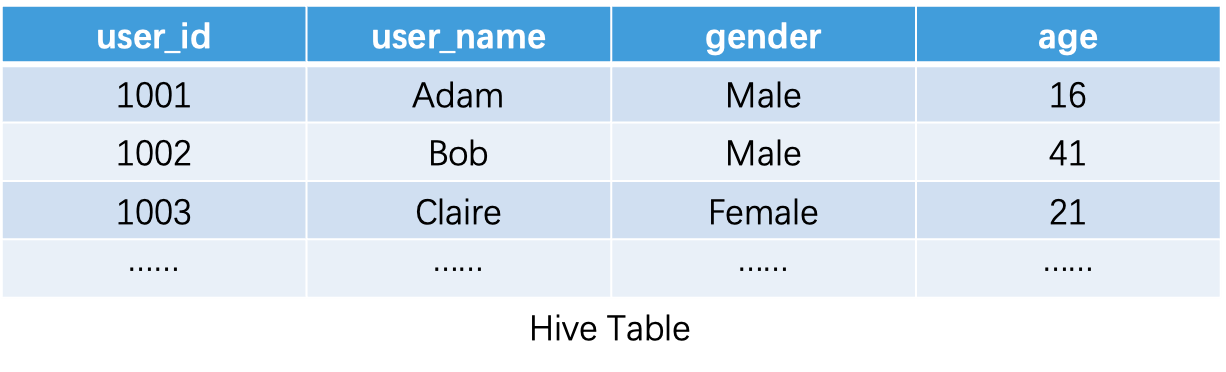

The most intuitive solution is to store the original JSON data into a separate column. Take Hive as an example:

At the storage layer, the data of this Hive table is also continuously stored on the disk as a complete JSON value with the smallest storage granularity:

Then, use the relevant JSON function to query. For example, query the number of all users older than 20:

SELECT COUNT(1) FROM tbl WHERE cast(get_json_object(json_data, '$.age') as int) > 20;The corresponding abstracted process is as follows:

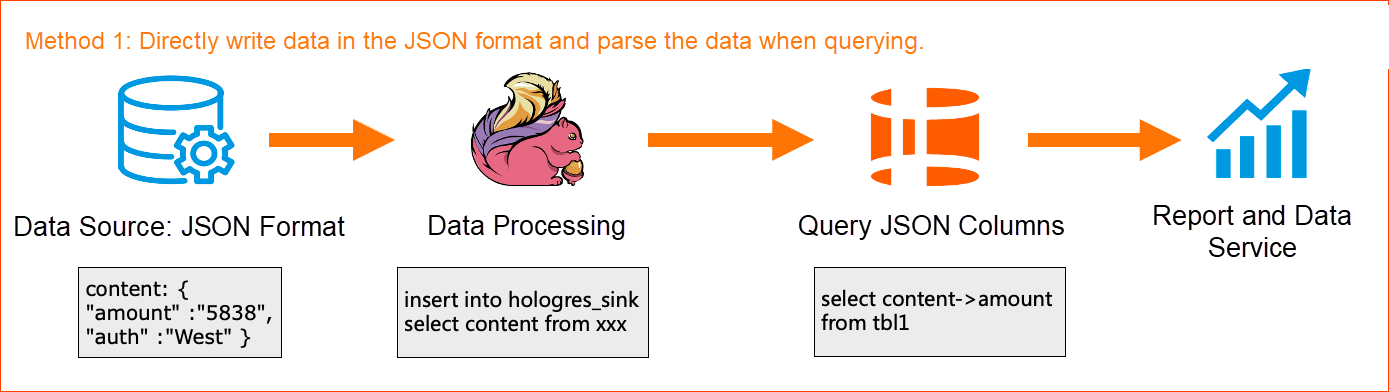

The upstream directly writes the JSON type to Hologres without processing and then parses the required data when the application layer queries.

• Advantage: JSON naturally supports schema evolution. When upstream business changes, you only need to add or delete corresponding fields in the JSON column data without executing any DDL statements on the tables in the warehouse, and it can also be transparent to intermediate ETL jobs. This ensures the ease of use and flexibility of semi-structured data to the greatest extent and reduces the cost of maintaining and managing table schemas.

• Disadvantage: When the application side queries, it needs to select appropriate processing functions and methods to parse the required data. The development is relatively complex. If JSON is complex, the query performance will degrade because each time the data in the JSON column is involved in the calculation, the JSON data needs to be completely parsed. For example, if you want to extract a field in the entire JSON, you must read each row of JSON, parse them, take out the required fields, and return when the query engine executes . This involves a large amount of I/O and calculation, and what is needed may be only one of hundreds of fields in JSON data. Therefore, a large amount of I/O and calculation is unnecessary.

Since the parsing cost of JSON query is high, it's better to put the parsing in the data processing link in advance. Therefore, another method is to process the JSON into a wide table:

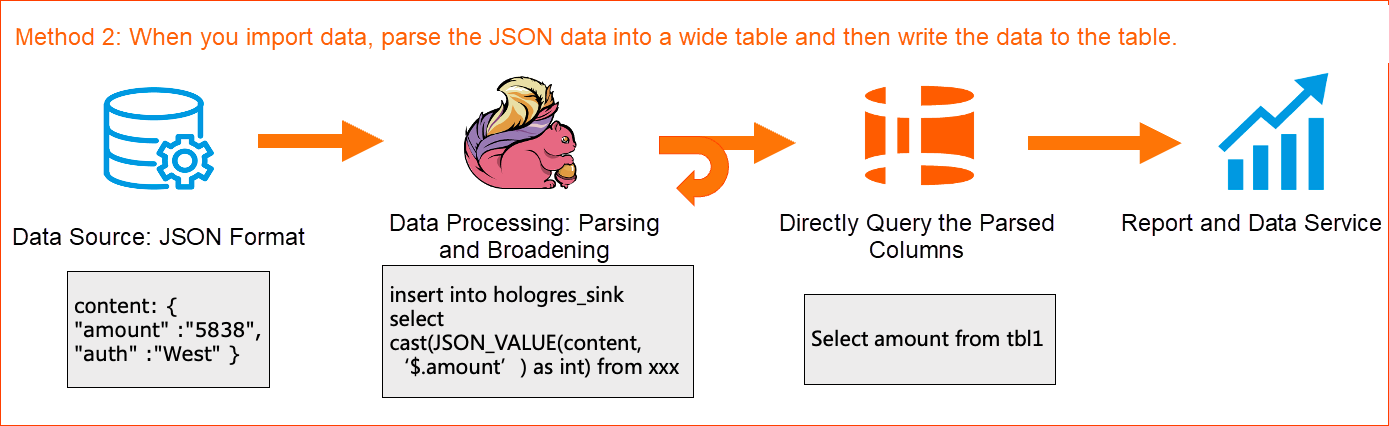

The corresponding abstracted process is as follows:

The upstream data is in JSON format. When you import data, the JSON data is parsed. For example, you can use the JSON_VALUE function of Flink to parse the JSON data, broaden the JSON data into a wide table, and then write the table to Hologres. For upper-layer applications, you can directly query the parsed columns in Hologres.

• Advantage: When you write data to Hologres, the write performance is better because it is written to ordinary columns. At the same time, you do not need to parse JSON data on the query side, so the query performance is better.

• Disadvantage: Whenever the upstream data format changes, such as changing the data type, adding or deleting fields, and executing DDL statements to add or delete columns, the real-time data ETL jobs need to be adapted and relaunched, which is not user-friendly and increases the O&M and development burden.

Based on this background, the industry urgently needs a solution that can maintain efficient query performance without sacrificing flexibility to cope with extreme analysis scenarios of massive semi-structured data.

In order to better support JSON analysis scenarios, Hologres continues to enhance its technical capabilities. In previous versions, Hologres supported the JSON data format and related parsing functions, allowing users to directly write JSON data and execute related queries. In addition, version 1.1 included optimizations at the query layer to improve the performance of JSON data queries. These optimizations included supporting GIN inverted indexing, accelerating JSON data filtering, and enabling expression pushdown. However, the overall acceleration scenarios were limited and difficult to use. To address this, version 1.3 introduced significant optimizations at the storage layer, leveraging JSONB column-oriented storage to achieve better query performance.

Based on our observations, the structure of unstructured data from actual users remains relatively stable over a certain period of time. Typically, there are only a limited number of well-defined fields, with variations occurring in the frequency of each field. The data type of each field remains stable as a whole.

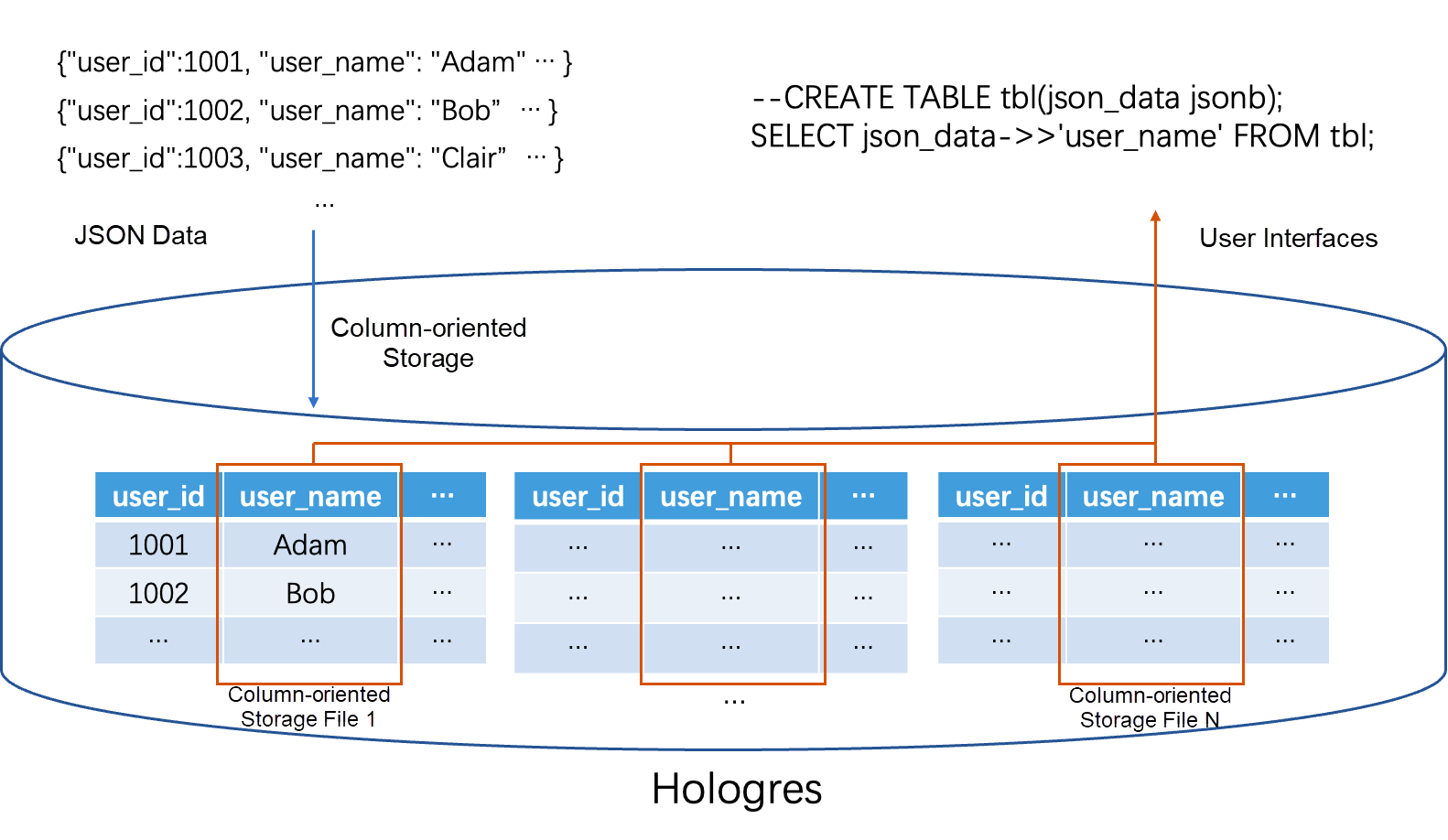

Taking these factors into consideration, the implementation solution provided by Hologres automatically extracts the structure of the JSON data (number of fields, field types, etc.) when importing JSON data into Hologres. It then converts the JSON data into a column-oriented storage format file with a strong schema format at the storage layer to accelerate queries. Despite this optimization, the semantics of JSON are preserved on the external interface. This solution allows users to maintain the ease of use of JSON while improving the performance of OLAP queries.

The diagram below illustrates that the data in each Hologres table on the same shard is stored in different files, with data in the same file being written at adjacent points in time. As a result, there may be structural differences between files in JSON scenarios. However, a single file can have a stable structure, allowing for the stable evolution of the JSON data structure.

Before delving into the implementation of JSON column-oriented storage in Hologres, let's briefly discuss the differences between the JSON and JSONB data types in Postgres.

From a user interface perspective, JSON and JSONB have very little difference. Most operators can be used interchangeably. The main distinction lies in the storage format:

1. The JSON type only checks whether the written data conforms to the JSON specification. The original JSON text is directly stored as a TEXT type without any optimization.

2. Based on JSON, JSONB optimizes the format of data. It stores the optimized binary format of the original JSON data. The optimization includes but is not limited to:

a) Remove redundant spaces from data.

b) Deduplicate the fields with the same name in the same path.

c) Sort, rearrange and organize fields in JSON data to accelerate query capability.

There are also some differences in terms of function coverage. For example, JSON cannot be directly cast into INT, Float, and Numeric types, while JSONB can. Therefore, JSONB is more comprehensive and easier to use in terms of overall syntax.

The current implementation of Hologres' JSON column-oriented storage solution is primarily based on the JSONB data type. The specific reasons are described in the following section.

The structure extraction of JSON data is mainly to determine the format of JSON data, including the specific fields of JSON and the data type of each field, which is used as the actual storage structure of the underlying column-oriented store file.

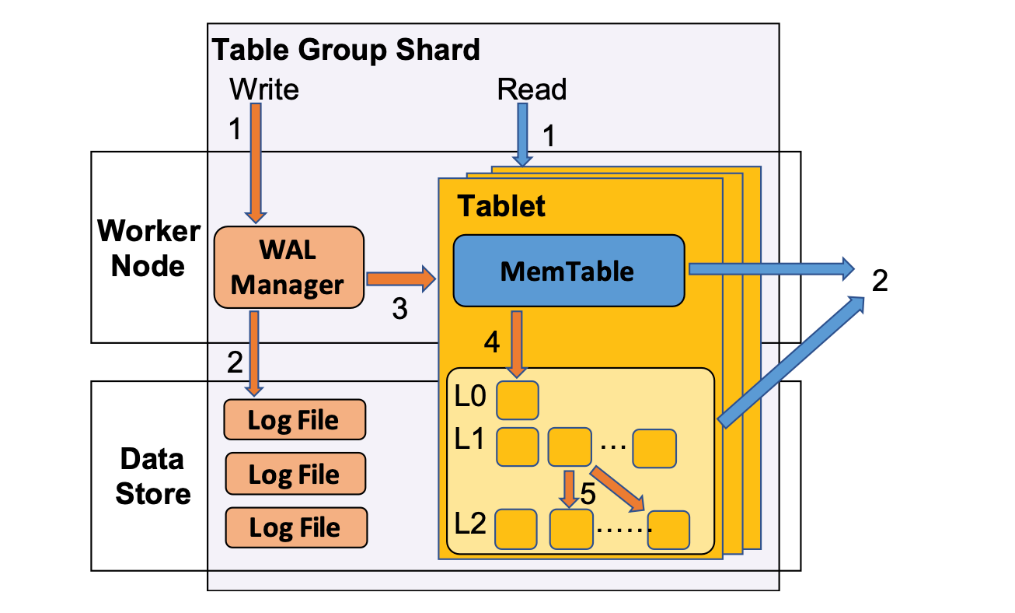

The Hologres data writing process is based on the Log-Structured Merge-Tree (LSM) architecture. When data is written to a Hologres table, the data will first be written to the MemTable. When a MemTable is full, it will be flushed to the file system in an asynchronous manner (step 4 in the following figure), and a new MemTable will be initialized. At the same time, there will be tasks at the backend to keep merging the files flushed to the file system (Compaction, step 5 in the following figure).

The structure extraction of JSON data mainly occurs in the flush and compaction phases.

When the MemTable is flushed, we will traverse all JSON data in the MemTable and record the fields that have appeared in each JSON and the data type of each field. After the traversal is completed, we can know which columns will be stored in this JSON data column and the type of each column.

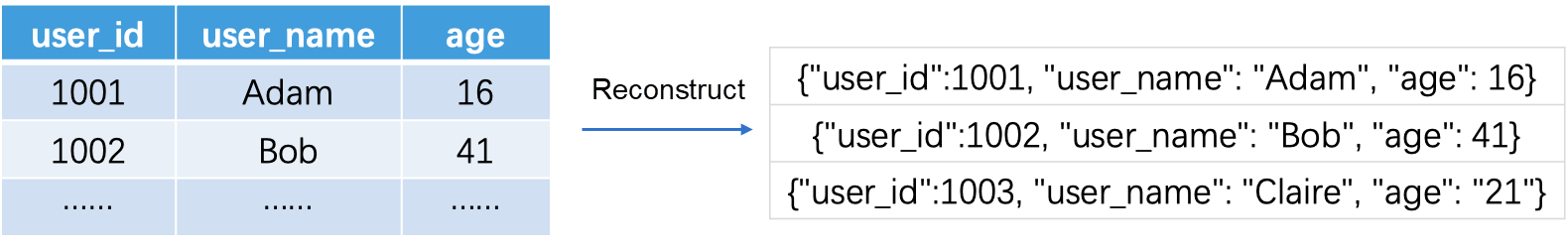

Take the following data as an example:

| {"user_id":1001, "user_name": "Adam", "gender": "Male", "age": 16} |

| {"user_id":1002, "user_name": "Bob", "gender": "Male", "age": 41} |

| {"user_id":1003, "user_name": "Clair", "gender": "Female", "age": 21} |

We can extract the following JSON format:

| Column name | Data type |

| user_id | INT |

| user_name | TEXT |

| gender | TEXT |

| age | INT |

In addition, in the process of traversing JSON, we will also perform type generalization. For example, if a row of data in the user_id field exceeds the INT type threshold, we will generalize the user_id column type to the BigINT type to be compatible with all data.

After extracting the JSON structure, we can write the data in MemTable to the file system, and the JSON column data will be split and written to the corresponding 4 columns.

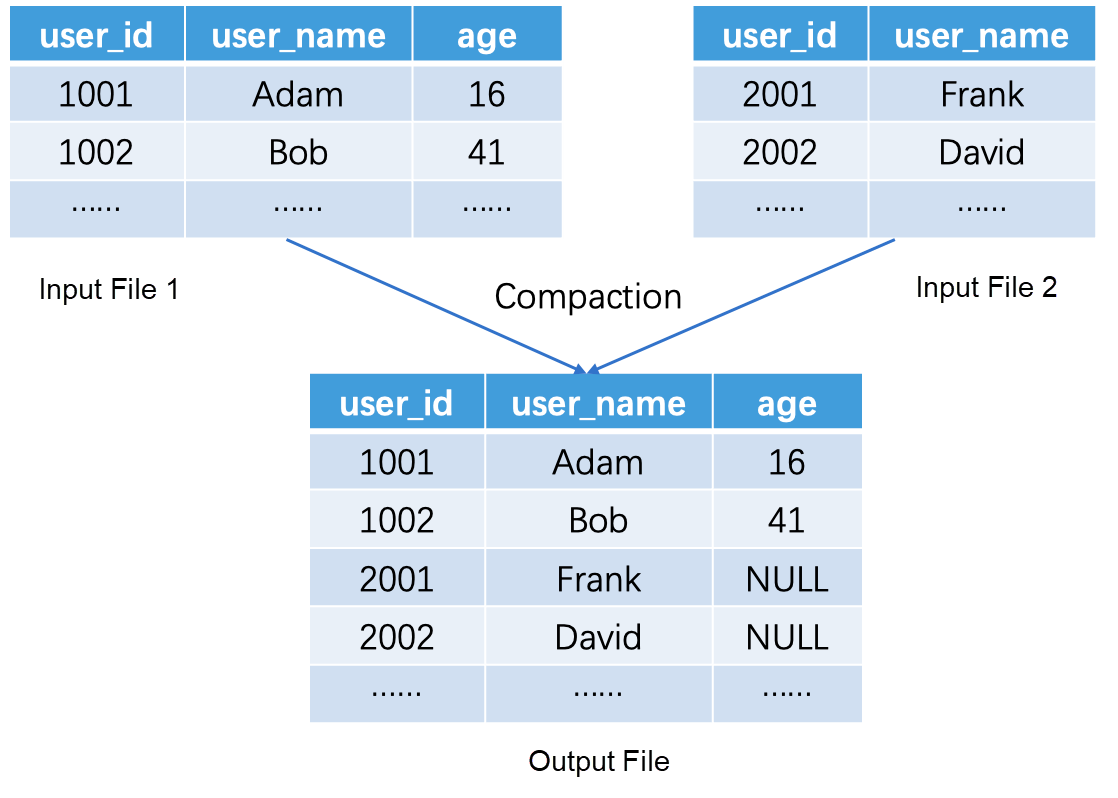

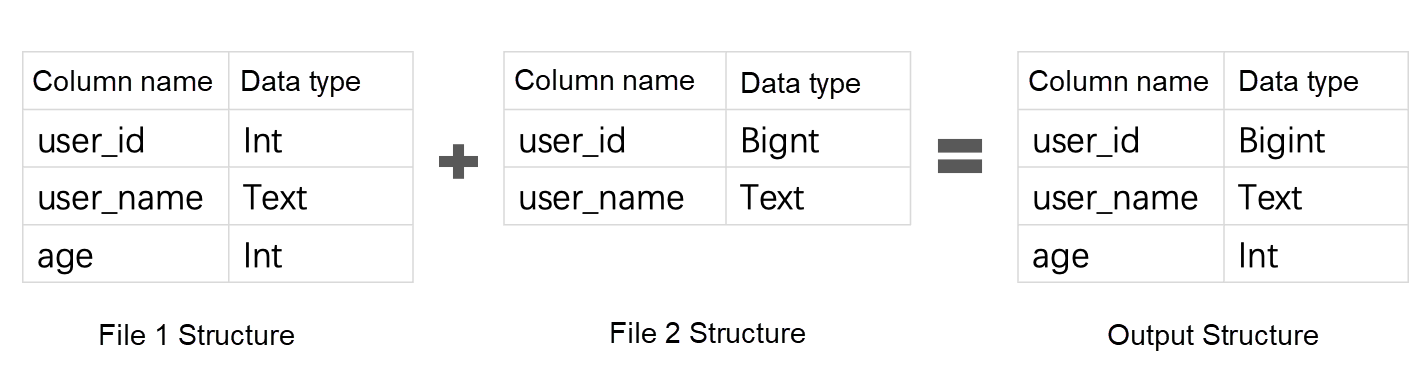

Compaction can merge multiple files into a larger file, which also involves extracting JSON structures.

Unlike Flush, because the input file of Compaction has already implemented the JSON column-oriented storage, we do not need to completely traverse the JSON data in all files to extract the structure in most cases but can directly derive the JSON format of the output file through the Meta information of the files by obtaining a union of the input columns of all files and generalizing the types of conflict columns.

Through the JSON data processing in the Flush and Compaction phases, we can implement the column-based data at the storage layer to accelerate subsequent queries.

As mentioned above, although the underlying storage of Hologres converts JSONB data into column-oriented storage, the user interface still uses the native JSONB query interface. However, due to the change in the underlying JSONB data format, if the query engine still regards the column-based data as the JSONB type, the query will inevitably fail (the actual input type of the data is inconsistent with the expected input type of the execution plan). Therefore, this requires adaptive query rewriting of our query engine.

Next, let's take a simple SQL example to explain the adaptive query rewriting involved in the query process:

CREATE TABLE TBL(json_data jsonb); -- Create table DDL

SELECT SUM((json_data->'quantity')::BIGINT) FROM TBL;In Hologres, the two most commonly used operators for the JSONB type are -> and ->>.

Therefore, the meaning of the above example is to read the quantity field in the json_data JSONB column, convert it to the BIGINT type, and then perform the SUM aggregation operation.

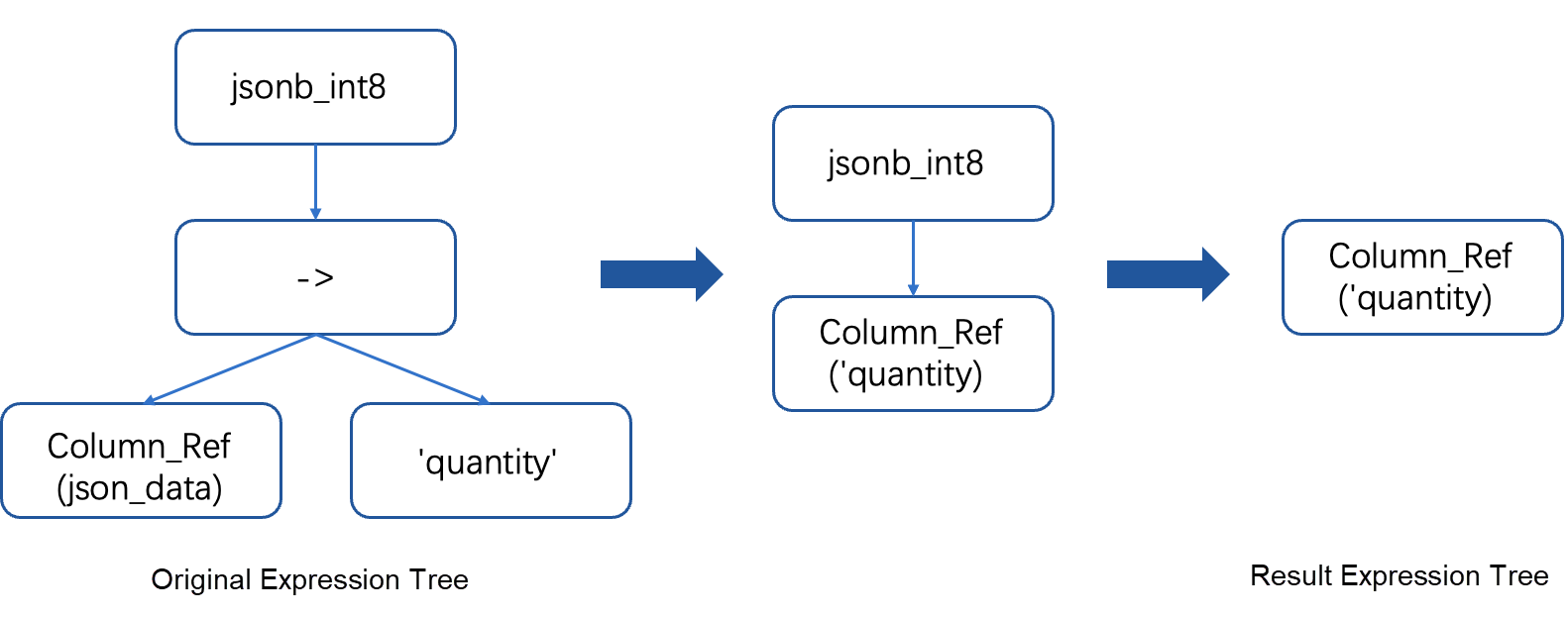

Therefore, in the physical execution plan, the Scan node will have the leftmost expression tree in the above figure. The root node represents the function that converts JSONB into BIGINT, and its child node table is the quantity field in the json_data column.

However, the underlying file stores the column-oriented storage data, and there is no longer a physical JSON column json_data. Therefore, we need to perform adaptive physical execution plan rewriting on the Scan node:

So why not use SQL Optimizer to rewrite the execution plan from the start?

The reason is that the optimizer does not know the true format of the JSONB column in the storage engine. For example, the type of the same column quantity in file A is INT, and in file B is TEXT. Therefore, the execution plans for different files may be different. SQL Optimizer cannot use a physical execution plan to express all possible situations, so it requires the execution engine to rewrite the execution plan adaptively.

Due to the ease of use of the JSON type, users can write any data that conforms to the JSON format to JSON. As a result, there are more dirty data in JSON compared with strong Schema types. This requires the JSON column-based solution of Hologres to be robust and tolerant of dirty data. Here, we mainly discuss two types of problems: inconsistent data types and data sparseness caused by incorrect field names.

First, let's discuss how to deal with inconsistent data types. Suppose we now have the following JSON data that needs column-oriented storage:

| {"user_id":1001, "user_name": "Adam", "gender": "Male", "age": 16} |

| {"user_id":1002, "user_name": "Bob", "gender": "Male", "age": 41} |

| {"user_id":1003, "user_name": "Claire", "gender": "Female", "age": "21"} |

It can be seen that the first two rows of data in the age column are of the INT type, but the data in the age column is of the TEXT type by the third row. At this time, we will generalize the type to the JSONB type we mentioned above:

| Column name | Data type |

| user_id | INT |

| user_name | TEXT |

| gender | TEXT |

| age | JSONB |

We can regard JSON as a recursively defined format. The values in the age field, such as 16, 41, and 21, are also JSON values (Object type), so we can perform such type generalization. After such generalization, the query performance for the age column will be slightly weaker than that without dirty data because, at the execution engine layer, JSONB's Cast operation cannot be directly omitted as mentioned in the previous section. But the overall performance is still far better than that without JSON column-oriented storage because we still only need to read the age column data, which saves a lot of I/O and computing operations.

Let's look at how to deal with sparse data. Generally, the cause of sparse data is that there is a problem with the logic of upstream data generation, generating a large number of non-duplicate field names, such as the following data:

| {"user_id":1001, "user_name": "Adam", "gender": "Male", "age": 16, "key_1": "1"} |

| {"user_id":1002, "user_name": "Bob", "gender": "Male", "age": 41, "key_2": "2"} |

| {"user_id":1003, "user_name": "Claire", "gender": "Female", "age": 21, "key_3": "3"} |

It can be seen that each row has a different and non-repetitive field. If we choose to extract columns key_1, key_2, and key_3, the data in these three columns will be very sparse, causing the number of columns in the overall file to expand greatly.

We choose to extract these sparse data separately into a special column (holo. remains), and the column is also of JSONB type. We will store all data whose frequency of occurrence is lower than a certain threshold (configurable) in this field:

| Column name | Data type |

| user_id | INT |

| user_name | TEXT |

| gender | TEXT |

| age | INT |

| holo.remaining | JSONB |

It can be considered that what is stored in the remaining column is a subset of the entire JSON data. This column and other column-based data can be constructed into the original complete JSON value.

The performance of querying the remaining columns is also slightly weaker than that of querying column-based columns. This is because what is stored is JSONB data, which includes all sparse fields. Therefore, you need to search for the specified fields in the JSONB data during the query, producing additional overhead. However, as the data stored in this column is sparse, the probability of the query hitting the remaining column is not very high, making it tolerated.

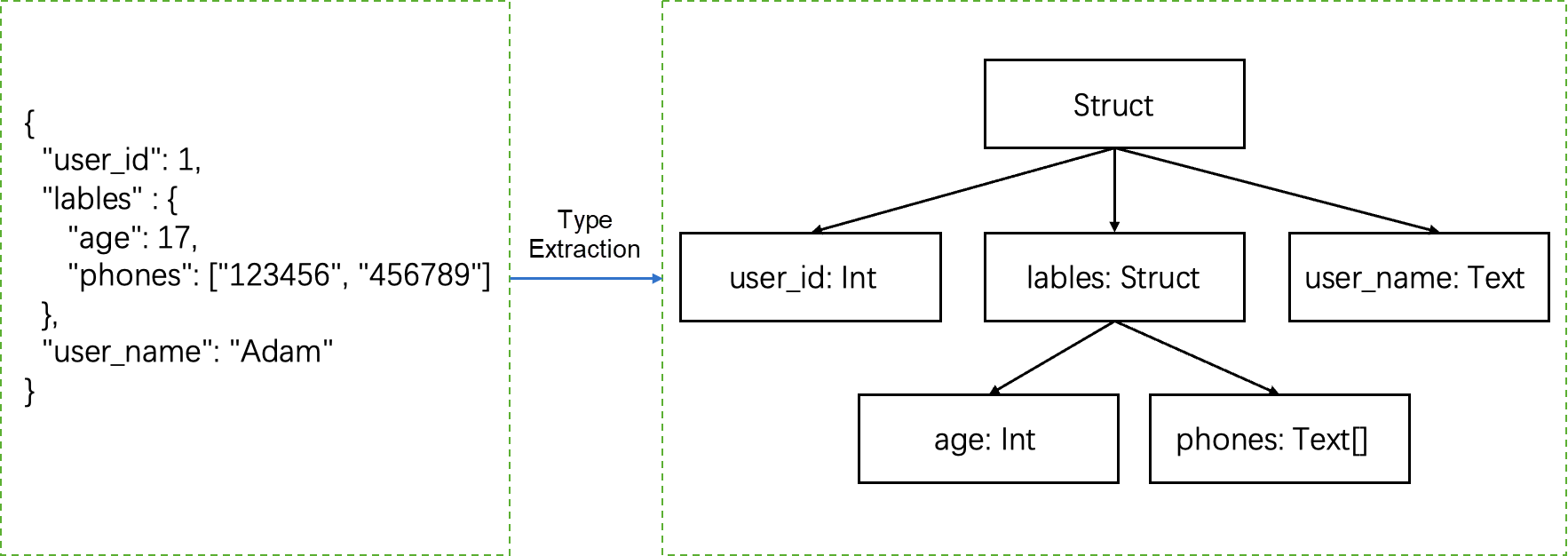

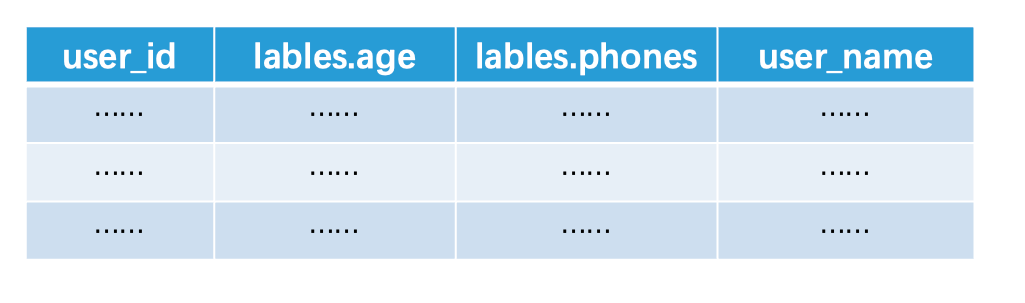

The JSON examples given above are simple and flat data. In fact, JSON data that contains nested structures is common. Next, I will briefly introduce how Hologres processes complex JSON structures.

For nested structures, we can regard JSON data as a tree, and the data is stored in leaf nodes (without complex nested structures). For example, the following JSON data will extract the tree structure shown in the right figure:

Because non-leaf nodes do not store data, the above tree structure can be flattened to obtain the following table structure during storage. In addition, our metadata will record the depth information of the nodes to ensure that there will be no column name ambiguity or conflict when flattening.

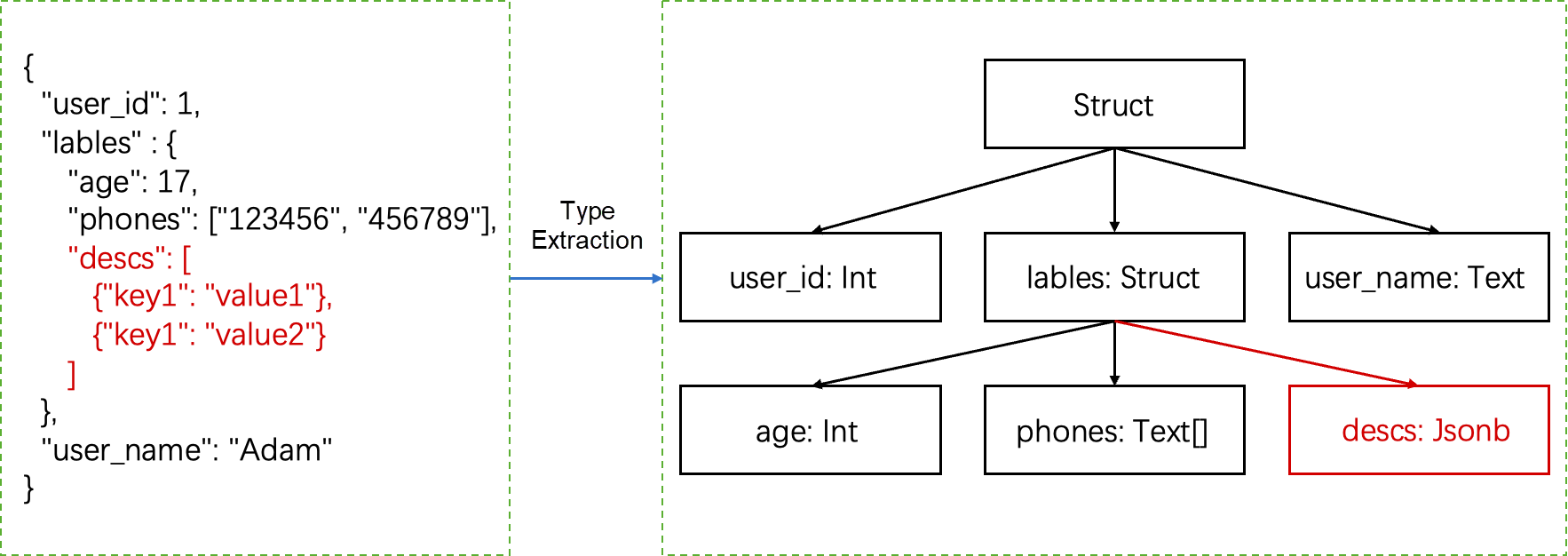

First of all, we need to make it clear that when Hologres extracts JSON structure, it only extracts the following basic types:

The JSONB type here is implemented as a bottom type when the JSON structure can't be extracted into the first six basic types after we try to generalize types. Complex nested structures are included. For example, the JSON data in the following line will extract the structure shown in the right figure. For the descs field, because non-basic type data is nested in the array, the type has degenerated into JSONB type.

Therefore, we should notice that for this type of data that degenerates to JSONB type, the performance of the operation on this column will be inferior to those that are extracted into basic type data, but the overall performance will still be much better than that of the non-column JSON solution. This is because the JSONB column only stores a subset of the complete JSON data, making the I/O and calculation involved in querying this column much smaller.

The column-based JSON solution of Hologres has a good optimization effect in most scenarios. The main point is that in scenarios where the query results need to bring out complete JSON columns, the performance of column-based JSON degrades compared with that of directly storing the original JSON format. See the following SQL:

CREATE TABLE TBL(pk int primary key, json_data jsonb); -- Create table DDL

SELECT json_data FROM TBL WHERE pk = 123;

SELECT * FROM TBL limit 10;The reason is that the underlying layer has converted JSON data into column-oriented storage. Therefore, when you need to query the complete JSON data, you need to reassemble the column-oriented data into the original JSON format:

This step will generate a large amount of I/O and conversion overhead. If a large amount of data is involved and the number of columns is large, it may even become a performance bottleneck. Therefore, we recommend that you do not enable column-based optimization in this scenario.

As mentioned above, when the column-based JSON data encounters sparse fields, we will merge these fields into a special column called holo.remaining to avoid column expansion.

Therefore, if the user's JSON data contains sparse fields, for example, each field appears only once in extreme cases, then our column-based JSON will not work because all fields are sparse, and all fields will be merged into the holo.remaining field. In this case, the column-based JSON fails to work. Therefore, the query performance will not be improved.

We used the TPCH dataset to test the optimization effect of the Hologres column-based JSON solution on the storage space. The specific test comparison solution is to build the TPCH tables into a JSONB column format and then compare the effect before and after opening the column solution (several tables with small data volume are omitted):

-- The table that stores the original JSONB data

CREATE TABLE CUSTOMER(data jsonb);

CREATE TABLE LINEITEM(data jsonb);

CREATE TABLE ORDERS(data jsonb);

CREATE TABLE PART(data jsonb);

CREATE TABLE PARTSUPP(data jsonb);

-- The table that enables column-based JSON optimization

CREATE TABLE CUSTOMER_COLUMNAR(data jsonb);

ALTER TABLE CUSTOMER_COLUMNAR ALTER COLUMN data SET (enable_columnar_type = on);

CREATE TABLE LINEITEM_COLUMNAR(data jsonb);

ALTER TABLE LINEITEM_COLUMNAR ALTER COLUMN data SET (enable_columnar_type = on);

CREATE TABLE ORDERS_COLUMNAR(data jsonb);

ALTER TABLE ORDERS_COLUMNAR ALTER COLUMN data SET (enable_columnar_type = on);

CREATE TABLE PART_COLUMNAR(data jsonb);

ALTER TABLE PART_COLUMNAR ALTER COLUMN data SET (enable_columnar_type = on);

CREATE TABLE PARTSUPP_COLUMNAR(data jsonb);

ALTER TABLE PARTSUPP_COLUMNAR ALTER COLUMN data SET (enable_columnar_type = on);The TPCH 100GB test set was used for verification. The results are as follows:

| Table name | Original JSONB storage (GB) | Enable column-based JSONB storage optimization (GB) | Data compression ratio |

| CUSTOMER | 4.4 | 2.14 | 2.06 |

| LINEITEM | 43 | 18 | 2.39 |

| ORDERS | 14 | 4.67 | 3 |

| PART | 5.2 | 1.3 | 4.3 |

| PARTSUPP | 7.8 | 5.8 | 1.34 |

As you can see, after column-based JSONB optimization is enabled, the storage space of each table is significantly reduced. The reason is:

1. The field names in the original JSON data will no longer be stored, but only the specific values corresponding to each field need to be stored. For example, the following is a row of data in the CUSTOMER table that has been converted into JSON. The strings such as c_name, c_phone, and c_acctbal in the data do not need to be stored after the column-based JSONB optimization is enabled.

{"c_name": "Customer#002662050", "c_phone": "23-793-162-6786", "c_acctbal": 4075.57, "c_address": "paJBRFkD N368pMSvGsYivWyRAs", "c_comment": "ly. fluffily even packages along the blithely even deposits should sleep slyly above the", "c_custkey": 2662050, "c_nationkey": 13, "c_mktsegment": "BUILDING"}2. The data type of each column is the same, and column-oriented storage can have a better data compression ratio.

In some datasets, we have observed that the actual storage space does not decrease after the column-based optimization is enabled on some datasets. This situation is usually caused by poor compression due to the sparse fields in JSON data, severe expansion of the number of columns, and TEXT type of each column after column optimization. Therefore, the above test is only a theoretical value. The actual user data is various, and the actual storage effect after compression still depends on the actual situation.

Thanks to the underlying column-oriented storage format, for those queries that can take advantage of JSON column pruning, their performance will usually be improved several times, even more than ten times in specific scenarios.

Here, we use the Github dataset (see the SQL and DDL appendix at the end of the article) to verify the query improvement of the Hologres column-based JSON solution. This dataset records various user behavior logs on Github, including code reviews and comments. This dataset is a JSON-formatted dataset. We have selected a total of 172,309,645 rows of data from 2015, imported them into the same Hologres instance, and compared the query performance optimized by using native JSON type, native JSONB type storage, and enabling column-based JSONB:

| Query | JSON(ms) | JSONB(ms) | Column-based JSONB (ms) | Performance improvement of columnar JSONB over native JSONB |

| Query1 | 26426 | 15831 | 50 | 31562% |

| Query2 | 28657 | 19285 | 320 | 5926% |

| Query 3 | 26900 | 17062 | 869 | 1863% |

| Query 4 | 57356 | 44314 | 1430 | 2999% |

As you can see, the query performance after column-based JSONB is optimized is qualitatively improved compared with the original JSONB format. However, it should be noted that the performance benefits may vary greatly due to different datasets and query modes, and the specific effect still depends on the actual situation. In the future, we will introduce the implementation cases of the Hologres column-based JSON solution in different scenarios and their corresponding performance benefits.

Alibaba's search and recommendation division utilizes Hologres to provide real-time data warehousing solutions for Alibaba Group's e-commerce businesses, including Taobao, Taobao Special Edition, and Ele.me. These solutions include ad hoc multi-dimensional analysis and A/B testing.

In business scenarios such as search and recommendation, there are various multi-value attributes such as user tags, product tags, seller tags, and algorithm bucket numbers. Let's take user tags as an example. The profile attributes of users are not fixed in the business, and there may be a need to add new attributes for observation at any time. Storing new user attributes in separate fields each time can result in inefficiencies in the real-time link. Prior to using column-based JSONB, the Text array type was used to store multi-value fields.

Here are examples of data and queries:

The above SQL statement is used to filter out data that corresponds to the 'layerA:1' and 'layerA:2' buckets, and calculate the corresponding PV (Page Views).

Although this solution has been in stable use for several years, it is not the most efficient solution. There is still room for improvement in terms of storage costs and computing performance. Additionally, we believe that using JSONB to store various attributes is the most intuitive and natural approach for the entire data model.

The following are examples of data and queries after the JSONB solution is used:

| second_timestamp | pk | UID | ... | bts_tags |

| 2022-11-11 00:00:00+08 | 858739e966f7ebd1cfaa49c564741360 | 1 | {"layerA":"1", "layerB":"11", "layerC":"111"} | |

| 2022-11-11 00:00:01+08 | e7e3d71fac5a92b87c3278819f6aff8c | 2 | {"layerA":"1", "layerB":"12", "layerC":"112"} | |

| 2022-11-11 00:00:01+08 | 828f07dc16f4fa2f4be5ba3a9d38f12a | 3 | {"layerA":"2", "layerB":"11", "layerC":"111"} |

SELECT

'layerA:' || (bts_tags ->> 'layerA') AS "bts_tags",

COALESCE(sum(scene_count), 0) AS "pv"

FROM

wireless_pv

WHERE

second_timestamp >= TIMESTAMPTZ '2022-11-11 00:00'

AND second_timestamp <= TIMESTAMPTZ '2022-11-11 23:59'

AND bts_tags ->> 'layerA' IN ('1', '2')

GROUP BY

'layerA:' || (bts_tags ->> 'layerA');As you can see, after the switch to JSONB, both data and queries are more intuitive. In 2022, the migration of the Hologres column-based JSONB solution was completed during the Double 11, and after the migration, the storage cost was reduced, and the query performance was improved.

| Table name | Average latency of queries in the Array format (ms) | Average latency of queries in the JSONB format (ms) | JSONB performance improvement |

| wireless_pv | 5975.5 | 1553.8 | 280% |

| wireless_dpv | 862.5 | 455.8 | 89% |

| Table name | Storage capacity of the Array format | Storage capacity of the JSONB format | Storage reduction (%) |

| wireless_pv | 35TB | 15TB | -57% |

| wireless_dpv | 3472GB | 1562GB | -55% |

The column-based JSON solution of Hologres provides excellent OLAP query performance while maintaining the simplicity and flexibility of JSON. This allows users to fully explore semi-structured data on Hologres and even enables Hologres, a comprehensive real-time data warehouse, to handle some functionalities of a data lake. We will continue to optimize the column-based JSON implementation in the future to deliver even better performance. Stay tuned.

1. Github dataset: https://www.gharchive.org/

2. Query performance testing DDL

CREATE TABLE gh_2015(gh_jsonb jsonb);

-- Enable column-based optimization

ALTER TABLE gh_2015 ALTER COLUMN gh_jsonb SET (enable_columnar_type = on);3. Query performance testing Query

--Query 1

SELECT COUNT(1) FROM gh_2015 WHERE gh_jsonb->>'type' = 'WatchEvent';

--Query 2

SELECT gh_jsonb->'repo'->>'name', count(1) AS stars FROM gh_2015 WHERE gh_jsonb->>'type' = 'WatchEvent' GROUP BY gh_jsonb->'repo'->>'name' ORDER BY stars DESC LIMIT 50

--Query 3

SELECT to_date((substring((gh_jsonb ->> 'created_at')FROM 1 FOR 8) || '01'), 'YYYY-MM-DD') AS event_month,

sum(coalesce((gh_jsonb -> 'payload' -> 'issue' ->> 'number'), (gh_jsonb -> 'payload' -> 'pull_request' ->> 'number'),

(gh_jsonb -> 'payload' ->> 'number'))::int) AS closed

FROM gh_2015

WHERE (gh_jsonb ->> 'type') = 'IssuesEvent'

AND (gh_jsonb -> 'payload' ->> 'action') = 'closed'

AND (gh_jsonb -> 'repo' ->> 'id')::bigint = 41986369

GROUP BY 1;

--Query 4

SELECT event_month,

all_size

FROM

(SELECT event_month,

COUNT(*) OVER (PARTITION BY event_month) AS all_size,

ROW_NUMBER() OVER (PARTITION BY event_month) AS row_num

FROM

(SELECT (gh_jsonb ->> 'type') AS TYPE,

(gh_jsonb -> 'repo' ->> 'id')::bigint AS repo_id,

(gh_jsonb -> 'payload' ->> 'action') AS action,

to_date((substring((gh_jsonb ->> 'created_at')

FROM 1

FOR 8) || '01'), 'YYYY-MM-DD') AS event_month

FROM gh_2015) t

WHERE TYPE = 'PullRequestEvent'

AND repo_id = 41986369

AND action = 'opened') sub

WHERE row_num = 1

ORDER BY event_month;Learn more about Hologres: https://www.alibabacloud.com/product/hologres

44 posts | 1 followers

FollowAlibaba Cloud MaxCompute - December 22, 2021

Apache Flink Community - August 14, 2025

Alibaba Cloud Big Data and AI - December 29, 2025

Alibaba Cloud MaxCompute - September 7, 2022

Rupal_Click2Cloud - December 15, 2023

Apache Flink Community - April 24, 2024

44 posts | 1 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn More Personalized Content Recommendation Solution

Personalized Content Recommendation Solution

Help media companies build a discovery service for their customers to find the most appropriate content.

Learn MoreMore Posts by Alibaba Cloud Data Intelligence