As memory sizes on modern hardware grow, the overhead of address translation has become a problem that cannot be ignored. Although huge pages can effectively reduce the burden on the MMU, designing an efficient huge page management solution remains a difficult challenge for developers. According to the kernel-level page access analysis and the hardware performance counter data in the recent Ingens research paper, the huge page management policy in Linux has shortcomings in areas such as address translation performance, page fault latency, and memory bloat.

The paper "HawkEye: Efficient Fine-grained OS Support for Huge Pages", published at ASPLOS 2019, proposed a new huge page management solution called HawkEye. The management algorithms of HawkEye mainly include asynchronous page pre-zeroing, deduplication of zero-filled pages, fine-grained page access tracking, and address translation overhead measurement through hardware performance counters.

The measurements in the study show that HawkEye outperforms Linux across a variety of workloads while offering more features.

The overhead of address translation is a significant problem for applications with large memory footprints. In virtualized scenarios with two-layer address translation, the MMU overhead is especially high. Modern architectures mitigate this with technologies such as multi-level TLBs and multi-level caches, and they support huge pages of different sizes.

However, this makes it more necessary for the OS to carefully choose a proper huge page policy for different workloads.

Despite extensive hardware support, the performance of huge pages for some applications is still not satisfactory, mainly because of the huge page management algorithms in the software layer. The huge page management algorithm in Linux must strike a complicated balance among address translation overhead, page fault latency, memory bloat, the overhead of the algorithm itself, and fairness.

HawkEye has noticed problems of some current huge page management solutions and provides a new solution to these problems.

First, let's see three representative huge page solutions: Linux, FreeBSD, and Ingens by Kwon and others.

Transparent huge pages in Linux request huge pages by using the two following methods:

In Linux, background merging starts only when system memory is highly fragmented and page faults can no longer be served directly with huge pages. Once started, khugepaged selects processes and merges their pages on a first-come, first-served (FCFS) basis. The next process is selected only after the previous process has been fully merged.

FreeBSD supports huge pages of different sizes. Unlike Linux, FreeBSD reserves a contiguous physical block at page fault time. However, FreeBSD merges small pages into a huge page only when all the 4 KB pages in a contiguous huge page region (for example, a 2 MB huge page) have been assigned. In addition, under high memory pressure, huge pages that contain released small pages are split, and the released small pages are returned to the OS (they are no longer managed as huge pages).

Therefore, FreeBSD achieves relatively high memory utilization, but it also causes more page faults and higher MMU overhead.

The authors of the Ingens paper pointed out the disadvantages of the Linux and FreeBSD huge page management solutions and claimed that Ingens strikes a good balance among these problems. Generally, Ingens has the following features:

As a new OS-level huge page solution, HawkEye provides a simple and efficient algorithm to achieve the following goals:

Tests on a range of typical applications with different huge page requirements show that HawkEye performs much better than existing solutions. At the same time, the additional overhead is negligible (a 3.4% increase in CPU overhead in the worst case).

Generally, the design of huge page management solutions involves the consideration of and balance among the following problems. Compared with existing OS solutions, HawkEye can solve these problems in a more efficient way.

The biggest problem with designing a huge page management solution is how to properly balance between the address translation overhead and memory bloat. Although the synchronous huge page allocation in Linux minimizes the MMU overhead, it will lead to significant memory bloat when an application uses only a small part of a huge page. The conservative approach adopted by FreeBSD (merging into huge pages only when all small pages are used) solves the memory bloat problem, but at the cost of the overall performance.

Ingens is like the combination of Linux and FreeBSD. It uses a concept called FMFI (Free Memory Fragmentation Index) to measure the system memory burden. When FMFI is smaller than 0.5 (low memory fragmentation level), Ingens acts like Linux and assigns huge pages to applications whenever possible to improve the performance. When FMFI is greater than 0.5 (high memory fragmentation level), Ingens switches to a conservative policy: It merges small pages into huge pages to mitigate the system memory bloat only when 90% of all the small pages are used.
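The two-mode policy described above can be sketched as a small decision function. This is only an illustration of the rule as stated in this article, not Ingens code: the function name and constants are hypothetical, and treating an FMFI of exactly 0.5 as the conservative case is an assumption, because the text only covers values below and above 0.5.

```python
FMFI_THRESHOLD = 0.5             # fragmentation boundary quoted in the text
UTILIZATION_THRESHOLD = 0.9      # 90% of the small pages must be in use
SMALL_PAGES_PER_HUGE_PAGE = 512  # 2 MB / 4 KB on x86


def ingens_should_promote(fmfi: float, used_small_pages: int) -> bool:
    """Decide whether a 2 MB region should be backed by a huge page."""
    if not 0.0 <= fmfi <= 1.0:
        raise ValueError("fmfi must be in [0, 1]")
    if not 0 <= used_small_pages <= SMALL_PAGES_PER_HUGE_PAGE:
        raise ValueError("used_small_pages must be in [0, 512]")
    if fmfi < FMFI_THRESHOLD:
        # Low fragmentation: act like Linux and use huge pages whenever possible.
        return True
    # High fragmentation (assumption: FMFI == 0.5 is handled here as well):
    # promote only regions whose small pages are almost all in use.
    utilization = used_small_pages / SMALL_PAGES_PER_HUGE_PAGE
    return utilization >= UTILIZATION_THRESHOLD
```

For example, with an FMFI of 0.8, a region with 461 of 512 small pages in use would be promoted, while a region with 460 would not.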

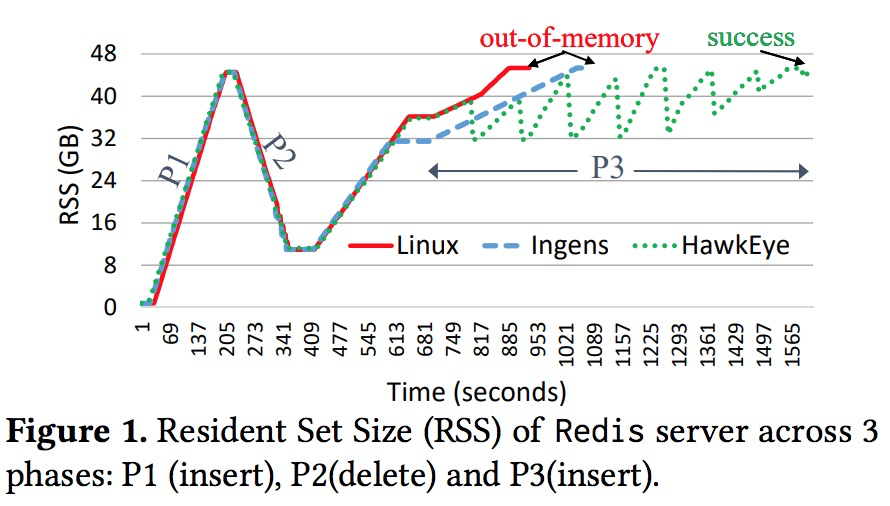

However, Ingens is not the best solution, because the huge pages it assigns while memory fragmentation is low still cause memory bloat. A simple Redis key-value store test verifies this problem:

Use Redis to perform the following test on a system with 48 GB memory:

The test result is as follows:

As shown in the figure, after the deletion operation is executed during P2, the RSS of the processes is quickly reduced to about 11 GB. At this point, the khugepaged kernel thread merges many idle pages into huge pages. During P3 phase, before the RSS reaches 32 GB, Ingens and Linux are assigning as many huge pages as possible to the application. After the RSS reaches 32 GB, Ingens takes a conservative approach to avoid further memory bloat.

However, both Ingens and Linux eventually run out of memory (OOM). Checking the valid data entries reveals 28 GB of memory bloat in Linux (only 20 GB of valid data) and 20 GB of memory bloat in Ingens (28 GB of valid data). Although Ingens tries to limit memory bloat, memory that has already been wasted cannot be reclaimed.

If Ingens is configured in a completely conservative way, the memory bloat problem is solved, but performance drops significantly even when plenty of memory is available. An ideal policy delivers high application performance while memory is available and recovers efficiently from memory bloat when memory runs low, just like HawkEye in the figure.

When the OS assigns pages to applications, usually page content is completely cleared (except in some scenarios). Page zeroing takes a very large proportion of the page fault time, especially when huge pages are used. According to the test, clearing the content of a 4 KB page accounts for 25% of the total page fault time and clearing a 2 MB page accounts for 97% of the total page fault time.

When page fault latency is too high, users will become aware of the latency and have an awful user experience with interactive applications.

To solve this problem, Ingens assigns only small pages (4 KB) when a page fault occurs and lets the khugepaged background thread asynchronously merge small pages into huge pages. However, this gives up an important advantage of huge pages: a reduced number of page faults.

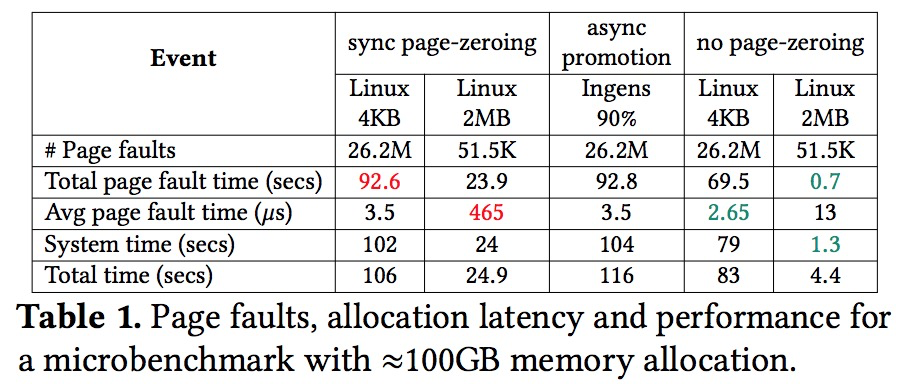

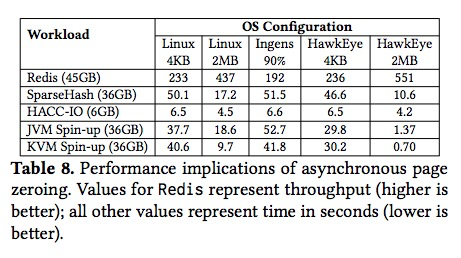

Let's perform a simple benchmark test: request 100 GB memory, write one byte into each 4 KB page and release the memory. The following table compares different huge page solutions.

As shown in the table, Linux with transparent huge pages (Linux 2 MB) incurs about 500 times fewer page faults than Linux using only 4 KB pages, which yields nearly four times better performance in this benchmark, even though its average page fault time is about 133 times higher (465 µs vs. 3.5 µs). In Ingens, however, the average page fault time in this scenario is basically the same as with Linux 4 KB.
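The figures quoted for this benchmark can be cross-checked with simple arithmetic. The sketch below assumes one page fault per page touched and assumes that fault handling dominates the run time; both are simplifications, and the variable names are illustrative only. The two average fault latencies are the ones quoted in the text.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB

TOTAL_BYTES = 100 * GIB    # memory touched by the benchmark

# Assumption: exactly one page fault per page that is touched.
faults_4k = TOTAL_BYTES // (4 * KIB)
faults_2m = TOTAL_BYTES // (2 * MIB)
fault_ratio = faults_4k // faults_2m      # how many times fewer faults with 2 MB pages

AVG_FAULT_US_4K = 3.5      # average fault latency quoted for Linux 4 KB
AVG_FAULT_US_2M = 465.0    # average fault latency quoted for Linux 2 MB
latency_ratio = AVG_FAULT_US_2M / AVG_FAULT_US_4K

# Assumption: total time is approximated by (number of faults) x (average latency).
time_4k_s = faults_4k * AVG_FAULT_US_4K / 1e6
time_2m_s = faults_2m * AVG_FAULT_US_2M / 1e6
speedup = time_4k_s / time_2m_s

print(f"faults: {faults_4k} vs {faults_2m} ({fault_ratio}x fewer)")
print(f"latency ratio: {latency_ratio:.1f}x, estimated speedup: {speedup:.2f}x")
```

Under these assumptions the fault count drops by a factor of 512, each fault becomes roughly 133 times slower, and the net speedup is a little under four, which is consistent with the numbers in the paragraph above.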

It can be inferred that the page fault latency and the number of page faults can be reduced if page zeroing is not required when page faults occur on huge pages. HawkEye achieves this goal by implementing an asynchronous page zeroing thread.

Because memory is fragmented, the OS needs to assign huge pages fairly among multiple processes. Ingens treats contiguous physical memory as a system resource and uses the proportion of each process's memory that has been merged into huge pages as its fairness metric. However, this fairness policy has a major disadvantage.

Consider two processes (P1 and P2) with the same proportion of huge pages. The TLB burden of P1 is much higher than that of P2 (for example, P1 accesses many 4 KB small pages, whereas P2 mainly accesses one or a few 4 KB small pages). In this case, merging into huge pages matters more for P1 than for P2. However, Ingens treats the two processes equally.

HawkEye considers it more efficient to evaluate the system memory burden based on the MMU overhead. According to HawkEye, a fairness algorithm needs to balance the MMU overhead of individual processes and make each process have similar MMU overhead. For example, if the MMU overhead of P1 and P2 is 30% and 10% respectively, then more huge pages should be assigned to P1 until its MMU overhead is down to 10%. This policy allows the entire system to have optimal performance.
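The P1/P2 example can be illustrated with a toy simulation. This is a sketch under strong assumptions, not HawkEye's implementation: the function name is hypothetical, and the idea that each granted huge page lowers a process's MMU overhead by a fixed number of percentage points (`gain_per_huge_page`) is invented purely to make the example concrete.

```python
def distribute_huge_pages(
    mmu_overhead: dict[str, float],
    gain_per_huge_page: float,
    budget: int,
) -> tuple[dict[str, int], dict[str, float]]:
    """Grant `budget` huge pages, always to the process with the highest overhead."""
    overhead = dict(mmu_overhead)            # work on a copy
    grants = {name: 0 for name in overhead}
    for _ in range(budget):
        # Pick the process that currently suffers most from address translation.
        target = max(overhead, key=overhead.get)
        # Hypothetical model: each huge page removes a fixed amount of overhead.
        overhead[target] = max(0.0, overhead[target] - gain_per_huge_page)
        grants[target] += 1
    return grants, overhead


grants, overhead = distribute_huge_pages({"P1": 30.0, "P2": 10.0}, 5.0, 4)
print(grants, overhead)
```

With these inputs, all four huge pages go to P1, and both processes end up at 10% MMU overhead, which mirrors the example in the text.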

Finally, within a single process, both Linux and Ingens scan and merge small pages into huge pages from the low end to the high end of the virtual address space. However, this method is unfair to applications whose hot spots lie in the high address range. Because the hot spots of applications are usually distributed across different VMAs, this practice easily leads to unfairness.

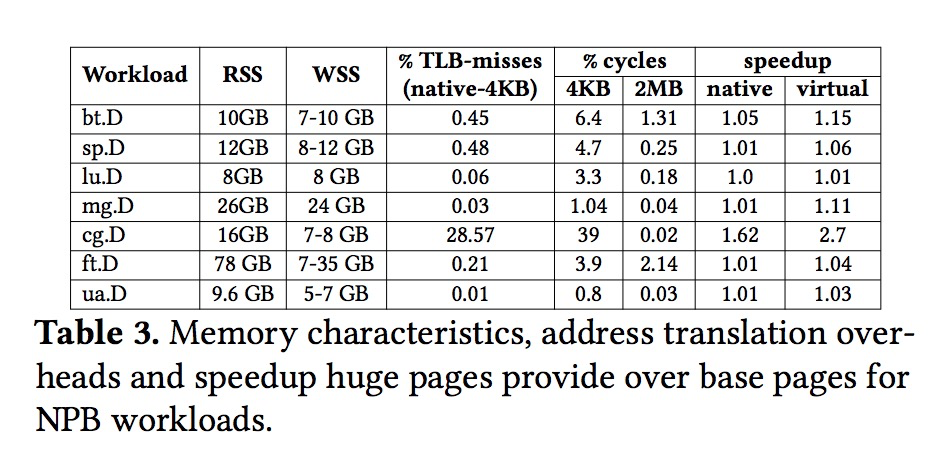

Currently, a common approach to evaluating the address translation overhead is WSS (working set size). A task with higher WSS is generally considered to have higher MMU and performance overhead. However, the test result shows that this is not always true for actual applications:

As shown in the table, a task with a high WSS (for example, mg.D) can have much lower MMU overhead than a task with a low WSS (for example, cg.D). This is closely related to the memory access patterns of the processes.

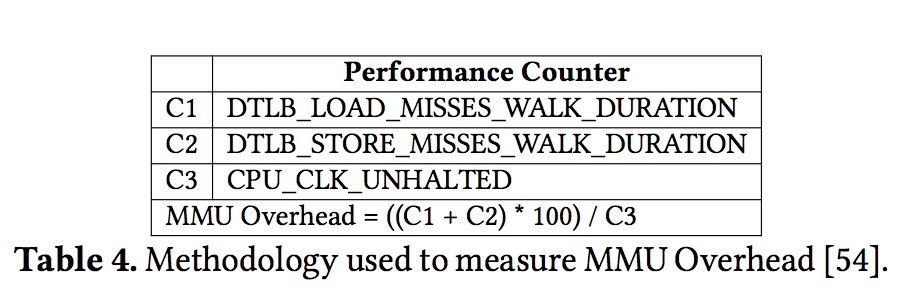

In practice, it is unclear how the OS should measure MMU overhead, because it depends on the complex interaction between applications and the underlying hardware. However, the preceding test shows that MMU overhead may not be proportional to the amount of memory used by a process. Therefore, where possible, it is more effective to use hardware performance counters to evaluate the TLB overhead directly. HawkEye proposed the following algorithm to calculate MMU overhead:
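The formula itself is not reproduced in this text. As a rough sketch, assuming that MMU overhead is expressed as the percentage of CPU cycles spent on page-table walks after TLB misses, the calculation could look like the following. The function and parameter names are hypothetical, and the hardware events that supply these cycle counts differ between CPU models.

```python
def mmu_overhead_percent(
    load_walk_cycles: int,
    store_walk_cycles: int,
    total_cycles: int,
) -> float:
    """Share of CPU cycles spent walking page tables, in percent.

    Assumption: the three inputs are read from hardware performance
    counters over the same sampling interval.
    """
    if total_cycles <= 0:
        raise ValueError("total_cycles must be positive")
    if load_walk_cycles < 0 or store_walk_cycles < 0:
        raise ValueError("cycle counts must be non-negative")
    return 100.0 * (load_walk_cycles + store_walk_cycles) / total_cycles
```

For instance, 200 load-walk cycles and 100 store-walk cycles out of 1,000 total cycles would correspond to an MMU overhead of 30%.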

However, these performance counters cannot be used in some cases, for example, in virtualized scenarios, where most hypervisors do not provide TLB-related hardware performance counters to guests. To solve this problem, HawkEye provides two algorithms:

HawkEye-PMU, which measures MMU overhead based on hardware performance counters, and HawkEye-G, which estimates MMU overhead based on memory access patterns.

The preceding table shows the main design objectives of HawkEye. HawkEye is based on four critical factors:

(1) Use asynchronous page pre-zeroing to solve high latency in case of huge page faults.

(2) Detect and deduplicate zero-filled small pages within allocated huge pages to solve the memory bloat problem.

(3) Select better memory ranges for merging into huge pages based on access tracking at the granularity of huge page regions. The tracking metrics include recency, frequency, and access coverage (that is, how many small pages are accessed in a huge page).

(4) Achieve balanced fairness among processes based on MMU overhead.

Asynchronous page pre-zeroing is a well-known mechanism, which is often implemented as a rate-limited background thread that uses a bounded share of CPU. This mechanism was proposed as early as 2000. However, Linux developers do not think that this mechanism will improve performance, mainly for the following two reasons:

To achieve asynchronous pre-zeroing, HawkEye uses two linked lists to manage free pages in the buddy allocator: zero and non-zero. Huge pages are assigned preferentially from the zero list, and a background thread periodically zeroes pages in the non-zero list by using non-temporal stores to avoid cache pollution.

Pre-zeroing is not required for page cache and copy-on-write pages. This type of memory requests can be processed preferentially by assigning pages from the no-zero linked list.
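The two-list scheme can be sketched in user space as follows. This is a toy model rather than kernel code: the class and method names are hypothetical, pages are modeled as `bytearray` objects, ordinary memory writes stand in for non-temporal stores, and the `limit` argument is an assumed way of rate-limiting the background work.

```python
from collections import deque

PAGE_SIZE = 4096


class PreZeroAllocator:
    """Toy free-page allocator with a zeroed list and a non-zeroed list."""

    def __init__(self, free_pages: int) -> None:
        # Every free page starts out dirty (filled with 0xff for illustration).
        self.non_zero = deque(
            bytearray(b"\xff" * PAGE_SIZE) for _ in range(free_pages)
        )
        self.zero = deque()

    def zero_some(self, limit: int) -> int:
        """Background step: zero at most `limit` pages and return the count."""
        zeroed = 0
        while self.non_zero and zeroed < limit:
            page = self.non_zero.popleft()
            page[:] = bytes(PAGE_SIZE)   # stands in for non-temporal stores
            self.zero.append(page)
            zeroed += 1
        return zeroed

    def alloc(self, needs_zero: bool):
        """Return (page, zeroed_synchronously)."""
        if needs_zero and self.zero:
            # Fast path: the page was already zeroed in the background.
            return self.zero.popleft(), False
        if needs_zero and self.non_zero:
            # Slow path: no pre-zeroed page is left, so zero at fault time.
            page = self.non_zero.popleft()
            page[:] = bytes(PAGE_SIZE)
            return page, True
        if not needs_zero:
            # Page cache and copy-on-write pages are overwritten anyway,
            # so prefer dirty pages and keep zeroed ones for later faults.
            source = self.non_zero or self.zero
            if source:
                return source.popleft(), False
        raise MemoryError("no free pages")
```

A fault that needs a zeroed page pays the zeroing cost only when the zero list is empty, while requests that do not need zeroed memory drain the non-zero list first.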

In short, according to the HawkEye team, page pre-zeroing is a topic that deserves renewed attention given today's hardware and workload requirements. Their test data demonstrates the effectiveness of this mechanism.

In general, application memory consists mainly of zero-filled pages requested through conventional interfaces (for example, malloc); the rest is file cache or copy-on-write pages. Transparent huge pages are mainly used for anonymous pages. HawkEye preferentially allocates a huge page to the process when the first page fault occurs. However, when system memory pressure is too high, HawkEye scans the small pages in each huge page. If the proportion of zero-filled small pages exceeds a certain threshold, the huge page is split into small pages, and all the zero-filled pages are merged into a single page by using copy-on-write. In some scenarios, this leads to more page faults, but it mitigates memory bloat significantly.

To recover from memory bloat, HawkEye uses two memory allocation watermarks. When the amount of allocated system memory exceeds a high threshold (for example, 85%), a background recovery thread is activated and runs periodically until system memory usage drops below 70%. On each run, the recovery thread preferentially scans the process with the lowest MMU overhead.
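The two watermarks form a simple hysteresis loop, which can be sketched as follows. The class and function names are hypothetical; the 85% and 70% defaults are the example values given in the text, and memory usage is passed in as a fraction between 0 and 1.

```python
class BloatRecoveryTrigger:
    """Hysteresis between a high and a low memory-usage watermark."""

    def __init__(self, high: float = 0.85, low: float = 0.70) -> None:
        if not 0.0 <= low < high <= 1.0:
            raise ValueError("expected 0 <= low < high <= 1")
        self.high = high
        self.low = low
        self.active = False

    def update(self, used_fraction: float) -> bool:
        """Feed the current memory usage; return whether recovery should run."""
        if not self.active and used_fraction > self.high:
            self.active = True      # crossed the high watermark: start recovery
        elif self.active and used_fraction < self.low:
            self.active = False     # dropped below the low watermark: stop
        return self.active


def recovery_scan_order(mmu_overhead: dict[str, float]) -> list[str]:
    """Processes ordered from lowest to highest MMU overhead."""
    return sorted(mmu_overhead, key=mmu_overhead.get)
```

Because the stop threshold is lower than the start threshold, the recovery thread does not flap on and off when usage hovers around a single value.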

This policy ensures that the processes that need huge pages the least are processed first. It is consistent with HawkEye's huge page allocation policy, in which huge pages are preferentially assigned to the processes with the highest MMU overhead. When scanning a small page, the thread can stop at the first non-zero byte; all 4,096 bytes need to be scanned only for zero-filled pages. As a result, the overhead of the scanning thread depends on the amount of reclaimable memory rather than on the total memory of the system, so the approach also suits systems with large memory.
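The early-exit scan can be sketched as follows. The function names are hypothetical, small pages are modeled as `bytes` objects, and `min_zero_fraction` stands in for the split threshold, whose actual value is not given in this text.

```python
PAGE_SIZE = 4096


def scan_small_page(page: bytes) -> tuple[bool, int]:
    """Return (is_zero_filled, bytes_read), stopping at the first non-zero byte."""
    for offset, value in enumerate(page):
        if value != 0:
            return False, offset + 1
    return True, len(page)


def scan_huge_page(small_pages: list[bytes]) -> tuple[int, int]:
    """Return (number of zero-filled small pages, total bytes read)."""
    zero_pages = 0
    bytes_read = 0
    for page in small_pages:
        is_zero, cost = scan_small_page(page)
        zero_pages += int(is_zero)
        bytes_read += cost
    return zero_pages, bytes_read


def should_split(small_pages: list[bytes], min_zero_fraction: float) -> bool:
    """Split when the share of zero-filled small pages exceeds the threshold."""
    zero_pages, _ = scan_huge_page(small_pages)
    return zero_pages / len(small_pages) > min_zero_fraction
```

In this model, a page whose first byte is non-zero costs a single byte read, so the scanning cost is dominated by the pages that turn out to be reclaimable.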

The mechanism for merging zero-filled small pages is similar to the current KSM mechanism in Linux kernel, but KSM in Linux kernel and huge page management currently belong to two independent systems. HawkEye uses some techniques in KSM to quickly find and efficiently merge zero-filled pages.

At present, the huge page merging mechanism of the system scans from the low address space to the high address space of the process, which is inefficient because the hot memory areas of the process are not all within the low address range.

HawkEye defines a metric called access-coverage. By periodically scanning and counting the page table access bits of the small pages in a huge page, it determines how many small pages have been accessed within a certain time window and selects hot regions for merging, which can significantly improve the TLB benefit of each merge.

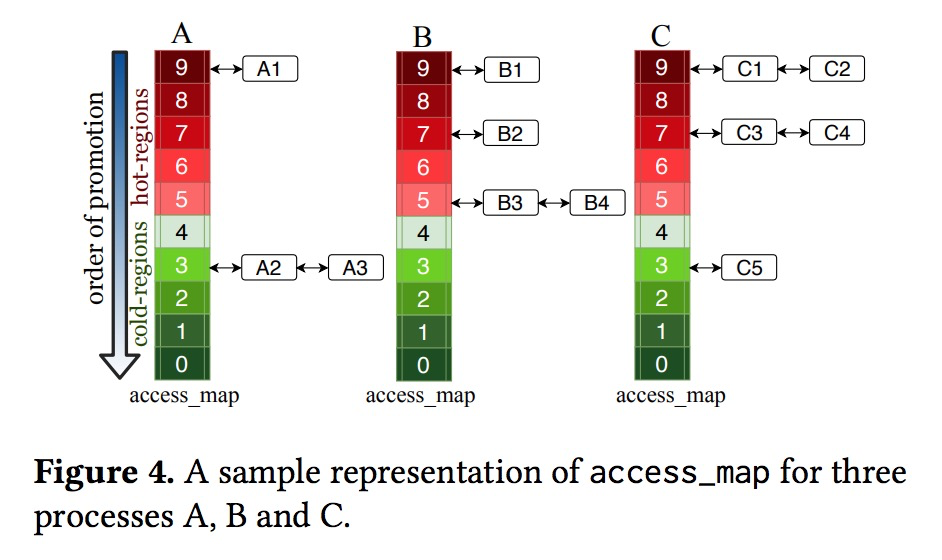

HawkEye uses a per-process data structure called access_map to manage access coverage. The access_map is an array, and each array element is called a bucket (each bucket is actually a linked list). Take the 2 MB huge page on x86 as an example. Each huge page consists of 512 small pages. Each huge page region is placed in a bucket according to how many of its small pages (0-512) have the access bit set in their page table entries: if 0-49 small pages have been accessed, the region is put into bucket 0; if 50-99 have been accessed, it is put into bucket 1; and so on. The following figure shows the access_map status of three processes, A, B, and C:

During a scan, if the access coverage of a huge page increases (for example, from 30 small pages to 55 small pages), it is moved from bucket 0 to bucket 1 (an upgrade) and placed at the head of that bucket's linked list. Conversely, when it is moved from bucket 1 to bucket 0 (a downgrade), it is placed at the end of the bucket's linked list. Huge page merging traverses each linked list from head to end, which ensures that the most recently accessed regions are merged first. In this way, HawkEye takes both access frequency and recency into account: huge pages that have not been accessed recently or have low access coverage are moved to a lower-level bucket or to the end of the linked list of their current bucket.
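The bucket bookkeeping described above can be sketched as follows. The class and method names are hypothetical. Two details are assumptions not stated in the text: coverage values of 500-512 are clamped into bucket 9, and a region seen for the first time is inserted at the head of its bucket. The sketch also leaves a region in place when its bucket does not change.

```python
from collections import deque

BUCKET_WIDTH = 50      # bucket 0 holds coverage 0-49, bucket 1 holds 50-99, ...
NUM_BUCKETS = 10       # assumption: bucket 9 is the highest level
MAX_COVERAGE = 512     # small pages per 2 MB huge page on x86


def bucket_index(coverage: int) -> int:
    """Map an access coverage (0-512 small pages) to a bucket number."""
    if not 0 <= coverage <= MAX_COVERAGE:
        raise ValueError("coverage must be in [0, 512]")
    return min(coverage // BUCKET_WIDTH, NUM_BUCKETS - 1)


class AccessMap:
    """Per-process map from bucket number to an ordered list of regions."""

    def __init__(self) -> None:
        self.buckets = [deque() for _ in range(NUM_BUCKETS)]
        self.location = {}   # region name -> current bucket number

    def update(self, region: str, coverage: int) -> None:
        new = bucket_index(coverage)
        old = self.location.get(region)
        if old == new:
            return                                  # no bucket change
        if old is not None:
            self.buckets[old].remove(region)
        if old is None or new > old:
            self.buckets[new].appendleft(region)    # upgrade: go to the head
        else:
            self.buckets[new].append(region)        # downgrade: go to the end
        self.location[region] = new

    def merge_candidates(self) -> list[str]:
        """Regions from the highest bucket down, head to end within a bucket."""
        return [r for bucket in reversed(self.buckets) for r in bucket]
```

Using a deque per bucket keeps the head and end insertions cheap; removing a region from the middle is linear in this toy model, which a linked list with direct node pointers would avoid.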

In the access-coverage policy, the regions with the highest MMU pressure are merged into huge pages first, which is consistent with the fairness goal discussed earlier: the process with the highest TLB pressure is given priority. The policy always selects the bucket with the widest access coverage among all processes (such as bucket 9 in the figure above) for merging first. If multiple processes have regions at the same highest level, fairness is guaranteed by serving them in round-robin order. As shown in the figure above, the merging sequence for processes A, B, and C is A1, B1, C1, C2, B2, C3, C4, B3, B4, A2, C5, and A3.
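The selection order can be sketched with a static snapshot of three access maps. The function name is hypothetical, and so are the bucket numbers in the example: the figure is not reproduced here, so the placement of regions was chosen only so that the result matches the sequence quoted in the text. A real implementation would also re-scan and update the buckets between merges, which this sketch ignores.

```python
def merge_order(access_maps: dict[str, dict[int, list[str]]]) -> list[str]:
    """Highest bucket first; round-robin across processes within a bucket level."""
    levels = sorted(
        {level for buckets in access_maps.values() for level in buckets},
        reverse=True,
    )
    order = []
    for level in levels:
        # One queue per process for this bucket level, in head-to-end order.
        queues = [list(buckets.get(level, [])) for buckets in access_maps.values()]
        while any(queues):
            for queue in queues:
                if queue:
                    order.append(queue.pop(0))
    return order


# Hypothetical bucket placement for processes A, B, and C.
example = {
    "A": {9: ["A1"], 6: ["A2"], 5: ["A3"]},
    "B": {9: ["B1"], 8: ["B2"], 7: ["B3", "B4"]},
    "C": {9: ["C1", "C2"], 8: ["C3", "C4"], 6: ["C5"]},
}
print(merge_order(example))
```

With this placement the function yields A1, B1, C1, C2, B2, C3, C4, B3, B4, A2, C5, A3.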

However, the actual MMU overhead may not match the overhead estimated from access coverage. Therefore, the HawkEye-PMU algorithm first selects the process with the highest MMU overhead as measured by hardware counters and then merges huge pages in the bucket order described above. If multiple processes have similar MMU overhead, they are processed in round-robin order.

Currently, the HawkEye algorithm has the following unsolved problems:

(1) Selecting the memory pressure thresholds. Currently, static thresholds are used, such as 85% and 70% of system memory. If memory pressure keeps fluctuating, static thresholds can be too conservative or too aggressive. Ideally, these thresholds should be adjusted dynamically according to the system status.

(2) Huge page starvation. Because the HawkEye algorithm is driven by MMU overhead, some processes may never be given huge pages (because their MMU pressure is not high enough). This problem can be addressed by limiting the number of huge pages used by each process through mechanisms such as Linux cgroups.

(3) Other algorithms. For example, some management algorithms that have not been discussed (such as the overhead of khugepaged merge/split mechanism itself), and merge algorithms that can minimize the tracking overhead of page table entries. Not much in-depth research has been done to apply HawkEye in these fields for the time being.

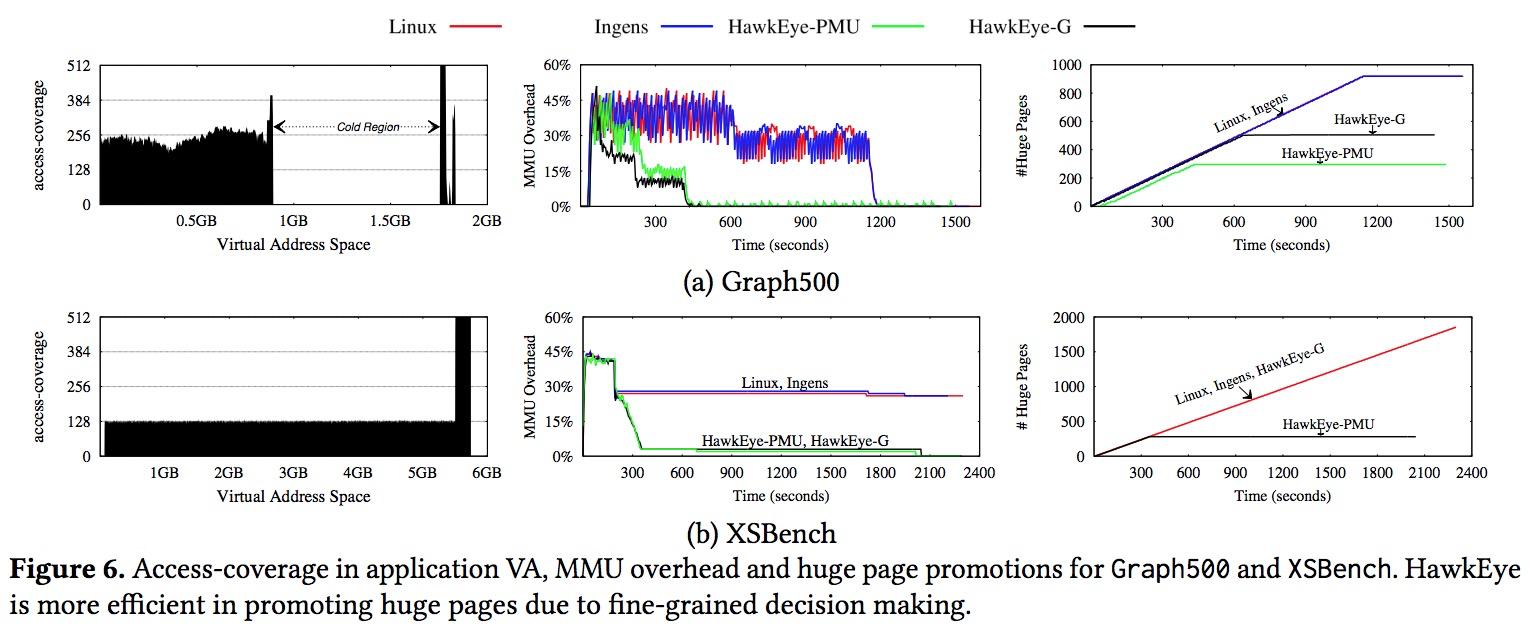

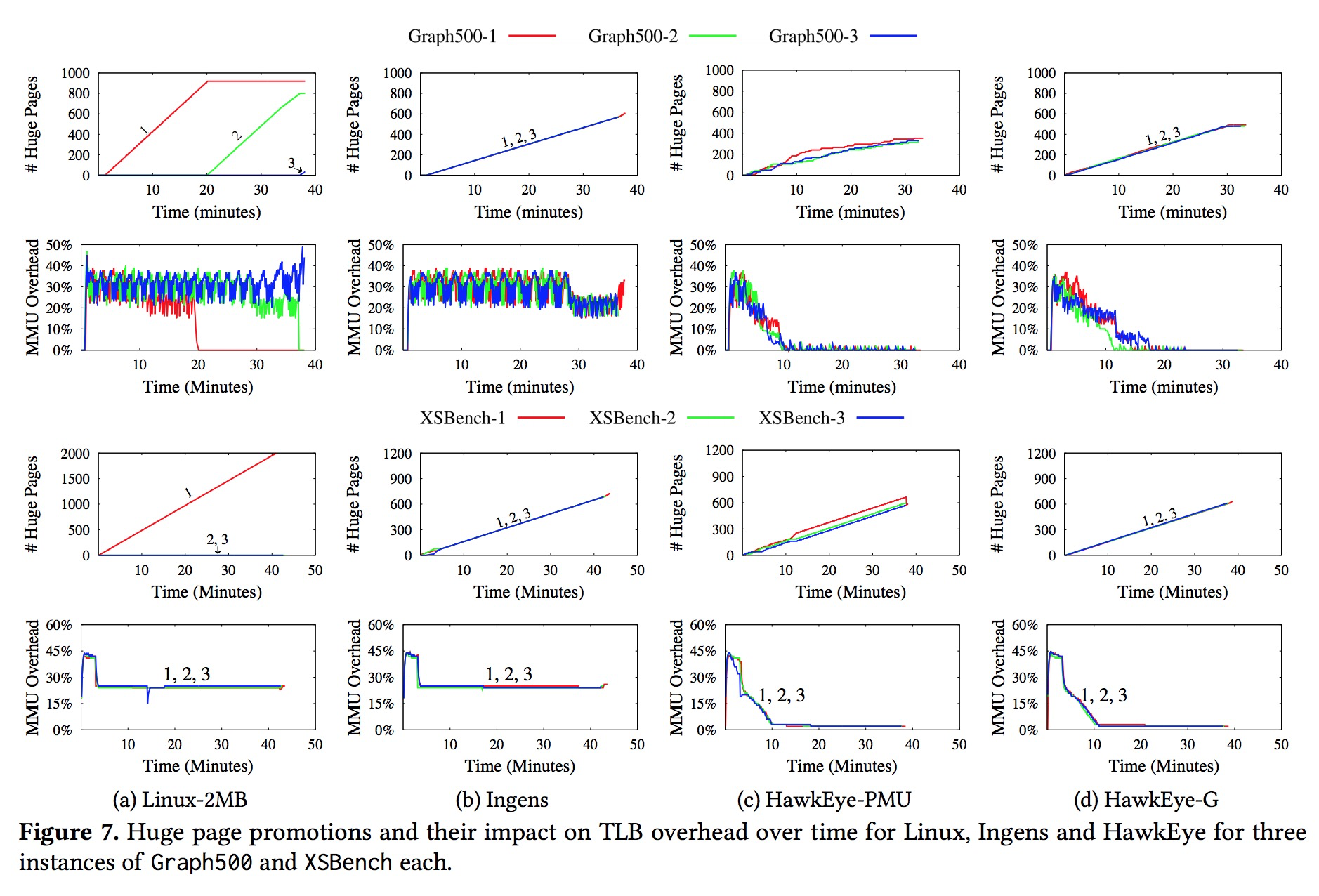

The figure above shows the execution results of the Graph500 and XSBench applications under different algorithms. The first column shows that the hot memory regions of an application are not always in the low address space. The second and third columns show the effect of huge page merging. With both Linux and Ingens, it took nearly 1,000 s to lower the MMU overhead, whereas HawkEye achieved significant results in only about 300 s.

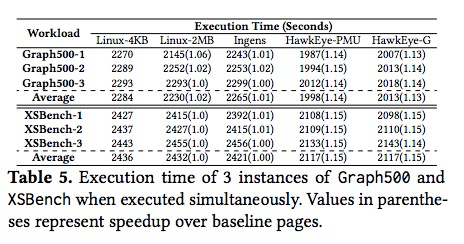

Three Graph500 and three XSBench applications are run at the same time. The following table shows the overall running duration. The running duration of the HawkEye algorithm is shorter than that of Linux and Ingens, and the performance is higher.

The figure below shows the huge page usage and MMU overhead of each process during the preceding test. With Linux, huge page allocation across processes is unfair and highly random, whereas Ingens and HawkEye distribute huge pages more fairly. In terms of MMU overhead, HawkEye has a clear advantage.

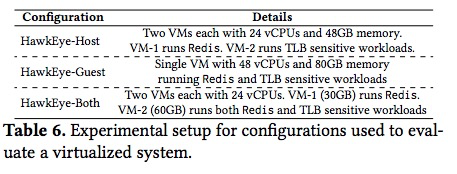

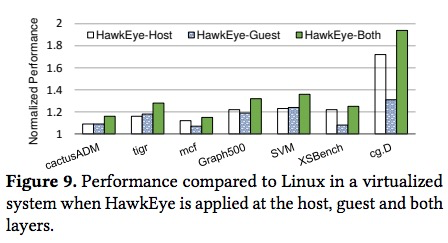

The following figure shows that HawkEye improves performance by about 18-90% compared with Linux in virtualization scenarios. For some applications (such as cg.D), the improvement is more pronounced in virtualized environments than on bare metal, because handling a guest TLB miss involves multiple layers of MMU overhead, which gives the HawkEye algorithm more room for improvement.

Test Configuration

Test Performance

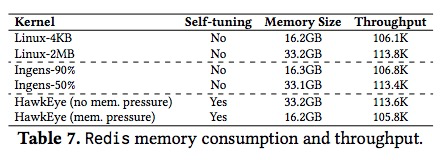

The following table shows the balance effect between memory bloat and performance in the Redis scenario. When the memory pressure is low, HawkEye takes up more memory (equivalent to that of Linux-2M) to provide higher performance, while when the memory pressure is high, HawkEye can eliminate the memory bloat of Redis, and reduce it to the level of Linux when using only 4 KB small pages (no memory bloat).

In 4 KB scenarios, HawkEye's speedup of page faults is less pronounced, because page zeroing accounts for a small proportion of the page fault time.

The first row in the table below shows the throughput of Redis. We can see that the throughput of HawkEye is about 1.26 times higher than that of Linux-2M in the 2 MB huge page scenario.

The other rows show execution time. On the SparseHash test, HawkEye-2M runs about 1.62 times faster than Linux-2M.

In the virtualization scenario, the spin-up duration is strongly related to page faults. With huge pages, HawkEye's spin-up can be about 13 times faster than that of Linux, but only about 1.2 times faster with 4 KB pages.

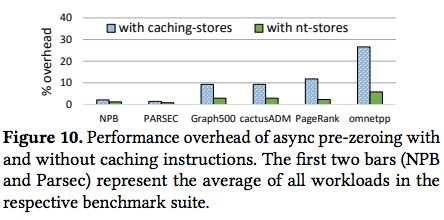

The following table shows the performance overhead caused by the asynchronous pre-zeroing thread in multiple test sets. The overhead can be significantly reduced by using non-temporal store instructions (which bypass the cache). In the omnetpp test, nt-stores reduce the overhead from 27% to 6%, and the average overhead in common scenarios is below 5%.

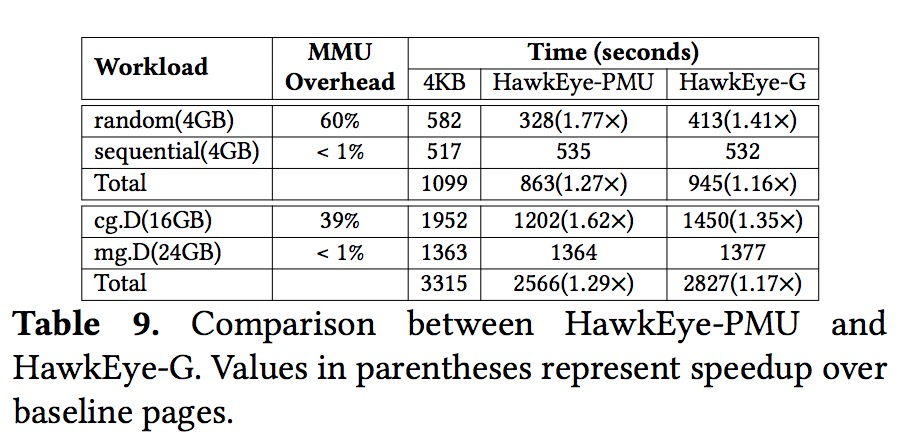

HawkEye-PMU computes the MMU overhead from hardware performance counters, which is more accurate than the software estimation of HawkEye-G. HawkEye-G bases its estimate on page access coverage, but applications with the same coverage can be either TLB sensitive or TLB insensitive. In this test, two tasks run simultaneously on one system: a TLB-sensitive task (such as cg.D) and a TLB-insensitive task (such as mg.D). HawkEye-PMU accurately identifies the task with the higher MMU overhead, whereas the access-based estimate of HawkEye-G may deviate. In the worst case, HawkEye-PMU is 36% better than HawkEye-G. How to close the gap between the two algorithms in software is left to future work.
