By Baitao, Yueyi, Jingzhou, Hongcheng, Yingzhi, and Kante

This article introduces the Tmall Genie M laboratory's research on gesture recognition as an interaction capability. It covers our exploration of both business applications and algorithms.

"Gestures are the most natural form of human communication. Hardware is the only limitation that prevents us from controlling our devices well." Here, the hardware limitation refers to the additional depth sensors that traditional gesture recognition algorithms require. Thanks to the continuous development of adaptive artificial intelligence (AI) and edge computing over the past decade, gesture recognition has gradually become possible.

We may see more functions operated with gestures in smartphones, tablets, desktop computers, laptops, smartwatches, smart televisions, and Internet-of-Things (IoT) devices.

This year, we have already seen such a trend. Technology behemoths have launched their own gesture recognition products. Among them, Google provided gesture interactions on its mobile phones and smart speakers. Apple has submitted a patent regarding the application of gestures on smart speakers. Indeed, gestures are the most natural method of human-computer interaction. Now, imagine the following scenarios:

In a word, you are the only interface you need.

We belong to the Tmall Genie M laboratory, which is mainly responsible for the visual algorithms of Tmall Genie. Our main research direction is visual algorithms for human-computer interaction, including gesture recognition, body recognition, and multi-modal visual speech interaction.

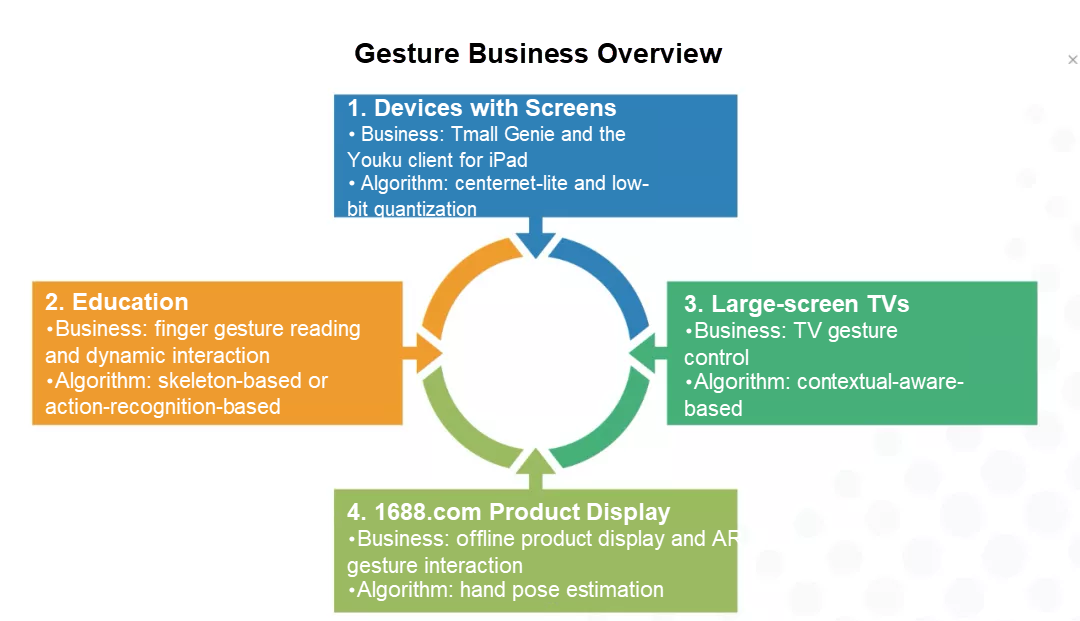

Last year, we launched an ultra-lightweight gesture recognition algorithm based on Tmall Genie smart speakers. This year, we have gone further by exploring the following technologies, business, and algorithms.

This year, we worked with partners at Youku to bring single-point gestures to the Youku client for iPad.

A quote from users: "a magical tool to watch a TV series while eating"

Users spontaneously uploaded an introduction video after the feature went live on Youku. The usage it shows matches the scenarios and pain points we expected.

In recent years, more smart TVs (smart screens) have entered our homes. According to the forecast by the Ministry of Industry and Information Technology, the market penetration rate of smart TVs is expected to exceed 90% by 2020. In addition to popularity, a powerful interaction capability is a requisite feature of a smart home interface. Smart TVs have become another important interface for the smart home IoT. Due to their large screens, gesture interaction is more user-friendly.

The further we push, the greater the challenges we face. Compared with mobile devices such as Tmall Genie CC or the iPad, developing gesture algorithms for smart TVs involves the following challenges:

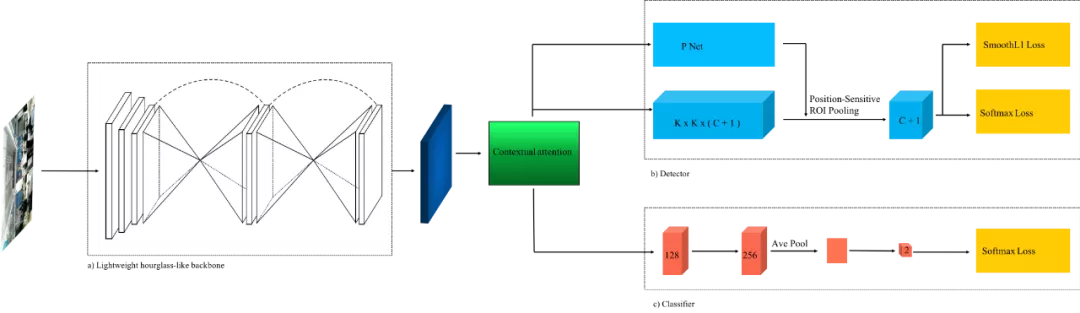

To address the preceding challenges, after exploring and researching the algorithms, we proposed Contextual-attention-guided Fast Tiny Hand Detection and Classification.

The large screen solution: Contextual-attention-guided Fast Tiny Hand Detection and Classification

1) Lightweight hourglass-like backbones

The lightweight hourglass module downsamples the input while preserving as much fine detail as possible, and produces feature maps with high-level semantic information. This is conducive to detecting tiny hands.

2) Contextual attention

From three to five meters away, a human hand occupies an extremely small proportion of the pixels in the picture. Although very small, a hand is close to certain parts of the body, such as the wrist, arm, and face, and it may be similar in color to these parts. The body and these body parts are larger than the hand, so they provide additional clues that make small hands easier to detect. Based on this, we use similarity context and semantic context as contextual attention to guide the network to obtain semantic information from outside the hand area and enhance detection performance.
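The similarity-context idea can be illustrated with a small sketch. This is not the network described above: it assumes plain cosine similarity and a softmax (the function names, the temperature value, and the three-dimensional feature vectors are all hypothetical) and only shows how features from nearby, similar-looking regions could be weighted and added to a candidate hand feature.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def context_attention(query, context, temperature=0.1):
    """Return softmax attention weights of `query` over `context` features
    and the attention-weighted sum of the context features."""
    scores = [cosine(query, c) / temperature for c in context]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    pooled = [sum(w * c[i] for w, c in zip(weights, context))
              for i in range(len(query))]
    return weights, pooled

# Hypothetical features: a candidate hand region and three context regions.
hand = [0.9, 0.1, 0.0]
regions = {
    "wrist": [0.8, 0.2, 0.1],
    "face": [0.7, 0.3, 0.0],
    "sofa": [0.0, 0.1, 0.9],
}
weights, pooled = context_attention(hand, list(regions.values()))
# Residual-style enhancement: add the pooled context to the hand feature.
enhanced = [h + p for h, p in zip(hand, pooled)]
```

In this toy example almost all of the attention weight falls on the wrist and face regions, which resemble the hand, and very little on the unrelated background region.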

Haier TV gestures

Anyone who has put AI algorithms into production has run into all kinds of practical problems.

We went through the same process after we launched gesture recognition on Tmall Genie and Youku. To improve our algorithm, we took the following actions:

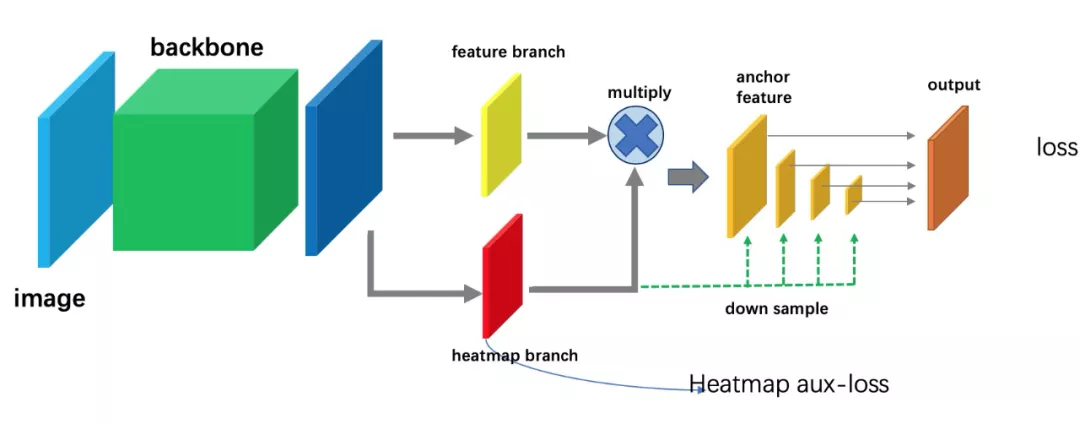

Based on the anchor-free solution, we adopted a more efficient algorithm framework by assisting the anchor solution with heatmap.

Because computing power varies across devices, such as Tmall Genie speakers and IoT visual modules, on-device gesture recognition has stricter requirements. To improve the gesture recognition algorithms, we proposed centernet-lite, a detection algorithm based on the popular anchor-free CenterNet algorithm. However, while implementing the algorithms, we found that the popular anchor-free solution has some inherent disadvantages in small networks:

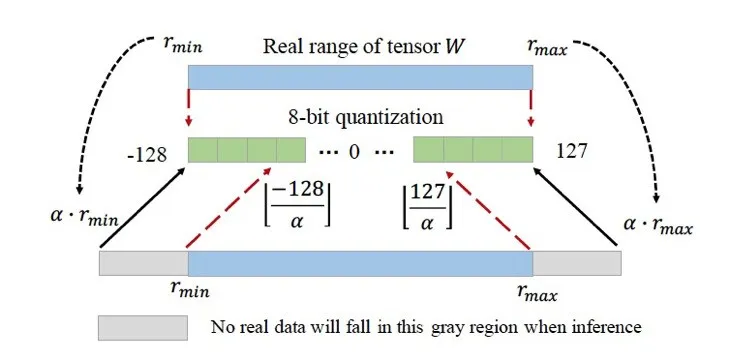
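For readers unfamiliar with heatmap-based detection: CenterNet-style detectors predict a heatmap in which each object appears as a local peak at its center. The sketch below shows only that peak-extraction step, in plain Python on a hand-written heatmap. The function name, threshold, and values are illustrative assumptions, not taken from centernet-lite.

```python
def find_peaks(heatmap, threshold=0.5):
    """Return (row, col, score) for cells whose score is at least `threshold`
    and is the maximum of their 3x3 neighbourhood, highest score first."""
    rows, cols = len(heatmap), len(heatmap[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            score = heatmap[r][c]
            if score < threshold:
                continue
            # Collect the 3x3 neighbourhood, clipped at the image border.
            neighbours = [
                heatmap[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
            ]
            if score >= max(neighbours):
                peaks.append((r, c, score))
    return sorted(peaks, key=lambda p: -p[2])

# Hypothetical 4x5 center heatmap with two hand centers.
heatmap = [
    [0.1, 0.2, 0.1, 0.0, 0.0],
    [0.2, 0.9, 0.3, 0.0, 0.1],
    [0.1, 0.3, 0.2, 0.4, 0.6],
    [0.0, 0.0, 0.1, 0.5, 0.7],
]
centers = find_peaks(heatmap)
```

A real detector would run this on the network's output and then read the box size from a separate regression head at each peak. That part is omitted here.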

Quantization on the client

The popular industry solution for accelerating inference is Google's 8-bit quantization algorithm, but there is a better low-bit quantization algorithm. This algorithm learns the minimum and maximum range of each layer and dynamically adjusts the quantization scheme of each layer. Currently, the acceleration ratio at the inference engine end is 70%.
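To make the role of the per-layer range concrete, here is a minimal sketch of affine quantization driven by a minimum and maximum value. It assumes the range is simply taken from the observed values rather than learned, and the function names and sample weights are hypothetical. It does not reproduce the low-bit algorithm mentioned above.

```python
def quantize(values, lo, hi, bits=8):
    """Map floats in [lo, hi] to unsigned integers with `bits` bits.
    Values outside the range are clipped. Assumes hi > lo."""
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels
    q = []
    for v in values:
        clipped = min(max(v, lo), hi)
        q.append(min(levels, max(0, round((clipped - lo) / scale))))
    return q, scale

def dequantize(q, lo, scale):
    """Map quantized integers back to approximate float values."""
    return [lo + x * scale for x in q]

def max_error(original, restored):
    """Largest absolute difference between two equal-length lists."""
    return max(abs(a - b) for a, b in zip(original, restored))

# Hypothetical weights of one layer; the range comes from the data itself.
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
lo, hi = min(weights), max(weights)

q8, scale8 = quantize(weights, lo, hi, bits=8)
q4, scale4 = quantize(weights, lo, hi, bits=4)
err8 = max_error(weights, dequantize(q8, lo, scale8))
err4 = max_error(weights, dequantize(q4, lo, scale4))
```

The rounding error is bounded by half a quantization step, so a tighter per-layer range or more bits both reduce it. That trade-off is what a per-layer scheme adjusts.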

Finally, we use the heatmap solution to assist the anchor-free solution and integrate the overflow-aware solution. This has achieved a good balance between accuracy and performance on Tmall Genie hardware.

| Hardware | Before optimization | centernet-lite | Overflow-aware |
|---|---|---|---|
| MTK8167S | 200 ms | 110 ms | 80 ms |
| iPad Mini4 | 45 ms | 23 ms | 18 ms |

We adopted the idea of distillation from deep learning and used the output of a pre-trained complex model (the teacher model) as a supervisory signal to train the online network (the student model). This lets us continuously optimize the algorithms without directly using business data.

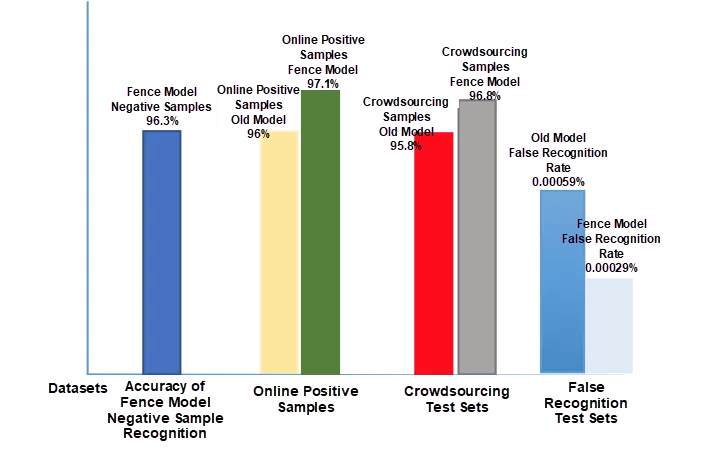
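As an illustration of using teacher outputs as a supervisory signal, the sketch below computes a common soft-target distillation loss: the KL divergence between temperature-softened teacher and student distributions. The article does not say which loss was used, so this loss, the temperature, and the logits are assumptions for illustration only.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

# Hypothetical logits over three gesture classes for one unlabeled image.
teacher = [4.0, 1.0, 0.5]
student_near = [3.5, 1.2, 0.4]  # student that roughly agrees with the teacher
student_far = [0.5, 1.0, 4.0]   # student that disagrees with the teacher

loss_near = distillation_loss(student_near, teacher)
loss_far = distillation_loss(student_far, teacher)
```

Because the target comes from the teacher rather than from a label, a loss of this kind can be computed on unlabeled images.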

Here are the optimization results with the fence model:

We have tried and applied many single-point gestures. However, dynamic gestures are a more natural and comfortable method of interaction, and they are a direction we have been studying continuously.

From a product-planning perspective, dynamic gestures offer a stronger sense of interaction and participation, and they suit different application scenarios. For example, single-point gestures fit scenarios where IoT devices are controlled. By comparison, dynamic gestures, with their unique sense of participation, suit scenarios such as education, entertainment, and offline operations. This is why we keep trying to make breakthroughs in dynamic gesture scenarios.

Last year, we proposed a skeleton-based dynamic gesture recognition algorithm. The related work was submitted to ISMAR 2019 and has been published.

However, during productization, we found that a purely skeleton-based solution may be impractical for general dynamic gesture recognition, for the following reasons:

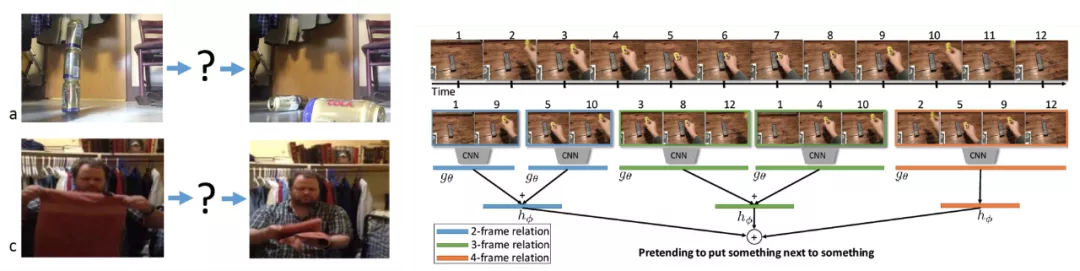

Temporal Reasoning

Principle: To recognize these two behaviors, a computer needs temporal reasoning over the relations between images. Two or more frames must be considered together, because the behavior can only be interpreted from a combination of frames. This solution therefore works around motion blur and makes computing power consumption more controllable.

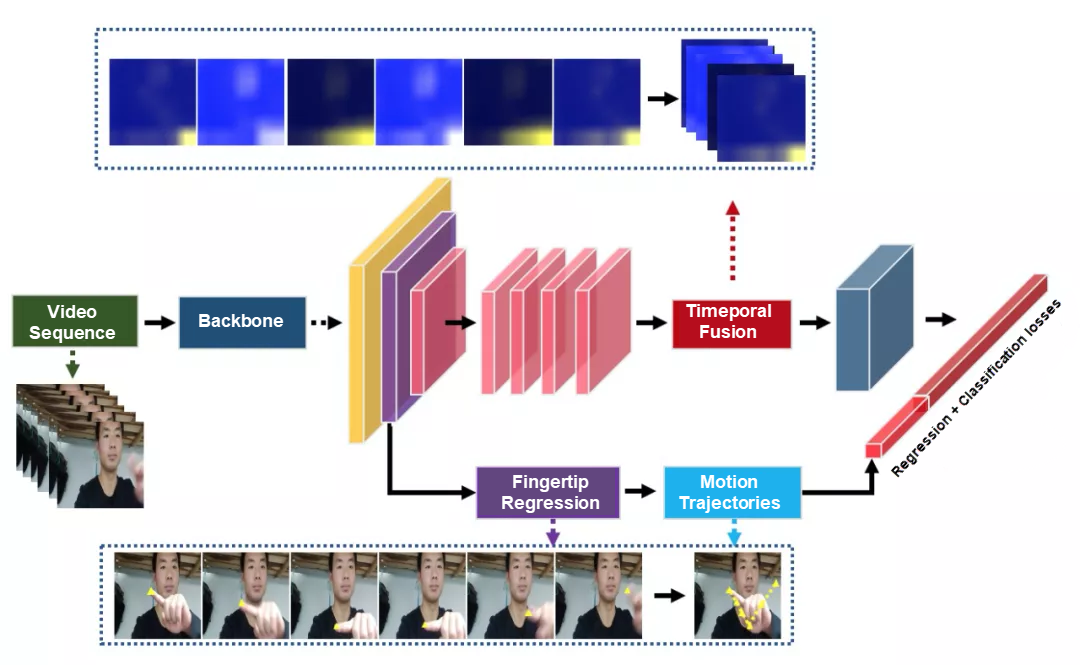

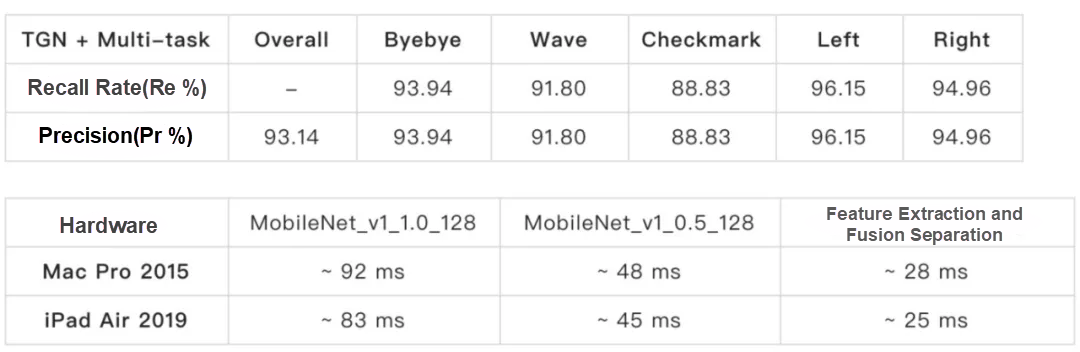

Our Temporal Generation Network

To deal with motion blur, we adopted a video recognition solution that uses the RGB frame sequence as its main framework. The solution extracts features from continuously sampled frames and merges the temporal features with an improved 3D convolution network that is efficient and non-degenerate.

At the same time, to recognize specific gestures, we proposed an auxiliary branch based on finger key points. A heatmap is used for multi-task learning and regression of the finger key points and to detect the motion trajectory of the finger. The finger key point branch then merges its features with the RGB branch to assist dynamic gesture recognition. The algorithm combines the advantages of RGB-based and key-point-based solutions, achieving a balance between speed and accuracy.
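The heatmap representation of a finger key point can be sketched as follows. The example builds a Gaussian target heatmap for a fingertip in each frame, decodes the key point as the heatmap maximum, and collects the decoded points into a trajectory. The grid size, sigma, fingertip positions, and function names are hypothetical, and a trained network would predict the heatmaps rather than generate them.

```python
import math

def gaussian_heatmap(height, width, center, sigma=1.0):
    """Build a heatmap with a Gaussian bump centered on `center` = (row, col)."""
    cy, cx = center
    return [
        [math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]

def decode_keypoint(heatmap):
    """Return the (row, col) of the highest-scoring cell."""
    best = max(
        (value, y, x)
        for y, row in enumerate(heatmap)
        for x, value in enumerate(row)
    )
    return best[1], best[2]

# Hypothetical fingertip positions in three consecutive frames (6x8 grid).
fingertip_path = [(2, 1), (2, 3), (3, 5)]
heatmaps = [gaussian_heatmap(6, 8, p) for p in fingertip_path]

# Decoding each frame's heatmap recovers the finger's motion trajectory.
trajectory = [decode_keypoint(h) for h in heatmaps]
# Frame-to-frame displacement (d_row, d_col) along the trajectory.
motion = [(b[0] - a[0], b[1] - a[1]) for a, b in zip(trajectory, trajectory[1:])]
```

The per-frame displacements give a compact description of the finger's movement that could be combined with appearance features from the RGB frames.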

We have explored and tried many algorithms for single-point and dynamic gesture recognition across various businesses. Based on this experience, we have some thoughts on the future direction of algorithm exploration and on the business focus for gesture recognition.

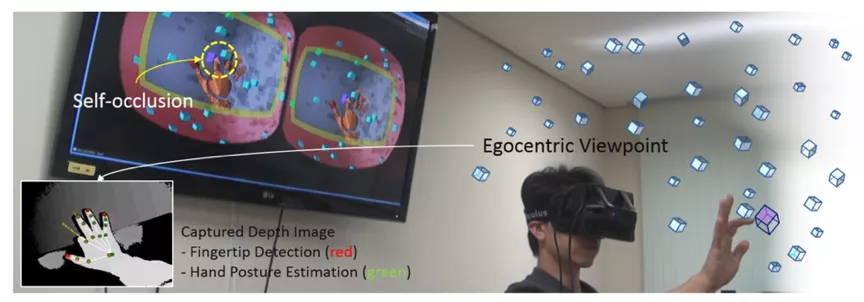

3D hand posture estimation is the process of modeling human hands based on input RGB or RGB-D images and finding the positions of key components, such as knuckles. We live in a 3D world, and 3D hand posture interaction will inevitably bring a more natural and comfortable interactive experience. We are also actively exploring 3D hand posture interaction. In the future, we will launch more interactive products to provide more humanized interactive experiences and services, such as interactive display of e-commerce products, virtual reality (VR) or augmented reality (AR), sign language recognition, and online education.

3D hand posture manipulation launched by Oculus Quest this year

Can gesture control surpass voice control as the most natural method of control for smart home devices? For example, in the IoT scenario, you can use gestures to control TVs, light bulbs, and air conditioners. Currently, some startup companies have begun to explore this aspect.

Take Bixi as an example. Bixi is a small remote control that senses your gestures. It can control your favorite smartphone applications, LIFX or Hue lightbulbs, Internet speakers, GoPros, and many other IoT devices.

Another example is the Bearbot universal remote control shown in the following figure. With its cute appearance, Bearbot supports custom gestures to control all home appliances, so you can get rid of the shackles of traditional remote controls.

Bearbot gesture remote control.

Beyond finger-pointing reading, gestures have more applications in the education industry. Gestures can increase the sense of interaction in a virtual classroom, and the interesting, novel experience of gesture and vision-based manipulation helps children stay focused in class. For example, it can guide children to raise their hands before answering questions. As another example, ordinary in-class exercises can be boring, but dynamic gesture recognition lets children complete them interactively, such as by drawing a tick or a cross on-screen.
