By Liang You, Director of AI Acceleration Computing for Alibaba Cloud Heterogeneous Computing

Stanford University recently released the latest results of DAWNBench, one of the most authoritative competitions in the Artificial Intelligence (AI) field. DAWNBench measures the strength of comprehensive solutions such as deep learning optimization strategies, model architectures, software frameworks, clouds, and hardware.

In the category of image classification on ImageNet, Alibaba Cloud took first place in all four rankings: training time, training cost, inference latency, and inference cost.

According to the official DAWNBench results, it took only 2 minutes and 38 seconds for Alibaba Cloud's heterogeneous computing service to train a model on 1.28 million ImageNet images, after which the Hanguang 800-based AI service could identify an image in a mere 0.0739 ms. Alibaba Cloud also set world records for training cost and inference cost.

Alibaba Cloud attributes its record-setting performance to its proprietary acceleration framework Apsara AI Acceleration (AIACC) and the Hanguang 800 chip.

AIACC is Alibaba Cloud's proprietary Apsara AI acceleration engine and the first in the industry to provide unified acceleration for mainstream deep learning frameworks such as TensorFlow, PyTorch, MXNet, and Caffe. Compared with competing solutions running on the same hardware platform, AIACC significantly improves the performance of AI training and inference.

As the head of research and development for AIACC, I would like to share our experience with AIACC-based large-scale deep learning application architecture and performance optimization practices in this article.

As deep learning models become increasingly complex, they require greater computing power. Training ResNet-50 for 90 epochs on the 1.28 million ImageNet images can reach 75% top-1 accuracy, and this takes about 5 days on one P100 GPU. Major vendors have been using their own large-scale distributed training methods to shorten the training time for this benchmark.

In July 2017, Facebook unveiled its Big Basin server design. It used 32 Big Basin servers, each equipped with eight P100 cards interconnected through NVLink (256 P100 cards in total), to complete this training in one hour.

In September 2017, UC Berkeley published an article stating that it had shortened the training time to 20 minutes by using 2,048 Intel Knights Landing (KNL) processors.

In November 2017, Preferred Networks in Japan used 1,024 P100 cards to reduce the training time to 15 minutes.

In an article published in November 2017, Google announced that it completed the training with 256 TPUv2 in 30 minutes.

All these leading vendors are competing for a strategic advantage in distributed training, which shows that large-scale distributed training is the direction of technological development in this industry.

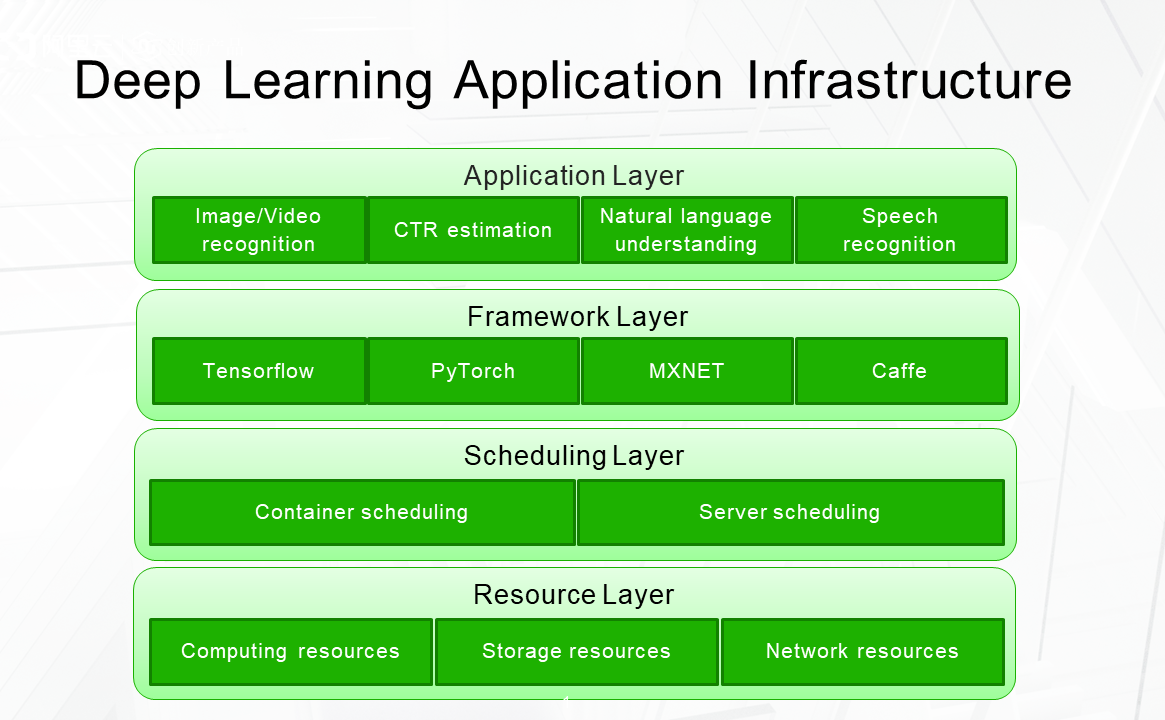

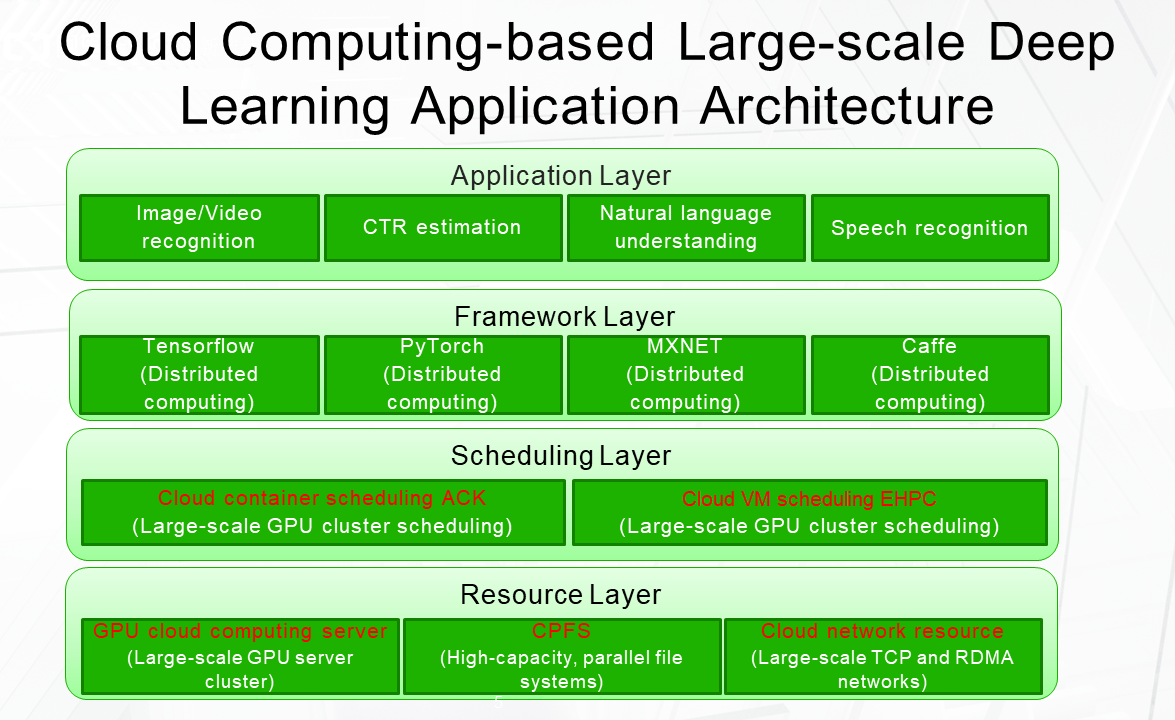

First, let's take a look at the infrastructure of deep learning applications. The entire architecture consists of four layers.

The resource layer is at the top and includes computing resources, storage resources, and network resources. When developing deep learning applications, we need the computing resources of the GPU servers for deep learning training and inference, the storage resources to store the training code and data, and the network resources to handle communication among multiple machines.

The second layer is the scheduling layer. It includes two scheduling modes: container-based scheduling and physical machine-based scheduling. This layer is in charge of the downward scheduling of computing resources, storage resources, and network resources and the upward scheduling of computing tasks at the framework and application layers.

Right below the scheduling layer is the framework layer. Mainstream deep learning computing frameworks include TensorFlow, PyTorch, MXNet, and Caffe.

The application layer is at the bottom of the infrastructure and includes deep learning applications, such as image recognition, object detection, video recognition, CTR estimation, NLP, and voice recognition. It utilizes the computing framework from the framework layer to describe and compute users' models and algorithms.

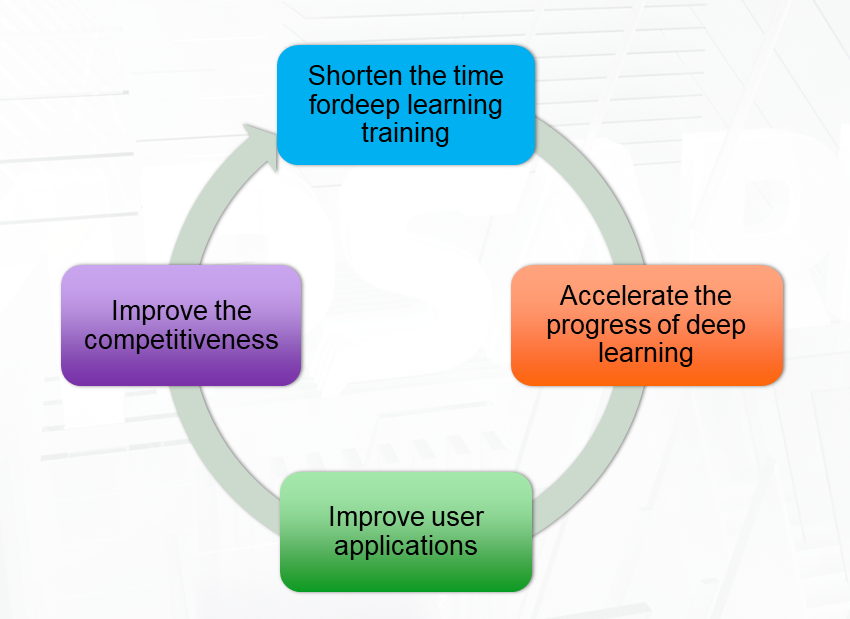

Why do we need to perform large-scale deep learning?

For example, suppose training a model on a single GPU card takes 7 days. After adjusting one model parameter, we would have to wait 7 days to find out whether the parameter is set correctly. With one 8-GPU server, the training takes less than a day. With ten 8-GPU servers, it may take only two to three hours. In this way, we can quickly find out whether the parameter setting is correct.

This example demonstrates four advantages of large-scale deep learning: First, it can reduce the deep learning training time. Second, it can increase the efficiency and accelerate the process of deep learning algorithm research. Third, large-scale distributed inference can help users improve the concurrency and reliability of deep learning applications. Fourth, it can ultimately enhance the competitiveness of user products and increase their market share. Therefore, large-scale deep learning is a strategic area from which companies can improve their productivity and efficiency.

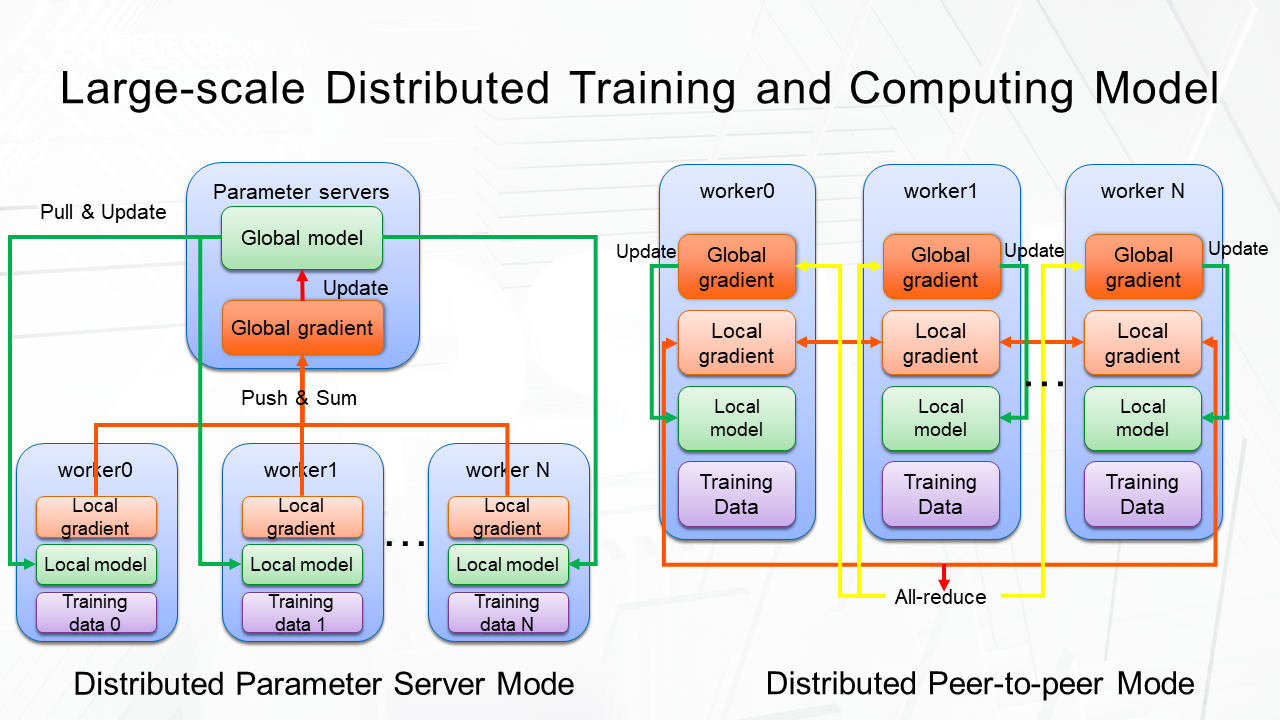

The fundamental computing models for large-scale distributed training generally fall into two categories: distributed training in parameter server (PS) mode and in peer-to-peer mode.

As shown in the figure, the PS mode consists of a distributed parameter server and many workers. The parameter server stores the global model, and each worker holds a local copy of the model. In each iteration, each worker reads its own training data and computes a local gradient on its local model. Each worker then uploads its local gradient to the parameter server. The server aggregates the local gradients into a global gradient and uses it to update the global model. Finally, the updated global model refreshes the local models of all workers, and the next training iteration begins.
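The following sketch makes the PS data flow above concrete. It is a minimal, single-process simulation of synchronous PS training, assuming plain SGD and a toy one-parameter model `y = w * x`. The class and function names (`ParameterServer`, `ps_train`, `lsq_grad`) are hypothetical illustrations, not AIACC or framework APIs.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Shard = Sequence[Tuple[float, float]]  # (x, y) training pairs held by one worker


class ParameterServer:
    """Holds the global model and applies the global gradient with plain SGD."""

    def __init__(self, init_params: Vector, lr: float) -> None:
        self.params = list(init_params)
        self.lr = lr

    def pull(self) -> Vector:
        # Workers refresh their local copy from the global model.
        return list(self.params)

    def push(self, local_grads: List[Vector]) -> None:
        # Aggregate the workers' local gradients into a global (averaged) gradient...
        n = len(local_grads)
        global_grad = [sum(g[i] for g in local_grads) / n for i in range(len(self.params))]
        # ...and use it to update the global model.
        self.params = [p - self.lr * g for p, g in zip(self.params, global_grad)]


def ps_train(server: ParameterServer, shards: List[Shard],
             grad_fn: Callable[[Vector, Shard], Vector], iterations: int) -> Vector:
    for _ in range(iterations):
        # Each worker pulls the latest global model and computes a local gradient
        # on its own data shard (simulated sequentially here).
        local_grads = [grad_fn(server.pull(), shard) for shard in shards]
        # Workers upload their local gradients; the server updates the global model.
        server.push(local_grads)
    return server.params


def lsq_grad(params: Vector, shard: Shard) -> Vector:
    """Gradient of the mean squared error for the toy model y = w * x."""
    w = params[0]
    return [sum(2 * x * (w * x - y) for x, y in shard) / len(shard)]
```

For example, two workers holding `[(1, 2), (2, 4)]` and `[(3, 6), (4, 8)]` drive `w` toward 2. All gradient traffic passes through the server, which receives one full gradient per worker per iteration. This is the centralized bottleneck described below.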

In comparison, the peer-to-peer mode has no parameter server. Each worker reads its training data and computes a local gradient on its local model. An Allreduce operation is then performed on the local gradients of all workers so that every worker obtains the global gradient. Finally, each worker uses the global gradient to update its local model and starts the next training iteration.

The PS mode is more suitable for models with a very large number of parameters. Such models cannot fit on a single GPU card and therefore have to be stored on a distributed parameter server. However, the PS mode relies on centralized communication, so the more workers there are, the lower the communication efficiency gets.

The peer-to-peer mode is more suitable for models that fit on a single GPU card, and it uses decentralized communication. A ring communication algorithm known as ring-allreduce can be used to reduce communication complexity. Different frameworks use different computing models. For example, TensorFlow supports both the PS and peer-to-peer modes, PyTorch primarily supports the peer-to-peer mode, and MXNet primarily uses the PS mode through KVStore and PS-Lite.
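To show how ring-allreduce works, here is a single-process simulation of the textbook algorithm (summation). It is a sketch of the general technique, not AIACC's implementation.

- Each worker's gradient buffer is split into N chunks.
- In N-1 reduce-scatter steps, every worker sends one chunk to its right-hand neighbour, which adds it to its own copy.
- In N-1 allgather steps, the fully reduced chunks circulate until every worker holds the complete sum.

Each worker therefore sends about 2(N-1)/N times the gradient size per iteration, which is roughly constant as N grows.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a sum ring-allreduce over N workers (one buffer per worker)."""
    n = len(buffers)
    length = len(buffers[0])
    # Chunk k covers indices [lo, hi) of every buffer.
    bounds = [(k * length // n, (k + 1) * length // n) for k in range(n)]
    data = [list(b) for b in buffers]  # do not mutate the caller's buffers

    # Phase 1: reduce-scatter. After it, worker i owns the fully reduced chunk (i + 1) % n.
    for step in range(n - 1):
        # Build all messages first so every worker sends what it held at the start of the step.
        msgs = []
        for i in range(n):
            lo, hi = bounds[(i - step) % n]
            msgs.append(((i + 1) % n, lo, data[i][lo:hi]))
        for dst, lo, payload in msgs:
            for j, v in enumerate(payload):
                data[dst][lo + j] += v  # neighbour accumulates the partial sum

    # Phase 2: allgather. Reduced chunks travel around the ring and overwrite stale copies.
    for step in range(n - 1):
        msgs = []
        for i in range(n):
            lo, hi = bounds[(i + 1 - step) % n]
            msgs.append(((i + 1) % n, lo, hi, data[i][lo:hi]))
        for dst, lo, hi, payload in msgs:
            data[dst][lo:hi] = payload
    return data
```

For example, three workers holding `[1, 2, 3, 4, 5]`, `[10, 20, 30, 40, 50]`, and `[100, 200, 300, 400, 500]` each end up with `[111, 222, 333, 444, 555]`.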

Because different frameworks adopt different distributed modes, it is very difficult to implement distributed code, optimize distributed performance, and schedule distributed jobs in a unified way.

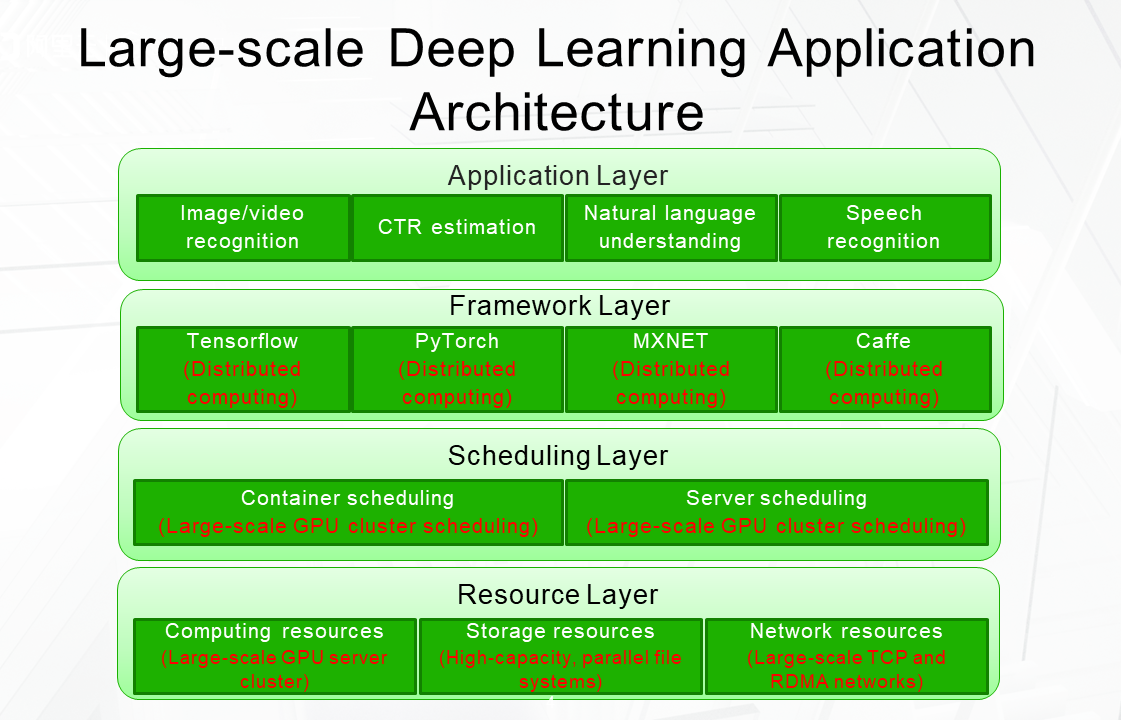

Large-scale deep learning applications have different requirements for infrastructure.

At the resource layer, distributed training requires three things: massive GPU server clusters for computing, high-capacity parallel file systems for storing massive numbers of files and serving parallel file access, and a large-scale TCP or RDMA network.

At the scheduling layer, large GPU clusters and large numbers of tasks must be scheduled.

At the framework layer, a distributed computing mode is needed that supports distributed computing and scheduling across the different deep learning computing frameworks.

On the application layer, the training data or training models are split among different workers.

Therefore, the use of large-scale deep learning encounters many difficulties and challenges.

At the resource layer, we must tackle the following challenges.

First, it is very difficult to build large-scale GPU clusters because we must address the challenges of data centers, racks, and power supplies for large-scale GPU machines, in addition to concerns over stability and cost.

Second, a massive parallel file system must not only offer high capacity but also be stable and reliable, which is a considerable challenge.

Third, building large-scale, high-bandwidth TCP or RDMA networks is an enormous undertaking. It requires planning and implementing a large-scale topology of switches and nodes, controlling the convergence (oversubscription) ratio of large-scale north-south traffic, and managing network protocols and IP addresses at scale. In addition, the reliability and performance of these large-scale networks must be ensured.

At the scheduling layer, whether scheduling is container-based or physical machine-based, we need to schedule both CPUs and GPUs and conduct shared scheduling of GPU memory and distributed scheduling for different deep learning computing frameworks.

At the framework layer, the mainstream deep learning computing frameworks use different distributed computing modes. As a result, they require different distributed implementations on the application layer and different distributed scheduling methods on the scheduling layer. In addition, the distributed performance of each framework must be optimized for the underlying network.

To deploy and implement any of these projects, we need technical experts, architects, and engineers with a wealth of expertise.

How can ordinary companies afford the implementation and use of these advanced technologies? Fortunately, we have cloud computing.

Taking Alibaba Cloud as an example, to build an architecture for large-scale deep learning applications based on cloud computing, we can create large-scale GPU cloud computing servers on the resource layer to meet the needs of large-scale GPU server clusters. We can use the Cloud Parallel File System (CPFS) as our high-capacity parallel file system. In addition, Alibaba Cloud's network resources can be used directly to implement large-scale TCP or RDMA networks.

On the scheduling layer, the container orchestration service Alibaba Cloud Container Service for Kubernetes (ACK) or the cloud virtual machine scheduling service E-HPC can be used directly to schedule massive GPU clusters. Today's cloud computing products solve the problems of massive resources and large-scale scheduling without requiring companies to master these advanced technologies themselves.

Cloud computing has the intrinsic advantages of accessibility, elasticity, stability, and cost savings.

Its first advantage is accessibility. If a company urgently needs massive GPU computing, storage, and network resources in a short period of time, instead of hiring technical experts in computing, storage, and networking and spending months on independent purchase and deployment, it can go to Alibaba Cloud to obtain large-scale GPU computing, storage, and network resources in about ten minutes.

The second advantage of the cloud is elasticity. During business peaks, more infrastructure resources can be added to cope with the higher business volume, and after the peak, the additional resources are released. This way, the amount of infrastructure you use always matches your business volume.

The third advantage is stability. The computing, storage, and network services provided by Alibaba Cloud are far more stable than self-managed physical resources. The computing and network services provided by Alibaba Cloud offer 99.95% availability, and our storage services offer 99.9999999999% data durability.

The fourth advantage is cost savings. Because physical hardware is purchased, managed, operated, and maintained centrally, cloud computing benefits from economies of scale and is therefore cost-effective. In addition, auto scaling lets you pay only for the resources your business actually needs.

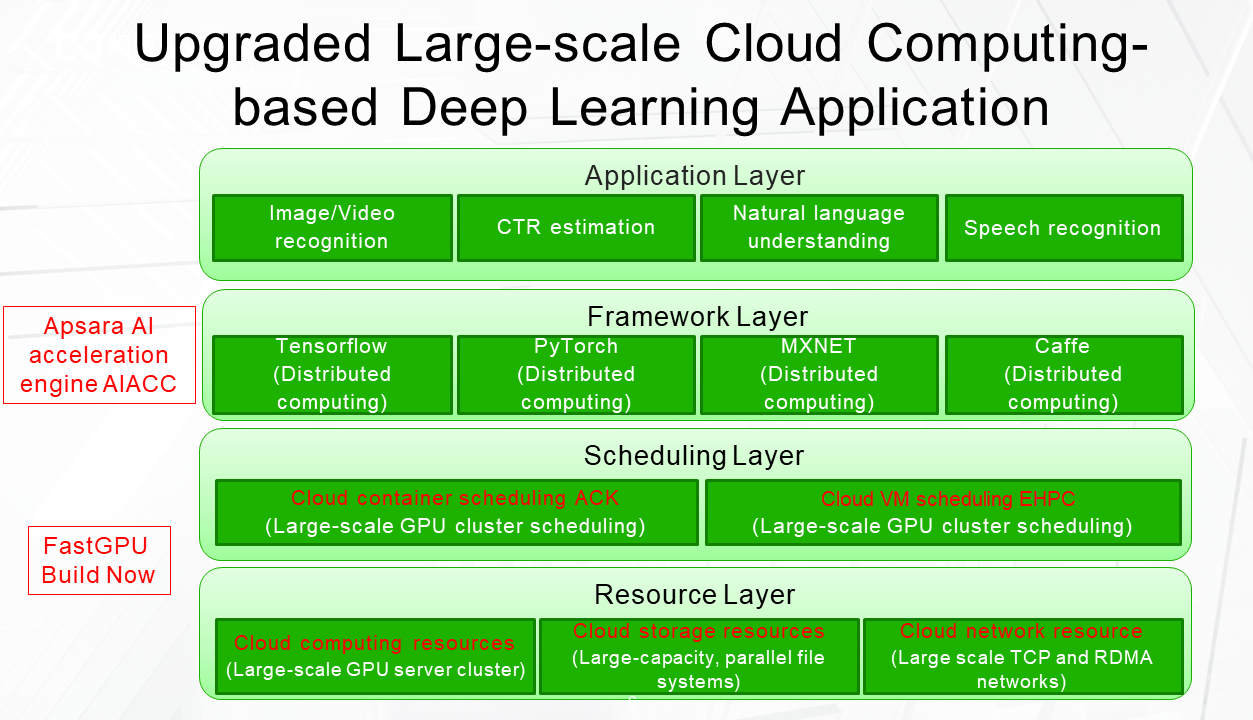

For large-scale deep learning applications, our team has gone beyond these general advantages of cloud computing and implemented two cloud-based architectural upgrades: the Apsara AI acceleration engine AIACC and FastGPU, a solution for instantly building training clusters.

As I mentioned, distributed modes vary across different frameworks, which causes great difficulties in distributed coding, performance optimization, and scheduling and results in high learning costs. We introduced the Apsara AI acceleration engine AIACC to address the challenge of unified performance acceleration and scheduling for large-scale deep learning on the framework layer. This marks the first time that a performance acceleration engine has been used for unified acceleration of mainstream open-source frameworks, including TensorFlow, PyTorch, MXNet, and Caffe. AIACC has four major advantages.

Its first advantage is unified acceleration.

As mentioned earlier, the differences in distributed modes among the computing frameworks pose major obstacles to unified scheduling and distributed implementation at the application layer.

AIACC provides unified acceleration of distributed modes and distributed performance. This allows the scheduling layer to perform unified distributed scheduling and the application layer to perform unified distributed computing. Because distributed communication is optimized once at the underlying layer, all frameworks benefit from the resulting performance improvement.

The second advantage is the extreme optimization of network and GPU accelerators, which will be elaborated on in the next section.

The third advantage is that cloud-based auto scaling can minimize users' business costs.

The final advantage is the solution's compatibility with open-source frameworks. Most of the code written for open-source deep learning computing frameworks can be retained and its performance is directly improved through the AIACC library.

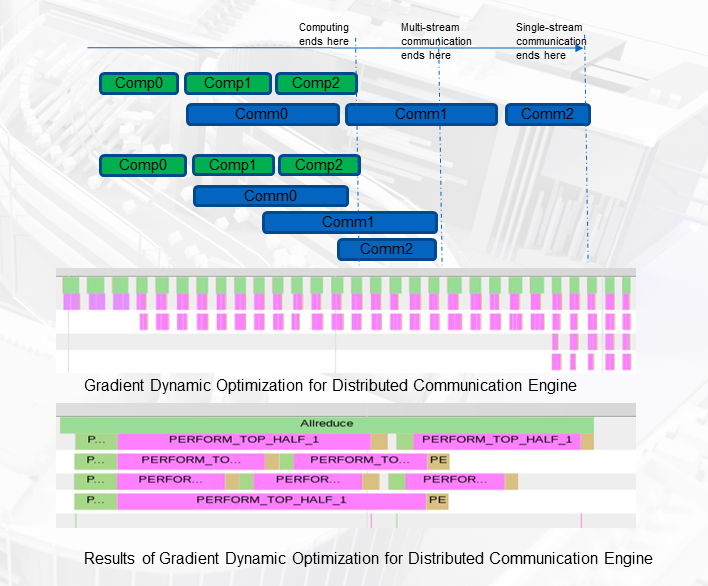

AIACC utilizes communication-based performance optimization technology. As explained above, when performing distributed training, we need to achieve highly efficient data communication for the exchange of gradient data between machines and GPU cards.

Our optimization of distributed communication involves three aspects.

The first aspect is overlapping communication with computing. Gradient communication is performed asynchronously and in parallel with computing, so the communication time is hidden within the computing time.
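A simple timing model shows why overlap helps. The sketch assumes the following:

- Per-layer gradients become ready one after another during the backward pass.
- A single network link sends them in FIFO order.
- The link can start sending a gradient as soon as it is ready.

The function name and the millisecond figures are hypothetical, chosen only for illustration.

```python
from typing import List


def iteration_time(compute_ms: List[float], comm_ms: List[float], overlap: bool) -> float:
    """Time for one backward pass plus gradient communication.

    compute_ms[k]: time to compute the k-th gradient (in the order gradients become ready).
    comm_ms[k]:    time to transmit that gradient over a single shared link.
    """
    if not overlap:
        # Communicate only after all computing is done.
        return sum(compute_ms) + sum(comm_ms)
    t_ready = 0.0    # when the current gradient finishes computing
    link_free = 0.0  # when the link finishes its previous transfer
    for c, m in zip(compute_ms, comm_ms):
        t_ready += c
        start = max(t_ready, link_free)  # send as soon as both gradient and link are ready
        link_free = start + m
    return max(t_ready, link_free)
```

With four layers that each take 10 ms to compute and 8 ms to send, the sequential schedule takes 72 ms. The overlapped schedule takes 48 ms, because only the last transfer is left uncovered by computation. The model ignores the forward pass and contention between streams, so treat it as an upper-level intuition rather than a performance prediction.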

The second aspect is latency optimization. Before gradients are communicated, a gradient negotiation step determines whether the gradients on every GPU of every machine are ready for communication. The traditional practice is to negotiate for all nodes through a central node, which leads to high latency when the cluster is large. Our approach decentralizes gradient negotiation, which is more efficient and does not increase latency at large scales.
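The article does not describe how AIACC decentralizes negotiation, so the following is only one common technique, assumed here for illustration. Each worker encodes the gradient tensors it has ready as a bitmask. A collective bitwise-AND across all workers then tells every worker, without a central coordinator, which tensors are ready everywhere. The AND is simulated locally below, and the function names are hypothetical.

```python
from functools import reduce
from operator import and_
from typing import Iterable, List


def ready_mask(ready_ids: Iterable[int]) -> int:
    """Encode the IDs of locally ready gradient tensors as a bitmask."""
    mask = 0
    for t in ready_ids:
        mask |= 1 << t
    return mask


def negotiate(per_worker_ready: List[Iterable[int]]) -> List[int]:
    """Return the tensor IDs that are ready on every worker.

    In a real cluster the AND below would be an allreduce with a bitwise-AND
    operator, so every worker computes the same answer and no node acts as master.
    """
    masks = [ready_mask(r) for r in per_worker_ready]
    agreed = reduce(and_, masks)
    return [t for t in range(agreed.bit_length()) if (agreed >> t) & 1]
```

For example, if three workers have tensors `{0, 1, 2}`, `{1, 2, 3}`, and `{1, 2}` ready, all of them agree to communicate tensors 1 and 2 next.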

The third optimization is bandwidth optimization. We use five methods to optimize bandwidth.

The first method is hierarchical communication based on the network topology. The communication bandwidth between GPUs on the same machine is very high, whereas the bandwidth for cross-machine GPU communication is much lower. We therefore use a two-stage approach: we first perform inter-GPU communication within each machine, and then perform GPU communication among machines.
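The two-stage idea can be sketched as a hierarchical allreduce. The sketch assumes three stages:

1. Reduce each machine's gradients onto one leader GPU over the fast intra-machine links.
2. Allreduce among the leaders only over the slower network.
3. Broadcast the result back inside each machine.

Plain sums stand in for the real collectives, and the function is a hypothetical illustration rather than AIACC's code.

```python
from typing import List

Grad = List[float]


def hierarchical_allreduce(grads: List[List[Grad]]) -> List[List[Grad]]:
    """grads[node][gpu] is one GPU's gradient; returns the summed gradient on every GPU."""
    # Stage 1: intra-machine reduce onto each machine's leader GPU (fast NVLink/PCIe).
    leader_sums = [[sum(vals) for vals in zip(*node)] for node in grads]
    # Stage 2: cross-machine allreduce among leaders only (slow network);
    # in practice this could be a ring-allreduce over one GPU per machine.
    global_sum = [sum(vals) for vals in zip(*leader_sums)]
    # Stage 3: intra-machine broadcast from the leader to the other GPUs.
    return [[list(global_sum) for _ in node] for node in grads]
```

Only one GPU per machine touches the network in stage 2. The slow cross-machine step therefore involves as many participants as there are machines, not as many as there are GPUs.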

The second method is reducing precision for transmission. Gradients are originally float32 values. We keep float32 precision during computing but convert the gradient data to float16 for transmission, which halves the amount of data to be transmitted. In addition, we use scaling to ensure that accuracy is not reduced.
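Here is a sketch of float16 transmission with scaling. It uses Python's `struct` half-precision format (`e`) to emulate what would go over the wire. The static scale factor and the function names are assumptions; the article does not say how AIACC chooses its scale. Without scaling, a tiny gradient such as 1e-8 underflows to zero in float16. Multiplying by 1024 before conversion and dividing afterwards preserves it.

```python
import struct
from typing import List, Tuple


def to_fp16(values: List[float]) -> Tuple[List[float], int]:
    """Round values to IEEE 754 half precision, as they would be sent on the wire.

    Returns the rounded values and the size of the packed payload in bytes.
    """
    payload = struct.pack(f"<{len(values)}e", *values)
    return list(struct.unpack(f"<{len(values)}e", payload)), len(payload)


def send_gradients(grads: List[float], scale: float) -> Tuple[List[float], int]:
    """Scale gradients up, transmit them as float16, and scale back down on receipt.

    The static scale is an assumption: scale * |g| must stay below 65504 (the float16
    maximum), otherwise struct.pack raises OverflowError.
    """
    received, nbytes = to_fp16([g * scale for g in grads])
    return [r / scale for r in received], nbytes
```

Four gradients take 8 bytes as float16 versus 16 bytes as float32, which is the halving described above.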

The third method is multi-gradient aggregation. In distributed training, a model needs to communicate gradients for many layers. If each gradient is sent as soon as it is computed, the packets for per-layer gradients are very small and bandwidth utilization is extremely low. We therefore adopt gradient aggregation: gradients are first aggregated into one batch, which is then sent for multi-machine communication. This method achieves high bandwidth utilization.
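Gradient aggregation can be sketched as greedy bucketing. Gradients are taken in the order they become ready and appended to the current bucket until adding the next one would exceed a byte threshold. Each bucket is then sent as one message. The function name and the threshold value are hypothetical; a real engine would copy the tensors into a contiguous fusion buffer.

```python
from typing import List


def fuse_gradients(grad_sizes: List[int], bucket_bytes: int) -> List[List[int]]:
    """Group gradient indices into buckets of at most bucket_bytes.

    grad_sizes lists gradient sizes in the order the gradients become ready.
    A single gradient larger than the limit gets a bucket of its own.
    """
    buckets: List[List[int]] = []
    current: List[int] = []
    current_bytes = 0
    for idx, size in enumerate(grad_sizes):
        if current and current_bytes + size > bucket_bytes:
            buckets.append(current)  # flush: this bucket becomes one network message
            current, current_bytes = [], 0
        current.append(idx)
        current_bytes += size
    if current:
        buckets.append(current)
    return buckets
```

For example, six gradients of sizes `[4, 4, 4, 10, 1, 1]` with an 8-byte limit become four messages: `[[0, 1], [2], [3], [4, 5]]`. Real thresholds are typically megabytes, so per-message latency is amortized over much more data.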

The fourth method is multi-stream communication. In a high-bandwidth TCP network, a single communication stream cannot use up the bandwidth, so we use multiple streams for communication. However, we found that the transmission rates of multiple streams are different. Therefore, we use load balancing to automatically allocate more gradient data to the streams with a higher transmission rate and less to the streams with a lower rate.
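The load-balancing rule can be sketched as splitting each batch of gradient bytes across streams in proportion to their measured rates, so that all streams finish at about the same time. Integer rates (for example, MB/s) and the function names are assumptions made for this sketch.

```python
from typing import List


def split_by_rate(total_bytes: int, stream_rates: List[int]) -> List[int]:
    """Assign bytes to streams in proportion to each stream's measured rate."""
    total_rate = sum(stream_rates)
    shares = [total_bytes * r // total_rate for r in stream_rates]
    # Give any rounding remainder to the fastest stream.
    fastest = max(range(len(stream_rates)), key=lambda i: stream_rates[i])
    shares[fastest] += total_bytes - sum(shares)
    return shares


def finish_time(shares: List[int], stream_rates: List[int]) -> float:
    """Streams send in parallel, so the transfer ends when the slowest one finishes."""
    return max(s / r for s, r in zip(shares, stream_rates))
```

With rates of 300, 100, and 100, sending 1,000 units splits them as `[600, 200, 200]` and finishes in 2.0 time units. An equal split of `[334, 333, 333]` would take about 3.33 units, because the slower streams become the bottleneck.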

The last method is a dynamic tuning process applied to the aggregation granularity and the number of communication streams. When we start to train the first few batches, the parameters are dynamically tuned according to the current network conditions to achieve optimal performance. This dynamic adaptation process maintains the bandwidth performance at the highest level no matter how the network conditions change.
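A minimal version of this tuning step is an exhaustive search over candidate settings during the first few batches, keeping the fastest. In the sketch, `measure_ms` is a hypothetical callback that runs one batch with the given aggregation granularity and stream count and returns its wall time. To keep adapting as network conditions change, the search could be repeated periodically; this sketch shows only a single pass.

```python
from itertools import product
from typing import Callable, Iterable, Optional, Tuple


def autotune(measure_ms: Callable[[int, int], float],
             granularities: Iterable[int],
             stream_counts: Iterable[int]) -> Tuple[int, int]:
    """Try every (granularity, stream count) pair once and return the fastest pair."""
    best: Optional[Tuple[float, int, int]] = None
    for gran, streams in product(granularities, stream_counts):
        elapsed = measure_ms(gran, streams)  # one warm-up batch per candidate setting
        if best is None or elapsed < best[0]:
            best = (elapsed, gran, streams)
    assert best is not None, "need at least one candidate setting"
    return best[1], best[2]
```

With a fake cost model that is fastest at a granularity of 32 and improves with more streams, the search picks `(32, 4)` from granularities `[8, 16, 32, 64]` and stream counts `[1, 2, 4]`.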

This figure shows the dynamic optimization process. The green bars represent computing and the blue bars represent communication. At the top of the figure, the first few batches use only one stream for communication, and the communication time is relatively long. In the middle of the figure, moving to the right, communication is first carried out in two streams and then in four load-balanced streams. By the last batch, the best bandwidth performance is achieved.

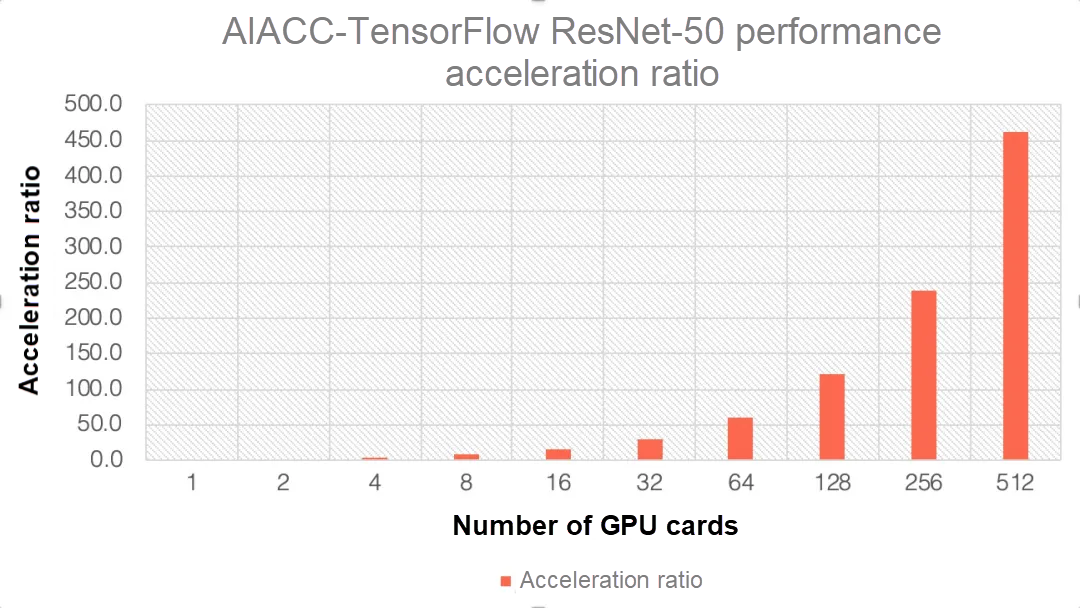

After putting these performance optimization methods to use, we tested our work by training a ResNet-50 model on the ImageNet dataset using different solutions. With 512 P100 cards, training was 462 times faster than with a single card. This is nearly linear acceleration, and it reduced the training time from 5 days to 16 minutes.
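The reported figures can be checked with a few lines of arithmetic. Scaling efficiency is the speedup divided by the number of GPUs. The single-GPU baseline is the "about 5 days" quoted earlier, taken here as exactly 5 days for the calculation.

```python
def scaling_efficiency(speedup: float, num_gpus: int) -> float:
    """Fraction of ideal linear speedup actually achieved."""
    return speedup / num_gpus


single_gpu_minutes = 5 * 24 * 60  # about 5 days on one P100
speedup = 462                     # reported speedup on 512 P100 cards

print(f"efficiency: {scaling_efficiency(speedup, 512):.1%}")  # efficiency: 90.2%
print(f"training time: {single_gpu_minutes / speedup:.1f} min")  # training time: 15.6 min
```

About 90% efficiency on 512 cards is what "almost linear" means here. 7,200 minutes divided by 462 is roughly 16 minutes, consistent with the reported time.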

For our DAWNBench submission, we also released results for large-scale V100-based training: it took only 2 minutes and 38 seconds to train a model to 93% top-5 accuracy.

We also upgraded the architecture with FastGPU, which can help users quickly build large-scale distributed training clusters and optimize business costs on the cloud. Now, let's see how FastGPU can be used to construct large-scale distributed training clusters on the cloud.

To begin with, Alibaba Cloud's cloud computing services provide APIs that can be directly used to create computing resources, storage resources, and network resources. We can encapsulate these APIs through FastGPU to create one large-scale distributed cloud cluster and start large-scale distributed training tasks at the same time.

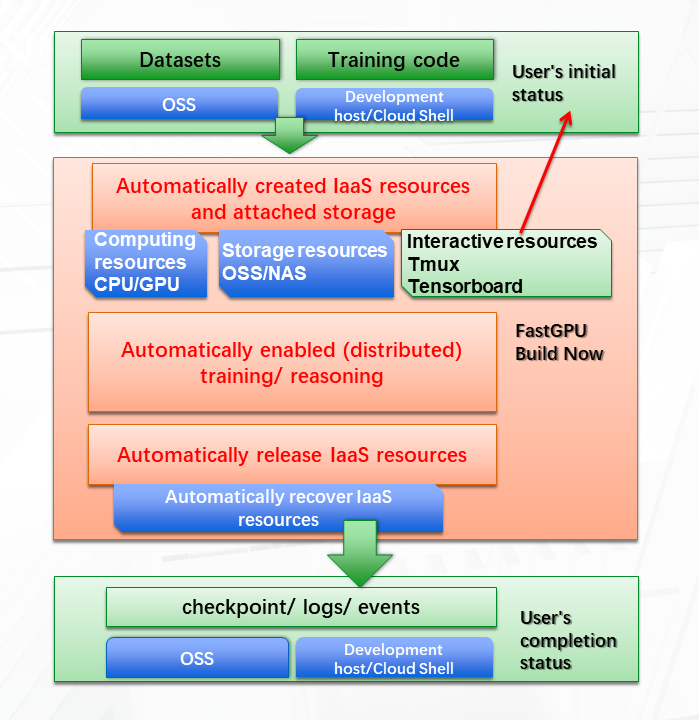

As shown in the preceding figure, the green boxes represent the user, the blue boxes represent Alibaba Cloud resources, and the orange boxes represent FastGPU. Initially, the user uploads the training dataset to Object Storage Service (OSS) and creates an Elastic Compute Service (ECS) instance as the development host that stores the training code (the code can also be stored in Cloud Shell). On this development host, the user can use FastGPU to conveniently create the basic resources for deep learning applications. These include large-scale GPU computing resources, storage resources such as cloud disks and parallel file systems, and interactive resources such as Tmux and TensorBoard. Users can check the training progress in real time through the interactive resources.

After all the resources required for training are ready, the distributed training task will be automatically initiated. After the distributed training task is completed, these infrastructure resources will be automatically released, while the models and log files obtained through training are stored in OSS or the development host, where you can use them.
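The create, train, and release lifecycle can be sketched with a context manager that always releases GPU nodes, whether training succeeds or fails. `HypotheticalCloud` and `training_cluster` are stand-ins invented for this sketch. They are not FastGPU's or Alibaba Cloud's real interfaces.

```python
from contextlib import contextmanager
from typing import Iterator, List, Set


class HypotheticalCloud:
    """Stand-in for cloud resource APIs; NOT FastGPU's real interface."""

    def __init__(self) -> None:
        self.live: Set[str] = set()  # resources currently allocated (and billed)

    def create(self, name: str) -> str:
        self.live.add(name)
        return name

    def release(self, name: str) -> None:
        self.live.discard(name)


@contextmanager
def training_cluster(cloud: HypotheticalCloud, num_nodes: int) -> Iterator[List[str]]:
    nodes: List[str] = []
    try:
        for i in range(num_nodes):
            nodes.append(cloud.create(f"gpu-node-{i}"))
        yield nodes  # run the distributed training job here, then save models/logs
    finally:
        # Release every node even if training raised an error, so nothing sits idle.
        for node in nodes:
            cloud.release(node)
```

Tying the resources' lifetime to a `with training_cluster(cloud, 4) as nodes:` block is one way to keep GPU resources alive only for the duration of the training task.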

FastGPU saves time and money and is easy to use.

First, it saves time. For example, to configure a distributed deep learning environment, you need to prepare the GPU infrastructure resources, network resources, and storage resources in that order, and then configure the deep learning environment for each machine, which includes the specific version of the operating system (OS), GPU driver, CUDA, cuDNN, and TensorFlow. After that, the training data needs to be uploaded to each machine. The next step is to set up the network connecting these machines. It may take an engineer a day to prepare the environment, but with FastGPU, it only takes 5 minutes.

Second, this solution saves money. We can synchronize the lifecycles of GPU resources with that of training. That is, we need to activate the GPU resources only when our training or inference tasks are ready. Once the training or inference task is completed, the GPU resources are automatically released so the resources do not sit idle. You can also create and manage preemptible GPU instances (low-cost instances).

Third, this solution is easy to use. All created resources are infrastructure as a service (IaaS) resources, and all created resources and executed tasks are accessible, adjustable, reproducible, and traceable.

For large-scale distributed training, we want the training performance to grow linearly as the number of GPUs increases. However, this ideal acceleration ratio often cannot be achieved in reality. Even when GPU servers are added, the performance does not increase accordingly.

Two major bottlenecks restrict performance growth. First, the IOPS and bandwidth of the file system limit how quickly multiple GPU servers can concurrently read training files. Second, communication between GPU servers becomes a bottleneck.
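A back-of-the-envelope estimate shows why file I/O becomes a bottleneck. If every GPU reads its own images from shared storage, the aggregate load grows linearly with the number of GPUs. The throughput and file-size inputs below are illustrative assumptions, not measurements from the article:

- 1,000 images/s per GPU.
- About 110 KB per ImageNet JPEG.
- Roughly one file read per image.

```python
from typing import Tuple


def required_read_load(num_gpus: int, images_per_sec_per_gpu: float,
                       avg_image_kb: float) -> Tuple[float, float]:
    """Aggregate file-system load when each GPU reads its own training images."""
    iops = num_gpus * images_per_sec_per_gpu     # roughly one file read per image
    bandwidth_mb_s = iops * avg_image_kb / 1024  # KB/s -> MB/s
    return iops, bandwidth_mb_s


# Illustrative assumptions only: 128 GPUs, 1,000 images/s per GPU, ~110 KB per image.
iops, mb_s = required_read_load(128, 1000, 110)
print(f"{iops:,.0f} reads/s, {mb_s:,.0f} MB/s")  # 128,000 reads/s, 13,750 MB/s
```

A single NAS volume or disk is unlikely to sustain that load, which is why a parallel file system with aggregated IOPS and bandwidth is needed.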

CPFS, which is easy to set up on Alibaba Cloud, provides high-concurrency file access, and AIACC ensures the performance of large-scale distributed communication.

Finally, I would like to share our experience in optimizing application architectures and performance in four large-scale deep learning scenarios: large-scale image recognition, large-scale CTR estimation, large-scale image classification, and large-scale natural language understanding.

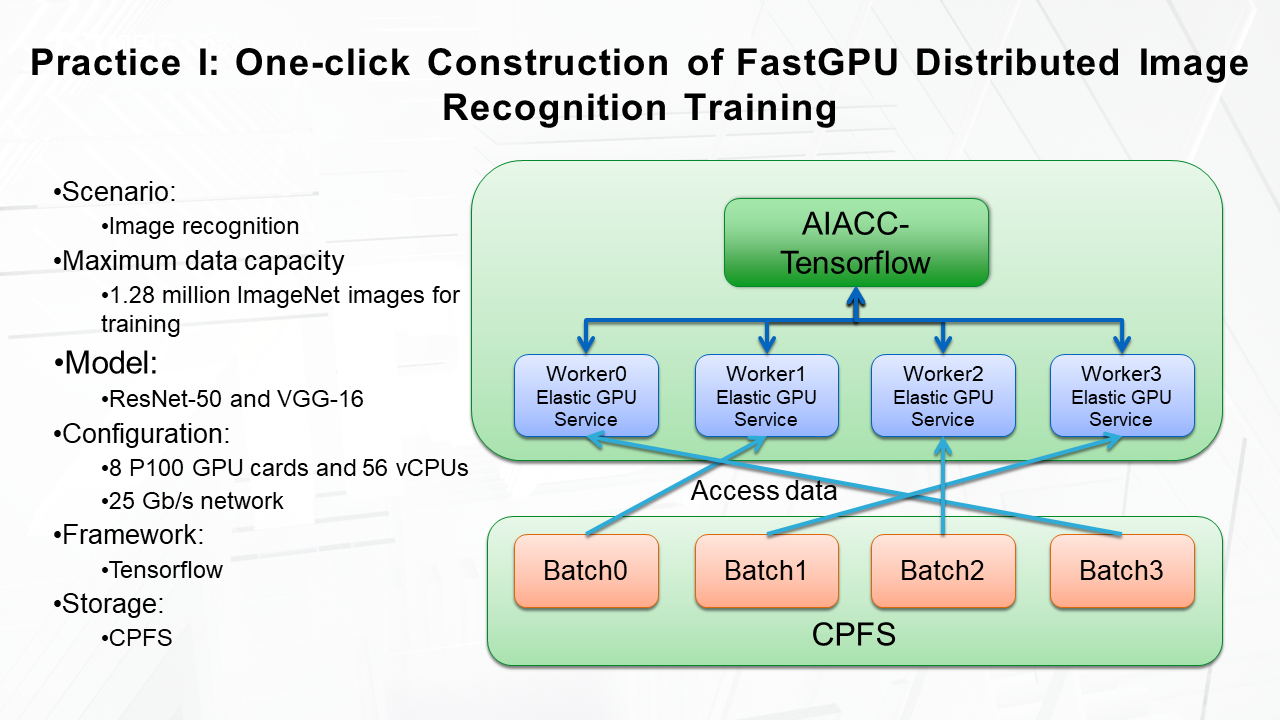

In our first case study, we will create a distributed training task for large-scale image recognition with one click.

In this scenario, we must train ResNet-50 and VGG-16 models on 1.28 million ImageNet images using the TensorFlow training framework.

FastGPU is used to deploy the architecture on the right with one click. This architecture includes multiple servers with eight P100 GPUs each, a 25 Gbit/s network, a CPFS file system, and the AIACC-TensorFlow framework for distributed training.

Workers on the GPU servers train in parallel and concurrently read training data from CPFS. CPFS provides the aggregate IOPS and aggregate bandwidth needed for concurrent data access by the GPU servers, while AIACC ensures optimal multi-GPU communication performance.

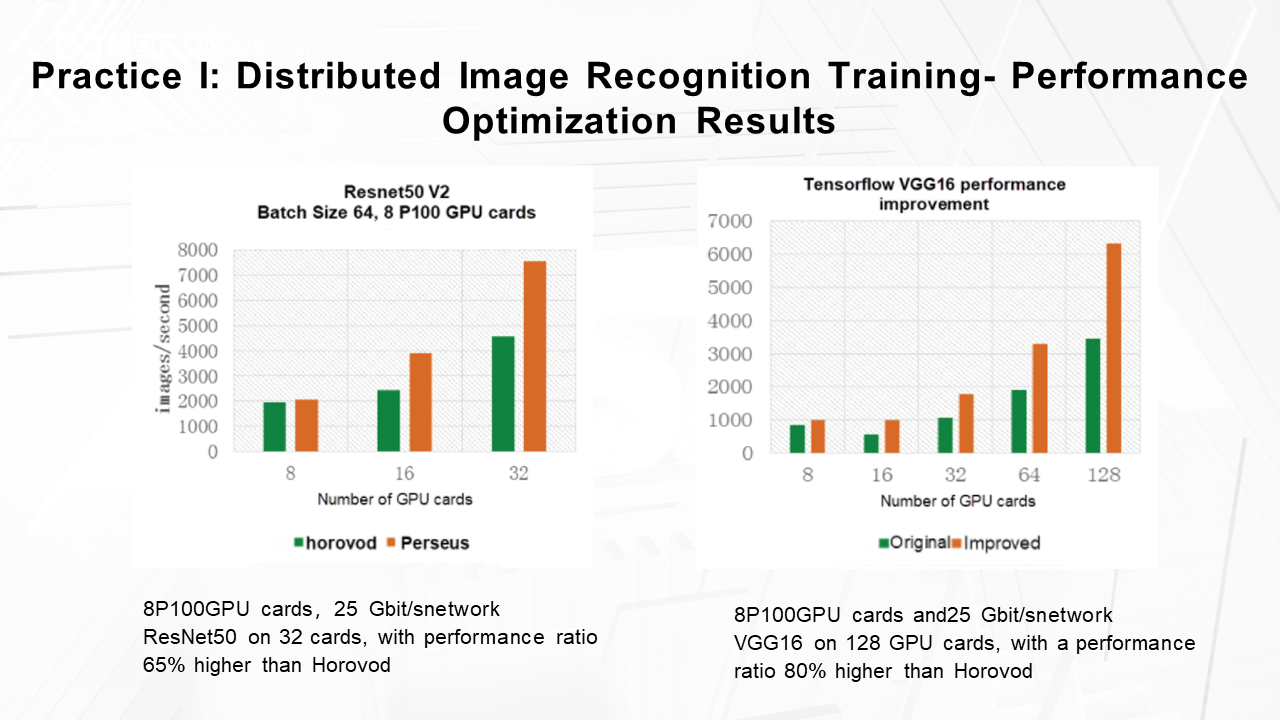

The following figure shows the results of distributed training performance optimization for large-scale image recognition. Uber also developed an open-source distributed training optimization framework called Horovod, which optimizes ring-allreduce communication. Its distributed performance is better than that of native TensorFlow and other frameworks. Compared to Horovod, our solution achieved a training performance 65% higher on 32 P100 cards and 80% higher on 128 P100 cards.

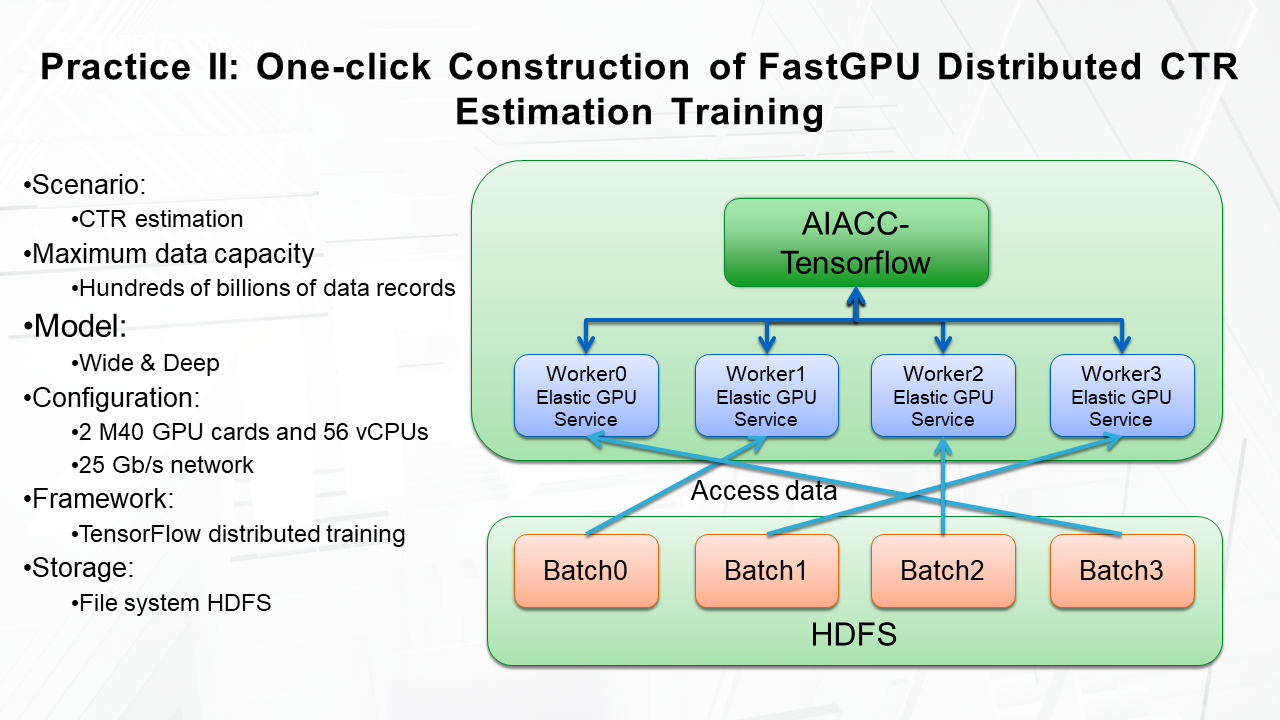

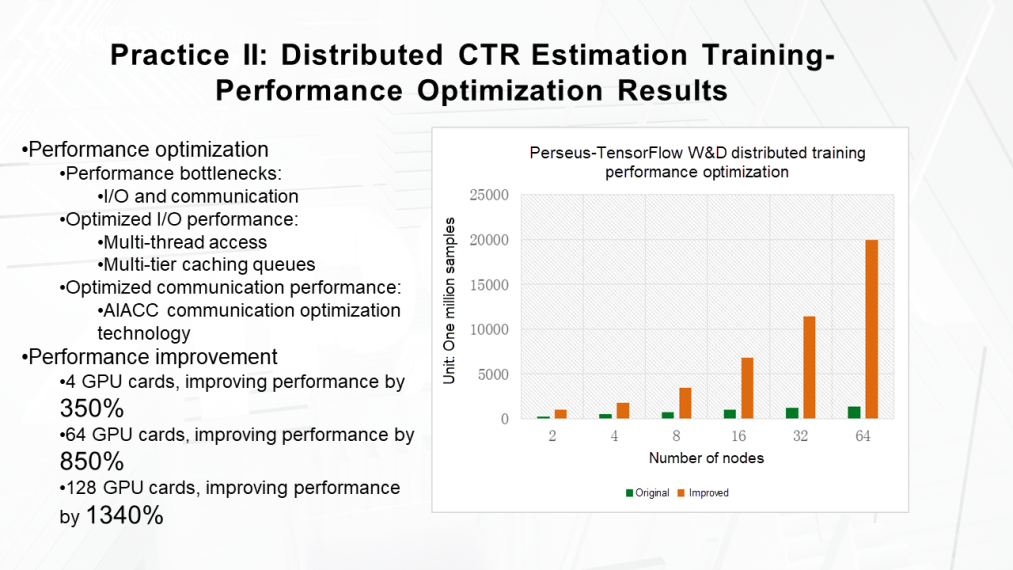

For our second case study, we will look at the distributed training of large-scale CTR estimation.

CTR estimation considers online behavior such as clicks, time-on-page, likes, forwards, and purchases in order to make targeted recommendations concerning content, products, or advertisements that users may be interested in.

This solution must train a Wide & Deep model on 100 billion entries using TensorFlow.

First, we used FastGPU to deploy the architecture with one click, including multiple servers with two M40 GPUs each, a 10 Gbit/s network, and an HDFS file system. Then, we used the AIACC-TensorFlow framework for distributed training.

On the right side of the figure, the green boxes indicate the performance of native TensorFlow. As the number of nodes increases, performance grows far less than proportionally. With this architecture, it was impossible to train the model on 100 billion entries in one day.

We pinpointed the application performance bottlenecks: the I/O performance of accessing files in HDFS and communication between the machines.

We optimized the file access I/O performance by using multi-thread parallel access and multi-buffer queues and used AIACC's communication optimization technology to boost the communication performance between the machines.
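Multi-thread parallel access with multiple buffers can be sketched as a thread pool that keeps a bounded number of file reads in flight ahead of the training loop, while still yielding data in input order. `read_fn` is a placeholder for whatever HDFS client call the job uses. The function name and default values are hypothetical.

```python
from collections import deque
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def prefetching_reader(paths: Iterable[str], read_fn: Callable[[str], T],
                       num_threads: int = 8, max_in_flight: int = 16) -> Iterator[T]:
    """Read files concurrently and yield their contents in input order.

    Up to max_in_flight reads are queued ahead of the consumer (the "multi-buffer"),
    and up to num_threads of them run at the same time.
    """
    path_iter = iter(paths)
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        # Fill the buffer: start the first max_in_flight reads in parallel.
        in_flight = deque(pool.submit(read_fn, p) for p in islice(path_iter, max_in_flight))
        while in_flight:
            result = in_flight.popleft().result()  # wait for the oldest read only
            # Refill: each consumed slot immediately starts the next read.
            for p in islice(path_iter, 1):
                in_flight.append(pool.submit(read_fn, p))
            yield result
```

While the training step consumes one record, up to `num_threads` other reads proceed in the background, so I/O latency overlaps with computation instead of adding to it.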

In the end, this solution improves performance by 350% on 4 GPU cards, 850% on 64 GPU cards, and 1340% on 128 GPU cards and can train a model on 100 billion entries in 5 hours.

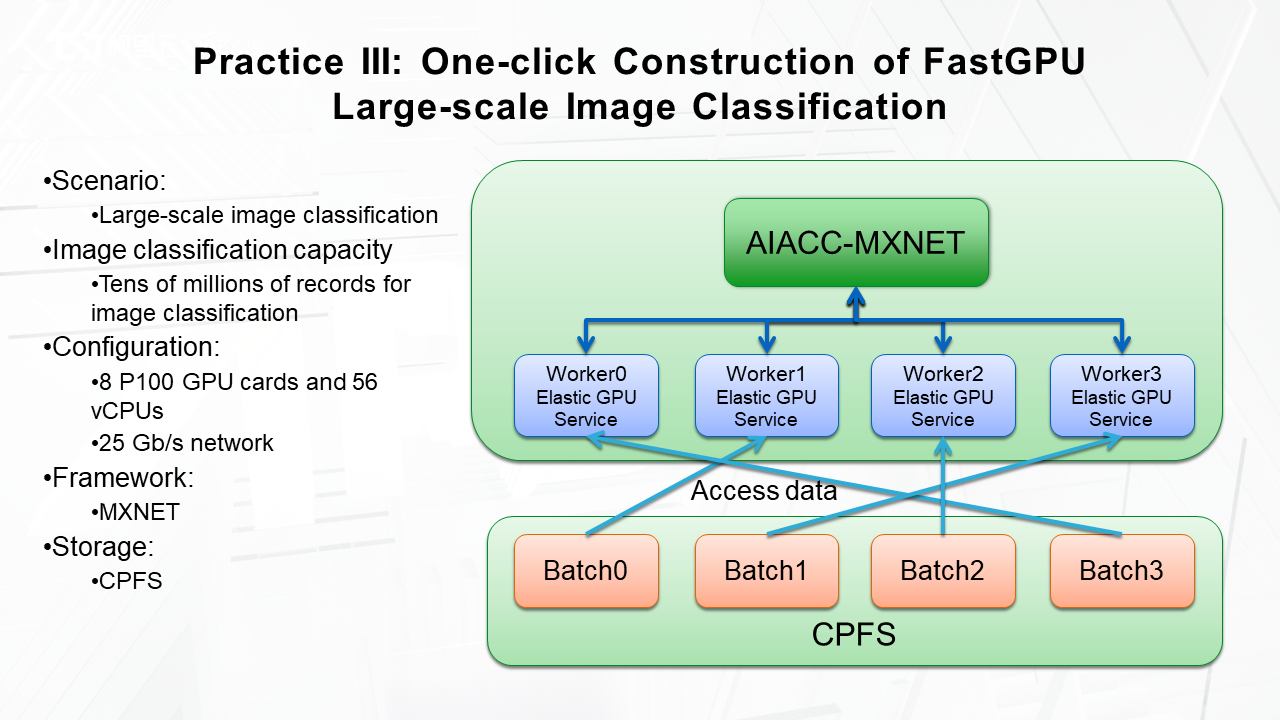

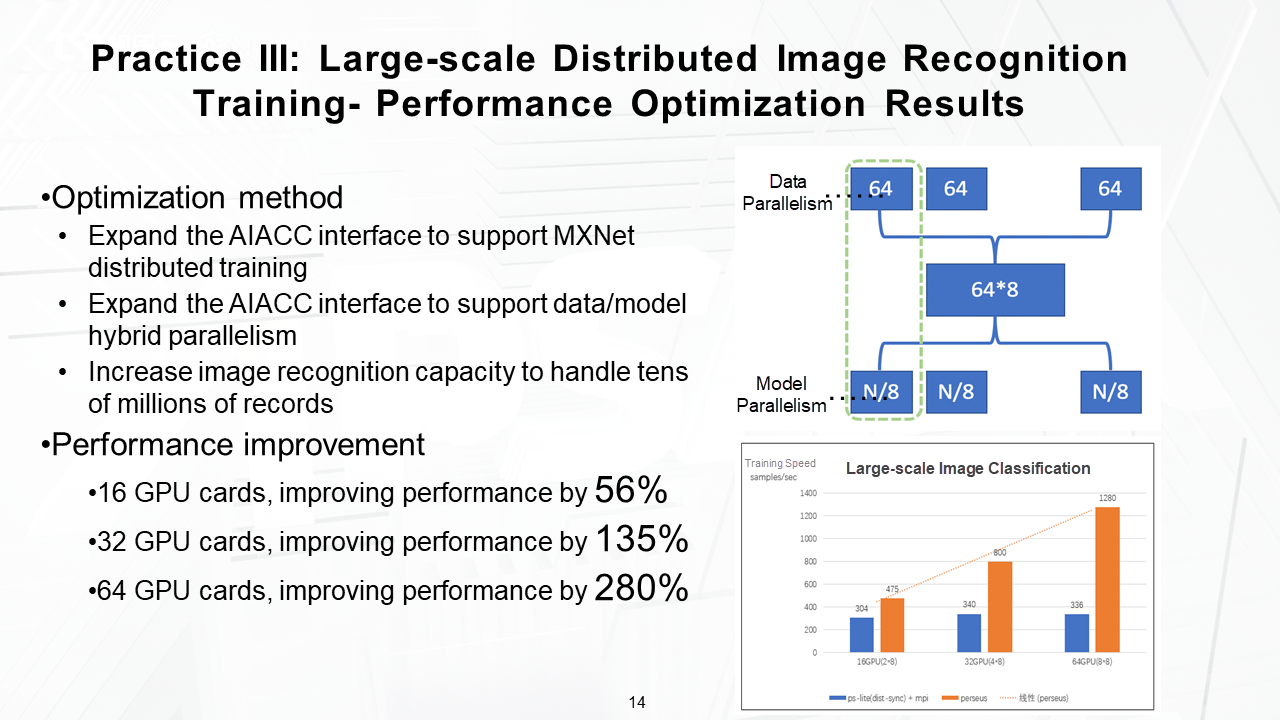

The third case study deals with distributed training for large-scale image classification.

In this scenario, distributed training becomes more complex as the number of classes increases. This solution uses the MXNet framework to train a model that classifies images into tens of millions of classes.

First, we used FastGPU to deploy multiple servers with eight P100 GPUs each, a 25 Gbit/s network, and a CPFS file system, and then used AIACC-MXNet for distributed training.

When images must be classified into tens of millions of classes, data parallelism alone is not feasible, so we need a hybrid solution that combines data parallelism and model parallelism. We therefore extended the AIACC interface to support MXNet's KVStore interface and the hybrid data-parallel/model-parallel solution. With AIACC-MXNet, image recognition can scale to tens of millions of classes. The final results showed that performance improved by 56% on 16 GPU cards, 135% on 32 GPU cards, and 280% on 64 GPU cards.
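Why data parallelism alone fails becomes clear from the size of the classification layer. Assuming a 512-dimensional feature vector, a fully connected layer for 10 million classes has 512 × 10,000,000 = 5.12 billion weights, or about 20.5 GB in float32. That is larger than a single P100's memory (16 GB at most) and far too much to allreduce every step.

One common model-parallel remedy, sketched here as an assumption rather than AIACC's method, shards the classes across workers. Each worker computes logits only for its shard, and the softmax is normalized using just two scalars per sample exchanged across workers: an allreduce-max and an allreduce-sum. These collectives are simulated locally below.

```python
import math
from typing import List


def sharded_softmax(logit_shards: List[List[float]]) -> List[List[float]]:
    """Softmax over classes split across workers, without gathering all logits.

    logit_shards[w] holds the logits of the classes owned by worker w (non-empty).
    """
    # Each worker computes its local max; an allreduce-max yields the global max.
    global_max = max(max(shard) for shard in logit_shards)
    # Each worker sums its shifted exponentials; an allreduce-sum yields the denominator.
    global_sum = sum(sum(math.exp(z - global_max) for z in shard) for shard in logit_shards)
    # Each worker normalizes only its own classes.
    return [[math.exp(z - global_max) / global_sum for z in shard] for shard in logit_shards]
```

The result matches an ordinary softmax over the concatenated logits. Meanwhile, each worker stores and updates only its slice of the classification weights, while the layers below remain data-parallel.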

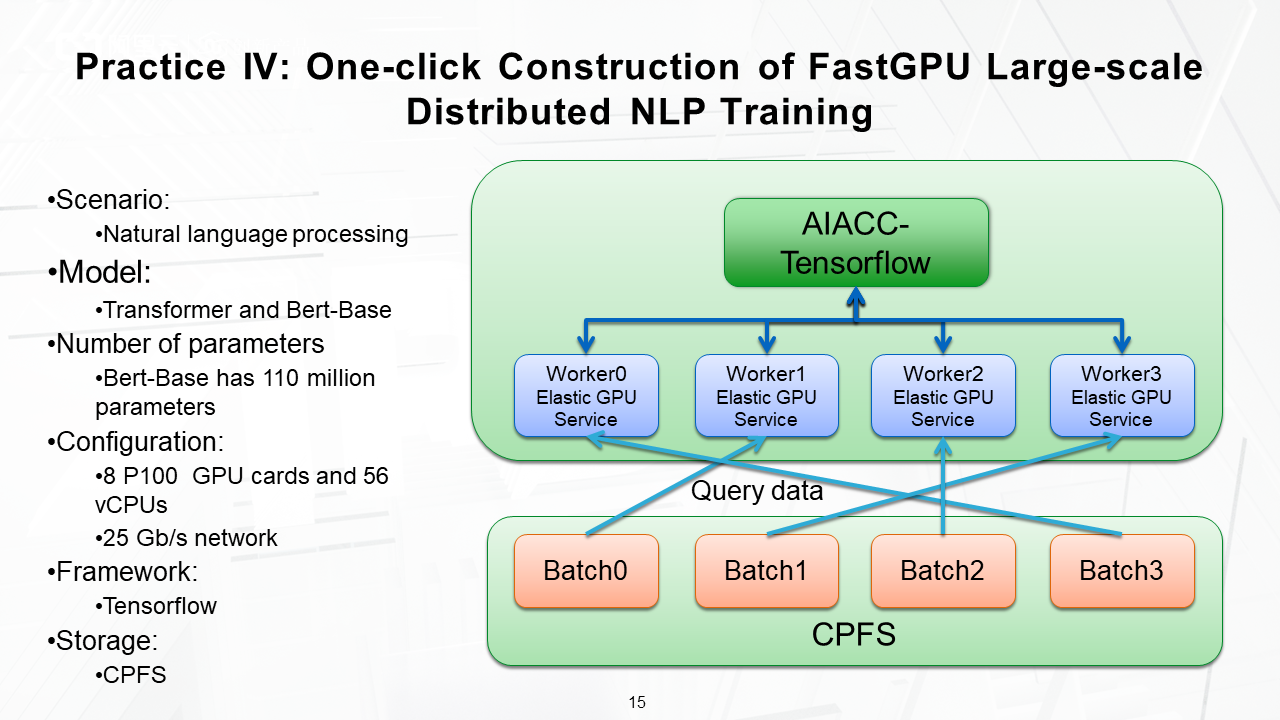

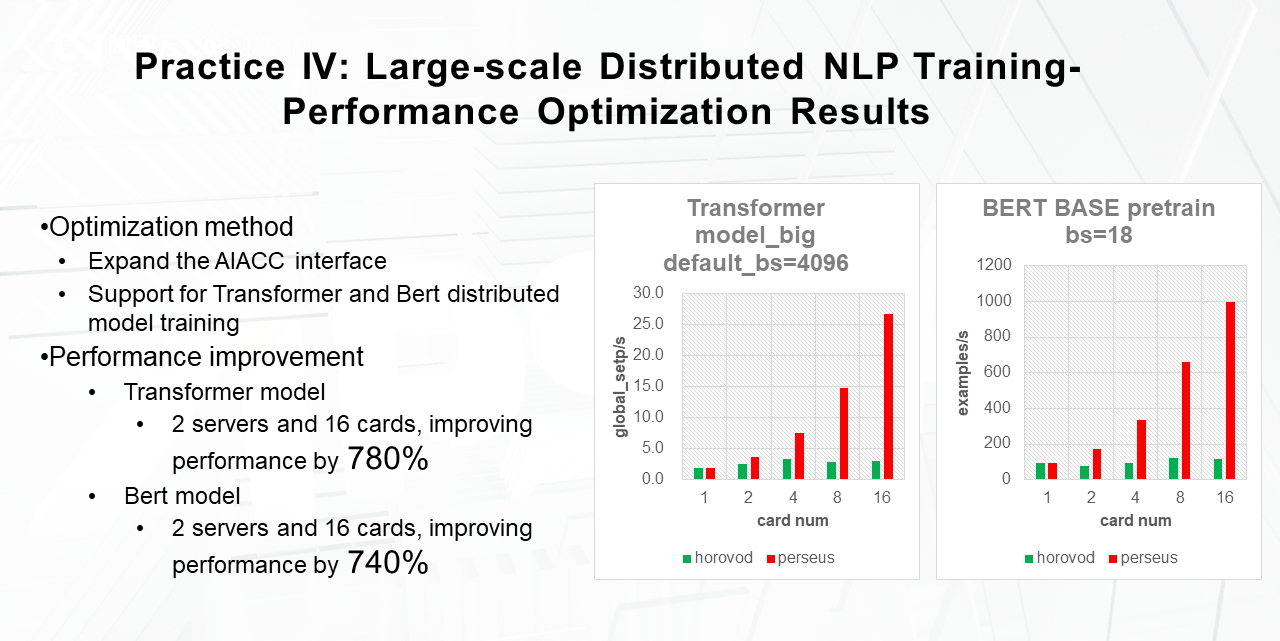

In the last case study, we will look at distributed training for large-scale natural language understanding.

In this solution, we use the Transformer and BERT models. BERT is a very large open-source model developed by Google that has achieved exceptional results in NLP competitions. It has 110 million parameters, which makes it very challenging to achieve a high speedup in distributed training. We used FastGPU to deploy multiple servers with eight P100 GPUs each, a 25 Gbit/s network, and a CPFS file system, and conducted distributed training using AIACC-TensorFlow.

We extended the AIACC interface to support distributed training of the Transformer and BERT models. In the end, on 16 GPU cards, training performance improved by 780% for the Transformer model and by 740% for the BERT model.

We will share more source code for large-scale deep learning in the future.
