
By Deng Bin (nicknamed Anmie), compiled by Zhao Yuying, AI Frontline

Speaker: Lin Wei | The Development Trends of the AI Ecosystem

Lin Wei, a researcher at the Alibaba Cloud Machine Learning Platform for AI (PAI) division, delivered a speech titled "AI Powers up Double 11 on the Cloud for 500 Million Consumers." This article presents excerpts from his speech to describe how AI capabilities enable Double 11 on the Cloud.

Lin Wei said, "I specialized in system development, and recently noticed at several conferences that an increasing number of people in this field are turning to artificial intelligence (AI)." He recollects that during the AI boom in the late 1990s when he worked in the school's AI laboratory, how he was worried about the performance of his models. However, later he realized that his fears were misplaced as the models were far from being usable. The wave of AI simmered down for a period until recent years to bounce again.

He noted that at campus events he found many students studying AI algorithms. Although neural networks, genetic algorithms, and simulation algorithms were invented many years ago, they have gained momentum in recent years thanks to the growth in data and computing power.

Cloud computing plays an important role in this process. Sufficient computing power is necessary to produce more effective models. This is one crucial reason why Alibaba embraced cloud computing in 2009. Today, Alibaba's e-commerce business is one of the key accounts of Alibaba Cloud and Double 11 is the most important event of the year for this business.

During the past few years, the gross merchandise volume (GMV) of Alibaba's Double 11 has risen continuously, supported by the fact that all the core systems now run on the cloud. After migrating all the core systems to the cloud, the team realized that AI cannot deliver without computing power: using AI to improve efficiency works only with strong computing support. The Double 11 Shopping Festival provides a large-scale proving ground for putting ideas about system architecture, data processing, and data mining into practice.

Considering the Double 11 scenario, Lin lists three key characteristics:

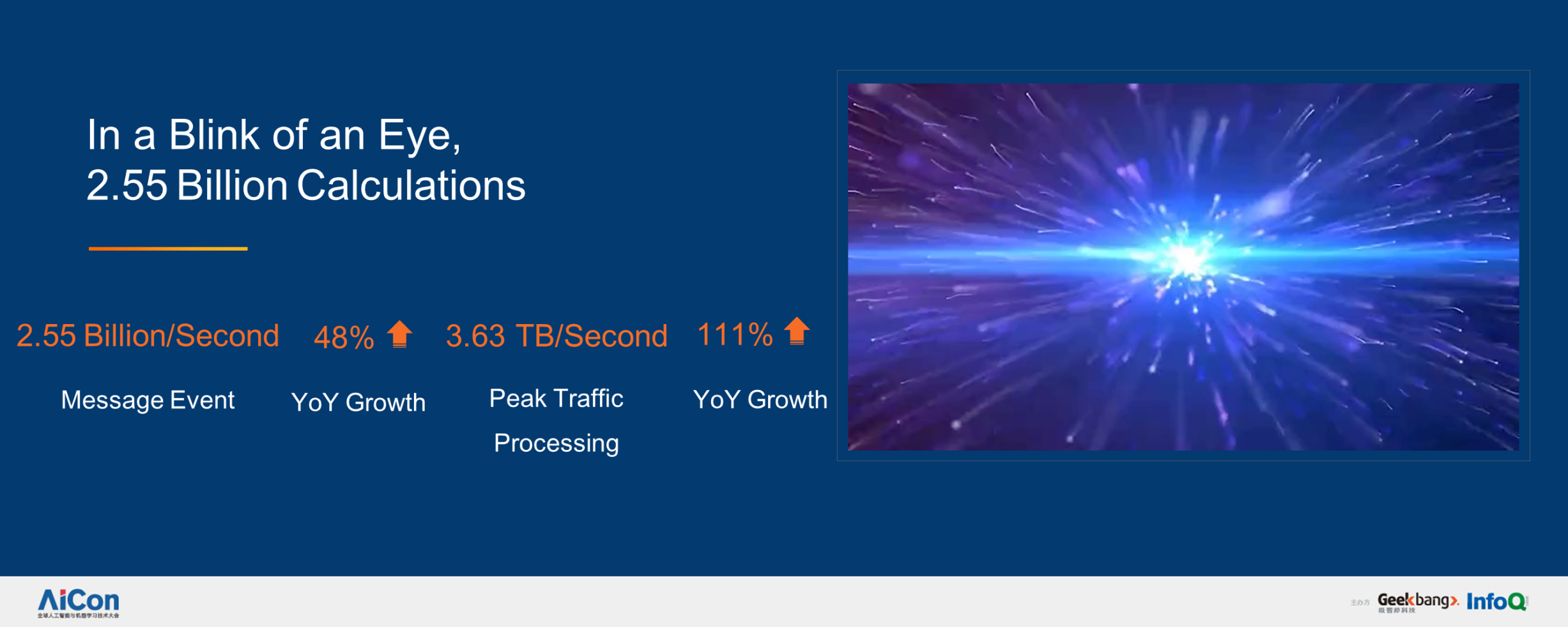

1. Real-time. In the 24 hours of Double 11, it is imperative to analyze data and give feedback to sellers in a timely manner. Therefore, acting in real-time is critical. The system supporting the Double 11 dashboard uses Flink to perform real-time computing. It requires more fine-grained metrics than just the GMV, including purchase interest, product category, supply to sales ratio, channel, warehouse location, and product sourcing. With real-time analysis and timely feedback, sellers and courier companies get the information they need to adjust their strategies with agility. During this year's Double 11, 2.55 billion messages were processed per second, including transaction information and package delivery requests.

2. Scale. In addition to real-time feedback, detailed statements must be given to banks and merchants after Double 11. This year, thanks to the elasticity of the cloud platform, it took only one day (November 12) to complete all the reports. With transactions at such a scale, serving merchants efficiently is also a challenge. In the past, phone calls and human agents were used. At today's scale, however, AI technologies are needed to serve merchants and assist with package delivery. For example, AI-based bots may ask whether users are at home or where packages should be dropped off.

Contrary to the common impression that AI is distant from everyday life, it now helps to improve user experience. For example, AI helps in scenarios such as assisting with package delivery and providing personalized recommendations on the homepage of Taobao.

Taobao's recommendation page now shows better results. This is the result of analyzing 250 million video clips in one day. Lin said, "As many sellers now use short videos to promote products, we analyze 250 million videos, with 150 million products analyzed per day on average. By calculating the number of users purchasing products after viewing videos, we find that the effective video length is 120 seconds on average. This new technology can drive new scenarios."

3. Artificial Intelligence. Double 11 is driven by AI and data. Real-time, scale, and AI complement each other, greatly improving the efficiency of Double 11 and the computing capability of the system, which powers the GMV of RMB 268.4 billion.
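To make the real-time characteristic more concrete, here is a minimal sketch in plain Python (it is not Flink code) of a tumbling-window aggregation that breaks transaction amounts down by product category, one of the fine-grained metrics mentioned above. The event layout, the one-second window, and the function name `aggregate_by_window` are assumptions made for illustration only.

```python
from collections import defaultdict


def aggregate_by_window(events, window_seconds=1):
    """Group order events into tumbling windows and sum the amount per category.

    Each event is a (timestamp_seconds, category, amount) tuple. The field
    layout and the window size are hypothetical.
    """
    windows = defaultdict(lambda: defaultdict(float))
    for timestamp, category, amount in events:
        # Align the timestamp to the start of its window.
        window_start = int(timestamp // window_seconds) * window_seconds
        windows[window_start][category] += amount
    # Convert to plain dicts so the result is easy to inspect or serialize.
    return {start: dict(totals) for start, totals in sorted(windows.items())}


events = [
    (0.2, "apparel", 120.0),
    (0.7, "electronics", 899.0),
    (1.1, "apparel", 80.0),
    (1.9, "apparel", 40.0),
]
result = aggregate_by_window(events)
```

A streaming engine would emit each window as soon as it closes instead of collecting all events first, but the grouping logic is the same.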

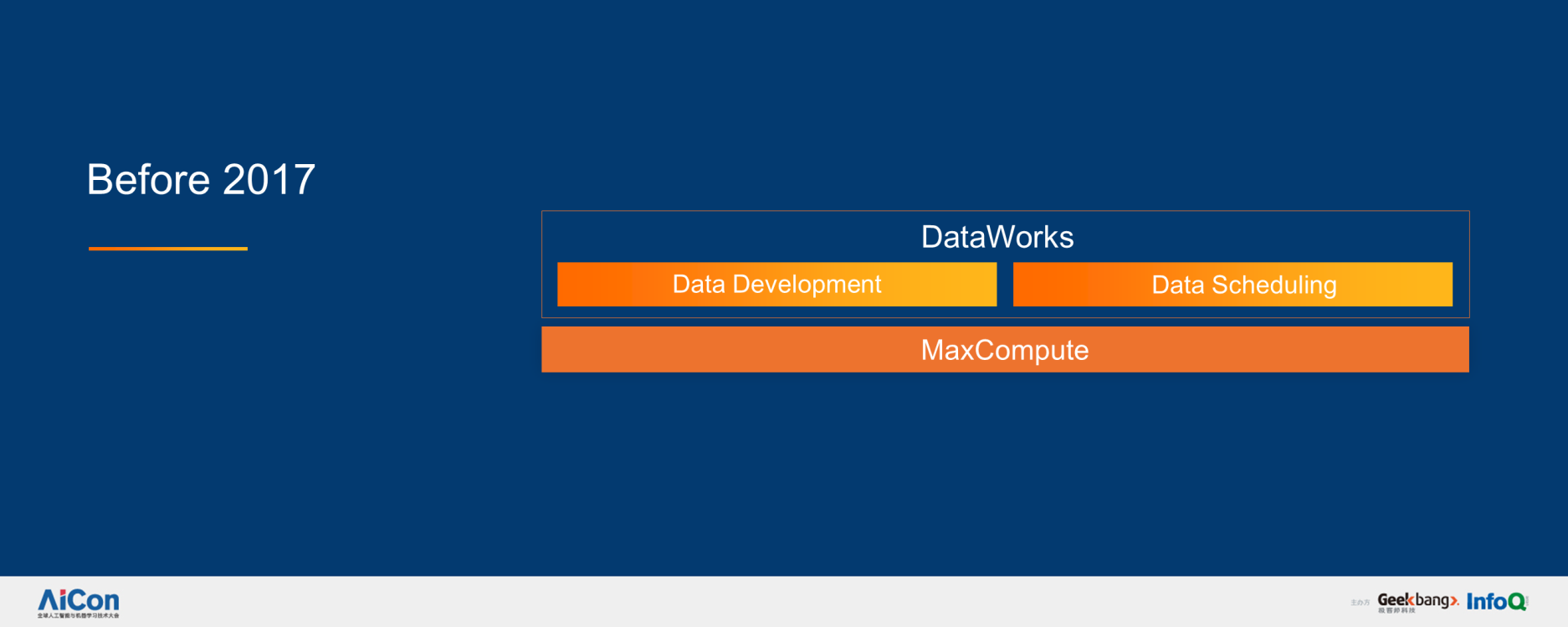

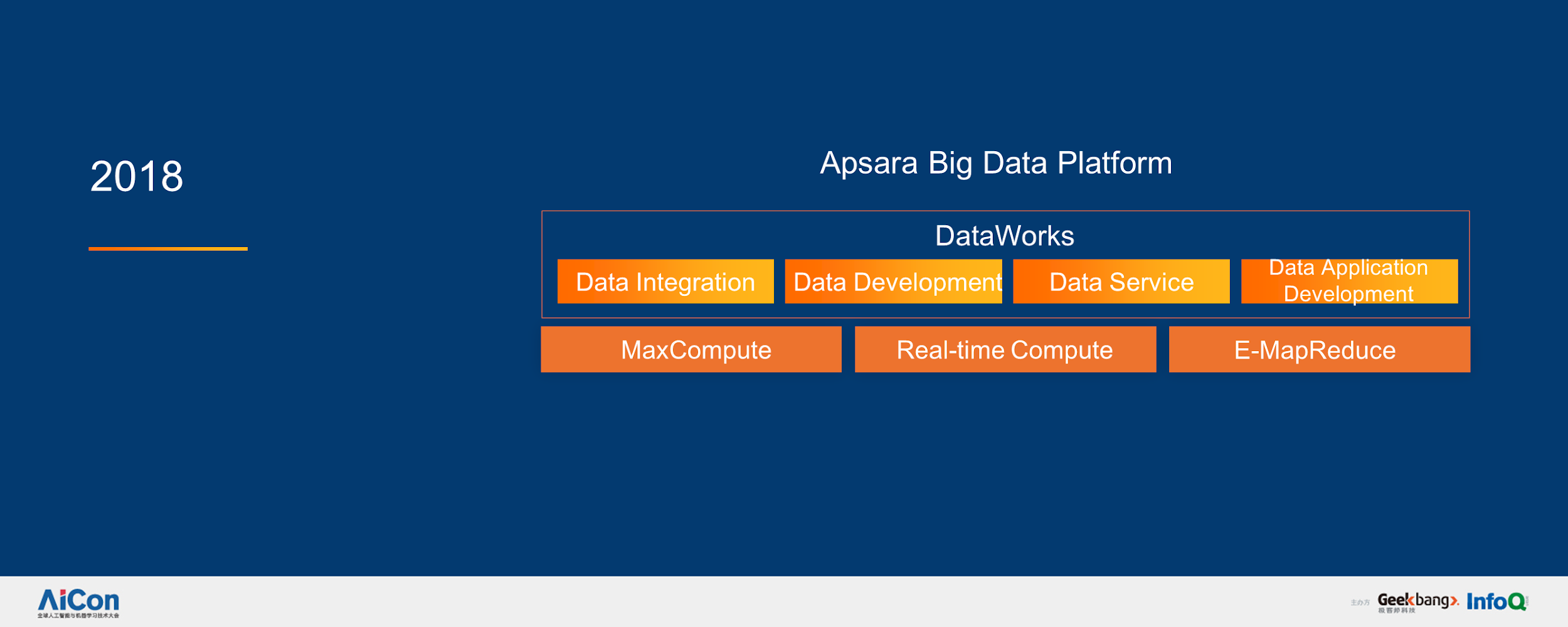

Now, let's talk about the technology used at Double 11. Before 2017, Double 11 used a simple system that mainly processed data and generated reports. A year and a half ago, it began to add real-time features to support business decision-making with real-time data. That's how MaxCompute was born.

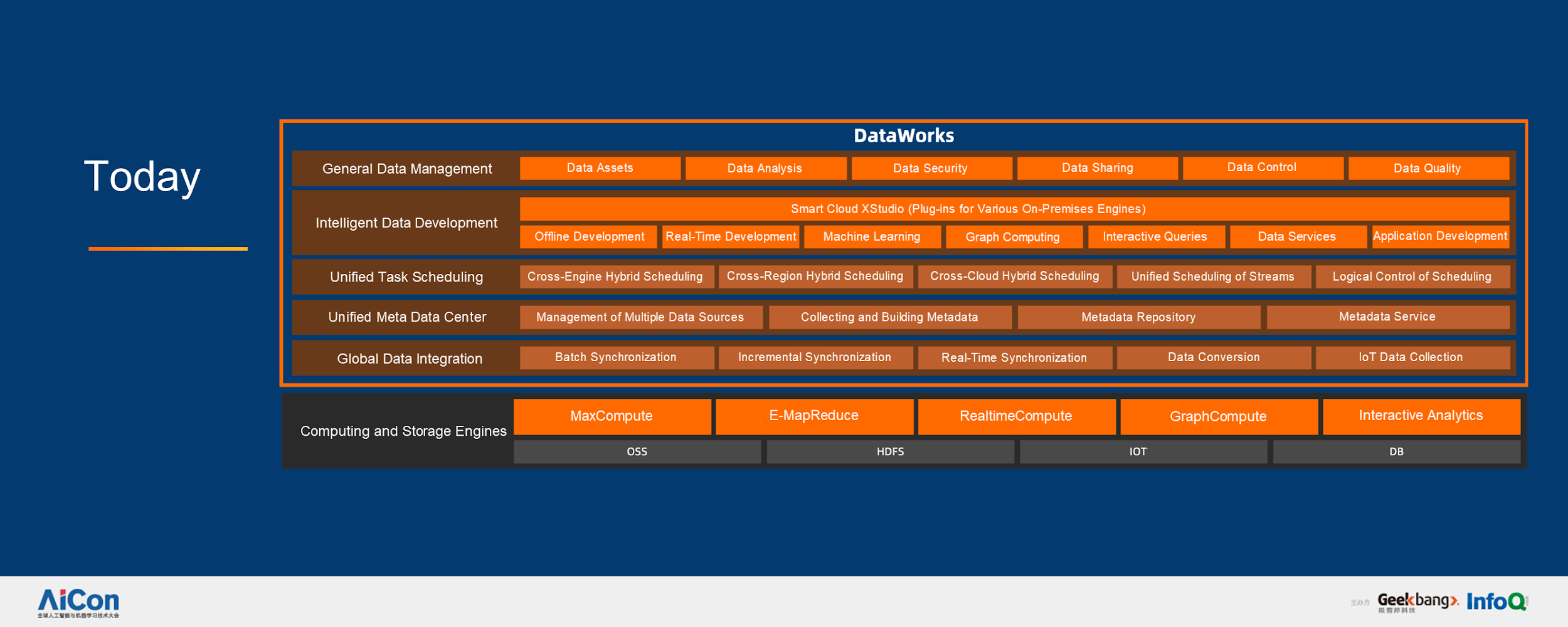

Today, the technology backend is highly complex, incorporating powerful computing engines that are capable of comprehensive data integration, centralized data source management, task management, intelligent data development, and data synthesis governance.

In the final analysis, AI and computing are interdependent. AI thrives only on a foundation of sufficient computing power. Therefore, a powerful data processing platform is needed to run the analysis and extraction that drive innovation. Algorithm engineers can then try various models and ways of structuring machine learning to improve AI efficiency and accuracy.

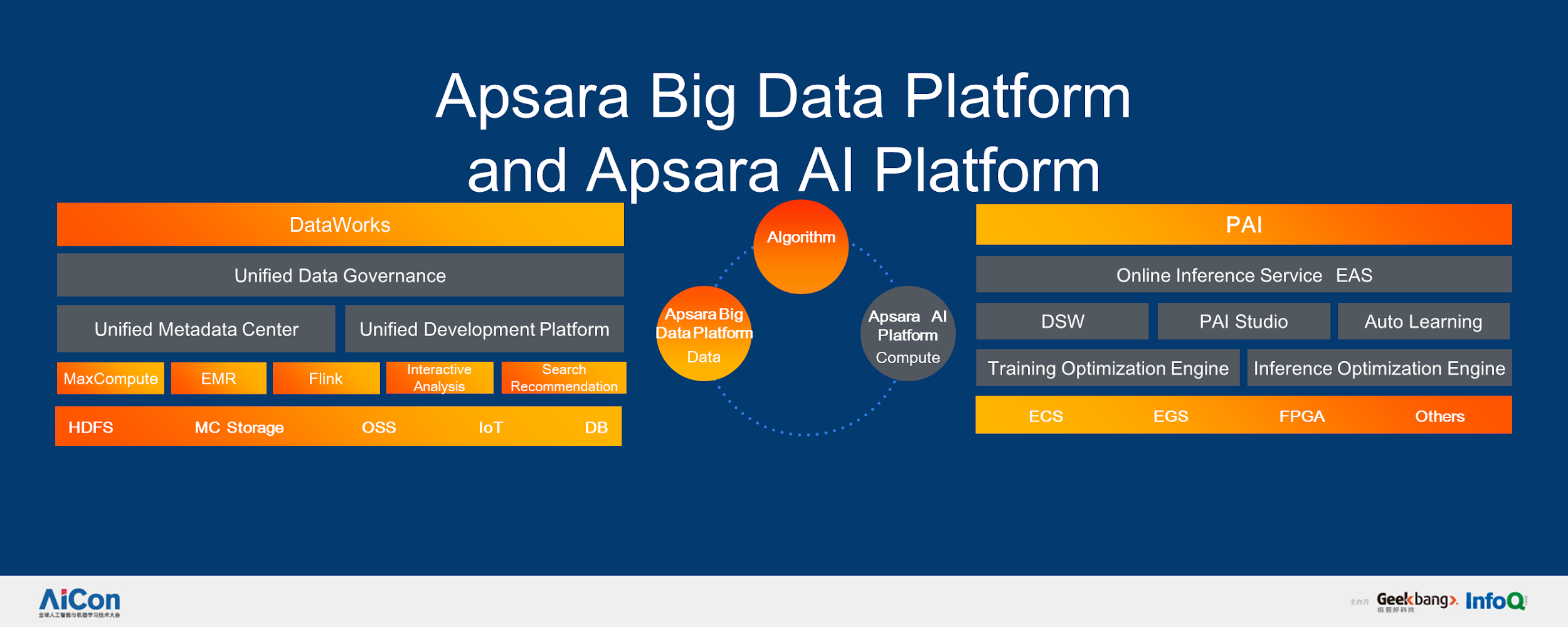

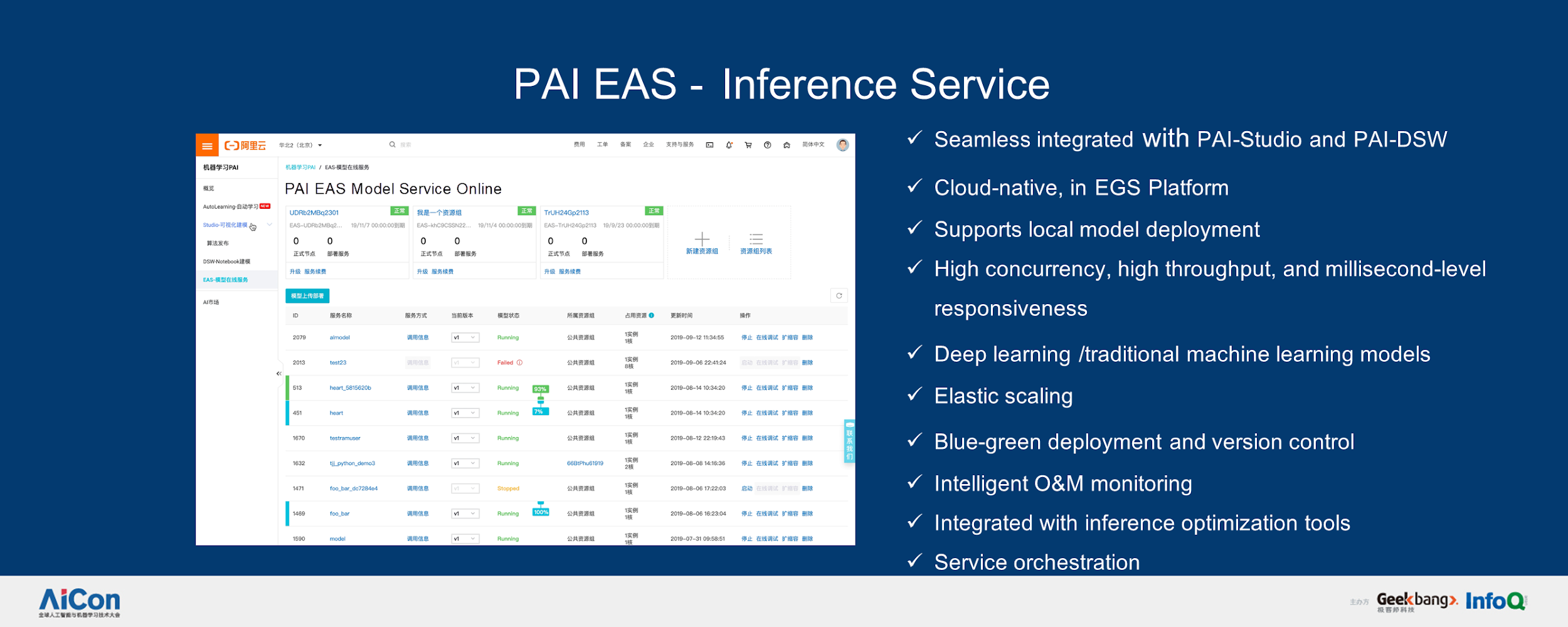

The preceding sections mainly focus on AI scenarios. Now let's discuss the AI technology behind such scenarios with a major focus on the Apsara AI Platform. Machine Learning Platform for AI (PAI) and Elastic Algorithm Service (EAS) for online inference are at the upper layer of this platform, Data Science Workshop (DSW), PAI Studio, and Auto Learning are at the middle layer, and the optimization engine and the inference optimization engine are at the lower layer. The Apsara AI Platform is designed to solve large-scale distributed data processing problems.

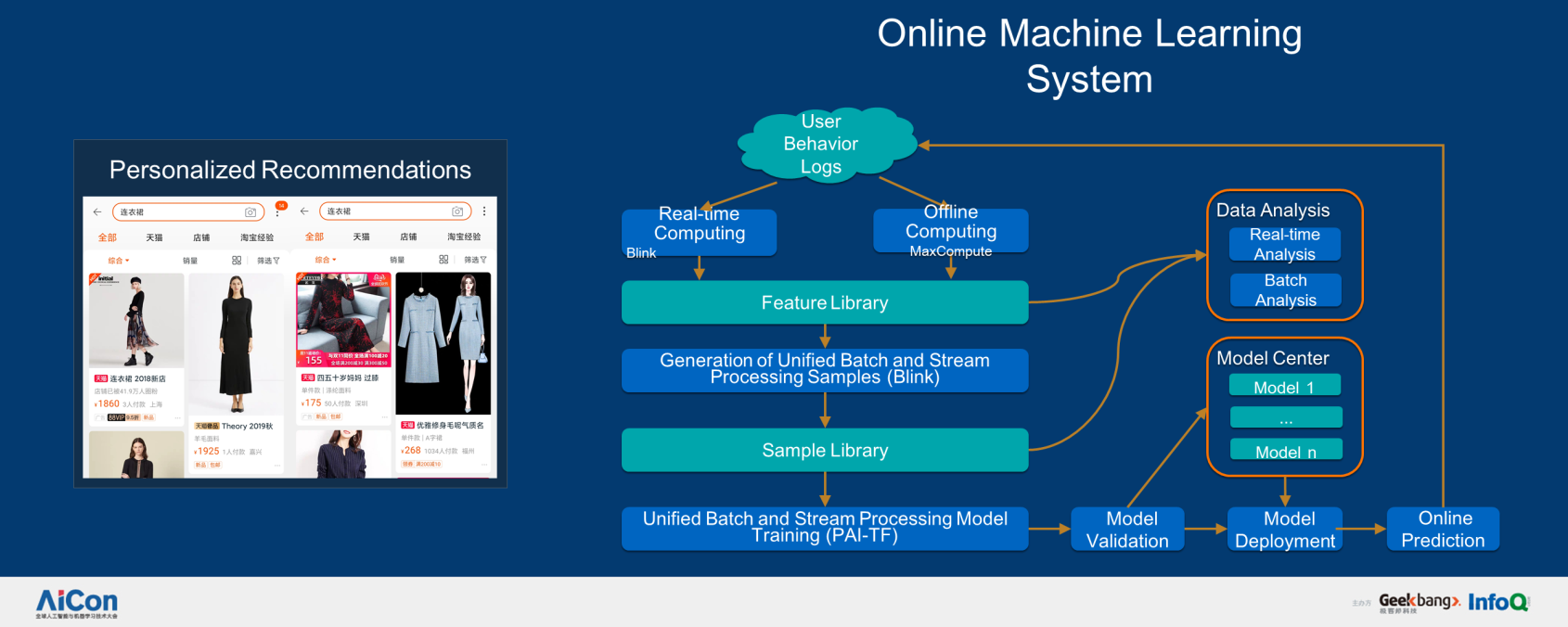

An online machine learning system already exists. It performs real-time and offline computations on user behavior logs, extracts features into a feature library, generates unified stream and batch samples for the sample library, and eventually conducts unified stream and batch model training. So why is another system needed? The first answer is the need for real-time responses. Lin said, "We must closely follow the fast-changing user interests, whereas traditional searching is insensitive to such changes. If we update models every two weeks, we will miss several waves of best-selling products."

Therefore, it is necessary to make real-time judgments using online machine learning, which works closely with deep learning. In a non-real-time situation, engineers can take time for refined feature engineering and a better understanding of the data. Deep learning captures the relationships among data automatically, and its advantage is that it takes the place of experts in this work. However, the process involves massive computing workloads. Alibaba Cloud's online machine learning system passes the logs of Double 11 to the real-time computing platform to form data sets, generates samples by aggregating data according to IDs, and eventually performs incremental learning, validation, and deployment. Thus, models are rapidly updated to meet changing user and business demands.
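The loop described above (aggregate logs by ID into samples, learn incrementally, validate, then deploy) can be sketched as follows. This is a toy illustration under stated assumptions, not the PAI implementation: the log fields, the logistic-regression model, and the helper names `build_samples`, `incremental_update`, and `log_loss` are all hypothetical.

```python
import math
from collections import defaultdict


def build_samples(logs):
    """Aggregate raw log events by request ID into (features, label) samples."""
    grouped = defaultdict(lambda: {"features": {}, "label": 0})
    for log in logs:
        sample = grouped[log["id"]]
        if log["type"] == "impression":
            sample["features"] = log["features"]
        elif log["type"] == "purchase":
            sample["label"] = 1
    return [(s["features"], s["label"]) for s in grouped.values() if s["features"]]


def predict(weights, features):
    """Logistic-regression probability over sparse named features."""
    score = sum(weights.get(name, 0.0) * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-score))


def incremental_update(weights, samples, learning_rate=0.1):
    """Return new weights after one SGD pass over the new samples only."""
    updated = dict(weights)
    for features, label in samples:
        error = predict(updated, features) - label
        for name, value in features.items():
            updated[name] = updated.get(name, 0.0) - learning_rate * error * value
    return updated


def log_loss(weights, samples):
    """Average binary cross-entropy, clipped to avoid log(0)."""
    eps = 1e-12
    total = 0.0
    for features, label in samples:
        p = min(max(predict(weights, features), eps), 1 - eps)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(samples)


logs = [
    {"id": "r1", "type": "impression", "features": {"cat:shoes": 1.0}},
    {"id": "r1", "type": "purchase"},
    {"id": "r2", "type": "impression", "features": {"cat:books": 1.0}},
]
samples = build_samples(logs)
serving_weights = {}
candidate = incremental_update(serving_weights, samples)
# Deploy only if the candidate does not regress. A real system would validate
# on held-out data; the same samples are reused here for brevity.
if log_loss(candidate, samples) <= log_loss(serving_weights, samples):
    serving_weights = candidate
```

The key point is that each update starts from the currently deployed weights and touches only the newly arrived samples, so the model can be refreshed far more often than a full retraining cycle allows.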

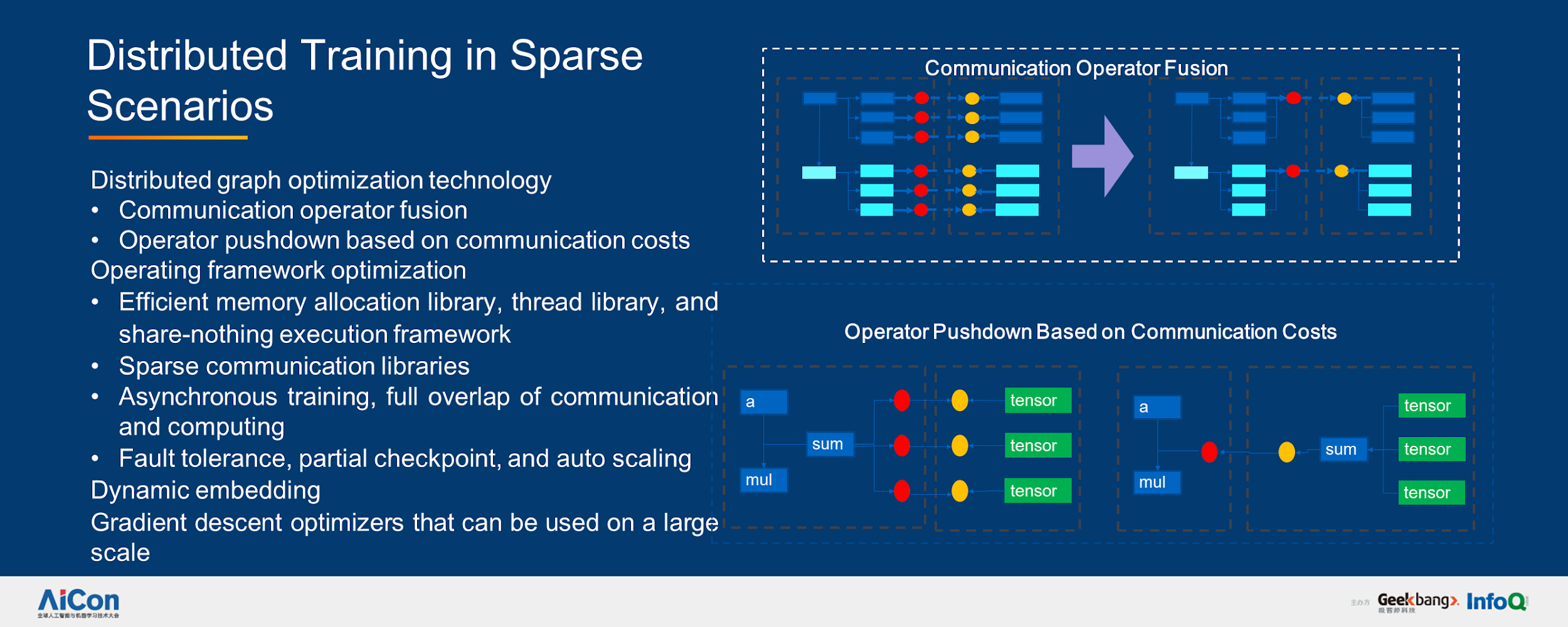

This process poses another challenge. To make personalized recommendations, the model must be massive, which necessitates very large distributed training for sparse scenarios. Currently available open-source machine learning frameworks do not meet this demand for scale, because training on vast quantities of data in sparse scenarios requires several optimizations. Deep learning is capable of describing large, fine-grained graphs. However, it is imperative to consider how to split these graphs for a better trade-off between computation and communication.

Lin said, "We implement the distributed graph optimization technology by combining communication operator fusion and operator pushdown based on communication costs." The following figure shows the optimization of the running framework using various features and tools, including an efficient memory allocation library (such as a thread library and a share-nothing execution framework), sparse communication, asynchronous training, full overlap of communication and computation, fault tolerance, partial checkpoints, auto-scaling, dynamic embedding, and large-scale application of gradient descent optimizers.
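Two of the ideas listed above, dynamic embedding and sparse communication, can be illustrated with a small sketch. It assumes a single-process toy table and is not how PAI implements these features; the class name `DynamicEmbedding` and its methods are hypothetical. The point it shows is that rows are created only when a feature ID first appears, and an update touches (and would need to transmit) only the rows seen in the current batch.

```python
import random


class DynamicEmbedding:
    """Embedding table that creates rows on first access and updates only touched rows."""

    def __init__(self, dim, seed=0):
        self.dim = dim
        self.rows = {}
        self._rng = random.Random(seed)

    def lookup(self, feature_id):
        # A row is allocated lazily, so the table grows with the features
        # actually observed rather than with a fixed vocabulary size.
        if feature_id not in self.rows:
            self.rows[feature_id] = [
                self._rng.uniform(-0.01, 0.01) for _ in range(self.dim)
            ]
        return self.rows[feature_id]

    def apply_sparse_gradients(self, gradients, learning_rate=0.1):
        """gradients maps feature_id -> gradient vector; other rows are not visited."""
        for feature_id, grad in gradients.items():
            row = self.lookup(feature_id)
            for i, g in enumerate(grad):
                row[i] -= learning_rate * g


table = DynamicEmbedding(dim=2)
before = list(table.lookup("user:42"))
table.lookup("item:7")
table.apply_sparse_gradients({"user:42": [1.0, -1.0]})
after = table.lookup("user:42")
```

In a distributed setting, only the `gradients` dictionary for the batch would be exchanged between workers and parameter storage, which is far smaller than the full table when features are sparse.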

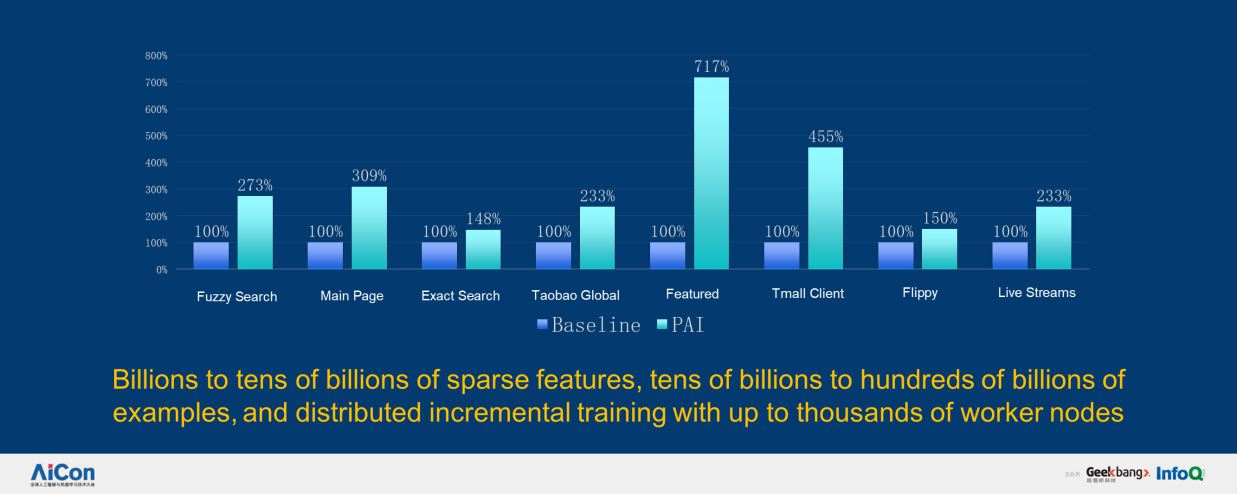

After the optimization, performance improves sevenfold. The number of sparse features grows from billions to tens of billions, and the number of samples from tens of billions to hundreds of billions. Moreover, distributed incremental training involves up to thousands of worker nodes.

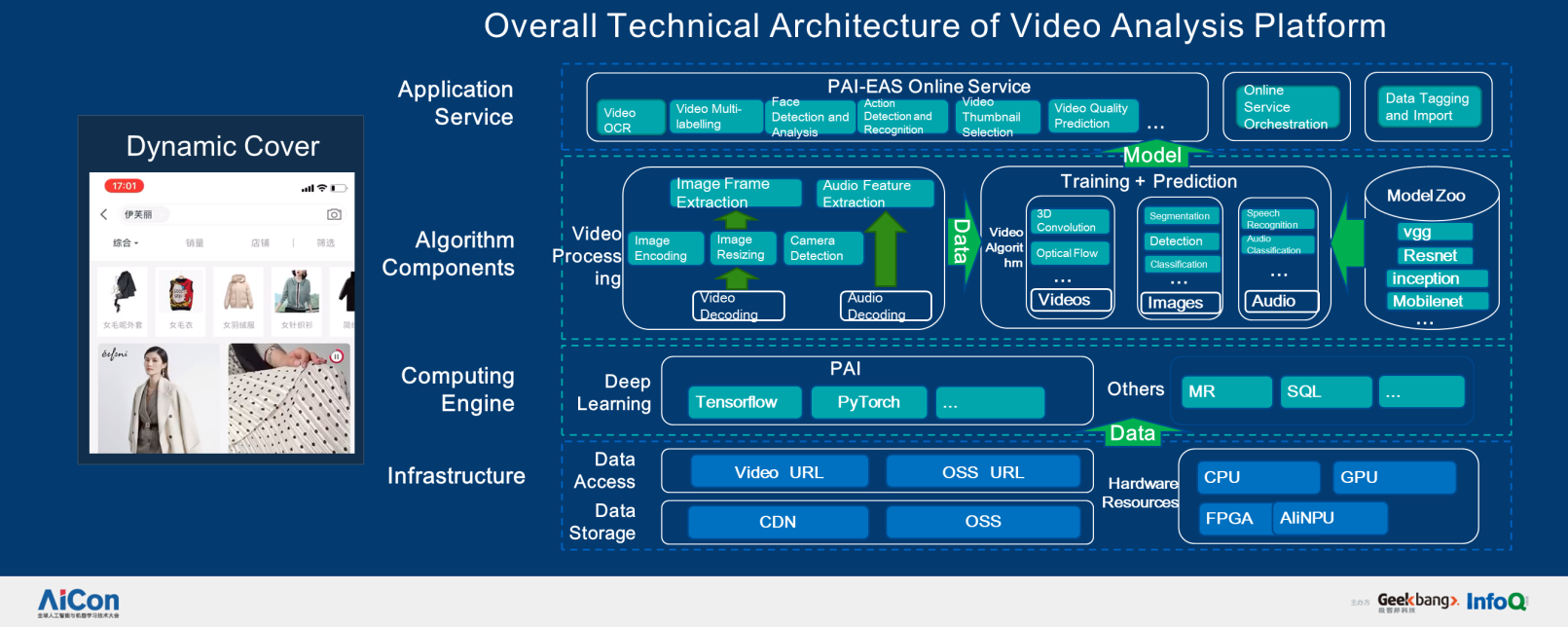

For the dynamic cover scenario, a large number of videos are analyzed. Analyzing videos is more complicated than analyzing images because videos involve more processing. For example, videos need to be preprocessed to extract video frames. However, as it is too costly to extract every frame, only the keyframes are extracted. This is done through image recognition and target detection, which are highly complex tasks. Therefore, Alibaba has developed a video platform to help engineers working on video analysis and algorithms solve these problems. The following figure shows its architecture.
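The idea of keeping only keyframes rather than every frame can be sketched as below. Note that this example swaps in a much simpler heuristic than the image recognition and target detection mentioned above: it keeps a frame only when its average pixel difference from the last kept frame exceeds a threshold. The frame representation (nested lists of grayscale values), the threshold, and the function names are assumptions for illustration.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average absolute pixel difference between two equally sized grayscale frames."""
    total = sum(
        abs(a - b)
        for row_a, row_b in zip(frame_a, frame_b)
        for a, b in zip(row_a, row_b)
    )
    pixels = len(frame_a) * len(frame_a[0])
    return total / pixels


def select_keyframes(frames, threshold=30.0):
    """Return indices of frames that differ enough from the last selected keyframe."""
    if not frames:
        return []
    keyframes = [0]  # The first frame is always kept.
    for index in range(1, len(frames)):
        if mean_abs_diff(frames[keyframes[-1]], frames[index]) > threshold:
            keyframes.append(index)
    return keyframes


frames = [
    [[10, 10], [10, 10]],
    [[12, 11], [10, 9]],
    [[200, 200], [200, 200]],
    [[198, 201], [200, 200]],
]
keyframe_indices = select_keyframes(frames)
```

Here only the first frame and the frame after the scene change are kept, so downstream models process two frames instead of four.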

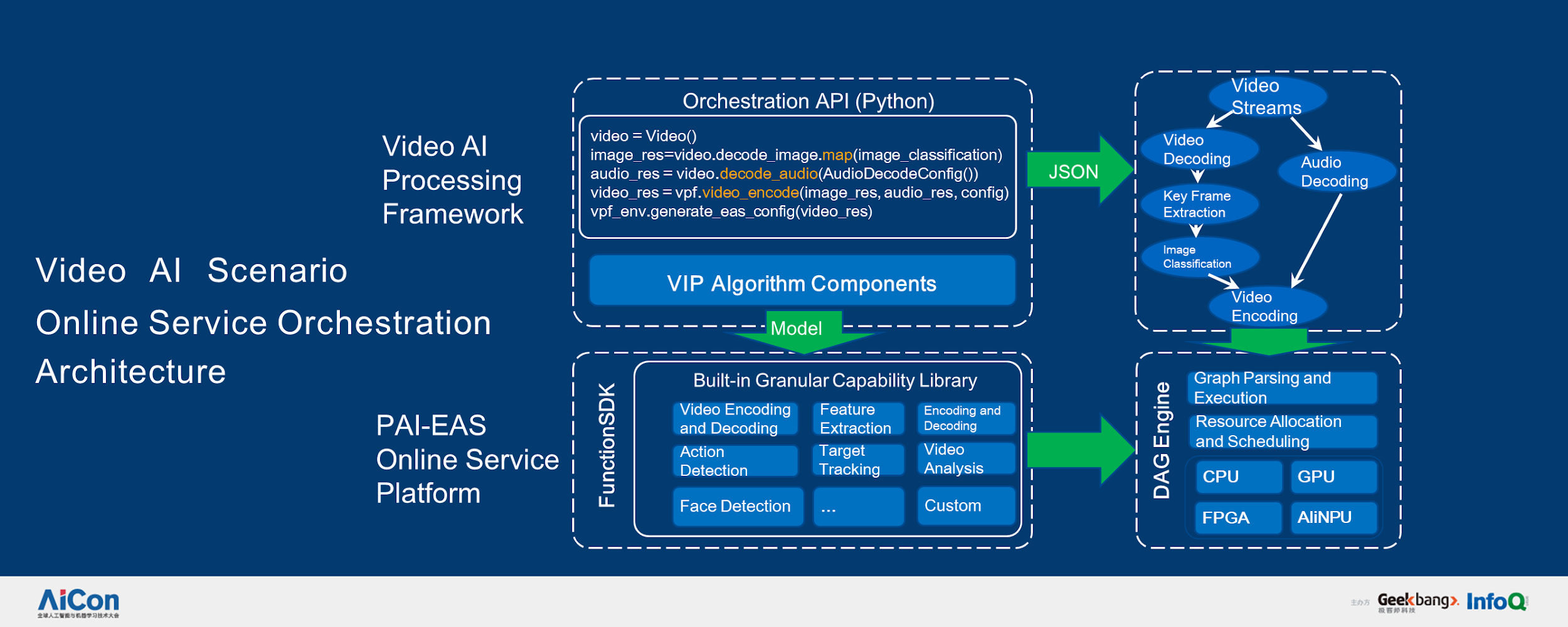

In the case of videos, online services are also complicated, including segmentation and merging. Videos are segmented, understood, and extracted, and finally merged. With the video PAI-EAS online service platform, algorithm engineers only need to write simple Python code to invoke the corresponding services through APIs, allowing them to focus more on innovation.
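The segment, process, and merge flow described above can be sketched in a few lines of Python. This is only an illustration of the pattern and does not call PAI-EAS: `analyze_segment` is a hypothetical stand-in for a model service call, and each frame is represented by a single made-up score.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_segments(frames, segment_length):
    """Cut the frame sequence into consecutive fixed-length segments."""
    return [frames[i:i + segment_length] for i in range(0, len(frames), segment_length)]


def analyze_segment(segment):
    """Placeholder for a model call; here it returns the highest frame score."""
    return max(segment)


def analyze_video(frames, segment_length=3, max_workers=4):
    """Segment the video, analyze segments in parallel, and merge the results."""
    segments = split_into_segments(frames, segment_length)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map preserves segment order, so results can be merged positionally.
        per_segment = list(pool.map(analyze_segment, segments))
    return {
        "segments": len(segments),
        "best_score": max(per_segment),
        "per_segment": per_segment,
    }


summary = analyze_video([0.1, 0.4, 0.3, 0.9, 0.2, 0.5, 0.7])
```

Because segments are independent, they can be processed concurrently, and the merge step only has to combine small per-segment results.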

In addition to the preceding scenarios, the most important task of the platform is to support the massive innovations made by algorithm engineers. Five years ago, Alibaba's algorithm models were rare and precious because there were very few algorithm engineers. With the evolution of deep learning, more and more algorithm engineers are building models. To better support their needs, Alibaba automates AI to allow algorithm modeling engineers to focus on business modeling, whereas the infrastructure PAI is responsible for the efficient and high-performance execution of the business models.

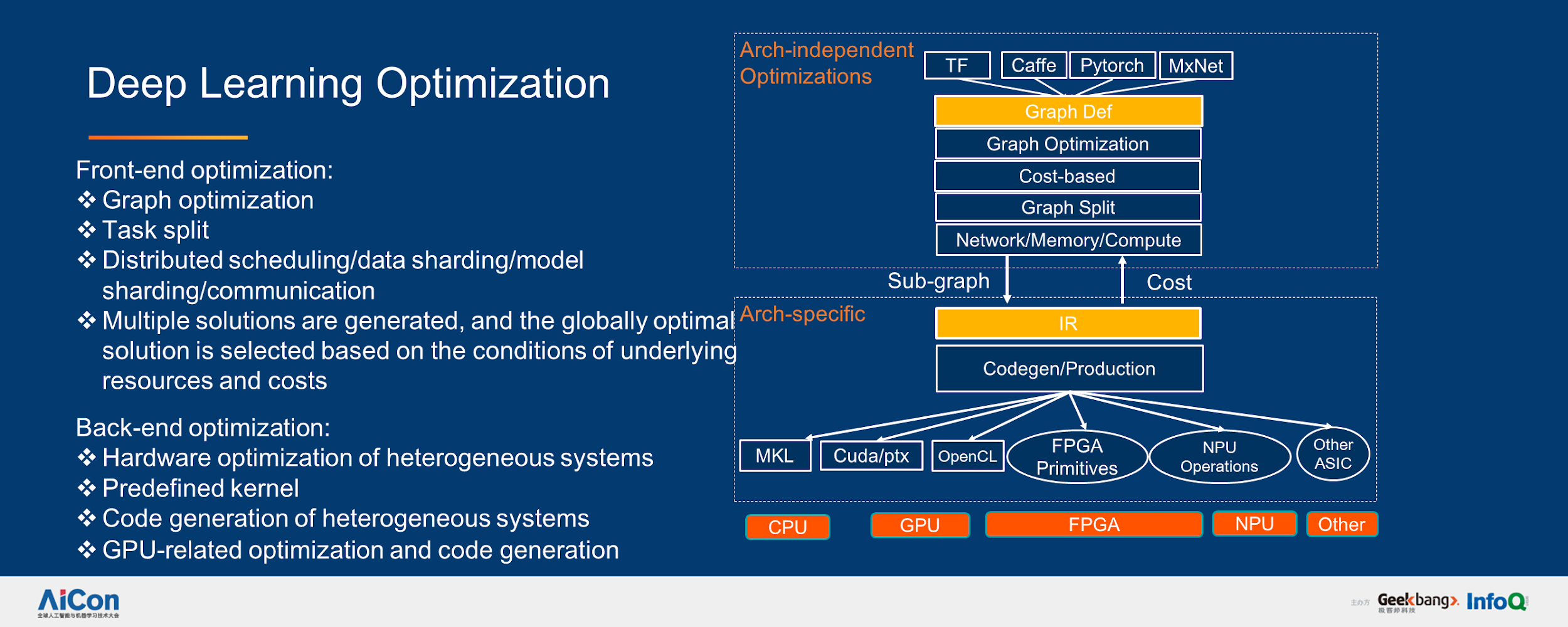

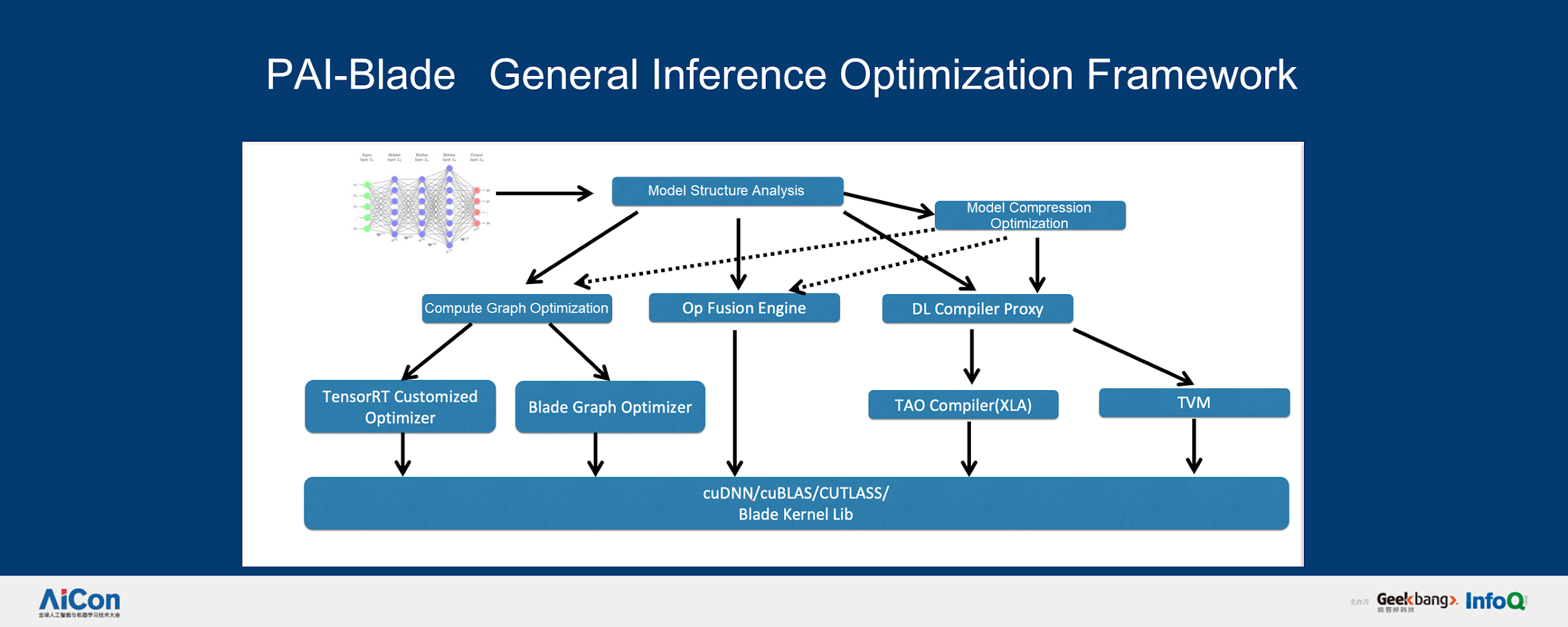

In terms of deep learning, Alibaba makes front-end and back-end optimizations. "We want to utilize the compilation technology and system technology services to implement graph optimization, task split, distributed scheduling, data sharding, and model sharding, and select the best solution to implement through the system model," said Lin. This is the philosophy behind PAI. The general inference optimization framework of PAI-Blade consists of the following parts:

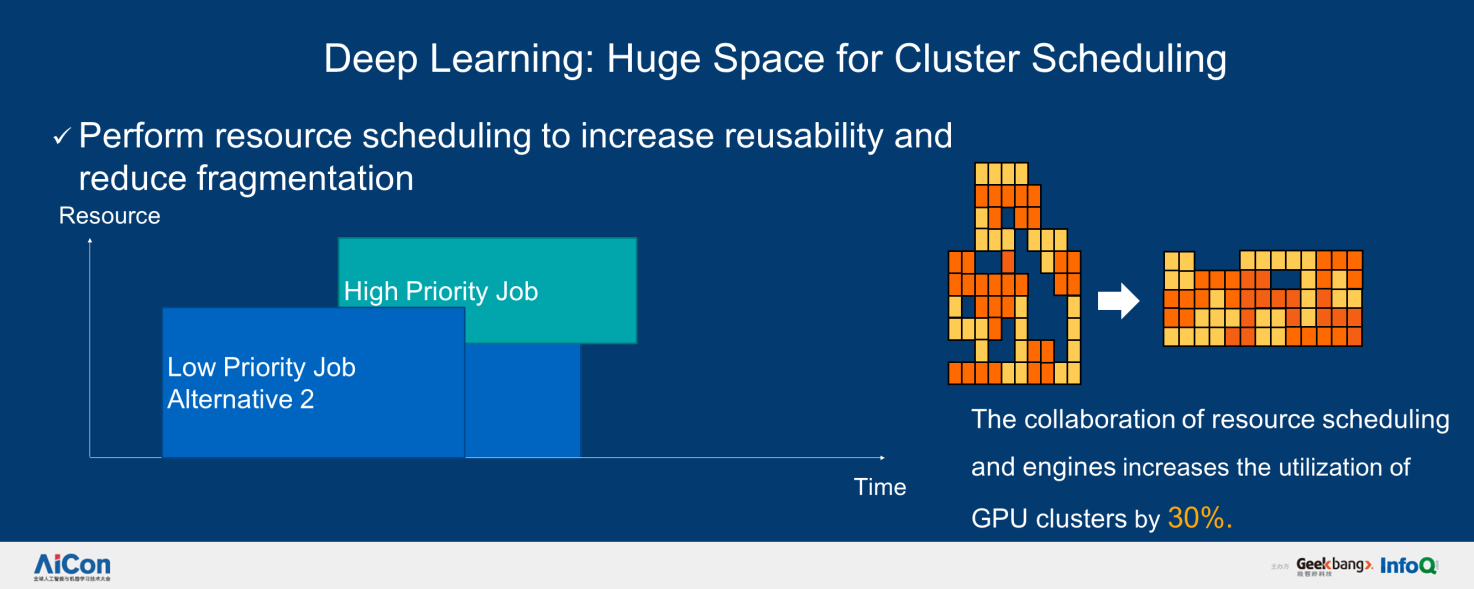

Lin stated, "With these systematic enhancements, we have made some optimizations. We have a very large cluster in which reuse is conveniently implemented. The utilization of GPU clusters increases by 30% due to the coordination of resource scheduling and engines."

Additionally, many of Alibaba's AI services run on the online service framework, PAI-EAS. This cloud-native framework supports the massive AI requests of Double 11 by using the scale and scalability of the cloud platform. During Double 11, Alibaba deals not only with massive transaction data but also with massive AI requests from AI-based customer service agents and Cainiao Logistics' voice assistant. The capabilities of the cloud platform make it possible to provide a better user experience.

To summarize, these technologies back all Alibaba business units by supporting distributed training of a single task across tens of thousands of machines, as well as thousands of AI-based services, with average daily invocation reaching 100,000. Finally, the growth of Alibaba's Double 11 is closely related to the evolution of AI technology and the explosion of data.

Guest Speaker Bio:

Lin Wei is a researcher at the Alibaba Cloud Platform for Artificial Intelligence division. He has 15 years of experience in big data and ultra-large distributed systems. He is responsible for the overall design and architecture of Alibaba's MaxCompute and Machine Learning Platform for AI (PAI) and helps to advance the evolution of MaxCompute2.0, PAI2.0, and PAI3.0. Before joining Alibaba, he was a core member of Microsoft Cosmos and Scope at Microsoft Research, focusing on distributed system research. He worked on the distributed NoSQL storage system PacificA, the distributed large-scale batch processing system Scope, the scheduling system Apollo, the stream computing engine StreamScope, and ScopeML distributed machine learning. He has presented over ten papers at top conferences in the field of systems, including OSDI, NSDI, SOSP, and SIGMOD.
