Application-level scaling is defined in contrast to O&M-level scaling. CPU and memory utilization are application-independent O&M metrics, and an HPA configured to scale on such metrics performs O&M-level scaling. Metrics such as total requests, request latency, and P99 latency distribution are application-related; they are also called business-aware monitoring metrics.
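To make the business-aware metrics concrete, the following Python sketch computes the three kinds used in this article from raw samples, roughly the way the PromQL queries later in the article do: a per-second rate from two counter readings, an average latency as the ratio of the duration-sum rate to the request-count rate, and a latency quantile estimated from cumulative histogram buckets by linear interpolation, similar to Prometheus's `histogram_quantile`. The function names and sample numbers are hypothetical, and Prometheus's real `rate()` also handles counter resets and extrapolates to the window edges, which this sketch omits.

```python
import math


def per_second_rate(first_value: float, last_value: float, window_seconds: float) -> float:
    """Simplified rate(): counter increase over the window, per second.

    Prometheus's rate() also handles counter resets and extrapolates to the
    window boundaries; this sketch does neither.
    """
    return (last_value - first_value) / window_seconds


def average_latency(duration_sum_rate: float, request_count_rate: float) -> float:
    """Average latency: rate of the duration sum divided by rate of the count."""
    if request_count_rate == 0:
        return math.nan  # no requests in the window
    return duration_sum_rate / request_count_rate


def histogram_quantile(q: float, buckets: list[tuple[float, float]]) -> float:
    """Estimate the q-quantile (0 < q <= 1) from cumulative buckets.

    `buckets` holds (upper_bound, cumulative_count) pairs sorted by upper
    bound, ending with a +Inf bucket, like Prometheus `le` buckets.
    """
    total = buckets[-1][1]  # the +Inf bucket counts every observation
    if total == 0:
        return math.nan
    rank = q * total
    bucket_start, count_below = 0.0, 0.0
    for upper_bound, cumulative in buckets[:-1]:  # finite buckets only
        if cumulative >= rank:
            # Linear interpolation inside the bucket that contains the rank.
            in_bucket = cumulative - count_below
            return bucket_start + (upper_bound - bucket_start) * (rank - count_below) / in_bucket
        bucket_start, count_below = upper_bound, cumulative
    # The rank lies in the +Inf bucket: fall back to the largest finite bound.
    return buckets[-2][0]


# Hypothetical samples: latency buckets in milliseconds.
latency_buckets = [(1.0, 20.0), (5.0, 90.0), (10.0, 98.0), (math.inf, 100.0)]
print(per_second_rate(1200, 2400, 60))            # 20.0 requests per second
print(average_latency(60.0, 20.0))                # 3.0 ms
print(histogram_quantile(0.95, latency_buckets))  # 8.125 ms
```

Passing `q=0.99` instead of `0.95` gives the P99 variant mentioned above; only the rank inside the cumulative counts changes.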

This article describes how to configure HPA with three application-level monitoring metrics to implement application-level auto scaling.
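All three HPAs in this article use an `External` metric with an `AverageValue` target. As far as the upstream Kubernetes replica calculator goes, for this target type the value returned by the metrics adapter is treated as a total: the controller compares it with `targetAverageValue × currentReplicas`, keeps the current replica count when that ratio is within the default 10% tolerance, and otherwise proposes `ceil(total / targetAverageValue)`, clamped to `minReplicas` and `maxReplicas`. The Python sketch below models only this arithmetic, in floats rather than the controller's integer milli-units; stabilization windows and scaling policies are not modeled, and the function names are hypothetical.

```python
import math


def desired_replicas(metric_total: float, target_average: float,
                     current_replicas: int, min_replicas: int,
                     max_replicas: int, tolerance: float = 0.1) -> int:
    """Model the replica count an HPA proposes for an External metric with an
    AverageValue target. Assumes current_replicas >= 1; stabilization windows
    and scaling policies are not modeled."""
    usage_ratio = metric_total / (target_average * current_replicas)
    if abs(1.0 - usage_ratio) <= tolerance:
        proposed = current_replicas  # close enough to the target: no change
    else:
        proposed = math.ceil(metric_total / target_average)
    # The result is always clamped to [minReplicas, maxReplicas].
    return max(min_replicas, min(max_replicas, proposed))


def displayed_average(metric_total: float, current_replicas: int) -> float:
    """Per-replica value that `kubectl get hpa` reports as '<current>/<target> (avg)'."""
    return metric_total / current_replicas


# podinfo-total: target averageValue "10", minReplicas 1, maxReplicas 5.
print(desired_replicas(19.8, 10, current_replicas=1, min_replicas=1, max_replicas=5))    # 2
print(desired_replicas(20.112, 10, current_replicas=2, min_replicas=1, max_replicas=5))  # 2
print(desired_replicas(100, 10, current_replicas=2, min_replicas=1, max_replicas=5))     # 5
```

The first two calls mirror the load test later in this article: hey sends roughly 20 QPS, so the total is about twice the target of 10 and the Deployment settles at 2 replicas. By the same arithmetic, the latency HPA's `averageValue` of "0.005" (its query returns milliseconds) would propose `ceil(latency / 0.005)` replicas, which reaches `maxReplicas` for any average latency above 0.02 ms, so under this model that HPA stays at 5 replicas while traffic flows.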

Kube-metrics-adapter

Run the following command to deploy kube-metrics-adapter (for the complete script, see demo_hpa.sh):

```shell
helm --kubeconfig "$USER_CONFIG" -n kube-system install asm-custom-metrics \
  $KUBE_METRICS_ADAPTER_SRC/deploy/charts/kube-metrics-adapter \
  --set prometheus.url=http://prometheus.istio-system.svc:9090
```

Run the following commands to verify the deployment:

```shell
# Verify the pod
kubectl --kubeconfig "$USER_CONFIG" get po -n kube-system | grep metrics-adapter
asm-custom-metrics-kube-metrics-adapter-6fb4949988-ht8pv 1/1 Running 0 30s

# Verify the autoscaling API versions
kubectl --kubeconfig "$USER_CONFIG" api-versions | grep "autoscaling/v2beta"
autoscaling/v2beta1
autoscaling/v2beta2

# Verify the external metrics API
kubectl --kubeconfig "$USER_CONFIG" get --raw "/apis/external.metrics.k8s.io/v1beta1" | jq .
{
  "kind": "APIResourceList",
  "apiVersion": "v1",
  "groupVersion": "external.metrics.k8s.io/v1beta1",
  "resources": []
}
```

Loadtester

Run the following commands to deploy the Flagger loadtester:

```shell
kubectl --kubeconfig "$USER_CONFIG" apply -f $FLAAGER_SRC/kustomize/tester/deployment.yaml -n test
kubectl --kubeconfig "$USER_CONFIG" apply -f $FLAAGER_SRC/kustomize/tester/service.yaml -n test
```

First, create a HorizontalPodAutoscaler configuration that scales on the application's request volume (the per-second rate of istio_requests_total):

apiVersion: autoscaling/v2beta2

kind: HorizontalPodAutoscaler

metadata:

name: podinfo-total

namespace: test

annotations:

metric-config.external.prometheus-query.prometheus/processed-requests-per-second: |

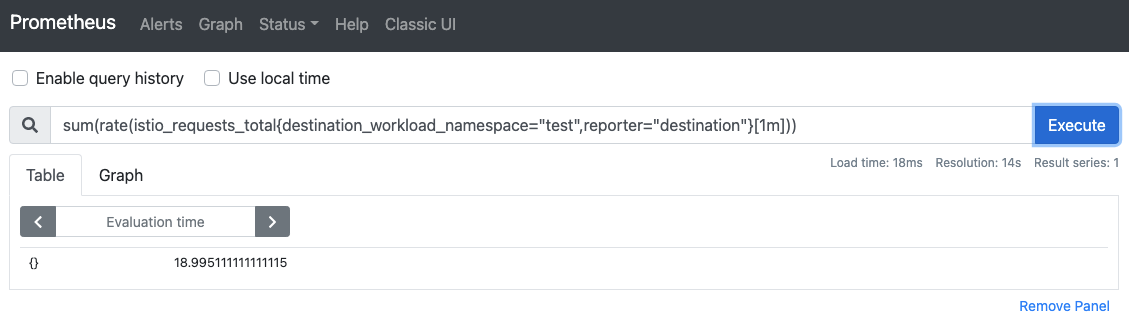

sum(rate(istio_requests_total{destination_workload_namespace="test",reporter="destination"}[1m]))

spec:

maxReplicas: 5

minReplicas: 1

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: podinfo

metrics:

- type: External

external:

metric:

name: prometheus-query

selector:

matchLabels:

query-name: processed-requests-per-second

target:

type: AverageValue

averageValue: "10"Run the following command to deploy the HPA configuration:

```shell
kubectl --kubeconfig "$USER_CONFIG" apply -f resources_hpa/requests_total_hpa.yaml
```

Run the following command to verify it:

```shell
kubectl --kubeconfig "$USER_CONFIG" get --raw "/apis/external.metrics.k8s.io/v1beta1" | jq .
```

The output is as follows:

```json
{
  "kind": "APIResourceList",
  "apiVersion": "v1",
  "groupVersion": "external.metrics.k8s.io/v1beta1",
  "resources": [
    {
      "name": "prometheus-query",
      "singularName": "",
      "namespaced": true,
      "kind": "ExternalMetricValueList",
      "verbs": [
        "get"
      ]
    }
  ]
}
```

Similarly, HPA can be configured with application-level metrics from other dimensions. The following examples scale on the average request latency and on the P95 latency:

```yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: podinfo-latency-avg
  namespace: test
  annotations:
    metric-config.external.prometheus-query.prometheus/latency-average: |
      sum(rate(istio_request_duration_milliseconds_sum{destination_workload_namespace="test",reporter="destination"}[1m]))
      /sum(rate(istio_request_duration_milliseconds_count{destination_workload_namespace="test",reporter="destination"}[1m]))
spec:
  maxReplicas: 5
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  metrics:
    - type: External
      external:
        metric:
          name: prometheus-query
          selector:
            matchLabels:
              query-name: latency-average
        target:
          type: AverageValue
          averageValue: "0.005"
```

```yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: podinfo-p95
  namespace: test
  annotations:
    metric-config.external.prometheus-query.prometheus/p95-latency: |
      histogram_quantile(0.95,sum(irate(istio_request_duration_milliseconds_bucket{destination_workload_namespace="test",destination_canonical_service="podinfo"}[5m]))by (le))
spec:
  maxReplicas: 5
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  metrics:
    - type: External
      external:
        metric:
          name: prometheus-query
          selector:
            matchLabels:
              query-name: p95-latency
        target:
          type: AverageValue
          averageValue: "4"
```

Run the following commands to generate test traffic and verify that the HPA-based auto scaling takes effect:

```shell
alias k="kubectl --kubeconfig $USER_CONFIG"
loadtester=$(k -n test get pod -l "app=flagger-loadtester" -o jsonpath='{.items..metadata.name}')
k -n test exec -it ${loadtester} -c loadtester -- hey -z 5m -c 2 -q 10 http://podinfo:9898
```

This runs hey for five minutes with 2 concurrent workers, each rate-limited to 10 QPS, so podinfo receives up to about 20 QPS in total. The hey usage is as follows:

```
Usage: hey [options...] <url>

Options:
  -n  Number of requests to run. Default is 200.
  -c  Number of workers to run concurrently. Total number of requests cannot
      be smaller than the concurrency level. Default is 50.
  -q  Rate limit, in queries per second (QPS) per worker. Default is no rate limit.
  -z  Duration of application to send requests. When duration is reached,
      application stops and exits. If duration is specified, n is ignored.
      Examples: -z 10s -z 3m.
  -o  Output type. If none provided, a summary is printed.
      "csv" is the only supported alternative. Dumps the response
      metrics in comma-separated values format.

  -m  HTTP method, one of GET, POST, PUT, DELETE, HEAD, OPTIONS.
  -H  Custom HTTP header. You can specify as many as needed by repeating the flag.
      For example, -H "Accept: text/html" -H "Content-Type: application/xml" .
  -t  Timeout for each request in seconds. Default is 20, use 0 for infinite.
  -A  HTTP Accept header.
  -d  HTTP request body.
  -D  HTTP request body from file. For example, /home/user/file.txt or ./file.txt.
  -T  Content-type, defaults to "text/html".
  -a  Basic authentication, username:password.
  -x  HTTP Proxy address as host:port.
  -h2 Enable HTTP/2.

  -host HTTP Host header.

  -disable-compression  Disable compression.
  -disable-keepalive    Disable keep-alive, prevents re-use of TCP
                        connections between different HTTP requests.
  -disable-redirects    Disable following of HTTP redirects
  -cpus                 Number of used cpu cores.
                        (default for current machine is 4 cores)
```

Run the following command to check the scaling:

```shell
watch kubectl --kubeconfig $USER_CONFIG -n test get hpa/podinfo-total
```

The output is as follows:

```
Every 2.0s: kubectl --kubeconfig /Users/han/shop_config/ack_zjk -n test get hpa/podinfo East6C16G: Tue Jan 26 18:01:30 2021

NAME      REFERENCE            TARGETS           MINPODS   MAXPODS   REPLICAS   AGE
podinfo   Deployment/podinfo   10056m/10 (avg)   1         5         2          4m45s
```

Here, 10056m is Kubernetes quantity notation for 10.056: the metric value divided by the current 2 replicas, against the target of 10.

The other two HPAs can be checked in the same way:

```shell
kubectl --kubeconfig $USER_CONFIG -n test get hpa
watch kubectl --kubeconfig $USER_CONFIG -n test get hpa/podinfo-latency-avg
watch kubectl --kubeconfig $USER_CONFIG -n test get hpa/podinfo-p95
```

Meanwhile, the corresponding application-level metric data can be viewed in Prometheus in real time, as shown below:
