At the Apsara User Group: CDN and Edge Computing Session of the Computing Conference 2018, held in Hangzhou on the afternoon of September 19, Wang Guangfang, a senior technical expert on Alibaba Cloud's Edge Computing team, shared Alibaba Cloud's understanding of edge computing. He covered the definition, scenario requirements, and challenges of edge computing, as well as the value, capabilities, typical application scenarios, and examples of Alibaba Cloud's Edge Node Service (ENS).

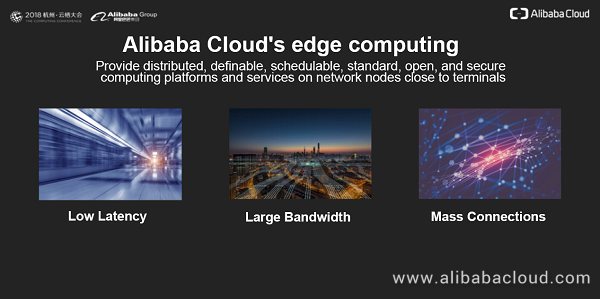

Edge computing is a concept that appeared several years ago. The industry defines it in many ways, but a commonly accepted definition is the provision of services at the network edge, close to terminals. This has two parts: the network location must be close enough to terminals, and that location must offer comprehensive service capabilities that meet edge requirements.

As the Internet develops, business scenarios are becoming more complex and innovative. Ever-growing demand and the pursuit of the best possible user experience create a need to optimize both architecture and cost. The earlier, simple center-terminal architecture cannot cope with the pressure on network performance and business traffic, so a multi-level center-edge-terminal architecture is needed.

In addition, with the development of new technologies such as IoT and 4G/5G, more and more smart devices are connecting to the Internet. According to statistics from the research firm IDC (International Data Corporation), over 50 billion terminals and devices will be connected to the Internet by 2020. The data these devices generate is growing exponentially. If all of this data and device access traffic were routed directly to the cloud center, the cloud center would be heavily burdened, causing problems such as network congestion. Edge computing supports a large-scale, distributed architecture in which a share of the data within a given scope is processed on the edge, and only a small amount of necessary data and access traffic is routed to the cloud center.

Against this background, edge computing offers three main benefits.

The first is low network latency. A shorter physical distance means a shorter propagation delay. More importantly, it reduces the latency added by routing, forwarding, and device processing along a complex network path, which greatly reduces overall latency.

The second is support for high-bandwidth scenarios. By processing high-traffic business on the edge, edge computing can effectively avoid network congestion and similar problems, and greatly reduce costs.

The third is handling highly concurrent access. Edge computing uses a distributed architecture to reduce the load on the cloud center.

"From these aspects, it can be seen that edge computing is complementary to the cloud center's capabilities. Its role is not to replace the cloud center, but to extend the boundaries of the cloud and, together with the cloud center, enable a new cloud-edge-terminal business architecture. The synergy between the cloud and the edge is the basic form of the Internet of Beings (IoB) era," said Wang.
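The latency benefit described above can be put in rough numbers. The following is a minimal sketch, not a measurement: it assumes signals travel through fiber at about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum) and adds a fixed, hypothetical processing cost per network hop. The distances, hop counts, and per-hop cost are illustrative assumptions, not figures from the talk.

```python
# Rough one-way latency estimate. All inputs below are illustrative assumptions.
SPEED_IN_FIBER_KM_PER_S = 200_000  # approx. two-thirds of the speed of light in vacuum


def propagation_delay_ms(distance_km: float) -> float:
    """One-way propagation delay over fiber, in milliseconds."""
    return distance_km / SPEED_IN_FIBER_KM_PER_S * 1000


def path_latency_ms(distance_km: float, hops: int, per_hop_ms: float) -> float:
    """Propagation delay plus an assumed fixed processing cost for each hop."""
    return propagation_delay_ms(distance_km) + hops * per_hop_ms


if __name__ == "__main__":
    # Hypothetical paths: a distant cloud center versus a nearby edge node.
    center = path_latency_ms(distance_km=1000, hops=15, per_hop_ms=0.5)
    edge = path_latency_ms(distance_km=50, hops=3, per_hop_ms=0.5)
    print(f"center: {center:.2f} ms, edge: {edge:.2f} ms")
```

Under these assumptions, the per-hop processing term outweighs the propagation term, which matches the point that fewer routing and forwarding steps matter more than distance alone.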

Wang held that edge computing is definitely not a matter of copying the cloud's capabilities directly to the edge, because the operating environment of the edge is completely different from that of the cloud center. Edge nodes are small in scale, limited in resources, and heterogeneous. They are also scattered at the far end of the network, so any distributed business architecture built on them inevitably involves scheduling.

For these reasons, edge computing should provide a platform that hides the complex underlying infrastructure on the edge. The platform should standardize and open up the underlying computing, storage, and network capabilities, along with the distribution, scheduling, security, and other capabilities that a distributed architecture requires. In this way, users can easily build their own distributed edge business architecture.

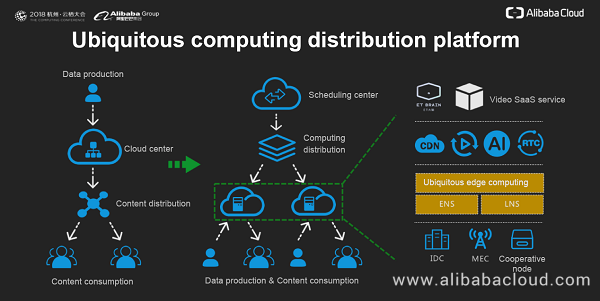

Currently, Alibaba Cloud has developed several cloud products in the field of edge computing. What we are discussing here is Alibaba Cloud's planning of general service capabilities at the edge infrastructure level, that is, the edge computing distribution platform shown in this figure.

Among current edge scenarios, the most mature application is the content delivery network (CDN). A CDN caches content on edge nodes in advance so that content can be consumed with low latency and at low cost. Taking the CDN architecture as a reference, Wang's Edge Computing team hopes to build edge computing into a computing distribution platform. The platform checks a customer's resource requirements and selects appropriate edge node resources through resource scheduling at the center. It then packages the business logic and distributes it to the edge nodes by using its computing distribution capability, so that the customer's business can run easily on the edge.
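The two steps described above, selecting edge nodes that satisfy a customer's requirements and then distributing the packaged business logic to them, can be sketched as follows. This is a minimal illustration only: the `EdgeNode` and `Requirement` types, their fields, and the "most free CPU first" ordering are hypothetical and do not represent the ENS scheduler or its API.

```python
from dataclasses import dataclass


@dataclass
class EdgeNode:
    # Hypothetical description of an edge node's location and spare capacity.
    name: str
    region: str
    carrier: str
    free_cpu: int      # free vCPU cores
    free_mem_gb: int   # free memory in GB


@dataclass
class Requirement:
    # Hypothetical customer request: where to run and how much to reserve per node.
    region: str
    carrier: str
    cpu: int
    mem_gb: int


def select_nodes(nodes, req, count=1):
    """Return up to `count` nodes that satisfy `req`, most free CPU first."""
    candidates = [
        n for n in nodes
        if n.region == req.region and n.carrier == req.carrier
        and n.free_cpu >= req.cpu and n.free_mem_gb >= req.mem_gb
    ]
    candidates.sort(key=lambda n: n.free_cpu, reverse=True)
    return candidates[:count]


def distribute(image, selected, req):
    """Reserve resources on each selected node and record where the image goes."""
    placements = []
    for node in selected:
        node.free_cpu -= req.cpu
        node.free_mem_gb -= req.mem_gb
        placements.append((node.name, image))
    return placements


if __name__ == "__main__":
    nodes = [
        EdgeNode("hz-1", "hangzhou", "carrier-a", free_cpu=8, free_mem_gb=32),
        EdgeNode("hz-2", "hangzhou", "carrier-a", free_cpu=16, free_mem_gb=64),
        EdgeNode("bj-1", "beijing", "carrier-b", free_cpu=32, free_mem_gb=128),
    ]
    req = Requirement("hangzhou", "carrier-a", cpu=4, mem_gb=8)
    print(distribute("live-relay:1.0", select_nodes(nodes, req, count=2), req))
```

A real scheduler would also weigh factors such as bandwidth, cost, and node health; the sketch only shows the shape of the filter-then-place flow.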

After the appropriate business logic is transferred to the edge nodes for execution, data production and consumption can form a closed loop within the edge without having to go back to the center for processing. The right side of the figure above shows a detailed edge node architecture. First, the infrastructure of edge computing is built on various edge nodes, including fixed network nodes such as an edge IDC, mobile network nodes such as wireless base stations, and more heterogeneous cooperative nodes with different resource capabilities and network environments. Based on the infrastructure, software systems such as ENS and LNS are used to encapsulate the underlying complex infrastructure forms and then abstract and open standardized interfaces and service capabilities to the upper layer.

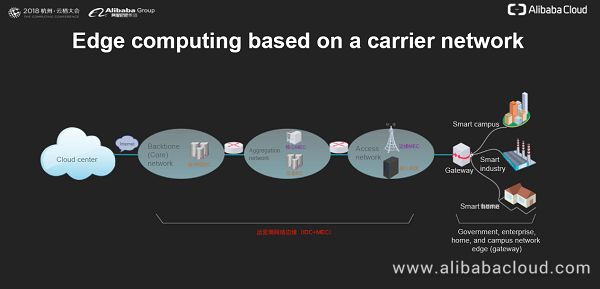

Speaking of the network scope of edge computing, Wang said: "The network edge ranges from the user-side networks of governments, enterprises, campuses, and homes, which are closest to terminals, to carrier networks at the provincial, city, district, and county levels. The network link from terminals to the center is relatively complex. Edge computing must provide broad coverage, from the last hundreds or tens of kilometers down to the last ten kilometers before terminals, to ensure that service capabilities can be delivered. ENS targets the intermediate carrier network, including the IDC environment on the fixed network and the mobile edge computing (MEC) environment on the mobile network. By delineating the edge network scope based on ENS, we can quickly identify some target scenarios, such as CDN and interactive live streaming."

Before edge computing services such as ENS appeared, customers had only one choice: to purchase nodes from various carriers in various regions and build their own edge infrastructure. Self-built infrastructure brings a series of problems and challenges.

First, self-built infrastructure requires customers to handle supply chain management, such as commercial procurement and server procurement, and node construction entirely by themselves, which leads to heavy asset investment and high costs. It also leaves customers with little flexibility: when business traffic bursts, new nodes cannot be built in time because construction has a long delivery cycle, and once a temporary peak has passed, many resources sit idle.

Self-built infrastructure also brings challenges for operations and maintenance (O&M). Customers need to manage the entire process from construction and delivery to the operation of edge nodes. They also have to handle O&M issues that may occur at the physical server level, the operating system level, and the software application level of edge nodes. Whenever a problem appears, customers need a set of tools to view logs remotely and locate the cause. In this context, customers urgently need automated, GUI-based O&M.

Another challenge lies in security and reliability. The reliability of IDC infrastructure on the edge depends on the third-party carrier's services. Customers need to deal with various complex situations, for example, by taking countermeasures in response to a carrier network cutover. Customers also need to discover and handle possible hardware and software failures on edge nodes in a timely manner. These requirements have to be built into the design and development of the business architecture, which is challenging and costly. For security, issues must be addressed at different levels, such as network traffic security and host security, and developing a security solution is expensive at each level. Take DDoS protection as an example. When an attack targets an IP address on an edge node, the whole network may become unavailable. To achieve the desired protection, customers may need to deploy a combined software and hardware solution on each edge node.

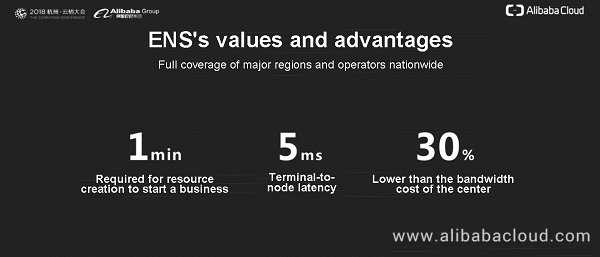

ENS is developed to address the pain points and challenges that customers may encounter in self-building edge infrastructure in the aforementioned target scenarios. ENS relies on edge nodes close to terminals and users to provide the computing distribution platform service, which enables customers to easily run appropriate business modules on the edge and establish a distributed edge architecture through cloud-edge synergy. It features low latency and low cost, and reduces the pressure on the center. The edge nodes of ENS have covered major regions and carriers in China. On the edge nodes, computing resources can be dynamically scheduled according to customers' requirements, and customers can prepare their business application software into image packages and distribute them to the edge nodes. ENS further extends Alibaba Cloud's public cloud boundaries to the edge, and fully meets customer requirements for a complex "center + edge" business architecture along with the public cloud, thereby truly offering the cloud's infrastructure capabilities to users.

By using Alibaba Cloud's ENS, customers can create edge resources in minutes, reduce the response time from a terminal to a node to 5 ms, and save over 30% of the bandwidth cost for the center.

First, ENS encapsulates the problems of self-built infrastructure in the bottom layer, so users neither see them nor need to deal with them. This greatly reduces the capital investment needed for business start-up or capacity expansion and also saves a lot of management costs. Second, with its dynamic resource scheduling capability, ENS can help customers scale resource capacity up or down in a relatively short period of time. Customers can purchase resources on demand in Pay-As-You-Go mode. This ensures that business resource requirements are met while saving costs for customers.

In terms of product experience, ENS provides a complete and easy-to-use web management console and OpenAPIs. These support remote online management of computing resources, computing distribution management, real-time visualized monitoring of operating indicators, and statistical analysis of usage data. Together they greatly simplify monitoring and O&M and make both more efficient.

The autonomous service and self-recovery capabilities of nodes are core requirements for evaluating availability. When the center loses control of an edge node due to disconnection, we need to ensure the availability and continuity of services within the node to guarantee basic service capabilities. When the edge node re-establishes a connection to the center, the intact status and data need to be sent to the center.
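The reconnect behavior described above can be sketched as a small store-and-forward agent: while the center is unreachable, the node keeps serving and queues its status reports locally, and once the connection returns it sends everything in the original order. This is a hypothetical illustration, not the ENS implementation; the `EdgeAgent` class and the `send_to_center` callable are assumed names, and the queue is kept in memory only for brevity.

```python
from collections import deque


class EdgeAgent:
    """Queues status reports while the center is unreachable and replays them later."""

    def __init__(self, send_to_center):
        # `send_to_center` is an assumed callable that raises ConnectionError when offline.
        self._send = send_to_center
        self._pending = deque()

    def report(self, status):
        """Queue a report, then try to deliver everything queued so far."""
        self._pending.append(status)
        return self.flush()

    def flush(self):
        """Send queued reports in order; stop at the first failure and keep the rest."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break
            self._pending.popleft()  # remove only after a successful send
            sent += 1
        return sent


if __name__ == "__main__":
    link = {"up": False}
    received = []

    def send(status):
        if not link["up"]:
            raise ConnectionError("center unreachable")
        received.append(status)

    agent = EdgeAgent(send)
    agent.report({"seq": 1, "load": 0.4})  # queued while disconnected
    agent.report({"seq": 2, "load": 0.7})  # queued while disconnected
    link["up"] = True
    agent.report({"seq": 3, "load": 0.5})  # reconnect: all three delivered in order
    print(received)
```

A report is removed from the queue only after it has been sent successfully, so a failure in the middle of a flush does not lose data. A production agent would also persist the queue to disk so that it survives a restart.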

In addition, dynamic resource migration and scheduling ensure high availability of computing resources, and tenants are isolated from one another to avoid resource contention. In terms of security, edge nodes support DDoS detection, traffic scrubbing, and IP blackhole protection. For a more detailed introduction to ENS and to try the product, visit our official website.

Wang elaborated on several typical application scenarios and examples of ENS.

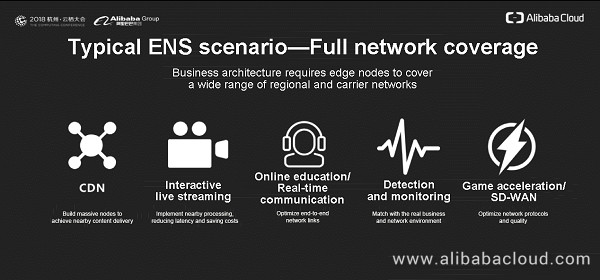

The first category of typical scenarios covers business architectures that require broad node coverage. Such scenarios mainly occur in the online business of the Internet industry. Generally, the target service scope has few regional limitations, so the edge nodes must provide sufficiently broad coverage over the entire network. The following five scenarios are representative of current ENS usage. They make full use of the advantages of ENS and bring greater value to customers' business.

The first scenario is CDN. As mentioned earlier, CDN is a typical edge computing scenario, and it can run seamlessly on ENS. Compared with their previous solutions, our online customers that use ENS have seen clear improvements in overall cost, O&M capabilities, and other aspects.

The second scenario is interactive live streaming, which requires low latency and low bandwidth cost.

The third scenario is online education and real-time audio and video communication. This scenario has two characteristics, namely, more emphasis on low latency and reliance on end-to-end communication as the basic pattern. The role of edge nodes in this scenario is to give customers access to the nearest forwarding network and provide more alternative network forwarding nodes.

The fourth scenario is detection and monitoring. Here, the goal is to reproduce the real business and network environment at the edge closest to users in order to check the correctness of business logic, business stability, and the performance indicators of core business. In particular, if business logic or user experience varies across target regions, ENS can simulate the real results at the nearest edge. In the real-time communication scenario described above, dynamic routing depends on first inspecting and evaluating the quality of existing network nodes and links, which is another practical example of detection.

The fifth scenario is game acceleration and SD-WAN. Here, the aim is essentially to set up soft gateways or soft routers on edge nodes and to achieve goals such as acceleration and security by optimizing network protocols and network links. The role of edge nodes in this scenario is similar to their role in real-time communication.
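The detection step that dynamic routing relies on, measuring the quality of candidate nodes and links and then choosing among them, can be sketched as a simple scoring function. The scoring rule below (mean round-trip time plus a penalty proportional to packet loss) and the penalty weight are illustrative assumptions, not a method described in the talk.

```python
from statistics import mean


def link_score(rtts_ms, sent, received, loss_penalty_ms=1000.0):
    """Lower is better: mean RTT plus an assumed penalty proportional to packet loss."""
    if sent == 0 or received == 0 or not rtts_ms:
        return float("inf")  # no usable measurements: treat the link as unreachable
    loss = 1 - received / sent
    return mean(rtts_ms) + loss * loss_penalty_ms


def best_relay(probes):
    """`probes` maps relay name -> (rtts_ms, sent, received); returns the best name."""
    return min(probes, key=lambda name: link_score(*probes[name]))


if __name__ == "__main__":
    # Hypothetical probe results collected from edge nodes.
    probes = {
        "relay-a": ([10.0, 12.0], 10, 10),  # slightly slower, no loss
        "relay-b": ([5.0, 5.0], 10, 8),     # faster, but 20% loss
        "relay-c": ([], 10, 0),             # unreachable
    }
    print(best_relay(probes))
```

With these sample numbers the lossless relay is preferred over the faster but lossy one, which is the kind of trade-off a link-quality probe is meant to expose.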

The second category of typical scenarios is localization, which focuses on ultra-low latency (within 1 ms to meet business requirements) and high-bandwidth business within a local range of about 10 kilometers. Such scenarios lean towards traditional industries or offline businesses with regional characteristics. For example, in ET City Brain scenarios, video surveillance is implemented on the cloud. In new retail scenarios, some stores run video AI, automatic identification and monitoring, sales, and other activities on the cloud. Some local industries are migrating their IT facilities to the cloud. Alibaba Cloud is gradually building up mature examples in these scenarios.

In the end, Wang shared his thoughts on the future development of ENS. First, with the arrival of 5G, we will see an explosion of demand for MEC. Second, Alibaba Cloud will continue to upgrade the computing distribution platform capabilities, including the global distribution capability, enhance the resource scheduling performance on massive nodes and user experience, and provide customers with more powerful and automated remote O&M capabilities to support scenario-based edge O&M. Finally, at the infrastructure level, we have seen the requirements for using edge computing in conjunction with AI. Alibaba Cloud is planning to support GPU and other capabilities to help build a distributed data analysis platform for edge scenarios. In addition, we will further enhance the network throughput and performance to support the processing of large-traffic business that accounts for a large percentage in edge scenarios.
