By Kun Wu

In the previous article, Driving Business Agility and Efficient Cloud Resource Management through Elastic Scheduling, we introduced the elastic scheduling feature of Alibaba Cloud Container Service for Kubernetes (ACK). This feature schedules pods across multiple tiers of resources in a defined priority order and scales them in according to that order. It helps customers address common challenges with elastic cloud resources. For example, it is difficult to control resource usage differently for each business, and some business pods may fail to be released during scale-in.

This article explains how elastic scheduling uses virtual nodes to meet business elasticity requirements. When implementing application elasticity, enterprises focus on two core indicators: elastic speed, which is how quickly new capacity becomes available, and elastic position, which is where that capacity is placed.

For enterprises seeking high availability and stability, agile elasticity keeps the system running stably when business traffic surges suddenly. In addition, deploying applications across multiple zones keeps services available when a single zone fails.

For enterprises processing big data tasks, fast elasticity shortens task execution time and accelerates application iteration. Moreover, deploying applications centrally in a single zone reduces network latency between them, further improving data processing efficiency.

These two indicators are essential to ensure the stable and efficient operation of enterprise business.

However, auto-scaling node pools, which take minutes to add nodes, can no longer keep up with fast-arriving traffic peaks and the growing demand for big data computing power. Furthermore, it is difficult to place new capacity in the expected position through deployment strategies alone.

To address these challenges, Alibaba Cloud provides Elastic Container Instance (ECI), which can scale out within about 10 seconds in response to sudden traffic. ACK integrates seamlessly with ECI resources through virtual node technology, so businesses can use ECI resources in their clusters flexibly and on demand and respond quickly to elasticity challenges. In addition, the elastic scheduling feature of ACK preserves the affinity configuration of services when they are scheduled to ECIs, which keeps applications running stably and efficiently.

To utilize ECIs in ACK, the virtual node component needs to be installed in the ACK cluster.

For an ACK Pro cluster, you can deploy the ack-virtual-node component on the Add-ons page. By default, this component is managed and does not utilize worker node resources.

In an ACK dedicated cluster, you can deploy the ack-virtual-node component on the Marketplace page. Once installed, a deployment named ack-virtual-node-controller is created in the kube-system namespace, and it operates on your worker nodes.

After the installation succeeds, run the `kubectl get no` command to view the nodes in the cluster. If several virtual nodes are listed, the installation is successful.

After the virtual nodes are installed, you can use the elastic scheduling feature to configure how ECIs are used. For example, the following ResourcePolicy schedules pods to ECS instances first and uses ECI resources only when ECS resources are exhausted.

```yaml
apiVersion: scheduling.alibabacloud.com/v1alpha1
kind: ResourcePolicy
metadata:
  name: test
spec:
  strategy: prefer
  units:
  - resource: ecs
  - resource: eci
```

After you configure the ResourcePolicy, all pods in the default namespace follow this scheduling rule: prefer ECS, and use ECI when ECS resources are fully utilized.

🔔 Note: With the preceding configuration, preemption is effectively disabled on ECS nodes. To keep preemption available on ECS nodes, set `preemptPolicy: BeforeNextUnit`. To limit the scope of the policy, configure `selector` as needed.
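As a minimal sketch, the policy below applies both options from the note. The `namespace: default` field and the `app: nginx` selector label are illustrative assumptions, and the exact semantics of each `preemptPolicy` value should be confirmed in the ACK ResourcePolicy documentation for your cluster version.

```yaml
apiVersion: scheduling.alibabacloud.com/v1alpha1
kind: ResourcePolicy
metadata:
  name: test
  namespace: default          # assumption: the policy lives in the workload's namespace
spec:
  # Only pods in this namespace whose labels match the selector follow the policy.
  # app: nginx is a placeholder label used for illustration.
  selector:
    app: nginx
  strategy: prefer
  # Try preemption within a unit (here, ECS) before falling back to the next unit (ECI).
  preemptPolicy: BeforeNextUnit
  units:
  - resource: ecs
  - resource: eci
```

Without a selector, the policy covers every pod in its namespace, which matches the behavior described for the first example.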

The following shows the effect:

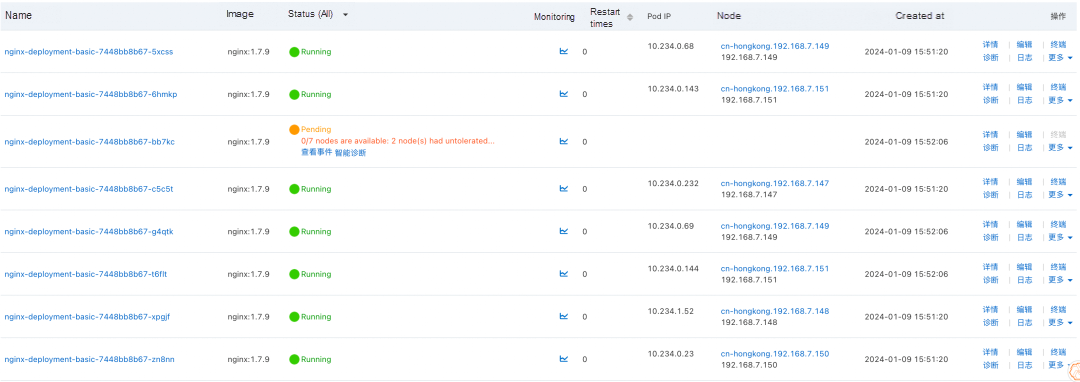

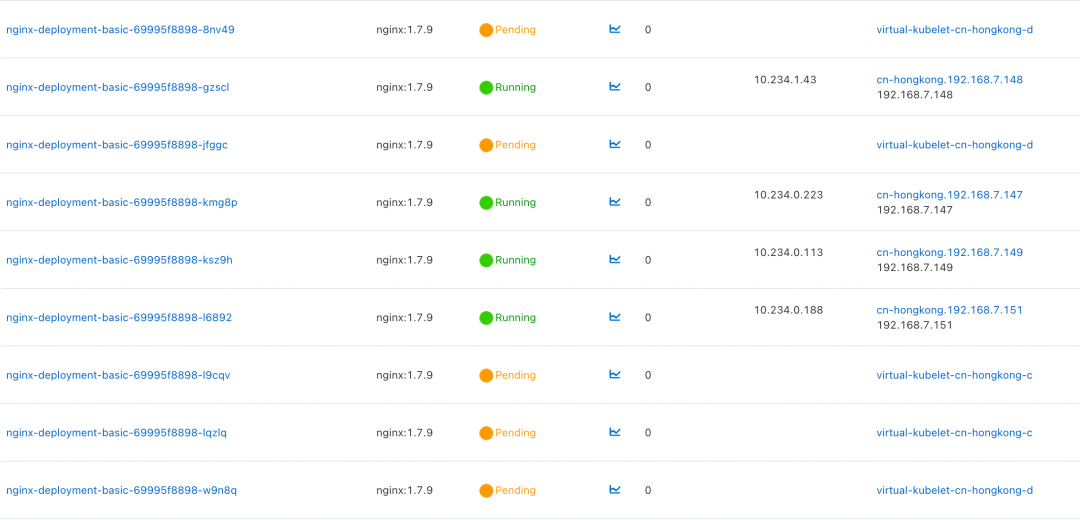

First, submit a Deployment with eight replicas before any resource policy exists. Only seven of the eight business pods can be scheduled, because the ECS resources are exhausted.

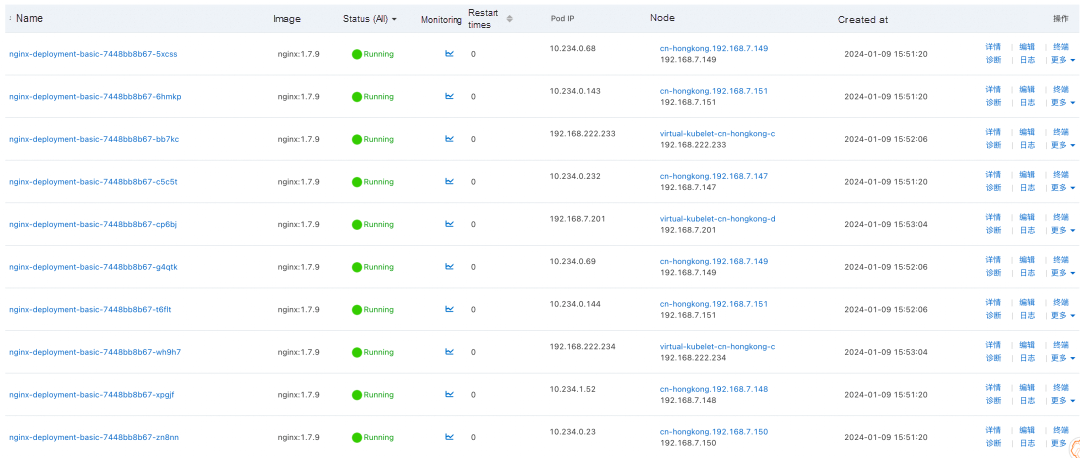

At this point, submit the resource policy and increase the number of replicas of the deployment to 10. All new replicas will run on ECIs.

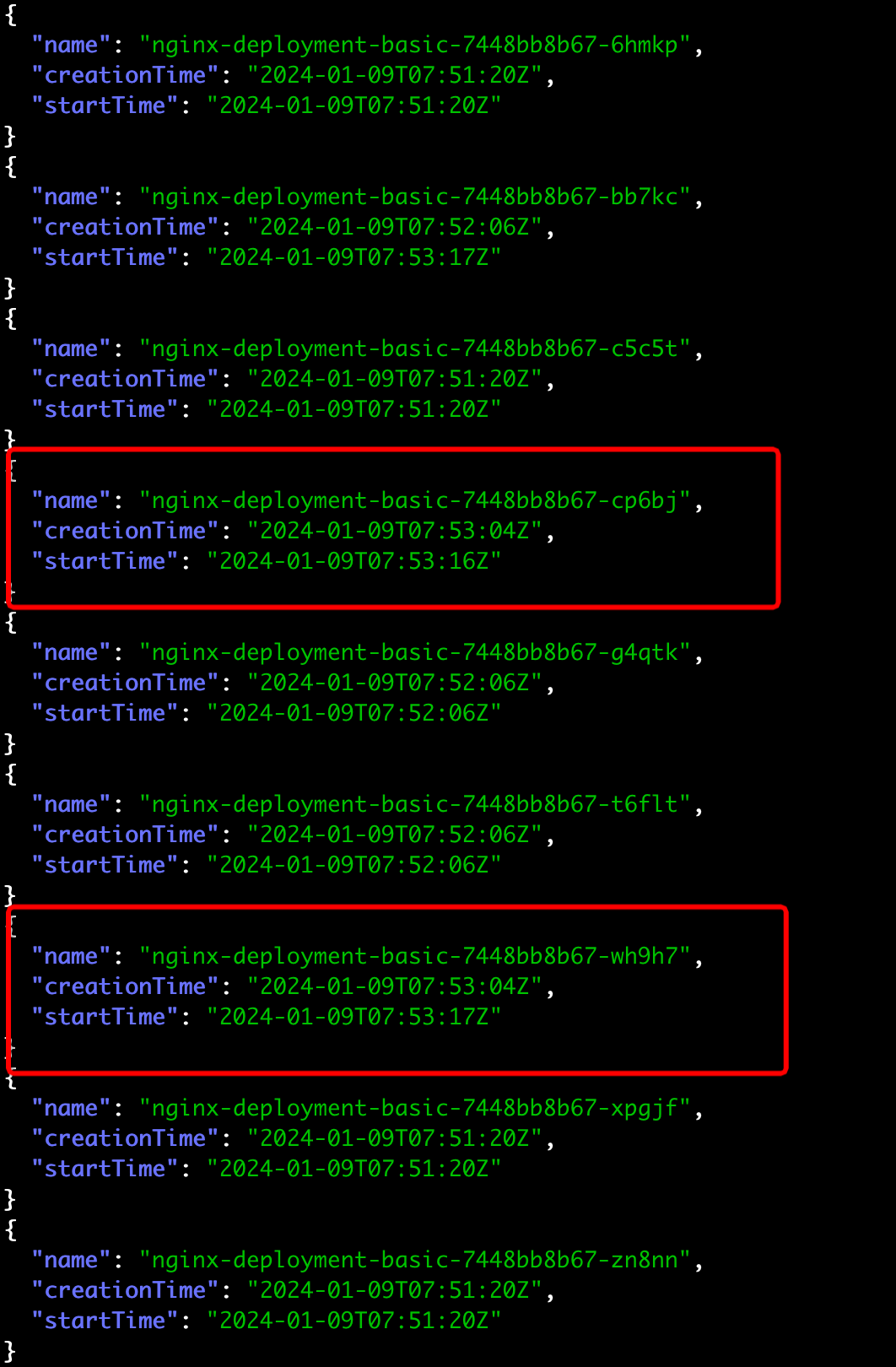

Compare the creation time and start time of the pods. As the following figure shows, the new pods take 13 seconds to create, far less than the minutes needed to scale out nodes in an auto-scaling node pool.

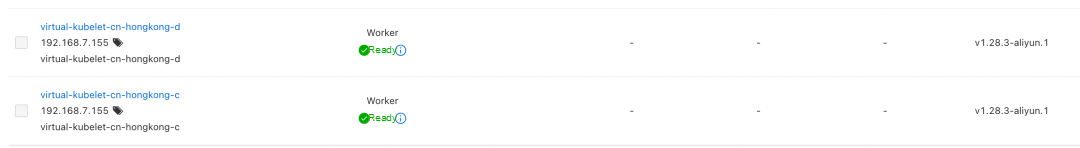

If your cluster has virtual nodes in multiple zones, ECI pods may be scheduled to multiple zones by default. As shown in the following figure, Nginx pods are scheduled to virtual nodes in Zones C and D by default.

For big data applications, configuring zone affinity often reduces the cost of network communication between computing pods, resulting in higher throughput. With Alibaba Cloud elastic scheduling, you can use node affinity and pod affinity on pods to restrict scheduling to specific zones, so that pods on ECS instances and pods on ECIs are placed in the same zone.

The following two examples show how to keep ECI pods in the same zone: the first specifies a zone explicitly, and the second does not. In both examples, a resource policy is submitted in advance.

Native Kubernetes provides node affinity scheduling semantics to control the scheduling location of pods. In the following example, we specify that the Nginx service is scheduled only in Zone C. The only modification required is to add node affinity constraints to the workload's PodTemplate or PodSpec.

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx

name: nginx-deployment-basic

spec:

replicas: 9

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: topology.kubernetes.io/zone

operator: In

values:

- cn-hongkong-c

containers:

- image: 'nginx:1.7.9'

imagePullPolicy: IfNotPresent

name: nginx

resources:

limits:

cpu: 1500m

requests:

cpu: 1500m

terminationMessagePath: /dev/termination-log

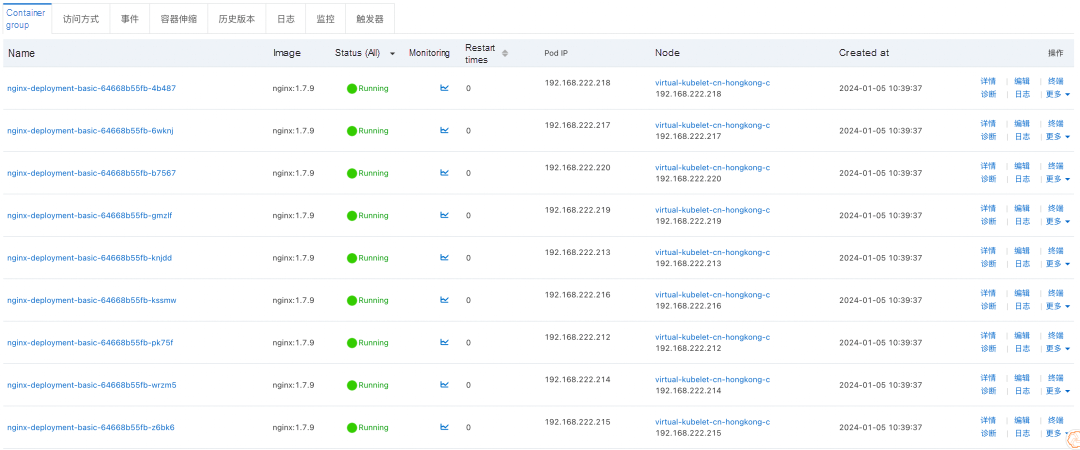

terminationMessagePolicy: FileScale out the number of services to 9. In this case, all service pods are deployed in Zone C. All ECS instances in the cluster are deployed in Zone D. Therefore, all service pods are deployed in ECIs.

Big data computing usually requires a large number of pods, so it is critical that ECIs can provide enough computing power. To let the scheduler choose a zone with sufficient remaining capacity, you can express zone affinity through pod affinity instead of naming a specific zone. During ECI scheduling, the scheduler then selects a zone with more available computing power based on the zone suggestions provided by ECI, so a better location is chosen automatically. In the following example, the resource policy restricts pods to ECIs, and pod affinity keeps them in the same zone.

🔔 Note: Pod affinity schedules subsequent pods to the same zone as the first scheduled pod. When the resource policy also includes ECS, the first pod is usually placed on an ECS instance, and a required pod affinity term would then pin all later pods to that zone. In this case, we recommend that you use preferredDuringSchedulingIgnoredDuringExecution instead.

The submitted resource policy:

```yaml
apiVersion: scheduling.alibabacloud.com/v1alpha1
kind: ResourcePolicy
metadata:
  name: test
spec:
  strategy: prefer
  units:
  - resource: eci
```

The submitted workload:

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx

name: nginx-deployment-basic

spec:

replicas: 9

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

affinity:

podAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app

operator: In

values:

- nginx

topologyKey: topology.kubernetes.io/zone

containers:

- image: 'nginx:1.7.9'

imagePullPolicy: IfNotPresent

name: nginx

resources:

limits:

cpu: 1500m

requests:

cpu: 1500m

terminationMessagePath: /dev/termination-log

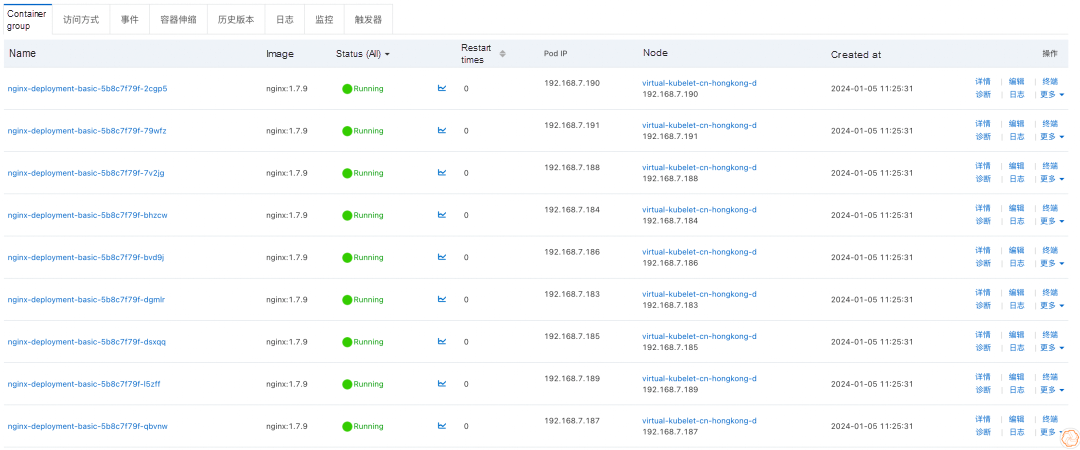

terminationMessagePolicy: FileIn this case, pods are still scheduled in the same zone, which is a better zone recommended by ECIs.
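For the ECS-first case described in the note on pod affinity, a preferred variant of the same term might look like the following sketch. It uses standard Kubernetes affinity syntax and would replace the `affinity` block under `spec.template.spec`; the weight of 100 is an arbitrary illustrative value. With a soft term, the scheduler favors the zone of existing nginx pods but can still place pods elsewhere when that zone has no capacity.

```yaml
# Fragment for spec.template.spec of the Deployment above.
affinity:
  podAffinity:
    # Soft constraint: favor, but do not require, the zone of existing app=nginx pods.
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 100   # illustrative value; Kubernetes accepts weights from 1 to 100
      podAffinityTerm:
        labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - nginx
        topologyKey: topology.kubernetes.io/zone
```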

For online services, multi-zone deployment is an effective way to ensure high service availability. Alibaba Cloud elastic scheduling lets you use topology spread constraints on pods, so that pods on ECS instances and pods on ECIs are spread according to the same constraints. This ensures the high availability of your business.

The following example shows how to configure high availability on ECIs. This example specifies that pods are evenly distributed across multiple zones and ECIs are used when ECS resources are insufficient.

apiVersion: scheduling.alibabacloud.com/v1alpha1

kind: ResourcePolicy

metadata:

name: test

spec:

strategy: prefer

units:

- resource: ecs

- resource: eci

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx

name: nginx-deployment-basic

spec:

replicas: 9

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

topologySpreadConstraints:

- labelSelector:

matchLabels:

app: nginx

maxSkew: 1

topologyKey: topology.kubernetes.io/zone

whenUnsatisfiable: DoNotSchedule

containers:

- image: 'nginx:1.7.9'

imagePullPolicy: IfNotPresent

name: nginx

resources:

limits:

cpu: 1500m

requests:

cpu: 1500m

terminationMessagePath: /dev/termination-log

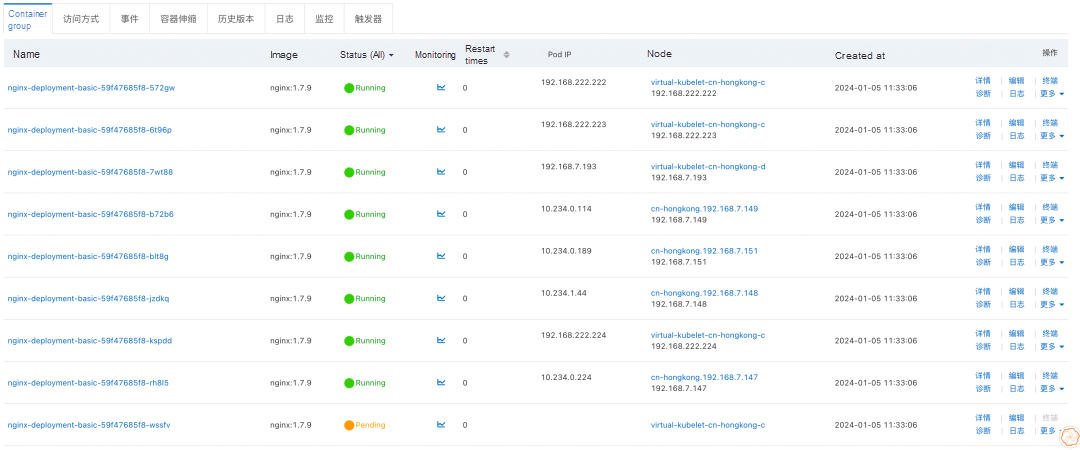

terminationMessagePolicy: FileAfter you submit the preceding resource list, the pods are distributed in the following zones and nodes. Three ECS nodes exist in Zone D, so five pods exist in Zone D and four in Zone C. It can meet the requirement of a maximum inclination of 1 in the constraint.
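The placement can be checked against how topology spread constraints measure skew. Kubernetes computes the skew of a zone as its number of matching pods minus the minimum count across eligible zones; with only Zones C and D and the counts reported above ($n_D = 5$, $n_C = 4$), the check on the final placement reduces to:

$$
\text{skew} = \max_{z \in \{C,\,D\}} n_z - \min_{z \in \{C,\,D\}} n_z = n_D - n_C = 5 - 4 = 1 \le \text{maxSkew} = 1
$$

Because nine pods cannot be split evenly across two zones, a 5/4 split ($\lceil 9/2 \rceil = 5$, $\lfloor 9/2 \rfloor = 4$) is the most even distribution possible. A 6/3 split would give a skew of 3, and `whenUnsatisfiable: DoNotSchedule` would leave the extra pod pending rather than allow it.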

ACK extends the elastic scheduling feature based on the standard K8s scheduling framework, aiming to enhance application performance and overall resource utilization of clusters, thus ensuring the stable and efficient operation of enterprise businesses.

In the previous article Driving Business Agility and Efficient Cloud Resource Management through Elastic Scheduling, we explored how to effectively manage various types of elastic resources through the elastic scheduling feature of ACK, helping enterprises optimize resource allocation for lower costs and higher efficiency.

This article describes how to combine elastic scheduling with ECIs. Leveraging the advantages of ECIs, including fast elasticity, per-second billing, and instant release, significantly improves the stability and efficiency of business operations.

In the upcoming articles on scheduling, we will provide detailed insights into managing and scheduling AI tasks on ACK, enabling enterprises to swiftly implement AI business in the cloud.
