By Longcheng

PostgreSQL databases are widely used by enterprises for their online businesses, gaining high recognition in the industry for their exceptional stability and comprehensive capabilities.

This article explains how to synchronize data links between two PostgreSQL instances, enabling comprehensive PostgreSQL capabilities for both Online Transaction Processing (OLTP) and Online Analytical Processing (OLAP) scenarios.

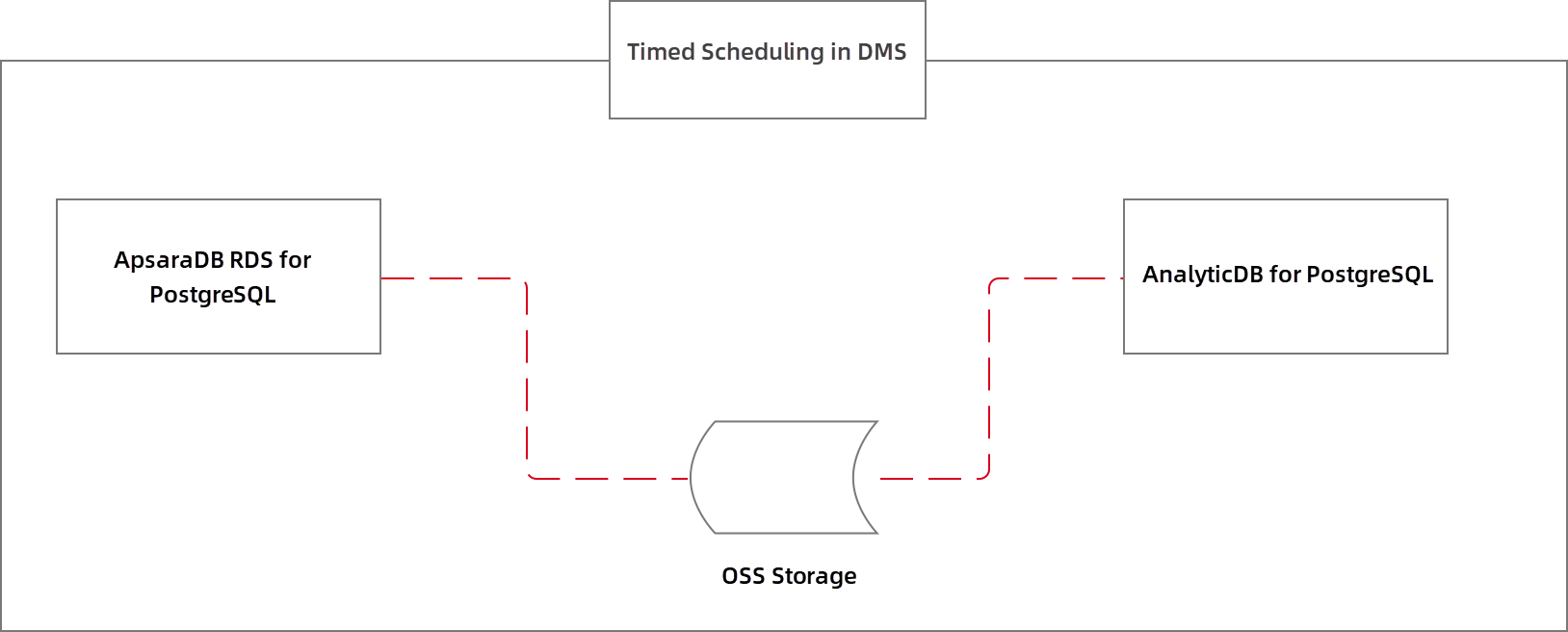

In this article, Data Management Service (DMS) is used to schedule data links and Object Storage Service (OSS) is used for intermediate storage. Data is loaded from an ApsaraDB RDS for PostgreSQL instance to OSS and then synchronized to an AnalyticDB for PostgreSQL instance in Serverless mode to realize T+1 data analysis.

Benefits:

A constraint in the example described in this article is that incremental data in the source RDS table can be archived by using conditions. The example shows how to archive data in the c date column of the source table t_src on a daily basis.

Solution framework:

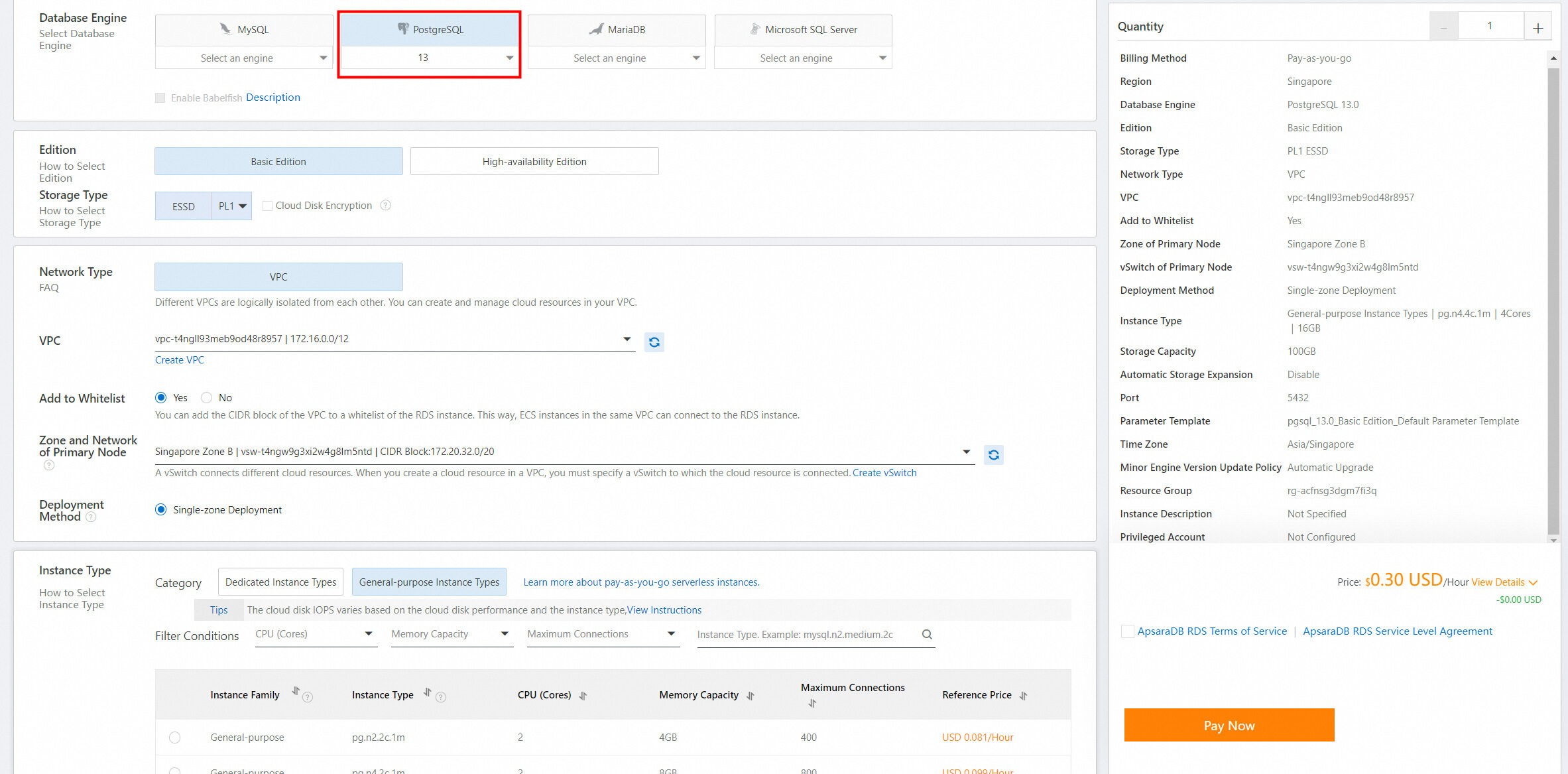

Note: This solution applies to ApsaraDB RDS for PostgreSQL instances that run PostgreSQL 9.4 to PostgreSQL 13, but does not apply to instances that run PostgreSQL 14.

1. Create an ApsaraDB RDS for PostgreSQL instance that runs PostgreSQL 9 to PostgreSQL 13.

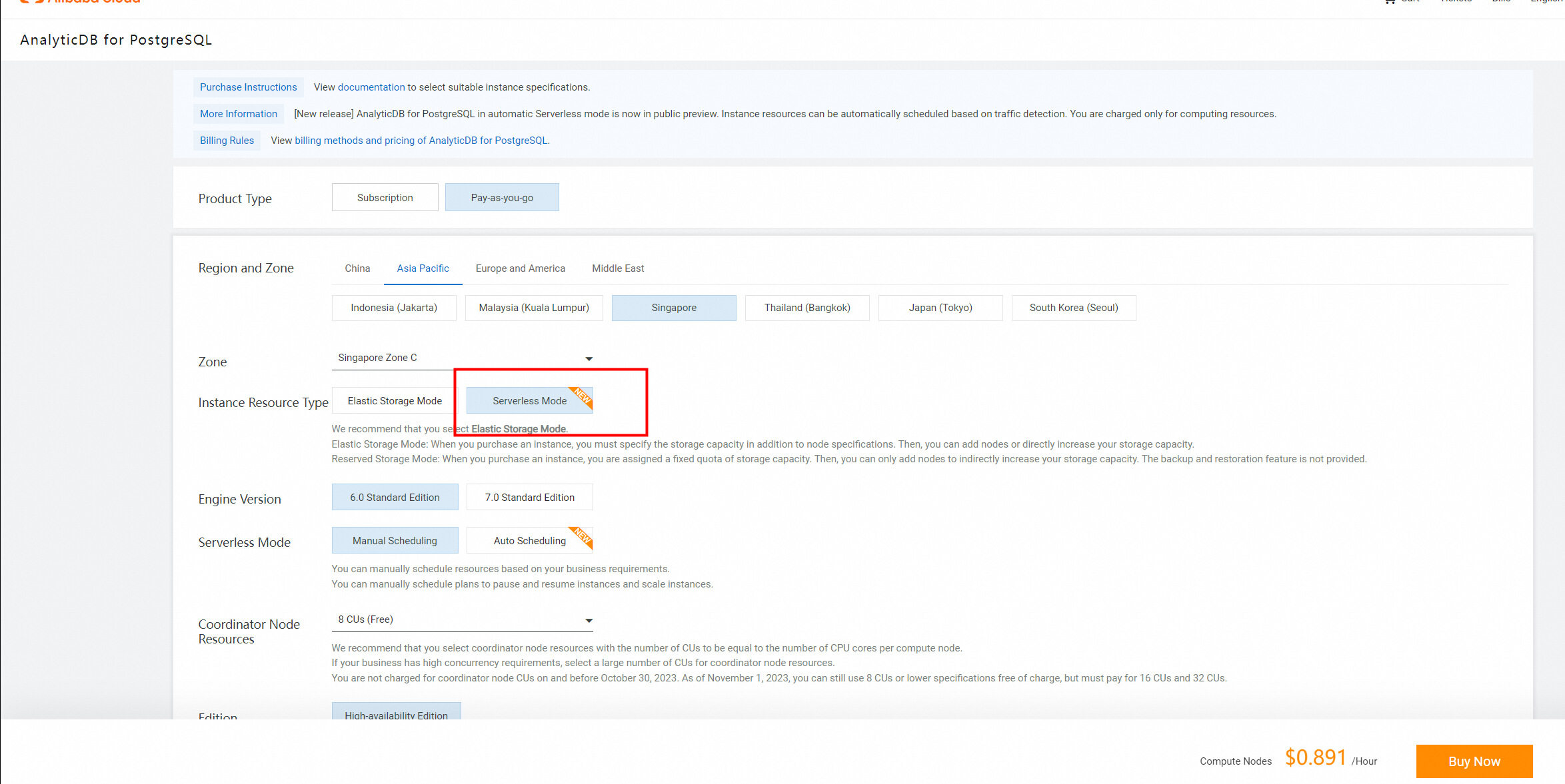

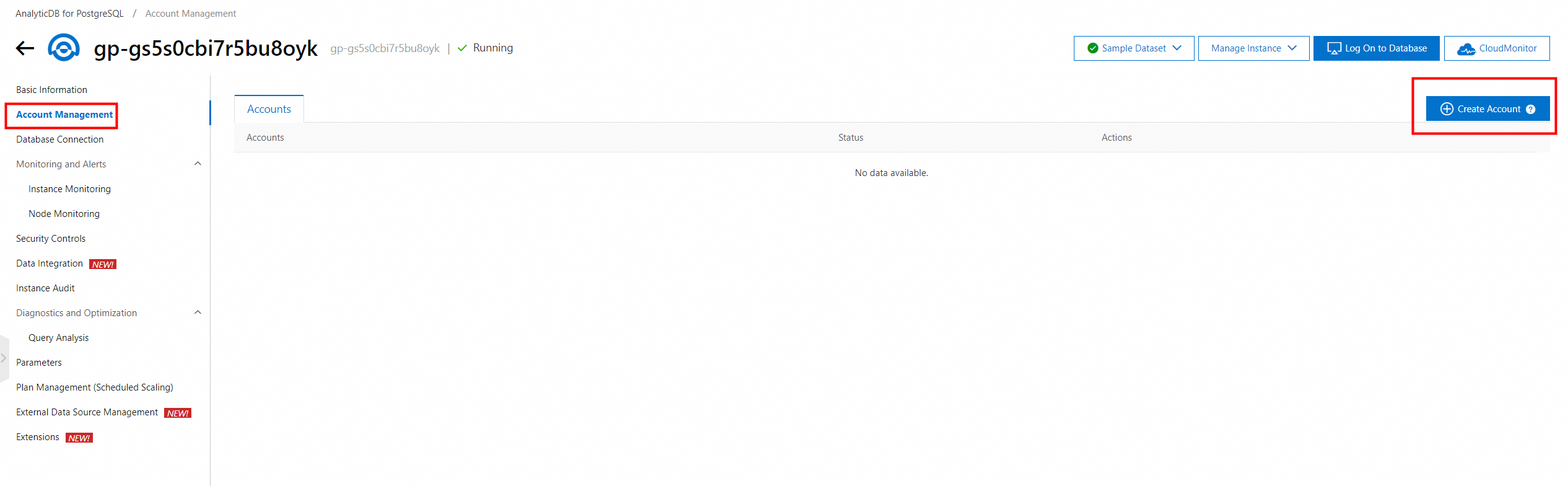

2. Create an AnalyticDB for PostgreSQL instance in Serverless mode. If you have not activated AnalyticDB for PostgreSQL, activate the service first. After the instance is created, create an account for the instance.

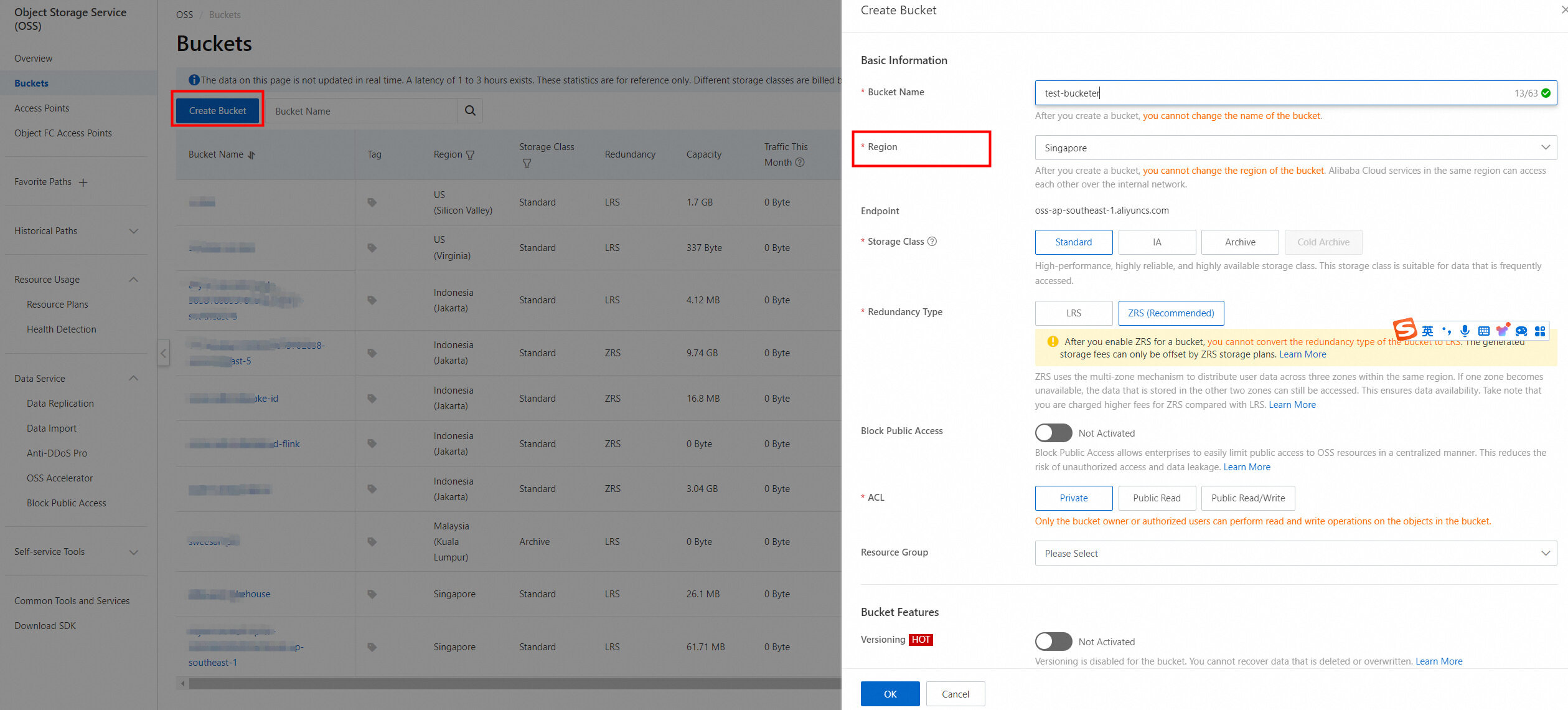

3. Activate OSS and create a bucket with an AccessKey ID and an AccessKey secret.

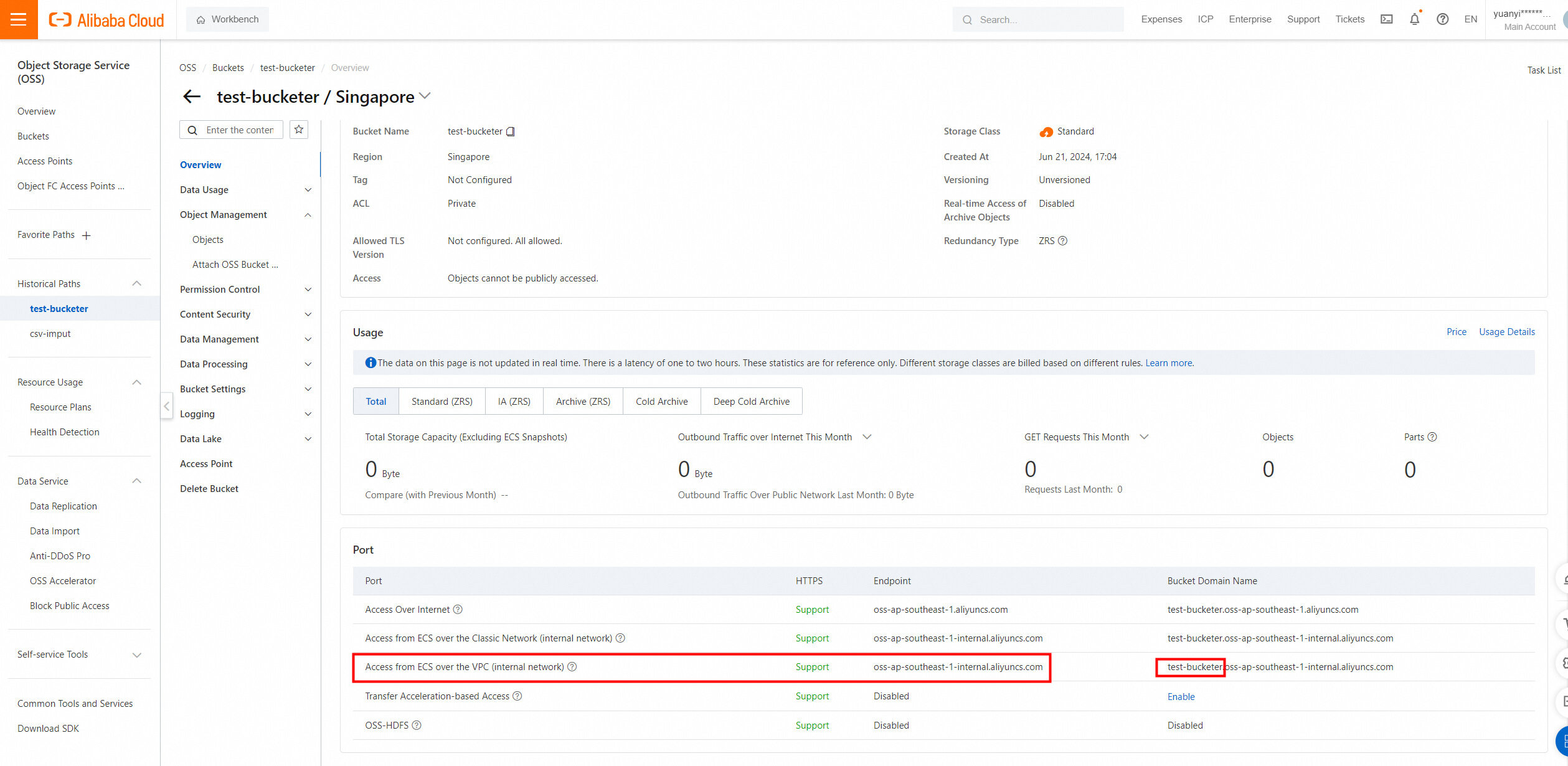

4. Go to the details page of the bucket. Record the information about the bucket, including its host information and domain name, as shown in the following figure.

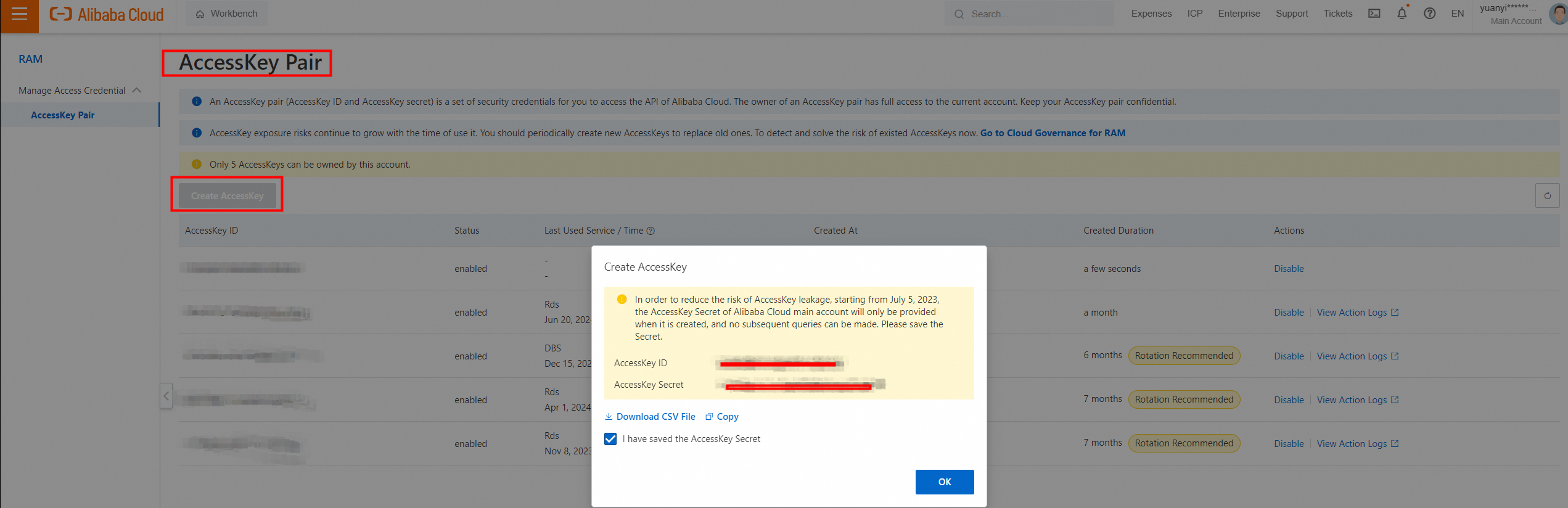

5. To obtain the AccessKey ID and AccessKey secret, move the pointer over your profile picture in the upper-right corner of the console and click AccessKey Management.

On the AccessKey Pair page, view and record the AccessKey ID and AccessKey secret of the bucket.

Theoretically, business data is already generated in the ApsaraDB RDS for PostgreSQL instance. For ease of understanding, sample data is used in this example for illustrative purposes.

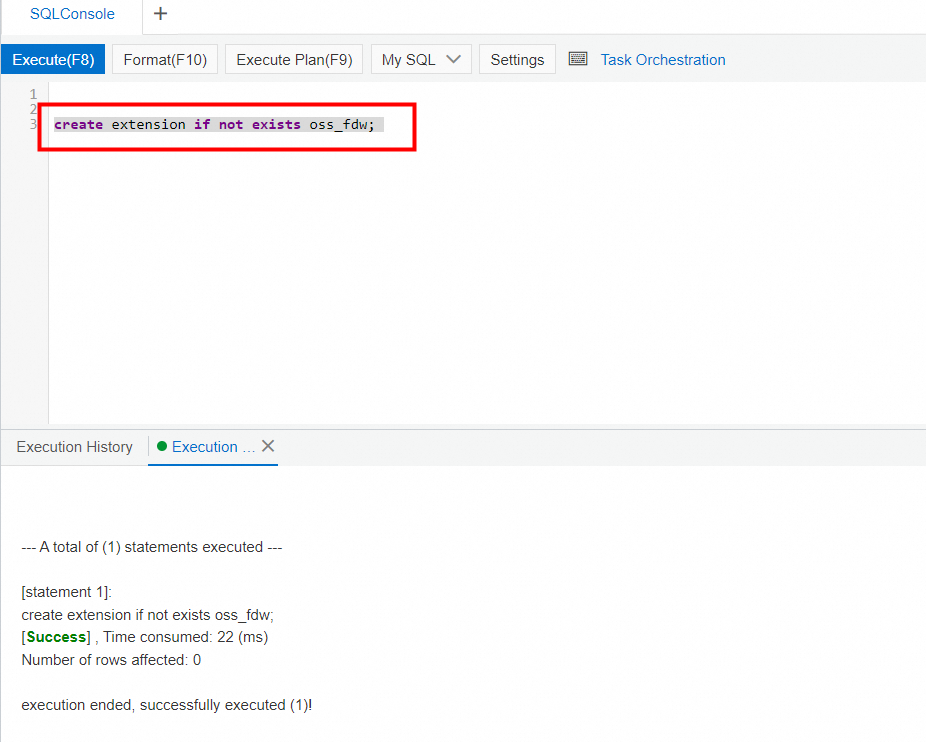

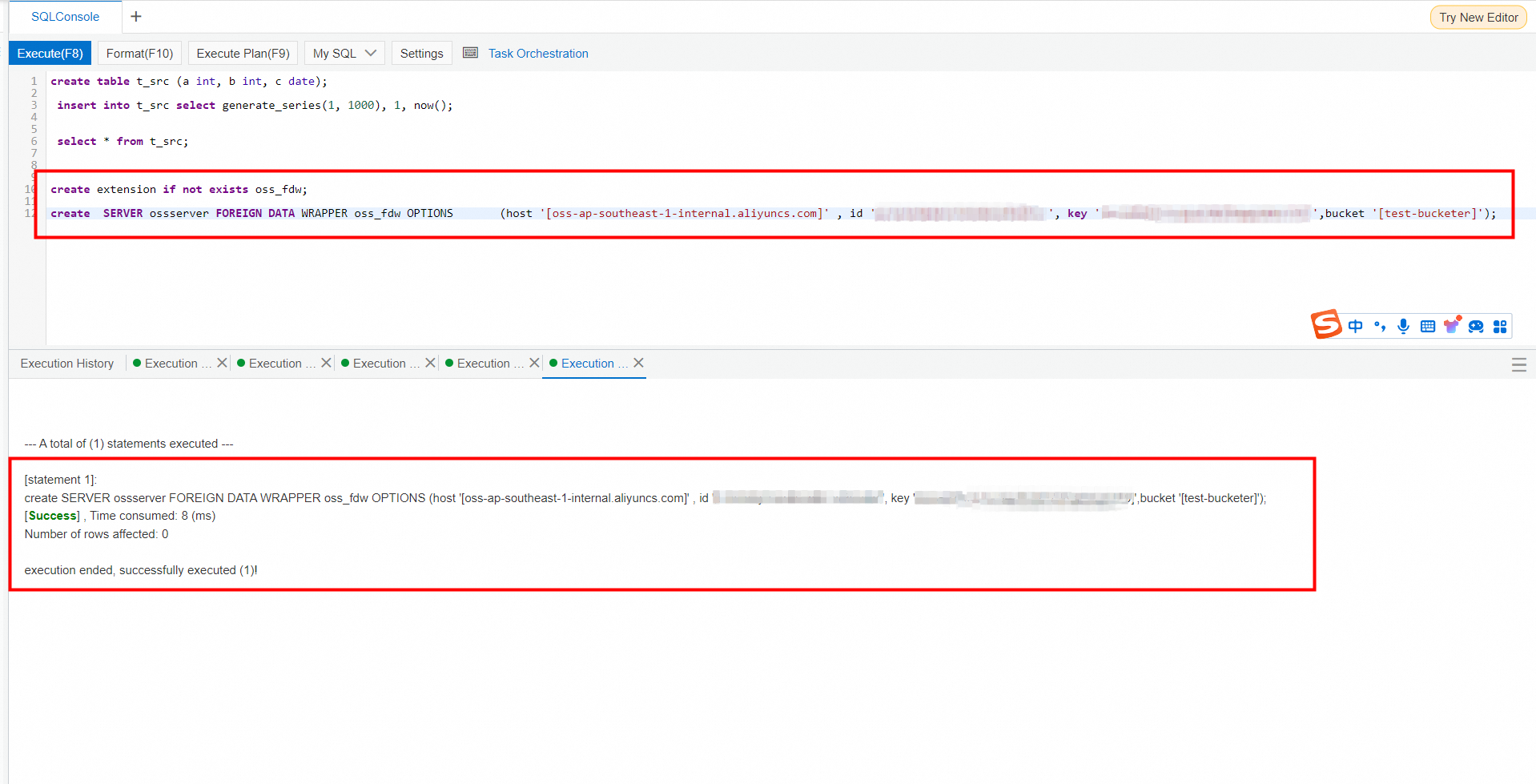

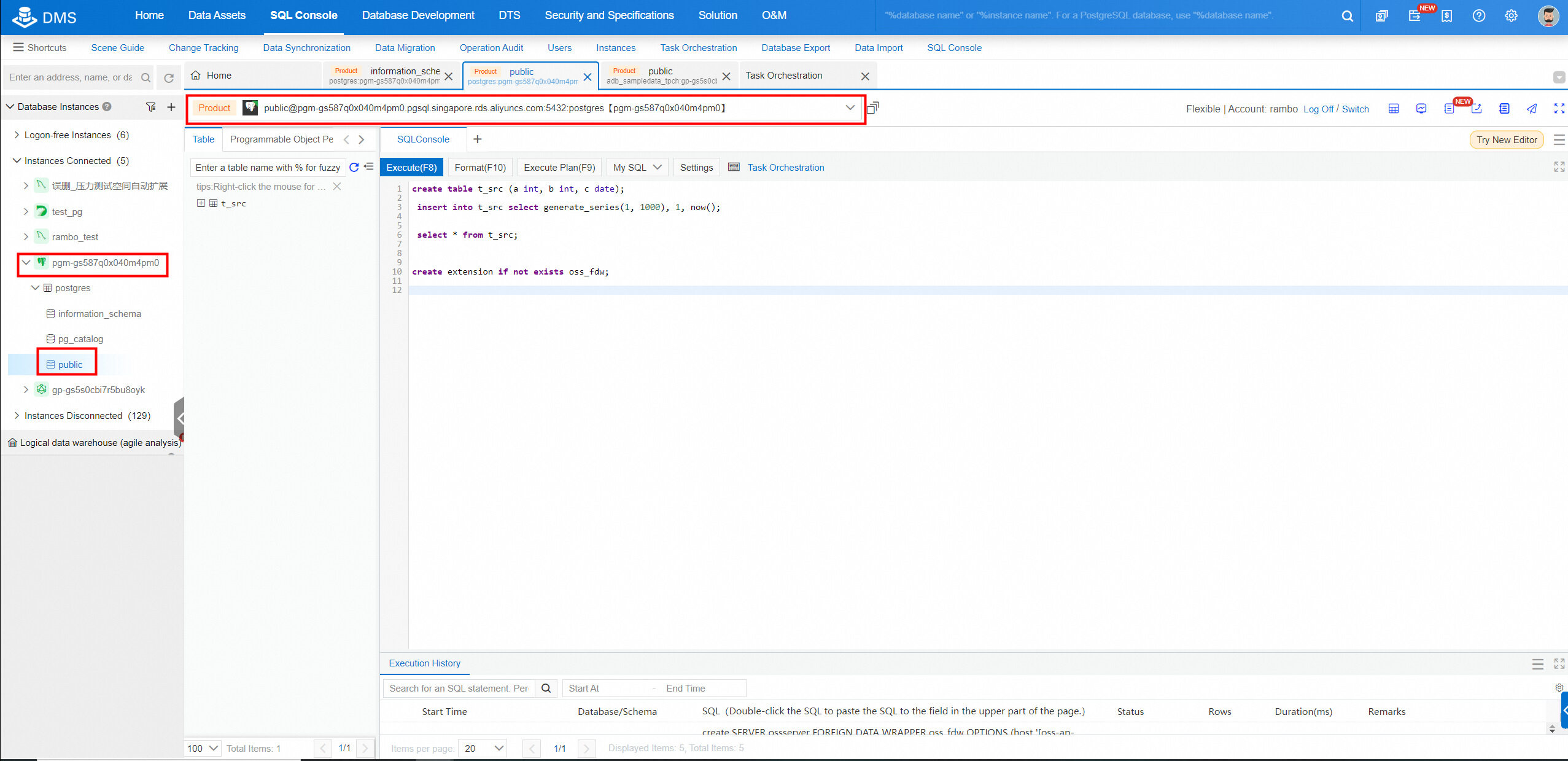

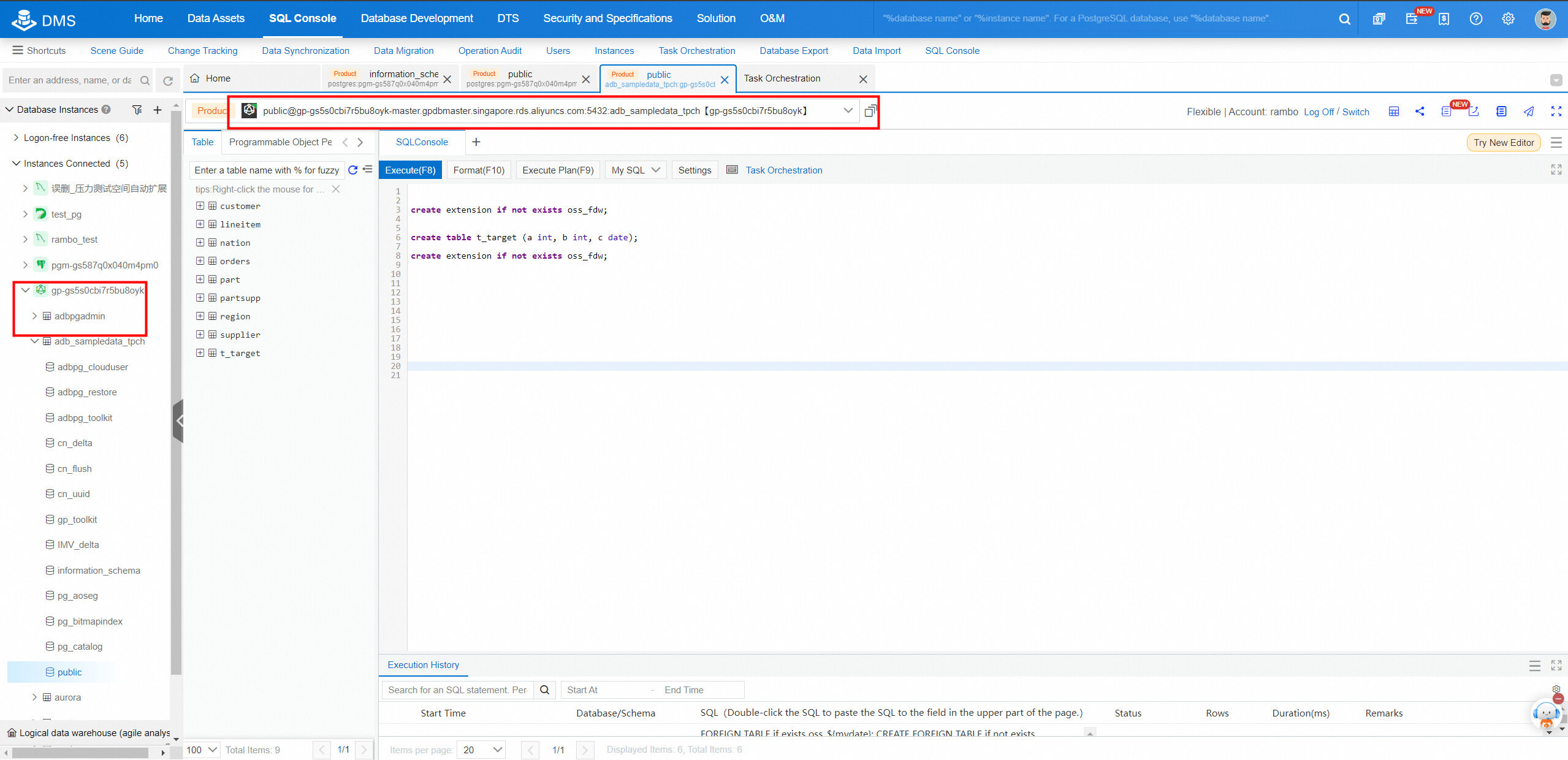

1. Log on to the ApsaraDB RDS for PostgreSQL instance and enter the database. On the SQLConsole tab, create an oss_fdw extension.

• Log on to the ApsaraDB RDS for PostgreSQL instance by using DMS. Enter and run the following SQL statement:

create extension if not exists oss_fdw;

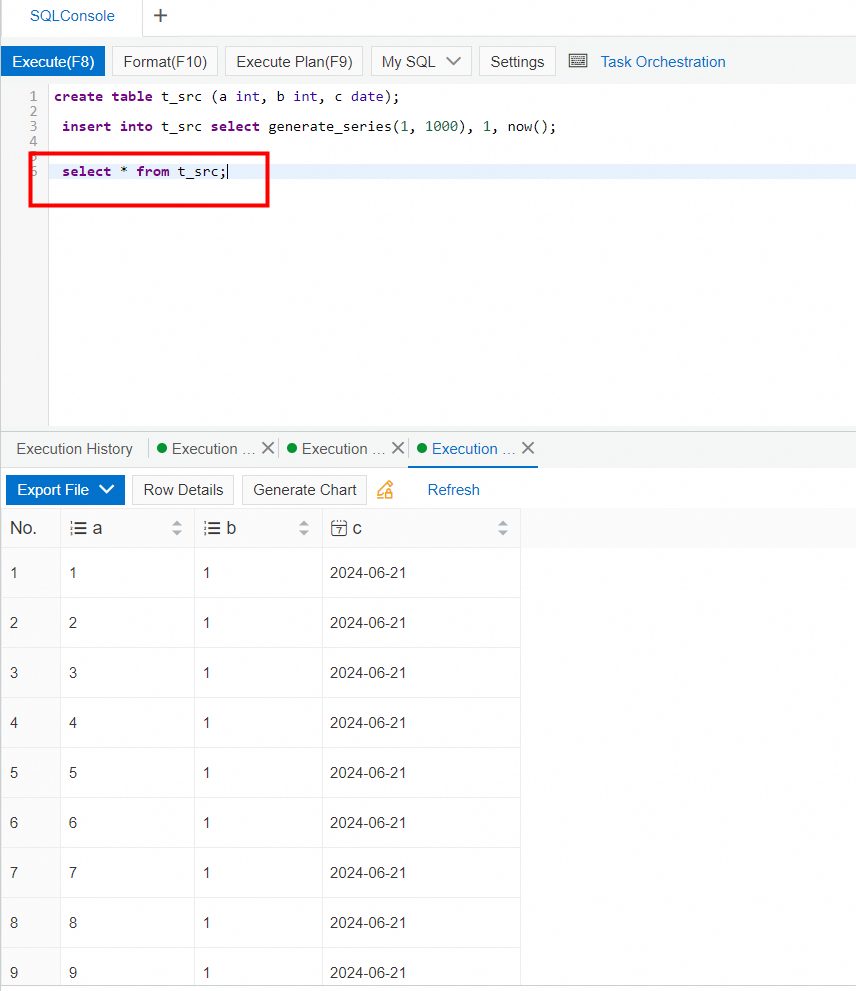

• Enter and run the following SQL statements to create a source table:

create table t_src (a int, b int, c date);

insert into t_src select generate_series(1, 1000), 1, now();

select * from t_src;

• Create a foreign table for the ApsaraDB RDS for PostgreSQL instance and write data to the table.

-- Import the oss_fdw extension.

create extension if not exists oss_fdw;

-- Create a foreign table service.

create SERVER ossserver FOREIGN DATA WRAPPER oss_fdw OPTIONS

(host '[bucket_host]' , id '[access_key]', key '[secret_key]',bucket '[bucket_name]');

2. Log on to the AnalyticDB for PostgreSQL instance in Serverless mode and enter the database. On the SQLConsole tab, enter and run the following SQL statements to create an oss_fdw extension, create a destination table, and configure the OSS foreign table:

-- Add the oss_fdw extension.

create extension if not exists oss_fdw;

-- Create a table with the same schema as the source table created in the ApsaraDB RDS for PostgreSQL instance. Note that ApsaraDB RDS for PostgreSQL instances in Serverless mode do not support primary keys.

create table t_target (a int, b int, c date);

-- Import the oss_fdw extension.

create extension if not exists oss_fdw;

-- Create a foreign table service.

create server oss_serv

foreign data wrapper oss_fdw

options (

endpoint '[bucket_host]',

bucket '[bucket_name]');

create user mapping for public

server oss_serv

options (

id '[access_key]',

key '[secret_key]');3. Next, let's configure automatic archiving and task flow loading in DMS.

Configure a task to extract data from the ApsaraDB RDS for PostgreSQL instance

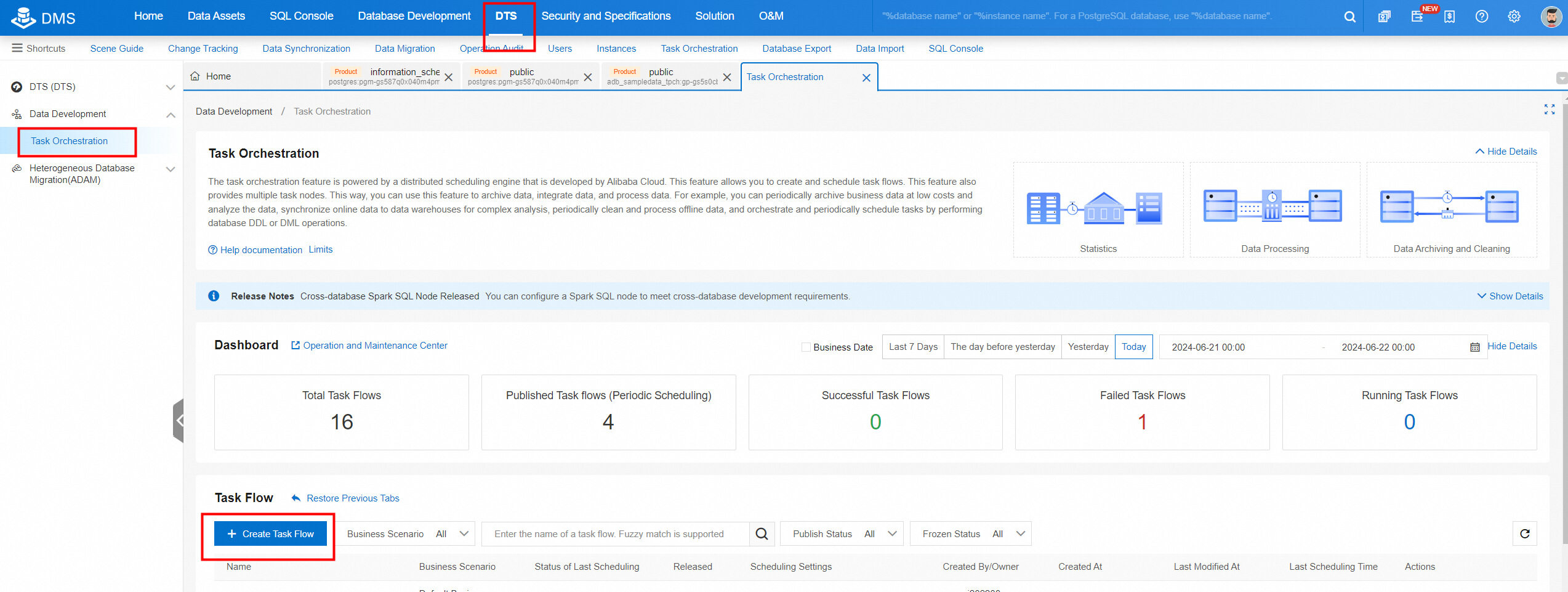

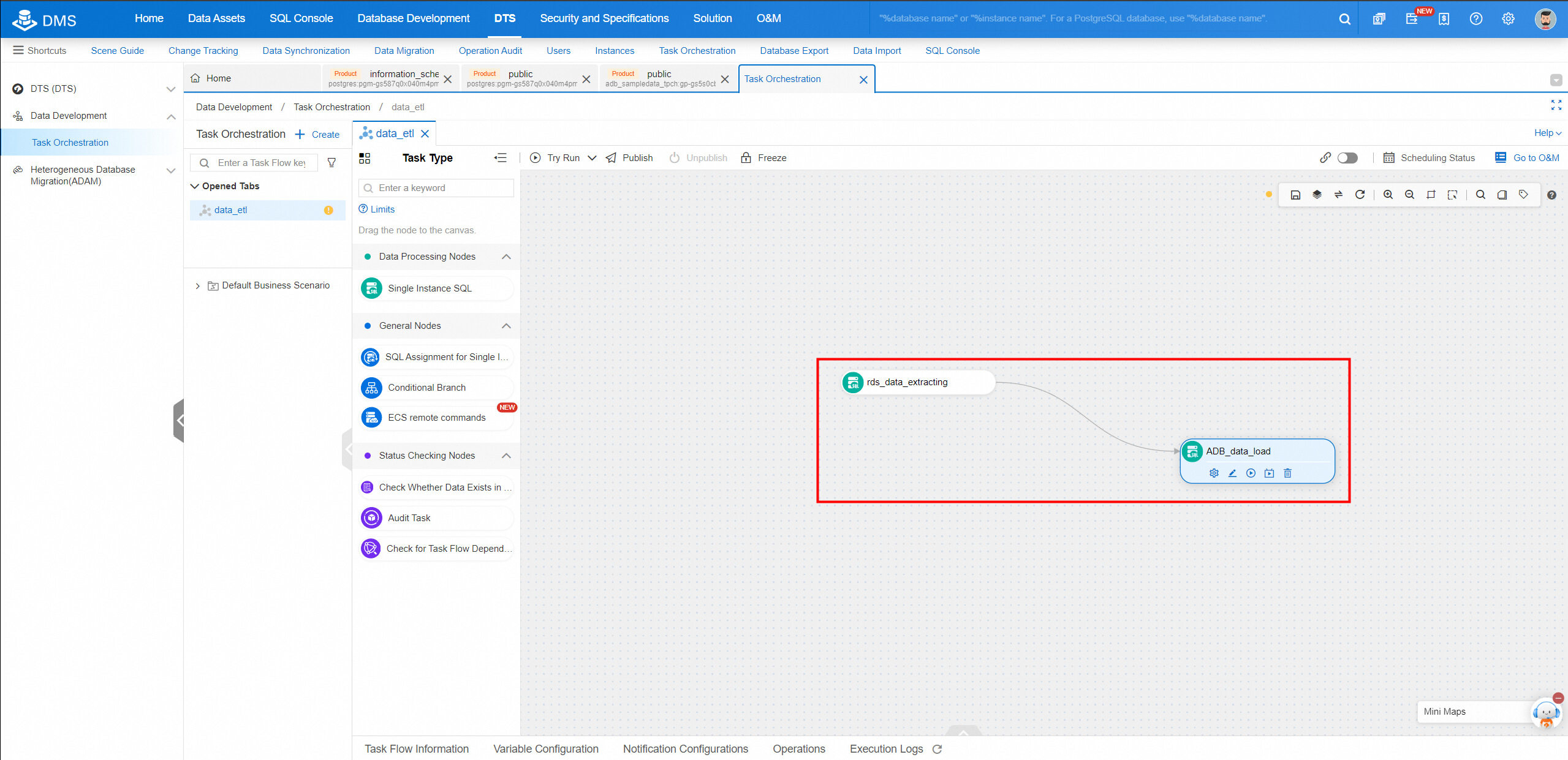

1. Create a task flow: Log on to the DMS console. In the top navigation bar, choose DTS. In the left-side navigation pane, select Task Orchestration. On the Task Orchestration page, click Create Task Flow. Set the task flow name to rdspg data import to OSS.

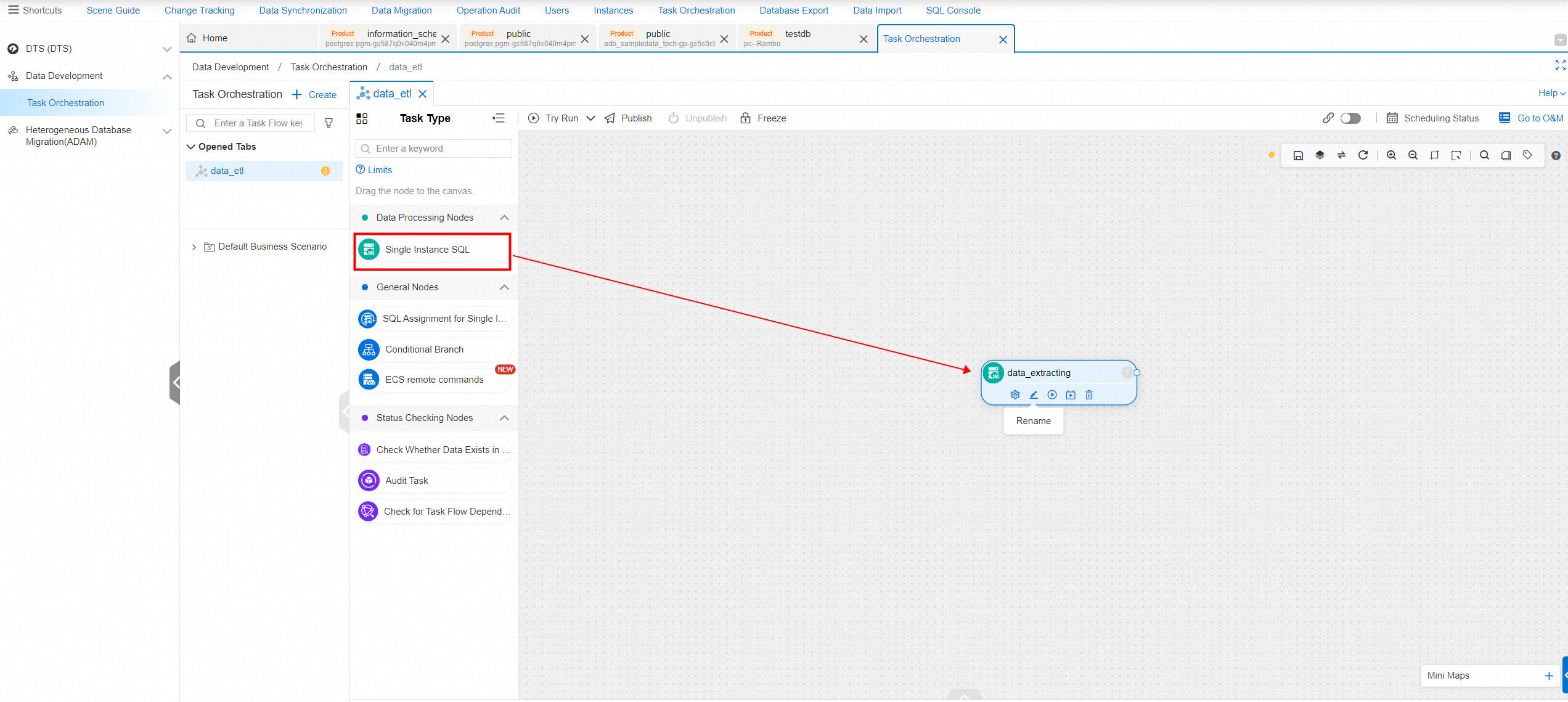

2. Create a task for archiving data from the ApsaraDB RDS for PostgreSQL instance. From the Task Type list on the left side of the canvas on the Task Orchestration page, drag the Single Instance SQL node under Data Processing Nodes to the canvas.

• Click the rename icon  and set the name to rds data extraction.

and set the name to rds data extraction.

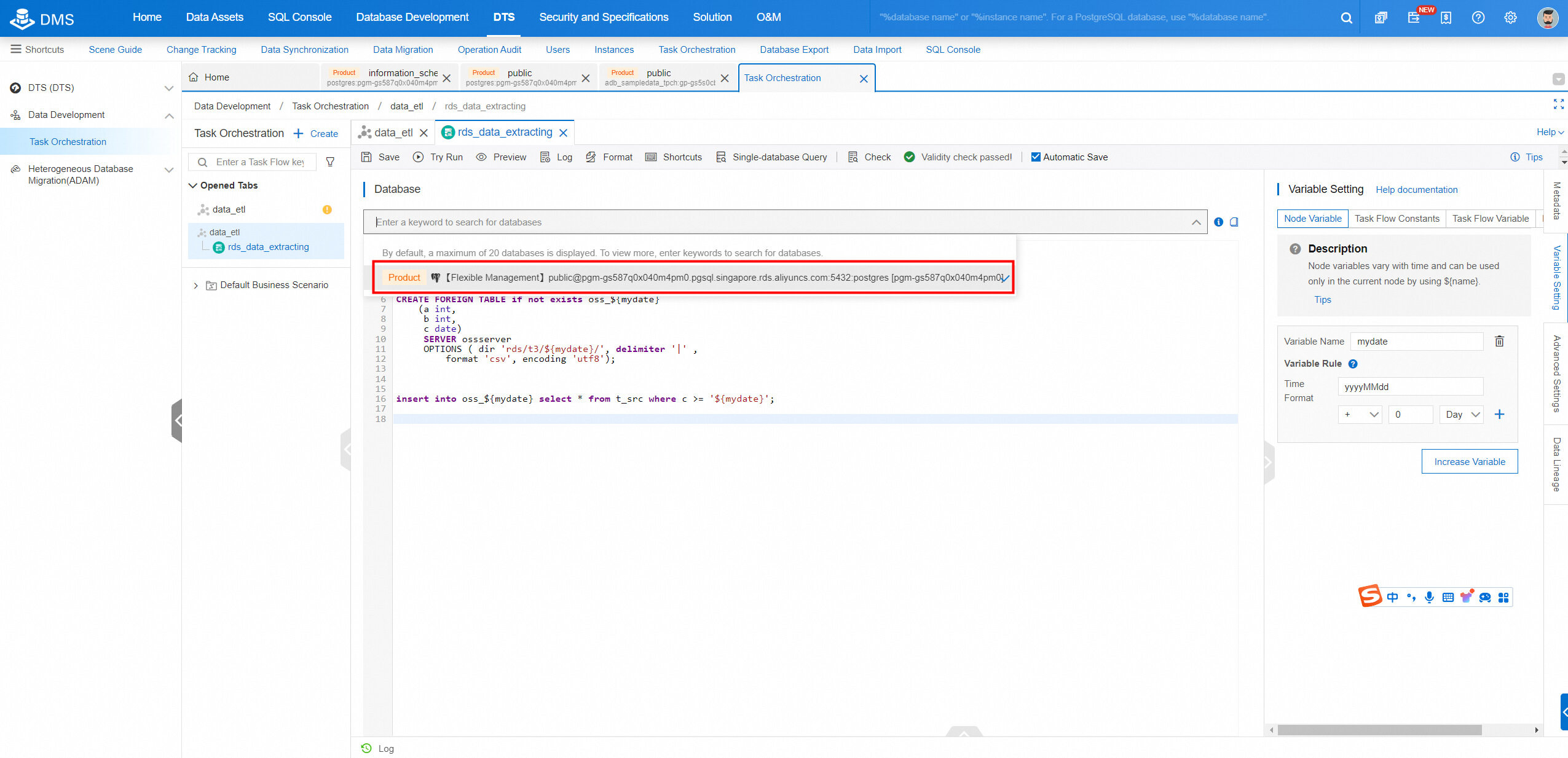

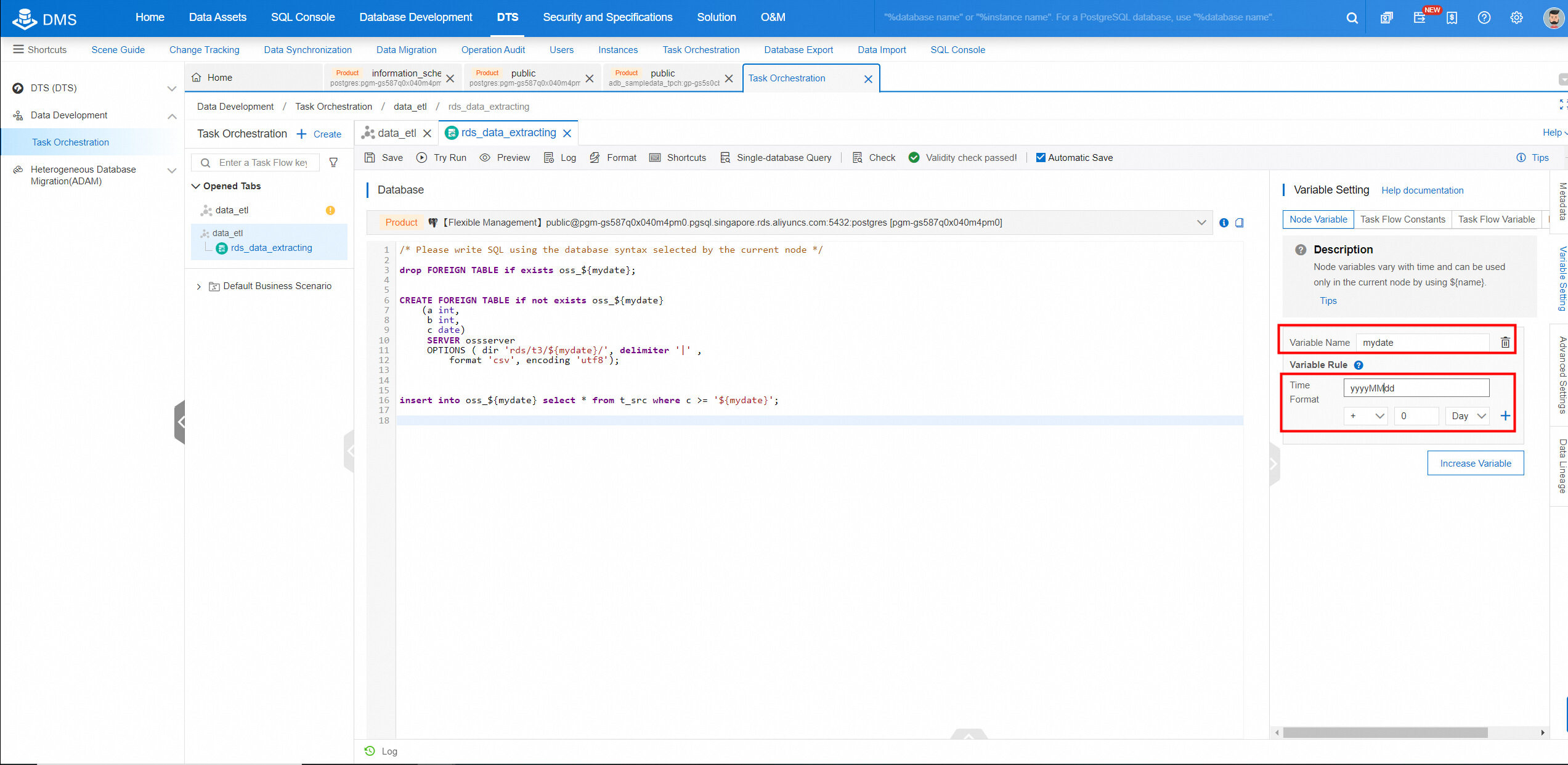

• Click the settings icon  to go to the task configuration page. Enter and run the following SQL statements to write data from the ApsaraDB RDS for PostgreSQL instance to OSS.

to go to the task configuration page. Enter and run the following SQL statements to write data from the ApsaraDB RDS for PostgreSQL instance to OSS.

drop FOREIGN TABLE if exists oss_${mydate};

CREATE FOREIGN TABLE if not exists oss_${mydate}

(a int,

b int,

c date)

SERVER ossserver

OPTIONS ( dir 'rds/t3/${mydate}/', delimiter '|' ,

format 'csv', encoding 'utf8');

insert into oss_${mydate} select * from t_src where c >= '${mydate}';3. Enter the name of the source database of the task. To obtain the database name, go back to the SQL Console and click the source database in the ApsaraDB RDS for PostgreSQL instance. The database name (starting with public) is at the top of the tab that appears. Click the copy icon to copy the database name.

4. Return to the task configuration page, paste the copied database name in the search box, and then select the matched result.

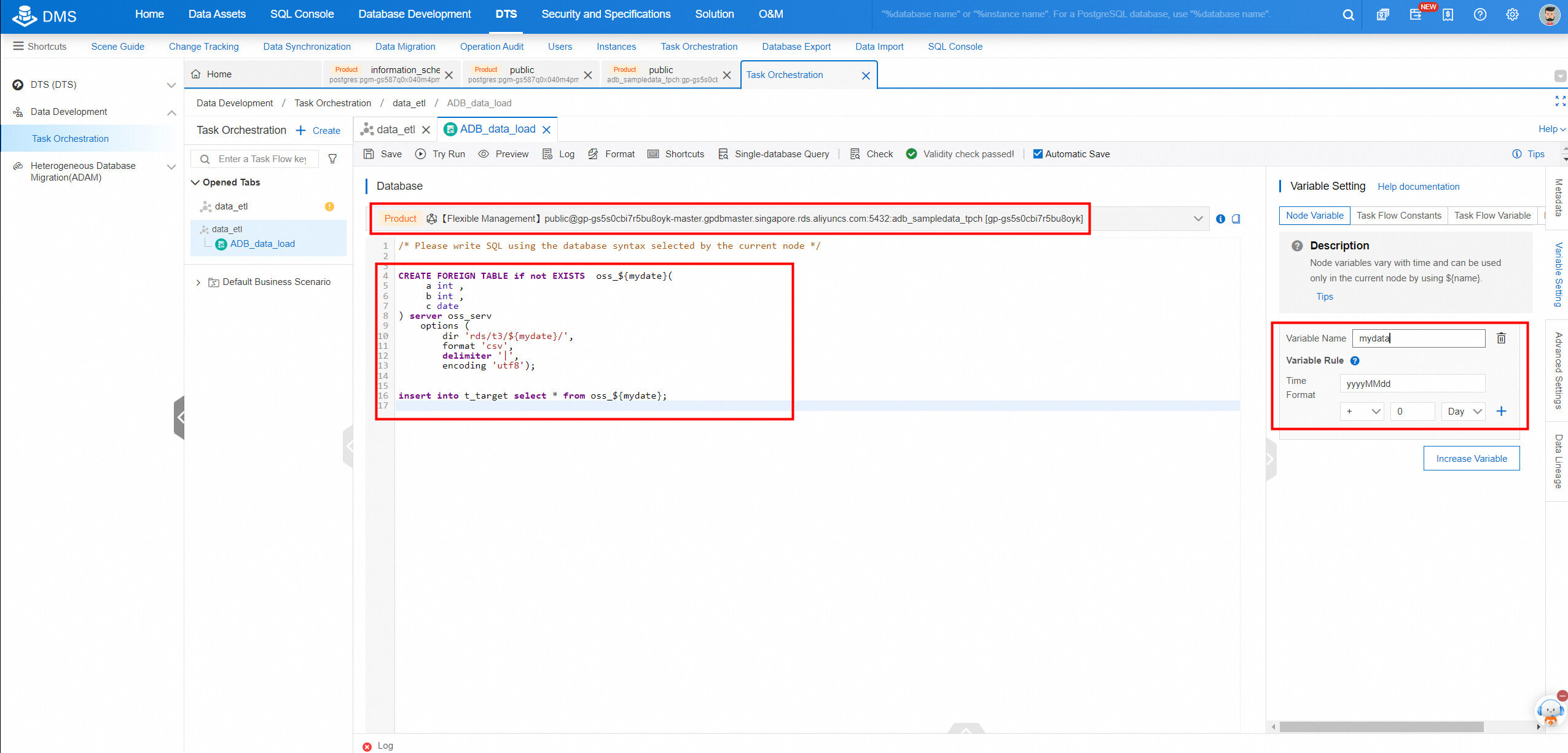

5. Click the Variable Setting tab in the right-side navigation pane. In the Variable Setting panel, configure a date variable named mydate and set the time format to yyyyMMdd. This variable will be used later to implement incremental archiving by date.

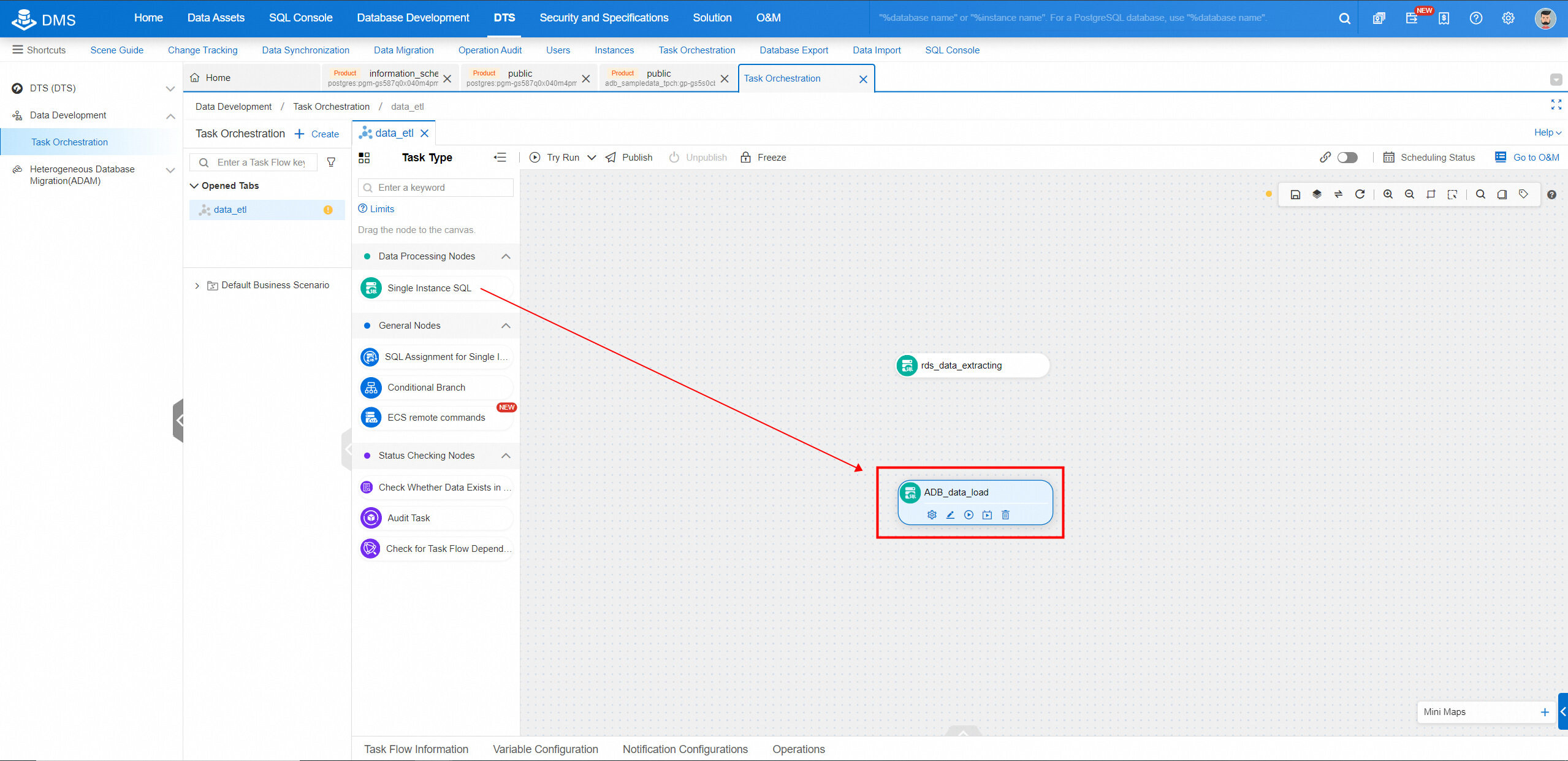

1. From the Task Type list on the left side of the canvas on the Task Configuration page, drag the Single Instance SQL node under Data Processing Nodes to the canvas and rename the node "ADBPG data load".

2. Click the settings icon  to go to the task configuration page. Select the AnalyticDB for PostgreSQL instance (as described above).

to go to the task configuration page. Select the AnalyticDB for PostgreSQL instance (as described above).

3. Enter and run the following SQL statements to extract data from OSS to the AnalyticDB for PostgreSQL instance.

CREATE FOREIGN TABLE if not EXISTS oss_${mydate}(

a int ,

b int ,

c date

) server oss_serv

options (

dir 'rds/t3/${mydate}/',

format 'csv',

delimiter '|',

encoding 'utf8');

insert into t_target select * from oss_${mydate};4. Configure a date variable named mydate as described above.

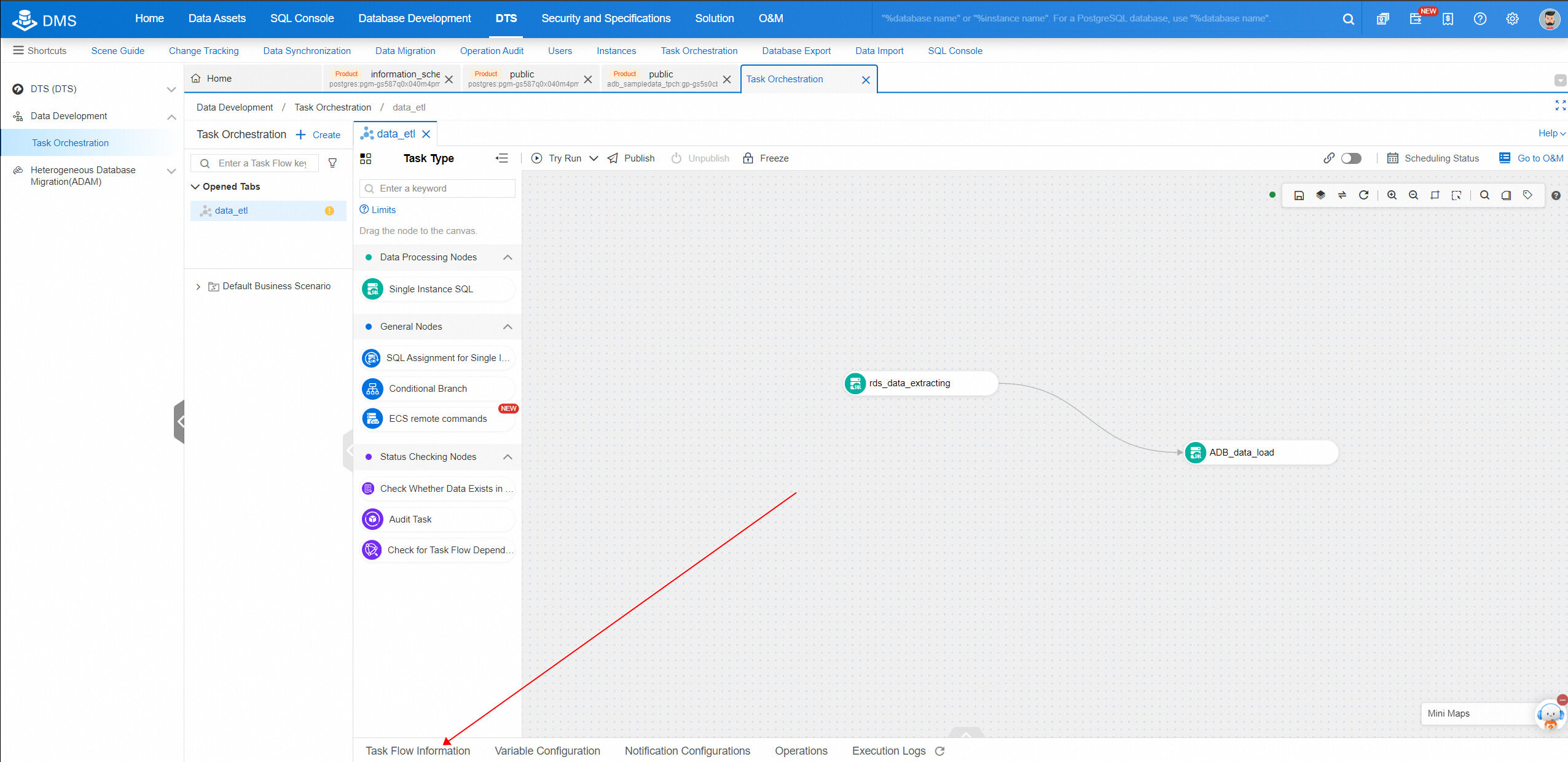

1. Configure the task flow to first run the task for extracting data from the ApsaraDB RDS for PostgreSQL instance and then run the task for loading data to the AnalyticDB for PostgreSQL instance. Move the pointer over the data extraction task, click and hold the small circle on the right side, and then draw a line from the circle to the data loading task.

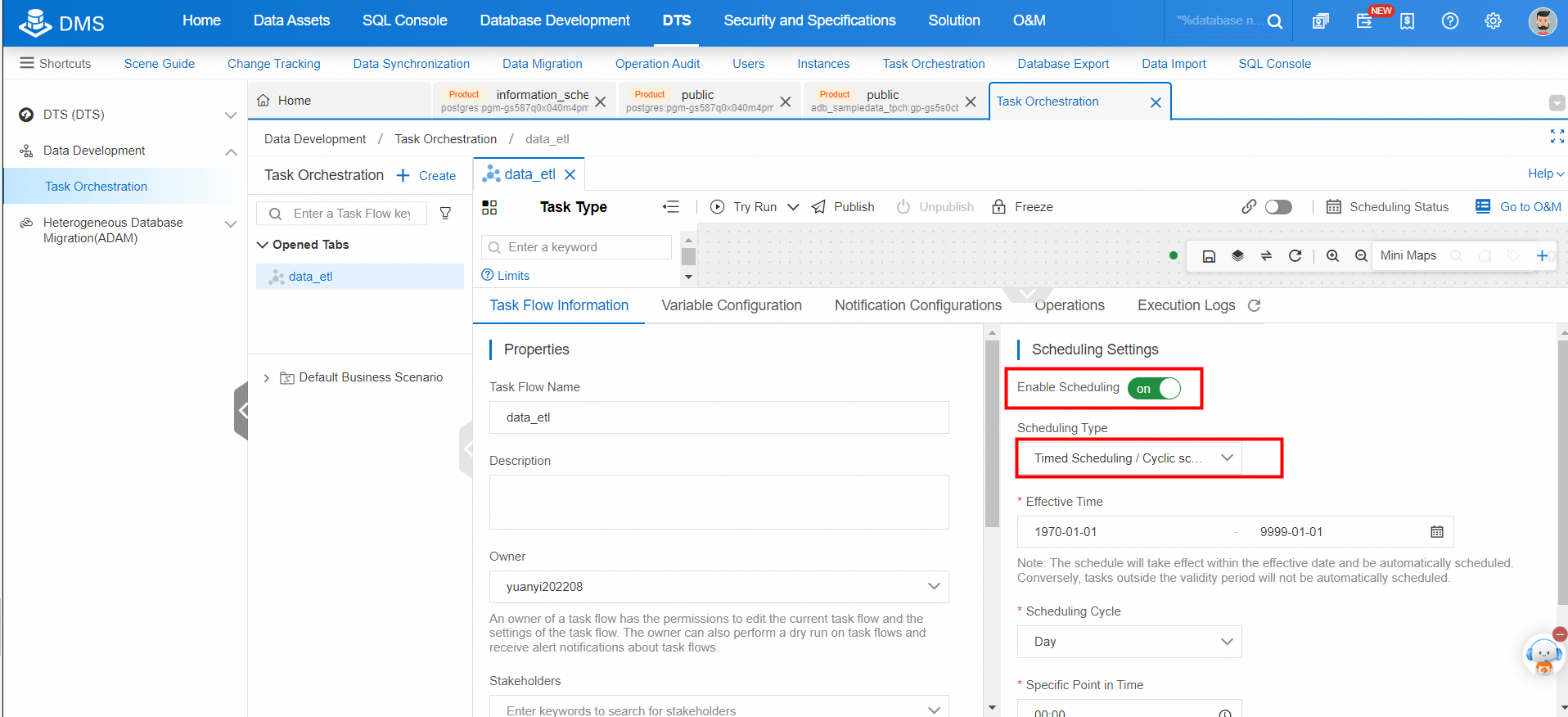

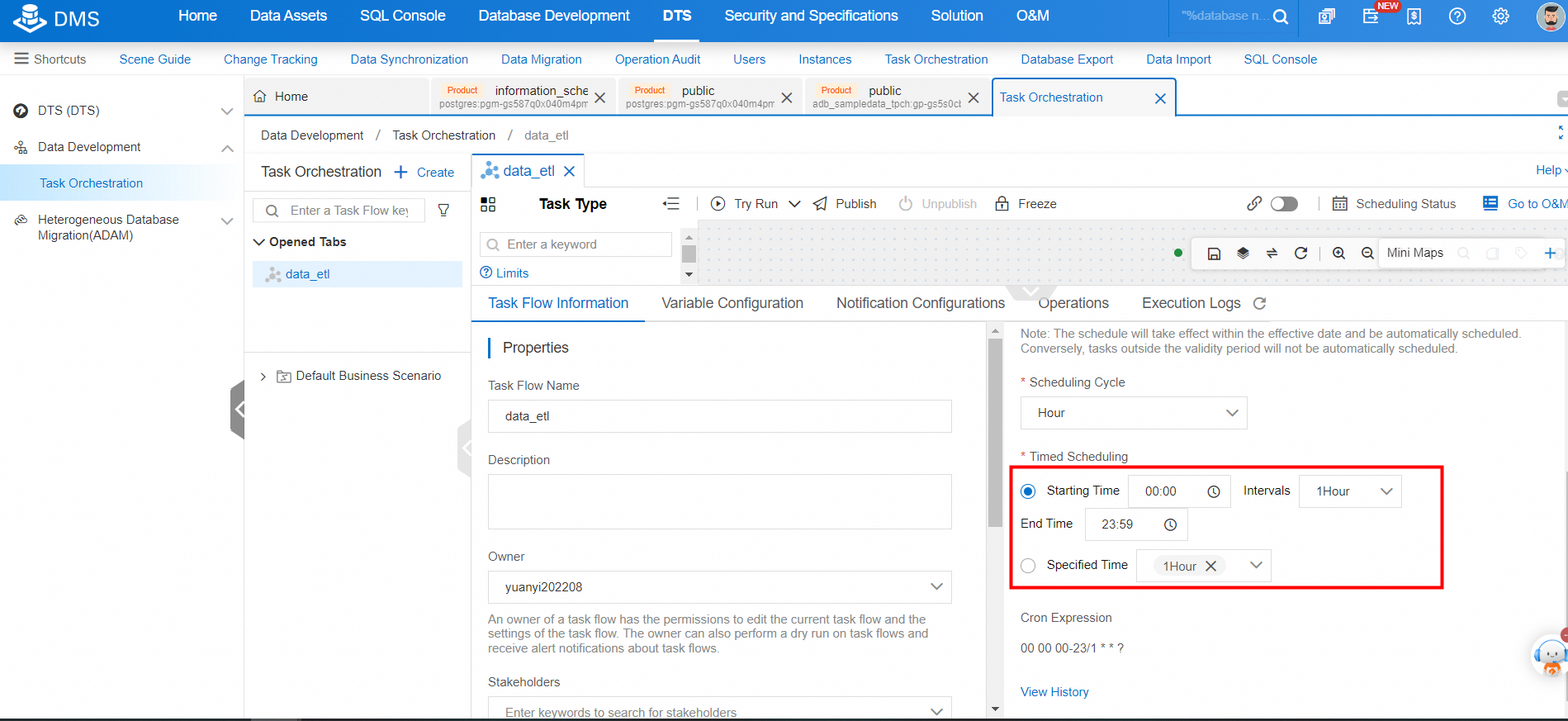

2. On the Task Flow Information tab, turn on the Enable Scheduling switch and configure a scheduling policy. Select a scheduling cycle as needed. Each time the task flow is scheduled, data is exported from the ApsaraDB RDS for PostgreSQL instance and imported to the AnalyticDB for PostgreSQL instance to ensure data consistency.

Click Try Run to test the code. If the code is run with no error reported, click Publish.

AnalyticDB for PostgreSQL allows users to quickly implement data ETL links for free. Users can move data in ApsaraDB RDS for PostgreSQL to OSS. The data in OSS can then be loaded to AnalyticDB for PostgreSQL for analysis or can be stored in foreign tables for federated analytics.

AnalyticDB for PostgreSQL supports high-efficiency analysis of massive partitioned data, query of terabytes to petabytes of data within seconds, and integration with mainstream business intelligence (BI) products. AnalyticDB for PostgreSQL is now widely used by enterprises to deploy cloud data platforms in their digital transformation process.

[1] Use cases of DMS

[2] Use OSS foreign tables for ApsaraDB RDS for PostgreSQL instances

[3] Use OSS foreign tables for AnalyticDB for PostgreSQL instances

Discover what and how you can use our products to build >>

ApsaraDB - August 19, 2025

ApsaraDB - February 7, 2025

Alibaba Cloud Community - August 9, 2024

ApsaraDB - May 19, 2025

ApsaraDB - July 23, 2021

ApsaraDB - June 18, 2021

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB