Every Kubernetes node runs a low-level program that actually creates and deletes containers. Kubernetes calls this program's API to orchestrate and schedule containers. Such a program is called a container runtime; the best-known example is Docker.

Docker is not the only container runtime. Others include CoreOS's rkt, hyper.sh's runV, Google's gVisor, and Alibaba's PouchContainer. Each of them implements the complete set of container operations, and each creates containers with different characteristics. Because different containers suit different requirements, Kubernetes needs to support multiple container runtimes.

Initially, Kubernetes called Docker's APIs directly from built-in code. In Kubernetes 1.3, the community added built-in calls to rkt's APIs, which made rkt a second container runtime option alongside Docker. In both cases, the calls to the Docker or rkt APIs were tightly coupled with the core Kubernetes code, which brought about the following two problems:

To solve these problems, the community introduced the Container Runtime Interface (CRI) in Kubernetes 1.5. CRI defines a set of common APIs for container runtimes, so the Kubernetes core code no longer calls runtime-specific APIs; it calls only this abstract interface layer. Any container runtime that implements the CRI interfaces can be plugged into Kubernetes and become a container runtime option. Simple as it is, the solution is a relief for both the Kubernetes community maintainers and container runtime developers.

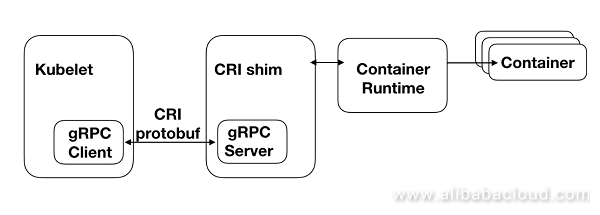

As shown in the figure above, Kubelet on the left is the node agent of the Kubernetes cluster. It monitors the status of the containers on its node and ensures that they run as expected. To do this, Kubelet continuously calls the relevant CRI interfaces to synchronize container state.

A CRI shim can be thought of as an interface conversion layer: it converts a CRI call into a call to the interface of the underlying container runtime, invokes that runtime, and returns the result. For some container runtimes, the CRI shim runs as an independent process. For example, when Docker is chosen as the container runtime for Kubernetes, a Docker shim process is started during Kubelet initialization; this process is the CRI shim of Docker. The CRI shim of PouchContainer, called CRI Manager, is instead embedded in Pouchd. This is described further in the section on PouchContainer's architecture below.

CRI is essentially a set of gRPC interfaces. Kubelet has a built-in gRPC Client, and the CRI shim has a built-in gRPC Server. Each CRI call made by Kubelet is converted into a gRPC request that the Client sends to the Server in the CRI shim. The Server calls the underlying container runtime to process the request and returns the result, which completes the CRI call.

The CRI gRPC interfaces fall into two groups: ImageService and RuntimeService. ImageService interfaces manage container images, while RuntimeService interfaces manage the life cycle of containers and let users interact with them (exec/attach/port-forward).
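To make the split concrete, here is a minimal Go sketch of the two service groups and a caller that depends only on them. The method names are taken from CRI, but the signatures are heavily simplified, there is no gRPC involved, and `fakeShim` is a hypothetical in-memory stand-in for a real CRI shim.

```go
package main

import (
	"errors"
	"fmt"
)

// ImageService is a simplified stand-in for the image half of CRI.
type ImageService interface {
	PullImage(ref string) (imageID string, err error)
	ListImages() []string
}

// RuntimeService is a simplified stand-in for the runtime half of CRI.
type RuntimeService interface {
	RunPodSandbox(name string) (sandboxID string, err error)
	CreateContainer(sandboxID, image string) (containerID string, err error)
	StartContainer(containerID string) error
}

// fakeShim is a hypothetical in-memory shim that implements both services.
type fakeShim struct {
	images  []string
	started map[string]bool
	nextID  int
}

func newFakeShim() *fakeShim { return &fakeShim{started: map[string]bool{}} }

// id returns a new identifier such as "sandbox-1" or "container-2".
func (s *fakeShim) id(prefix string) string {
	s.nextID++
	return fmt.Sprintf("%s-%d", prefix, s.nextID)
}

func (s *fakeShim) PullImage(ref string) (string, error) {
	s.images = append(s.images, ref)
	return "img:" + ref, nil
}

func (s *fakeShim) ListImages() []string { return s.images }

func (s *fakeShim) RunPodSandbox(name string) (string, error) { return s.id("sandbox"), nil }

func (s *fakeShim) CreateContainer(sandboxID, image string) (string, error) {
	if sandboxID == "" {
		return "", errors.New("sandbox ID is required")
	}
	id := s.id("container")
	s.started[id] = false
	return id, nil
}

func (s *fakeShim) StartContainer(containerID string) error {
	if _, ok := s.started[containerID]; !ok {
		return errors.New("unknown container " + containerID)
	}
	s.started[containerID] = true
	return nil
}

// startPod is the caller's view: it knows the two interfaces, not the runtime behind them.
func startPod(img ImageService, rt RuntimeService, name, image string) (string, error) {
	if _, err := img.PullImage(image); err != nil {
		return "", err
	}
	sb, err := rt.RunPodSandbox(name)
	if err != nil {
		return "", err
	}
	c, err := rt.CreateContainer(sb, image)
	if err != nil {
		return "", err
	}
	return c, rt.StartContainer(c)
}

func main() {
	shim := newFakeShim()
	c, err := startPod(shim, shim, "demo", "busybox:latest")
	fmt.Println(c, err)
}
```

Swapping in another runtime means providing another implementation of the two interfaces; `startPod` does not change, which is the decoupling that CRI is meant to provide.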

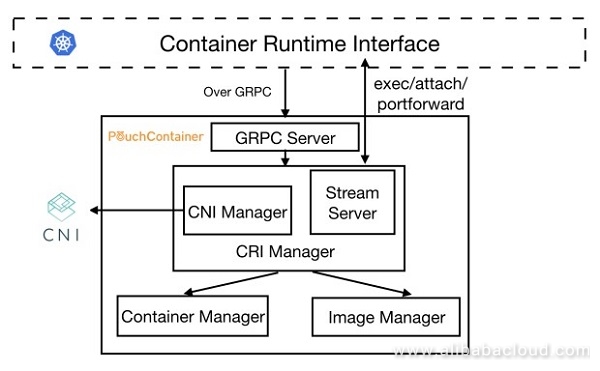

In PouchContainer's architecture, CRI Manager implements all CRI interfaces and acts as the CRI shim of PouchContainer. When Kubelet calls a CRI interface, the gRPC Client of Kubelet sends the request to the gRPC Server. The Server parses the request and calls the corresponding method of CRI Manager to process it.

The following example briefly introduces what each module does. When the request is to create a Pod, CRI Manager first converts the configuration from the CRI format to the format required by the PouchContainer interfaces. It then calls Image Manager to pull the required image, calls Container Manager to create the required container, and calls CNI Manager to configure the Pod network with CNI plugins. Stream Server, in turn, handles interactive CRI requests such as exec/attach/portforward.

Note that CNI Manager and Stream Server are sub-modules of CRI Manager, whereas CRI Manager, Container Manager, and Image Manager are peers that live in the same binary, Pouchd. They can therefore call one another through direct function calls, without the remote-call overhead incurred between Docker shim and Docker. The following sections describe CRI Manager in more detail and explain how its key functions are implemented.
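The Pod creation flow described above can be sketched as plain in-process calls. This is only an illustration under simplifying assumptions: the interface names, `sandboxConfig`, and the `recorder` test double are hypothetical and do not reflect PouchContainer's real types.

```go
package main

import "fmt"

// All types below are hypothetical simplifications, not PouchContainer's real API.
type sandboxConfig struct {
	Name  string
	Image string
}

type imagePuller interface{ Pull(image string) error }

type containerCreator interface {
	Create(name, image string) (id string, err error)
}

type networkSetup interface{ SetUp(containerID string) error }

// criManager holds direct references to its peers: same process, plain function calls.
type criManager struct {
	images     imagePuller
	containers containerCreator
	network    networkSetup
}

// RunPodSandbox pulls the image, creates the container, then configures the network.
func (m *criManager) RunPodSandbox(cfg sandboxConfig) (string, error) {
	if err := m.images.Pull(cfg.Image); err != nil {
		return "", fmt.Errorf("pull image: %w", err)
	}
	id, err := m.containers.Create(cfg.Name, cfg.Image)
	if err != nil {
		return "", fmt.Errorf("create container: %w", err)
	}
	if err := m.network.SetUp(id); err != nil {
		return "", fmt.Errorf("set up network: %w", err)
	}
	return id, nil
}

// recorder is a test double that logs the order in which it is called.
type recorder struct{ calls []string }

func (r *recorder) Pull(image string) error {
	r.calls = append(r.calls, "pull "+image)
	return nil
}

func (r *recorder) Create(name, image string) (string, error) {
	r.calls = append(r.calls, "create "+name)
	return "infra-" + name, nil
}

func (r *recorder) SetUp(id string) error {
	r.calls = append(r.calls, "network "+id)
	return nil
}

func main() {
	r := &recorder{}
	m := &criManager{images: r, containers: r, network: r}
	id, err := m.RunPodSandbox(sandboxConfig{Name: "demo", Image: "pause-amd64:3.0"})
	fmt.Println(id, err, r.calls)
}
```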

In Kubernetes, a Pod is the smallest unit of scheduling. Simply put, a Pod is a group of closely related containers. These containers share certain resources so that they can interact with one another more efficiently. For networking, for example, the containers in a Pod share one IP address and one port space, so they can reach each other through localhost. For storage, a volume defined in a Pod is mounted into each container so that every container can access it.

In fact, all of these features can be implemented as long as a group of containers share Linux Namespaces and mount the same volume. The following walks through the creation of a Pod to show how CRI Manager in PouchContainer implements the Pod model:

1. Kubelet first calls the RunPodSandbox interface of CRI. To implement the interface, CRI Manager creates a special container called the infra container. From the implementation point of view, the infra container is not special: it is an ordinary container created from the pause-amd64:3.0 image by calling Container Manager. From the point of view of the whole Pod, however, it plays a special role. It contributes its Linux Namespaces for the other containers to share, which ties all containers in the group together. It acts as a carrier for the other containers in the Pod and provides the infrastructure they run on. In general, an infra container stands for a Pod.
2. Kubelet then creates the other containers in the Pod by calling CreateContainer and StartContainer in succession for each of them. For CreateContainer, CRI Manager simply converts the container's configuration from the CRI format to the PouchContainer format and passes it to Container Manager for container creation. The only concern is how to add the container to the Linux Namespaces of the infra container mentioned above. This is simple. The container configuration parameters of Container Manager include PidMode, IpcMode, and NetworkMode, which configure the Pid Namespace, Ipc Namespace, and Network Namespace of a container, respectively. Generally speaking, the Namespace configuration of a container has two modes: "None" mode (create an exclusive Namespace for the container) and "Container" mode (add the container to the Namespace of another container). You simply set the three parameters to "Container" mode and point them at the infra container. How the container is actually added to the Namespaces is not CRI Manager's concern. For StartContainer, CRI Manager simply forwards the request: it obtains the container ID from the request and calls the Start interface of Container Manager to start the container.
3. Kubelet calls ListPodSandbox and ListContainers to obtain the running status of the containers on the node. ListPodSandbox shows the status of each infra container, while ListContainers shows the status of all containers other than infra containers. The problem is that, to Container Manager, infra containers and other containers are no different. How, then, does CRI Manager tell them apart? When creating a container, CRI Manager adds a label to the container configuration that indicates the container type. ListPodSandbox and ListContainers can then filter containers by that label value.

In summary, to create a Pod, first create an infra container, then create the other containers in the Pod and add them to the Linux Namespaces of the infra container.
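The two mechanisms in the steps above, joining the infra container's Namespaces and filtering by a type label, can be sketched as follows. The label key and values, the `containerConfig` struct, and the `container:<id>` spelling of "Container" mode are assumptions made for illustration; the article only states that such a label and such a mode exist.

```go
package main

import "fmt"

// Hypothetical label key and values; the article only says that a type label is added.
const (
	typeLabel     = "example.io/container-type"
	typeSandbox   = "sandbox"
	typeContainer = "container"
)

// containerConfig is a simplified stand-in for Container Manager's configuration.
type containerConfig struct {
	ID          string
	PidMode     string
	IpcMode     string
	NetworkMode string
	Labels      map[string]string
}

// joinInfra returns a config whose three Namespaces point at the infra container.
// The "container:<id>" spelling is an assumption borrowed from Docker-style configs.
func joinInfra(id, infraID string) containerConfig {
	mode := "container:" + infraID
	return containerConfig{
		ID:          id,
		PidMode:     mode,
		IpcMode:     mode,
		NetworkMode: mode,
		Labels:      map[string]string{typeLabel: typeContainer},
	}
}

// filterByType keeps the containers whose type label equals want.
func filterByType(all []containerConfig, want string) []containerConfig {
	var out []containerConfig
	for _, c := range all {
		if c.Labels[typeLabel] == want {
			out = append(out, c)
		}
	}
	return out
}

func main() {
	infra := containerConfig{
		ID:          "infra-1",
		NetworkMode: "none",
		Labels:      map[string]string{typeLabel: typeSandbox},
	}
	all := []containerConfig{infra, joinInfra("app-1", infra.ID), joinInfra("app-2", infra.ID)}

	// A ListPodSandbox-style view and a ListContainers-style view of the same store.
	fmt.Println(len(filterByType(all, typeSandbox)), len(filterByType(all, typeContainer)))
}
```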

Because all containers in a Pod share one Network Namespace, only the Network Namespace of the infra container needs to be configured, and this is done when the infra container is created.

In the Kubernetes ecosystem, container networking is implemented through CNI. Like CRI, CNI is a set of standard interfaces, and any network solution that implements them can be plugged into Kubernetes seamlessly. CNI Manager in CRI Manager is a thin wrapper around CNI. During initialization, it loads the configuration files in the /etc/cni/net.d directory, such as the one created below:

    $ cat >/etc/cni/net.d/10-mynet.conflist <<EOF
    {
      "cniVersion": "0.3.0",
      "name": "mynet",
      "plugins": [
        {
          "type": "bridge",
          "bridge": "cni0",
          "isGateway": true,
          "ipMasq": true,
          "ipam": {
            "type": "host-local",
            "subnet": "10.22.0.0/16",
            "routes": [
              { "dst": "0.0.0.0/0" }
            ]
          }
        }
      ]
    }
    EOF

The file specifies CNI plugins that the Pod network may use, for example, bridge as shown above, and some network configuration information, for example, the subnet scope of the Pod on the node and route configuration.
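As a rough illustration of what loading such a file involves, the following Go sketch decodes the example configuration with the standard `encoding/json` package. It is not how CNI Manager loads configuration; the `netConfList` and `plugin` structs are hypothetical and mirror only a few of the fields in the example.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// netConfList mirrors only the fields of the example file that this sketch reads.
type netConfList struct {
	CNIVersion string   `json:"cniVersion"`
	Name       string   `json:"name"`
	Plugins    []plugin `json:"plugins"`
}

type plugin struct {
	Type   string `json:"type"`
	Bridge string `json:"bridge"`
	IPAM   struct {
		Type   string `json:"type"`
		Subnet string `json:"subnet"`
	} `json:"ipam"`
}

// example is the content of the 10-mynet.conflist file from the article.
const example = `{
  "cniVersion": "0.3.0",
  "name": "mynet",
  "plugins": [
    {
      "type": "bridge",
      "bridge": "cni0",
      "isGateway": true,
      "ipMasq": true,
      "ipam": {
        "type": "host-local",
        "subnet": "10.22.0.0/16",
        "routes": [{ "dst": "0.0.0.0/0" }]
      }
    }
  ]
}`

// parseConfList decodes a .conflist document and rejects one that lists no plugins.
func parseConfList(data []byte) (*netConfList, error) {
	var c netConfList
	if err := json.Unmarshal(data, &c); err != nil {
		return nil, err
	}
	if len(c.Plugins) == 0 {
		return nil, fmt.Errorf("network %q lists no plugins", c.Name)
	}
	return &c, nil
}

func main() {
	c, err := parseConfList([]byte(example))
	if err != nil {
		panic(err)
	}
	fmt.Println(c.Name, c.Plugins[0].Type, c.Plugins[0].IPAM.Subnet)
}
```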

The following describes how to add a Pod to the CNI network:

1. When calling Container Manager to create the infra container, set NetworkMode to "None", indicating that an exclusive Network Namespace is created for the infra container without any configuration.
2. Obtain the Network Namespace path of the infra container from its PID: /proc/{pid}/ns/net.
3. Call the SetUpPodNetwork method of CNI Manager. The core parameter is the Network Namespace path obtained in step 2. The method calls the CNI plugins specified during the initialization of CNI Manager, for example, bridge as shown above, to configure the Network Namespace given in the parameter. This includes creating network devices, performing network configuration, and adding the Network Namespace to the CNI network of the plugins.

For most Pods, the network is configured by the foregoing steps, and most of the work is done by CNI and the CNI plugins rather than by CRI Manager. For some special Pods, NetworkMode is set to "Host", that is, the Pod shares a Network Namespace with the host. In this case, when calling Container Manager to create the infra container, you simply set NetworkMode to "Host" and skip the CNI Manager configuration.

For the other containers in a Pod, it makes no difference whether the Pod uses "Host" mode or has its own Network Namespace. When calling Container Manager to create such a container, you simply set NetworkMode to "Container" so that the container joins the Network Namespace of the infra container.
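The decisions described in this section can be condensed into a few small functions. This is a sketch under stated assumptions: the lowercase mode strings, the `container:<id>` form, and the `podNetworker` interface are illustrative stand-ins, with only the `SetUpPodNetwork` name and the `/proc/{pid}/ns/net` path taken from the text.

```go
package main

import "fmt"

// infraNetworkMode picks the mode for the infra container.
// The mode strings are illustrative; the article names the modes "None" and "Host".
func infraNetworkMode(hostNetwork bool) string {
	if hostNetwork {
		return "host"
	}
	return "none"
}

// memberNetworkMode is the same for every other container in the Pod.
func memberNetworkMode(infraID string) string { return "container:" + infraID }

// netnsPath builds the Network Namespace path from the infra container's PID.
func netnsPath(pid int) string { return fmt.Sprintf("/proc/%d/ns/net", pid) }

// podNetworker is a hypothetical stand-in for CNI Manager.
type podNetworker interface {
	SetUpPodNetwork(netnsPath string) error
}

// setUpInfraNetwork skips CNI entirely for Pods that share the host's Network Namespace.
func setUpInfraNetwork(cni podNetworker, hostNetwork bool, infraPID int) error {
	if hostNetwork {
		return nil
	}
	return cni.SetUpPodNetwork(netnsPath(infraPID))
}

// fakeCNI records the paths it was asked to configure.
type fakeCNI struct{ paths []string }

func (f *fakeCNI) SetUpPodNetwork(p string) error {
	f.paths = append(f.paths, p)
	return nil
}

func main() {
	cni := &fakeCNI{}
	_ = setUpInfraNetwork(cni, false, 4242) // ordinary Pod: CNI configures the namespace
	_ = setUpInfraNetwork(cni, true, 4243)  // host-network Pod: CNI is skipped
	fmt.Println(infraNetworkMode(false), memberNetworkMode("infra-1"), cni.paths)
}
```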

Kubernetes provides functions such as kubectl exec/attach/port-forward that let a user interact directly with a specific Pod or container, as shown below:

    master $ kubectl exec -it shell-demo -- /bin/bash
    root@shell-demo:/# ls
    bin   dev  home  lib64  mnt  proc  run   srv  tmp  var
    boot  etc  lib   media  opt  root  sbin  sys  usr
    root@shell-demo:/#

Running exec on a Pod is much like logging in to the container over SSH. The following execution flow of kubectl exec shows how Kubernetes processes IO requests and what role CRI Manager plays.

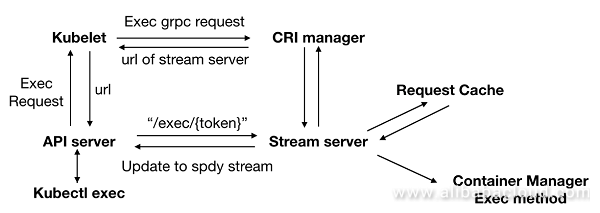

As shown in the figure above, the steps to run a kubectl exec command include:

1. The kubectl exec command runs the exec command on a container in the Kubernetes cluster and forwards the resulting IO streams to the user. The request is first forwarded layer by layer to the Kubelet of the node where the container is located, and Kubelet calls the Exec interface in CRI based on the configuration. The request carries the following configuration parameters:

       type ExecRequest struct {
           ContainerId string   // The target container in which the exec command is executed.
           Cmd         []string // The specific exec command to execute.
           Tty         bool     // Whether to run the exec command in a TTY.
           Stdin       bool     // Whether a Stdin stream is included.
           Stdout      bool     // Whether a Stdout stream is included.
           Stderr      bool     // Whether a Stderr stream is included.
       }

2. The Exec method of CRI Manager does not directly call Container Manager to run the exec command on the target container. Instead, it calls the GetExec method of the built-in Stream Server.
3. The GetExec method of Stream Server saves the exec request to the Request Cache shown in the figure above and returns a token. With the token, the exec request can later be retrieved from Request Cache. Finally, the token is written into a URL, which is returned layer by layer to ApiServer as the result of the call.
4. The CreateExec and startExec methods of Container Manager are then called in succession to run the exec command on the target container and forward the IO streams to the corresponding streams.

In fact, before the introduction of CRI, Kubernetes processed IO requests in the way one would expect: Kubelet ran the exec command directly on the target container and forwarded the IO streams back to ApiServer. This, however, overloaded Kubelet because every IO stream passed through it, which is clearly unnecessary. The CRI-based processing seems complicated at first, but it effectively relieves the pressure on Kubelet and makes IO request processing more efficient.
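The token mechanism can be sketched as a small in-memory cache. The following is a hypothetical simplification, not Stream Server's actual code: the `requestCache` type, the `/exec/` URL path, the base URL, and the choice to make a token single-use are all assumptions made for illustration.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"fmt"
	"sync"
)

// execRequest carries the ExecRequest fields that this sketch uses.
type execRequest struct {
	ContainerID string
	Cmd         []string
	Tty         bool
}

// requestCache is a hypothetical, simplified Request Cache keyed by token.
type requestCache struct {
	mu      sync.Mutex
	pending map[string]execRequest
}

func newRequestCache() *requestCache {
	return &requestCache{pending: map[string]execRequest{}}
}

// Insert stores the request and returns a random token that identifies it.
func (c *requestCache) Insert(req execRequest) (string, error) {
	buf := make([]byte, 8)
	if _, err := rand.Read(buf); err != nil {
		return "", err
	}
	token := hex.EncodeToString(buf)
	c.mu.Lock()
	defer c.mu.Unlock()
	c.pending[token] = req
	return token, nil
}

// Consume returns the request for token and removes it, so a token works only once.
// Single use is a choice made in this sketch, not something the article specifies.
func (c *requestCache) Consume(token string) (execRequest, bool) {
	c.mu.Lock()
	defer c.mu.Unlock()
	req, ok := c.pending[token]
	delete(c.pending, token)
	return req, ok
}

// getExec mimics the GetExec step: cache the request, return a URL carrying the token.
// The base URL and the "/exec/" path are assumptions made for illustration.
func getExec(c *requestCache, baseURL string, req execRequest) (string, error) {
	token, err := c.Insert(req)
	if err != nil {
		return "", err
	}
	return baseURL + "/exec/" + token, nil
}

func main() {
	cache := newRequestCache()
	req := execRequest{ContainerID: "c1", Cmd: []string{"/bin/bash"}, Tty: true}
	url, err := getExec(cache, "http://node.example:10010", req)
	fmt.Println(url, err)
}
```

Because only a short URL travels back through Kubelet, the streams themselves never need to pass through it, which matches the motivation given above.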

This article began with the reasons for introducing CRI, briefly described the CRI architecture, and focused on the implementation of PouchContainer's core functional modules. CRI makes it easier to add PouchContainer to the Kubernetes ecosystem. The unique features of PouchContainer will also diversify the Kubernetes ecosystem.

The design and implementation of PouchContainer CRI is a joint research project of the Alibaba-Zhejiang University Frontier Technology Joint Research Center. It aims to help PouchContainer become a mature container runtime and to cooperate with CNCF on the ecosystem. The SEL lab of Zhejiang University implemented PouchContainer CRI, which is expected to create significant value in Alibaba and in other data centers that use PouchContainer.
