Before we look into how Cainiao's elastic scheduling operates, let's look at why Cainiao's overall resource use was not cost-effective.

The number of containers required by a Cainiao application that runs and serves traffic normally is determined through single-server performance stress testing and experience-based service traffic estimation. However, this method is strongly affected by the subjective judgment of the estimator, who usually builds in a large amount of redundancy when estimating service traffic. In addition, scaling operations for Cainiao application clusters are seldom performed, so the estimator sizes a cluster for the daily peak traffic and for the expected service growth over an upcoming period, typically a month. Because peak traffic periods account for only a small portion of a day, a lot of resources are wasted during non-peak periods.

Judging from the past performance of application clusters with elastic scheduling enabled, inaccurate capacity estimation is common, and the deviation from actual demand is large. Elastic scheduling is therefore an important solution to the problems above, because it dynamically evaluates the operating status of the system online and makes scaling decisions accordingly.

In other words, Cainiao quickly realized that elastic scheduling could make its systems run much more efficiently. Knowing this, Cainiao quickly built the relevant architecture, which we will get to in later articles in this series.

The elastic scheduling system of Ark at Cainiao works well because it enables application developers and O&M personnel to shift their focus from a specific objective, such as the number of containers, to a more abstract objective, such as a reasonable resource usage index and a reasonable service response time. This in turn reduces the impact of the subjective factors that are inevitable under manual estimation. Furthermore, thanks to the reliable and efficient scaling capability of Cainiao's Ark platform, an application cluster can be scaled in just minutes, which allows for truly on-demand use of resources.

Although elastic scheduling produces great results for Cainiao, it is not necessarily suitable for all companies and organizations. So why does elastic scheduling work so well for Cainiao? We think it comes down to the following reasons:

The business of Cainiao requires a system that coordinates the transfer of information among many merchants, Cainiao partners, and customers. Moreover, the transfer of logistics order information involves a lengthy process and multiple interactions. This determines whether information transfer can run normally when the volume of information flow is greater than the sequence of actual operations. Therefore, there is only a slim chance that the elastic scheduling system of Ark will have to deal with traffic peaks generated by flash sales on shopping guide websites.

Next, after Cainiao fully implemented containerization and connected to a hybrid cloud architecture system in early 2017, it completed the transformation of resource management from "machine-oriented" to "application-oriented." As a result, application deployment, scaling, and other core O&M processes were greatly simplified and improved. Ark, as a container resource control platform, proved its stability after being tested by major promotion campaigns such as Alibaba's 6.18 Mid-year Shopping Festival and the Double 11 Shopping Festival in 2017. This laid a solid technical foundation for Cainiao to implement elastic scheduling.

Another reason is that most core applications of Cainiao are stateless online computing applications, and the gap between the service pressure peak and valley is significant every day. This provides sufficient scenarios for Cainiao to implement elastic scheduling.

The last reason is that elastic scheduling is not an independent project. It requires the assistance of several basic services and relies on a centralized and standardized system environment. The applications of Cainiao comply with the rules and standards stipulated by Alibaba Group. The elastic scheduling system can directly read monitoring and O&M data from tools such as EagleEye and Alimetrics, and the technology stacks used by the core applications have largely converged. This provides a sound environment for Cainiao to implement elastic scheduling.

Based on the preceding points, you can see why Cainiao was able to implement elastic scheduling relatively quickly and cost-efficiently. Of course, other businesses and organizations that meet similar conditions can also benefit greatly from elastic scheduling.

In this article, we're going to see how Cainiao uses resources efficiently and cost-effectively thanks to its elastic scheduling system. Then, we will briefly discuss what makes Cainiao's systems special and how they differ from other logistics systems.

This article describes how elastic scheduling works at Cainiao, the mode in which it is applied, and its advantages. It also begins a detailed look at the Ark architecture behind Cainiao's elastic scheduling system.

To date, Cainiao has mainly implemented elastic scheduling for stateless application clusters. Under the current system, a cluster has more than fifteen containers before it connects to the elastic scheduling system.

On any single day, the average CPU usage of an application cluster connected to this system is over twenty percent. This figure is the average of the cluster's CPU usage during different periods of the day, weighted by the number of containers in the cluster during each period. The total number of containers scaled every day is over three thousand.
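As a rough illustration of how such a daily figure could be computed, the sketch below takes one sample per period of the day and weights each period's CPU usage by its container count. The function name, the sample layout, and the numbers are assumptions made for this example and are not taken from Cainiao's system.

```python
from typing import Sequence, Tuple


def weighted_daily_cpu_usage(samples: Sequence[Tuple[float, int]]) -> float:
    """Return the container-weighted average CPU usage for one day.

    Each sample is (average CPU usage in percent, container count)
    for one period of the day. This layout is a hypothetical choice.
    """
    total_containers = sum(count for _, count in samples)
    if total_containers == 0:
        raise ValueError("no containers were running in any period")
    return sum(cpu * count for cpu, count in samples) / total_containers


# Hypothetical day split into three periods: night, daytime peak, evening.
periods = [(10.0, 20), (35.0, 40), (15.0, 20)]
daily_usage = weighted_daily_cpu_usage(periods)
print(f"daily weighted CPU usage: {daily_usage:.2f}%")
```

Weighting by container count keeps a lightly loaded period with few containers from dragging the daily figure down as much as it would in a plain average over periods.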

During the Double 11 Shopping Festival in 2017, elastic scheduling, used as an auxiliary tool at that time, conducted scaling operations for some application clusters from midnight, right as the logistics rush began. As a result, the ratio of physical CPU cores consumed by Cainiao to the number of parcels that Cainiao handled was significantly reduced.

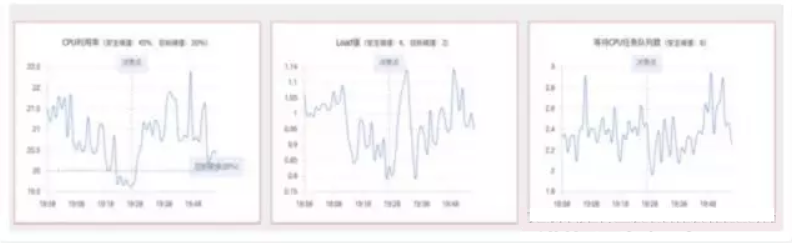

Now consider the following comparison. The first graph shows the change curves of the CPU usage and the number of containers of an application cluster over one day. The second graph shows the change curve of the traffic of a core service in this application cluster during the same period.

In this article, we're going to continue our discussion of the Ark architecture and Cainiao's elastic scheduling system. More specifically, we will cover the policies involved in the architecture and the choice between queries per second and response time. We will also look at threshold comparison and multi-service voting, downstream analysis, and several other topics that help in understanding the overall system architecture.

To continue our discussion of the Ark architecture behind Cainiao's elastic scheduling system, we will first take a look at some of the policies involved in this architecture.

Decision-making Policies

The elastic scheduling system of Ark supports the quick horizontal extension of decision-making policies. Currently, multiple decision-making policies are included, and some policies are being tested or verified. The following introduces several of the earliest core policies that went online.

Resource Security Policies

Resource security policies focus on the usage of system resources. Based on the past O&M experience and the business dynamics of Cainiao, our resource security policies focus on three system parameters, which are CPU, LOAD1, and Process Running Queue.

To eliminate the impact of glitches and uneven traffic, each parameter is averaged across all servers in an application cluster over a recent time window. When the average value of one or more of these parameters violates the upper threshold, a scale-out request is initiated. The thresholds support custom configuration. If multiple violations exist, the decision-making result of the current policy is the one with the largest number of containers to be scaled out. The method of calculating the quantity will be described later in this article.
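The check described above can be sketched roughly as follows. This is a minimal sketch rather than Ark's implementation: the metric keys, the threshold values, and especially `proportional_estimate` are assumptions made for illustration. The proportional rule is only a stand-in for the quantity calculation, which is not covered in this part of the article.

```python
import math
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical upper thresholds; the real values are configurable per application.
UPPER_THRESHOLDS: Dict[str, float] = {"cpu": 60.0, "load1": 4.0, "run_queue": 8.0}


def proportional_estimate(average: float, threshold: float, current: int) -> int:
    """Placeholder quantity rule (an assumption, not the article's method):
    add enough containers to bring the average back to the threshold."""
    return max(0, math.ceil(current * average / threshold) - current)


def resource_security_decision(
    window: Dict[str, List[float]],
    thresholds: Dict[str, float],
    current_containers: int,
    estimate: Callable[[float, float, int], int] = proportional_estimate,
) -> int:
    """Return the number of containers to scale out (0 if nothing is violated).

    `window[metric]` holds the readings from all servers in the cluster over
    the recent time window, so a single spike is smoothed out by the mean.
    """
    averages = {metric: mean(window[metric]) for metric in thresholds}
    requests = [
        estimate(averages[metric], limit, current_containers)
        for metric, limit in thresholds.items()
        if averages[metric] > limit
    ]
    # With several violations, the largest request wins.
    return max(requests, default=0)


# CPU and LOAD1 both violate their thresholds; CPU asks for more containers.
busy = {"cpu": [70, 90, 80, 80], "load1": [3.0, 5.0, 4.5, 5.5], "run_queue": [2, 3, 2, 3]}
# One server spikes briefly, but the cluster-wide window average stays below the threshold.
glitch = {"cpu": [95, 40, 40, 40], "load1": [1.0, 1.2, 0.9, 1.1], "run_queue": [1, 1, 2, 1]}

busy_scale_out = resource_security_decision(busy, UPPER_THRESHOLDS, 20)
glitch_scale_out = resource_security_decision(glitch, UPPER_THRESHOLDS, 20)
```

Passing the quantity rule in as a function keeps the threshold check separate from the sizing logic, so the placeholder can be swapped for whatever calculation the platform actually uses.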

The above graphs show resource usage at the time when a resource security policy obtains the scale-out result.

Resource Optimization Policies

Resource optimization policies also focus on the usage of system resources, but they are intended to reclaim resources when the system is idle. These policies focus on the same three system parameters. When all three parameters are below the lower threshold, a scale-in request is initiated. Note that due to the existence of the second layer of decision-making, when other policies require scale-out, scale-in requests generated by resource optimization policies are suppressed.
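A minimal sketch of this rule, assuming the three parameters have already been averaged over the cluster, might look like the following. The metric keys, threshold values, and function names are hypothetical.

```python
from typing import Dict

# Hypothetical lower thresholds for the three parameters.
LOWER_THRESHOLDS: Dict[str, float] = {"cpu": 15.0, "load1": 1.0, "run_queue": 2.0}


def wants_scale_in(averages: Dict[str, float], lower: Dict[str, float]) -> bool:
    """Scale-in is requested only when every parameter is below its lower threshold."""
    return all(averages[metric] < limit for metric, limit in lower.items())


def effective_scale_in(scale_in_requested: bool, scale_out_requested_elsewhere: bool) -> bool:
    """Second-layer rule: a scale-out request from any other policy suppresses scale-in."""
    return scale_in_requested and not scale_out_requested_elsewhere


idle = {"cpu": 8.0, "load1": 0.4, "run_queue": 1.0}
partly_idle = {"cpu": 8.0, "load1": 1.6, "run_queue": 1.0}

idle_request = wants_scale_in(idle, LOWER_THRESHOLDS)                # all three are low
partly_idle_request = wants_scale_in(partly_idle, LOWER_THRESHOLDS)  # LOAD1 is not low
suppressed = effective_scale_in(idle_request, scale_out_requested_elsewhere=True)
```

Note the asymmetry with the resource security policies: one violated parameter is enough to scale out, while scaling in requires all three parameters to agree.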

The above graphs show the resource usage at the time when a resource optimization policy obtains the scale-in result.

Time Policies

The current elastic decision-making model is a posteriori in nature. That is, a scale-out request is initiated only after a threshold violation occurs. For some services, such as regular computing tasks, however, traffic may surge on a regular basis.

Therefore, time policies are configured automatically based on the historical traffic changes of an application cluster. When the traffic changes are determined to be periodic and so large that a posteriori elasticity cannot keep up with them in time, a time policy configuration is generated for this application cluster. That is, the number of containers in this application cluster is kept no lower than a specified number during a specified period of time every day.

Due to the existence of the second layer of decision-making, when other policies determine that the number of containers required in this period of time is greater than the specified number of containers, the larger number prevails. In such a period of time, decision-making results for scale-out are continuously generated based on time policies, and therefore decision-making results for scale-in are continuously suppressed.

While scale-out decisions are being generated continuously, if the current number of containers already equals the target number of containers, a scale-out decision is still generated. In this case, the number of containers to be scaled out is 0.
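The behavior of time policies under the second layer of decision-making can be sketched as follows. This is an illustrative sketch under stated assumptions: `TimePolicy`, `time_policy_decision`, and `combine` are hypothetical names, the time window is assumed not to cross midnight, and the second layer is reduced to "the largest scale-out wins, and any scale-out decision suppresses scale-in".

```python
from dataclasses import dataclass
from datetime import time
from typing import List, Optional


@dataclass(frozen=True)
class TimePolicy:
    start: time          # beginning of the daily window
    end: time            # end of the daily window (exclusive)
    min_containers: int  # container floor inside the window

    def active(self, now: time) -> bool:
        # Assumes the window does not cross midnight.
        return self.start <= now < self.end


def time_policy_decision(policy: TimePolicy, now: time, current: int) -> Optional[int]:
    """Return a scale-out count while the window is active, else None.

    Inside the window a decision is always produced, even when it is 0,
    so that scale-in stays suppressed.
    """
    if not policy.active(now):
        return None
    return max(0, policy.min_containers - current)


def combine(current: int, scale_out: List[Optional[int]], scale_in: int) -> int:
    """Simplified second layer: return the target container count."""
    decisions = [count for count in scale_out if count is not None]
    if decisions:
        return current + max(decisions)  # larger request prevails
    return current - scale_in


policy = TimePolicy(start=time(9, 0), end=time(11, 0), min_containers=30)

# Inside the window with the floor already met: a scale-out of 0 blocks scale-in.
held = combine(30, [time_policy_decision(policy, time(9, 30), 30)], scale_in=5)
# Another policy asks for 4 more containers, which exceeds the time policy's 0.
grown = combine(30, [time_policy_decision(policy, time(9, 30), 30), 4], scale_in=5)
# Outside the window the time policy is silent, so the scale-in goes through.
shrunk = combine(30, [time_policy_decision(policy, time(12, 0), 30)], scale_in=5)
```

Returning `None` outside the window, rather than 0, is what distinguishes "no opinion" from "hold the current size" in this sketch.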

Service Security Policies

Service security policies are the most complex of all the policies. Currently, the supported service types are message queue consumers, Remote Procedure Call (RPC) services, and Hypertext Transfer Protocol (HTTP) services. At least half of the scale-out tasks each day are initiated by service security policies.

