How can we restore an accidentally deleted database in GitLab? How can we implement database backup, recovery, disaster tolerance, and high availability? If you work in the database industry, you have probably been paying closer attention to these questions recently. Late last year, incidents such as the Hearthstone data loss and the MongoDB hacker extortion made the news. Recently, another incident stole the limelight: data files in GitLab databases were accidentally deleted by an rm -rf command.

Databases are vital to any enterprise. SQL injection through web applications and accidental deletion of data not only impair the business but may also lead to user information leaks. Many companies realize the importance of database security and employ specialized database administrators (DBAs) for data maintenance. DBAs are like the border guards of the IT world, and guarding data is one of their most important responsibilities. However, despite the many effective measures DBAs take, accidents do happen. Too many operation interfaces and too frequent switching back and forth between services can increase the rate of human error.

How can we ensure the safety of data files even after an accidental deletion?

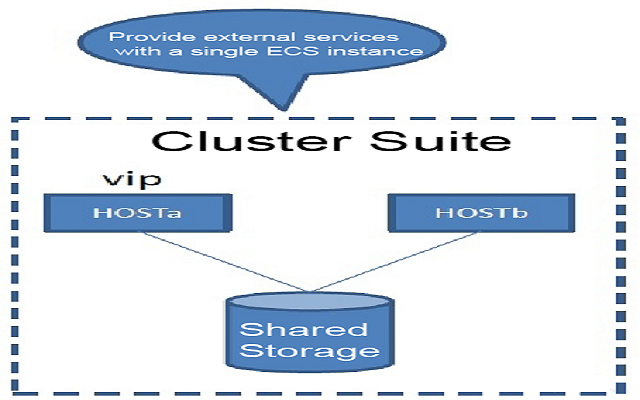

In many traditional enterprises, the common means of implementing HA is to mount shared storage on multiple hosts. Only one copy of the data is in place, on the assumption that the availability of the storage is much higher than that of the servers. The advantage of this HA approach lies in its simple structure, which makes data consistency easier to achieve.

The weakness of this HA approach is obvious: the storage is a single point of failure. Of course, you can use multiple storage devices or physical mirroring to address it. However, because there is only one copy of the data, or several copies kept identical through mirroring, data deletion is infectious: a deletion reaches either no copy or every copy. This makes it hard to protect data against an rm -rf operation like the one at GitLab.

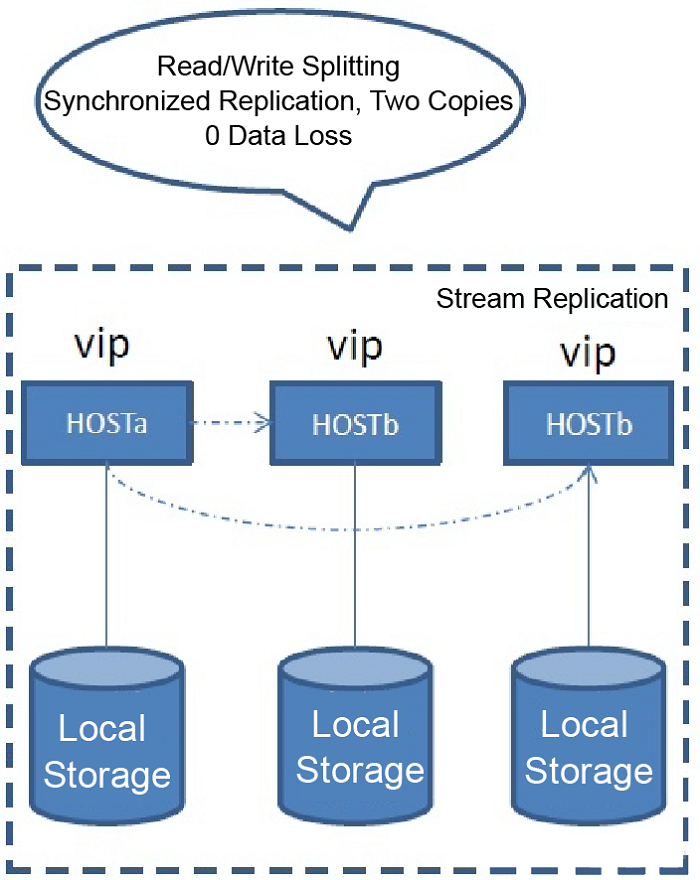

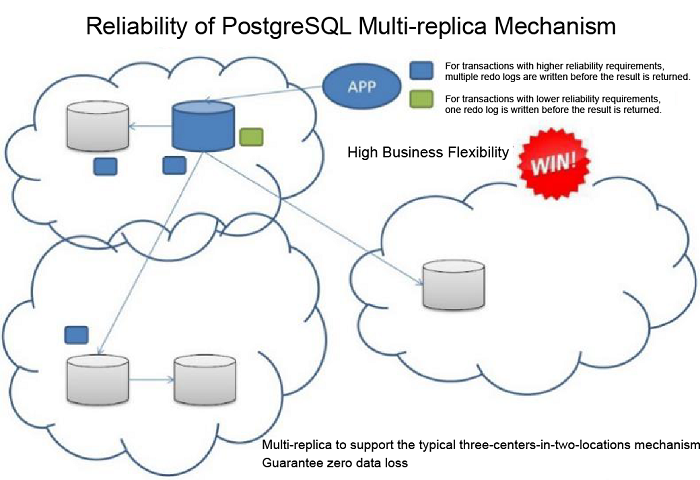

Beginning with v9.1, PostgreSQL supports streaming replication in a dual-replica synchronous mode and a multi-replica asynchronous mode. v9.6 adds a multi-replica synchronous mode.

With this approach, even if the data files in the master database are deleted by an rm -rf operation, the deletion is not infectious. Synchronization is based on REDO, so an operating system command run on the master is not replayed on the replicas, and the damage from an rm -rf misoperation stays under control.

Note also that remote backup and remote disaster tolerance nodes must be in place to withstand the hidden fault risks of a single data room.
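As a rough illustration of the multi-replica synchronous mode, the sketch below renders a value for the `synchronous_standby_names` setting in the syntax that PostgreSQL 9.6 accepts (`num_sync (name, ...)`). The helper name and the standby names are hypothetical; real names must match the `application_name` each standby connects with.

```python
def sync_standby_names(num_sync, standbys):
    """Render a value for synchronous_standby_names (PostgreSQL 9.6 syntax).

    The result asks the master to wait for `num_sync` of the listed
    standbys, taken in list order of priority, before a commit returns.
    """
    if not 1 <= num_sync <= len(standbys):
        raise ValueError("num_sync must be between 1 and the number of standbys")
    return "{} ({})".format(num_sync, ", ".join(standbys))


# Hypothetical standby names, for illustration only.
setting = sync_standby_names(2, ["standby_a", "standby_b", "standby_c"])
print("synchronous_standby_names = '{}'".format(setting))
```

The printed line is the kind of entry that would go into postgresql.conf on the master. Later PostgreSQL versions extend this syntax with `FIRST` and `ANY` keywords, which this sketch does not cover.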

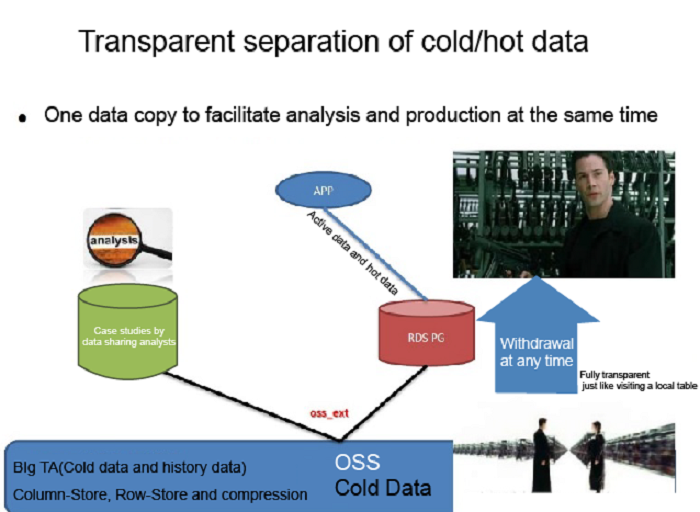

You may choose to move history data that will no longer change to OSS, the external object storage service provided by Alibaba Cloud. This saves database space and also saves the backup resources otherwise spent on this part of the data. (OSS itself implements a multi-replica mechanism. However, as an administrator, it is best to keep another copy of the data in a remote location or on another platform.)

Correct backup methods

1.Logical backup

A logical backup is consistent at the database level. It can only be restored to the single point in time at which it was taken, and it supports neither incremental backup nor incremental restoration.

You should also note that different database versions often have different catalogs. This is why we recommend that you use a pg_dump client of the same version as the database. If you overlook this, the logical backup may raise exceptions or even fail.
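The version check described above can be sketched as follows. The helper names are hypothetical; the sketch assumes you have already captured the output of `pg_dump --version` and the server's `SHOW server_version`, and it only compares major versions (two numbers before PostgreSQL 10, one number from 10 onward).

```python
import re


def major_version(version_text):
    """Extract the major version from text such as 'pg_dump (PostgreSQL) 9.6.1'.

    Before PostgreSQL 10 the major version has two parts (for example 9.6);
    from 10 onward it is the first number only (for example 12).
    """
    match = re.search(r"(\d+)(?:\.(\d+))?", version_text)
    if match is None:
        raise ValueError("no version number found in: " + version_text)
    first, second = match.group(1), match.group(2)
    if int(first) >= 10 or second is None:
        return first
    return first + "." + second


def same_major(client_text, server_text):
    """Return True when the pg_dump client and the server share a major version."""
    return major_version(client_text) == major_version(server_text)
```

A backup script could call `same_major` before starting pg_dump and refuse to continue on a mismatch, rather than discovering the problem at restore time.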

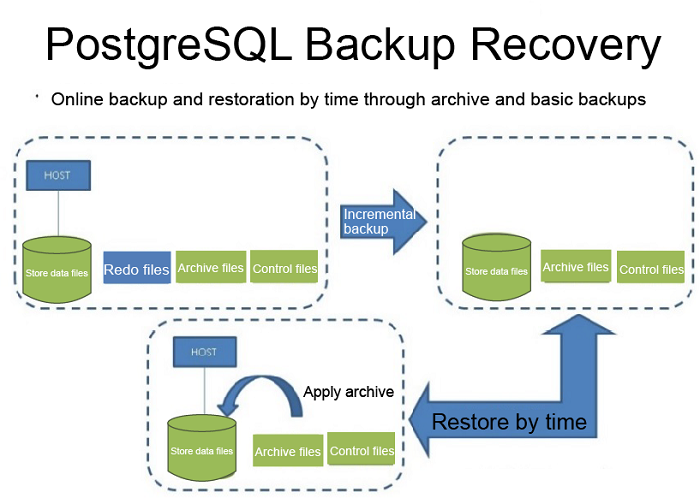

2.Online full + archive backups

This is the most common backup mode and can be done online. You can restore the data to any point in time (at the transaction granularity).

One thing to watch for when you use pg_basebackup: the backup fails if the destination directory already exists and is not empty.

The pg_basebackup manual states this clearly, as quoted below. The restriction keeps each backup stable and free from interference by existing files, since no backup tool designer can know whether the files in a non-empty directory would conflict with the files being backed up. It pays to read the manual often.

-D directory

--pgdata=directory

Directory to write the output to. pg_basebackup will create the directory and any parent directories if necessary. The directory may already exist, but it is an error if the directory already exists and is not empty. When the backup is in tar mode, and the directory is specified as - (dash), the tar file will be written to stdout. This option is required.
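A backup script can check the target directory itself before calling pg_basebackup, so that the failure is reported early and clearly. The following is a minimal sketch under that assumption; the function names, host, and user are hypothetical, and the command is only built, not executed.

```python
import os


def check_backup_target(path):
    """Return `path` if it is usable as a pg_basebackup target, else raise.

    A usable target either does not exist yet or is an empty directory.
    """
    if os.path.exists(path):
        if not os.path.isdir(path):
            raise ValueError(path + " exists and is not a directory")
        if os.listdir(path):
            raise ValueError(path + " exists and is not empty")
    return path


def basebackup_command(target_dir, host, user):
    """Build (but do not run) a pg_basebackup command line."""
    return [
        "pg_basebackup",
        "-h", host,
        "-U", user,
        "-D", check_backup_target(target_dir),
        "-X", "stream",  # stream the WAL generated while the backup runs
        "-P",            # report progress
    ]
```

The resulting list could be passed to `subprocess.run`. Writing each backup into a fresh, timestamped directory is one simple way to satisfy the empty-directory rule.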

3.Block-level increment + archive

The header of each data block has an LSN tag, so PostgreSQL supports block-level increments of data files to only back up the data blocks with changes since the last backup. It also supports restoration to any point in time (at the transaction granularity). Compared to the full + archive backups, the block-level increment + archive mode saves more space.
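The idea behind LSN-based increments can be sketched in a few lines. This is an illustration of the principle only, not the implementation of any particular backup tool: it assumes the header LSN of each block has already been read and is given in PostgreSQL's `X/Y` hexadecimal text form, and the LSN values are made up.

```python
def parse_lsn(text):
    """Convert an LSN in PostgreSQL's 'X/Y' hexadecimal text form to an integer."""
    high, low = text.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def changed_blocks(block_lsns, last_backup_lsn):
    """Return the block numbers whose header LSN is newer than the last backup.

    block_lsns maps a block number to the LSN text read from that block's header.
    """
    threshold = parse_lsn(last_backup_lsn)
    return sorted(
        block for block, lsn in block_lsns.items() if parse_lsn(lsn) > threshold
    )


# Made-up LSN values, for illustration only.
blocks = {0: "0/1000028", 1: "0/3000060", 2: "0/2FFFFFF", 3: "1/0"}
print(changed_blocks(blocks, "0/3000000"))  # prints [1, 3]
```

Only blocks 1 and 3 were modified after the previous backup's LSN, so only those two would be copied into the increment.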

4.File system and logical volume images

In addition to the database-supported block-level incremental backup, you have another incremental backup option if you are using a file system that supports snapshots or a storage service managed through logical volumes. First execute pg_start_backup('') to put the database into online backup mode, then take the snapshot, and finally execute pg_stop_backup().
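The ordering of these three steps matters, so it is worth encoding in the backup script. Below is a minimal sketch: `run_sql` and `take_snapshot` are hypothetical callables supplied by the caller (for example, a wrapper around a database connection and a wrapper around the storage system's snapshot command), and the sketch assumes the exclusive backup mode implied by the single-argument pg_start_backup call.

```python
def snapshot_backup(run_sql, take_snapshot, label="snapshot"):
    """Take a storage snapshot between pg_start_backup and pg_stop_backup.

    run_sql(sql, params) executes a statement on the database.
    take_snapshot() triggers the file system or volume snapshot and
    returns an identifier for it. Both are assumptions of this sketch.
    """
    # Note: PostgreSQL 15 renamed these functions to
    # pg_backup_start / pg_backup_stop.
    run_sql("SELECT pg_start_backup(%s)", (label,))
    try:
        return take_snapshot()
    finally:
        # Always leave backup mode, even if the snapshot fails.
        run_sql("SELECT pg_stop_backup()", ())
```

Putting pg_stop_backup() in the `finally` clause means a failed snapshot does not leave the database stuck in backup mode.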

5.Alibaba Cloud OSS

You can store history data that will no longer change in the OSS service provided by Alibaba Cloud to save database space. In addition, OSS itself implements a multi-replica mechanism for storage.

If you store backup files in the same data room as the database, you risk losing data if that data room suffers a failure. Many companies therefore keep a copy of their archive data or image backups in a remote data room.

PostgreSQL supports several simple approaches to remote backup, such as:

Method 1. Back up the remote streaming standby node.

Method 2. Transmit the backup files to a remote data room through a file system such as ZFS or a storage snapshot.

Method 3. Transmit the local full backup files asynchronously to a remote data room, and transmit the redo logs of the master database to the remote data room in streaming mode to keep the data latency between the two data rooms minimal. When the master data room suffers a disaster (such as an earthquake), the data loss can usually be kept within a few kilobytes, or a few milliseconds of changes. The exact figure depends on the bandwidth and the business situation, that is, on whether the bandwidth can keep up with the redo log generation speed. If you want to achieve zero data loss, you can purchase a leased line and implement synchronous streaming replication to the remote site. This way, no data will be lost even if the master data room completely fails.

Method 4. Synchronize the backup data in the local data room, asynchronously or through scheduling, to the storage device of a remote data room on a regular basis.
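To make the relationship in Method 3 between redo generation speed and bandwidth concrete, here is a deliberately simplified back-of-the-envelope model. It is an assumption of this sketch, not a formula from PostgreSQL: redo that exceeds the link capacity piles up as backlog for the duration of a burst, and the redo still in flight is approximated by the shipped rate multiplied by the one-way latency. All parameter names and numbers are hypothetical.

```python
def unshipped_redo_bytes(redo_rate, bandwidth, burst_seconds, latency_ms):
    """Estimate the redo (in bytes) not yet present in the remote data room.

    redo_rate:     redo generated on the master, in bytes per second
    bandwidth:     usable link capacity, in bytes per second
    burst_seconds: how long the redo rate has been sustained, in seconds
    latency_ms:    one-way network latency, in milliseconds
    """
    # Redo beyond the link capacity accumulates for as long as the burst lasts.
    backlog = max(0, redo_rate - bandwidth) * burst_seconds
    # Redo that has been sent but has not yet arrived.
    in_flight = min(redo_rate, bandwidth) * latency_ms // 1000
    return backlog + in_flight


# 2 MB/s of redo over a 10 MB/s link with 5 ms latency: only in-flight data is at risk.
print(unshipped_redo_bytes(2_000_000, 10_000_000, 60, 5))
# 12 MB/s of redo over the same link for one minute: the backlog dominates.
print(unshipped_redo_bytes(12_000_000, 10_000_000, 60, 5))
```

While the link keeps up, the exposure stays at a few kilobytes. Once redo generation exceeds the bandwidth, the potential loss grows with every second of the burst, which is the case that sizing the link or moving to synchronous replication is meant to avoid.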

Taking backups is not enough. You also need to make sure that the backup files are valid, so verify both the backup + archive files and the snapshot backups on a regular basis.
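One inexpensive check, shown here as a sketch rather than as a complete validation procedure, is to record a SHA-256 checksum for each backup file when it is written and to compare the files against those checksums later. The function names and the shape of the `expected` mapping are assumptions of this example. A matching checksum only shows that a file has not changed since the backup, so a periodic test restore remains the stronger check.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def corrupted_files(expected):
    """Return the paths that are missing, unreadable, or changed.

    expected maps a backup file path to the hex digest recorded
    when the backup was taken.
    """
    bad = []
    for path, digest in sorted(expected.items()):
        try:
            if sha256_of(path) != digest:
                bad.append(path)
        except OSError:
            bad.append(path)
    return bad
```

An empty result from `corrupted_files` means every listed file still matches its recorded checksum.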

Tips and Advice for DBAs

Apart from daily backups, HA, remote backups, remote disaster tolerance, and validation mechanisms for backup sets, it is also important to develop effective norms and good habits. I would like to share some tips in the hope of offering some insight (Chinese reference: Secret of DBAs).

The foremost mission of a DBA is to guard data and ensure that databases run normally. At a bare minimum, the following rules must be adhered to.

1.Develop and implement database security regulations.

2.Develop and implement database management regulations.

3.Develop and implement database development regulations.

4.Establish an automated monitoring system.

5.Establish an automated inspection, backup, HA, remote disaster tolerance and remote backup system (as well as the crucial backup set availability check, especially in the tape library era).

6.Develop holiday network blockout and emergency mechanisms.

A holiday network blockout is an effective measure to prevent attacks.

1.We recommend you add a routine inspection before holidays, just as you check your car before setting out on a long journey.

2.Scale out, in advance, the business databases (and of course the application servers) whose load increase is predictable. Many businesses see peak loads during holidays, such as gaming, social networking, and e-commerce.

3.Prepare a batch of hardware for standby purposes to cope with instance demands during major holidays.

4.Network blockout to stop changes. You usually need to stop changes a few days in advance to reduce potential issues arising from changes.

For example, an app change may add new SQL statements that have not been optimized, or for which nobody can predict the number of concurrent requests the business will issue. Either case can cause bursts of database load on major holidays.

5.Shift. Arrange the duty for the day to ensure DBAs are available to provide responses 24/7. The DBA should be reachable via mobile phone or on the Internet.

6.Usually the person on duty on holidays bears more extensive responsibilities than normal. It is necessary to provide him or her with training concerning the business and related duties.

It is hence very important to allow multiple DBAs to work in shifts. We must avoid a situation in which only one DBA is familiar with a specific business.

7.Publicity. It is imperative to publicize the network blockout window to the company's business parties, and to ask them to follow the rules and not overstep the boundary during the window (for example, by making alterations or releases).

Although systematic measures and some IT means are used to control behavior during the network blockout period, some corners may be overlooked. That is why publicity is equally important.

1.Duty. Usually divided into online duty and offline duty. The former is more or less the same as regular working hours. The DBA on duty may need to pay attention to the metrics of some NOC platforms and fill out intermittent duty reports.

Offline duty refers to the passive reception of warning messages and e-mails and then going online to handle issues.

2.Shifting duty. The shifting of duty is very important. Usually the DBA on the previous shift may discover some exceptions and pass his or her findings to the next shift. If a real problem occurs, the information from the shifting of duty will help to improve the response speed and handling efficiency.

After the network blockout is over, everything returns to normal. But do not forget one important thing: the replay.

Replay usually refers to the review of the system status during the network blockout. It serves the following purposes:

1.1 Determine whether the expansion estimate made sense, and feed the data back to the business parties.

1.2 Check whether any fault occurred, what caused it, and how to avoid it in the future.

1.3 Check whether the monitoring system has vulnerabilities and how to avoid the vulnerabilities in the future.

1.4 Identify whether there are nonconforming changes or releases and work out how to avoid the same issues in the future.

Summary

I believe that many companies have similar systems. DBAs must do everything they can to avoid rm -rf incidents, and must be able to stay calm when such incidents do happen, while giving users peace of mind.

At the same time, it is vital to establish a sound system. A clear mind is also necessary during operations to avoid human errors as much as possible.
