By Digoal.

I'm sure that most of you, if not all of you, have borrowed a book from a library or shared one with a friend. Borrowing a book is a little like today's topic, data pumping, which refers to transferring large quantities of data between two systems or parties. Unlike borrowing and sharing books, though, data pumping typically lets copies of the data be shared in real time.

In Internet-of-Things (IoT) scenarios, where large volumes of data are processed every second, the same copy of data may be used for streaming analysis, historical data storage, data mining, search engines, and other services.

Want to do all of these things at once? Or use the same product or service to accomplish all of these things? Well, sorry, but that's not too realistic.

First of all, even when all the data is stored in one place, different services need different things: some require real-time processing, others batch processing, others real-time data updates, and still others big data analysis. In other words, these applications do not all handle data in the same way.

Next, a single product usually cannot meet so many requirements. Take databases as an example. No single database fulfills every need, which is why so many types exist today: relational (SQL) databases, non-relational (NoSQL) databases, Online Transaction Processing (OLTP) databases, and Online Analytical Processing (OLAP) databases.

Nowadays, for many companies, the most important approach is actually data redundancy: keeping copies of the same data in several systems so that each copy can serve the requirements of a different scenario.

So, with all of that said, how can you better share data, ensure data consistency, and improve real-time sharing? What sort of products can help to meet all of your requirements?

Well, in this article, we're going to discuss how you can use Confluent together with bottledwater-pg to meet these requirements.

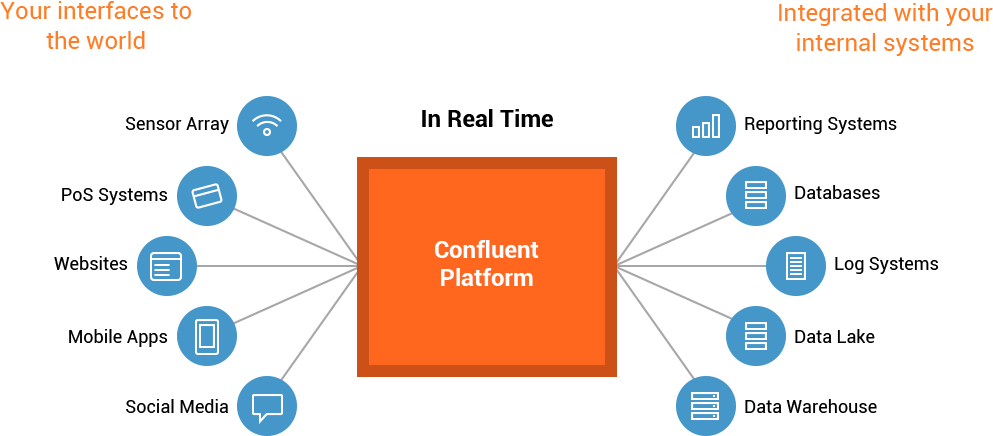

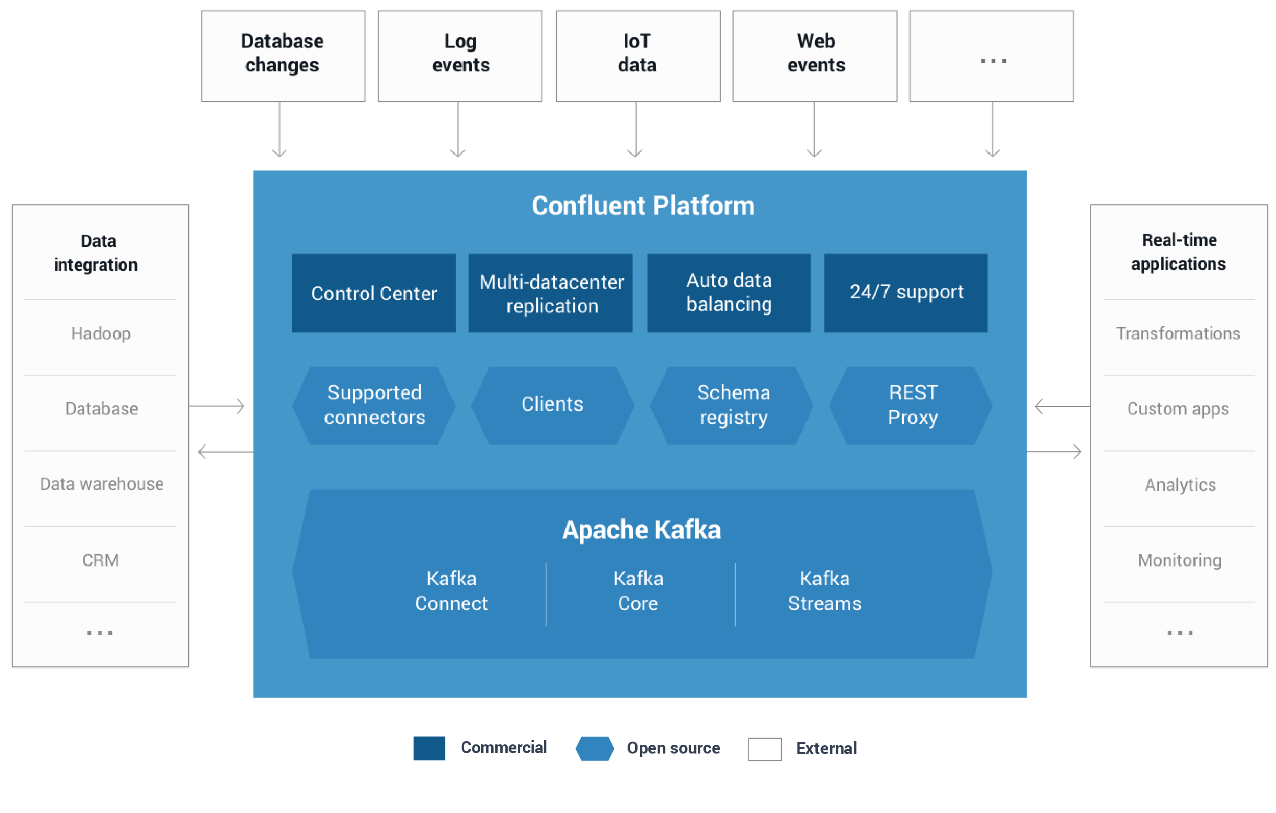

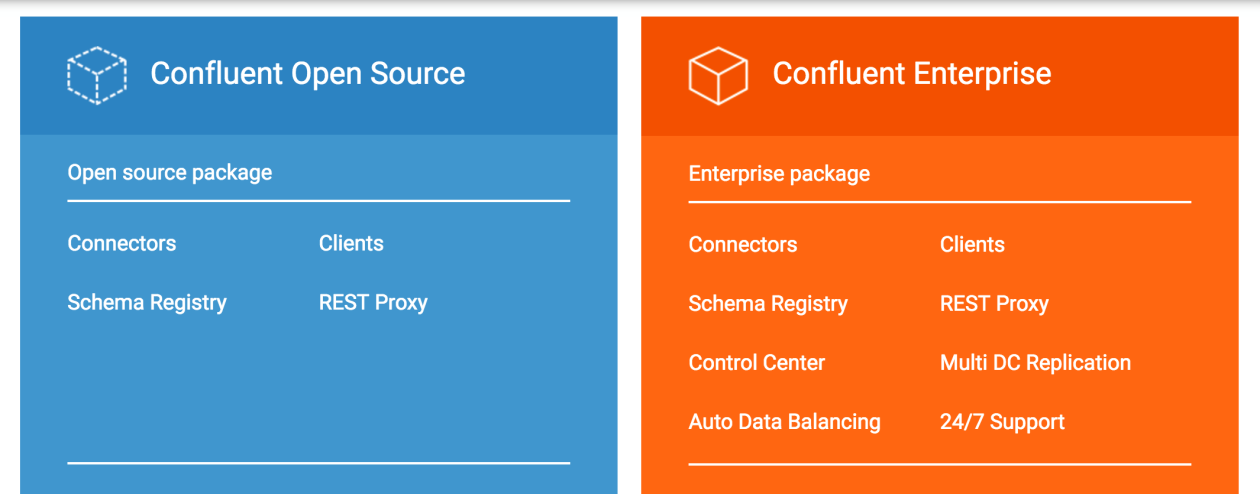

Now, let's talk about a powerful solution for data pumping. Confluent is a real-time data transfer service that moves data among various platforms so that they can share and exchange it. This is very useful if you need several products to meet all of your requirements, as discussed above.

The data you're transferring with Confluent could include data collected from IoT sensors, data in your database, HTTP data, mobile app data, application log data, and even event-triggered data.

Confluent is built from several components, with Apache Kafka at its core. With Confluent, you can write your own message producers and consumers and exchange data on the platform that Confluent provides. Consider the following graphics, again taken from Confluent's official documentation:

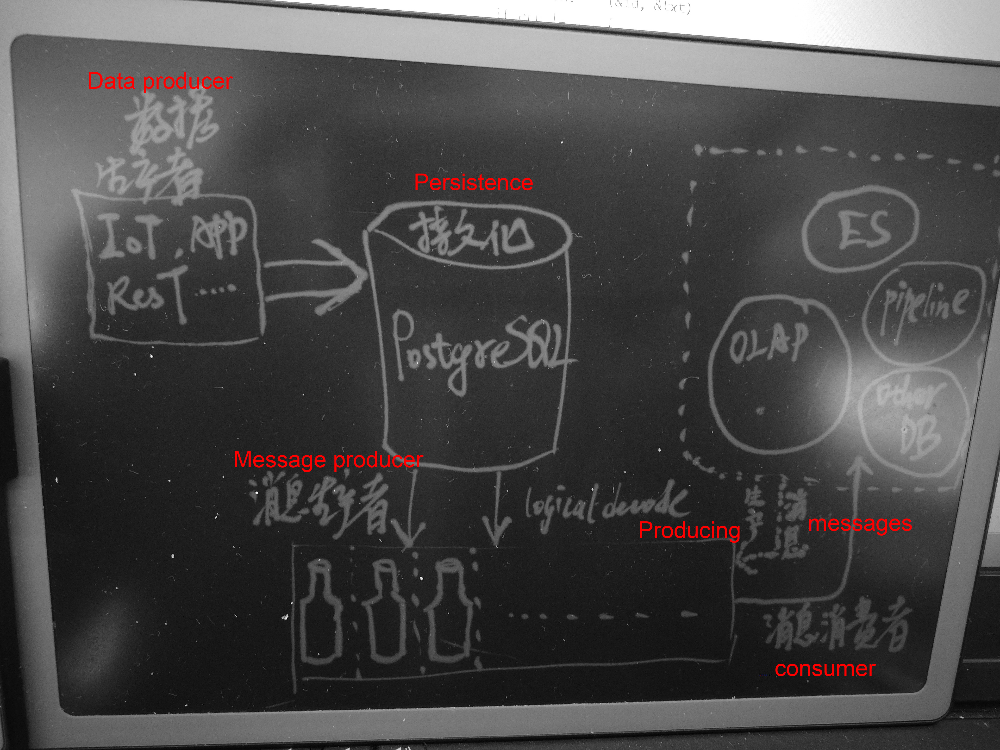

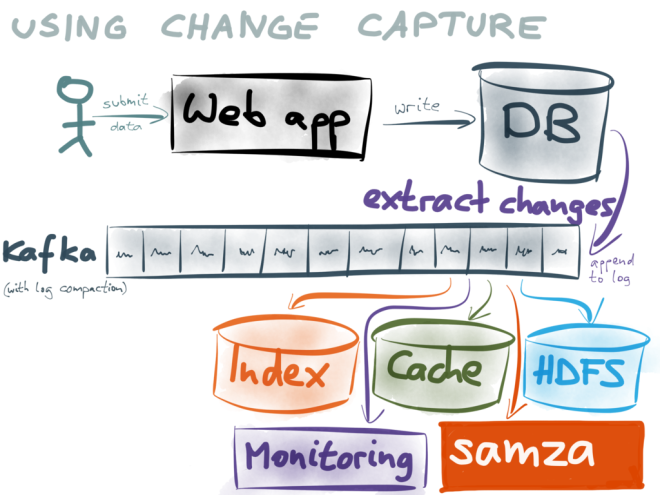

Now, let's discuss BottledWater-pg, which is a message producer on the Confluent platform. Consider this graphic below as a preview to understand how exactly BottledWater-pg functions.

Source: https://www.confluent.io/blog/bottled-water-real-time-integration-of-postgresql-and-kafka/

BottledWater-pg writes data from PostgreSQL databases to Confluent Kafka so that the data can be shared among message subscribers in real time.

BottledWater-pg supports PostgreSQL 9.4 and later. It supports both a full snapshot and continuous writing of incremental changes to Kafka. More specifically, BottledWater-pg uses a PostgreSQL snapshot to read a consistent copy of the existing data and write it to Kafka. It then uses PostgreSQL's logical decoding feature to parse the write-ahead log (WAL) into row changes and write them to Kafka.
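To make the snapshot-plus-stream idea concrete, here is a minimal Python sketch of how a downstream consumer could rebuild a table from such a feed. The event tuples, the `apply_event` and `rebuild` helpers, and the use of `None` to mark a deleted row are illustrative assumptions, not BottledWater-pg's actual message format.

```python
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical event shape: (primary_key, row), where row is None for a delete.
Event = Tuple[int, Optional[dict]]


def apply_event(table: Dict[int, dict], event: Event) -> None:
    """Apply one change event to an in-memory copy of the table."""
    key, row = event
    if row is None:
        table.pop(key, None)  # delete: drop the row if it is present
    else:
        table[key] = row      # insert or update: the last write wins


def rebuild(snapshot: Iterable[Event], changes: Iterable[Event]) -> Dict[int, dict]:
    """Replay the consistent snapshot first, then the incremental changes in order."""
    table: Dict[int, dict] = {}
    for event in snapshot:
        apply_event(table, event)
    for event in changes:
        apply_event(table, event)
    return table


if __name__ == "__main__":
    snapshot = [(1, {"name": "alice"}), (2, {"name": "bob"})]
    changes = [(2, {"name": "robert"}), (1, None), (3, {"name": "carol"})]
    print(rebuild(snapshot, changes))
```

The sketch also shows why a primary key matters: without a key, an update or delete could not be matched to the row it affects.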

In Kafka, each topic represents a database table. After logical decoding retrieves row changes from the WAL and before they are written to Kafka, the rows are encoded with Avro. Other formats such as JSON, Protobuf, or Thrift could serve the same purpose, but Avro is the default (the client can also emit JSON).
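The table-to-topic mapping can be sketched in a few lines of Python. The rule used here (prefix, a dot, then the table name) follows the `--topic-prefix` description in the client's help text shown later in this article. The `topic_for_table` helper itself is hypothetical and only illustrates the naming.

```python
from typing import Optional


def topic_for_table(table: str, prefix: Optional[str] = None) -> str:
    """Return the Kafka topic name for a table, optionally with a prefix."""
    if not table:
        raise ValueError("table name must not be empty")
    # With a prefix, the topic is "<prefix>.<table>"; otherwise just "<table>".
    return f"{prefix}.{table}" if prefix else table


if __name__ == "__main__":
    for name in ("users", "orders"):
        print(name, "->", topic_for_table(name, prefix="postgres"))
```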

Avro supports a wide range of data types and therefore provides good support for the diversified data types of PostgreSQL. For more information about why Avro is used for all of this, check out this site.

To summarize, BottledWater-pg is a PostgreSQL plug-in. It parses the write-ahead log (WAL), encodes the row data with Avro (or, optionally, JSON), and writes the data to Kafka.

As a result, BottledWater-pg depends on the following libraries and software:

PostgreSQL 9.4+ development libraries (PGXS and libpq). (Homebrew: brew install postgresql; Ubuntu: sudo apt-get install postgresql-server-dev-9.5 libpq-dev)

libsnappy, a dependency of Avro. (Homebrew: brew install snappy; Ubuntu: sudo apt-get install libsnappy-dev)

avro-c (1.8.0 or later), the C implementation of Avro. (Homebrew: brew install avro-c; others: build from source)

Jansson, a JSON parser. (Homebrew: brew install jansson; Ubuntu: sudo apt-get install libjansson-dev)

libcurl, an HTTP client. (Homebrew: brew install curl; Ubuntu: sudo apt-get install libcurl4-openssl-dev)

librdkafka (0.9.1 or later), a Kafka client. (Ubuntu universe: sudo apt-get install librdkafka-dev, but see known gotchas; others: build from source)

To build BottledWater-pg, we use cmake and gcc. We recommend that you use the latest BottledWater-pg version to avoid compilation problems. The toolchain versions and environment settings used here are as follows:

gcc 6.2.0

python 2.7.12

cmake 3.6.3

vi /etc/ld.so.conf

/home/digoal/gcc6.2.0/lib

/home/digoal/gcc6.2.0/lib64

/home/digoal/python2.7.12/lib

ldconfig

export LD_LIBRARY_PATH=/home/digoal/gcc6.2.0/lib:/home/digoal/gcc6.2.0/lib64:/home/digoal/python2.7.12/lib:$LD_LIBRARY_PATH

export PATH=/home/digoal/gcc6.2.0/bin:/home/digoal/python2.7.12/bin:/home/digoal/cmake3.6.3/bin:$PGHOME/bin:$PATH:.

Now let's prepare the dependencies.

Snappy is an optional compression library. It is highly efficient, so we recommend installing it. It is optional because Avro also supports XZ, a lossless compression format. The commands are as follows:

/*

snappy

http://google.github.io/snappy/

wget https://github.com/google/snappy/archive/1.1.3.tar.gz

tar -zxvf 1.1.3.tar.gz

cd snappy-1.1.3

yum install -y libtool gcc-c++

./autogen.sh

./configure --prefix=/home/digoal/snappy_home

make

make install

- add LIBDIR to the `LD_LIBRARY_PATH' environment variable

during execution

- add LIBDIR to the `LD_RUN_PATH' environment variable

during linking

- use the `-Wl,-rpath -Wl,LIBDIR' linker flag

- have your system administrator add LIBDIR to `/etc/ld.so.conf'

*/

Jansson (libjansson) is a JSON parser and a required dependency. We recommend that you install it in the default directory during testing; otherwise, you may encounter compilation problems. We also recommend that you set rpath. The following commands install it to a custom prefix:

http://www.digip.org/jansson/

wget http://www.digip.org/jansson/releases/jansson-2.9.tar.bz2

tar -jxvf jansson-2.9.tar.bz2

cd jansson-2.9

./configure --prefix=/home/digoal/jansson

make

make install

- add LIBDIR to the 'LD_LIBRARY_PATH' environment variable

during execution

- add LIBDIR to the 'LD_RUN_PATH' environment variable

during linking

- use the '-Wl,-rpath -Wl,LIBDIR' linker flag

- have your system administrator add LIBDIR to '/etc/ld.so.conf'

export PKG_CONFIG_PATH=/home/digoal/jansson/lib/pkgconfig:$PKG_CONFIG_PATH

pkg-config --cflags --libs jansson

-I/home/digoal/jansson/include -L/home/digoal/jansson//home/digoal/jansson/lib -ljansson

To install Jansson in the default directory instead, as recommended for testing, run the following:

./configure

make

make install

export PKG_CONFIG_PATH=/usr/local/lib/pkgconfig:$PKG_CONFIG_PATH

Install the XZ packages as well:

yum install -y xz-*

Boost is optional. Install Boost only if you want to build the Avro documentation.

/*

boost

http://www.boost.org/

https://sourceforge.net/projects/boost/files/boost/1.62.0/

wget "http://downloads.sourceforge.net/project/boost/boost/1.62.0/boost_1_62_0.tar.bz2?r=https%3A%2F%2Fsourceforge.net%2Fprojects%2Fboost%2Ffiles%2Fboost%2F1.62.0%2F&ts=1480929211&use_mirror=ncu"

tar -jxvf boost_1_62_0.tar.bz2

cd boost_1_62_0/libs/regex/build

To build the static library, run make -fgcc.mak.

To build the shared library, run make -fgcc-shared.mak.

ll gcc

drwxr-xr-x 2 digoal users 4.0K Dec 5 17:18 boost_regex-gcc-1_53

drwxr-xr-x 2 digoal users 4.0K Dec 5 17:17 boost_regex-gcc-1_53_shared

drwxr-xr-x 2 digoal users 4.0K Dec 5 17:19 boost_regex-gcc-d-1_53

drwxr-xr-x 2 digoal users 4.0K Dec 5 17:18 boost_regex-gcc-d-1_53_shared

-rw-r--r-- 1 digoal users 2.6M Dec 5 17:18 libboost_regex-gcc-1_53.a

-rwxr-xr-x 1 digoal users 1.3M Dec 5 17:17 libboost_regex-gcc-1_53.so

-rw-r--r-- 1 digoal users 17M Dec 5 17:19 libboost_regex-gcc-d-1_53.a

-rwxr-xr-x 1 digoal users 7.4M Dec 5 17:18 libboost_regex-gcc-d-1_53.so

libboost_regex-gcc-1_53.a, This is a release version of a static library.

libboost_regex-gcc-1_53.so, This is a release version of a dynamic library (shared library).

libboost_regex-gcc-d-1_53.a, This is a debug version of a static library.

libboost_regex-gcc-d-1_53.so, This is a debug version of a dynamic library (shared library).

*/

You'll need Avro 1.8.0 or later. To download it, see http://avro.apache.org/ and http://www.apache.org/dyn/closer.cgi/avro/

wget http://mirrors.hust.edu.cn/apache/avro/avro-1.8.1/avro-src-1.8.1.tar.gz

tar -zxvf avro-src-1.8.1.tar.gz

cd avro-src-1.8.1/lang/c

mkdir build

cd build

/*

cmake .. -DCMAKE_INSTALL_PREFIX=/home/digoal/avro -DCMAKE_BUILD_TYPE=Release -DSNAPPY_LIBRARIES=/home/digoal/snappy_home/lib -DSNAPPY_INCLUDE_DIR=/home/digoal/snappy_home/include

*/

yum install -y zlib-devel.x86_64

cmake .. -DCMAKE_INSTALL_PREFIX=/home/digoal/avro -DCMAKE_BUILD_TYPE=Release -DTHREADSAFE=true

make

make test

make install

The "RelWithDebInfo" build type will build an optimized copy of the library, including debugging symbols. Use the "Release" build type if you don't want debugging symbols. Use the "Debug" build type if you want a non-optimized library, with debugging symbols.

On Unix, you can request thread-safe versions of the Avro library's global functions by defining the THREADSAFE cmake variable. Just add the following to your cmake invocation:

-DTHREADSAFE=true

You'll also need to install libcurl, using the following command:

yum install -y libcurl-devel.x86_64

Now get librdkafka ready. You can use the following commands to set it up:

git clone https://github.com/edenhill/librdkafka

cd librdkafka

/*

./configure --prefix=/home/digoal/librdkafka_home

make -j 32

make install

export PKG_CONFIG_PATH=/home/digoal/avro/lib/pkgconfig:/home/digoal/librdkafka_home/lib/pkgconfig:/usr/local/lib/pkgconfig:$PKG_CONFIG_PATH

*/

We recommend that you use the default directory for testing; otherwise, you may encounter compilation errors. The default installation looks like this:

./configure

make

make install

export PKG_CONFIG_PATH=/usr/local/lib/pkgconfig:$PKG_CONFIG_PATH

The PostgreSQL installation process is omitted here, as you have most likely already installed it.

PostgreSQL must be installed first because BottledWater-pg is a PostgreSQL plug-in. Build and install BottledWater-pg as follows:

git clone https://github.com/confluentinc/bottledwater-pg

cd bottledwater-pg

make

make install

vi /etc/ld.so.conf

/home/digoal/avro/lib

or

export LD_LIBRARY_PATH=/home/digoal/avro/lib:$LD_LIBRARY_PATH

You may need to restart the database so that it can load bottledwater.so. Next, create the bottledwater extension in the database, as shown below:

psql

postgres=# create extension bottledwater ;

CREATE EXTENSION

Now that all the dependencies are in place, we can move on to deploying Confluent. This step is the foundation for everything that follows. After building the Confluent platform, you can also test how BottledWater-pg produces messages and how they are consumed from the Confluent platform. For reference, see this page.

Next, let's configure PostgreSQL streaming replication. For this step, you must set wal_level to logical because BottledWater-pg needs to decode logical row changes from the WAL. Furthermore, max_wal_senders must be set to one or more, and max_worker_processes must also be set to one or more.
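As a rough pre-flight check, the Python sketch below parses the text of a postgresql.conf file and reports which of these requirements are not met. It also checks max_replication_slots, whose role is explained in the next paragraph. The `parse_conf` and `check_logical_decoding` helpers are hypothetical, the parser only handles simple `name = value` lines, and a setting that is missing from the file is reported as a problem even though PostgreSQL would fall back to its built-in default.

```python
import re
from typing import Dict, List


def parse_conf(text: str) -> Dict[str, str]:
    """Parse simple `name = value` lines, ignoring comments and blank lines."""
    settings: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # naive comment stripping
        if not line:
            continue
        match = re.match(r"^([A-Za-z_][A-Za-z0-9_.]*)\s*=?\s*(.+)$", line)
        if match:
            settings[match.group(1).lower()] = match.group(2).strip().strip("'")
    return settings


def check_logical_decoding(settings: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the checks passed."""
    problems: List[str] = []
    if settings.get("wal_level") != "logical":
        problems.append("wal_level must be 'logical'")
    for name in ("max_wal_senders", "max_worker_processes", "max_replication_slots"):
        value = settings.get(name, "0")  # assumption: treat a missing setting as 0
        if not value.isdigit() or int(value) < 1:
            problems.append(f"{name} must be at least 1")
    return problems


if __name__ == "__main__":
    sample = """
    max_worker_processes = 8
    wal_level = logical
    max_wal_senders = 8
    max_replication_slots = 4
    """
    print(check_logical_decoding(parse_conf(sample)) or "configuration looks fine")
```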

Replication slots allow BottledWater-pg to resume data transfer where it left off and ensure that WAL that has not yet been decoded is not deleted or overwritten by the primary database. Therefore, you must set max_replication_slots to one or greater, as shown below:

postgresql.conf

max_worker_processes = 8

wal_level = logical

max_wal_senders = 8

wal_keep_segments = 256

max_replication_slots = 4

To ensure that WAL subscribers can connect to the database through the streaming replication protocol, you need to configure pg_hba.conf, as shown below:

pg_hba.conf

local replication digoal trust

host replication digoal 127.0.0.1/32 trust

host replication digoal 0.0.0.0/0 md5

Next, create a replication role with the following command:

create role digoal login replication encrypted password 'digoal123';

The BottledWater-pg client program parses the write-ahead log (WAL) and writes the results to Kafka.

In the "Options" part, you configure how to connect to the database (over a streaming replication connection), the output format, the topic prefix (preferably the database name), whether to take an initial snapshot, whether to allow tables without primary keys, the address and port of the Kafka brokers, and the address and port of the schema registry.

This part also contains some Kafka-related settings.
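As a sketch of how these options fit together, the Python helper below assembles a client command line. The option names are taken from the `--help` output reproduced in this article. The `bottledwater_args` function, its parameter names, and the connection URI in the example are hypothetical placeholders.

```python
import shlex
from typing import Dict, List, Optional


def bottledwater_args(
    postgres_uri: str,
    brokers: str = "localhost:9092",
    schema_registry: Optional[str] = "http://localhost:8081",
    output_format: str = "avro",
    topic_prefix: Optional[str] = None,
    allow_unkeyed: bool = False,
    skip_snapshot: bool = False,
    kafka_config: Optional[Dict[str, str]] = None,
    topic_config: Optional[Dict[str, str]] = None,
) -> List[str]:
    """Build the argument list for the bottledwater client."""
    if output_format not in ("avro", "json"):
        raise ValueError("output_format must be 'avro' or 'json'")
    args = [
        "./bottledwater",
        f"--postgres={postgres_uri}",
        f"--broker={brokers}",
        f"--output-format={output_format}",
    ]
    # The schema registry is only used for Avro output.
    if output_format == "avro" and schema_registry:
        args.append(f"--schema-registry={schema_registry}")
    if topic_prefix:
        args.append(f"--topic-prefix={topic_prefix}")
    if allow_unkeyed:
        args.append("--allow-unkeyed")
    if skip_snapshot:
        args.append("--skip-snapshot")
    # Kafka producer properties are passed as repeated property=value pairs.
    for key, value in (kafka_config or {}).items():
        args += ["--kafka-config", f"{key}={value}"]
    for key, value in (topic_config or {}).items():
        args += ["--topic-config", f"{key}={value}"]
    return args


if __name__ == "__main__":
    args = bottledwater_args(
        "postgres://digoal:digoal123@127.0.0.1:5432/postgres",  # placeholder URI
        topic_prefix="postgres",
        allow_unkeyed=True,
        topic_config={"request.required.acks": "-1"},
    )
    print(shlex.join(args))
```

Returning a list rather than a single string keeps the arguments safe to pass to `subprocess.run` without extra quoting.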

cd bottledwater-pg/kafka

./bottledwater --help

Exports a snapshot of a PostgreSQL database, followed by a stream of changes,

and sends the data to a Kafka cluster.

Usage:

./bottledwater [OPTION]...

Options:

-d, --postgres=postgres://user:pass@host:port/dbname (required)

Connection string or URI of the PostgreSQL server.

-s, --slot=slotname Name of replication slot (default: bottledwater)

The slot is automatically created on first use.

-b, --broker=host1[:port1],host2[:port2]... (default: localhost:9092)

Comma-separated list of Kafka broker hosts/ports.

-r, --schema-registry=http://hostname:port (default: http://localhost:8081)

URL of the service where Avro schemas are registered.

Used only for --output-format=avro.

Omit when --output-format=json.

-f, --output-format=[avro|json] (default: avro)

How to encode the messages for writing to Kafka.

-u, --allow-unkeyed Allow export of tables that don't have a primary key.

This is disallowed by default, because updates and

deletes need a primary key to identify their row.

-p, --topic-prefix=prefix

String to prepend to all topic names.

e.g. with --topic-prefix=postgres, updates from table

'users' will be written to topic 'postgres.users'.

-e, --on-error=[log|exit] (default: exit)

What to do in case of a transient error, such as

failure to publish to Kafka.

-x, --skip-snapshot Skip taking a consistent snapshot of the existing

database contents and just start streaming any new

updates. (Ignored if the replication slot already

exists.)

-C, --kafka-config property=value

Set global configuration property for Kafka producer

(see --config-help for list of properties).

-T, --topic-config property=value

Set topic configuration property for Kafka producer.

--config-help Print the list of configuration properties. See also:

https://github.com/edenhill/librdkafka/blob/master/CONFIGURATION.md

-h, --help

Print this help text.

The Kafka configuration properties that can be set with --kafka-config and --topic-config are listed by --config-help:

./bottledwater --config-help 2>&1 |less

## Global configuration properties

Property | C/P | Range | Default | Description

-----------------------------------------|-----|-----------------|--------------:|--------------------------

builtin.features | * | | gzip, snappy, ssl, sasl, regex | Indicates the builtin features for this build of librdkafka. An application can either query this value or attempt to set it with its list of required features to check for library support. <br>*Type: CSV flags*

client.id | * | | rdkafka | Client identifier. <br>*Type: string*

metadata.broker.list | * | | | Initial list of brokers. The application may also use `rd_kafka_brokers_add()` to add brokers during runtime. <br>*Type: string*

bootstrap.servers | * | | | Alias for `metadata.broker.list`

message.max.bytes | * | 1000 .. 1000000000 | 1000000 | Maximum transmit message size. <br>*Type: integer*

message.copy.max.bytes | * | 0 .. 1000000000 | 65535 | Maximum size for message to be copied to buffer. Messages larger than this will be passed by reference (zero-copy) at the expense of larger iovecs. <br>*Type: integer*

receive.message.max.bytes | * | 1000 .. 1000000000 | 100000000 | Maximum receive message size. This is a safety precaution to avoid memory exhaustion in case of protocol hickups. The value should be at least fetch.message.max.bytes * number of partitions consumed from + messaging overhead (e.g. 200000 bytes). <br>*Type: integer*

max.in.flight.requests.per.connection | * | 1 .. 1000000 | 1000000 | Maximum number of in-flight requests the client will send. This setting applies per broker connection. <br>*Type: integer*

max.in.flight | * | | | Alias for `max.in.flight.requests.per.connection`

metadata.request.timeout.ms | * | 10 .. 900000 | 60000 | Non-topic request timeout in milliseconds. This is for metadata requests, etc. <br>*Type: integer*

topic.metadata.refresh.interval.ms | * | -1 .. 3600000 | 300000 | Topic metadata refresh interval in milliseconds. The metadata is automatically refreshed on error and connect. Use -1 to disable the intervalled refresh. <br>*Type: integer*

metadata.max.age.ms | * | | | Alias for `topic.metadata.refresh.interval.ms`

topic.metadata.refresh.fast.cnt | * | 0 .. 1000 | 10 | When a topic looses its leader this number of metadata requests are sent with `topic.metadata.refresh.fast.interval.ms` interval disregarding the `topic.metadata.refresh.interval.ms` value. This is used to recover quickly from transitioning leader brokers. <br>*Type: integer*

topic.metadata.refresh.fast.interval.ms | * | 1 .. 60000 | 250 | See `topic.metadata.refresh.fast.cnt` description <br>*Type: integer*

topic.metadata.refresh.sparse | * | true, false | true | Sparse metadata requests (consumes less network bandwidth) <br>*Type: boolean*

topic.blacklist | * | | | Topic blacklist, a comma-separated list of regular expressions for matching topic names that should be ignored in broker metadata information as if the topics did not exist. <br>*Type: pattern list*

debug | * | generic, broker, topic, metadata, queue, msg, protocol, cgrp, security, fetch, feature, all | | A comma-separated list of debug contexts to enable. Debugging the Producer: broker,topic,msg. Consumer: cgrp,topic,fetch <br>*Type: CSV flags*

socket.timeout.ms | * | 10 .. 300000 | 60000 | Timeout for network requests. <br>*Type: integer*

socket.blocking.max.ms | * | 1 .. 60000 | 100 | Maximum time a broker socket operation may block. A lower value improves responsiveness at the expense of slightly higher CPU usage. <br>*Type: integer*

socket.send.buffer.bytes | * | 0 .. 100000000 | 0 | Broker socket send buffer size. System default is used if 0. <br>*Type: integer*

socket.receive.buffer.bytes | * | 0 .. 100000000 | 0 | Broker socket receive buffer size. System default is used if 0. <br>*Type: integer*

socket.keepalive.enable | * | true, false | false | Enable TCP keep-alives (SO_KEEPALIVE) on broker sockets <br>*Type: boolean*

socket.nagle.disable | * | true, false | false | Disable the Nagle algorithm (TCP_NODELAY). <br>*Type: boolean*

socket.max.fails | * | 0 .. 1000000 | 3 | Disconnect from broker when this number of send failures (e.g., timed out requests) is reached. Disable with 0. NOTE: The connection is automatically re-established. <br>*Type: integer*

broker.address.ttl | * | 0 .. 86400000 | 1000 | How long to cache the broker address resolving results (milliseconds). <br>*Type: integer*

broker.address.family | * | any, v4, v6 | any | Allowed broker IP address families: any, v4, v6 <br>*Type: enum value*

reconnect.backoff.jitter.ms | * | 0 .. 3600000 | 500 | Throttle broker reconnection attempts by this value +-50%. <br>*Type: integer*

statistics.interval.ms | * | 0 .. 86400000 | 0 | librdkafka statistics emit interval. The application also needs to register a stats callback using `rd_kafka_conf_set_stats_cb()`. The granularity is 1000ms. A value of 0 disables statistics. <br>*Type: integer*

enabled_events | * | 0 .. 2147483647 | 0 | See `rd_kafka_conf_set_events()` <br>*Type: integer*

error_cb | * | | | Error callback (set with rd_kafka_conf_set_error_cb()) <br>*Type: pointer*

throttle_cb | * | | | Throttle callback (set with rd_kafka_conf_set_throttle_cb()) <br>*Type: pointer*

stats_cb | * | | | Statistics callback (set with rd_kafka_conf_set_stats_cb()) <br>*Type: pointer*

log_cb | * | | | Log callback (set with rd_kafka_conf_set_log_cb()) <br>*Type: pointer*

log_level | * | 0 .. 7 | 6 | Logging level (syslog(3) levels) <br>*Type: integer*

log.thread.name | * | true, false | false | Print internal thread name in log messages (useful for debugging librdkafka internals) <br>*Type: boolean*

log.connection.close | * | true, false | true | Log broker disconnects. It might be useful to turn this off when interacting with 0.9 brokers with an aggressive `connection.max.idle.ms` value. <br>*Type: boolean*

socket_cb | * | | | Socket creation callback to provide race-free CLOEXEC <br>*Type: pointer*

connect_cb | * | | | Socket connect callback <br>*Type: pointer*

closesocket_cb | * | | | Socket close callback <br>*Type: pointer*

open_cb | * | | | File open callback to provide race-free CLOEXEC <br>*Type: pointer*

opaque | * | | | Application opaque (set with rd_kafka_conf_set_opaque()) <br>*Type: pointer*

default_topic_conf | * | | | Default topic configuration for automatically subscribed topics <br>*Type: pointer*

internal.termination.signal | * | 0 .. 128 | 0 | Signal that librdkafka will use to quickly terminate on rd_kafka_destroy(). If this signal is not set then there will be a delay before rd_kafka_wait_destroyed() returns true as internal threads are timing out their system calls. If this signal is set however the delay will be minimal. The application should mask this signal as an internal signal handler is installed. <br>*Type: integer*

api.version.request | * | true, false | false | Request broker's supported API versions to adjust functionality to available protocol features. If set to false the fallback version `broker.version.fallback` will be used. **NOTE**: Depends on broker version >=0.10.0. If the request is not supported by (an older) broker the `broker.version.fallback` fallback is used. <br>*Type: boolean*

api.version.fallback.ms | * | 0 .. 604800000 | 1200000 | Dictates how long the `broker.version.fallback` fallback is used in the case the ApiVersionRequest fails. **NOTE**: The ApiVersionRequest is only issued when a new connection to the broker is made (such as after an upgrade). <br>*Type: integer*

broker.version.fallback | * | | 0.9.0 | Older broker versions (<0.10.0) provides no way for a client to query for supported protocol features (ApiVersionRequest, see `api.version.request`) making it impossible for the client to know what features it may use. As a workaround a user may set this property to the expected broker version and the client will automatically adjust its feature set accordingly if the ApiVersionRequest fails (or is disabled). The fallback broker version will be used for `api.version.fallback.ms`. Valid values are: 0.9.0, 0.8.2, 0.8.1, 0.8.0. <br>*Type: string*

security.protocol | * | plaintext, ssl, sasl_plaintext, sasl_ssl | plaintext | Protocol used to communicate with brokers. <br>*Type: enum value*

ssl.cipher.suites | * | | | A cipher suite is a named combination of authentication, encryption, MAC and key exchange algorithm used to negotiate the security settings for a network connection using TLS or SSL network protocol. See manual page for `ciphers(1)` and `SSL_CTX_set_cipher_list(3). <br>*Type: string*

ssl.key.location | * | | | Path to client's private key (PEM) used for authentication. <br>*Type: string*

ssl.key.password | * | | | Private key passphrase <br>*Type: string*

ssl.certificate.location | * | | | Path to client's public key (PEM) used for authentication. <br>*Type: string*

ssl.ca.location | * | | | File or directory path to CA certificate(s) for verifying the broker's key. <br>*Type: string*

ssl.crl.location | * | | | Path to CRL for verifying broker's certificate validity. <br>*Type: string*

sasl.mechanisms | * | | GSSAPI | SASL mechanism to use for authentication. Supported: GSSAPI, PLAIN. **NOTE**: Despite the name only one mechanism must be configured. <br>*Type: string*

sasl.kerberos.service.name | * | | kafka | Kerberos principal name that Kafka runs as. <br>*Type: string*

sasl.kerberos.principal | * | | kafkaclient | This client's Kerberos principal name. <br>*Type: string*

sasl.kerberos.kinit.cmd | * | | kinit -S "%{sasl.kerberos.service.name}/%{broker.name}" -k -t "%{sasl.kerberos.keytab}" %{sasl.kerberos.principal} | Full kerberos kinit command string, %{config.prop.name} is replaced by corresponding config object value, %{broker.name} returns the broker's hostname. <br>*Type: string*

sasl.kerberos.keytab | * | | | Path to Kerberos keytab file. Uses system default if not set.**NOTE**: This is not automatically used but must be added to the template in sasl.kerberos.kinit.cmd as ` ... -t %{sasl.kerberos.keytab}`. <br>*Type: string*

sasl.kerberos.min.time.before.relogin | * | 1 .. 86400000 | 60000 | Minimum time in milliseconds between key refresh attempts. <br>*Type: integer*

sasl.username | * | | | SASL username for use with the PLAIN mechanism <br>*Type: string*

sasl.password | * | | | SASL password for use with the PLAIN mechanism <br>*Type: string*

group.id | * | | | Client group id string. All clients sharing the same group.id belong to the same group. <br>*Type: string*

partition.assignment.strategy | * | | range,roundrobin | Name of partition assignment strategy to use when elected group leader assigns partitions to group members. <br>*Type: string*

session.timeout.ms | * | 1 .. 3600000 | 30000 | Client group session and failure detection timeout. <br>*Type: integer*

heartbeat.interval.ms | * | 1 .. 3600000 | 1000 | Group session keepalive heartbeat interval. <br>*Type: integer*

group.protocol.type | * | | consumer | Group protocol type <br>*Type: string*

coordinator.query.interval.ms | * | 1 .. 3600000 | 600000 | How often to query for the current client group coordinator. If the currently assigned coordinator is down the configured query interval will be divided by ten to more quickly recover in case of coordinator reassignment. <br>*Type: integer*

enable.auto.commit | C | true, false | true | Automatically and periodically commit offsets in the background. <br>*Type: boolean*

auto.commit.interval.ms | C | 0 .. 86400000 | 5000 | The frequency in milliseconds that the consumer offsets are committed (written) to offset storage. (0 = disable) <br>*Type: integer*

enable.auto.offset.store | C | true, false | true | Automatically store offset of last message provided to application. <br>*Type: boolean*

queued.min.messages | C | 1 .. 10000000 | 100000 | Minimum number of messages per topic+partition in the local consumer queue. <br>*Type: integer*

queued.max.messages.kbytes | C | 1 .. 1000000000 | 1000000 | Maximum number of kilobytes per topic+partition in the local consumer queue. This value may be overshot by fetch.message.max.bytes. <br>*Type: integer*

fetch.wait.max.ms | C | 0 .. 300000 | 100 | Maximum time the broker may wait to fill the response with fetch.min.bytes. <br>*Type: integer*

fetch.message.max.bytes | C | 1 .. 1000000000 | 1048576 | Initial maximum number of bytes per topic+partition to request when fetching messages from the broker. If the client encounters a message larger than this value it will gradually try to increase it until the entire message can be fetched. <br>*Type: integer*

max.partition.fetch.bytes | C | | | Alias for `fetch.message.max.bytes`

fetch.min.bytes | C | 1 .. 100000000 | 1 | Minimum number of bytes the broker responds with. If fetch.wait.max.ms expires the accumulated data will be sent to the client regardless of this setting. <br>*Type: integer*

fetch.error.backoff.ms | C | 0 .. 300000 | 500 | How long to postpone the next fetch request for a topic+partition in case of a fetch error. <br>*Type: integer*

offset.store.method | C | none, file, broker | broker | Offset commit store method: 'file' - local file store (offset.store.path, et.al), 'broker' - broker commit store (requires Apache Kafka 0.8.2 or later on the broker). <br>*Type: enum value*

consume_cb | C | | | Message consume callback (set with rd_kafka_conf_set_consume_cb()) <br>*Type: pointer*

rebalance_cb | C | | | Called after consumer group has been rebalanced (set with rd_kafka_conf_set_rebalance_cb()) <br>*Type: pointer*

offset_commit_cb | C | | | Offset commit result propagation callback. (set with rd_kafka_conf_set_offset_commit_cb()) <br>*Type: pointer*

enable.partition.eof | C | true, false | true | Emit RD_KAFKA_RESP_ERR__PARTITION_EOF event whenever the consumer reaches the end of a partition. <br>*Type: boolean*

queue.buffering.max.messages | P | 1 .. 10000000 | 100000 | Maximum number of messages allowed on the producer queue. <br>*Type: integer*

queue.buffering.max.kbytes | P | 1 .. 2147483647 | 4000000 | Maximum total message size sum allowed on the producer queue. <br>*Type: integer*

queue.buffering.max.ms | P | 1 .. 900000 | 1000 | Maximum time, in milliseconds, for buffering data on the producer queue. <br>*Type: integer*

message.send.max.retries | P | 0 .. 10000000 | 2 | How many times to retry sending a failing MessageSet. **Note:** retrying may cause reordering. <br>*Type: integer*

retries | P | | | Alias for `message.send.max.retries`

retry.backoff.ms | P | 1 .. 300000 | 100 | The backoff time in milliseconds before retrying a message send. <br>*Type: integer*

compression.codec | P | none, gzip, snappy | none | compression codec to use for compressing message sets. This is the default value for all topics, may be overriden by the topic configuration property `compression.codec`. <br>*Type: enum value*

batch.num.messages | P | 1 .. 1000000 | 10000 | Maximum number of messages batched in one MessageSet. The total MessageSet size is also limited by message.max.bytes. <br>*Type: integer*

delivery.report.only.error | P | true, false | false | Only provide delivery reports for failed messages. <br>*Type: boolean*

dr_cb | P | | | Delivery report callback (set with rd_kafka_conf_set_dr_cb()) <br>*Type: pointer*

dr_msg_cb | P | | | Delivery report callback (set with rd_kafka_conf_set_dr_msg_cb()) <br>*Type: pointer*

## Topic configuration properties

Property | C/P | Range | Default | Description
-----------------------------------------|-----|-----------------|--------------:|--------------------------
request.required.acks | P | -1 .. 1000 | 1 | This field indicates how many acknowledgements the leader broker must receive from ISR brokers before responding to the request: *0*=Broker does not send any response/ack to client, *1*=Only the leader broker will need to ack the message, *-1* or *all*=broker will block until message is committed by all in sync replicas (ISRs) or broker's `in.sync.replicas` setting before sending response. <br>*Type: integer*
acks | P | | | Alias for `request.required.acks`
request.timeout.ms | P | 1 .. 900000 | 5000 | The ack timeout of the producer request in milliseconds. This value is only enforced by the broker and relies on `request.required.acks` being != 0. <br>*Type: integer*
message.timeout.ms | P | 0 .. 900000 | 300000 | Local message timeout. This value is only enforced locally and limits the time a produced message waits for successful delivery. A time of 0 is infinite. <br>*Type: integer*
produce.offset.report | P | true, false | false | Report offset of produced message back to application. The application must use the `dr_msg_cb` to retrieve the offset from `rd_kafka_message_t.offset`. <br>*Type: boolean*
partitioner_cb | P | | | Partitioner callback (set with rd_kafka_topic_conf_set_partitioner_cb()) <br>*Type: pointer*
opaque | * | | | Application opaque (set with rd_kafka_topic_conf_set_opaque()) <br>*Type: pointer*
compression.codec | P | none, gzip, snappy, inherit | inherit | Compression codec to use for compressing message sets. <br>*Type: enum value*
auto.commit.enable | C | true, false | true | If true, periodically commit offset of the last message handed to the application. This committed offset will be used when the process restarts to pick up where it left off. If false, the application will have to call `rd_kafka_offset_store()` to store an offset (optional). **NOTE:** This property should only be used with the simple legacy consumer, when using the high-level KafkaConsumer the global `enable.auto.commit` property must be used instead. **NOTE:** There is currently no zookeeper integration, offsets will be written to broker or local file according to offset.store.method. <br>*Type: boolean*
enable.auto.commit | C | | | Alias for `auto.commit.enable`
auto.commit.interval.ms | C | 10 .. 86400000 | 60000 | The frequency in milliseconds that the consumer offsets are committed (written) to offset storage. <br>*Type: integer*
auto.offset.reset | C | smallest, earliest, beginning, largest, latest, end, error | largest | Action to take when there is no initial offset in offset store or the desired offset is out of range: 'smallest','earliest' - automatically reset the offset to the smallest offset, 'largest','latest' - automatically reset the offset to the largest offset, 'error' - trigger an error which is retrieved by consuming messages and checking 'message->err'. <br>*Type: enum value*
offset.store.path | C | | . | Path to local file for storing offsets. If the path is a directory a filename will be automatically generated in that directory based on the topic and partition. <br>*Type: string*
offset.store.sync.interval.ms | C | -1 .. 86400000 | -1 | fsync() interval for the offset file, in milliseconds. Use -1 to disable syncing, and 0 for immediate sync after each write. <br>*Type: integer*
offset.store.method | C | file, broker | broker | Offset commit store method: 'file' - local file store (offset.store.path, et al.), 'broker' - broker commit store (requires "group.id" to be configured and Apache Kafka 0.8.2 or later on the broker.). <br>*Type: enum value*
consume.callback.max.messages | C | 0 .. 1000000 | 0 | Maximum number of messages to dispatch in one `rd_kafka_consume_callback*()` call (0 = unlimited) <br>*Type: integer*
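The `auto.offset.reset` row is easier to follow with a small example. The following Python sketch models the decision a consumer makes when it starts reading a partition. It is an illustration under stated assumptions, not the librdkafka code path: `resolve_start_offset`, `OffsetResetError`, and the `low`/`high` parameters (oldest available offset and next offset to be written) are hypothetical names.

```python
from typing import Optional

EARLIEST = {"smallest", "earliest", "beginning"}
LATEST = {"largest", "latest", "end"}


class OffsetResetError(Exception):
    """Raised when the policy is 'error' and no usable offset exists."""


def resolve_start_offset(stored: Optional[int], low: int, high: int,
                         policy: str = "largest") -> int:
    """Pick the offset to start consuming from.

    stored: committed offset, or None if nothing is in the offset store.
    low/high: oldest available offset and the next offset to be written.
    """
    if stored is not None and low <= stored <= high:
        return stored  # a usable committed offset always wins
    if policy in EARLIEST:
        return low
    if policy in LATEST:
        return high
    if policy == "error":
        raise OffsetResetError("no valid offset and auto.offset.reset=error")
    raise ValueError(f"unknown auto.offset.reset value: {policy!r}")
```

Note that the policy only matters when there is no stored offset or the stored offset is out of range; with the default of `largest`, a brand-new consumer skips everything already in the topic.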

### C/P legend: C = Consumer, P = Producer, * = both

Confluent stores the Avro-encoded data in binary format, so a consumer needs to decode the Avro data when it consumes messages. Consider the following:

./bin/kafka-avro-console-consumer --topic test --zookeeper localhost:2181 \
  --property print.key=true

Now, let's consider the following points when it comes to risk assessment:

1. When you connect to the database for the first time, a slot is automatically created and the snapshot data is automatically written to Kafka. This process takes a rather long time if the database is large.

2. The repeatable read transaction isolation level is used to obtain a consistent snapshot. If the database version is 9.6 and the "snapshot too old" parameter (`old_snapshot_threshold`) is configured, the snapshot copy may fail.

3. Because logical decoding is used, each table to be copied must have a primary key, or a non-null column with a unique constraint must be specified as the key for replication.

4. Insert, delete, and update operations are parsed from the write-ahead log (WAL) as follows:

insert : key + full row

delete : old.key

update : old.key + new.key + full row

If you use --allow-unkeyed to skip the primary key requirement, no data is written to Kafka when rows are deleted from the table. When rows are inserted or updated, all columns are sent to Kafka.

5. DDL actions are not delivered through logical decoding of the WAL. What can you do if you need to write DDL actions to Kafka?

You can use an event trigger to capture DDL actions as they occur and write them into a table. The DML on that table is then written to Kafka, which carries the DDL actions along with it.

6. If you remove a producer, also drop the corresponding replication slot from the database. Otherwise, PostgreSQL keeps all WAL that the slot has not yet read, and the WAL directory will eventually fill up.

7. If the REDO generated by the database is not promptly parsed and written to Kafka, the WAL files that have not yet been consumed accumulate on the database server and can even exhaust its disk space.

Therefore, be careful when using slots and ensure robust monitoring.

8. One Kafka topic is mapped to one table. The naming convention is as follows:

The name contains only three parts, [topic_prefix].[postgres_schema_name].table_name, and the database name is not included. If you have multiple databases, we recommend that you set topic_prefix to match the database name.

For each table being streamed, Bottled Water publishes messages to a corresponding Kafka topic. The naming convention for topics is [topic_prefix].[postgres_schema_name].table_name:

- table_name is the name of the table in Postgres.
- postgres_schema_name is the name of the Postgres schema the table belongs to; this is omitted if the schema is "public" (the default schema under the default Postgres configuration). N.B. this requires the avro-c library to be at least version 0.8.0.
- topic_prefix is omitted by default, but may be configured via the --topic-prefix command-line option. A prefix is useful:
  - to prevent name collisions with other topics, if the Kafka broker is also being used for other purposes besides Bottled Water.
  - if you want to stream several databases into the same broker, using a separate Bottled Water instance with a different prefix for each database.
  - to make it easier for a Kafka consumer to consume updates from all Postgres tables, by using a topic regex that matches the prefix.

For example:

- with no prefix configured, a table named "users" in the public (default) schema would be streamed to a topic named "users".
- with --topic-prefix=bottledwater, a table named "transactions" in the "point-of-sale" schema would be streamed to a topic named "bottledwater.point-of-sale.transactions".
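The naming convention above can be sketched in a few lines of Python. This is an illustrative helper only: `topic_name` is a hypothetical function, not part of Bottled Water, and it does not attempt any character sanitizing that the real tool may perform.

```python
from typing import Optional


def topic_name(table: str, schema: str = "public", prefix: Optional[str] = None) -> str:
    """Build [topic_prefix].[postgres_schema_name].table_name, dropping omitted parts."""
    parts = []
    if prefix:
        parts.append(prefix)
    if schema != "public":  # the default schema is left out of the topic name
        parts.append(schema)
    parts.append(table)
    return ".".join(parts)


print(topic_name("users"))
print(topic_name("transactions", schema="point-of-sale", prefix="bottledwater"))
```

The two calls reproduce the examples from the text: the first prints `users`, the second prints `bottledwater.point-of-sale.transactions`.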

(Support for namespaces in Kafka has been proposed that would replace this sort of ad-hoc prefixing, but it's still under discussion.)
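Returning to point 4 of the risk list, the insert, delete, and update shapes can be illustrated with a small Python sketch of a consumer that keeps an in-memory copy of one table. The function name `apply_change` and its parameters are hypothetical, and the sketch assumes the messages have already been decoded from Avro into plain Python values.

```python
from typing import Any, Dict, Optional

Row = Dict[str, Any]


def apply_change(table: Dict[Any, Row], op: str, old_key: Optional[Any] = None,
                 new_key: Optional[Any] = None, row: Optional[Row] = None) -> None:
    """Apply one decoded change to an in-memory copy of the table, keyed by primary key."""
    if op == "insert":        # key + full row
        table[new_key] = row
    elif op == "delete":      # old.key only
        table.pop(old_key, None)
    elif op == "update":      # old.key + new.key + full row
        if old_key != new_key:
            table.pop(old_key, None)  # the primary key itself changed
        table[new_key] = row
    else:
        raise ValueError(f"unknown operation: {op!r}")
```

Because a delete carries only the old key, a table streamed with --allow-unkeyed gives the consumer nothing to remove, which is why deletes are not written to Kafka in that mode.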
