By SysOM SIG

BPF is a dynamic tracing technology that is deeply affecting both production systems and daily life. It plays an important role in four major application scenarios.

BPF has evolved together with the Linux kernel, which has itself moved from 3.x through 4.x to 5.x. eBPF has been broadly supported since 4.x, and the 5.x kernels add many advanced features. However, many cloud servers still run 3.10 kernels, which do not support eBPF. We have backported BPF so that our eBPF tools can run on these existing machines and their older kernels. In addition, building on the CO-RE capability of libbpf, we ensure that a single tool can run on low, middle, and high kernel versions (3.x, 4.x, and 5.x).

There are many ways to develop BPF. Here are some popular ways:

1) Pure Libbpf Application Development: Load the BPF programs into the kernel with the help of the libbpf library. This approach is inefficient because there is no basic library encapsulation, so developers must work out all the necessary steps and basic functions themselves.

2) With the Help of BCC and Other Open-Source Projects: BCC supports dynamically modifying parts of the kernel-side code, which gives high development efficiency, good portability, and flexibility. However, deployment depends on libraries such as Clang/LLVM, and a Clang/LLVM compilation must run every time a tool starts. This consumes considerable CPU, memory, and other resources, so the tool can easily compete for resources with other services.

The coolbpf project is built on Compile Once-Run Everywhere (CO-RE), so it retains the advantages of low resource usage and strong portability. It also incorporates the dynamic compilation of BCC, which makes it suitable for batch deployment of finished applications in production environments. Coolbpf introduces a new idea: remote compilation. The user's BPF program is pushed to a remote server, which compiles it and returns the resulting .o or .so file. High-level languages (such as Python, Rust, Go, and C) can then load that file and run it safely across the full range of kernel versions. Users only need to focus on developing their own functionality. They do not need to care about installing underlying libraries (such as LLVM and Python) or setting up an environment, which offers BPF enthusiasts a new approach to explore and practice.

BPF started with socket filters, attached through the traditional setsockopt method, for packet filtering. Today, monitoring and diagnosis functions are developed with libbpf CO-RE. This shift was made possible by the stronger instruction set of eBPF and its hardware support, and by the open-sourcing of the libbpf library. Let's first review how BPF development has evolved. We then introduce coolbpf, which builds on these earlier results and uses remote compilation to optimize resource usage, simplify programming, and improve efficiency.

When BPF was still known as cBPF, the raw BPF code was loaded into the kernel as a socket filter through setsockopt, and packets were filtered by calling run_filter in packet_rcv. Generating BPF bytecode this way is primitive and painful, much like writing assembly by hand:

static struct sock_filter filter[6] = {
    { OP_LDH, 0, 0, 12 },          // ldh [12]
    { OP_JEQ, 0, 2, ETH_P_IP },    // jeq #0x800, L2, L4
    { OP_LDB, 0, 0, 23 },          // ldb [23]
    { OP_JEQ, 0, 1, IPPROTO_TCP }, // jeq #0x6, L4, L5
    { OP_RET, 0, 0, 0 },           // ret #0x0
    { OP_RET, 0, 0, -1 },          // ret #0xffffffff
};

int main(int argc, char **argv)
{
    …
    struct sock_fprog prog = { 6, filter };
    …
    sock = socket(AF_PACKET, SOCK_RAW, htons(ETH_P_ALL));
    …
    if (setsockopt(sock, SOL_SOCKET, SO_ATTACH_FILTER, &prog, sizeof(prog))) {
        return 1;
    }
    …
}

Examples of the next approach are sockex1_kern.c and sockex1_user.c under samples/bpf. The code is divided into two parts, usually named xxx_kern.c and xxx_user.c. The former is loaded into kernel space, and the latter runs in user space. After the BPF program is written, it is compiled with Clang/LLVM, and the generated xxx_kern.o file is explicitly loaded in xxx_user.c. Although the compiler now generates the BPF bytecode automatically, the code organization and the BPF loading method remain relatively conservative, and users still need to write a lot of repetitive code.
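To make the hand-written cBPF filter shown earlier more concrete, the following is a minimal Python sketch that interprets those six instructions in user space. It is only a simulation, not how the kernel implements filtering: the opcode names are plain strings standing in for the OP_* macros, and `run_filter` and `make_frame` are hypothetical helpers written for this illustration. It assumes the usual cBPF rule that a conditional jump continues at the next instruction plus `jt` or `jf`, and that a load beyond the packet ends the filter with 0.

```python
import struct

ETH_P_IP = 0x0800
IPPROTO_TCP = 6
IPPROTO_UDP = 17

# (opcode, jt, jf, k) tuples mirroring the six sock_filter entries.
FILTER = [
    ("ldh", 0, 0, 12),           # load the 16-bit EtherType at offset 12
    ("jeq", 0, 2, ETH_P_IP),     # IPv4? fall through, else skip 2
    ("ldb", 0, 0, 23),           # load the IPv4 protocol byte at offset 23
    ("jeq", 0, 1, IPPROTO_TCP),  # TCP? fall through, else skip 1
    ("ret", 0, 0, 0),            # return 0: drop the packet
    ("ret", 0, 0, 0xFFFFFFFF),   # return 0xffffffff: keep the whole packet
]


def run_filter(prog, pkt):
    """Interpret a tiny cBPF subset and return the filter's verdict."""
    acc = 0
    pc = 0
    while pc < len(prog):
        op, jt, jf, k = prog[pc]
        pc += 1
        if op == "ldh":
            if k + 2 > len(pkt):
                return 0  # out-of-bounds load rejects the packet
            acc = struct.unpack_from("!H", pkt, k)[0]
        elif op == "ldb":
            if k + 1 > len(pkt):
                return 0
            acc = pkt[k]
        elif op == "jeq":
            pc += jt if acc == k else jf
        elif op == "ret":
            return k
        else:
            raise ValueError("unsupported opcode: %s" % op)
    return 0


def make_frame(ethertype, ip_proto):
    """Build a zeroed 14-byte Ethernet + 20-byte IPv4 frame for testing."""
    frame = bytearray(34)
    struct.pack_into("!H", frame, 12, ethertype)
    frame[23] = ip_proto
    return bytes(frame)
```

Executed as written, the six instructions keep IPv4 packets whose protocol is not TCP and drop everything else, which shows how easy it is to misread hand-assembled jump offsets.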

/* xxx_kern.c: loaded into kernel space */
struct {
    __uint(type, BPF_MAP_TYPE_ARRAY);
    __type(key, u32);
    __type(value, long);
    __uint(max_entries, 256);
} my_map SEC(".maps");

SEC("socket1")
int bpf_prog1(struct __sk_buff *skb)
{
    int index = load_byte(skb, ETH_HLEN + offsetof(struct iphdr, protocol));
    long *value;

    if (skb->pkt_type != PACKET_OUTGOING)
        return 0;

    value = bpf_map_lookup_elem(&my_map, &index);
    if (value)
        __sync_fetch_and_add(value, skb->len);

    return 0;
}

char _license[] SEC("license") = "GPL";

/* xxx_user.c: runs in user space and loads the compiled object */
int main(int ac, char **argv)
{
    struct bpf_object *obj;
    struct bpf_program *prog;
    int map_fd, prog_fd;
    char filename[256];
    int i, sock, err;
    FILE *f;

    snprintf(filename, sizeof(filename), "%s_kern.o", argv[0]);

    obj = bpf_object__open_file(filename, NULL);
    if (libbpf_get_error(obj))
        return 1;

    prog = bpf_object__next_program(obj, NULL);
    bpf_program__set_type(prog, BPF_PROG_TYPE_SOCKET_FILTER);

    err = bpf_object__load(obj);
    if (err)
        return 1;

    prog_fd = bpf_program__fd(prog);
    map_fd = bpf_object__find_map_fd_by_name(obj, "my_map");
    ...
}

The emergence of BCC broke with this conservative development method. Its runtime compilation and basic library encapsulation greatly reduce the difficulty of development, which made it popular and drove its rapid growth. Users only need to attach a prog from a Python program and then analyze and process the data. The disadvantage is that Clang and the Python libraries must be installed in the production environment. In addition, CPU usage may spike while the tool starts and compiles, and this disturbance can mean that the problem under investigation no longer reproduces once the BPF program is loaded.
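Because BCC compiles the C text at run time, a tool can tailor that text before handing it to the compiler. The `##FILTER_PID##` and `##FILTER_FAMILY##` markers in the listing that follows are placeholders of this kind. The sketch below shows the idea with plain string replacement; the `specialize` helper and the exact C snippets substituted in are assumptions made for illustration, not necessarily what any particular BCC tool generates.

```python
# A shortened stand-in for the embedded BPF C text of a BCC tool.
BPF_TEXT = r"""
int trace_connect_v4_entry(struct pt_regs *ctx, struct sock *sk)
{
    u64 pid = bpf_get_current_pid_tgid();
    ##FILTER_PID##
    u16 family = sk->__sk_common.skc_family;
    ##FILTER_FAMILY##
    return 0;
}
"""


def specialize(text, pid=None, family=None):
    """Replace the placeholders with filter code, or with nothing if unused."""
    pid_filter = ""
    if pid is not None:
        # The upper 32 bits of bpf_get_current_pid_tgid() hold the process ID.
        pid_filter = "if (pid >> 32 != %d) { return 0; }" % pid
    family_filter = ""
    if family is not None:
        family_filter = "if (family != %s) { return 0; }" % family
    text = text.replace("##FILTER_PID##", pid_filter)
    return text.replace("##FILTER_FAMILY##", family_filter)
```

The specialized text would then be passed to `BPF(text=...)`, which is where the Clang/LLVM cost described above is paid on every start.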

int trace_connect_v4_entry(struct pt_regs *ctx, struct sock *sk)
{
    if (container_should_be_filtered()) {
        return 0;
    }

    u64 pid = bpf_get_current_pid_tgid();
    ##FILTER_PID##

    u16 family = sk->__sk_common.skc_family;
    ##FILTER_FAMILY##

    // stash the sock ptr for lookup on return
    connectsock.update(&pid, &sk);

    return 0;
}

# initialize BPF
b = BPF(text=bpf_text)
if args.ipv4:
    b.attach_kprobe(event="tcp_v4_connect", fn_name="trace_connect_v4_entry")
    b.attach_kretprobe(event="tcp_v4_connect", fn_name="trace_connect_v4_return")
b.attach_kprobe(event="tcp_close", fn_name="trace_close_entry")
b.attach_kretprobe(event="inet_csk_accept", fn_name="trace_accept_return")

BCC became very popular and attracted many developers. Over time, however, requirements changed. Libbpf was created, and the CO-RE idea took hold. BCC has been changing as well and has begun to support BTF-based relocation, in the hope that the same program can run smoothly on any Linux system. However, a structure may differ between kernel versions: a member may be renamed, or its meaning may change (for example, from microseconds to milliseconds), and the program must handle these cases explicitly. In addition, on 4.x and other mid-range kernels, standalone BTF files need to be generated from debuginfo, which can be quite complicated.
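The relocation idea can be illustrated without a kernel. In the sketch below, a program records which struct fields it reads by name, and a loader resolves those names to byte offsets using a per-kernel type description, which is the role BTF plays for CO-RE. The two layouts, the struct and field names, and the helper functions are all invented for this illustration; real relocation is performed by libbpf against actual BTF data.

```python
import struct

# Hypothetical layouts of the same struct on two kernel versions:
# field name -> (byte offset, struct-module format code).
LAYOUT_KERNEL_A = {"task": {"pid": (0, "i"), "tgid": (4, "i")}}
LAYOUT_KERNEL_B = {"task": {"flags": (0, "Q"), "pid": (8, "i"), "tgid": (12, "i")}}

# The field accesses the "program" performs, recorded by name only.
ACCESSES = [("task", "tgid")]


def field_exists(layout, struct_name, field):
    """Report whether this kernel's layout has the field at all."""
    return field in layout.get(struct_name, {})


def relocate(accesses, layout):
    """Resolve each (struct, field) name to an (offset, format) pair."""
    resolved = {}
    for struct_name, field in accesses:
        if not field_exists(layout, struct_name, field):
            raise LookupError("%s.%s is not present on this kernel"
                              % (struct_name, field))
        resolved[(struct_name, field)] = layout[struct_name][field]
    return resolved


def read_field(blob, relocations, struct_name, field):
    """Read a field from raw struct bytes using the resolved offset."""
    offset, fmt = relocations[(struct_name, field)]
    return struct.unpack_from("<" + fmt, blob, offset)[0]
```

The same `ACCESSES` list works against both layouts even though `tgid` sits at a different offset in each. A renamed member or a changed unit is a different matter: the name lookup fails or silently returns a value with a new meaning, which is why the program itself has to branch on such cases (libbpf offers `bpf_core_field_exists()` for the existence check).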

SEC("kprobe/inet_listen")
int BPF_KPROBE(inet_listen_entry, struct socket *sock, int backlog)
{
    __u64 pid_tgid = bpf_get_current_pid_tgid();
    __u32 pid = pid_tgid >> 32;
    __u32 tid = (__u32)pid_tgid;
    struct event event = {};

    if (target_pid && target_pid != pid)
        return 0;

    fill_event(&event, sock);
    event.pid = pid;
    event.backlog = backlog;
    bpf_map_update_elem(&values, &tid, &event, BPF_ANY);
    return 0;
}

#include "solisten.skel.h"
...
int main(int argc, char **argv)
{
    ...
    libbpf_set_strict_mode(LIBBPF_STRICT_ALL);
    libbpf_set_print(libbpf_print_fn);

    obj = solisten_bpf__open();
    obj->rodata->target_pid = target_pid;
    err = solisten_bpf__load(obj);
    err = solisten_bpf__attach(obj);

    pb = perf_buffer__new(bpf_map__fd(obj->maps.events), PERF_BUFFER_PAGES,
                          handle_event, handle_lost_events, NULL, NULL);
    ...
}

BCC also supports CO-RE, but code written this way is still relatively fixed and cannot be configured dynamically, and a build project still has to be set up. Coolbpf instead places the compilation resources on a single server and offers remote compilation as a capability that everyone can share. Only the bpf.c file needs to be pushed to the remote server, which quickly compiles it and returns the .o and .so files. Whether users work in Python, Go, Rust, or C, they only need to load the .o or .so file to attach the BPF program to its kernel hook points during init. They can then focus on processing the information output by the BPF program as they develop their functionality.

Coolbpf integrates BTF production, code compilation, data processing, and functional testing, which improves production efficiency and makes BPF development enter a more elegant realm.

First, install coolbpf on the local computer. The command it provides sends xx.bpf.c to the compile server for compilation.

pip install coolbpf

...

import time
from pylcc.lbcBase import ClbcBase

bpfPog = r"""
#include "lbc.h"

SEC("kprobe/wake_up_new_task")
int j_wake_up_new_task(struct pt_regs *ctx)
{
    struct task_struct* parent = (struct task_struct *)PT_REGS_PARM1(ctx);

    bpf_printk("hello lcc, parent: %d\n", _(parent->tgid));
    return 0;
}

char _license[] SEC("license") = "GPL";
"""

class Chello(ClbcBase):
    def __init__(self):
        super(Chello, self).__init__("hello", bpf_str=bpfPog)
        while True:
            time.sleep(1)

if __name__ == "__main__":
    hello = Chello()
    pass
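The hello program above only sleeps in user space, so its `bpf_printk` output has to be read from the kernel trace buffer, typically `/sys/kernel/debug/tracing/trace_pipe`. The following sketch, meant to run in a separate process, extracts the parent tgid from such lines. It assumes root privileges and a mounted debugfs, and it matches only on the message text because the prefix of each trace line varies between kernel versions. The helper names and the sample lines are made up for illustration and are not part of pylcc.

```python
import re

# Matches the text produced by the bpf_printk() call in the hello program.
PARENT_RE = re.compile(r"hello lcc, parent: (-?\d+)")

# Illustrative lines only; the real prefix format depends on the kernel.
SAMPLE_LINES = [
    "   bash-1234  [002] d..3  5678.123456: bpf_trace_printk: hello lcc, parent: 1234",
    "   some unrelated trace line",
    "   systemd-1  [000] d..3  5679.000001: bpf_trace_printk: hello lcc, parent: 1",
]


def parent_tgids(lines):
    """Yield the parent tgid from every line that carries the hello message."""
    for line in lines:
        match = PARENT_RE.search(line)
        if match:
            yield int(match.group(1))


def follow_trace_pipe(path="/sys/kernel/debug/tracing/trace_pipe"):
    """Block on trace_pipe and print one line per observed task creation."""
    with open(path, "r", errors="replace") as pipe:
        for tgid in parent_tgids(pipe):
            print("new task created by tgid", tgid)
```

Calling `follow_trace_pipe()` while the hello program is running prints one line for each `wake_up_new_task` event, until it is interrupted.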

The previous sections reviewed how BPF development has evolved. Coolbpf further streamlines development and compilation with the help of remote compilation. It currently provides the following six functions:

1) Local Compilation Service, Basic Library Encapsulation: The client uses the local container image to compile the program and calls the encapsulated general function library to simplify program writing and data processing.

The local compilation service packages the common libraries and tools into a container image, and compilation runs directly inside the container. We use the following image for compilation. You can also use Docker to build your own container image.

Container image:

registry.cn-hangzhou.aliyuncs.com/alinux/coolbpf:latest

Users can pull this image for local compilation. It already includes commonly used libraries and tools, which removes the complexity of setting up a build environment.

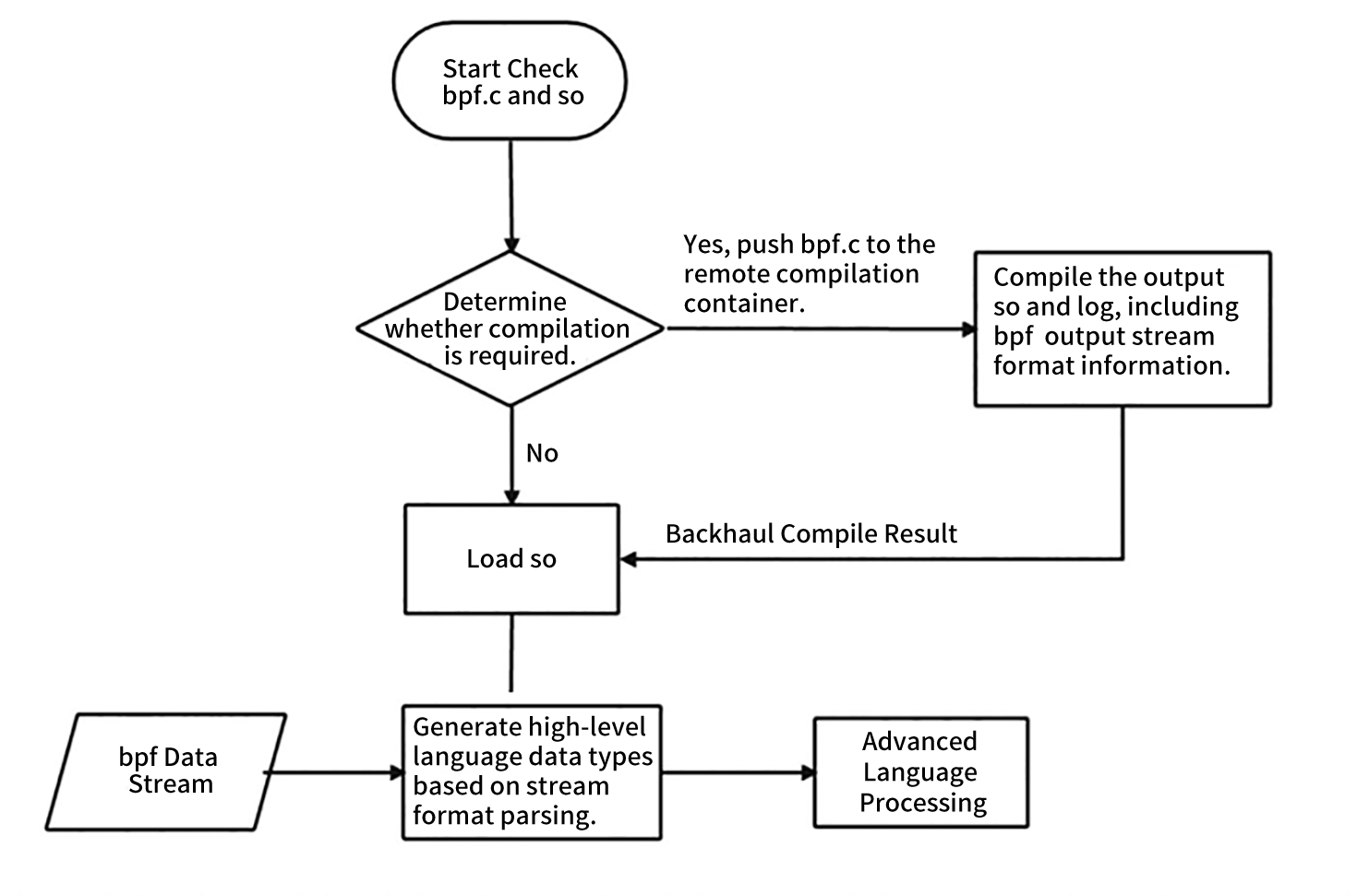

2) Remote Compilation Service: It receives bpf.c, generates bpf.so or bpf.o, and provides them to high-level languages for loading. Users only need to focus on their own functional development and do not need to care about installing underlying libraries or setting up an environment.

Currently, users only need to run pip install coolbpf when developing code, and the program is automatically sent to our compile server for compilation. You can also refer to this link to build your own compile server. (We will open source the source code of this compile server later.) The process may be complicated. A server built this way can be used personally or provided by a company for everyone to share.

3) Backporting Higher-Version Features to Lower Versions through a Kernel Module: Examples include the ring buffer feature and the backport of BPF itself to the 3.10 kernel.

Many servers still run a 3.10 kernel. To make the same BPF program run on these older kernels without modifying its code, and to keep maintenance simple, the only option is to install a kernel module (ko) that provides BPF support, so that older kernels can also enjoy the benefits of BPF.

4) Automatic BTF Generation and a Crawler for the Latest Kernel Versions: It automatically discovers the latest kernel versions of distributions (such as CentOS, Ubuntu, and Anolis) and generates the corresponding BTF files.

Without BTF, the compile-once, run-everywhere capability of CO-RE is not achievable. Coolbpf not only provides a tool for generating BTF but also automatically discovers the latest kernel versions and generates their BTF files for everyone to download and use.

5) Automated Functional Testing on Each Kernel Version: After a tool is written, it is automatically installed and tested, so that its functions are verified before it runs in the production environment.

BPF programs and tools that have not been tested before release are risky. Coolbpf provides an automated testing process that runs basic functional tests in most kernel environments in advance, to ensure that the tool does not cause major problems when it runs in production.

6) Support for High-Level Languages (such as Python, Rust, Go, and C).

Currently, the coolbpf project supports user-space program development in Python, Rust, Go, and C, so developers can work in the language they know best.
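Relating to item 4 above, a loader has to decide where the BTF for the running kernel comes from. Kernels built with CONFIG_DEBUG_INFO_BTF expose it at `/sys/kernel/btf/vmlinux`; older kernels need a separately generated file. The sketch below shows one possible lookup order. The `find_btf` helper and the `vmlinux-<release>` file naming inside `btf_dir` are assumptions made for this example, not a documented coolbpf interface.

```python
import os


def find_btf(kernel_release, btf_dir, sysfs_btf="/sys/kernel/btf/vmlinux"):
    """Return a path to BTF for the given kernel, or None if none is found.

    Prefers the BTF the kernel exposes itself, then falls back to a
    pre-generated file named vmlinux-<release> (hypothetical layout).
    """
    if os.path.isfile(sysfs_btf):
        return sysfs_btf
    candidate = os.path.join(btf_dir, "vmlinux-" + kernel_release)
    if os.path.isfile(candidate):
        return candidate
    return None
```

A caller would typically pass `platform.release()` as `kernel_release` and treat a `None` result as "download or generate BTF for this kernel first".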

In short, coolbpf allows both the BPF program and the application program to be developed in a closed loop on a single platform. It effectively improves productivity and covers the mainstream development languages. It is suitable both for BPF enthusiasts who want to learn and for system operation and maintenance personnel who need to develop monitoring and diagnostic programs efficiently.

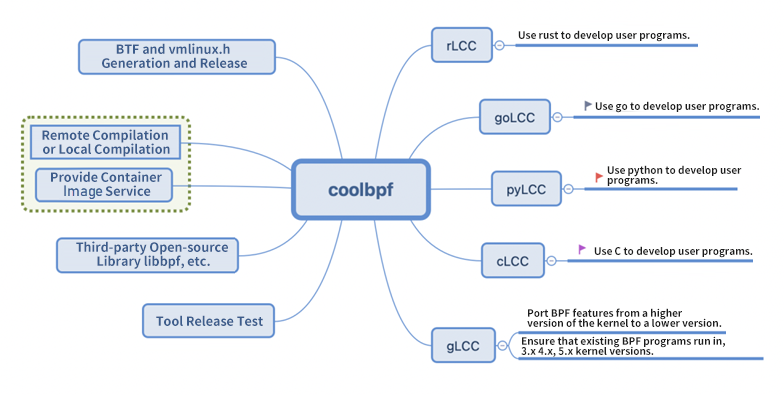

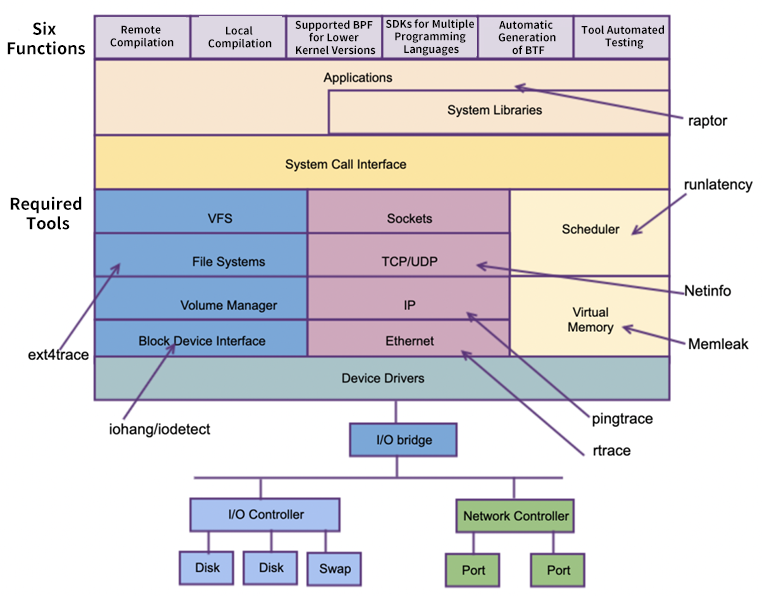

The following figure shows the features and tool support of coolbpf. More excellent BPF tools are welcome to join:

Coolbpf currently includes the pylcc, rlcc, golcc, clcc, and glcc subdirectories. The high-level languages Python, Rust, and Go support both remote and local compilation. Glcc (G stands for generic) ports the BPF features of higher kernel versions to lower versions by means of a kernel module, so that BPF programs can run on those lower versions. Let's briefly introduce how they are used.

Pylcc is a wrapper built on libbpf, and the complex compilation work is handed over to containers.

The code is concise and can be completed in only three steps. The key points of pylcc are listed below:

1) Install with pip install coolbpf.

2) Write xx.bpf.c:

bpfPog = r"""
#include "lbc.h"
LBC_PERF_OUTPUT(e_out, struct data_t, 128);
LBC_HASH(pid_cnt, u32, u32, 1024);
LBC_STACK(call_stack,32);

3) Write xx.py to run the program. Users can then focus on analyzing the data received from the kernel:

import time
from pylcc.lbcBase import ClbcBase

class Pingtrace(ClbcBase):
    def __init__(self):
        super(Pingtrace, self).__init__("pingtrace")

bpf.c must explicitly include lbc.h. This header tells the remote server how to process the file, and it does not need to exist locally. Its content is:

#include "vmlinux.h"
#include <linux/types.h>
#include <bpf/bpf_helpers.h>
#include <bpf/bpf_core_read.h>
#include <bpf/bpf_tracing.h>

The Rust language supports both remote and local compilation. After the coolbpf command in the makefile sends bpf.c to the server, the server returns a .o file. This differs from Python and C, for which a .so file is returned. Rust handles the common load and attach steps by itself.

Compiling example process:

SKEL_RS=1 cargo build --release generates rust skel files;

SKEL_RS=0 cargo build --release does not need to generate rust skel files;

The default SKEL_RS is 1.

Compiling rexample process:

rexample uses the remote compilation function. The specific compilation process is as follows:

Run the command mkdir build & cd build to create a compilation directory;

Run the command cmake .. to generate Makefile files;

Run the command make rexample;

Run the example program: ../lcc/rlcc/rexample/target/release/rexample.fn main() -> Result<()>{

let opts = Command::from_args();

let mut skel_builder = ExampleSkelBuilder::default();

if opts.verbose {

skel_builder.obj_builder.debug(true);

}

bump_memlock_rlimit()?;

let mut open_skel = skel_builder.open()?;

let mut skel = open_skel.load()?;

skel.attach()?;

let perf = PerfBufferBuilder::new(skel.maps_mut().events())

.sample_cb(handle_event)

.lost_cb(handle_lost_events)

.build()?;

loop {

perf.poll(Duration::from_millis(100))?;

}

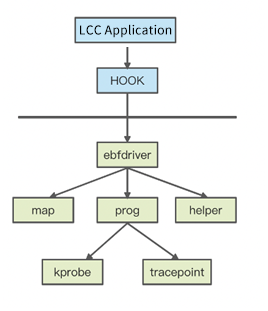

}To this end, we propose a method to run eBPF programs on a lower version of the kernel, so the binary program can run on a kernel that does not support BPF without any modification.

The following is an architectural overview of how BPF can run on a lower-version kernel.

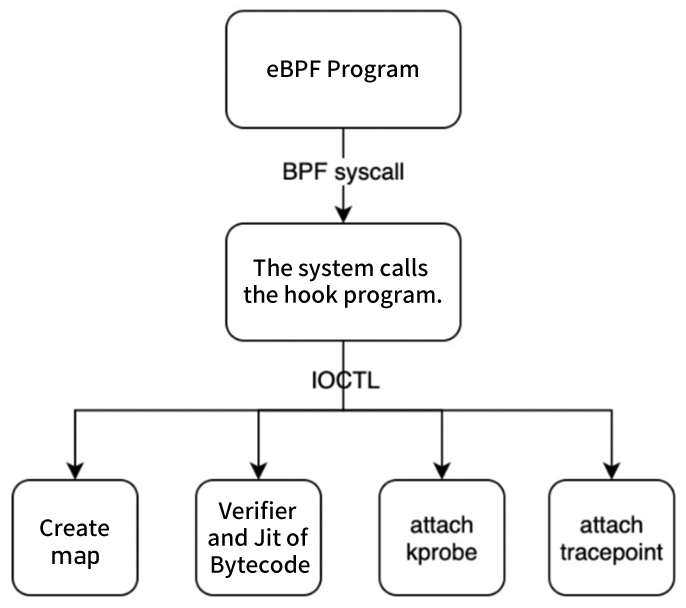

Hook is a dynamic library. Since lower-version kernels do not support the bpf() system call, the map creation, prog creation, and many helper functions (such as bpf_map_update_elem) that user space normally relies on cannot run. Hook provides a dynamic mechanism that converts these system calls into ioctl commands and sends them to a kernel module called ebpfdriver, which creates data structures that simulate maps and progs. The module also registers handlers for both kprobes and tracepoints, so that when data arrives, the registered kprobe and tracepoint callbacks are invoked.

The operation mechanism is shown in the following figure:

The Hook program converts BPF system calls into ioctl calls and passes the system call parameters to the eBPF driver. It defines the following commands:

#define IOCTL_BPF_MAP_CREATE _IOW(';', 0, union bpf_attr *)
#define IOCTL_BPF_MAP_LOOKUP_ELEM _IOWR(';', 1, union bpf_attr *)
#define IOCTL_BPF_MAP_UPDATE_ELEM _IOW(';', 2, union bpf_attr *)
#define IOCTL_BPF_MAP_DELETE_ELEM _IOW(';', 3, union bpf_attr *)
#define IOCTL_BPF_MAP_GET_NEXT_KEY _IOW(';', 4, union bpf_attr *)
#define IOCTL_BPF_PROG_LOAD _IOW(';', 5, union bpf_attr *)
#define IOCTL_BPF_PROG_ATTACH _IOW(';', 6, __u32)
#define IOCTL_BPF_PROG_FUNCNAME _IOW(';', 7, char *)
#define IOCTL_BPF_OBJ_GET_INFO_BY_FD _IOWR(';', 8, union bpf_attr *)

The eBPF driver receives an ioctl request and performs the corresponding operation based on cmd. For example:

A. IOCTL_BPF_MAP_CREATE: Create a map

B. IOCTL_BPF_PROG_LOAD: Load the eBPF bytecode, verify the bytecode for safety, and JIT-compile it into machine code

C. IOCTL_BPF_PROG_ATTACH: Attach the eBPF program to a specified kernel function by using the register_kprobe and tracepoint_probe_register functions
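The command numbers and the dispatch on cmd can be sketched in user space. The code below encodes a few of the commands above with the generic Linux ioctl layout (direction in bits 30-31, argument size in bits 16-29, type in bits 8-15, number in bits 0-7), as used on x86 and arm64, and assumes 64-bit pointers. `FakeDriver` is a purely hypothetical stand-in that only records requests; it says nothing about how the real ebpfdriver module is implemented.

```python
import errno

_IOC_WRITE = 1
_IOC_READ = 2


def _ioc(direction, type_char, nr, size):
    """Encode an ioctl number using the asm-generic bit layout."""
    return (direction << 30) | (size << 16) | (ord(type_char) << 8) | nr


def iow(type_char, nr, size):
    return _ioc(_IOC_WRITE, type_char, nr, size)


def iowr(type_char, nr, size):
    return _ioc(_IOC_READ | _IOC_WRITE, type_char, nr, size)


POINTER_SIZE = 8  # sizeof(union bpf_attr *) on a 64-bit system (assumption)
U32_SIZE = 4      # sizeof(__u32)

IOCTL_BPF_MAP_CREATE = iow(";", 0, POINTER_SIZE)
IOCTL_BPF_MAP_LOOKUP_ELEM = iowr(";", 1, POINTER_SIZE)
IOCTL_BPF_PROG_LOAD = iow(";", 5, POINTER_SIZE)
IOCTL_BPF_PROG_ATTACH = iow(";", 6, U32_SIZE)


class FakeDriver:
    """Hypothetical in-process model of a driver dispatching on cmd."""

    def __init__(self):
        self.maps = []
        self.progs = []
        self.attached = []
        self._handlers = {
            IOCTL_BPF_MAP_CREATE: self._map_create,
            IOCTL_BPF_PROG_LOAD: self._prog_load,
            IOCTL_BPF_PROG_ATTACH: self._prog_attach,
        }

    def ioctl(self, cmd, arg):
        handler = self._handlers.get(cmd)
        if handler is None:
            raise OSError(errno.ENOTTY, "unsupported ioctl command")
        return handler(arg)

    def _map_create(self, attr):
        self.maps.append(dict(attr))
        return len(self.maps) - 1  # index used as a stand-in for a map fd

    def _prog_load(self, attr):
        # A real driver would verify and JIT-compile the bytecode here.
        self.progs.append(dict(attr))
        return len(self.progs) - 1

    def _prog_attach(self, prog_id):
        if not 0 <= prog_id < len(self.progs):
            raise OSError(errno.EBADF, "unknown program")
        self.attached.append(prog_id)
        return 0
```

With these assumptions, `IOCTL_BPF_MAP_CREATE` evaluates to `0x40083B00` and `IOCTL_BPF_PROG_ATTACH` to `0x40043B06`; on a 32-bit build or an architecture with a different ioctl layout the values would differ.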

In addition, some higher-version features (such as the ring buffer) can also be used on lower versions through a kernel module (ko) and other methods. Please see the coolbpf GitHub link (at the end of this article) for more information about how to use clcc and golcc.

Coolbpf currently provides the six functions described above. Its purpose is to simplify development and compilation, let users focus on their own functionality, and enable BPF enthusiasts to get started and write their programs quickly without worrying about environment issues. We are now open-sourcing this system so that more people can improve their productivity, participate in building the project, and work together to advance the technology.

Our remote compiler addresses productivity. BPF support for lower kernel versions addresses a problem that troubles many developers: how to run the same binary unchanged across multiple kernel versions. We also hope that more people will join in improving the project, so that the cloud computing industry and enterprise services can fully enjoy the benefits of BPF technology.

The OpenAnolis system operation and maintenance SIG is committed to building an automated operation and maintenance platform that integrates functions such as host management, configuration and deployment, monitoring and alerting, exception diagnosis, and security audit. Coolbpf is a sub-project of the community. Its goal is to provide a compilation and development platform that solves the problems of running BPF on different system platforms and of improving production efficiency.

More developers are welcome to join the SysOM SIG:
