By Pitian

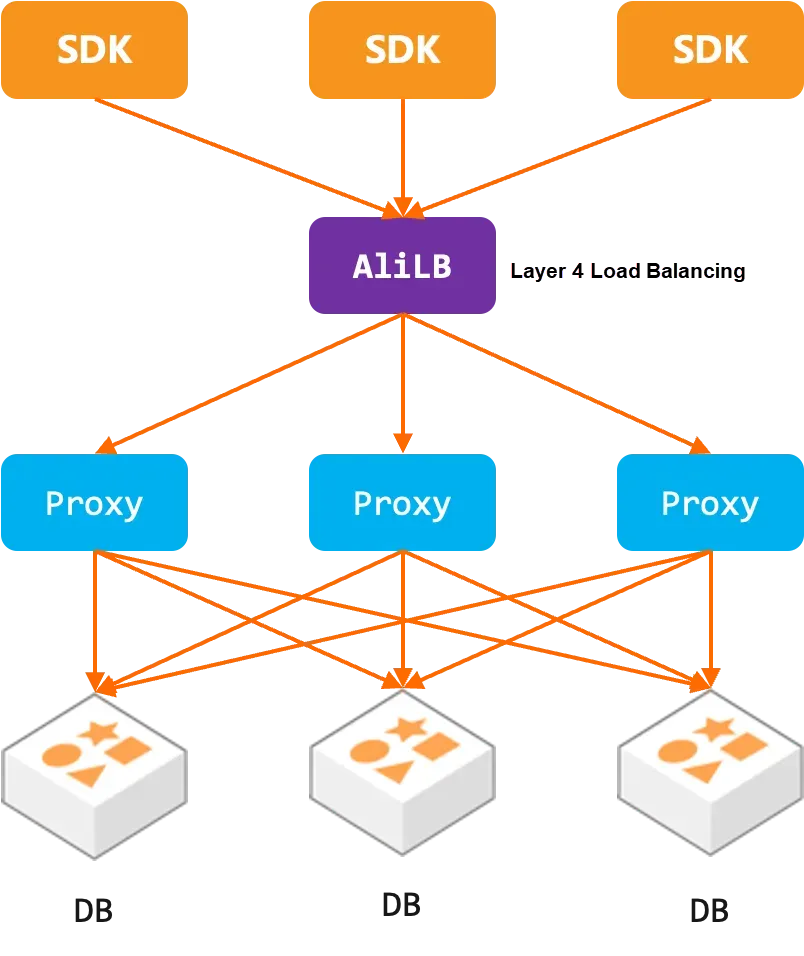

The preceding figure shows the traffic model of the Tair (Redis®* OSS-Compatible) cluster edition. Clients access AliLB using domain names. AliLB is a layer 4 load balancer that evenly distributes connections to backend proxies. Ideally, the number of client connections processed by each proxy should be similar.

If the load across proxies is uneven, one proxy's CPU can be nearly maxed out while the other proxies remain underutilized. As a result, the throughput that users actually get is far below the cluster's capacity limit. In addition, the response time of requests to the heavily loaded proxy starts to increase, which hurts the business.

There are typically three known causes of uneven load on the proxies.

• In the early days, AliLB used weighted round robin (WRR) as its scheduling algorithm. When the number of back-end proxies increased or decreased, the algorithm itself caused uneven connection scheduling. This problem no longer occurs now that WRR has been replaced with the round-robin (RR) algorithm.

• Some proxies are restarted. With the RR algorithm, AliLB schedules connections in strict rotation and does not consider how many connections each back-end proxy already holds. When a proxy restarts, its connection count drops to 0. It then receives new connections at the same rate as the other proxies, so its total number of connections stays lower than theirs. However, unplanned proxy restarts are relatively rare, and in practice this situation has not caused problems.

• An application may use pipelining or asynchronous calls to send a large number of requests over a single connection, which puts a high load on the proxy that handles that connection. This can only be addressed by changing the application's access code so that requests are spread across more connections.
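The restart case in the second bullet can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not AliLB's implementation: the class `RoundRobinRestartDemo`, the four proxies, and the connection counts are all hypothetical. It shows that strict rotation gives every proxy the same share of new connections, so a restarted proxy does not catch up with the others.

```java
import java.util.Arrays;

class RoundRobinRestartDemo {
    /** Assigns newConnections to the proxies in strict rotation and returns the updated totals. */
    static int[] scheduleRoundRobin(int[] current, int newConnections) {
        int[] totals = Arrays.copyOf(current, current.length);
        for (int i = 0; i < newConnections; i++) {
            totals[i % totals.length]++; // existing connection counts are never consulted
        }
        return totals;
    }

    public static void main(String[] args) {
        int[] connections = {100, 100, 100, 100}; // hypothetical steady state
        connections[0] = 0;                       // proxy 0 restarts and loses its connections
        int[] after = scheduleRoundRobin(connections, 40);
        System.out.println(Arrays.toString(after)); // prints [10, 110, 110, 110]
    }
}
```

With these made-up numbers, the restarted proxy ends up with 10 connections while each of the others holds 110.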

In addition to the known causes mentioned above, there is also a connection imbalance issue that has been troubling us for 1-2 years.

The symptoms of this issue are as follows: at some point, the response time (RT) of one proxy increases, either because the machine hosting it fails or because of a sudden traffic spike. From that point on, the number of connections on that proxy gradually increases, which leads to a higher load and an even higher RT, resulting in an avalanche effect.

The proxy passively accepts new connections, so if it has more connections than other proxies, it is definitely receiving a disproportionate number of new connections. Therefore, the initial suspicion for this issue is that the AliLB is not scheduling connections evenly.

However, in principle, a problematic proxy is more likely to fail AliLB's keepalive (health check) probes, so it should theoretically be assigned fewer new connections, not more. This contradicts what we observed. Colleagues on the ALB team analyzed the back-end logs and found no uneven connection scheduling. At that time, the proxy's monitoring information did not include the total number of connections, which blocked the troubleshooting process. The only option was to add logs and continue monitoring.

Later, when the issue occurred for the second time, the proxy logs showed that the number of new connections on each proxy during the problem period was indeed similar. Therefore, the only reason for the connection imbalance could be that the number of disconnections on the problematic proxy had decreased.

However, during the problem period, the proxy did not actively disconnect; all disconnection requests were initiated by the client. This is very confusing because all client connections are directed to the AliLB domain name. For the client, there is no distinction between each connection, so why would it retain connections to the problematic proxy while disconnecting others?

After careful consideration, the conclusion was reached that it might be due to client access timing out when connecting to proxies with high RT. If the code does not handle these exceptions properly, it could lead to connection leaks, causing the number of connections on the problematic proxy to continuously increase.
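The suspected leak pattern can be sketched as follows. This is a hypothetical illustration, not code found in any business application: `FakeConnection` is a stand-in for a pooled client connection, and the timeout is simulated by throwing an exception. The point is only that a request path that skips `close()` when an exception is thrown leaves the connection open, whereas try-with-resources releases it on every path.

```java
import java.util.concurrent.atomic.AtomicInteger;

class ConnectionLeakDemo {
    // Number of connections currently open against the (imaginary) proxy.
    static final AtomicInteger open = new AtomicInteger();

    /** Hypothetical stand-in for a pooled client connection. */
    static final class FakeConnection implements AutoCloseable {
        FakeConnection() { open.incrementAndGet(); }

        String get(String key, boolean proxyIsSlow) {
            if (proxyIsSlow) {
                throw new IllegalStateException("read timed out"); // simulated timeout
            }
            return "value-of-" + key;
        }

        @Override
        public void close() { open.decrementAndGet(); }
    }

    /** Leaks the connection whenever get() throws, because close() is skipped. */
    static void leakyRequest(boolean proxyIsSlow) {
        FakeConnection conn = new FakeConnection();
        conn.get("key", proxyIsSlow);
        conn.close();
    }

    /** try-with-resources closes the connection on both the normal and the timeout path. */
    static void safeRequest(boolean proxyIsSlow) {
        try (FakeConnection conn = new FakeConnection()) {
            conn.get("key", proxyIsSlow);
        }
    }

    public static void main(String[] args) {
        for (int i = 0; i < 5; i++) {
            try { leakyRequest(true); } catch (IllegalStateException timeout) { /* ignored */ }
        }
        System.out.println("open after leaky requests: " + open.get()); // 5
        open.set(0);
        for (int i = 0; i < 5; i++) {
            try { safeRequest(true); } catch (IllegalStateException timeout) { /* ignored */ }
        }
        System.out.println("open after safe requests: " + open.get()); // 0
    }
}
```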

This logic is plausible. Subsequently, we worked with the business side to attempt to reproduce the issue through stress testing. By analyzing the logs, we could see specific client IPs and QPS. However, the actual scenario is very complex. The business side has multiple applications accessing Tair (Redis® OSS-Compatible) using different models. We did not find any obvious client IPs with increased connection counts or traffic in the logs, nor did we identify specific code responsible for connection leaks on the client side.

So the conclusion after a long time is that AliLB distributes new connections evenly, the proxy passively establishes and releases connections, and the client treats all connections equally. The issue is likely due to some part of the business code not releasing connections after a timeout.

While troubleshooting another problem, we found that the default management policy of the connection pools used by Jedis, Lettuce, and other clients is LIFO (last in, first out). Until then, we had assumed that Jedis connection pools hand out connections in rotation, and nobody had questioned this in internal discussions or in communication with business teams. Because LIFO scheduling is inherently uneven, we constructed the following scenario based on this policy to reproduce the problem.
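To see why LIFO is uneven, consider a minimal sketch of an idle queue with three connections and a single caller that borrows and returns one connection at a time. The class `LifoVsFifoDemo` and the connection names `A`, `B`, and `C` are hypothetical; this models only the ordering policy, not the real pool implementation.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;

class LifoVsFifoDemo {
    /** Returns the sequence of connections used by a caller that borrows and returns one at a time. */
    static String usageOrder(boolean lifo, int requests) {
        Deque<String> idle = new ArrayDeque<>(Arrays.asList("A", "B", "C"));
        StringBuilder used = new StringBuilder();
        for (int i = 0; i < requests; i++) {
            String conn = idle.pollFirst(); // always borrow from the head of the queue
            used.append(conn);
            if (lifo) {
                idle.addFirst(conn);        // LIFO: return to the head, so it is reused next
            } else {
                idle.addLast(conn);         // FIFO: return to the tail, so connections rotate
            }
        }
        return used.toString();
    }

    public static void main(String[] args) {
        System.out.println(usageOrder(true, 6));  // prints AAAAAA
        System.out.println(usageOrder(false, 6)); // prints ABCABC
    }
}
```

Under LIFO, connections `B` and `C` are never touched and would eventually be evicted as idle. Under FIFO, every connection takes its turn.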

The server has four proxies for Tair (Redis® OSS-Compatible) cluster edition. One of the proxies increases the latency by 200ms.

Clients use the Jedis connection pool to access Tair (Redis® OSS-Compatible).

The traffic model is 100 GET requests per second for each client process.

About every 10 seconds, there is a one-second traffic peak of 150 GET requests.

Start four client processes at the same time.

    <dependency>
        <groupId>redis.clients</groupId>
        <artifactId>jedis</artifactId>
        <version>3.6.3</version>
    </dependency>

JedisPoolConfig config = new JedisPoolConfig();

config.setMaxIdle(200);

config.setMaxTotal(200);

config.setMinEvictableIdleTimeMillis(5000);

config.setTimeBetweenEvictionRunsMillis(1000);

config.setTestOnBorrow(false);

config.setTestOnReturn(false);

config.setTestWhileIdle(false);

config.setTestOnCreate(false);

JedisPool pool = new JedisPool(config, host, port, 10000, password);

Semaphore sem = new Semaphore(0);

for (int i = 0; i < 200; i++) {

new Thread(new Runnable() {

@Override

public void run() {

while (true) {

Jedis jedis = null;

try {

sem.acquire(1);

jedis = pool.getResource();

jedis.get("key");

} catch (Exception e) {

e.printStackTrace();

} finally {

if (jedis != null) {

jedis.close();

}

}

}

}

}).start();

}

long last_peak_time = System.currentTimeMillis();

while (true) {

try {

long cur = System.currentTimeMillis();

if (cur - last_peak_time > 10000) {

last_peak_time = cur;

sem.release(150);

} else {

sem.release(100);

}

Thread.sleep(1000);

} catch (Exception e) {

}

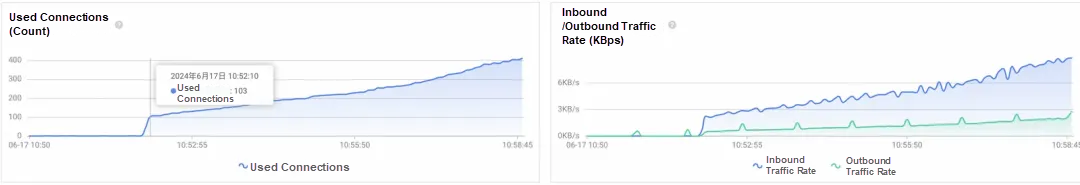

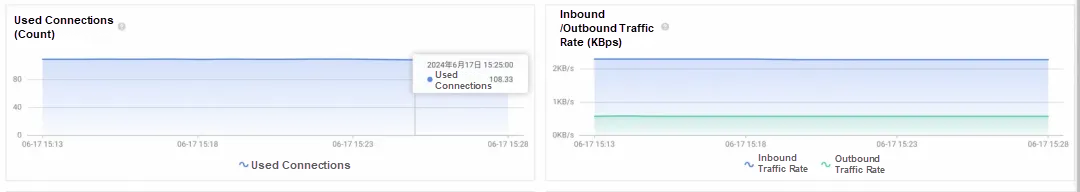

}The number of connections and traffic on the problematic proxy gradually increases.

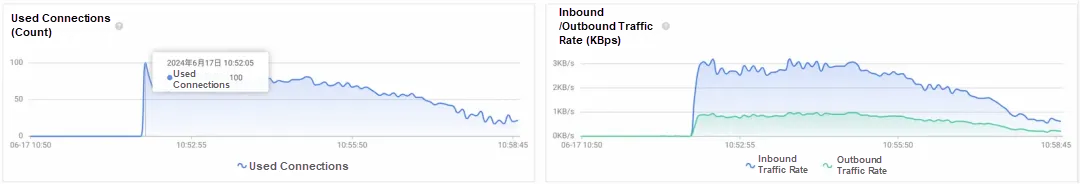

The number of connections and traffic on the normal proxy are dropping.

By default, the lifo parameter of the Jedis connection pool is set to true. In this mode, returned connections are placed at the head of the queue, so the most recently returned connections are reused first.

During traffic peaks, the size of the connection pool is increased. These connections are randomly distributed among the 4 proxies. However, due to the high RT of the problematic proxy, connections to this proxy are returned to the pool later, causing subsequent requests to preferentially access the problematic proxy. Connections to proxies with lower RT are returned to the pool earlier and are placed at the end of the queue. During off-peak periods, these connections are not used, so they are automatically released after being idle for a while.

Over time, Jedis creates a batch of new connections at every peak and releases connections to the normal-RT proxies during off-peak periods. Eventually, most connections are concentrated on the problematic proxy, which results in uneven load and a growing impact on the business.
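The drift described above can be reproduced with a small discrete-time model. This is a sketch under simplifying assumptions rather than a model of the real Jedis pool: each second all requests borrow at once, connections to the slow proxy (proxy 0) are returned after all others, new connections are assigned to the four proxies in rotation, a connection idle for more than 5 seconds is evicted, and a peak of 150 requests occurs every 10 seconds against a baseline of 100. The class `PoolSkewSimulation` and its members are hypothetical names.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.Deque;
import java.util.List;

class PoolSkewSimulation {
    static final int PROXIES = 4;
    static final int SLOW_PROXY = 0;         // the proxy with extra latency
    static final int IDLE_LIMIT_SECONDS = 5; // mirrors the 5000 ms idle eviction in the test

    static final class Conn {
        final int proxy;
        int lastUsed;
        Conn(int proxy, int now) { this.proxy = proxy; this.lastUsed = now; }
    }

    /** Runs the model for the given number of seconds and returns pooled connections per proxy. */
    static int[] simulate(boolean lifo, int seconds) {
        Deque<Conn> idle = new ArrayDeque<>();
        int nextProxy = 0; // round-robin load balancer for new connections
        for (int now = 0; now < seconds; now++) {
            int requests = (now % 10 == 0) ? 150 : 100; // periodic peak
            // Borrow from the head; create a new connection when the pool is empty.
            List<Conn> inUse = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                Conn c = idle.pollFirst();
                if (c == null) {
                    c = new Conn(nextProxy, now);
                    nextProxy = (nextProxy + 1) % PROXIES;
                }
                c.lastUsed = now;
                inUse.add(c);
            }
            // Return: fast proxies finish first, the slow proxy finishes last (stable sort).
            inUse.sort(Comparator.comparingInt(c -> c.proxy == SLOW_PROXY ? 1 : 0));
            for (Conn c : inUse) {
                if (lifo) {
                    idle.addFirst(c);
                } else {
                    idle.addLast(c);
                }
            }
            // Evict connections that have been idle for too long.
            final int t = now;
            idle.removeIf(c -> t - c.lastUsed > IDLE_LIMIT_SECONDS);
        }
        int[] perProxy = new int[PROXIES];
        for (Conn c : idle) {
            perProxy[c.proxy]++;
        }
        return perProxy;
    }

    public static void main(String[] args) {
        System.out.println("LIFO: " + Arrays.toString(simulate(true, 100)));
        System.out.println("FIFO: " + Arrays.toString(simulate(false, 100)));
    }
}
```

In this model, the LIFO run ends with all 100 remaining connections on the slow proxy, while the FIFO run keeps 150 connections spread almost evenly (38, 38, 37, 37) because every connection is reused before it can become idle. Real pools return connections at less predictable times, so the actual drift is slower and noisier than in this model.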

Use the above test code but add the following settings. At this point, Jedis will evenly distribute connections across all proxies. Test the results again.

    config.setLifo(false);

All proxies' connections and traffic are very stable (minute-level monitoring has smoothed out the fluctuations).

However, with this approach, all connections will be utilized, and connections are unlikely to be released due to idleness.

The connection pools of both the Jedis and Lettuce clients are managed by org.apache.commons.pool2.impl.GenericObjectPool. The default policy of this object pool is LIFO, which places slow connections at the head of the queue, where they are used more frequently, while fast connections sink to the end of the queue and are closed when idle. Eventually, the connections are skewed toward the slow proxy.

For Tair (Redis® OSS-Compatible) cluster edition with proxy nodes, we recommend that you set LIFO to false. In this way, the load of each proxy node is more uniform and connections are not skewed. However, this setting can make it difficult for connections to remain idle, potentially causing the total number of connections to increase. For applications with a large number of connections, a detailed evaluation is required.

We recommend setting LIFO to true for directly connected instances of the cluster or master-replica instances of Tair (Redis® OSS-Compatible). This will allow connections to be reused as much as possible, and idle connections can be released promptly, thereby improving the performance of Tair (Redis® OSS-Compatible).

Currently, the recommended LIFO settings are not fixed, and clients find it challenging to automatically adjust connection pool strategies to maintain optimal performance without back-end statistical information. A better approach would be to establish a graceful connection closure protocol between the proxy/Tair (Redis® OSS-Compatible) and the client. This protocol would enable the server to notify the client to gracefully close connections when detecting load imbalance or abnormal RT. This will enable dynamic balancing for connection counts, load, and RT. Additionally, a graceful connection closure protocol can reduce the impact on business operations during reconfiguration or upgrade scenarios.

*Redis is a registered trademark of Redis Ltd. Any rights therein are reserved to Redis Ltd. Any use by Alibaba Cloud is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and Alibaba Cloud.
