by Aaron Berliano Handoko, Solution Architect Alibaba Cloud Indonesia

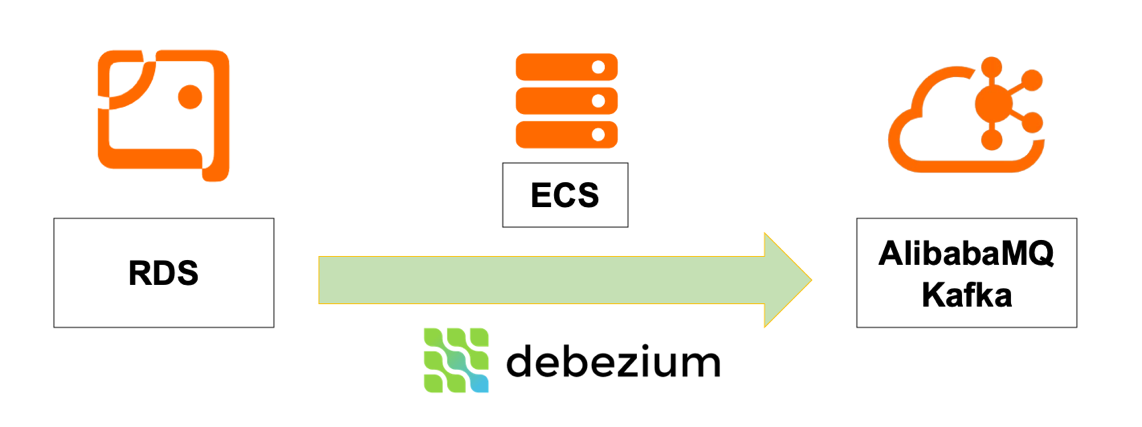

In today's fast-paced data landscape, real-time integration is crucial for organizations to stay competitive. Change Data Capture (CDC) plays a vital role in this by letting businesses capture and propagate database changes as they happen. Debezium, an open-source CDC platform, reads changes from database transaction logs and converts them into a stream of events. ApsaraMQ for Apache Kafka, a managed distributed streaming platform known for its scalability and fault tolerance, carries that stream. Together, Debezium and Kafka provide a robust solution for real-time CDC, so organizations can make timely decisions based on the latest data.

1. Configure ApsaraMQ for Apache Kafka

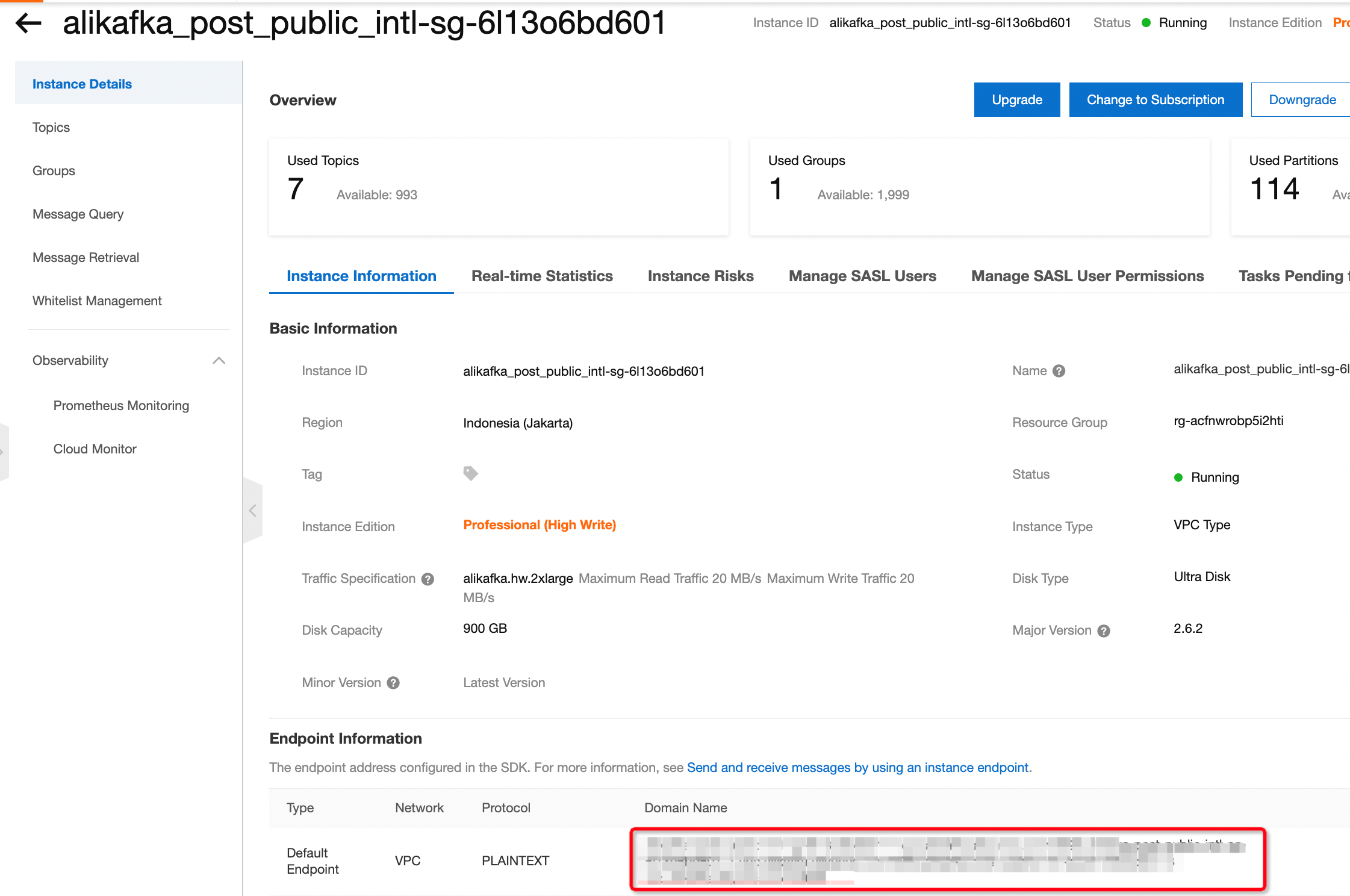

1.1. Getting Kafka Endpoint

You can get the Kafka endpoint from the ApsaraMQ for Apache Kafka console, as shown in the screenshot.

1.2. Connection Whitelisting

Next to the endpoint there is an option to configure an IP whitelist, which lets Debezium on the ECS instance connect to the Kafka instance. Click Manage Whitelist and add the IP address of the ECS instance.

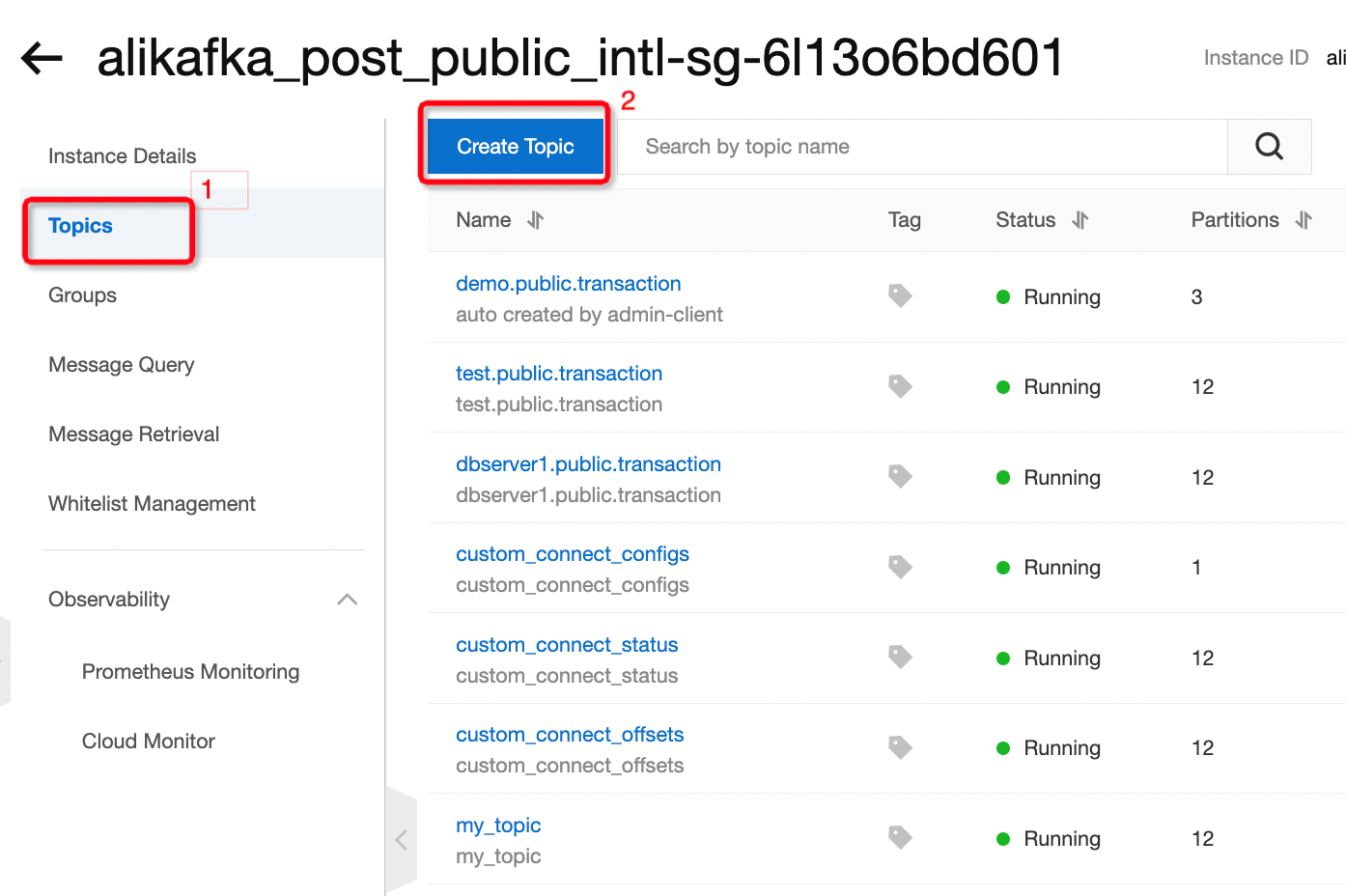
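Once the whitelist is saved, it can help to confirm from the ECS instance that every broker in the endpoint is reachable before starting Debezium. The script below is a minimal sketch: the function names are hypothetical, it assumes bash, coreutils timeout and curl are available, and it looks up the private IP to whitelist through the ECS metadata service at 100.100.100.200, falling back to hostname -I if that call fails.

```shell
#!/usr/bin/env bash
# Sketch: run on the ECS instance to check that each broker listed in the
# ApsaraMQ for Kafka endpoint accepts TCP connections.
# Assumes bash (for /dev/tcp), coreutils `timeout`, and curl.

# ecs_private_ip prints the private IP to add to the whitelist, using the
# ECS metadata service and falling back to `hostname -I`.
ecs_private_ip() {
  curl -sf --max-time 2 http://100.100.100.200/latest/meta-data/private-ipv4 \
    || hostname -I | awk '{print $1}'
}

# split_bootstrap "h1:9092,h2:9092" prints one "host port" pair per line.
split_bootstrap() {
  local IFS=','
  local entry
  for entry in $1; do
    entry="${entry// /}"   # tolerate spaces after commas
    printf '%s %s\n' "${entry%:*}" "${entry##*:}"
  done
}

# check_bootstrap "h1:9092,h2:9092" tries each broker with a 3-second
# timeout and returns non-zero if any of them is unreachable.
check_bootstrap() {
  local host port rc=0
  while read -r host port; do
    if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      echo "OK      ${host}:${port}"
    else
      echo "FAILED  ${host}:${port}"
      rc=1
    fi
  done < <(split_bootstrap "$1")
  return "$rc"
}
```

After sourcing the script, run check_bootstrap with the endpoint copied from the console; each broker is reported as OK or FAILED, and a FAILED line usually means the whitelist entry has not taken effect yet.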

1.3. Creating Topic

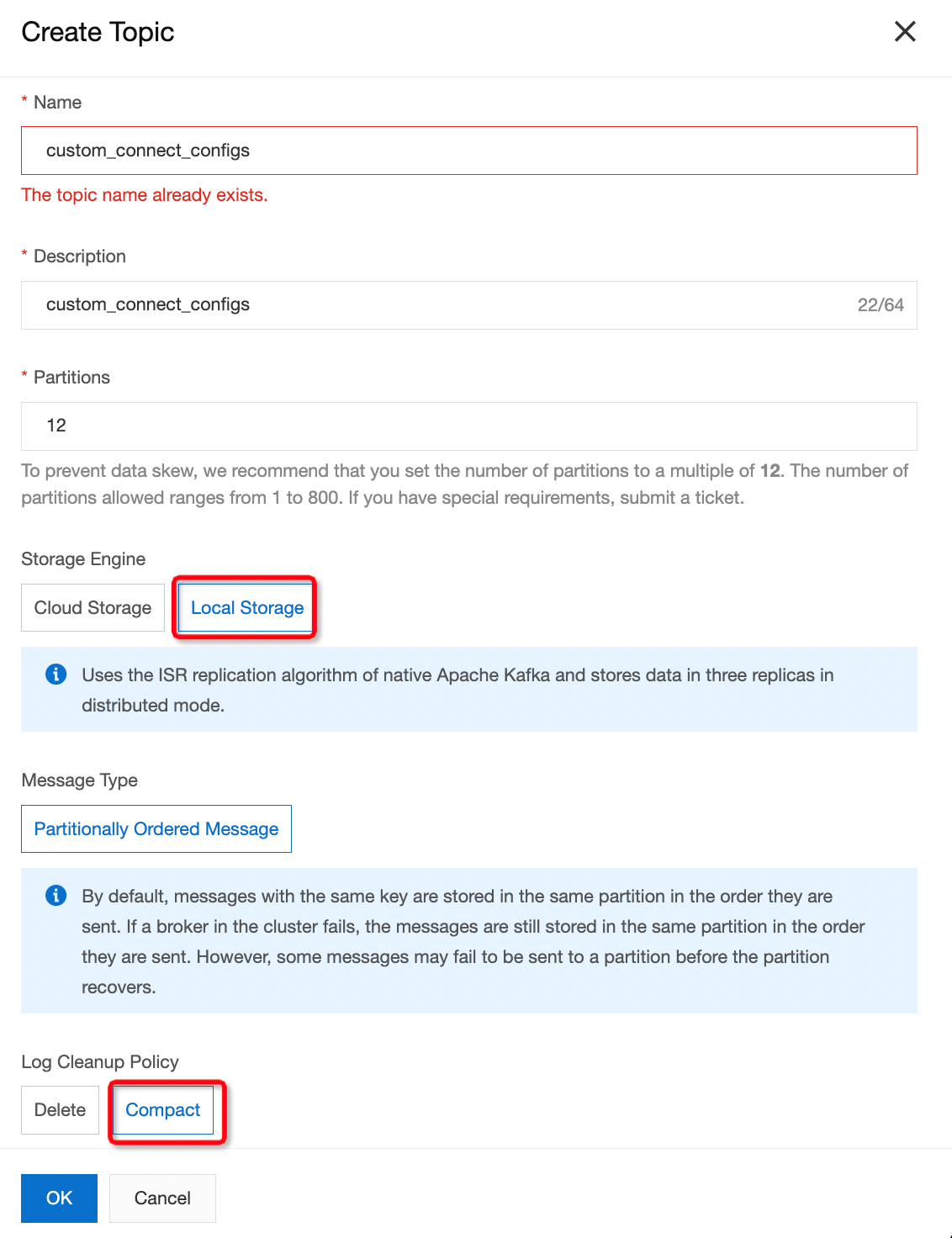

On the left-hand side, choose Topics and then click Create Topic. A panel opens on the right-hand side where you can configure the topic.

Debezium runs on Kafka Connect, which needs three internal topics in Kafka: one for connector configurations, one for status, and one for offsets. All three must use compact as their log cleanup policy, so when creating each of them choose Local Storage and then choose Compact for Log Cleanup Policy.

In this example we use the names custom_connect_configs, custom_connect_status and custom_connect_offsets.

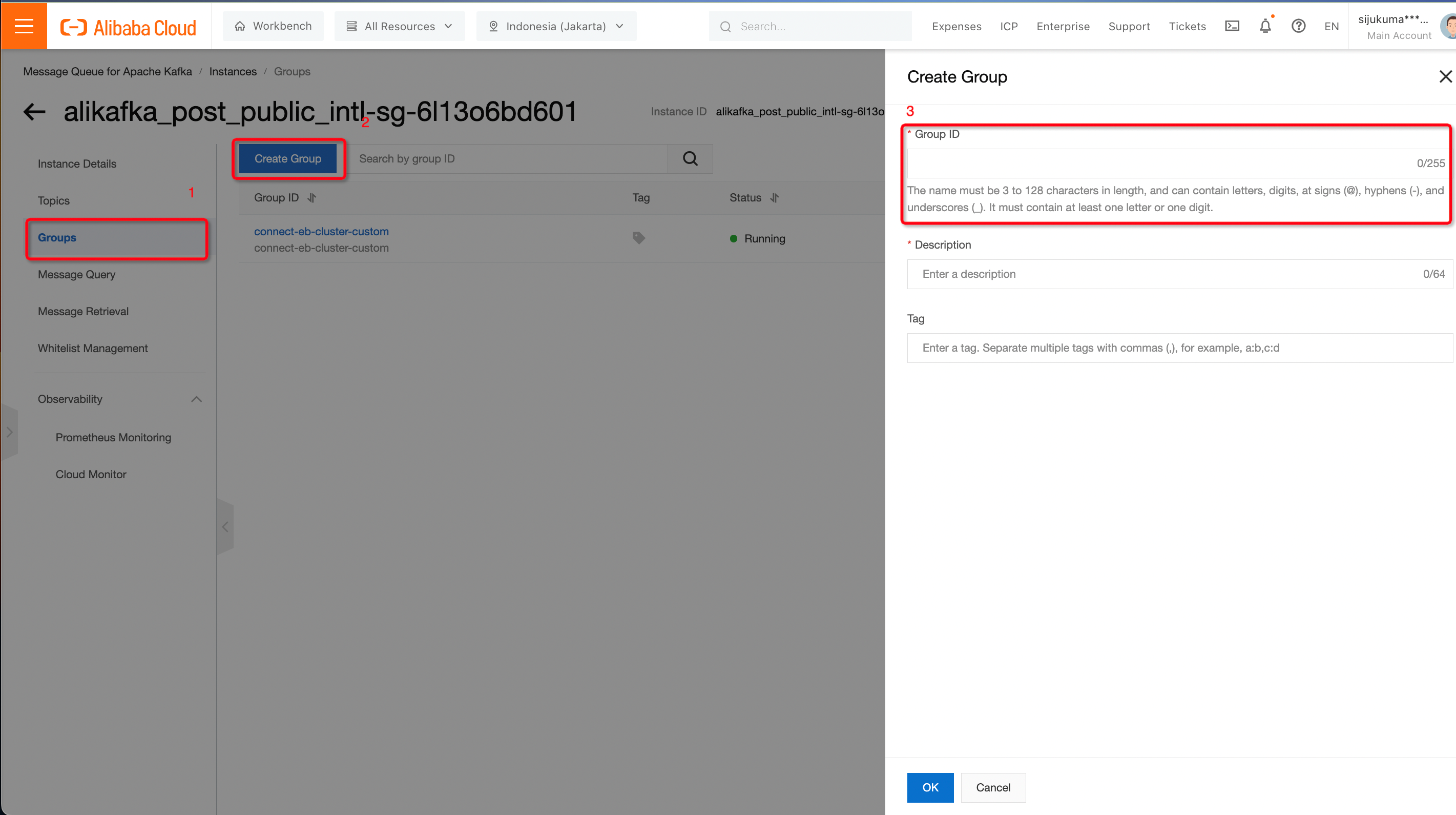
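After the three topics are created, their cleanup policy can be double-checked from the ECS instance with the Kafka CLI's kafka-configs.sh tool. This sketch assumes the Apache Kafka command-line scripts are installed and on PATH, and that your instance permits describing topic configuration through the Kafka API; if it does not, the topic details in the console show the same setting. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: confirm the three Kafka Connect internal topics report
# cleanup.policy=compact. Assumes kafka-configs.sh is on PATH.

CONNECT_INTERNAL_TOPICS=(custom_connect_configs custom_connect_status custom_connect_offsets)

# is_compacted reads `kafka-configs.sh --describe` output on stdin and
# succeeds only if it contains cleanup.policy=compact.
is_compacted() {
  grep -q 'cleanup\.policy=compact'
}

# check_internal_topics <bootstrap-servers>
check_internal_topics() {
  local topic rc=0
  for topic in "${CONNECT_INTERNAL_TOPICS[@]}"; do
    # --all also lists inherited values; older CLI versions lack this flag.
    if kafka-configs.sh --bootstrap-server "$1" --entity-type topics \
         --entity-name "$topic" --describe --all | is_compacted; then
      echo "compact      $topic"
    else
      echo "NOT compact  $topic"
      rc=1
    fi
  done
  return "$rc"
}
```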

1.4. Creating Group ID

To create a group, choose Groups and then click Create Group. Fill in the Group ID that Debezium (Kafka Connect) will use to connect.

In this example we name the group connect-eb-cluster-custom.

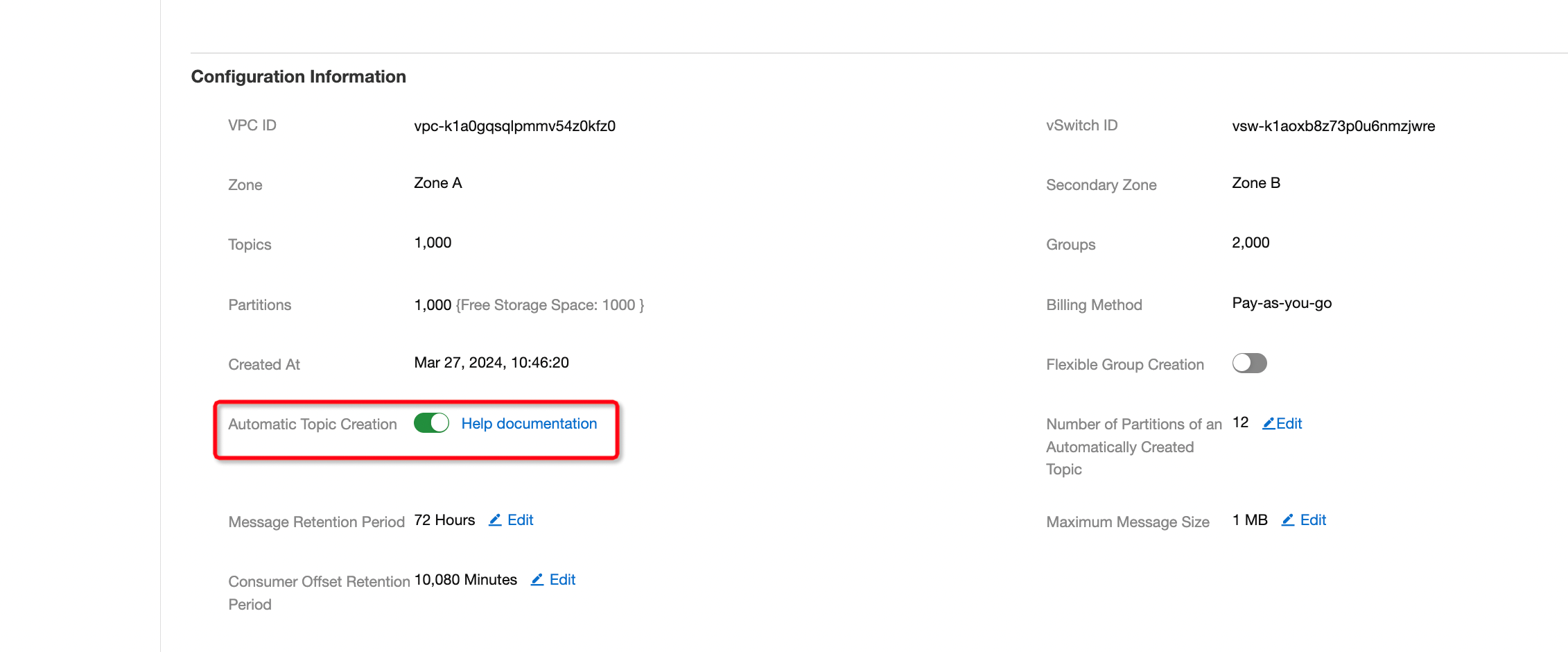
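The group ID becomes the Kafka Connect worker's group.id. The docker-compose file passes it as GROUP_ID, alongside the three topic names and the bootstrap servers, and those five variables correspond to the standard worker properties group.id, config.storage.topic, offset.storage.topic, status.storage.topic and bootstrap.servers. The sketch below prints that correspondence. Its naming rule (strip an optional CONNECT_ prefix, lowercase, replace underscores with dots) reflects our understanding of how the debezium/connect image treats CONNECT_* variables and should be read as an assumption; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: map docker-compose variable names to Kafka Connect worker
# properties, e.g. GROUP_ID -> group.id. The CONNECT_ prefix handling is an
# assumption about the debezium/connect image, not documented behaviour.

# env_to_worker_prop <VARIABLE_NAME>
env_to_worker_prop() {
  printf '%s\n' "${1#CONNECT_}" | tr '[:upper:]' '[:lower:]' | tr '_' '.'
}

# print_worker_props prints prop=value for each compose variable that is set.
print_worker_props() {
  local var val
  for var in GROUP_ID CONFIG_STORAGE_TOPIC OFFSET_STORAGE_TOPIC \
             STATUS_STORAGE_TOPIC BOOTSTRAP_SERVERS; do
    val="${!var}"   # indirect expansion: value of the variable named $var
    if [ -n "$val" ]; then
      printf '%s=%s\n' "$(env_to_worker_prop "$var")" "$val"
    fi
  done
}
```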

1.5. Enabling Automatic Topic Creation

Automatic Topic Creation is essential during the initial migration: Debezium writes the changes of each table to its own topic, and enabling this feature saves creating every one of those topics by hand. In ApsaraMQ for Apache Kafka this permission must be enabled first. Go to Instance Details, scroll down, and enable Automatic Topic Creation.

It takes about 15 minutes for the instance to apply this configuration.
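With topic creation enabled, Debezium's PostgreSQL connector names each table's topic <topic.prefix>.<schema>.<table>, so the sample table created later lands in <your-chosen-prefix>.public.transaction when it lives in the default public schema. The sketch below computes that name and checks whether the topic exists; it assumes the Kafka CLI is on PATH, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: derive the topic Debezium's PostgreSQL connector uses for a table
# (default naming <topic.prefix>.<schema>.<table>) and check it exists.

# debezium_topic <topic-prefix> <table> [schema]; schema defaults to public.
debezium_topic() {
  local prefix="$1" table="$2" schema="${3:-public}"
  printf '%s.%s.%s\n' "$prefix" "$schema" "$table"
}

# topic_exists <bootstrap-servers> <topic> succeeds if the topic is listed.
topic_exists() {
  kafka-topics.sh --bootstrap-server "$1" --list | grep -Fxq "$2"
}
```

For example, topic_exists with the ApsaraMQ endpoint and the output of debezium_topic for your prefix and the transaction table tells you whether step 6 has produced the expected topic.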

2. Configure RDS for PostgreSQL

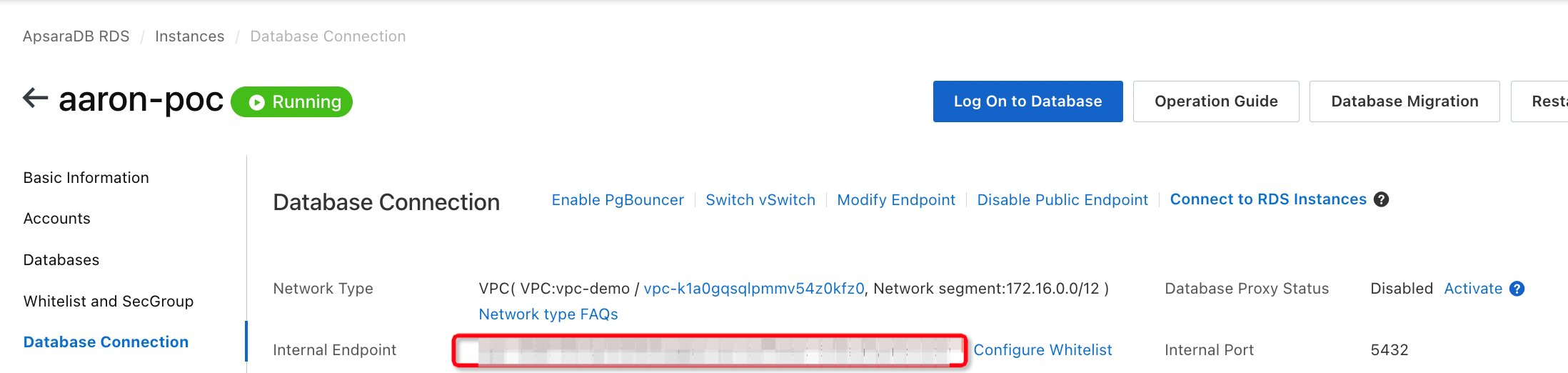

2.1. Configure Connection

The RDS endpoint is shown in the Database Connection menu, next to Internal Endpoint, as shown below. Because the ECS instance, the RDS instance and the Kafka instance reside in the same VPC, we can use the internal endpoint.

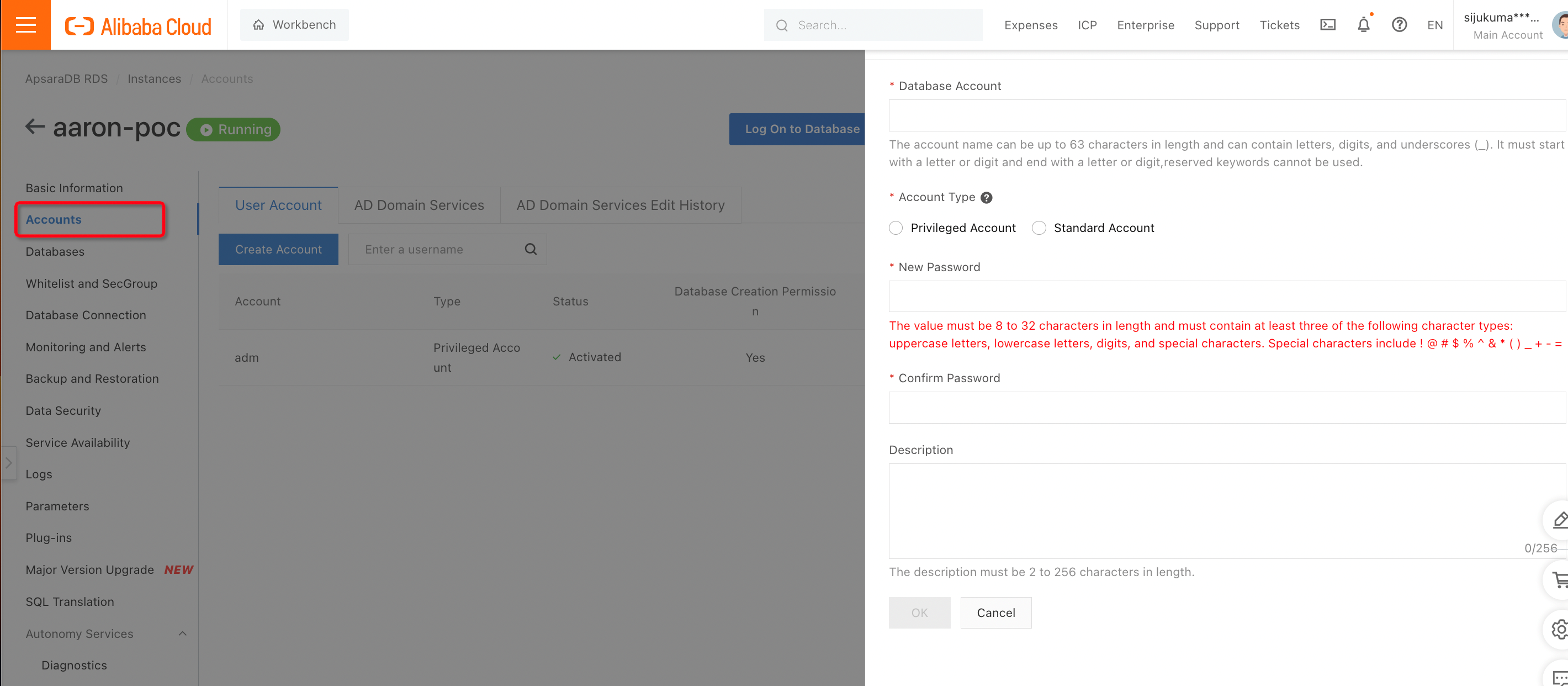

2.2. Create an Account

Select the Account menu on the left-hand side and create a database account.

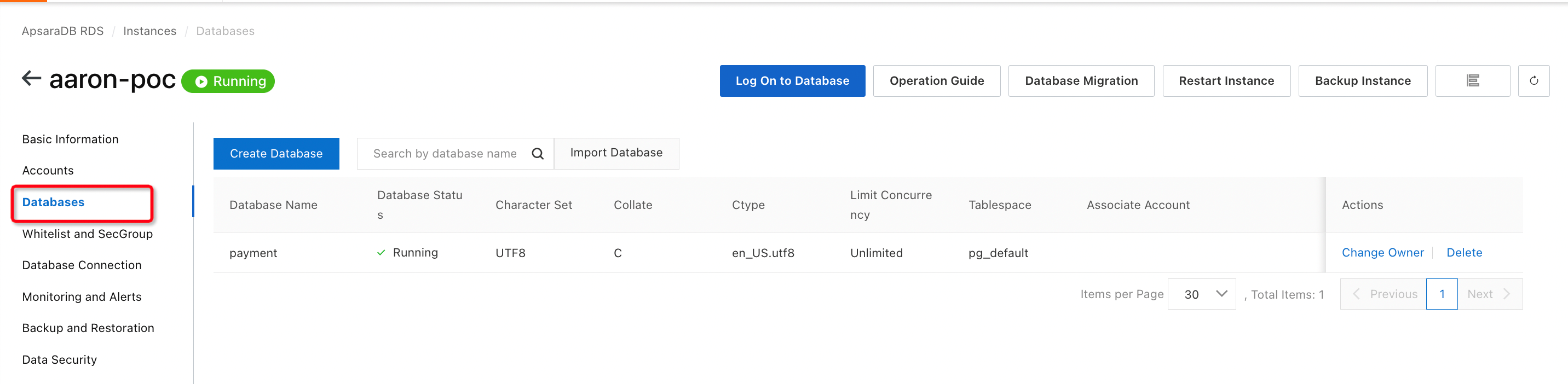

2.3. Create a Database

To create a database, select the Database menu on the left-hand side and create one. In this example we create a database called payment.
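With the account and database in place, you can check from the ECS instance that the account can log on through the internal endpoint and that the instance is ready for CDC. Debezium's PostgreSQL connector relies on logical decoding, which requires wal_level to be logical, and Debezium's documentation asks for an account with the REPLICATION attribute (or a superuser). This is a sketch assuming the psql client is installed on the ECS instance and the password comes from PGPASSWORD or ~/.pgpass; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check that the new account can log on to the payment database
# and that the instance is ready for Debezium. Assumes psql is installed.

# pg_conninfo <host> <user> [dbname] [port] prints a libpq connection string.
pg_conninfo() {
  local host="$1" user="$2" dbname="${3:-payment}" port="${4:-5432}"
  printf 'host=%s port=%s dbname=%s user=%s\n' "$host" "$port" "$dbname" "$user"
}

# check_pg_for_debezium <host> <user> [dbname]
check_pg_for_debezium() {
  local conn wal
  conn="$(pg_conninfo "$1" "$2" "${3:-payment}")"
  # -X skips ~/.psqlrc; -At prints bare values without headers or padding.
  wal="$(psql -X -At -c 'SHOW wal_level;' "$conn")" || return 1
  echo "wal_level=${wal}"
  if [ "$wal" != "logical" ]; then
    echo "Debezium's PostgreSQL connector needs wal_level=logical" >&2
    return 1
  fi
  # Shows whether the current account has REPLICATION or superuser rights.
  psql -X -At -c "SELECT rolname, rolreplication, rolsuper FROM pg_roles WHERE rolname = current_user;" "$conn"
}
```

If wal_level is not logical, change it in the instance's parameter settings; PostgreSQL only applies this parameter after a restart.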

2.4. Create a Table

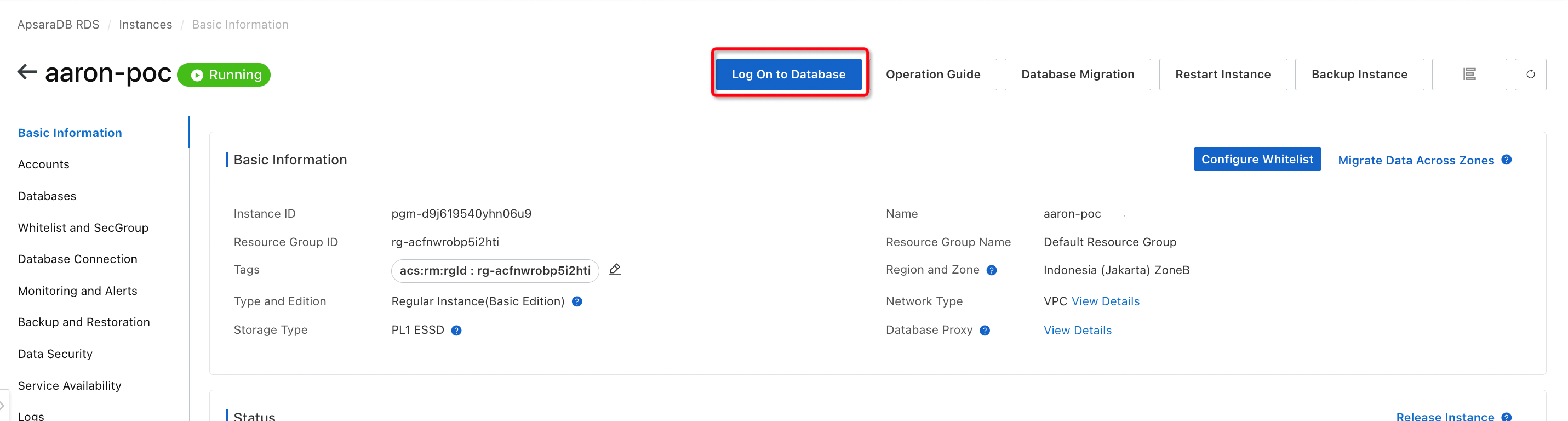

Log on to the database by clicking the button shown in the screenshot, then fill in the required information, including the database account and password.

Run the following statements to create a sample table and insert a first row.

```sql
CREATE TABLE transaction(id SERIAL PRIMARY KEY, amount int, customerId varchar(36));

insert into transaction(id, amount, customerId) values(51, 12, '37b920fd-ecdd-7172-693a-d7be6db9792c');
```

3. Connect to the ECS instance you created earlier and create a docker-compose.yml file, for example with the vim docker-compose.yml command. This file pulls the Debezium Docker image and defines how it runs.

```yaml
version: '3.1'

services:
  connector:
    image: debezium/connect:latest
    ports:
      - "8083:8083"   # Kafka Connect REST API
    environment:
      GROUP_ID: connect-eb-cluster-custom
      CONFIG_STORAGE_TOPIC: custom_connect_configs
      OFFSET_STORAGE_TOPIC: custom_connect_offsets
      STATUS_STORAGE_TOPIC: custom_connect_status
      BOOTSTRAP_SERVERS: <ApsaraMQ Kafka endpoint>   # endpoint from step 1.1
```

4. Start Debezium with the command below. On the first run, Docker downloads the debezium/connect image before starting the container:

```shell
docker compose up
```

5. If the container starts without errors, open a new terminal and run the following command. It registers a connector that streams changes from RDS for PostgreSQL to Kafka through Debezium.

```shell
curl -X POST -H "Accept:application/json" -H "Content-Type:application/json" localhost:8083/connectors/ -d '
{
  "name": "debezium-pg",
  "config": {
    "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
    "tasks.max": "1",
    "database.hostname": "<your-rds-pg-endpoint>",
    "database.port": "5432",
    "database.user": "<your-db-user>",
    "database.password": "<your-db-password>",
    "database.dbname": "<your-db-name>",
    "database.server.name": "<your-db-name>",
    "database.whitelist": "<your-db-name>",
    "database.history.kafka.bootstrap.servers": "<your-kafka-endpoint>",
    "database.history.kafka.topic": "schema-changes.payment",
    "topic.prefix": "<your-chosen-prefix>",
    "topic.creation.enable": "true",
    "topic.creation.default.replication.factor": "12",
    "topic.creation.default.partitions": "<num-partition>"
  }
}'
```

6. Check the log in the previous terminal for errors. If there are none, go to the Kafka console and check that the topic has been created automatically.

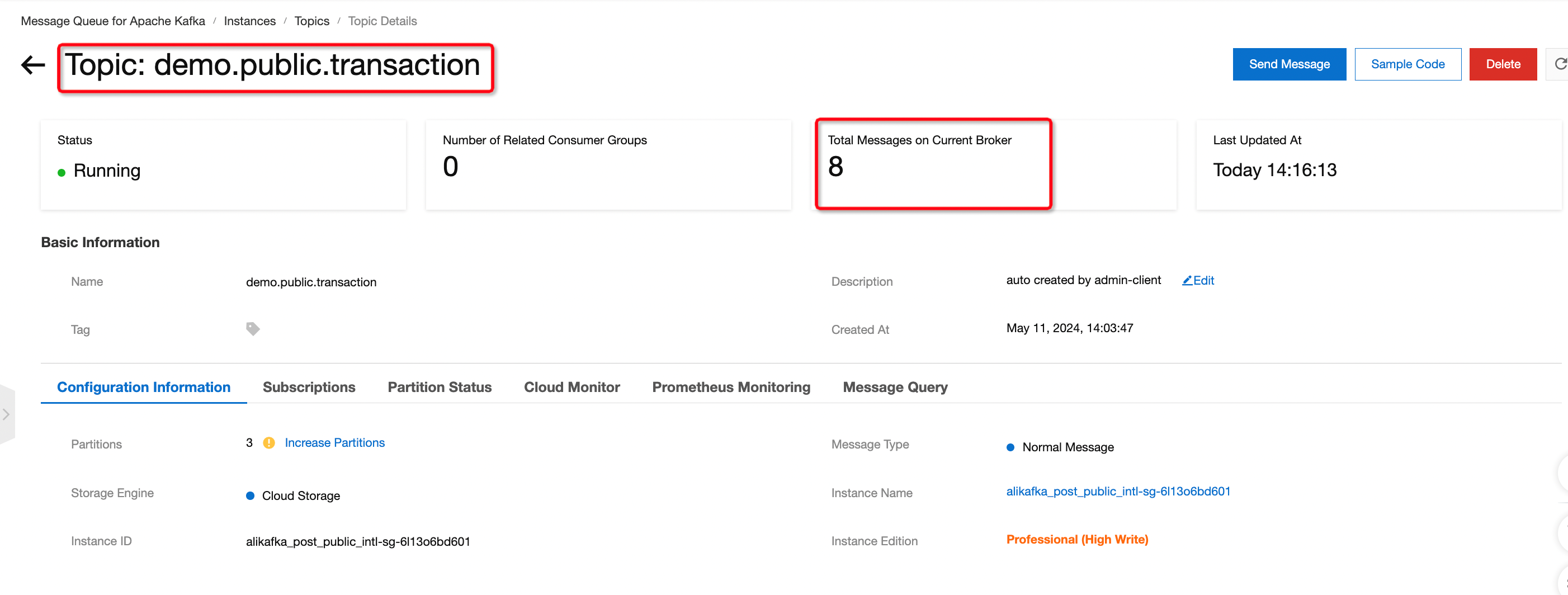

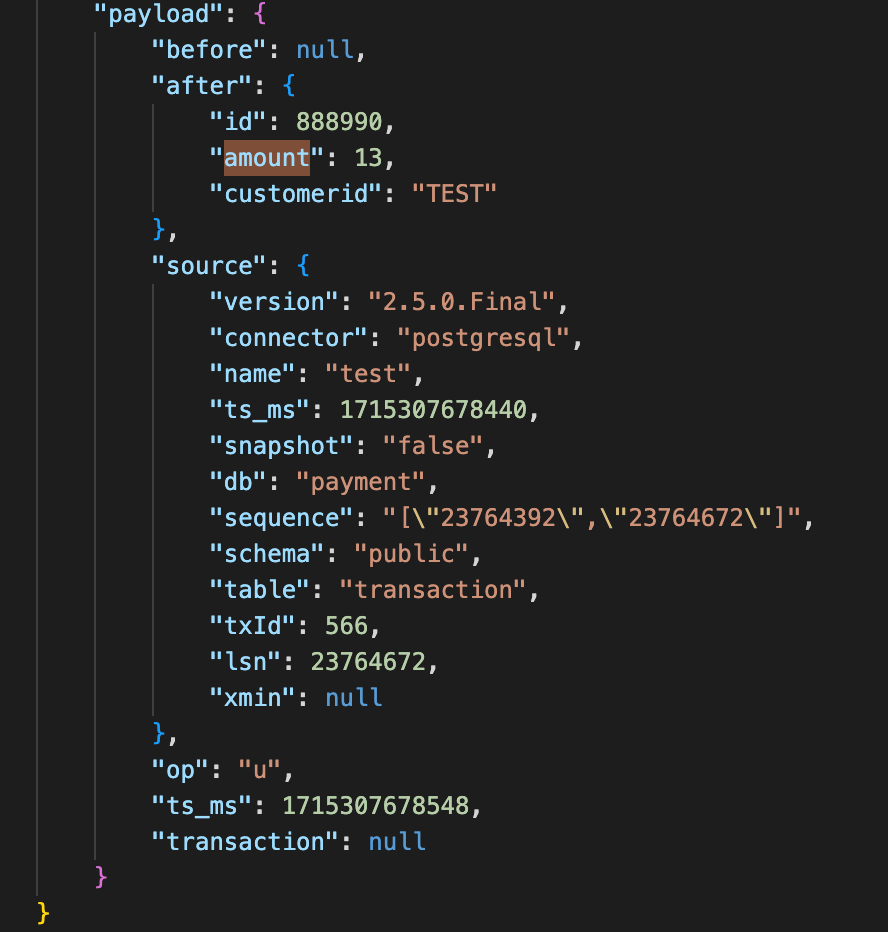
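Besides the log, the Kafka Connect REST API reports the connector's state directly through GET /connectors/<name>/status. The sketch below polls that endpoint until the connector is running with no failed tasks; it assumes the worker listens on localhost:8083 as configured in docker-compose.yml and that the connector is named debezium-pg as in step 5. The function names are hypothetical, and the grep/sed parsing only keeps it free of extra dependencies; jq -r .connector.state would be more robust if jq is installed.

```shell
#!/usr/bin/env bash
# Sketch: poll the Kafka Connect status endpoint for the Debezium connector.

# connector_state reads a /connectors/<name>/status body on stdin and prints
# the connector-level state, e.g. RUNNING or FAILED.
connector_state() {
  tr -d ' \n' | sed -n 's/.*"connector":{"state":"\([A-Z_]*\)".*/\1/p'
}

# failed_tasks prints how many entries in the "tasks" array are FAILED.
failed_tasks() {
  tr -d ' \n' | sed 's/.*"tasks":\[//' | grep -o '"state":"FAILED"' | wc -l
}

# wait_for_running [name] polls every 2 seconds, 30 times (about a minute).
wait_for_running() {
  local name="${1:-debezium-pg}" status state i
  for i in $(seq 1 30); do
    status="$(curl -s "http://localhost:8083/connectors/${name}/status")"
    state="$(printf '%s' "$status" | connector_state)"
    if [ "$state" = "RUNNING" ] && [ "$(printf '%s' "$status" | failed_tasks)" -eq 0 ]; then
      echo "${name} is RUNNING"
      return 0
    fi
    sleep 2
  done
  echo "${name} state: ${state:-unknown}" >&2
  return 1
}
```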

7. Insert data into the PostgreSQL database and check that the messages reach Kafka in real time.

```sql
insert into transaction(id, amount, customerId) values(1, 12, 'TEST');
```

To check the message in Kafka, open the topic in the console and go to Message Query.

Example of Debezium CDC Message
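The message shown in Message Query is a Debezium change event. Its payload carries before and after row images, a source block (database, schema, table, LSN and so on), an op field and a ts_ms timestamp. op is c for an insert, u for an update, d for a delete, r for a row read during the initial snapshot, and t for a truncate. Because customerId was created unquoted, PostgreSQL stores it, and Debezium emits it, as customerid. The sketch below labels an event pasted on stdin; it assumes JSON produced by Kafka Connect's JsonConverter, the function names are hypothetical, and a real JSON parser such as jq is more robust than grep.

```shell
#!/usr/bin/env bash
# Sketch: summarise a Debezium change event read from stdin.

# debezium_op prints what kind of change the event's "op" field describes.
debezium_op() {
  local op
  op="$(tr -d ' \n' | grep -o '"op":"[a-z]"' | head -n 1 | cut -d'"' -f4)"
  case "$op" in
    c) echo "insert" ;;
    u) echo "update" ;;
    d) echo "delete" ;;
    r) echo "snapshot read" ;;
    t) echo "truncate" ;;
    *) echo "unknown" ;;
  esac
}

# debezium_table prints the table recorded in the event's "source" block.
debezium_table() {
  tr -d ' \n' | grep -o '"table":"[^"]*"' | head -n 1 | cut -d'"' -f4
}
```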

8. Helpful commands:

8.1. Edit the Debezium configuration

The PUT endpoint takes the connector properties directly, without the name and config wrapper used when the connector was created:

```shell
curl -X PUT -H "Accept:application/json" -H "Content-Type:application/json" localhost:8083/connectors/<name-of-connector>/config -d '
{
  "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
  "tasks.max": "1",
  "database.hostname": "<your-rds-pg-endpoint>",
  "database.port": "5432",
  "database.user": "<your-db-user>",
  "database.password": "<your-db-password>",
  "database.dbname": "<your-db-name>",
  "database.server.name": "<your-db-name>",
  "database.whitelist": "<your-db-name>",
  "database.history.kafka.bootstrap.servers": "<your-kafka-endpoint>",
  "database.history.kafka.topic": "schema-changes.payment",
  "topic.prefix": "<your-chosen-prefix>",
  "topic.creation.enable": "true",
  "topic.creation.default.replication.factor": "12",
  "topic.creation.default.partitions": "<num-partition>"
}'
```

8.2. Restart the Debezium connector

```shell
curl -X POST localhost:8083/connectors/<name-of-connector>/restart
```
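The two commands above can be wrapped in small helpers so the configuration lives in one place. PUT /connectors/<name>/config creates the connector if it does not exist and updates it otherwise, and an updated configuration is normally applied without a separate restart. The sketch below is an illustration under assumptions rather than the setup used in this article: the function names are hypothetical, values are substituted without JSON escaping, and it leaves out database.server.name, database.whitelist and the database.history.* keys, which as far as we know are Debezium 1.x settings (Debezium 2.x uses topic.prefix instead, and the PostgreSQL connector does not use a schema history topic). The includeTasks query parameter on restart needs Kafka Connect 3.0 or later.

```shell
#!/usr/bin/env bash
# Sketch: build a flat connector config (the body PUT .../config expects)
# and apply it; restart the connector together with its tasks.
# Values are inserted without JSON escaping, so avoid quotes in them.

CONNECT_URL="${CONNECT_URL:-http://localhost:8083}"

# pg_connector_config <host> <user> <password> <dbname> <topic-prefix>
#                     <replication-factor> <partitions>
pg_connector_config() {
  cat <<EOF
{
  "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
  "tasks.max": "1",
  "database.hostname": "$1",
  "database.port": "5432",
  "database.user": "$2",
  "database.password": "$3",
  "database.dbname": "$4",
  "topic.prefix": "$5",
  "topic.creation.enable": "true",
  "topic.creation.default.replication.factor": "$6",
  "topic.creation.default.partitions": "$7"
}
EOF
}

# apply_config <connector-name> <arguments for pg_connector_config...>
apply_config() {
  local name="$1"
  shift
  pg_connector_config "$@" |
    curl -s -X PUT -H "Content-Type: application/json" --data-binary @- \
      "${CONNECT_URL}/connectors/${name}/config"
}

# restart_connector <connector-name>: includeTasks=true (Kafka Connect 3.0+)
# restarts the tasks as well as the connector instance.
restart_connector() {
  curl -s -X POST "${CONNECT_URL}/connectors/$1/restart?includeTasks=true"
}
```

For example, call apply_config with debezium-pg followed by your RDS endpoint, database user, password, database name, topic prefix, replication factor and partition count, in that order.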
