By Tian Yuan and Hui Shu.

In recent years, there has been much talk about multi-cloud and hybrid cloud. Multi-cloud and multi-cluster architectures can provide benefits such as high availability and deployment across multiple regions and zones. Both types of architecture can also reduce vendor dependency, which in turn offers a host of advantages: lower costs, less need to store all data with a single vendor, and the ability to take advantage of the best features each vendor has to offer, such as AI and GPU capabilities, without being locked in to one particular cloud vendor. The most discussed and most widely implemented multi-cloud use cases fall into three scenarios:

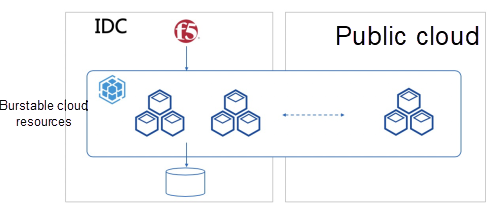

The first scenario deals with sudden bursts of resource demand and uses multi-cloud setups as backup resource pools, as shown in Figure 1. Daily operations of services run out of the user's on-premises data center (IDC). When there is a sudden burst of traffic, the service can be quickly expanded to clusters on a public cloud, such as Alibaba Cloud, to absorb the spike. This setup is generally suited for stateless services that are CPU- and memory-intensive, such as search, query, and computing services, because they can be scaled out quickly to meet the burst in demand.

Figure 1. Burstable cloud resources with multi-cloud
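The bursting decision in Figure 1 boils down to simple overflow arithmetic. The following is a minimal sketch, assuming the IDC's spare capacity can be expressed as a fixed number of replicas; the function `split_replicas` and its parameters are hypothetical and not part of any Alibaba Cloud API.

```python
def split_replicas(desired: int, idc_capacity: int) -> tuple[int, int]:
    """Place replicas on-premises first and overflow the rest to the public cloud.

    Returns (idc_replicas, cloud_replicas).
    """
    if desired < 0 or idc_capacity < 0:
        raise ValueError("replica counts must be non-negative")
    idc = min(desired, idc_capacity)  # fill the IDC up to its capacity
    cloud = desired - idc             # whatever is left bursts to the cloud
    return idc, cloud


# Normal day: the IDC absorbs all 8 replicas.
print(split_replicas(8, 10))   # (8, 0)
# Traffic spike: 25 replicas are needed, so 15 burst to the public cloud.
print(split_replicas(25, 10))  # (10, 15)
```

Because the services in this scenario are stateless, the cloud replicas can be released again once the spike passes, with no data to migrate back.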

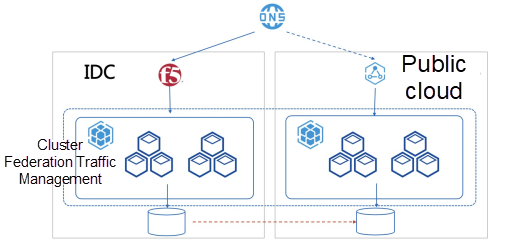

The second scenario deals with disaster recovery, as shown in Figure 2. Usually, one cluster runs the main service and the other is used for periodic backup. Alternatively, one cluster is responsible for reading and writing, and the other backup clusters are read only. If there is a problem with the main cluster, the service can be quickly switched to the backup clusters to avoid service interruptions. This is suitable for storage services with large data volumes.

Figure 2. Disaster recovery with multi-cloud

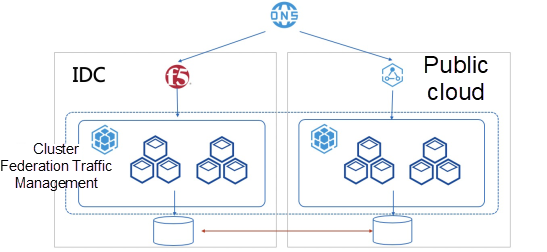

The third scenario is the multi-site active-active setup, as shown in Figure 3. It is similar to the preceding scenario, the major difference being that all clusters are read-write and data is synchronized in real time. This scenario is best suited for critical data such as global user credentials.

Figure 3. Multi-site active-active scenario

Multi-cloud does have its challenges, though. The top two are deploying applications across the whole setup and switching seamlessly between multi-cloud and hybrid cloud. Migrating traditional applications can be a daunting task as well. That is why a mature way to deliver and manage applications in a multi-cloud, multi-cluster environment is essential.

As early as 2013, established cloud computing vendors had already started to talk about multi-cloud as the next big thing.

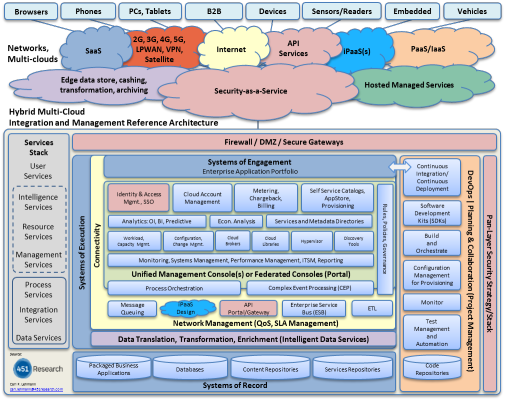

However, the sad reality is that every cloud platform and every data center has its own set of APIs and design language. So, these so-called "multi-cloud" architectures are nothing more than cloud A and B thrown together with a complex access layer acting as the translator. These kinds of multi-clouds and hybrid clouds are known for their complex architecture. There is no better proof than the bewildering solution illustrations from various vendors, as shown by the example in Figure 4.

Figure 4. Architecture of a traditional enterprise multi-cloud solution

These are multi-clouds or hybrid clouds in name only; they are actually just proprietary products from vendors. Different cloud platforms have different APIs and capabilities, so multi-cloud or hybrid cloud solutions built on these products are complex and confusing, and most of the time and money spent on them goes into adapting and integrating different products. For users, the result is just another form of lock-in. As a result, no ecosystem for multi-cloud could form, and a widely accepted way to deliver and implement true multi-cloud never materialized.

Things changed for the better with the rapid popularization of cloud-native concepts, and true multi-cloud has now reached a critical juncture.

Since the Cloud Native Computing Foundation (CNCF) was founded in 2015, it has incubated dozens of projects and attracted nearly 400 corporate members, over 63,000 contributors, and over 100,000 participants in official CNCF activities. The number of projects in the CNCF Landscape that comply with cloud-native standards has reached the thousands. The direct result of the rapid rise of Kubernetes and cloud-native technology is that Kubernetes is used on the majority of public clouds, and nearly all technology firms and corporate end users use Kubernetes to orchestrate and manage containerized applications in their data centers.

It is safe to say that Kubernetes is quickly becoming the de facto API for data centers worldwide.

Figure 5. Dawn of cloud-nativeness

With a standard API in place, multi-clouds and hybrid clouds are now able to show their true potential.

Kubernetes and its declarative container orchestration and management system have made application delivery increasingly standardized and completely decoupled from the underlying infrastructure. The implementation of cloud-native technology in public clouds and data centers makes it possible for applications to be "defined once and deployed everywhere".

The popularization of Kubernetes APIs in data centers around the world has finally laid a solid foundation for multi-cloud and hybrid cloud architectures. With the software industry's ever-growing appetite for productivity and cost-cutting, cloud computing powered by cloud-native technology is about to take the world by storm.

Kubernetes is the platform for platforms. Compared to container management platforms such as Apache Mesos and Docker Swarm, Kubernetes' biggest advantage is its focus on helping users build robust distributed applications quickly and conveniently with declarative APIs. At the same time, Kubernetes uses a unified model, namely the container design model and the controller mechanism, to continuously converge the actual state of the system toward the desired state.
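To illustrate what "converging to the desired state" means, here is a toy reconciliation loop. It is a minimal sketch, not how Kubernetes controllers are actually built (real controllers use watches, informers, and work queues); the `Cluster` class and `reconcile` function are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for a cluster: maps app name -> running replica count."""
    running: dict[str, int] = field(default_factory=dict)


def reconcile(desired: dict[str, int], cluster: Cluster) -> list[str]:
    """One controller pass: move the actual state toward the declared state.

    Returns the actions taken so that repeated calls can be observed converging.
    """
    actions = []
    for app, want in desired.items():
        have = cluster.running.get(app, 0)
        if have != want:
            actions.append(f"scale {app}: {have} -> {want}")
            cluster.running[app] = want
    # Anything still running that is no longer declared gets removed.
    for app in list(cluster.running):
        if app not in desired:
            actions.append(f"delete {app}")
            del cluster.running[app]
    return actions


c = Cluster(running={"web": 1, "legacy": 2})
spec = {"web": 3, "api": 2}
print(reconcile(spec, c))  # ['scale web: 1 -> 3', 'scale api: 0 -> 2', 'delete legacy']
print(reconcile(spec, c))  # [] (already converged)
```

Because the loop compares desired and actual state instead of replaying events, running it again is harmless. That idempotence is what lets a controller recover after failures simply by reconciling once more.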

With that advantage, coupled with the multi-cloud technology, Kubernetes users can build standard platform-grade services in a multi-cloud environment.

kubeCDN, a project for building self-hosted content delivery networks on Kubernetes, is a perfect example. Using cloud-native technology, it cleverly addresses many pain points of traditional CDN products, such as security concerns, service quality that depends on external factors, services that are not always cost-effective, and the risk of trade secret leaks. In terms of implementation, kubeCDN builds Kubernetes clusters in different regions and uses Amazon Route 53 to route each user's requests to the optimal cluster based on latency. As the foundation on which the CDN is built, Kubernetes helps users integrate infrastructure, while its APIs allow users to quickly distribute content and deploy applications.

Figure 6. CDNs built on multi-cloud Kubernetes
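The routing rule kubeCDN delegates to Route 53 can be modeled as "send the user to the healthy cluster with the lowest latency." The sketch below is a simplified model, assuming a precomputed latency table; Route 53's own latency measurements are not reproduced, and the function name and cluster names are hypothetical.

```python
def pick_cluster(latencies_ms: dict[str, float], healthy: set[str]) -> str:
    """Return the healthy cluster with the lowest measured latency for this user."""
    candidates = {name: ms for name, ms in latencies_ms.items() if name in healthy}
    if not candidates:
        raise LookupError("no healthy cluster available")
    return min(candidates, key=candidates.get)


lat = {"us-west": 120.0, "eu-central": 35.0, "ap-southeast": 210.0}
print(pick_cluster(lat, healthy={"us-west", "eu-central", "ap-southeast"}))  # eu-central
# If eu-central fails, the same rule falls back to the next-best cluster.
print(pick_cluster(lat, healthy={"us-west", "ap-southeast"}))  # us-west
```

The health filter is what turns latency routing into disaster recovery: losing one cluster only shifts users to the next-closest one.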

Multi-cloud Kubernetes provides kubeCDN with disaster recovery and the ability to use resources from multiple clusters. At the same time, Kubernetes APIs reduce the workload of building a global-scale CDN to a few hundred lines of code. Multi-clouds and hybrid clouds based on Kubernetes are bringing robustness and imagination to different industries.

If multi-cloud is the future of cloud computing, then what is the future of multi-cloud?

The goal of the cloud-native technology is to implement the continuous delivery of what we call de facto applications. These applications should solve the three biggest problems that face us: resiliency, usability, and portability.

Resilience means service reliability and the agility to explore and innovate, which is what customers value most. Another focus of application resilience is the ability to deploy, deliver, and scale quickly on the cloud. This requires a proven application delivery system and the support of a cloud-native development framework.

Application resilience also relies on good usability. With the microservice architecture becoming mainstream, different parts of an application need a better way to control how they share data in order to optimize performance. This is where Service Mesh shines.

Resilience, usability, combined with portability, make multi-clouds and hybrid clouds unparalleled and unstoppable.

While Kubernetes is the operating system of the cloud era, applications built on this operating system still need a range of development, delivery, and management tools. The value of cloud computing comes down to the value of the applications themselves, and how well vendors serve these applications will determine who comes out on top in this new round of competition among cloud vendors.

On the road to the cloud's holy grail, three things will prove instrumental: a cloud-native application definition and standard, the ability to govern applications across clouds using Service Mesh, and a multi-cloud application delivery and management system based on Kubernetes.

There are many Kubernetes projects for multi-cluster management, such as Federation V2, Cluster Registry, and kubemci. Before all of these came Federation V1, the grandfather of Kubernetes multi-cluster projects, which has since been deprecated. The main reason is that V1 introduced a separate Federation API layer in place of the widely accepted Kubernetes API, which went against the development philosophy of the cloud-native community.

The Federation V2 project, led by Red Hat, integrates with the Kubernetes API through plug-ins in the form of CustomResourceDefinitions (CRDs). It provides a way to convert any Kubernetes API type into a multi-cluster federated type, along with a corresponding controller that pushes these federated objects. Unlike V1 with its Federation Scheduler, V2 is not concerned with complex push policies; it only distributes these objects to clusters defined in advance.

Note that the objects pushed to these clusters come from the same template, with different metadata. In addition, each object to be pushed must first be converted from its original type to the corresponding federated type, which is convenient only when the number of types is limited.
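A rough model of a federated type makes the "one template, different metadata" behavior concrete. This sketch loosely follows the `template`, `placement`, and `overrides` sections of KubeFed federated types, but it uses plain dicts and a simplified "/"-separated override path; the `propagate` helper is hypothetical and is not the KubeFed API.

```python
import copy


def propagate(federated: dict) -> dict:
    """Expand a federated object into one concrete object per placed cluster.

    Every cluster receives a deep copy of the same template; only the fields
    listed in that cluster's overrides differ.
    """
    template = federated["template"]
    overrides = federated.get("overrides", {})
    result = {}
    for cluster in federated["placement"]:
        obj = copy.deepcopy(template)  # never mutate the shared template
        for path, value in overrides.get(cluster, {}).items():
            # Simplified override path, e.g. "spec/replicas".
            *parents, leaf = path.split("/")
            node = obj
            for key in parents:
                node = node.setdefault(key, {})
            node[leaf] = value
        result[cluster] = obj
    return result


fed = {
    "template": {"kind": "Deployment", "metadata": {"name": "web"}, "spec": {"replicas": 2}},
    "placement": ["cluster-a", "cluster-b"],
    "overrides": {"cluster-b": {"spec/replicas": 5, "metadata/labels": {"region": "eu"}}},
}
out = propagate(fed)
print(out["cluster-a"]["spec"]["replicas"], out["cluster-b"]["spec"]["replicas"])  # 2 5
```

The limitation discussed in the text shows up here: `propagate` only helps once a federated wrapper exists for `Deployment`, and every additional type must be converted to its own federated counterpart first.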

This means that Federation V2 is mainly designed to push cluster configuration information such as role-based access control (RBAC) policies and other policies to multiple clusters. These types of resources are fixed, and the configuration policy format of each cluster is similar.

Therefore, Federation V2 is, for now, essentially a mechanism for pushing configuration to multiple clusters.

However, a multi-cloud delivery system often needs more complex decision-making logic. For example, if Cluster A has a low workload, more applications should be pushed to it. In another example, a user may have hundreds or even thousands of second-party and third-party applications (all CRD + Operator) to deliver across clouds. In the current Federation V2 system, the cost of maintaining a federated type for each of these CRDs is very high.
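Logic such as "push more applications to the lightly loaded cluster" goes beyond copying one template everywhere. A minimal greedy sketch, assuming each cluster reports a single utilization percentage and every application adds the same hypothetical cost; `place_by_load` is illustrative only.

```python
def place_by_load(apps: list, load: dict, cost_per_app: int = 10) -> dict:
    """Greedily assign each app to the cluster with the lowest current load.

    `load` maps cluster name -> utilization in percent. Each placement adds
    `cost_per_app` (an assumed uniform cost) so that one idle cluster does not
    absorb every application.
    """
    current = dict(load)                        # work on a copy of the input
    placement = {name: [] for name in load}
    for app in apps:
        target = min(current, key=current.get)  # ties go to the first cluster listed
        placement[target].append(app)
        current[target] += cost_per_app
    return placement


result = place_by_load(
    ["app-1", "app-2", "app-3", "app-4", "app-5"],
    {"cluster-a": 20, "cluster-b": 45},
)
print(result)  # {'cluster-a': ['app-1', 'app-2', 'app-3', 'app-5'], 'cluster-b': ['app-4']}
```

A real system would feed live metrics into a decision like this, which is exactly the kind of policy a pure configuration-pushing mechanism does not provide.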

What should a multi-cloud multi-cluster application delivery management system be capable of? There are three technical points worth considering:

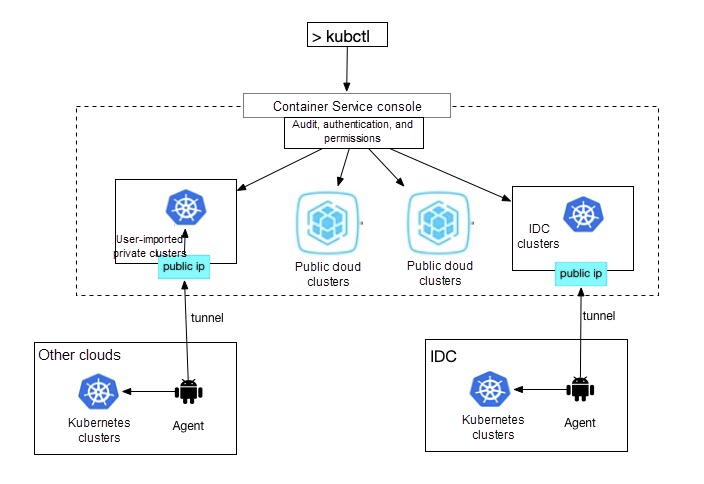

To connect Kubernetes clusters to a multi-cloud management system through Kubernetes API hosting, the main technical challenge lies in establishing "cluster tunnels".

The cluster tunnel installs an agent in a Kubernetes cluster on a private network, which makes the cluster accessible from the public network. With this tunnel, users can access the Kubernetes cluster as if it were running on a public cloud, without public IP addresses, and use, manage, and monitor it anytime, anywhere. Features such as authentication, authorization, logging, auditing, and the console are all controlled from a single point, no matter how many clusters there are.

The architecture of the cluster tunnel is simple, as shown in Figure 7, and has two layers. The lower layer consists of the hosted clusters, each with its own agent. The agents have two main functions: they run in the hosted clusters and can access resources on the private networks where the clusters reside, and they establish tunnels that connect those private networks to the public cloud through stubs (access nodes). The upper layer is composed of clouds on the public network and is responsible for centralized authentication, authorization, and auditing. Commands for a private Kubernetes cluster are passed to its agent through the tunnel.

Figure 7. Multi-cloud Kubernetes tunnel architecture
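The key property of the architecture in Figure 7 is that the agent dials out, so nothing inside the private network has to accept inbound connections. A minimal single-connection sketch using Python's standard `socket` module; the line-based text protocol, the `Stub` class, and `fake_api_server` are hypothetical simplifications (a real tunnel would multiplex many streams and carry raw API server traffic).

```python
import socket
import threading


def fake_api_server(command: str) -> str:
    """Hypothetical stand-in for the private cluster's API server."""
    return f"ok: {command}"


def run_agent(stub_addr) -> None:
    """Agent in the private network: dials OUT to the stub, then serves commands.

    Because the agent opens the connection, the cluster needs no public IP
    address and no inbound firewall rule.
    """
    with socket.create_connection(stub_addr) as conn:
        with conn.makefile("r", encoding="utf-8") as reader:
            for line in reader:  # one newline-terminated command per line
                reply = fake_api_server(line.rstrip("\n"))
                conn.sendall((reply + "\n").encode("utf-8"))


class Stub:
    """Public-side access node that accepts one agent tunnel and relays commands."""

    def __init__(self) -> None:
        self._listener = socket.create_server(("127.0.0.1", 0))  # ephemeral port for the demo
        self.address = self._listener.getsockname()
        self._conn = None
        self._reader = None

    def wait_for_agent(self) -> None:
        """Block until an agent dials in, then keep its connection as the tunnel."""
        self._conn, _ = self._listener.accept()
        self._reader = self._conn.makefile("r", encoding="utf-8")

    def send(self, command: str) -> str:
        """Relay a client command through the tunnel and return the agent's reply."""
        self._conn.sendall((command + "\n").encode("utf-8"))
        return self._reader.readline().rstrip("\n")

    def close(self) -> None:
        self._reader.close()
        self._conn.close()
        self._listener.close()


stub = Stub()
agent = threading.Thread(target=run_agent, args=(stub.address,), daemon=True)
agent.start()
stub.wait_for_agent()
reply = stub.send("get pods")
print(reply)  # ok: get pods
stub.close()
agent.join(timeout=1)
```

Authentication, authorization, and auditing would sit in front of `Stub.send` on the public side, which is why the private cluster's credentials never have to leave the private network.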

By using this mechanism, users can access hosted clusters from a centralized location, while public clouds do not need to store the user credentials of private clusters. To implement cluster tunnels, the multi-cloud architecture must overcome two major challenges:

Neither challenge is fully addressed by existing open source tunnel libraries or by native layer-4 and layer-7 forwarding. Here are some examples:

Alibaba Cloud Container Service for Kubernetes (ACK) follows the application-oriented multi-cloud and multi-cluster architecture described above. It starts by importing existing clusters, as shown in Figure 8.

Figure 8. Import existing clusters from Alibaba Cloud Container Service for Kubernetes

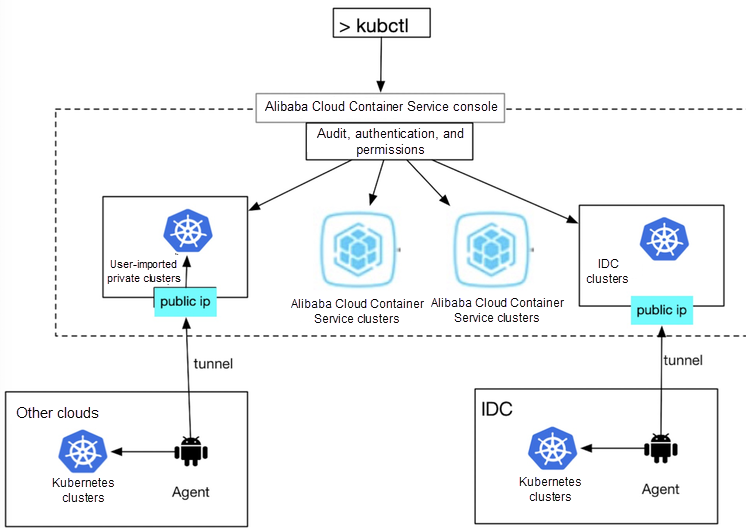

Figure 9. Architecture of the Alibaba Cloud cluster tunnel

As shown in Figure 9, this architecture is almost the same as the one described in the previous section. ACK installs an agent in the user's cluster. As long as the agent can access the public network, you can use your cluster through ACK, rely on ACK for authentication, and work with native Kubernetes APIs.

Commands such as kubectl exec and kubectl port-forward use non-HTTP persistent connections, while kubectl logs -f returns responses that last for a long time. These types of connections prevent HTTP/2 multiplexing from functioning, and the HTTP/1.1 implementation in Go's official library does not support protocol upgrades, so native layer-7 HTTP forwarding is out of the question as well.
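To make the distinction concrete, here is a sketch of how a layer-7 forwarder might recognize a protocol-upgrade request, following the HTTP/1.1 Upgrade mechanism (an `Upgrade` header plus the `upgrade` token in `Connection`). The function name is hypothetical, and headers are modeled as a plain dict for brevity.

```python
def is_upgrade_request(headers: dict) -> bool:
    """Return True if an HTTP/1.1 request asks to switch protocols.

    Header names and Connection tokens are compared case-insensitively.
    """
    lowered = {k.lower(): v for k, v in headers.items()}
    tokens = {t.strip().lower() for t in lowered.get("connection", "").split(",")}
    return "upgrade" in tokens and bool(lowered.get("upgrade", "").strip())


exec_req = {"Connection": "Upgrade", "Upgrade": "SPDY/3.1"}  # exec-style stream upgrade
logs_req = {"Connection": "keep-alive"}                      # logs -f: ordinary long GET
print(is_upgrade_request(exec_req))  # True
print(is_upgrade_request(logs_req))  # False
```

A forwarder that sees True has to stop treating the connection as request/response traffic and copy bytes in both directions until either side closes. A streaming logs request is not an upgrade at all; its response body simply keeps arriving, so the forwarder must pass data through as it comes rather than wait for the response to finish.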

To solve these two problems, ACK tunneling adopts the following strategies.

By design, the connection between the agent and the stub is transparent to users. Problems with this connection should not prevent the whole system from functioning, as long as the client and the API server of the target cluster are running properly. Therefore, high availability capability is built into the ACK tunnel to provide fault tolerance and decrease downtime.

Multiple agents can connect to the stub at the same time to provide fault tolerance and load balancing.
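The agent-side redundancy can be pictured as a pool on the stub that rotates across connected agents and skips any that have failed. A minimal sketch, assuming the stub can tell which agents are down; `AgentPool` and its methods are hypothetical, not part of the ACK tunnel.

```python
import itertools


class AgentPool:
    """Stub-side registry of agent tunnels for one cluster (hypothetical model).

    Requests rotate across connected agents (load balancing) and skip any agent
    marked down (fault tolerance).
    """

    def __init__(self, agents: list) -> None:
        self._agents = list(agents)
        self._down = set()
        self._cursor = itertools.cycle(self._agents)

    def mark_down(self, agent: str) -> None:
        self._down.add(agent)

    def mark_up(self, agent: str) -> None:
        self._down.discard(agent)

    def next_agent(self) -> str:
        # Try each agent at most once per call before giving up.
        for _ in range(len(self._agents)):
            agent = next(self._cursor)
            if agent not in self._down:
                return agent
        raise RuntimeError("no agent tunnel available")


pool = AgentPool(["agent-1", "agent-2", "agent-3"])
print([pool.next_agent() for _ in range(3)])  # ['agent-1', 'agent-2', 'agent-3']
pool.mark_down("agent-2")
print([pool.next_agent() for _ in range(3)])  # ['agent-1', 'agent-3', 'agent-1']
```

Rotation here happens per request for simplicity; a real tunnel would presumably keep all traffic of one long-lived client connection on the same agent.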

Client requests are sent to the same IP address, that is, to the same stub, for processing. If there were multiple stubs behind that IP address, the system would have to distribute requests among them with load balancing. However, load balancing causes a major problem here: persistent connections are used, and requests from a client may depend on context held by a particular stub, so layer-4 TCP forwarding that spreads such traffic across stubs produces errors. Therefore, only one stub can be active at any given time.

To provide high availability while only one stub is active, the ACK tunnel relies on Kubernetes-native mechanisms such as the Lease API. Although stub high availability does not provide load balancing the way the agent side does, it does support rolling upgrades and other capabilities.
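Lease-based election keeps exactly one stub active. The sketch below is an in-memory model whose field names mirror the Kubernetes Lease spec (`holderIdentity`, `leaseDurationSeconds`, `renewTime`); it is an assumption-laden simplification, not client-go's leader election. A real election relies on the API server's optimistic concurrency (`resourceVersion`) to make each update atomic, which this single-process model skips.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """In-memory stand-in for a coordination.k8s.io/v1 Lease (simplified)."""
    holder_identity: Optional[str] = None
    lease_duration_seconds: float = 15.0
    renew_time: float = 0.0


def try_acquire_or_renew(lease: Lease, candidate: str, now: float) -> bool:
    """Return True if `candidate` holds the lease after this call."""
    expired = now - lease.renew_time > lease.lease_duration_seconds
    if lease.holder_identity in (None, candidate) or expired:
        lease.holder_identity = candidate
        lease.renew_time = now
        return True
    return False


lease = Lease(lease_duration_seconds=15)
print(try_acquire_or_renew(lease, "stub-a", now=0))   # True: stub-a becomes active
print(try_acquire_or_renew(lease, "stub-b", now=5))   # False: stub-a's lease is still valid
print(try_acquire_or_renew(lease, "stub-a", now=10))  # True: stub-a renews
print(try_acquire_or_renew(lease, "stub-b", now=30))  # True: stub-a stopped renewing, stub-b takes over
```

This is also how a rolling upgrade can proceed: the new stub waits as a candidate until the old one stops renewing, then takes over the single active role.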

The Alibaba Cloud cluster tunnel provides a solid foundation for multi-cloud management.

The boundary of cloud computing is being quickly redrawn by technology and open source. More and more applications and frameworks are no longer directly tied to any cloud by design. After all, you cannot stop users from worrying and freaking out over competition, and you certainly cannot prevent users from deploying Kubernetes to the clouds and data centers around the world. Kubernetes will be the highway that ties cloud and applications together, and it will deliver applications to anywhere in the world in an efficient and standardized manner. The delivery target can be the end users or Platform-as-a-Service (PaaS) or Serverless, or even some other application hosting method we cannot imagine yet. The value of the cloud will come down to the application itself.

The multi-cloud and hybrid cloud era is here. Application-oriented multi-cloud and multi-cluster architecture is the inevitable trend of cloud computing. Your cloud can run anywhere, and we will be there to help you manage it. Let us embrace the application-oriented cloud, cloud-native computing, and the values of applications together.
