From 2009 to 2016, I took part in the technical preparations for eight Double 11 Shopping Festivals. Double 11 in 2009 did not leave much of an impression on me. Taobao was already very large at that time, with a daily transaction volume in the hundreds of millions of CNY, while the transaction volume of TMall's Double 11 was only about 50 million CNY, so it didn't seem significant to me.

Over the next few years, we spent several months carefully preparing for each festival, covering monitoring and alerting, capacity planning, and dependency management, and we worked out various methodologies. During this process, we learned a lot of very meaningful lessons. Today, if someone asks me how to prepare for Double 11 or for other promotional events, I reply with a few very simple methods: capacity planning, traffic restriction, and downgrading.

In 2008, I joined Taobao and worked directly on Taobao Mall R&D. Taobao Mall later became TMall. At that time, Taobao Mall's entire technical system was completely independent of Taobao's. Its membership, merchandise, marketing, recommendations, credits, and forums were all separate from Taobao. The two systems were completely independent; the only connection was that they shared all membership data.

At the end of 2008, the "Cobblestone Project" was launched, connecting the data and business of the two systems. The core effect was to make business development very flexible. This project greatly advanced the development of Taobao Mall. Later, Taobao Mall transitioned into the TMall brand.

Another significant result of this project was that it achieved architectural, business, and technical scalability. We turned the entire business into services and extracted all the common elements related to e-commerce, including the membership system, commodity center, trading center, marketing, stores, and recommendation. Based on this system, it was very easy to build services like Juhuasuan, 3C, and Alitrip. After breaking up the original architecture and extracting all the common services, the upper-level business could move fast, which solved the problem of business scalability.

This project was the first large-scale use of middleware. The three major middleware products, HSF, Notify, and TDDL, underwent significant innovation and were widely used. The problem with a distributed system is that it is split into many sub-systems, which also contributes to scalability issues. This project also resulted in technological advances. Both business development and technological scalability reached new heights.

Since 2012, I have led the middleware product line and the high availability architecture team. But why capacity planning? Double 11 drove many of Alibaba's great technical innovations, and capacity planning was one of them.

When I was preparing for Double 11 this year, the boss asked me, "What risks are we expecting this year?" I told him that there were definitely a lot of risks, but if a system eventually experiences issues, it'd probably be a transaction-related system. Alibaba's systems are divided into two parts: some are to promote transactions, for example, recommendations, shopping guide, search, channels, etc., which are for all kinds of marketing; others are for transactions, red envelopes, discounts, etc.

The reason is simple: the shopping guide system leaves you enough time to make decisions. Its traffic does not increase instantly, which leaves you about 30 minutes to react. The trading system, however, has no such buffer. Once the traffic arrives, there is no time to respond or decide, and all actions must be executed automatically by the system. This is why capacity planning is so important.

In the early years, the natural growth of our business was very fast. I clearly remember that, at one point, the load was relatively high for a while whenever customers opened a product details page while shopping. Alibaba brought in tuning and optimization specialists to maximize virtual machine performance, but the system would still hang after a few days. Fortunately, once resizing was complete, the system recovered after a restart. What does this mean? In those early years at Taobao, there was no concept of capacity preparation and estimation, and the capacity required by the entire system was unknown.

New business was constantly coming online, and business operations and promotions were also very frequent. I remember there being very large promotions. The membership system is very important, because all the services access user data from the member center, including the buyers' data and the sellers' data. At that time, the cache capacity of a single physical machine was about 80,000 requests per second. Looking back today, that seems very low. However, the request rate was already reaching 60,000 per second outside of the peak period, which is very large.

We listed all the systems that access the member center to see which ones were not related to trading, and these systems were fully or partly disabled. For example, systems related to the merchants and communities were disabled. Various problems occurred during this process. In summary, we had no idea about capacity planning. The essence of capacity planning is: how many servers are required by a certain system at a certain time? A certain and quantified number is required.
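To make the question "how many servers does a system need at a given time?" concrete, here is a minimal sketch. The function name, the `headroom` parameter, and the example numbers are illustrative assumptions, not figures from Alibaba's actual planning process.

```python
import math

def servers_needed(peak_qps: float, per_server_qps: float, headroom: float = 0.5) -> int:
    """Return how many servers are needed so that, at peak, each server
    runs at no more than `headroom` of its measured capacity.

    All inputs are hypothetical: peak_qps is the expected peak request
    rate, per_server_qps is the measured capacity of one server.
    """
    if per_server_qps <= 0 or not 0 < headroom <= 1:
        raise ValueError("per_server_qps must be > 0 and headroom in (0, 1]")
    usable_qps = per_server_qps * headroom
    return math.ceil(peak_qps / usable_qps)

# Hypothetical event: 50,000 requests per second at peak, 400 per server.
print(servers_needed(50_000, 400, headroom=0.5))
print(servers_needed(50_000, 400, headroom=1.0))
```

The hard part in practice is not this division but obtaining a trustworthy `per_server_qps` and `peak_qps`, which is what the rest of this article is about.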

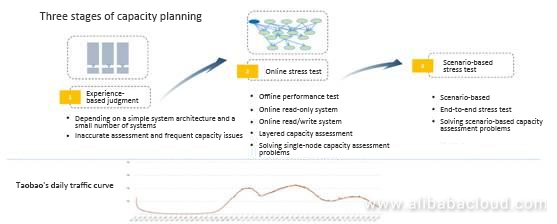

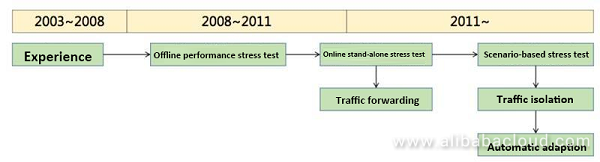

The entire process of capacity planning took seven or eight years, having a total of three stages.

The first stage was very early in our process, and at that time we evaluated the capacity based on experiences. We determined the capacity according to the load, system response time, and a variety of performance factors. At that time, I asked an executive, "How can I determine whether a server is sufficient, and how much traffic it can support?" He told me an empirical number: each server supports 1 million PVs.

At that time, the traffic curve had 3 peak periods in a day: 9 a.m. to 10 a.m., 2 or 3 p.m. to 5 p.m., and 8 p.m. to 10 p.m. Why is it 1 million? This is an empirical value, and also has a scientific basis. We hoped that the online traffic could be supported with half of the servers disabled, and all servers enabled would be able to support the traffic during peak periods. In fact, a single machine could support 3.2 million PVs, which was the empirical value at that time.

Of course, this empirical value worked back then, since the system architecture was simple at that time. It can be understood that all Taobao logic and modules were concentrated in one system, so peak periods were different depending on the module, which was solved through preemption or scheduling the internal CPU of a server at the OS level.

The second stage was the online stress test. Since the systems were distributed, problems could occur. For example, calls by the member and the transaction were originally on one server, but after they were separated, the traffic ratio was unclear. As a result, some stress test mechanisms had to be introduced. We introduced some commercial stress test tools for the stress test.

We had two purposes at that time: first, to perform a stress test before the system release, to determine whether the response time and the load could meet the release requirements; second, to accurately evaluate how many servers had to be brought online based on the stress test result. The second purpose is difficult to simulate. I remember the performance stress test team also launched a project called "Online/offline capacity relationship". Since the online/offline environments and data are completely different, there is no congruence, which makes it impossible to determine online needs through offline stress test metrics.

So, what could we do? First, we performed stress tests directly online. At that time, this decision seemed very crazy; no company, including Alibaba, had ever performed stress tests directly online. We wrote a tool that extracted the previous day's logs and replayed them directly against the online system. For example, we set a predetermined threshold on response time and load, and recorded the QPS at which that threshold was triggered.
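The threshold idea can be sketched as a simple ramp: increase the replayed traffic step by step and keep the highest QPS at which the response time stays within the limit. This is only an illustration; `toy_server` is a hypothetical stand-in for a real replay run, and none of the names come from the original tool.

```python
from typing import Callable

def find_max_qps(measure_rt_ms: Callable[[int], float],
                 rt_limit_ms: float,
                 start_qps: int = 100,
                 step_qps: int = 100,
                 max_qps: int = 100_000) -> int:
    """Ramp the replayed traffic up and return the highest QPS whose
    measured response time stayed within rt_limit_ms (0 if none did)."""
    best = 0
    qps = start_qps
    while qps <= max_qps:
        if measure_rt_ms(qps) > rt_limit_ms:
            break  # threshold triggered: stop ramping
        best = qps
        qps += step_qps
    return best

def toy_server(qps: int) -> float:
    """Hypothetical model: response time grows sharply as load nears 1,000 QPS."""
    capacity = 1_000
    if qps >= capacity:
        return float("inf")
    return 20.0 * capacity / (capacity - qps)

print(find_max_qps(toy_server, rt_limit_ms=100.0))
```

In a real run, `measure_rt_ms` would replay logged requests at the given rate and report an observed latency, and the limit would typically combine response time with machine load.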

Second, we split the traffic. Alibaba's entire architecture is unified and based on a single set of middleware, so we could use soft load balancing and weight adjustment. For example, we routed online traffic to a server according to its weight, and kept raising that weight so that more and more application-side and server-side traffic migrated to that one server. As its weight increased, its load rose and its QPS rose with it. The whole process was recorded.
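A rough sketch of weight-based traffic splitting follows. It assumes a plain weighted load balancer in which each server receives a share of cluster traffic proportional to its weight; the function name, the QPS limit used as the stop condition, and the numbers are hypothetical.

```python
def ramp_weight(weights: dict, target: str, cluster_qps: float,
                qps_limit: float, step: int = 1, max_weight: int = 1000) -> list:
    """Raise the weight of `target` step by step and record (weight, qps)
    until the traffic routed to it would exceed qps_limit."""
    current = dict(weights)  # work on a copy so the caller's weights are untouched
    history = []
    while current[target] <= max_weight:
        qps = cluster_qps * current[target] / sum(current.values())
        if qps > qps_limit:
            break
        history.append((current[target], qps))
        current[target] += step
    return history

# Four equally weighted servers sharing 4,000 QPS of real traffic.
weights = {"s1": 10, "s2": 10, "s3": 10, "s4": 10}
history = ramp_weight(weights, "s1", cluster_qps=4_000, qps_limit=1_600)
print(history[0], history[-1])
```

In the process described above, the stop condition would be the observed load and response time of the server rather than a fixed QPS number; the recorded history is what yields the per-server capacity.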

These are two scenarios: simulation and log playback. Another is to use real traffic, which is automated to generate data every day. Once implemented, it replaced the process performed by offline performance stress tests. This allowed each system to get performance metrics on their own every day. Performance metrics for project releases and routine demand releases could be viewed directly. Later, the performance test team was disbanded.

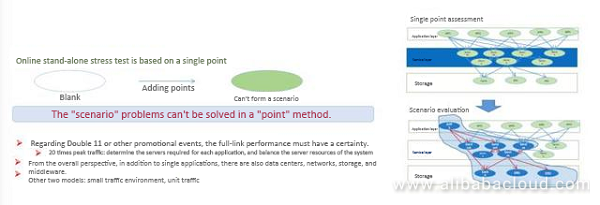

There was a problem: it was not scenario-based. Scenarios are very important. For example, if I want to buy a piece of clothing, I usually search for it in the website's search box or in the category navigation, add it to the shopping cart, and pay for it. The Double 11 is product-focused, and some best sellers may be presented separately as a single channel page. The Double 12 is shop-focused. Different KPIs apply.

The server traffic related to products is high on Double 11, and more servers related to products are required. Likewise, more servers related to shops are required on Double 12. This differs from the usual traffic performance. It is also inaccurate to calculate the scenario-based traffic with the usual capacity.

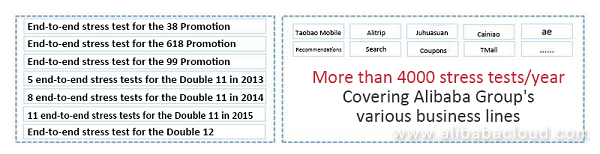

We also did something important: an end-to-end stress test. We started doing it in 2013, and it was never made public. This was a game changer. The Double 11 in 2009 was the smoothest, because there was no significant traffic. We can ignore it. In 2010, 2011, and 2012, there were always some minor problems during each Double 11, and we didn't know what to do.

In 2013, after the end-to-end stress test product was implemented, there was an essential change. The performance was excellent in both 2013 and 2014. This was the beginning of a new era. For marketing and promotion, there is a peak, before which the traffic is very low, and after which the traffic suddenly increases. This is a very effective way of dealing with this situation.

Online stress tests and scenario-based end-to-end stress tests are the focus.

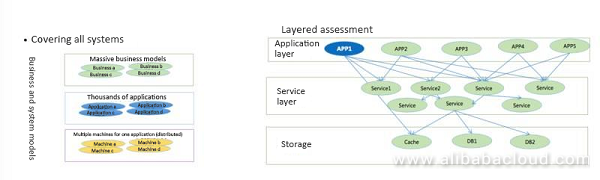

Online stress tests were needed mainly because of the diversity of Taobao's business models. Once a distributed model was adopted, various services appeared, which helped to free up productivity. For example, in the past, more than 100 people were developing a single system, which was very challenging. After the distributed transformation, all services were extracted, and productivity improved.

Second, each business runs on a very large number of machines, and the number of applications per business is also large. We layered the computation, deriving Alibaba's cluster capacity from the capacity of each system. We started with a single application system: traffic was directed to it through load splitting, and its capacity was computed once the run was complete. From this, we computed how much traffic the whole cluster of, for example, 100 servers could support. Of course, database capacity was difficult to compute and had to be planned in advance. In general, database capacity was reserved years ahead, which made it difficult.

There may be a problem with computing the capacity and size of the cluster. Why? Because once a system was split, it may be split multiple times. Although the system's dependencies can be sorted out by tools, we didn't have time to troubleshoot small problems across the whole cluster in this scenario. Once a small problem occurred, the entire cluster would go down, which couldn't be avoided.

This system was in continuous use until 2013. It was quite reasonable: the capacity of each system was computed, then the capacity of each cluster, then the capacity of the large cluster. However, it was not a very good solution. One of its advantages was that it could run automatically to compute the system capacity every day, and could ensure that the system's daily performance metrics did not degrade.
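The bottom-up computation described above can be sketched as follows: each application cluster's capacity is its per-server capacity times its server count, and the capacity of the whole chain is limited by whichever application runs out first. The application names, call ratios, and numbers are hypothetical.

```python
def bottleneck(clusters: dict, calls_per_entry_request: dict) -> tuple:
    """Return (app_name, supported_entry_qps) for the application that
    limits the whole chain.

    clusters maps app name -> (per_server_qps, server_count).
    calls_per_entry_request maps app name -> how many calls one entry
    request makes to that app (an assumed, measured ratio).
    """
    limiting_app, limiting_qps = None, float("inf")
    for name, (per_server_qps, servers) in clusters.items():
        cluster_qps = per_server_qps * servers
        supported = cluster_qps / calls_per_entry_request[name]
        if supported < limiting_qps:
            limiting_app, limiting_qps = name, supported
    return limiting_app, limiting_qps

clusters = {"detail": (500, 100), "member": (2000, 20), "trade": (300, 80)}
calls = {"detail": 1, "member": 4, "trade": 0.5}
print(bottleneck(clusters, calls))
```

The weakness is visible in the inputs: any application or dependency missing from `clusters` or `calls` is silently ignored, so the estimate is only as good as the dependency map.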

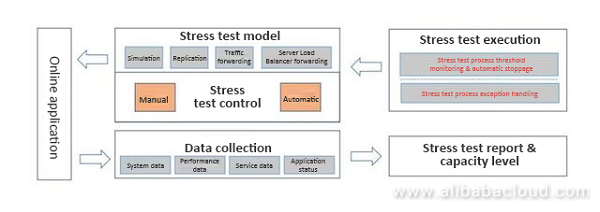

This system supported Double 11 in 2013. It was implemented through several methods, including simulation, traffic replication, traffic forwarding, and load-balanced traffic. The whole system was automated and ran every week. A report was generated from the data for the day before and the day after each release, showing whether any performance degradation had occurred. The capacity required for the event was prepared based on the computed values. Here is some data: there were 5 days of automatic stress tests every month. At that frequency, running performance stress tests manually would have been impossible, which is why the process was automated.
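As an illustration of the before/after release report, the sketch below flags applications whose measured capacity dropped by more than a tolerance. The 5% tolerance, the application names, and the numbers are assumptions made for the example.

```python
def find_regressions(results: dict, tolerance: float = 0.05) -> list:
    """Return the apps whose capacity after a release is more than
    `tolerance` below the capacity measured before it.

    results maps app name -> (qps_before_release, qps_after_release).
    """
    flagged = []
    for app, (before, after) in sorted(results.items()):
        if after < before * (1 - tolerance):
            flagged.append(app)
    return flagged

daily_results = {
    "cart": (1200, 1190),    # within tolerance
    "detail": (900, 800),    # dropped by about 11%
    "search": (500, 520),    # improved
}
print(find_regressions(daily_results))
```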

I also talked about some shortcomings. The whole capacity was estimated based on the capacity of each point. The biggest problem was that no one could figure out what the whole architecture was like, and an overall collapse would occur if some dependencies were missed system-wide.

Why did we do the scenario-based capacity assessment?

Another reason we needed to do scenario-based stress tests was that the background traffic was mostly split, which means that the real traffic was used. The real traffic was actually very low in volume relative to traffic during events. Without background traffic, the network devices and switches in the data center couldn't run at maximum load, so these problems wouldn't be exposed. The second problem is the certainty of the scenario. Each one has a different shopping process. The system resources for different processes must be determined in order to support the largest amount of traffic with the fewest number of servers.

Based on this, we argued about how to best perform scenario-based stress tests. The first method was to isolate a small environment from Taobao, and deploy more than 100 systems for it. Then introduce traffic into the environment, and let clusters run at maximum load. It solved the dependency problem, and the environment could verify if there was a dependency problem. However, the environment problem couldn't be solved. One year, one of our company's businesses adopted a solution similar to the small environment instead of this solution, resulting in maximum load on the ingress switches.

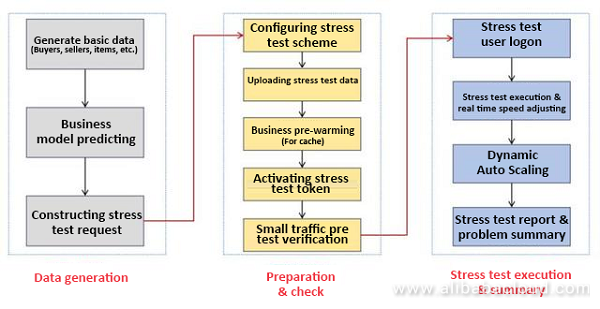

A simpler and more reliable assessment tool was needed, which was the scenario-based end-to-end stress test tool. We have been using this system since 2013. First, we need to generate data, and the generated traffic needs to closely resemble the real situation. Since decisions can't be made during the peak mentioned above, what can we do? Is it possible to simulate the peak at 0 o'clock in advance? We want to simulate all traffic. It is an ideal architecture, but there are also a lot of challenges.

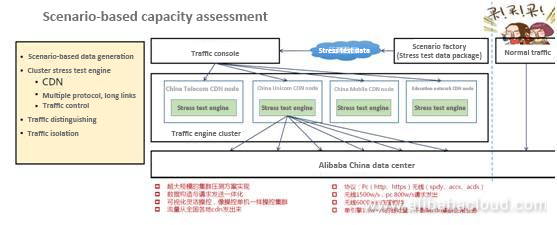

We must generate data as accurately as possible, and simulate the various scenarios, such as how coupons are used, the proportion of the items in shopping carts, how many items are placed in one order, how many items are submitted to Alipay, etc. The amount of data is growing every year. For example, in 2015, there was about 1 TB of data. This 1 TB of data was transmitted to the data center and then forwarded to the stress test node. This is the stress test cluster. It is a cluster stress test tool, which can generate very large amounts of traffic, in line with the amount of data on Double 11.
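A toy version of scenario-based data construction is sketched below. It assumes a scenario is described by two parameters, the coupon usage rate and the distribution of items per order; the real data model is far richer, and every name here is hypothetical.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class SyntheticOrder:
    buyer_id: int
    item_count: int
    uses_coupon: bool

def generate_orders(n: int, coupon_rate: float,
                    item_count_weights: dict, seed: int = 0) -> list:
    """Generate n synthetic orders that follow the given scenario mix.

    item_count_weights maps items-per-order -> relative weight.
    A fixed seed keeps the generated data set reproducible.
    """
    rng = random.Random(seed)
    counts = list(item_count_weights.keys())
    weights = list(item_count_weights.values())
    orders = []
    for buyer_id in range(n):
        orders.append(SyntheticOrder(
            buyer_id=buyer_id,
            item_count=rng.choices(counts, weights=weights, k=1)[0],
            uses_coupon=rng.random() < coupon_rate,
        ))
    return orders

orders = generate_orders(1_000, coupon_rate=0.3,
                         item_count_weights={1: 6, 2: 3, 3: 1}, seed=42)
print(len(orders), sum(o.uses_coupon for o in orders))
```

Changing the mix (for example, a higher coupon rate or more items per order) shifts load between downstream systems, which is why the scenario has to match the event being prepared for.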

The cluster was deployed on a CDN node to generate a large amount of traffic. There are some technical points: the stress test tool supports multiple protocols, such as HTTPS, which requires performance improvements. Traffic control is also required to adjust traffic based on different scenarios. Third, traffic needs to be distinguished. The picture on the right reflects the real traffic, which is all online. It is impossible to simulate this environment offline, otherwise it will affect the normal online traffic, so the normal traffic and the stress test traffic should be completely separate. The fourth is the isolation of traffic. Without traffic isolation, we can only identify problems with the system when there isn't much traffic after 0 o'clock, which is very hard. Traffic isolation was launched in the second year, in order to ease the team's workload.
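The article does not describe how stress test traffic is told apart from normal traffic. One common approach, assumed here purely for illustration, is to mark each stress request with a flag and have the data layer route flagged writes to separate "shadow" tables. The header name and table prefix below are hypothetical.

```python
STRESS_HEADER = "x-stress-test"  # hypothetical marker carried by every stress request
SHADOW_PREFIX = "shadow_"        # hypothetical prefix for tables that hold test data

def is_stress_request(headers: dict) -> bool:
    """Return True if the request carries the stress test marker."""
    return headers.get(STRESS_HEADER) == "1"

def table_for(base_table: str, headers: dict) -> str:
    """Pick the table a request may write to, so that stress test data
    never mixes with real orders."""
    if is_stress_request(headers):
        return SHADOW_PREFIX + base_table
    return base_table

print(table_for("orders", {STRESS_HEADER: "1"}))
print(table_for("orders", {}))
```

For this to work, the marker has to be passed along on every downstream call, which is much easier when all systems share the same middleware.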

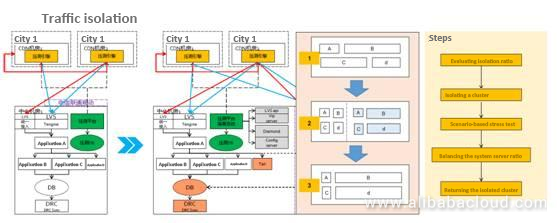

Traffic isolation means isolating a cluster from the original online cluster through the Server Load Balancer. The isolated cluster can be very large, accounting for 10% to 90% of the original cluster. For example, if there were originally 100,000 servers, 90,000 servers can be isolated. When we are preparing for a promotion such as Double 11, where the traffic is more than 20 times normal, the stress test traffic can be isolated so that it does not affect the existing traffic.
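The split itself can be pictured with a small sketch. It assumes the cluster is just a list of host names and that isolation means moving a fraction of them into a separate pool; the function names and host names are made up for the example.

```python
def isolate(servers: list, fraction: float) -> tuple:
    """Split servers into (isolated, online) pools.

    fraction is the share of servers moved into the isolated pool and
    must lie between 0.1 and 0.9, matching the range quoted above.
    """
    if not 0.1 <= fraction <= 0.9:
        raise ValueError("fraction must be between 0.1 and 0.9")
    cut = round(len(servers) * fraction)
    return servers[:cut], servers[cut:]

def restore(isolated: list, online: list) -> list:
    """Return the isolated servers to the online cluster after the test."""
    return isolated + online

servers = [f"host-{i}" for i in range(1_000)]
isolated, online = isolate(servers, 0.9)
print(len(isolated), len(online))
```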

What is the entire process? Taking the figure as an example, there are four scenarios: A, B, C, and D. Originally, scenario C requires more servers, but after the stress test, it is determined that B and D require more servers. The whole process is automated. If C does not need so many servers, its servers will be removed and added to B and D. Since it is automated, it is very efficient and does not need to run in the wee hours. Finally, the isolated cluster needs to be returned to the original online cluster to restore original server proportions, then it can resume the challenge on the following day.
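The rebalancing step can be sketched as follows, assuming each scenario has a measured peak demand and a measured per-server capacity from the stress test. The scenario names follow the A to D example above; the numbers are hypothetical.

```python
import math

def rebalance(current: dict, measured_peak_qps: dict, per_server_qps: dict) -> tuple:
    """Return (target, delta): the server count each scenario needs and
    the change relative to its current allocation.

    Raises ValueError if the existing pool cannot cover the targets.
    """
    target = {
        name: math.ceil(measured_peak_qps[name] / per_server_qps[name])
        for name in current
    }
    if sum(target.values()) > sum(current.values()):
        raise ValueError("not enough servers in the pool")
    delta = {name: target[name] - current[name] for name in current}
    return target, delta

current = {"A": 100, "B": 100, "C": 300, "D": 100}
measured = {"A": 10_000, "B": 18_000, "C": 15_000, "D": 16_000}
capacity = {"A": 100, "B": 100, "C": 100, "D": 100}
target, delta = rebalance(current, measured, capacity)
print(target)
print(delta)
```

A negative delta means servers are taken from that scenario and a positive delta means servers are added to it, which mirrors moving machines from C to B and D.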

During the entire capacity assessment, from data construction to traffic, we have an objective. For example, we want to hold an event, which has about 50,000 transactions per second. After inputting the value 50,000, the whole system starts the stress test, flexible scheduling, and isolation. This is the automated process. Capacity can be predicted, but cannot be planned for, and we can only limit it. We can accurately predict the amount of traffic, the number of users, and the peak value for Double 11.

However, there is not much value in this prediction. What we can do is limit the number of transactions. For example, in 2016, we achieved 175,000 transactions per second, and the limit was set to 172,000 for all systems. This is all we can do. Since the real amount of traffic is much larger than this, the cost of supporting real traffic will be very high.
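Limiting transactions per second can be illustrated with a very small fixed-window counter. This is a simplified, single-process sketch and not the limiter used in production; the class name and the injectable clock are assumptions made so the example can be run deterministically.

```python
import time

class TpsLimiter:
    """Allow at most `limit_per_second` transactions in each one-second window."""

    def __init__(self, limit_per_second: int, clock=time.monotonic):
        self.limit = limit_per_second
        self.clock = clock      # injectable so the limiter can be tested without sleeping
        self.window = None      # index of the current one-second window
        self.count = 0          # transactions admitted in the current window

    def allow(self) -> bool:
        current_window = int(self.clock())
        if current_window != self.window:
            self.window = current_window
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False            # over the limit: reject or queue the request

fake_now = [0.0]
limiter = TpsLimiter(3, clock=lambda: fake_now[0])
print([limiter.allow() for _ in range(5)])
```

Requests beyond the limit are rejected quickly instead of being allowed to overload the trading systems, which is what makes the planned capacity sufficient regardless of how much real demand arrives.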

The number of daily-used servers is small. During Double 11, we basically use Alibaba Cloud's servers, which significantly reduces the cost. So, if capacity planning sets a limit, for example 170,000 transactions per second, it may be 200,000 or 250,000 next year. Based on this value, through the scenario-based end-to-end stress test tool, the capacity of the entire system is computed, and the server resource occupation is balanced. The benefit of this is to hold a successful event using the fewest resources.

Since 2013, we have discovered many problems through using this technology, and these problems cannot be found through daily tests, functional tests, or some tool tests. Problems related to hardware, network, and operating system that never occurred were all exposed. Under heavy load, even some very weird problems occurred.

If any of these problems had come up during Double 11, it could have been catastrophic. After what we learned in 2013, the problems in 2011 and 2012 looked understandable. If an event's peak traffic is several times larger than the usual peak value, there will definitely be problems, because many problems won't be discovered through logic or reasoning alone, and must be discovered in a real environment that simulates traffic for all scenarios.

Capacity planning is a long-term process. At the beginning, commercial software was used for performance stress tests, and it worked well, and could also support calculating the entire capacity. Even today many companies are still using similar software for performance assessment, and it is continuously evolving. Later, a huge gap between the real capacity and the stress test assessment result was discovered, so we introduced the online stress test, traffic splitting, traffic replication, and log playback in order to evaluate the traffic through various nodes.

We thought that the system was amazing. At that time, the system won the innovation award for the entire technical department. Then we thought that with this system, we wouldn't have to worry about Double 11 or any other events in the future. In actuality, the system still needed development. Then the end-to-end stress test was implemented to test the entire cluster based on scenario simulation.

Looking back, the lesson is that even when you are satisfied with the current situation, you can still keep moving forward. For example, CSP, our "daily stress test platform", fully met our needs at the time and was ahead of many products in China, yet there was still room to improve.

We just did capacity planning for Double 11. Alibaba's entire technical architecture is unified. After the end-to-end stress test was introduced, many business units, such as Alipay, could use the same method, which significantly reduces costs in R&D, learning, O&M processes, and the O&M product line. As a result, we have quite a small team, with only 4 or 5 people working on the end-to-end stress test. We still manage to serve more than 100 teams group-wide, which is also a result of the uniformity of the architecture.

After this year's Double 11, our CTO also presented us with a new challenge: lower costs for the following Double 11. The end-to-end stress test should verify the everyday systems rather than be used to hunt for problems, and we hope that the system can be made more automated and intelligent. We are considering how best to achieve this.

Jiang Jiangwei, also known as Xiaoxie, is a researcher at Alibaba. He joined Taobao in 2008 and participated in business systems R&D. Since 2012, he has been in charge of Alibaba's middleware technology products and high availability architecture. The middleware products are the basic technical components of the distributed architecture of Alibaba's e-commerce and other business, enabling various business systems to quickly build a high availability distributed architecture cluster.
