By Chao Shi

Master-worker architectures are a well-established pattern for designing distributed systems, offering benefits such as centralized management, high resource utilization, and fault tolerance. In our data centers, almost all distributed systems adopt this architecture.

We once experienced a cascading failure that led to a cluster-wide service interruption. This incident prompted us to reflect on the challenges of effective canary releases in master-worker architectures. In this article, we will analyze the reasons behind this issue and propose several solutions.

Master-worker architectures are also known as master-server or master-slave architectures.

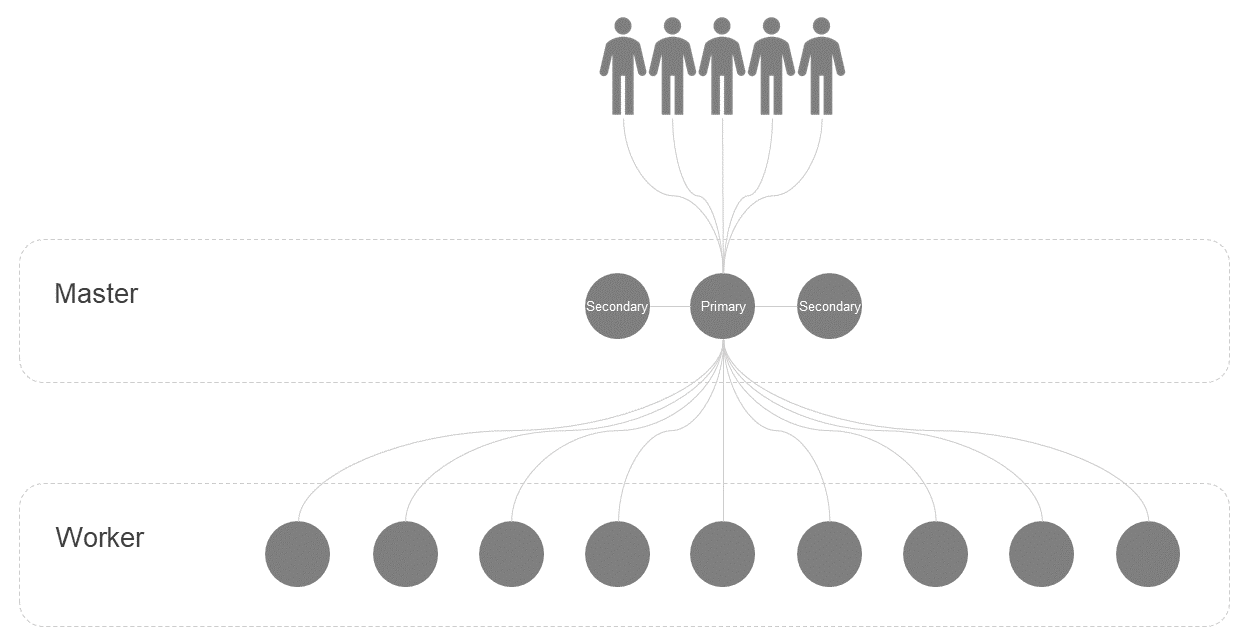

In practice, to avoid becoming a single point of failure, a master server typically consists of multiple Raft-based nodes, with one as the primary node and others as secondary nodes. Sometimes, the terms master and slave are used to refer to primary and secondary nodes, respectively, within a Raft service. To avoid confusion, in this article, we refer to the primary and secondary nodes of a master server as master nodes.

Depending on the size of the system, a master node can manage several to tens of thousands of worker nodes.

Here, we introduce several common types of master-worker architectures. The first type is the classic architecture, and the other two are common variants. These architectures vary in form but are essentially the same.

In some systems, users must connect to the master server to get a service. If the master server encounters a failure, the service provided by the entire cluster is interrupted. The failure mentioned here is not necessarily a program crash. It can also be other functional failures such as performance degradation or deadlock. The high availability mechanism of Raft can handle problems that have obvious causes, such as breakdowns and program crashes, but it cannot handle problems whose causes are difficult to locate. An example is a situation we have encountered where some threads failed but the heartbeat thread was still alive. As Murphy's law warns, anything that can go wrong will go wrong. In our years of experience in deploying large-scale cloud data centers, we have encountered many problems whose causes are hard to locate.

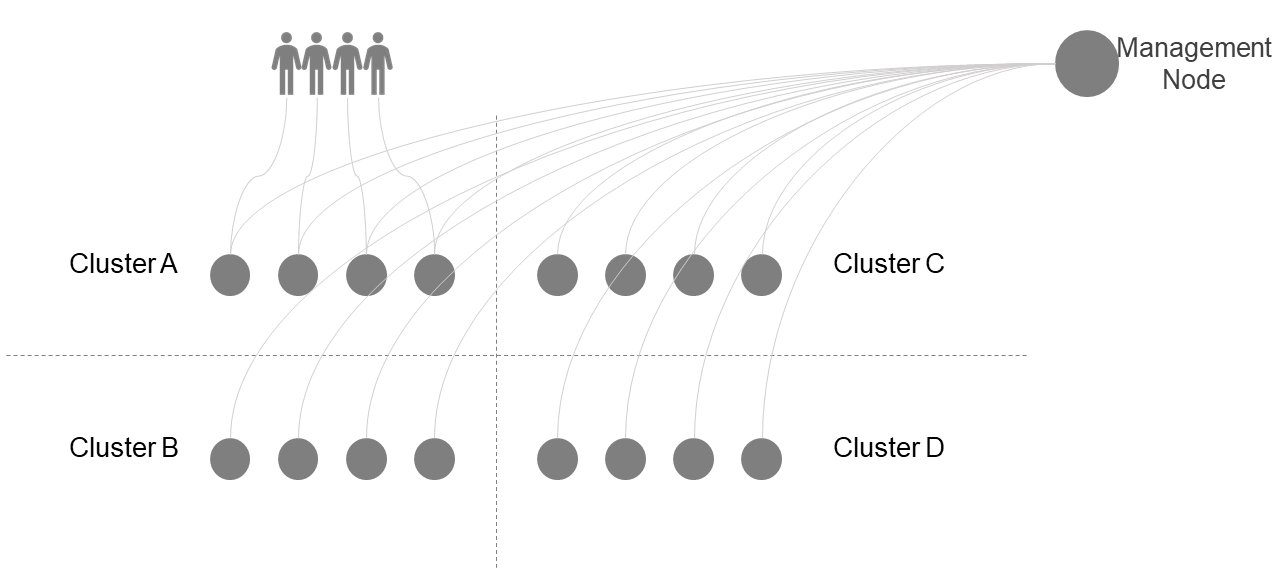

In an architecture where the data plane and control plane are isolated, users interact directly with the nodes on the data plane. A management node is deployed in the central region to perform tasks such as load balancing, breakdown recovery, and throttling. User requests are not transmitted to the management node in the central region. The management node and the nodes on the data plane constitute a master-worker architecture. Although the data plane is divided into multiple clusters, the management node is connected to all the clusters simultaneously and is still vulnerable to cascading failures. For example, after an upgrade of the management node in the central region, a request in a new format is sent to a data node, but the data node is unable to properly handle the request in the new format, and therefore, core dumps occur.

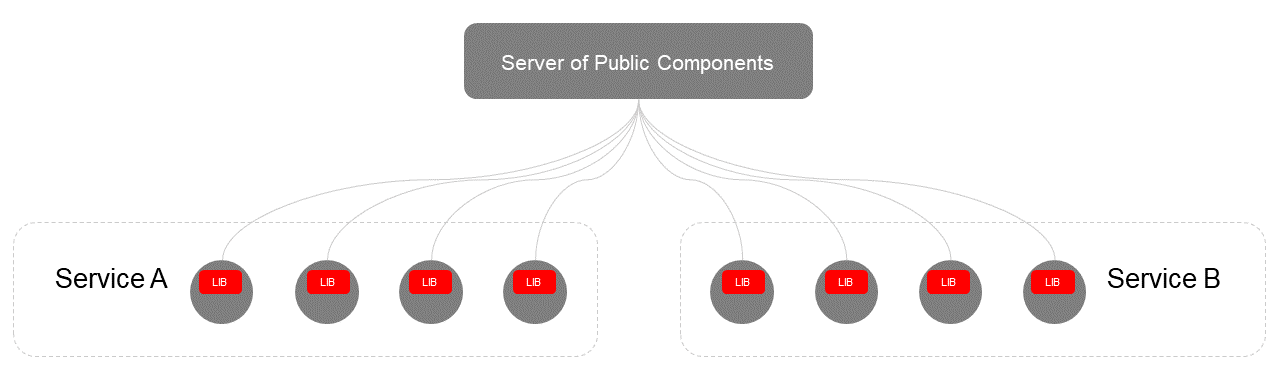

Many common basic components that programs rely on, such as security, O&M, and monitoring components, are embedded in service processes in the form of SDK libraries. The SDKs serve as clients that communicate with the server of the common component service, either reporting information or pulling configurations. This way, the SDKs and the server constitute the classic master-worker architecture. These SDKs run independently of the service's main logic, but they can also malfunction and cause cascading failures like those on data nodes described in the previous architecture. Public services are usually used by many other services, so if such problems occur, the scope of impact is large.

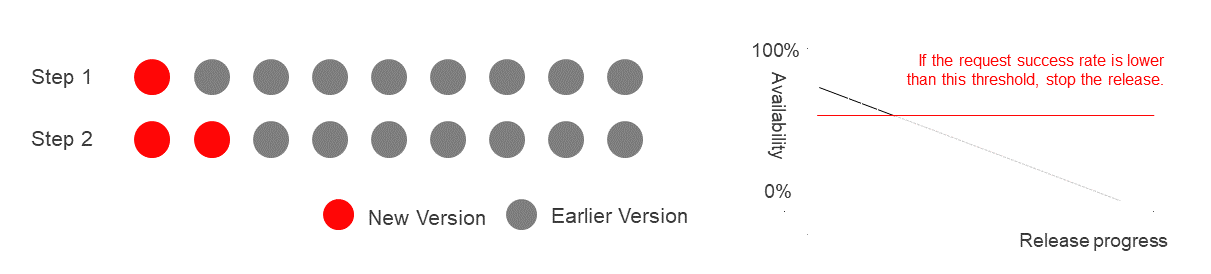

Canary release is the most important means to prevent faults. The typical practice for performing a canary release is to upgrade the nodes in a cluster one at a time while monitoring the entire service for either single-node exceptions or service downtime. If service downtime is observed, the canary release is stopped immediately. Because distributed systems have failover capabilities, monitoring the entire service may not reveal a problem immediately after it occurs, unless it is an obvious problem such as a core dump.

This canary release mechanism works well for regular services. For example, to perform a canary release of a service that consists of multiple nodes, release one node at a time. Monitor the request success rate after each node is released. If the request success rate drops below the threshold, stop the canary release. Now we release a node with a bug in it. After the faulty node is released, we can see that the request success rate in the system drops below the threshold. In this case, the canary release is stopped immediately.
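The node-by-node procedure above can be sketched as a small loop. This is a minimal illustration, not the author's tooling: the function name `canary_rollout`, the callbacks `upgrade` and `success_rate`, and the toy cluster are all assumptions made for the example.

```python
from typing import Callable, List


def canary_rollout(
    nodes: List[str],
    upgrade: Callable[[str], None],
    success_rate: Callable[[], float],
    threshold: float = 0.99,
) -> List[str]:
    """Upgrade nodes one at a time; stop as soon as the success rate drops.

    Returns the nodes that were upgraded before the rollout stopped.
    """
    upgraded = []
    for node in nodes:
        upgrade(node)
        upgraded.append(node)
        if success_rate() < threshold:
            # Stop immediately; the remaining nodes keep the old version.
            break
    return upgraded


# Toy cluster (hypothetical): the new version fails every request it serves.
versions = {"n1": "old", "n2": "old", "n3": "old", "n4": "old"}


def upgrade(node: str) -> None:
    versions[node] = "new"


def success_rate() -> float:
    # Requests are spread evenly, so each buggy node fails an equal share.
    healthy = sum(1 for v in versions.values() if v == "old")
    return healthy / len(versions)


stopped_after = canary_rollout(list(versions), upgrade, success_rate, threshold=0.9)
print(stopped_after)  # ['n1']
```

In this toy run, the success rate falls to 75% after the first node is upgraded, so the rollout stops and the other three nodes keep the old version.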

This example is simple, but illustrative enough. In practice, failover mechanisms can mask faults. For example, in a gateway service, a failed request will be sent to other nodes for retries. However, we can still manage to detect faults at the earliest opportunity. In this example, we can identify the problem by monitoring the retry rate of the gateway.
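To make the gateway case concrete, here is a small sketch in which retries keep the success rate at 100% while the retry count exposes the faulty node. The round-robin rotation, the backend functions, and the name `send_with_retry` are assumptions for illustration only.

```python
from typing import Callable, List, Tuple

Backend = Callable[[str], str]


def send_with_retry(backends: List[Backend], request: str) -> Tuple[str, int]:
    """Try each backend in order; return (response, number_of_retries)."""
    last_error = None
    for attempt, backend in enumerate(backends):
        try:
            return backend(request), attempt
        except RuntimeError as err:
            last_error = err  # fail over to the next backend
    raise RuntimeError("all backends failed") from last_error


def healthy(request: str) -> str:
    return "ok:" + request


def faulty(request: str) -> str:
    # Stand-in for a freshly released node with a bug.
    raise RuntimeError("bug in new version")


backends = [faulty, healthy, healthy]
successes = 0
retried = 0
for i in range(9):
    # Round-robin: rotate which backend is tried first.
    start = i % len(backends)
    order = backends[start:] + backends[:start]
    response, retries = send_with_retry(order, f"req{i}")
    successes += response.startswith("ok")
    retried += retries > 0

print(successes, retried)  # 9 3
```

All nine requests succeed, so a success-rate monitor alone sees nothing wrong, but a third of them needed a retry, which is the signal the canary release should watch.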

In master-worker architectures, the canary release mechanism is not always effective. Let's analyze why by looking at how to perform a canary release in a system with a master-worker architecture.

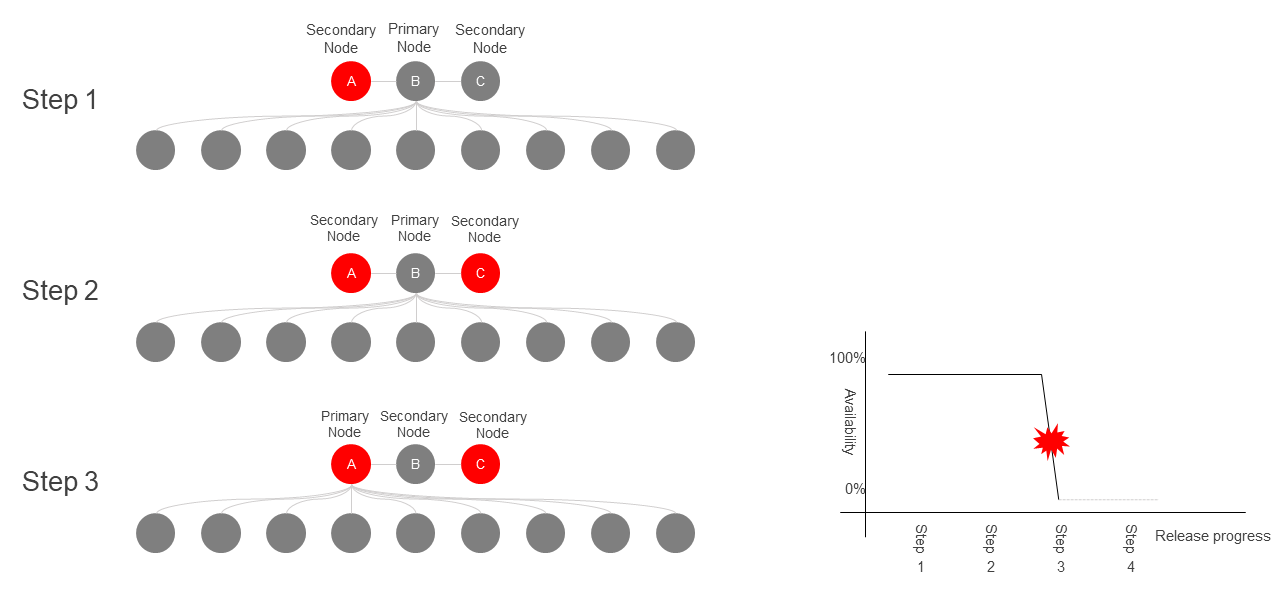

Assume that three master nodes A, B, and C are available, and B is the primary node. To perform a canary release, take the following steps:

As we perform each step, we observe whether service downtime occurs. If service downtime is observed, we stop the canary release immediately. This way, we seem to have performed a canary release, but there is a huge risk with the primary/secondary switch in the third step. Consider this extreme case. During the upgrade of node A, a new feature is added to the node. Specifically, a new request field is added to broadcast messages that node A sends to the worker nodes, such as heartbeat messages. However, the worker nodes cannot process this new field, resulting in a deadlock or program crash. This causes a large-scale service interruption within the cluster.
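The extreme case above can be simulated in a few lines. This is only a toy model under stated assumptions: the field names (`term`, `primary`, `lease_ms`), the `Worker` class, and the worker count are hypothetical, and setting `alive = False` stands in for the real deadlock or crash.

```python
from typing import Dict, List

# Fields that the deployed worker version understands (hypothetical names).
KNOWN_FIELDS = {"term", "primary"}


class Worker:
    def __init__(self, name: str) -> None:
        self.name = name
        self.alive = True

    def on_heartbeat(self, msg: Dict[str, object]) -> None:
        if not self.alive:
            return
        if set(msg) - KNOWN_FIELDS:
            # Stand-in for the crash or deadlock triggered by an unknown field.
            self.alive = False


def broadcast(workers: List[Worker], msg: Dict[str, object]) -> int:
    """Deliver msg to every worker and return how many are still alive."""
    for worker in workers:
        worker.on_heartbeat(msg)
    return sum(1 for worker in workers if worker.alive)


workers = [Worker(f"w{i}") for i in range(1000)]
old_heartbeat = {"term": 7, "primary": "B"}
new_heartbeat = {"term": 8, "primary": "A", "lease_ms": 500}  # new field

alive_before = broadcast(workers, old_heartbeat)  # B (old code) is still primary
alive_after = broadcast(workers, new_heartbeat)   # A (new code) becomes primary
print(alive_before, alive_after)  # 1000 0
```

Nothing goes wrong while the old primary is in charge, and then a single broadcast after the switch takes down every worker at once, with no intermediate state for monitoring to catch.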

The canary release mechanism is powerless to prevent such problems. The new code on the primary node of master nodes can run only after the primary/secondary switch in the third step is complete. Once the new code is running, especially on such critical nodes as master nodes, it can have a severe impact on the entire cluster. This impact is either zero or devastating, leaving little room for maneuver. Therefore, canary releases cannot be effectively implemented in master-worker architectures.

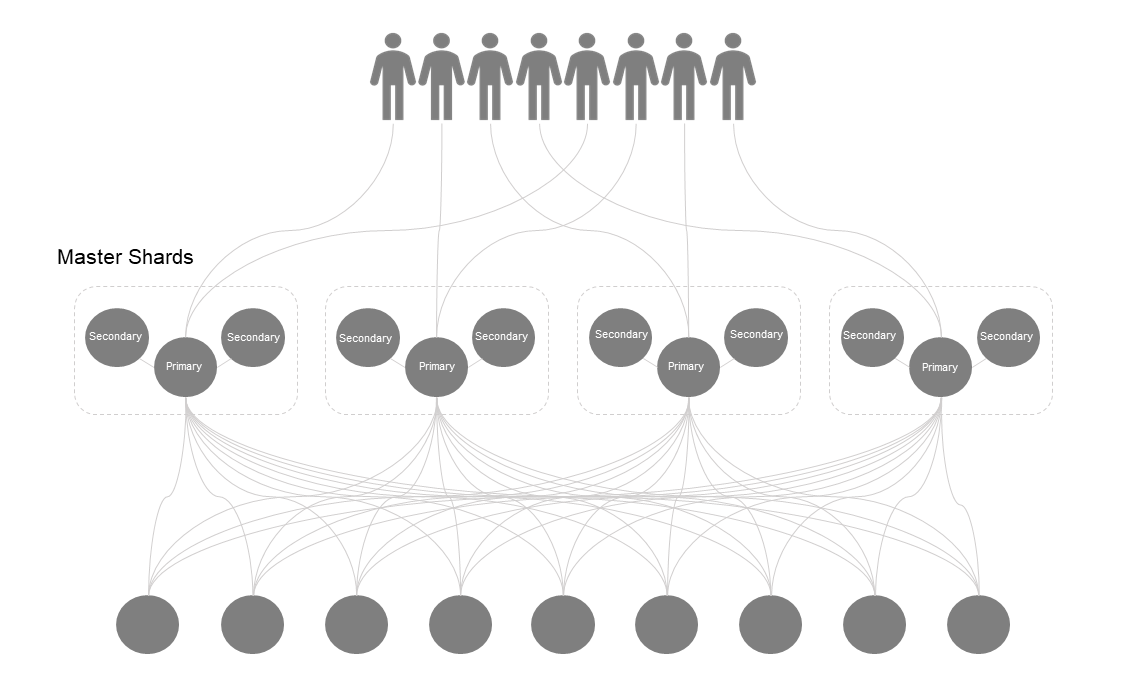

In the classic master-worker architecture, the primary master node is monolithic. We can split its features into multiple shards so that each shard can be upgraded separately. This way, the release of the entire system becomes gradual, giving us an observation window.
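As a rough sketch of why sharding creates an observation window, assume (this is one possible reading, not stated in the article) that the master's responsibility is partitioned by worker ID, so each master shard talks only to its own workers. The shard count, `shard_of`, and the health check are hypothetical.

```python
from typing import Callable, Dict, List

NUM_SHARDS = 4


def shard_of(worker_id: int) -> int:
    """Assumed partitioning rule: each worker belongs to exactly one shard."""
    return worker_id % NUM_SHARDS


def rollout_by_shard(
    shard_version: Dict[int, str], is_healthy: Callable[[], bool]
) -> List[int]:
    """Upgrade one master shard at a time; stop at the first unhealthy check."""
    upgraded = []
    for shard in sorted(shard_version):
        shard_version[shard] = "new"
        upgraded.append(shard)
        if not is_healthy():
            break
    return upgraded


workers = list(range(1000))
shard_version = {s: "old" for s in range(NUM_SHARDS)}


def exposed_workers() -> int:
    """Workers whose master shard already runs the new code."""
    return sum(1 for w in workers if shard_version[shard_of(w)] == "new")


# Toy health check: the new release is buggy, so any exposure is unhealthy.
upgraded = rollout_by_shard(shard_version, lambda: exposed_workers() == 0)
print(upgraded, exposed_workers())  # [0] 250
```

With four shards, a bad release reaches a quarter of the workers before the rollout stops, instead of all of them.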

This method can prevent major master server failures. However, if master and worker nodes are highly interconnected, failures at master nodes can still propagate through the network to all worker nodes, resulting in a service interruption. In addition, specific features that a primary master node provides are inherently centralized and cannot be split into shards as in this method.

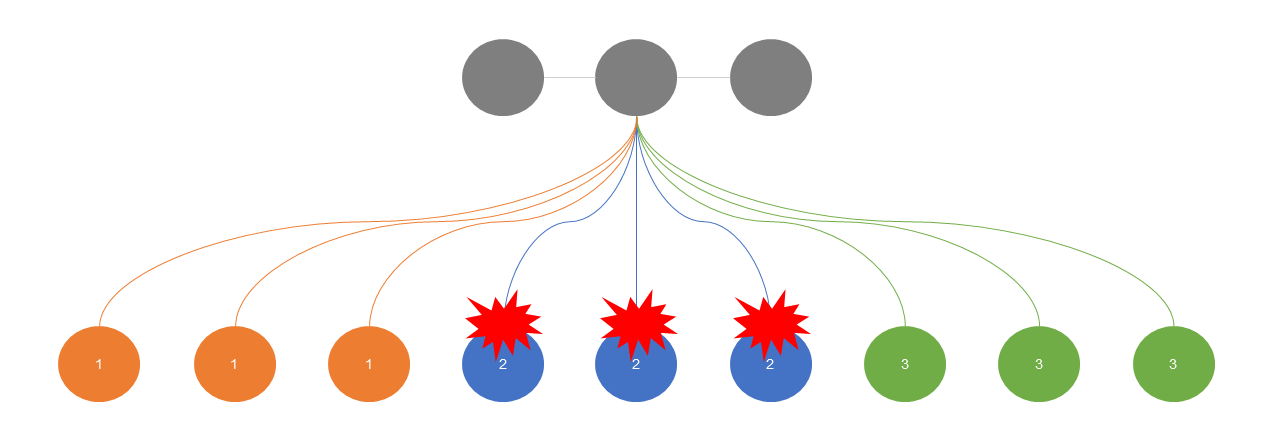

Another thought is to prevent the rapid propagation of failures. The cascading failure occurs because the master server runs code in the new code path immediately after the upgrade and sends a new remote procedure call (RPC) to the worker nodes, causing the worker nodes to run the untested code in the new code path. This process happens instantly, without a canary release. Therefore, we can introduce the canary release mechanism here.
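One way to picture a canary release for the new RPC itself is a percentage gate on the master: the upgraded code keeps sending the old message format to most workers and enables the new format only for a stable subset that grows over time. The sketch below assumes hypothetical names (`in_canary`, `build_heartbeat`, the `lease_ms` field); it is not taken from the author's system.

```python
import zlib
from typing import Dict


def in_canary(worker_id: str, percent: int) -> bool:
    """Stable bucketing: a worker stays in the canary set as percent grows."""
    return zlib.crc32(worker_id.encode()) % 100 < percent


def build_heartbeat(worker_id: str, percent: int) -> Dict[str, object]:
    """Build a heartbeat, using the new format only for canary workers."""
    msg: Dict[str, object] = {"term": 8, "primary": "A"}
    if in_canary(worker_id, percent):
        msg["lease_ms"] = 500  # hypothetical new field, gated by the canary
    return msg


workers = [f"worker-{i}" for i in range(1000)]


def canary_set(percent: int) -> set:
    return {w for w in workers if "lease_ms" in build_heartbeat(w, percent)}


# Ramp up gradually; an operator or monitor would check health between stages.
for percent in (0, 5, 20, 100):
    print(percent, len(canary_set(percent)))
```

Because the bucket is derived from a hash of the worker ID, the workers exposed at 5% remain exposed at 20%, so each stage only adds new workers to the untested code path and the rollout can be halted between stages.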

This method also has limits. In complex systems, master nodes and worker nodes interact in many ways, and it is difficult to implement the canary release mechanism for each interaction. In practice, the canary release mechanism is often implemented only for a few core features.

This solution is tailored to the SDK libraries of public services. The DNS SDK is the simplest SDK library with network features, and it rarely has issues because its features are simple enough. Therefore, we can simplify SDK libraries, keep their code under a thousand lines, and test them thoroughly to ensure they are nearly bug-free.

As far as I know, SQLite is the most complex library that meets this standard: it has about 150,000 lines of code yet an astonishing 100% branch coverage. Given our current state of development, a thousand lines of code is about as far as we can go. If an SDK has complex features that cannot be streamlined, some of its logic can be moved to locally deployed agents. Canary releases are always easier to perform for agents than for SDKs.

Preventing cluster-wide cascading failures is a challenge in distributed systems. This article proposes three methods, each with its own limitations. While writing this article, I discussed it with many colleagues, searched the internet, and even consulted ChatGPT, but could not find better methods. Readers who have better ideas are welcome to share them.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
