By Afzaal Ahmad Zeeshan.

In this article, I describe the difference between serverless clusters and serverless jobs that run on managed clusters, and which type of subscription is cost-effective for small and medium businesses and for enterprises.

Service orchestration is a primary focus of cloud-native solutions and of enterprises that deploy on private or public clouds. Alibaba Cloud offers several hosting options and supports many deployment models so that customers can fully utilize the cloud. In practice this does not always happen: many customers are unaware of the cloud's potential, and some do not use the right architectural design for their solutions. For example, many people use the lift-and-shift approach to migrate legacy solutions from a VM-based hosting platform to Alibaba Cloud or any other cloud.

The serverless application design approach enables organizations to build solutions that face unpredictable user traffic and peak-traffic scenarios. Deploying a legacy application on the cloud as it is would cost the enterprise money without yielding good results. A common practice is to split out the areas of the application that are under stress and load, which is the microservices approach. Even with this approach, your monthly bill for cloud services may not be justified. There are several reasons for this.

In these cases, even microservices sometimes fail to provide the best solution in a cloud-hosted environment. This article discusses the benefits of using Serverless Container Service for Kubernetes for serverless jobs.

What I have described so far are serverless jobs and tasks. You split your overall solution into multiple services, and then split those further into small functions and tasks that either run in response to an event or a trigger, or keep running, such as a handler for HTTP requests. Serverless functions help when you want an HTTP handler to be available but customers only send requests at specific times. A contact form is a good candidate. If you are a blog owner or a small website owner, it is not feasible to host a complete web application just to hold a database of orders or user queries. In this case, a simple function on Alibaba Cloud Function Compute would be more than enough. There are snippets that show how to develop a serverless function, including one with URL capture. With this setup, you are only charged when a user sends a request. Another major benefit of serverless jobs is that you pay for resources only while they are being used, and as soon as usage goes down, your jobs are scaled down to zero!
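To make the contact-form idea concrete, here is a minimal sketch of such a function in Python. It assumes a WSGI-style HTTP handler signature, which Function Compute's Python runtime has supported for HTTP-triggered functions; check the current Function Compute documentation for the signature your runtime version expects. The `SUBMISSIONS` list and the `email` and `message` field names are hypothetical placeholders, not part of any Alibaba Cloud API.

```python
import json
from urllib.parse import parse_qs

# In-memory stand-in for a real datastore. A deployed function would write
# to a database or a queue instead (assumption, for illustration only).
SUBMISSIONS = []


def _json_response(start_response, status, payload):
    """Send a JSON body with the given HTTP status line."""
    start_response(status, [("Content-Type", "application/json")])
    return [json.dumps(payload).encode("utf-8")]


def handler(environ, start_response):
    """WSGI-style HTTP handler that accepts a contact-form POST."""
    if environ.get("REQUEST_METHOD") != "POST":
        return _json_response(start_response, "405 Method Not Allowed",
                              {"error": "POST only"})

    # Read the URL-encoded form body sent by the browser.
    length = int(environ.get("CONTENT_LENGTH") or 0)
    body = environ["wsgi.input"].read(length).decode("utf-8")
    form = {key: values[0] for key, values in parse_qs(body).items()}

    # Hypothetical required fields for the contact form.
    if not form.get("email") or not form.get("message"):
        return _json_response(start_response, "400 Bad Request",
                              {"error": "email and message are required"})

    SUBMISSIONS.append(form)
    return _json_response(start_response, "200 OK", {"status": "received"})
```

Because the function holds no server of its own, nothing runs (and nothing is billed) between form submissions.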

A typical web application contains web route handlers, views, models, and a database manager. Apart from these, components for logging, backups, and availability also make up a minor part of the application. In a microservices environment, each of these components is broken out and deployed separately. This helps achieve high availability and a better developer experience, because your teams can work on the projects separately. You should check this blog out to learn more about the differences between monolith, microservices, and serverless application development architectures.

Alibaba Cloud offers several platforms and hosting solutions for serverless jobs, most notably Alibaba Cloud Function Compute, which offers Node.js, Python, and other SDKs. In this article, however, we focus on container-based jobs and on the serverless approaches taken by the infrastructure itself.

First things first: there is no such thing as serverless infrastructure, only orchestrated infrastructure. Even if you design your solutions to be scalable, problems show up when the infrastructure (or its resources) hits its limit. The classical approach to creating resources for Kubernetes requires manually creating the VMs and other resources and attaching them to the cluster. Our cloud environments should not have these limitations, as they defeat the overall purpose of a cloud.

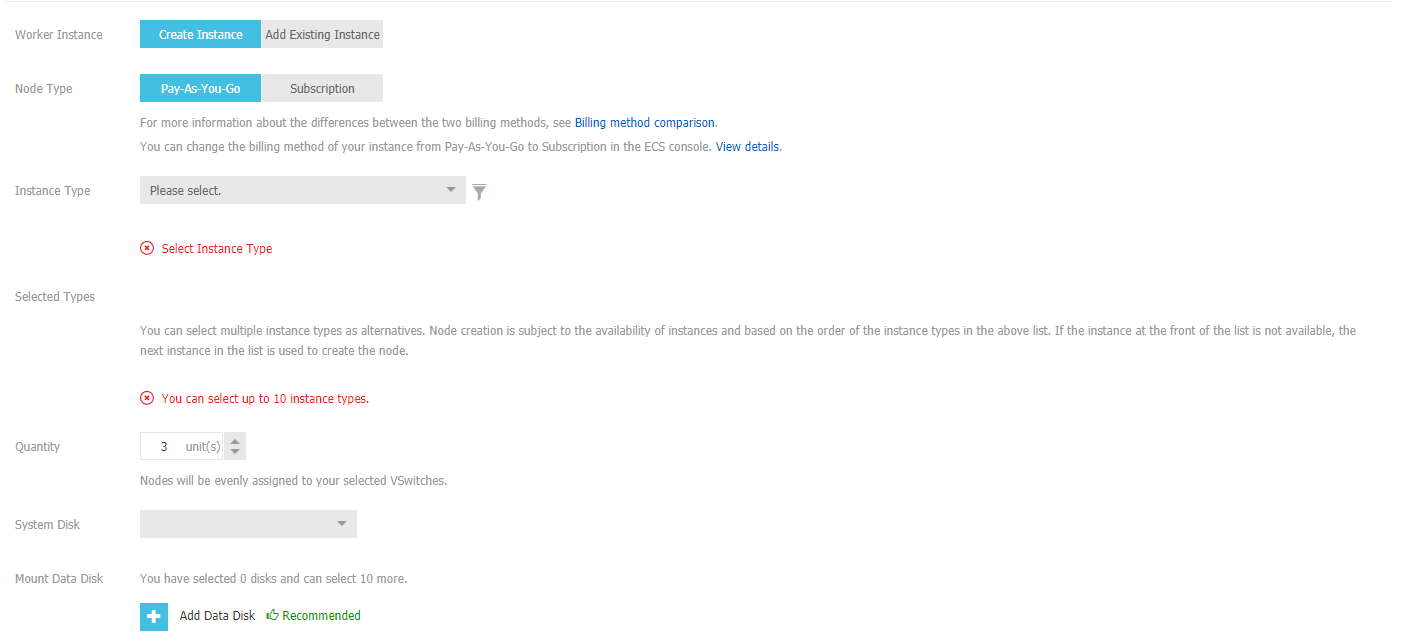

This is what you get when you are creating a managed Kubernetes cluster on Alibaba Cloud:

As you can see, you specify the number of nodes in the cluster. Note that in a pay-as-you-go model you can change the number of nodes, but this requires a manual configuration change, or you need to use the Alibaba Cloud SDK to manage the node count yourself.

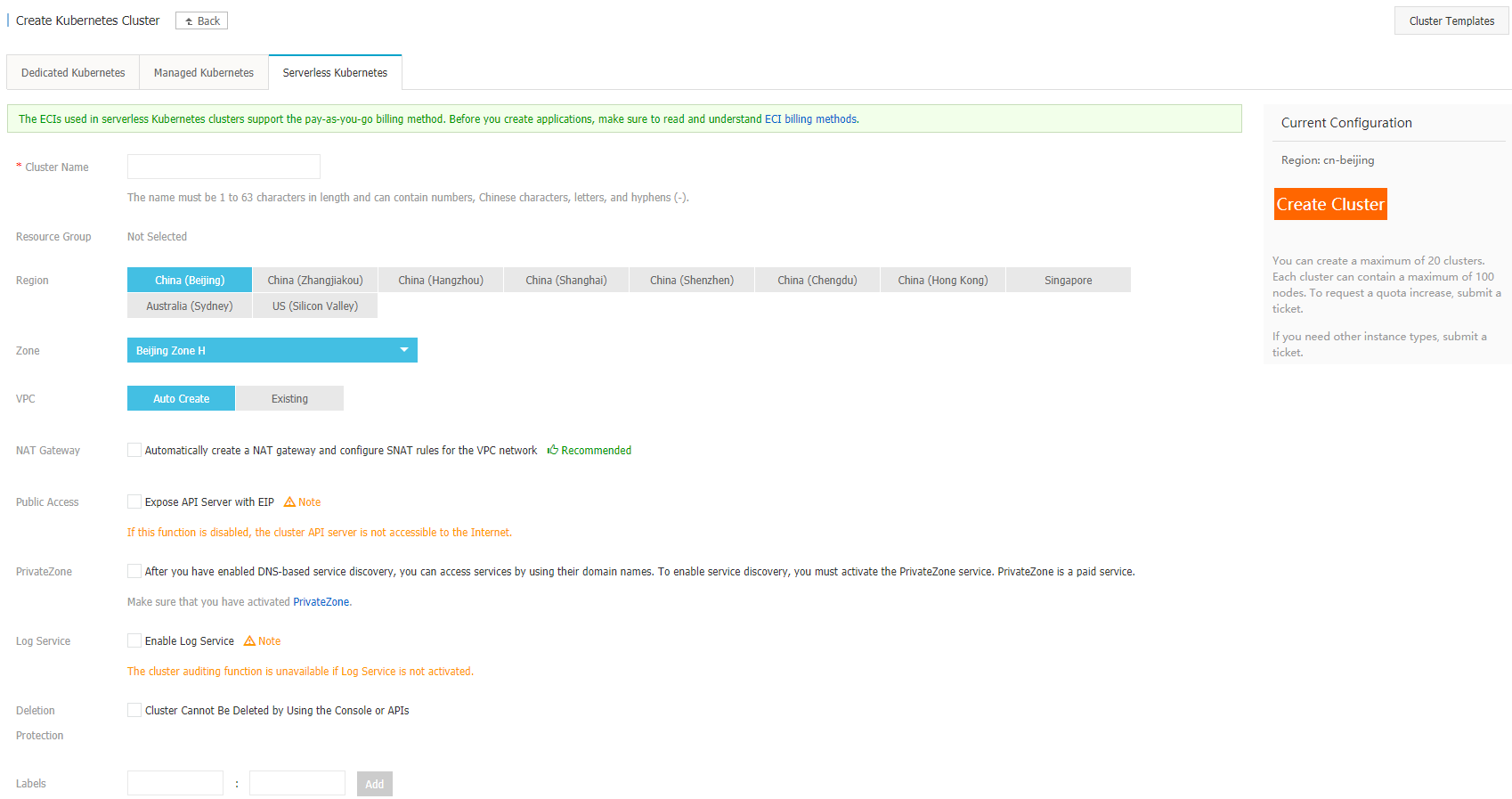

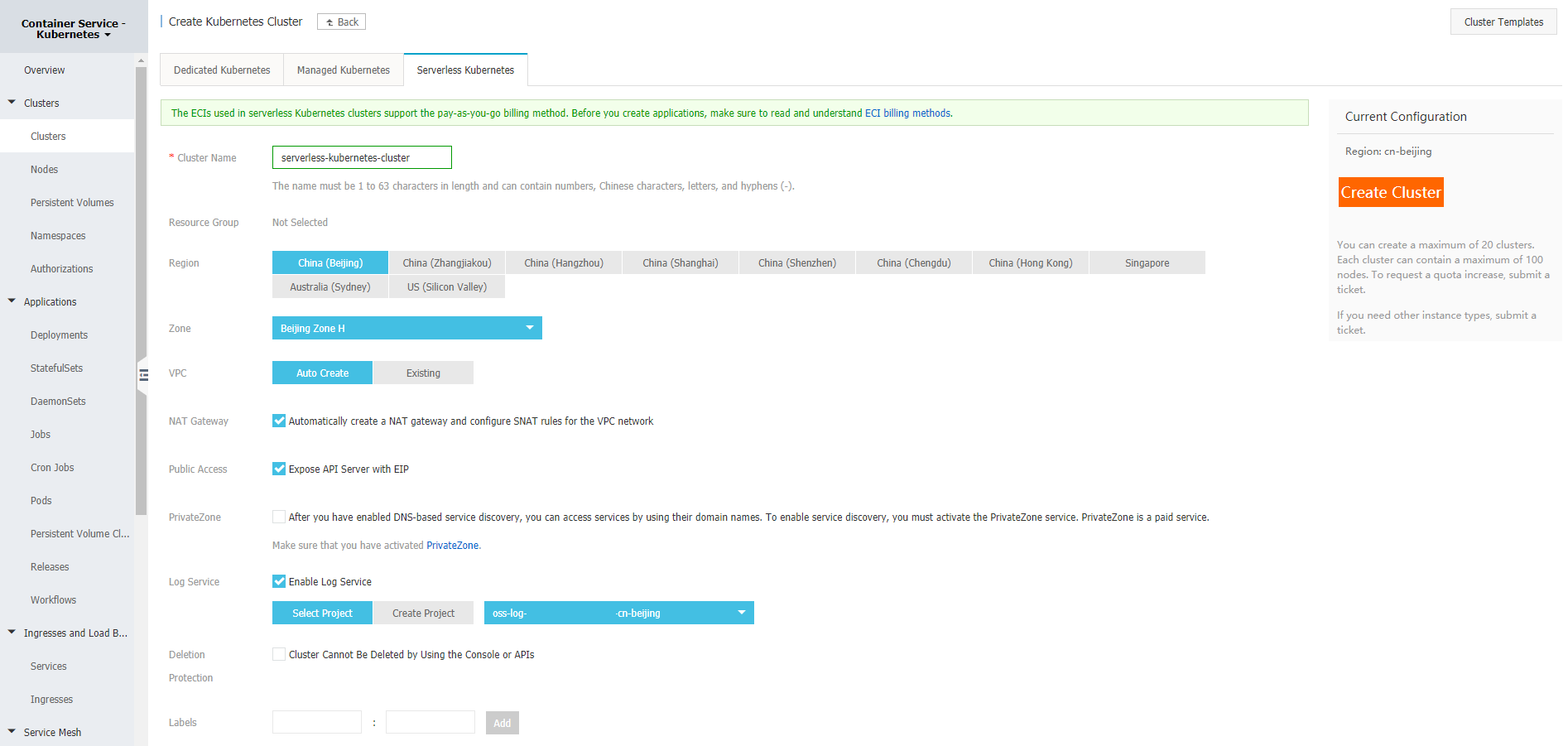

On the other hand, if you choose to create a serverless Kubernetes cluster, it supports your workloads in a serverless fashion. Note that you are not asked for a node count in this case.

The maximum count for this cluster would be 100. The advantage of this approach is that the cluster can support high-demand workloads and then shrink down to zero to prevent any charges.

You can create the Kubernetes cluster for serverless jobs in a serverless fashion, and only pay for the resources that you consume.
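The difference between paying for provisioned nodes and paying for consumed resources can be sketched with simple arithmetic. Every number below (hourly prices, node and pod counts, busy hours) is a made-up assumption for illustration and not an Alibaba Cloud price. Amounts are kept in integer cents to avoid floating-point rounding.

```python
HOURS_PER_MONTH = 720  # 30 days, used for a rough monthly estimate


def fixed_cluster_cost_cents(nodes, node_cents_per_hour, hours=HOURS_PER_MONTH):
    """Cost of nodes that stay provisioned whether or not they are busy."""
    return nodes * node_cents_per_hour * hours


def pay_per_use_cost_cents(pods, busy_hours_per_day, days, pod_cents_per_hour):
    """Cost when pods are billed only for the hours they actually run."""
    return pods * busy_hours_per_day * days * pod_cents_per_hour


# Hypothetical workload: 3 nodes kept running all month, versus 3 pods
# that are only needed 8 hours a day on 22 working days.
fixed = fixed_cluster_cost_cents(nodes=3, node_cents_per_hour=10)
on_demand = pay_per_use_cost_cents(pods=3, busy_hours_per_day=8,
                                   days=22, pod_cents_per_hour=4)

print(f"fixed cluster: {fixed / 100:.2f}")
print(f"pay per use:   {on_demand / 100:.2f}")
```

The gap narrows as the workload gets busier; for a service that is loaded around the clock, a managed cluster with a fixed node count may well be the cheaper option.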

I recommend that you select the recommended configurations and enable logging, backups, and internet access for the API.

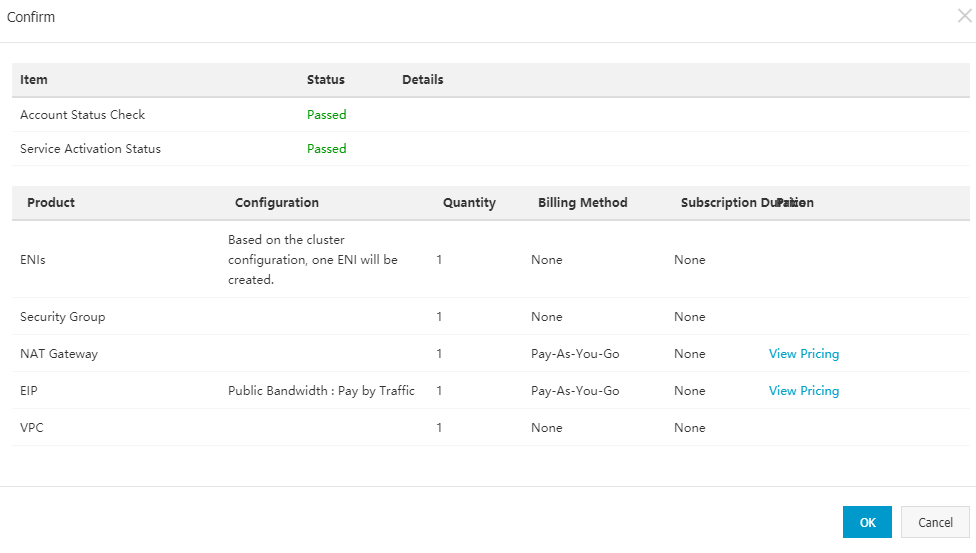

Create the resource. You will be charged for any resources that are created as part of this cluster. For example, the Private Zone service on Alibaba Cloud is a paid resource.

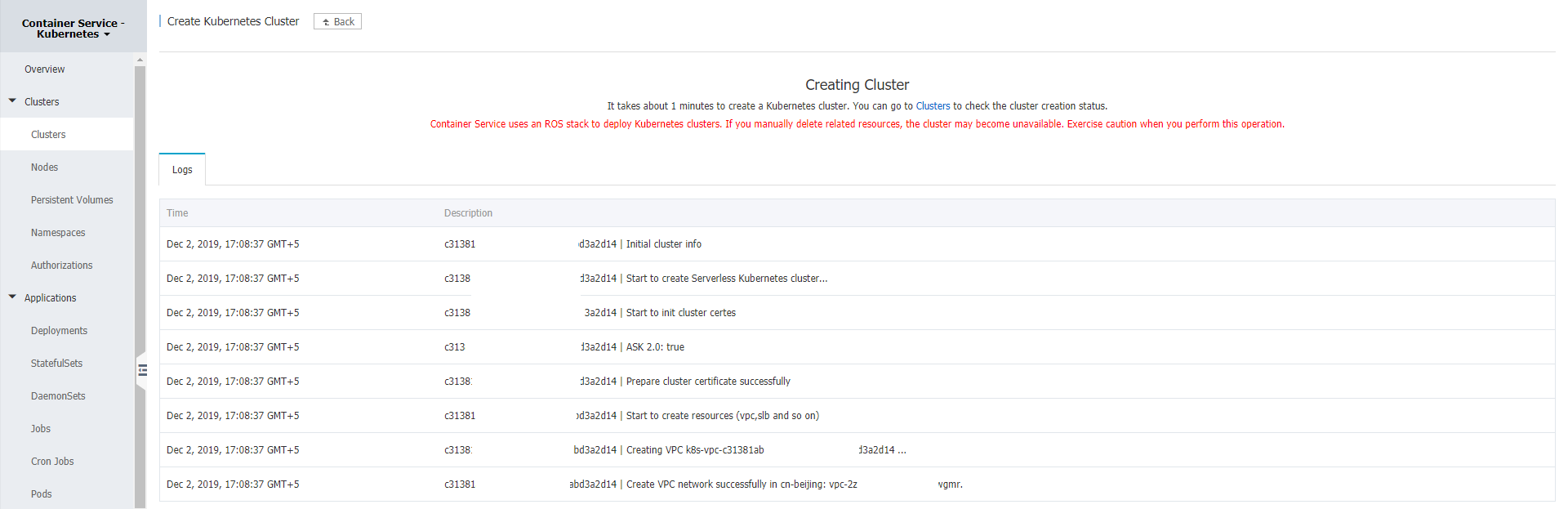

You are shown this dialog before you create the resources. Check the pricing information to make sure that you have enough credits to try the services out, or that you are prepared to be charged through your payment method. After you purchase the service, you are shown the status of the resources being deployed.

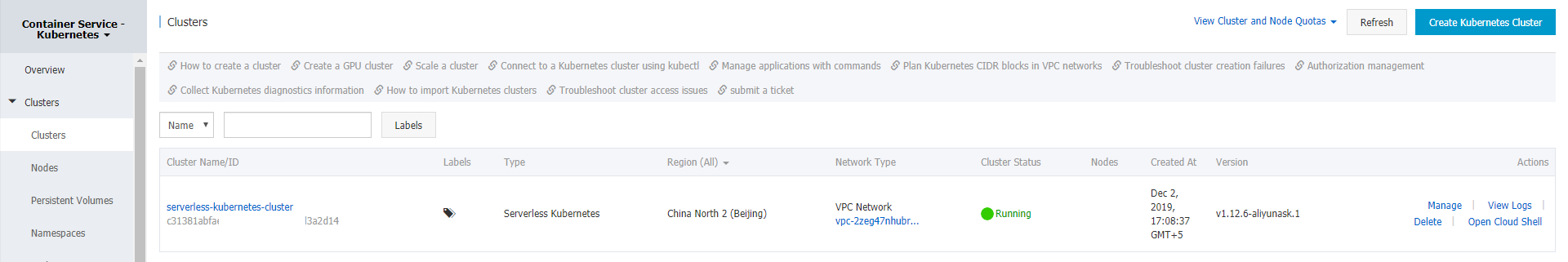

Alibaba Cloud uses Resource Orchestration Service (ROS) to orchestrate the services here, and as the warning message says, you should not delete the resources (compute, storage, network, and so on) manually. The service deletes them for you when you delete the Kubernetes cluster. After a few minutes, your cluster will be available on the portal and you can access it to start deployments.

This Kubernetes cluster contains only the bare Kubernetes services, with no containers, deployments, or services of your own. It is your responsibility to create them. Alibaba Cloud then orchestrates them and automatically scales the resources to meet demand.

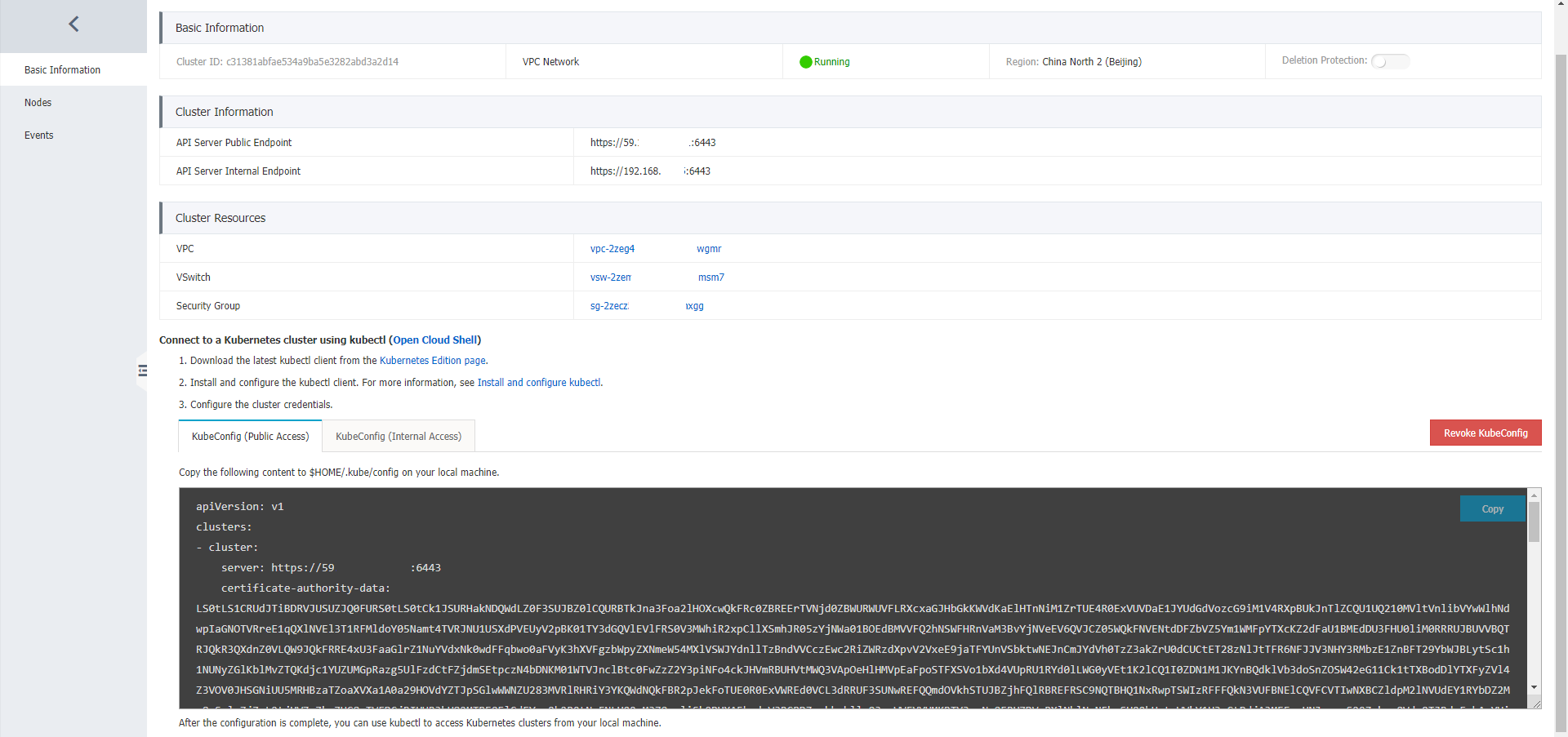

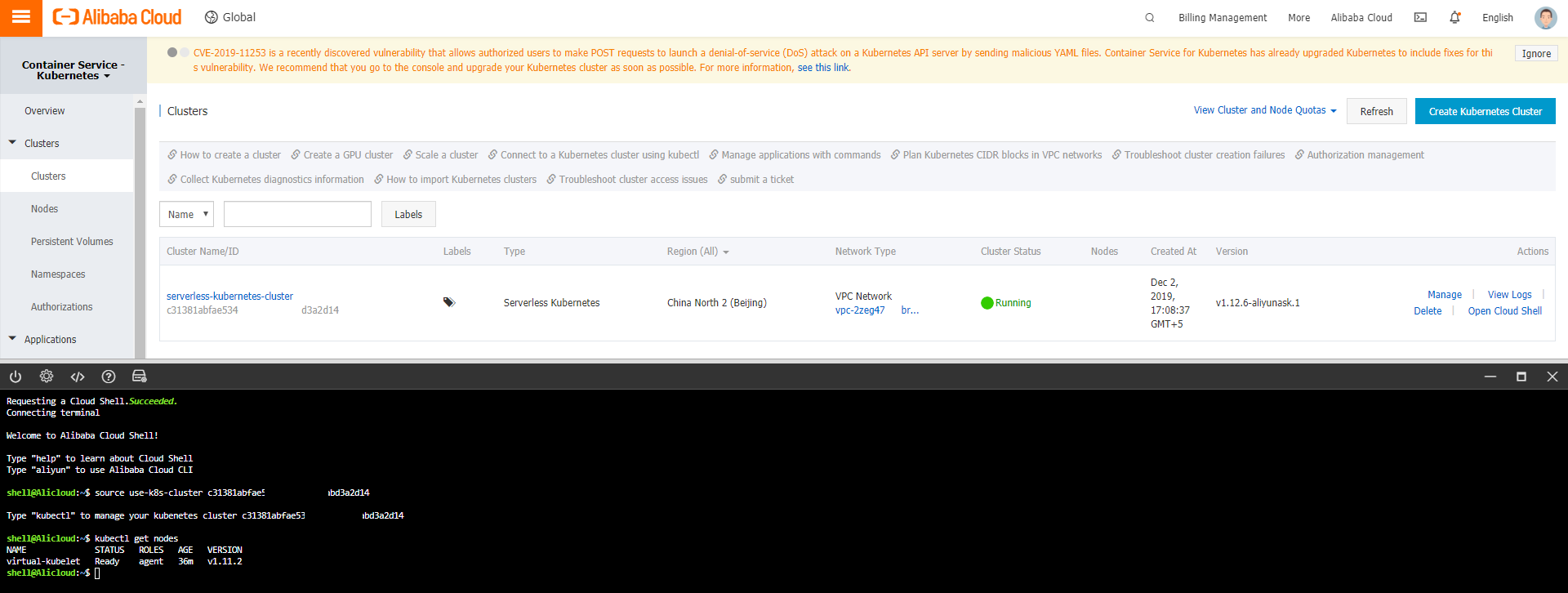

You can connect to the cluster by going to the manage tab and copying the Kubernetes cluster configuration information from the portal.

You should save it in a file named "config" under a ".kube" directory in your user account directory. For example, on Linux it has to be at "~/.kube/config". On Windows, keep it under your user profile directory, following the same directory path.

Note that the API is safeguarded by a certificate authority, so anyone with access to the Kubernetes configuration is able to control your cluster. Disable internet access for the API if you do not wish to allow public access to these resources. You can always control the services from within the portal.
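As a small sketch of where kubectl looks for this file, the function below resolves the default location on any platform. It also honors the `KUBECONFIG` environment variable, which kubectl uses to override the default path. The function name and its parameters are illustrative, not part of any library.

```python
import os
from pathlib import Path


def kubeconfig_path(env=None, home=None):
    """Return the kubeconfig file that kubectl would read first."""
    env = os.environ if env is None else env
    home = Path.home() if home is None else Path(home)

    override = env.get("KUBECONFIG")
    if override:
        # KUBECONFIG may list several files separated by the platform's
        # path separator; this sketch only reports the first one.
        return Path(override.split(os.pathsep)[0])

    # Default: <user home>/.kube/config on Linux, macOS, and Windows.
    return home / ".kube" / "config"


print(kubeconfig_path())
```

On Windows, `Path.home()` resolves to the user profile directory, so the same code yields the `.kube\config` path under it.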

I have created a repository on GitHub that you can use to perform certain actions on Kubernetes clusters, such as creating Pods, creating Deployments, exposing them as Services, and much more. You can access the repository at https://github.com/afzaal-ahmad-zeeshan/hello-kubernetes. I will use a file from the repository to create a deployment and expose a service, and see how Alibaba Cloud automatically creates them for us.

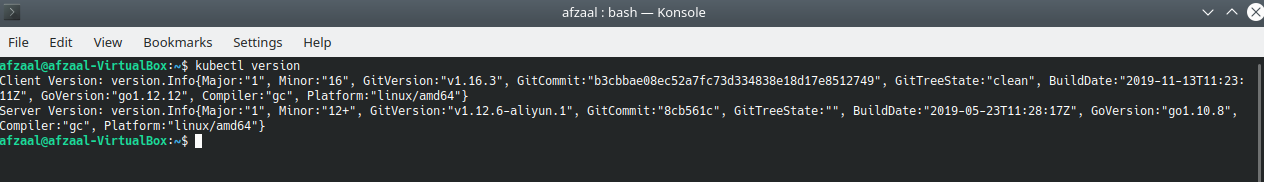

First, verify that everything is okay. After you copy the configuration content to your machine and install the kubectl command-line utility, execute the following command to verify that your machine can connect to the cluster.

    $ kubectl version

This yields the version information of the server and the client.
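If you want to script this check, the sketch below runs `kubectl version -o json` and reads the `clientVersion` and `serverVersion` entries from the output. It assumes kubectl is on your PATH. The function names are illustrative, and treating a missing `serverVersion` as "not connected" is a simplification.

```python
import json
import shutil
import subprocess


def summarize_versions(raw_json):
    """Extract client and server versions from `kubectl version -o json`."""
    data = json.loads(raw_json)
    client = data.get("clientVersion", {}).get("gitVersion")
    server = data.get("serverVersion", {}).get("gitVersion")
    # When the cluster cannot be reached, no server version is reported.
    return {"client": client, "server": server, "connected": server is not None}


def check_cluster():
    """Run kubectl and summarize the result; raises if kubectl is unusable."""
    if shutil.which("kubectl") is None:
        raise RuntimeError("kubectl is not installed or not on PATH")
    result = subprocess.run(
        ["kubectl", "version", "-o", "json"],
        capture_output=True, text=True, check=False,
    )
    if not result.stdout.strip():
        raise RuntimeError(result.stderr.strip() or "kubectl produced no output")
    return summarize_versions(result.stdout)
```

Calling `check_cluster()` after placing the configuration file should report `connected` as true when the cluster API is reachable.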

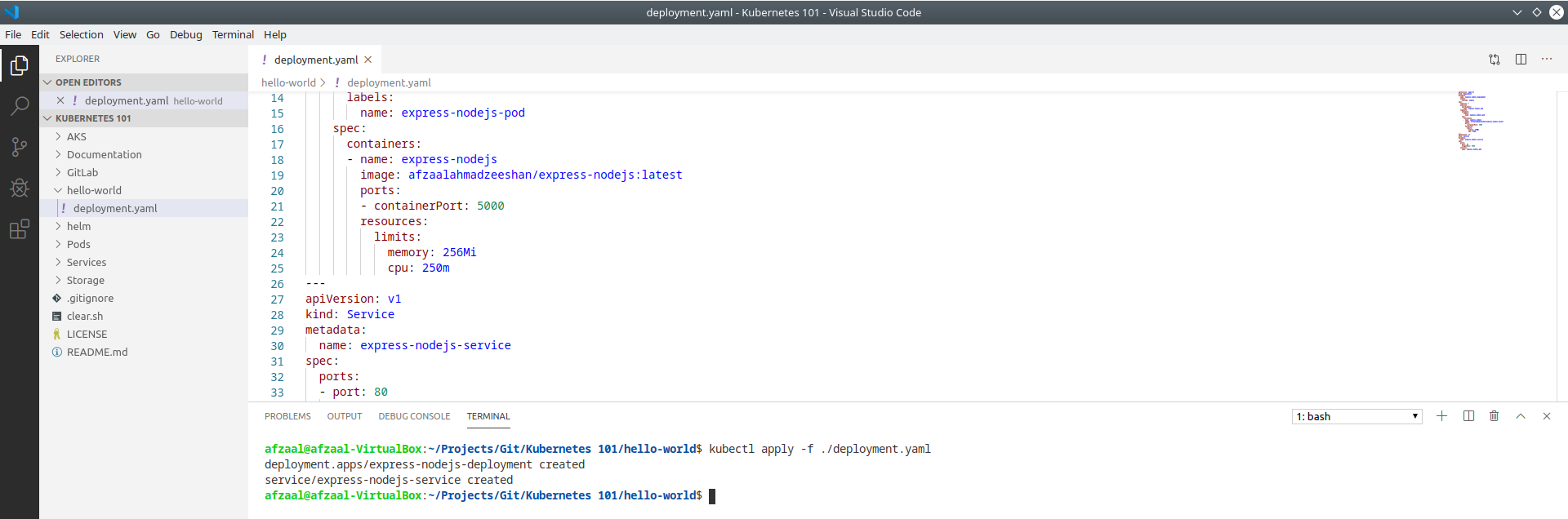

Now that you have connected to the cluster, the next step is to create a deployment. I will use the code from the repository mentioned previously.

Use the "hello-world/deployment.yaml" file from the repository. This file contains a deployment and a service that exposes the deployment. The deployment is a basic Node.js-based web application.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: express-nodejs-deployment
      labels:
        runtime: nodejs
    spec:
      replicas: 3
      selector:
        matchLabels:
          name: express-nodejs-pod
      template:
        metadata:
          labels:
            name: express-nodejs-pod
        spec:
          containers:
          - name: express-nodejs
            image: afzaalahmadzeeshan/express-nodejs:latest
            ports:
            - containerPort: 5000
            resources:
              limits:
                memory: 256Mi
                cpu: 250m
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: express-nodejs-service
    spec:
      ports:
      - port: 80
        targetPort: 5000
      selector:
        name: express-nodejs-pod

The key point to note here is that our deployment exposes the resources that it requires. You can explore the currently deployed pods and services on the Alibaba Cloud portal as well.
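Since the deployment states its own resource limits, you can estimate the most the cluster has to provide for it. The sketch below multiplies the per-pod limits by the replica count, using the values from the manifest (3 replicas, `250m` CPU, `256Mi` memory). The quantity parsers are deliberately minimal and handle only the `m`, `Mi`, and `Gi` suffixes; they are not a full implementation of Kubernetes quantity syntax.

```python
def cpu_millicores(quantity):
    """Convert a CPU quantity such as '250m' or '1' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)


def memory_mib(quantity):
    """Convert a memory quantity such as '256Mi' or '1Gi' to MiB."""
    for suffix, factor in (("Mi", 1), ("Gi", 1024)):
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    raise ValueError(f"unsupported memory quantity: {quantity}")


def total_limits(replicas, cpu, memory):
    """Upper bound on resources the deployment can consume at full scale."""
    return {
        "cpu_millicores": replicas * cpu_millicores(cpu),
        "memory_mib": replicas * memory_mib(memory),
    }


# Values taken from the deployment manifest above.
print(total_limits(replicas=3, cpu="250m", memory="256Mi"))
```

For this manifest the upper bound is 750 millicores of CPU and 768 MiB of memory across the three pods.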

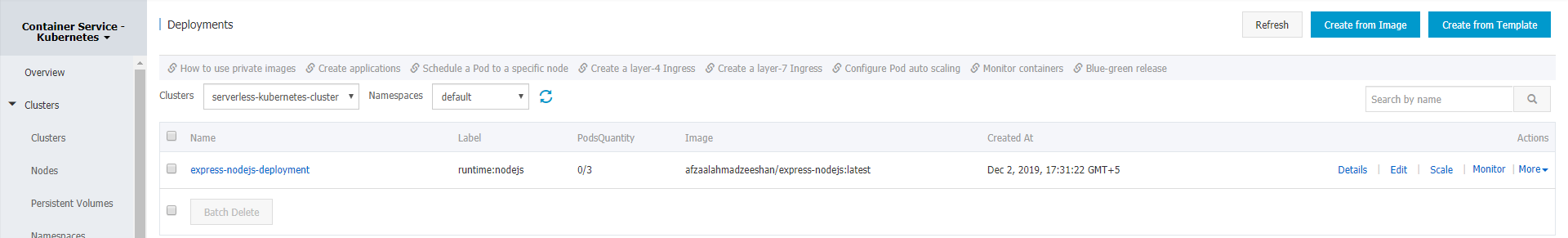

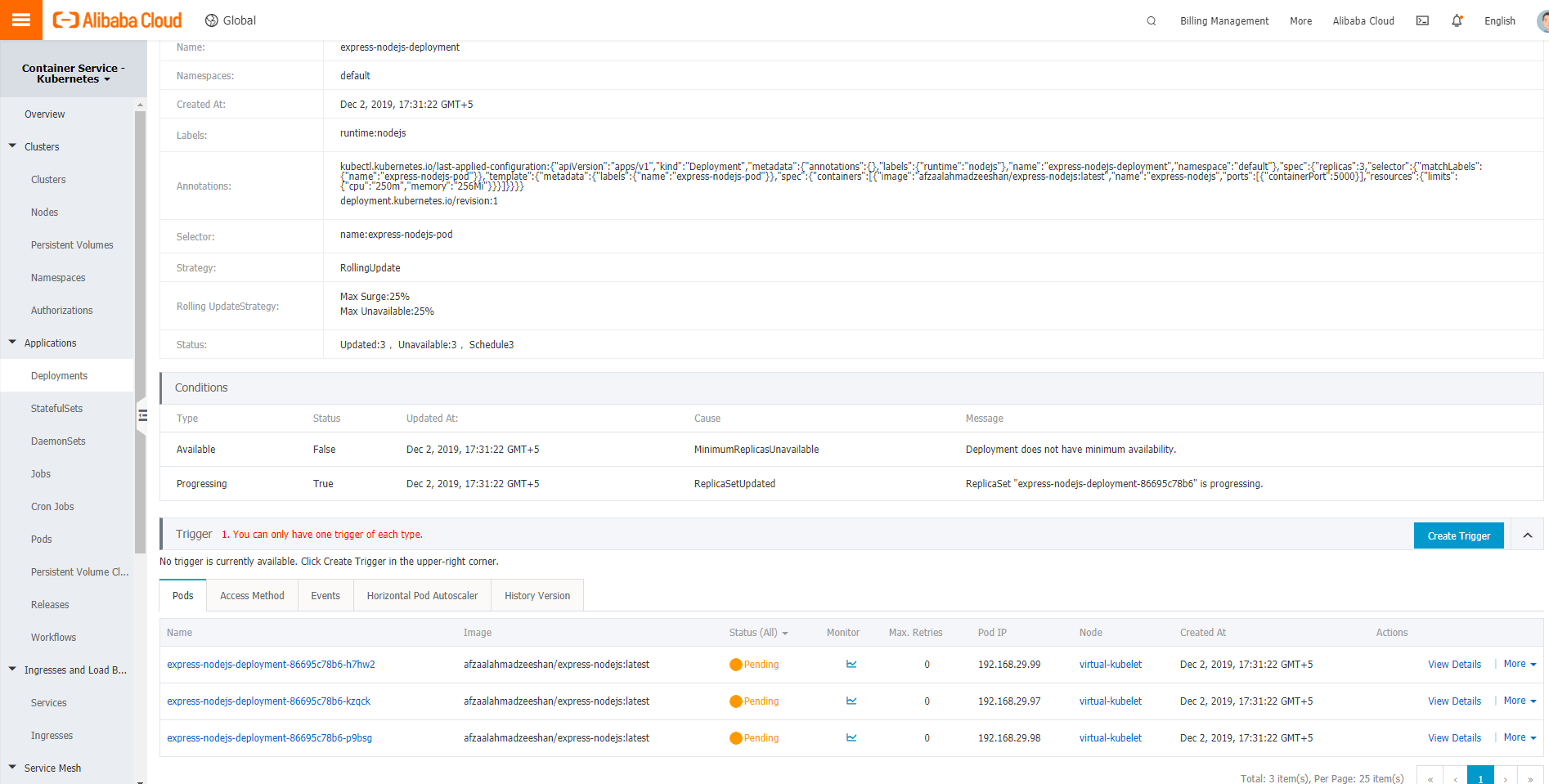

You can further explore the deployment and the number of pods in it by opening the deployment.

The file that I used to create the deployment listed only 3 pods, so we see those 3 pods created. They are in the pending state, since Kubernetes needs to pull the container images from Docker Hub and then deploy them on the cluster.

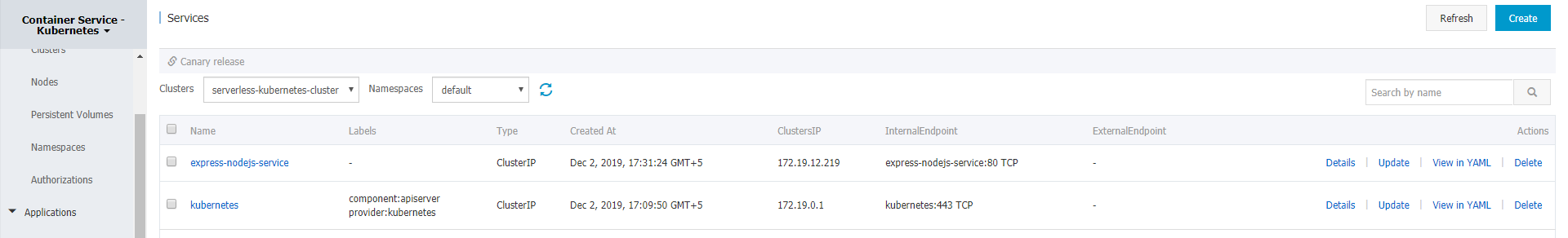

Your deployments also use a service to be exposed to the internet. You can find all the services currently exposed on the cloud under the Services tab.

The second service is the default service that allows us to connect to the Kubernetes cluster and provides connections to other components of the cluster. Each time we create a new resource, Alibaba Cloud automatically provisions extra resources for us, and shrinks the infrastructure down when they are no longer needed.
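A service finds the pods it routes to by comparing its selector with the pod labels, not with the deployment's own labels. The sketch below mimics that equality-based matching using the labels from the manifest. It is a simplified model of the rule, and the function name is illustrative.

```python
def selects(selector, pod_labels):
    """True if every key/value pair in the selector appears in the pod labels."""
    # A service with an empty selector does not pick up pods automatically,
    # so an empty selector is treated as matching nothing here.
    return bool(selector) and all(
        pod_labels.get(key) == value for key, value in selector.items()
    )


# Labels copied from the manifest used in this article.
service_selector = {"name": "express-nodejs-pod"}
pod_template_labels = {"name": "express-nodejs-pod"}
deployment_labels = {"runtime": "nodejs"}

print(selects(service_selector, pod_template_labels))  # pod labels match
print(selects(service_selector, deployment_labels))    # deployment labels do not
```

This is why the `selector` in the Service and the `labels` in the pod template must carry the same `name` value. If they drift apart, the service exists but has no pods behind it.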

If you do not want to set up any command-line tools on your system, you can also connect to the Kubernetes cluster using the Cloud Shell provided by Alibaba Cloud. Ironically, working from your own machine might also require you to install the Alibaba Cloud CLI. The Cloud Shell option is available in the top-right corner of the portal, and the shell contains all the executables needed to work with the Kubernetes cluster.

The command-line interface is the same. In this case you do not have access to your local directories, so you need to use Git version control to access the Kubernetes objects and files. You can easily manage that by providing fully qualified URLs from a public GitHub repository.
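Because `kubectl apply -f` accepts a URL as well as a local file, a raw file URL from a public repository is enough. The sketch below builds such a URL and the matching command for the manifest used in this article. The branch name `master` is an assumption, so check the repository for the actual default branch before using it.

```python
def raw_manifest_url(owner, repo, branch, path):
    """Build the raw.githubusercontent.com URL for a file in a public repo."""
    return f"https://raw.githubusercontent.com/{owner}/{repo}/{branch}/{path}"


def apply_command(url):
    """Return the kubectl invocation that applies a manifest from a URL."""
    return ["kubectl", "apply", "-f", url]


url = raw_manifest_url(
    owner="afzaal-ahmad-zeeshan",
    repo="hello-kubernetes",
    branch="master",  # assumption: replace with the repository's real branch
    path="hello-world/deployment.yaml",
)
print(" ".join(apply_command(url)))
```

Running the printed command in Cloud Shell applies the manifest without cloning the repository first.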

Now that you have explored how to create serverless Kubernetes clusters, the next step is to learn how to create serverless jobs. Your existing applications would not work as expected without modification, which is why there are special SDKs and development runtimes that support serverless development and deployment. One such framework is Knative, which supports native serverless development and deployment of applications on a Kubernetes cluster. Learn more about Knative at https://knative.dev/.
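As a rough idea of what a Knative workload looks like, the sketch below builds a Knative Service manifest as JSON, which `kubectl apply -f` accepts just like YAML. It assumes Knative Serving is installed in the cluster, which this article has not set up. The service name `express-nodejs-knative` is hypothetical, while the image and port are reused from the earlier deployment.

```python
import json


def knative_service(name, image, port):
    """Build a minimal Knative Serving `Service` manifest as a dict."""
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "spec": {
                    "containers": [
                        {"image": image, "ports": [{"containerPort": port}]}
                    ]
                }
            }
        },
    }


def to_json(manifest):
    """Serialize the manifest so it can be saved and applied with kubectl."""
    return json.dumps(manifest, indent=2)


manifest = knative_service(
    name="express-nodejs-knative",  # hypothetical name, for illustration
    image="afzaalahmadzeeshan/express-nodejs:latest",
    port=5000,
)
print(to_json(manifest))
```

Unlike the plain Deployment, there is no `replicas` field here: Knative decides how many pods to run from incoming traffic, and it can scale an idle service down to zero.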

Since our clusters are serverless, and the jobs and deployments are serverless in nature, we can easily achieve a complete serverless deployment on the cloud that provides (almost) unlimited scale for the resources and cuts the resources down to zero when they are no longer needed.
