You can access the tutorial artifacts, including the deployment script (Terraform), related source code, sample data, and instructions, from this GitHub project.

Please refer to this link for more tutorials about Alibaba Cloud Database.

Database Deployment Tutorials Series: https://community.alibabacloud.com/series/118

Luigi is a Python package (tested with Python 3.6, 3.7, 3.8, and 3.9) that helps you build complex pipelines of batch jobs. It handles dependency resolution, workflow management, visualization, failure handling, command-line integration, and much more. Documentation: https://luigi.readthedocs.io/en/stable/.
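In Luigi, each task declares what it needs through its `requires()` method, and the scheduler runs a task only after everything it requires is complete. The sketch below illustrates that idea with Python's standard `graphlib` module (3.9+) rather than Luigi itself. The task names are hypothetical and are not taken from the demo's `data_pipeline.py`.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the set of tasks it requires,
# similar to what a Luigi Task.requires() method would return.
REQUIRES = {
    "extract_raw": set(),
    "transform": {"extract_raw"},
    "detect_outliers": {"transform"},
    "mine_rules": {"transform"},
    "load_to_db": {"detect_outliers", "mine_rules"},
}


def run_order(graph):
    """Return an order in which every task comes after all of its requirements."""
    return list(TopologicalSorter(graph).static_order())
```

With a single worker, Luigi behaves much like this ordering. With several workers, independent tasks such as the two middle steps above could run in parallel.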

Metabase is an open-source business intelligence tool. It lets users ask questions about their data and displays the answers in formats that make sense, such as a bar graph or a detailed table. By default, Metabase stores its application data in an embedded H2 database. To make the application database behind the Metabase BI server highly available, we will use Alibaba Cloud RDS PostgreSQL as the backend database of Metabase.

Metabase supports either PostgreSQL or MySQL as its backend database. On Alibaba Cloud, you can use any of the following databases:

In this tutorial, we use the RDS PostgreSQL high-availability edition for a more stable, production-ready deployment.

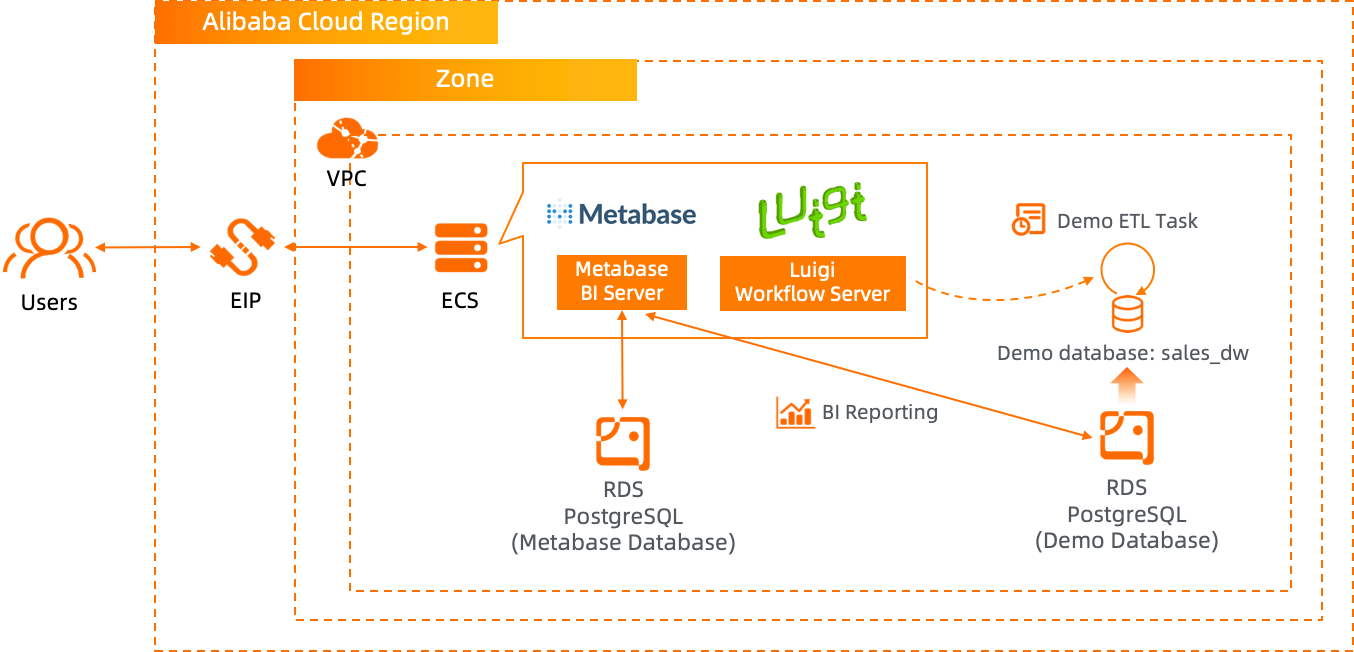

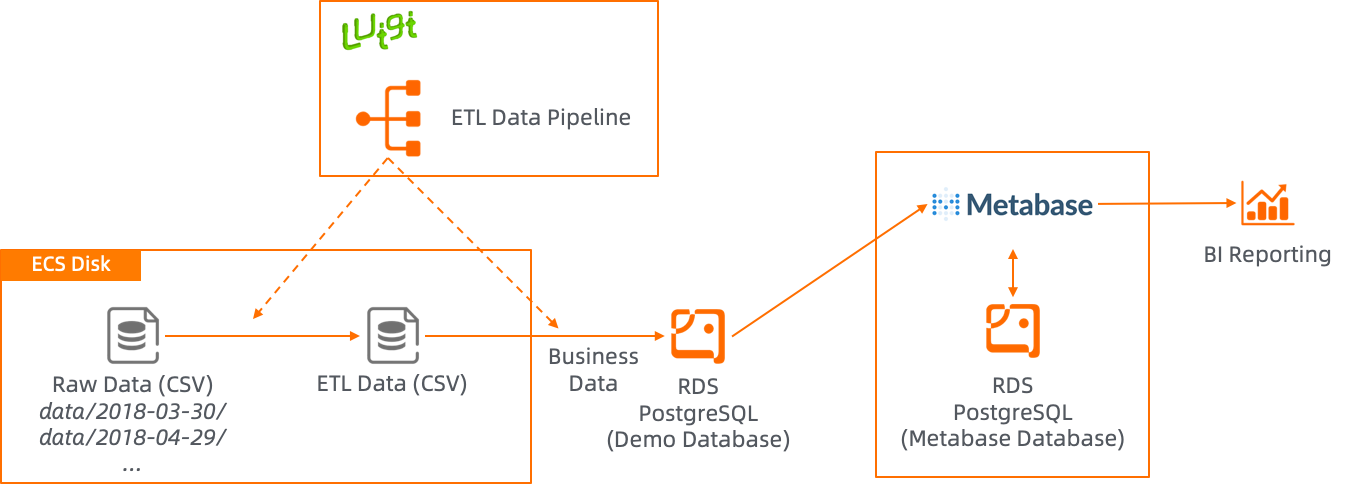

Deployment Architecture:

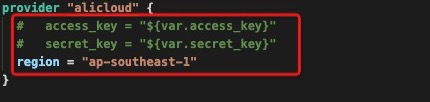

Run the Terraform script to initialize the resources. In this tutorial, one RDS PostgreSQL instance serves as the backend database of Metabase. A second RDS PostgreSQL instance serves as the demo database for the Luigi ETL data pipeline and the Metabase BI reports. The Terraform script provisions one ECS instance and two RDS PostgreSQL instances. Specify the required information and the region to deploy to.

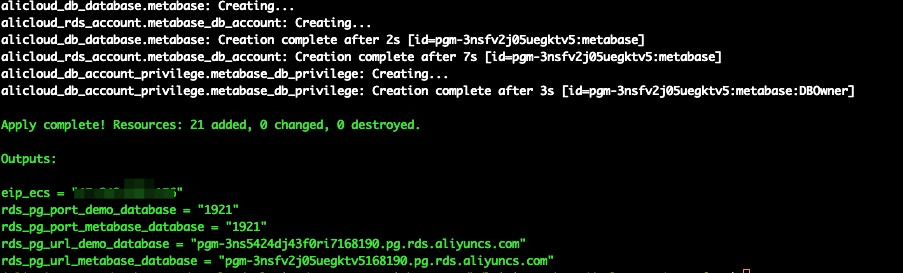
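If you would rather capture the deployment outputs in a script than copy them from the console, `terraform output -json` prints every output as a JSON object with `sensitive`, `type`, and `value` fields. The sketch below assumes that format and the output names this tutorial's Terraform script defines. The function name `parse_outputs` is hypothetical.

```python
import json

# Output names defined by this tutorial's Terraform script.
OUTPUT_NAMES = (
    "eip_ecs",
    "rds_pg_url_metabase_database",
    "rds_pg_port_metabase_database",
    "rds_pg_url_demo_database",
    "rds_pg_port_demo_database",
)


def parse_outputs(raw_json):
    """Extract this tutorial's output values from `terraform output -json` text."""
    data = json.loads(raw_json)
    missing = [name for name in OUTPUT_NAMES if name not in data]
    if missing:
        raise KeyError(f"missing Terraform outputs: {missing}")
    # Each entry looks like {"sensitive": false, "type": "string", "value": "..."}.
    return {name: data[name]["value"] for name in OUTPUT_NAMES}
```

For example, you could pass it `subprocess.run(["terraform", "output", "-json"], capture_output=True, text=True, check=True).stdout`, run from the Terraform working directory.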

After the Terraform script finishes, the information about the ECS and RDS PostgreSQL instances is listed as follows:

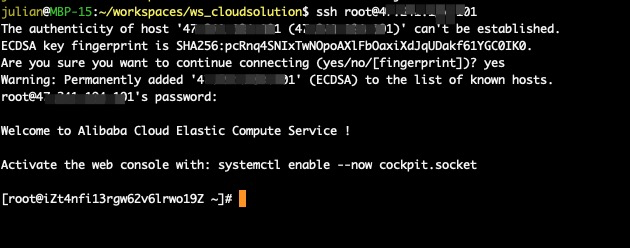

eip_ecs: The public EIP of the ECS instance that hosts Luigi and Metabase

rds_pg_url_metabase_database: The connection URL of the backend RDS PostgreSQL database for Metabase

rds_pg_port_metabase_database: The connection port of the backend RDS PostgreSQL database for Metabase; it is 1921 for RDS PostgreSQL by default.

rds_pg_url_demo_database: The connection URL of the demo RDS PostgreSQL database used by Luigi and Metabase

rds_pg_port_demo_database: The connection port of the demo RDS PostgreSQL database used by Luigi and Metabase; it is 1921 for RDS PostgreSQL by default.

Log on to the ECS instance using its EIP:

ssh root@<ECS_EIP>

Run the following commands to install gcc, Python 3.9, the required Python modules, Luigi, JDK 8, Git, and the PostgreSQL client.

yum install -y gcc-c++*

yum install -y python39

yum install -y postgresql-devel

pip3 install psycopg2

pip3 install pandas

pip3 install mlxtend

pip3 install pycountry

pip3 install luigi

yum install -y java-1.8.0-openjdk-devel.x86_64

yum install -y git

cd ~

wget http://mirror.centos.org/centos/8/AppStream/x86_64/os/Packages/compat-openssl10-1.0.2o-3.el8.x86_64.rpm

rpm -i compat-openssl10-1.0.2o-3.el8.x86_64.rpm

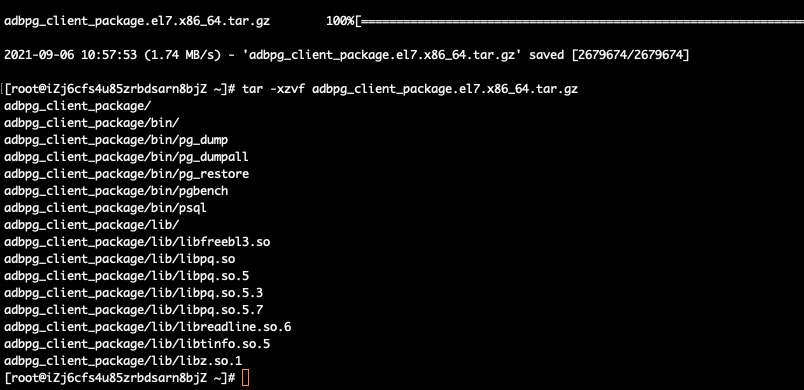

wget http://docs-aliyun.cn-hangzhou.oss.aliyun-inc.com/assets/attach/181125/cn_zh/1598426198114/adbpg_client_package.el7.x86_64.tar.gz

tar -xzvf adbpg_client_package.el7.x86_64.tar.gz

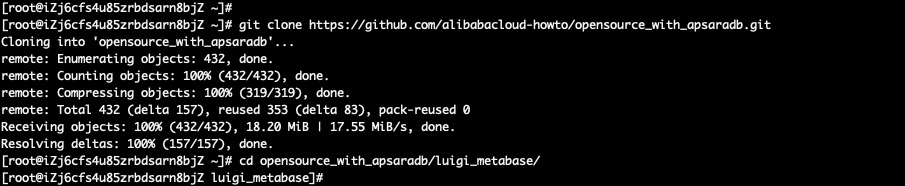
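`pip3` and the `python3` you later run Luigi with may belong to different interpreters. To check that the modules installed above are visible, you can run a quick check like the one below under the interpreter you plan to use. This is a minimal sketch; `missing_modules` is a hypothetical helper, and the module list mirrors the `pip3 install` commands above.

```python
import importlib.util

# Modules installed with pip3 in the step above.
REQUIRED_MODULES = ("psycopg2", "pandas", "mlxtend", "pycountry", "luigi")


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that the current interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

An empty list from `missing_modules()` means every module can be imported. Otherwise, reinstall the listed modules with the `pip` that belongs to the same interpreter.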

Run the following commands to check out the project files from GitHub and navigate to the project directory:

git clone https://github.com/alibabacloud-howto/opensource_with_apsaradb.git

cd opensource_with_apsaradb/luigi_metabase/

In this tutorial, Metabase is run directly from its JAR file.

Please execute the following commands to download the Metabase JAR file:

cd ~/opensource_with_apsaradb/luigi_metabase/metabase

wget https://downloads.metabase.com/v0.40.3.1/metabase.jar

By default, Metabase uses the embedded H2 application database when it starts. In this tutorial, however, I show the best practice of switching to a more production-ready database, RDS PostgreSQL.

This follows the official Metabase guide, Migrating from using the H2 database to Postgres or MySQL/MariaDB.

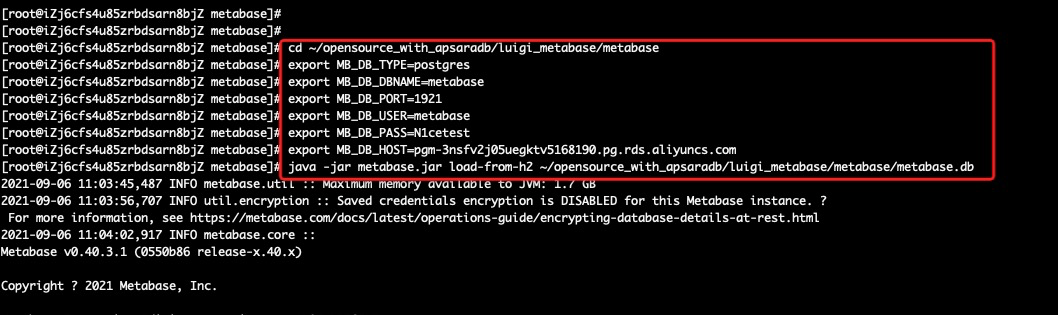

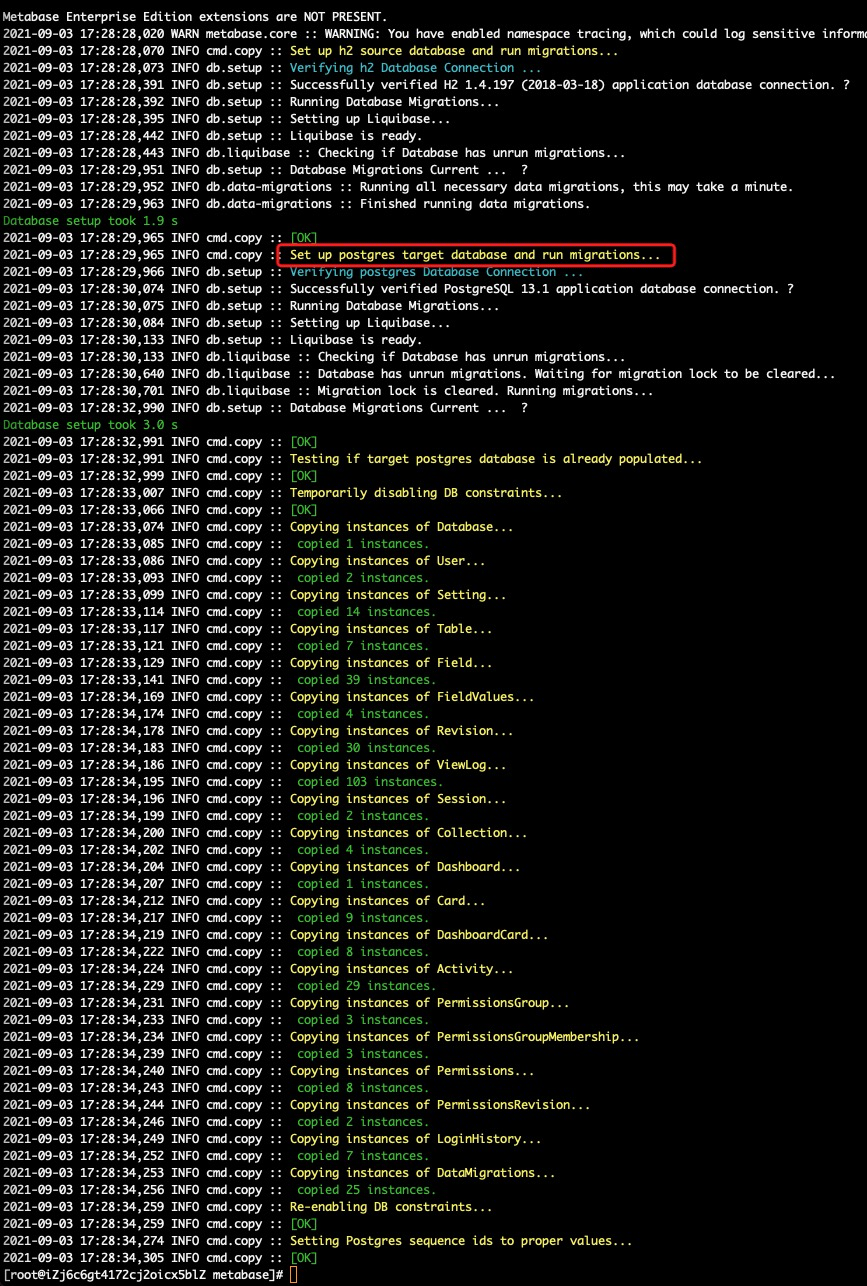

Run the following commands to migrate the Metabase backend database from H2 to the RDS PostgreSQL instance provisioned in Step 1.

Replace <rds_pg_url_metabase_database> with the corresponding connection string:

cd ~/opensource_with_apsaradb/luigi_metabase/metabase

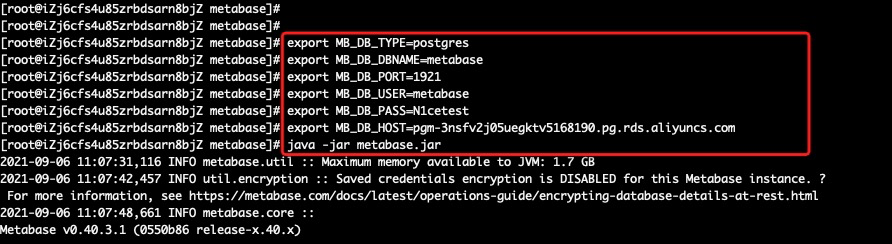

export MB_DB_TYPE=postgres

export MB_DB_DBNAME=metabase

export MB_DB_PORT=1921

export MB_DB_USER=metabase

export MB_DB_PASS=N1cetest

export MB_DB_HOST=<rds_pg_url_metabase_database>

java -jar metabase.jar load-from-h2 ~/opensource_with_apsaradb/luigi_metabase/metabase/metabase.db
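The same `MB_DB_*` settings can be assembled in Python if you want to script the migration and the later startup instead of exporting variables by hand. This is a minimal sketch: `metabase_env` and `metabase_command` are hypothetical helpers, and the defaults (port 1921, database and user `metabase`) follow the values used above.

```python
import os


def metabase_env(host, password, port=1921, dbname="metabase", user="metabase"):
    """Return a copy of the current environment plus Metabase's MB_DB_* settings."""
    env = dict(os.environ)
    env.update({
        "MB_DB_TYPE": "postgres",
        "MB_DB_DBNAME": dbname,
        "MB_DB_PORT": str(port),
        "MB_DB_USER": user,
        "MB_DB_PASS": password,
        "MB_DB_HOST": host,
    })
    return env


def metabase_command(jar="metabase.jar", h2_path=None):
    """Build the java command line; pass h2_path to run the load-from-h2 migration."""
    cmd = ["java", "-jar", jar]
    if h2_path is not None:
        cmd += ["load-from-h2", h2_path]
    return cmd
```

A usage example is `subprocess.run(metabase_command(h2_path=os.path.expanduser("~/opensource_with_apsaradb/luigi_metabase/metabase/metabase.db")), env=metabase_env("<rds_pg_url_metabase_database>", password), check=True)`. Run it from the `metabase` directory that contains the JAR. Reading `password` from an environment variable rather than hard-coding it is an assumption of this sketch.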

Then, run the following commands to start Metabase with RDS PostgreSQL as its backend database. Replace <rds_pg_url_metabase_database> with the corresponding connection string:

export MB_DB_TYPE=postgres

export MB_DB_DBNAME=metabase

export MB_DB_PORT=1921

export MB_DB_USER=metabase

export MB_DB_PASS=N1cetest

export MB_DB_HOST=<rds_pg_url_metabase_database>

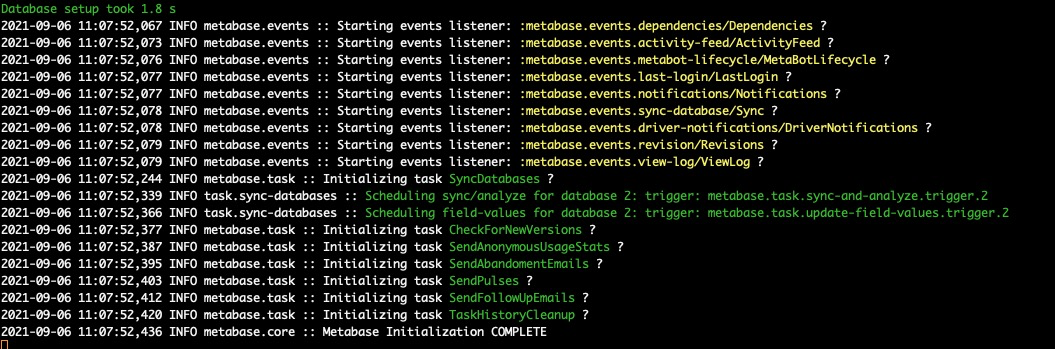

java -jar metabase.jar

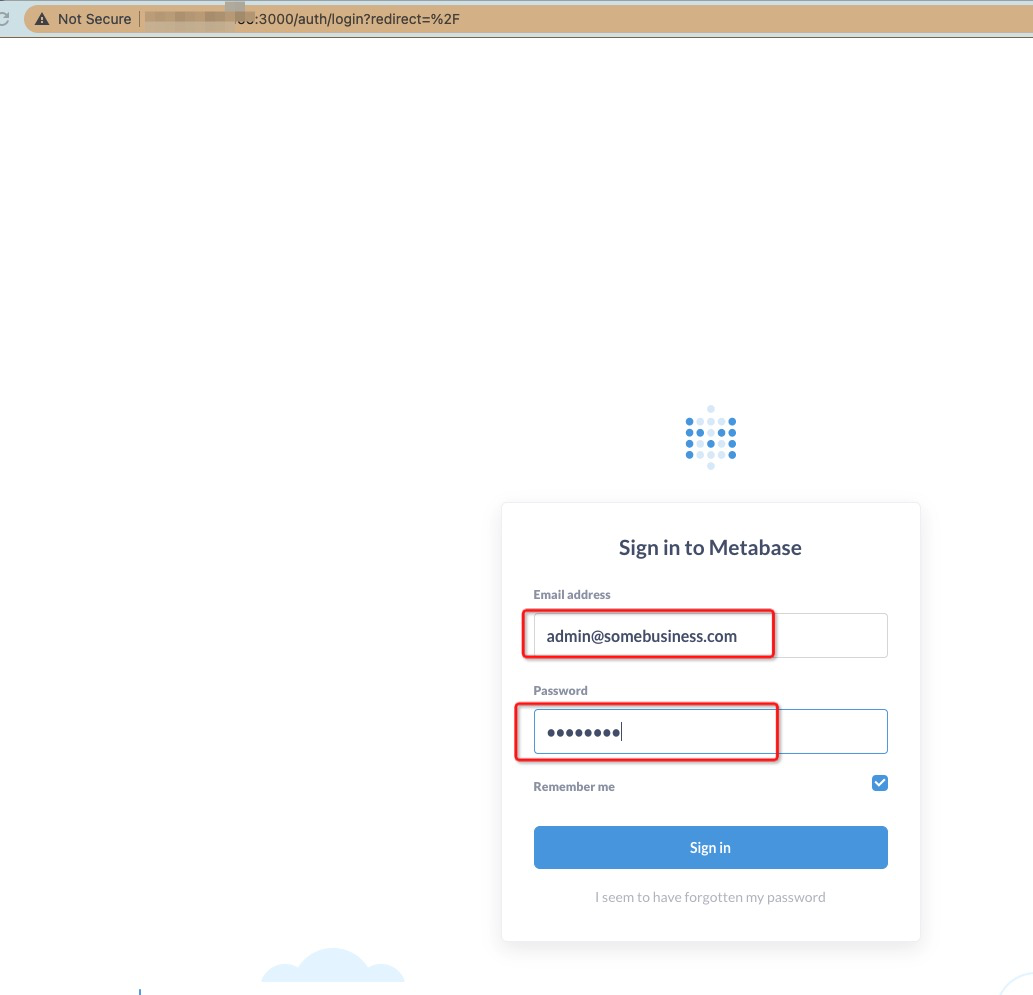

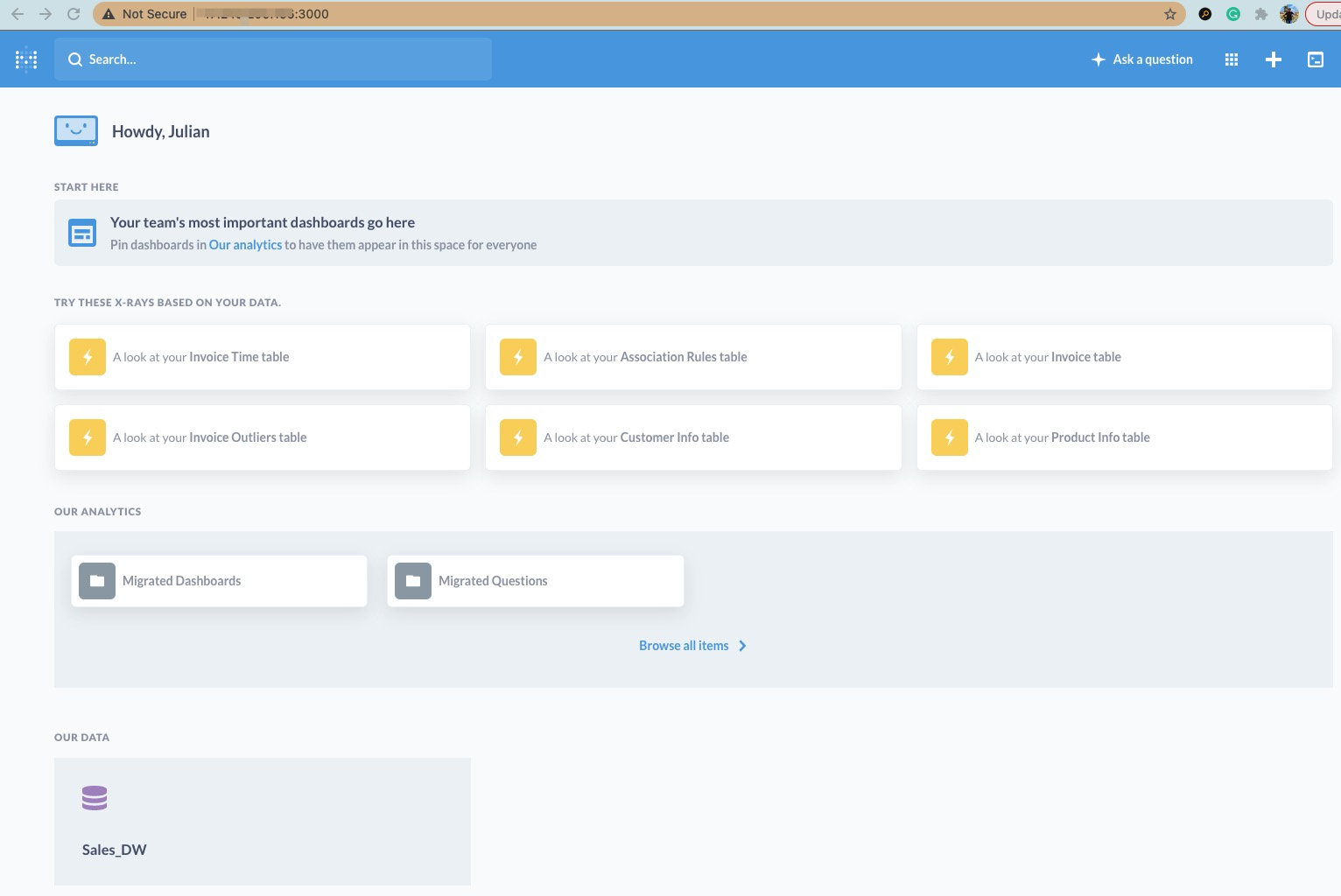

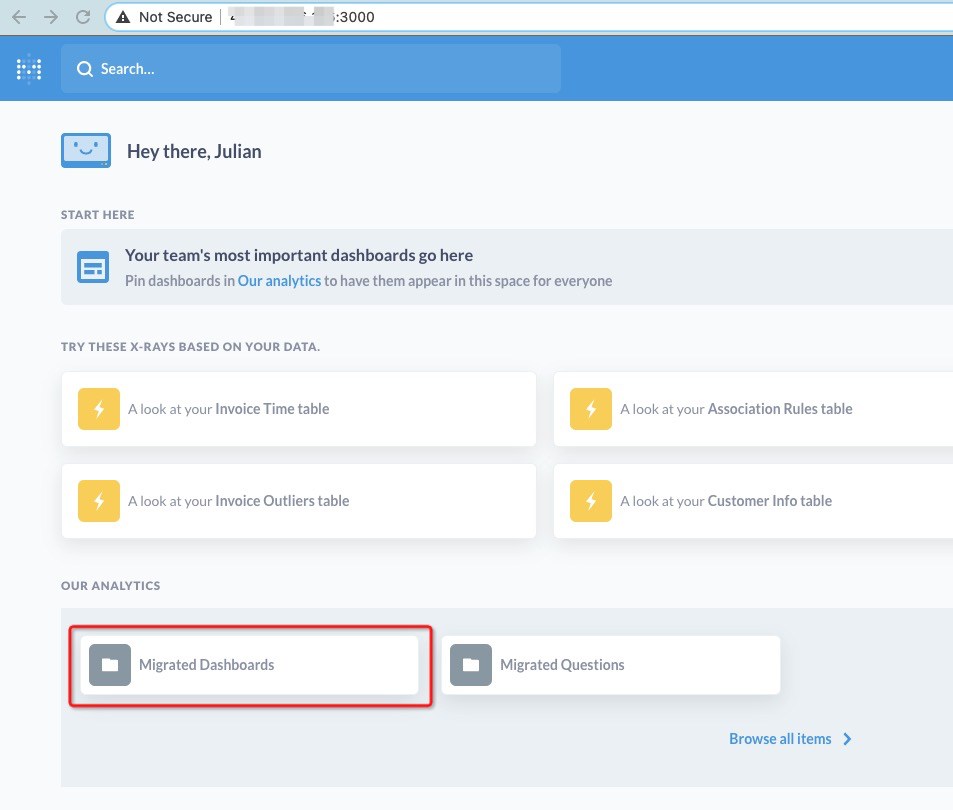

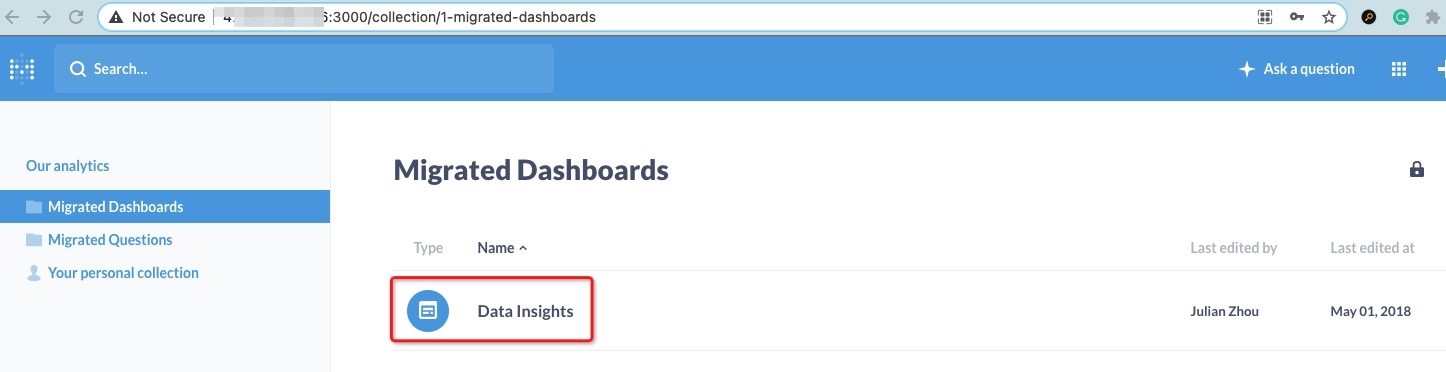

Once Metabase is up and running, navigate to http://<ECS_EIP>:3000/.
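Metabase can take a while to start, especially right after switching its backend database. Metabase provides a health-check endpoint at `/api/health` that answers with HTTP 200 once the application is ready. The sketch below assumes that endpoint is available in v0.40.3.1 and polls it with only the standard library. `is_healthy` and `wait_until` are hypothetical helpers.

```python
import time
import urllib.error
import urllib.request


def is_healthy(base_url, timeout=5.0):
    """Return True if GET {base_url}/api/health answers with HTTP 200."""
    url = base_url.rstrip("/") + "/api/health"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeouts, and HTTP errors (e.g. 503 while starting).
        return False


def wait_until(check, attempts=60, delay=5.0, sleep=time.sleep):
    """Call check() until it returns True or attempts run out; return the final result."""
    for i in range(attempts):
        if check():
            return True
        if i < attempts - 1:
            sleep(delay)
    return False
```

For example, `wait_until(lambda: is_healthy("http://<ECS_EIP>:3000"))` waits up to about five minutes with the default settings.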

I've preset the following accounts in the demo Metabase. Please log on with the Admin User:

Admin User: admin@somebusiness.com

Password: N1cetest

Business Owner user: owner@somebusiness.com

Password: N1cetest

Open another CLI window and log on to the ECS instance using its EIP. DO NOT close the CLI window opened in Step 2.

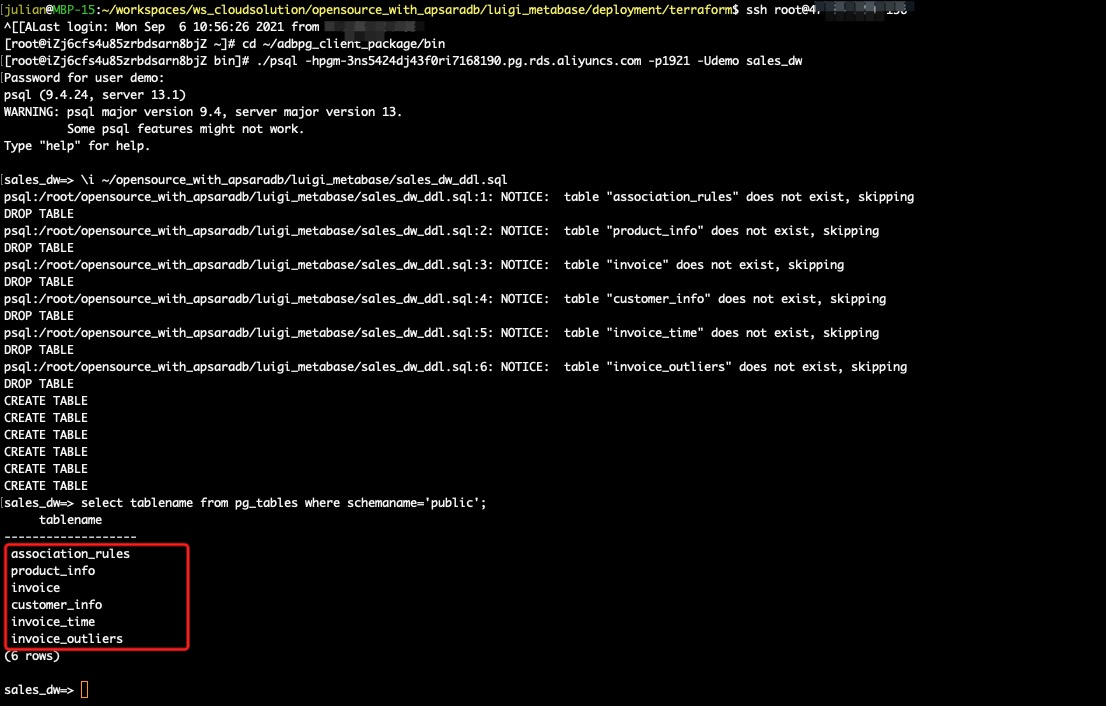

ssh root@<ECS_EIP>

Before we demo the ETL data pipeline, run the following commands to create the tables in the sales_dw database of the demo RDS PostgreSQL instance. The CREATE TABLE DDL statements are in the SQL file sales_dw_ddl.sql.

Replace <rds_pg_url_demo_database> with the corresponding connection string of the demo RDS PostgreSQL instance. When prompted for the password to connect to the sales_dw database, enter N1cetest, which is preset in the Terraform provisioning script in Step 1. If you have changed it, use your own password instead.

cd ~/adbpg_client_package/bin

./psql -h<rds_pg_url_demo_database> -p1921 -Udemo sales_dw

In the PG client, execute the DDL SQL file and check that six empty tables are created.

\i ~/opensource_with_apsaradb/luigi_metabase/sales_dw_ddl.sql

select tablename from pg_tables where schemaname='public';

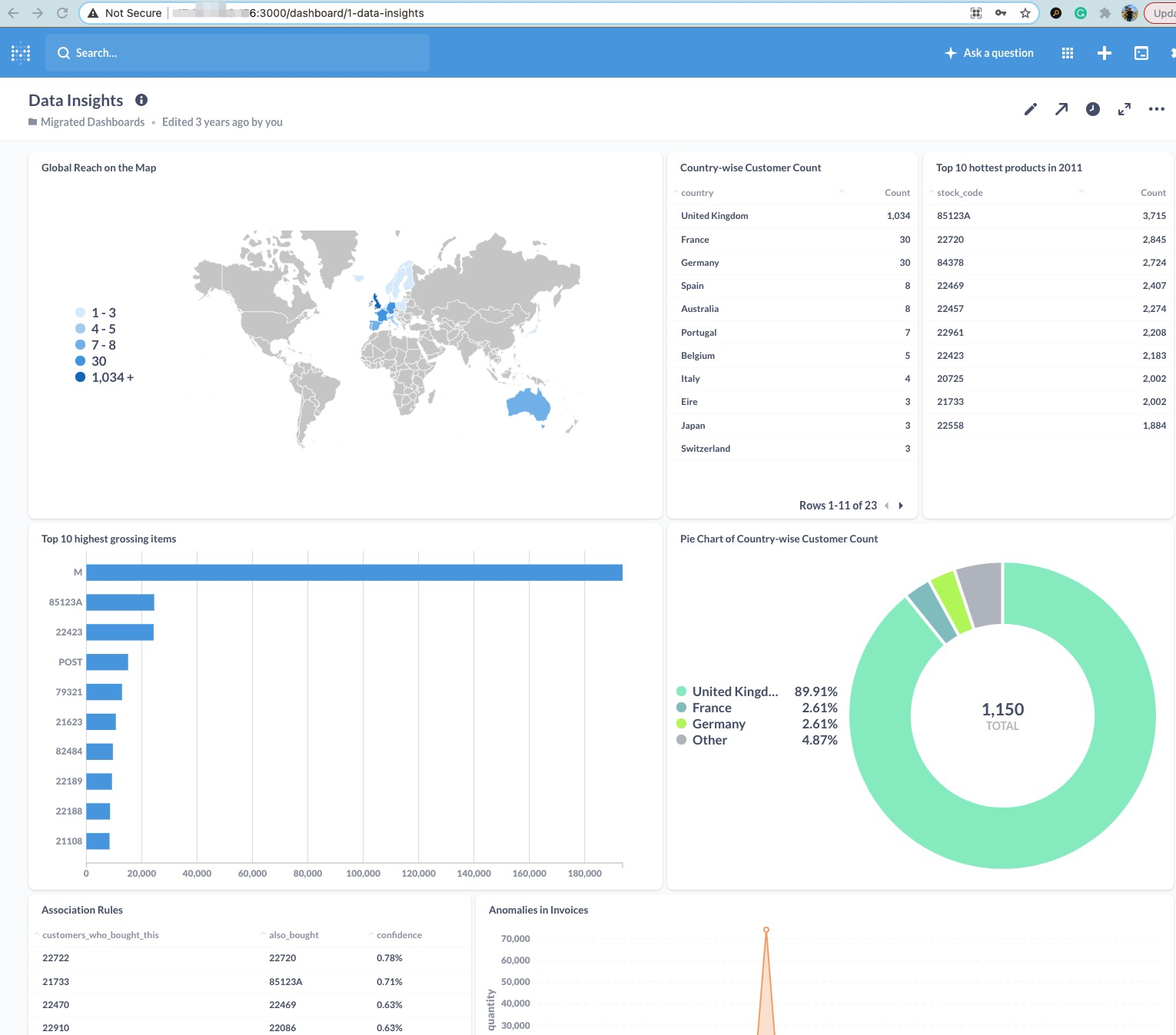
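To make the "six empty tables" check explicit, the result of the `pg_tables` query can be compared with the expected set of source and target tables. This is a minimal sketch; `missing_tables` is a hypothetical helper that takes the table names returned by the query, for example `[row[0] for row in cursor.fetchall()]`.

```python
# Tables that sales_dw_ddl.sql is expected to create in the public schema.
EXPECTED_TABLES = {
    "product_info", "invoice", "customer_info",                # source tables
    "invoice_time", "invoice_outliers", "association_rules",  # target tables
}

TABLES_QUERY = "select tablename from pg_tables where schemaname='public';"


def missing_tables(found):
    """Return the expected tables (sorted) that are absent from the query result."""
    return sorted(EXPECTED_TABLES - set(found))
```

An empty list means the DDL ran completely. Otherwise, the returned names tell you which CREATE TABLE statements to look at.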

The demo ETL data pipeline uses three source tables and three target tables:

product_info: a source table in the demo ETL data pipeline

invoice: a source table in the demo ETL data pipeline

customer_info: a source table in the demo ETL data pipeline

invoice_time: a target table in the demo ETL data pipeline

invoice_outliers: a target table in the demo ETL data pipeline

association_rules: a target table in the demo ETL data pipeline

Open another new CLI window and log on to the ECS instance using its EIP. DO NOT close the CLI windows opened in Step 2 and Step 3.

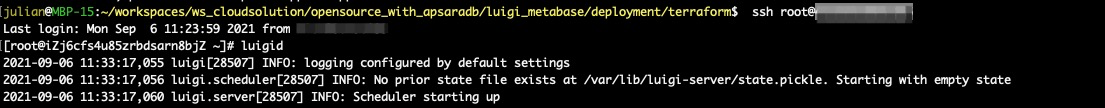

ssh root@<ECS_EIP>

In this CLI window, run the following command to start the Luigi daemon:

luigid

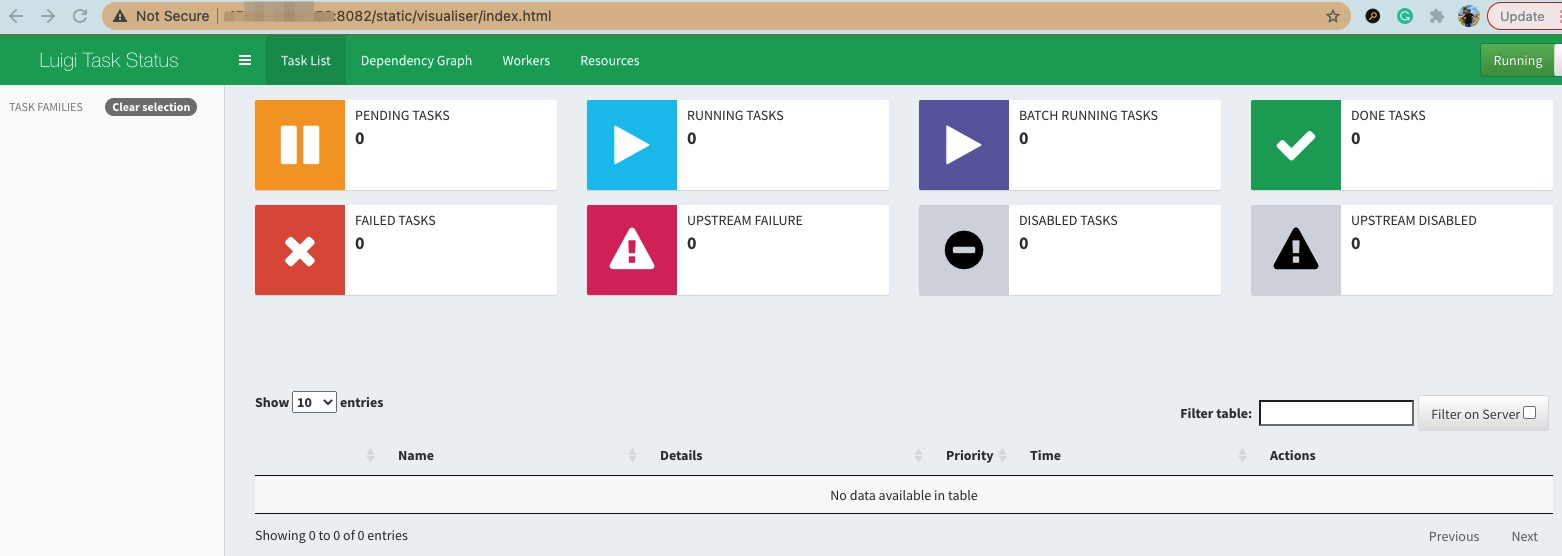

Once it is up and running, navigate to http://<ECS_EIP>:8082/

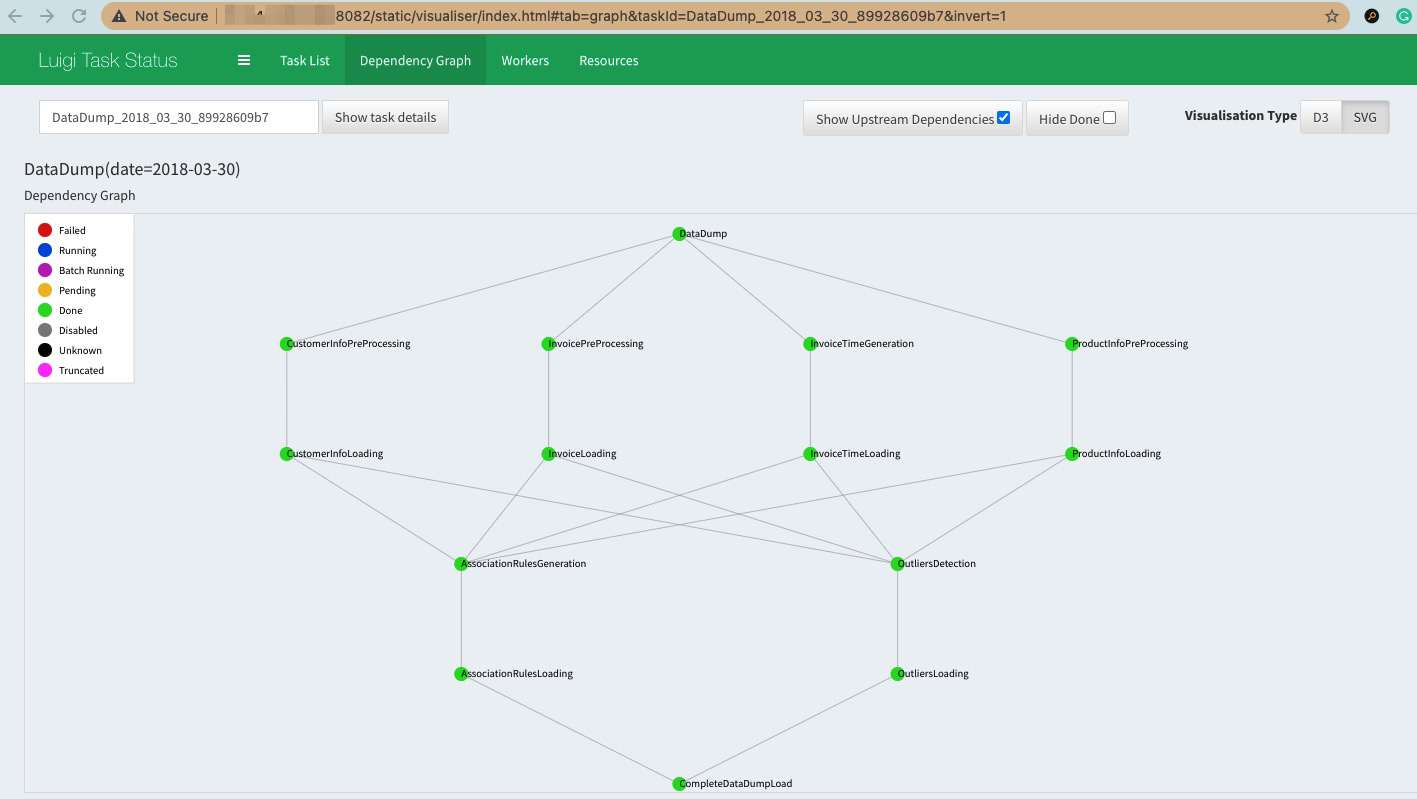

Now, we can run the ETL data pipeline in Luigi. The following image shows the ETL data pipeline workflow in the demo:

The full ETL data pipeline code is located here. The pipeline loads the raw data from the local ECS disk, processes and transforms it, writes the results to the local disk, and loads the data into the RDS PostgreSQL database for Metabase BI reporting. The BI reports have already been built in this demo Metabase instance.

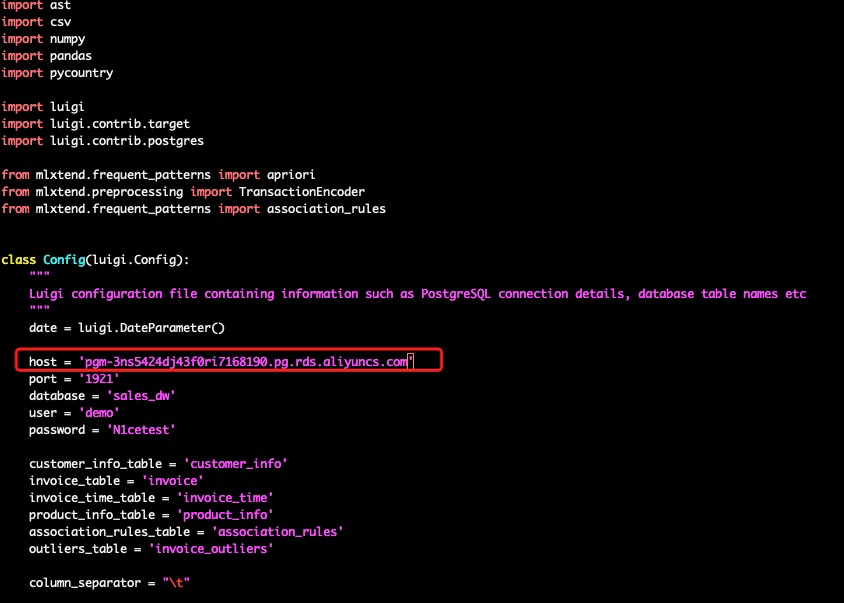
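One property worth knowing before you run the pipeline: by default, Luigi treats a task as complete when all of its `output()` targets already exist, and it skips complete tasks on later runs. `LocalTarget` also writes through a temporary file so that a half-written output never looks finished. The sketch below mimics both behaviors with the standard library only. It is not Luigi's API, and the function name and file name are hypothetical.

```python
import os
import tempfile


def run_step(output_path, produce):
    """Run produce(path) only if output_path does not exist yet; return True if it ran.

    Mirrors Luigi's default completeness check: an existing output means the
    step is done and is skipped.
    """
    if os.path.exists(output_path):
        return False  # already complete, skip
    tmp_path = output_path + ".tmp"
    produce(tmp_path)
    os.replace(tmp_path, output_path)  # rename so partial files never look complete
    return True
```

This is also why rerunning the same `--date` usually does nothing: delete that date's outputs first if you want to reprocess it.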

Switch to the CLI window opened in Step 3. Before running the pipeline, edit the Python pipeline code and set the demo database connection string to the value of <rds_pg_url_demo_database>.

cd ~/opensource_with_apsaradb/luigi_metabase

vim data_pipeline.py

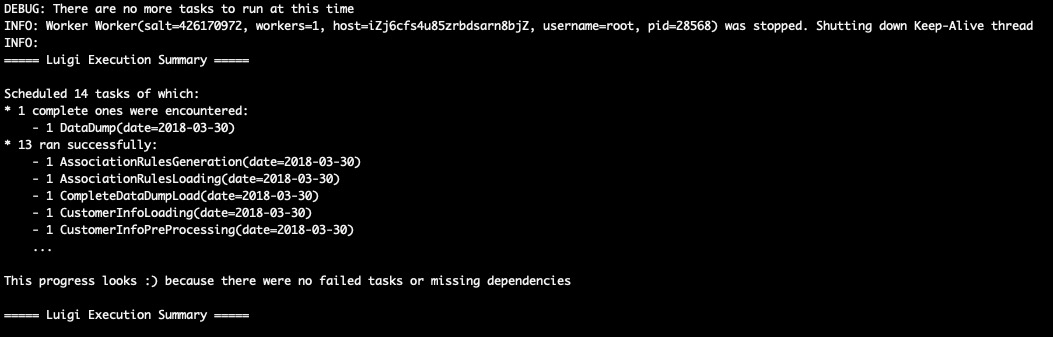
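As an alternative to editing the connection string in the source file, a libpq-style connection URI can be built from environment variables. `psycopg2.connect()` accepts such a URI. This is a minimal sketch only. The variable names `DEMO_DB_HOST`, `DEMO_DB_PASSWORD`, and `DEMO_DB_PORT` are hypothetical, and it does not show how `data_pipeline.py` actually defines its connection. The user `demo`, port 1921, and database `sales_dw` follow this tutorial.

```python
import os
from urllib.parse import quote


def demo_db_uri(env=os.environ):
    """Build a connection URI for the demo database from (hypothetical) env variables."""
    host = env["DEMO_DB_HOST"]                        # e.g. <rds_pg_url_demo_database>
    password = quote(env["DEMO_DB_PASSWORD"], safe="")  # escape characters like @ or /
    port = env.get("DEMO_DB_PORT", "1921")
    return f"postgresql://demo:{password}@{host}:{port}/sales_dw"
```

Percent-encoding the password keeps the URI valid even if you later change it to something containing `@` or `/`.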

Then, run the following commands in this CLI window to kick off a pipeline execution for the data of 2018-03-30:

cd ~/opensource_with_apsaradb/luigi_metabase

PYTHONPATH='.' luigi --module data_pipeline CompleteDataDumpLoad --date 2018-03-30

The data pipeline execution summary is shown at the end.

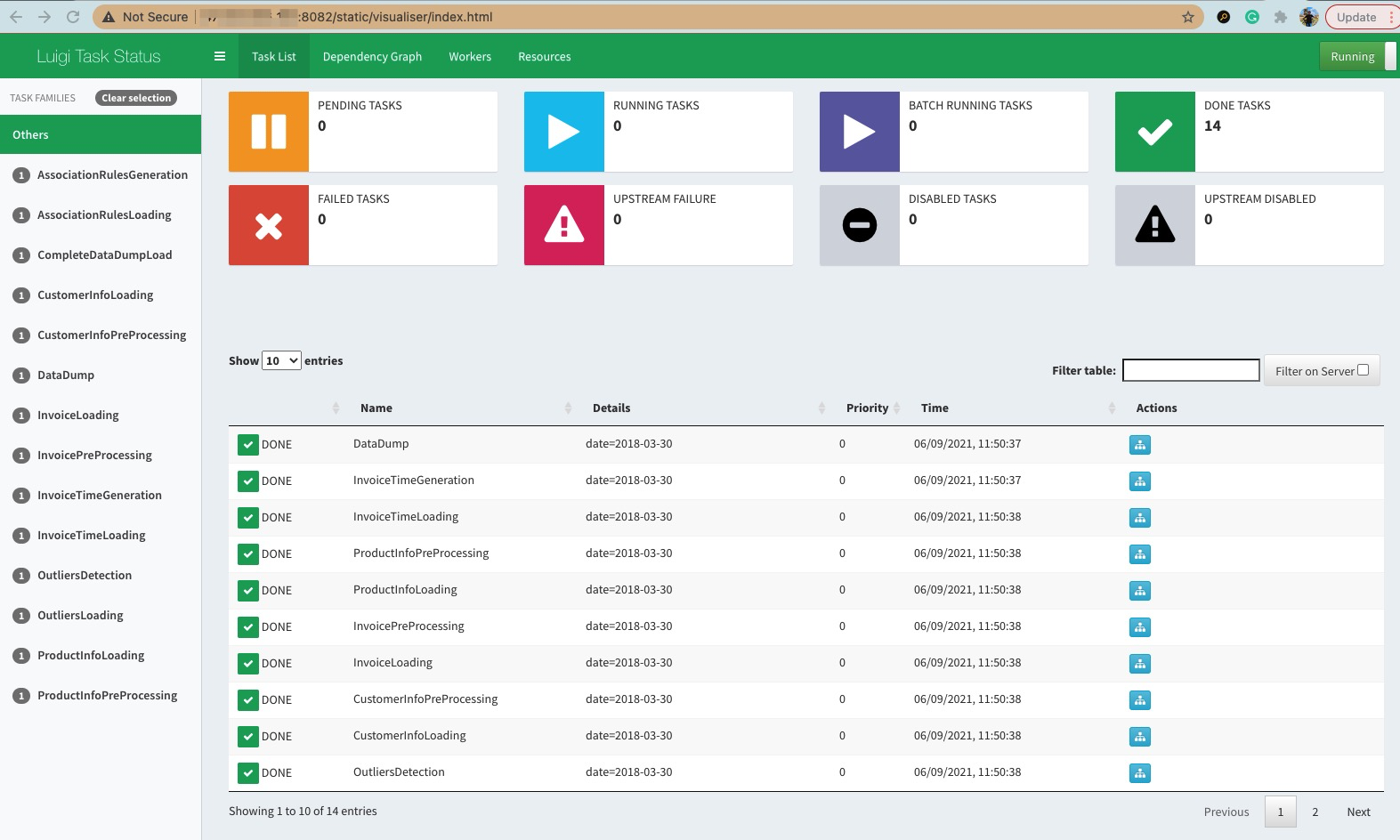
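Because the pipeline is parameterized by `--date`, running it for several days is just a matter of repeating the same command. The sketch below builds one invocation per day, matching the command above. `pipeline_commands` is a hypothetical helper, and whether raw sample data exists for dates other than 2018-03-30 is not confirmed here.

```python
from datetime import date, timedelta


def pipeline_commands(start: date, end: date):
    """Return one Luigi CLI invocation per day from start to end (inclusive)."""
    days = (end - start).days
    return [
        ["luigi", "--module", "data_pipeline", "CompleteDataDumpLoad",
         "--date", (start + timedelta(days=i)).isoformat()]
        for i in range(days + 1)
    ]
```

Each command can be run from `~/opensource_with_apsaradb/luigi_metabase` with `subprocess.run(cmd, env={**os.environ, "PYTHONPATH": "."}, check=True)`.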

Refresh the Luigi web page http://<ECS_EIP>:8082/ to see the data pipeline execution information:

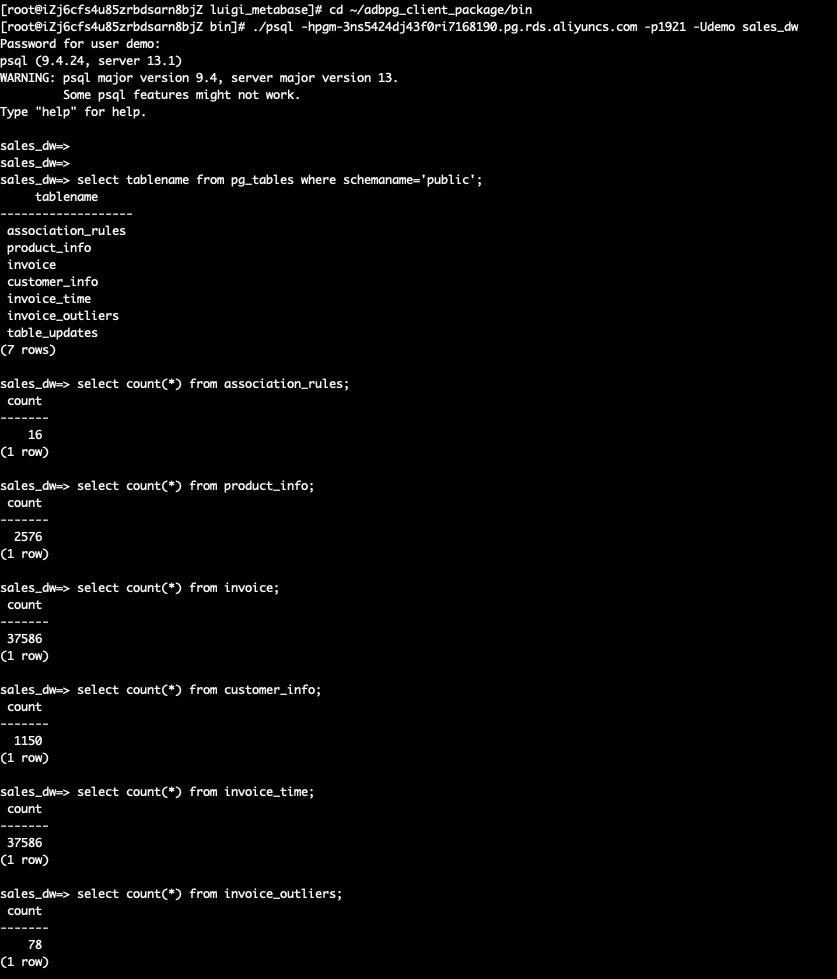

In the CLI window opened in Step 3, run the following commands to verify the data processed by the pipeline.

Replace <rds_pg_url_demo_database> with the corresponding connection string of the demo RDS PostgreSQL instance. When prompted for the password to connect to the sales_dw database, enter N1cetest, which is preset in the Terraform provisioning script in Step 1. If you have changed it, use your own password instead.

cd ~/adbpg_client_package/bin

./psql -h<rds_pg_url_demo_database> -p1921 -Udemo sales_dw

In the PG client, execute the SQL to view the data:

select tablename from pg_tables where schemaname='public';

select count(*) from association_rules;

select count(*) from product_info;

select count(*) from invoice;

select count(*) from customer_info;

select count(*) from invoice_time;

select count(*) from invoice_outliers;

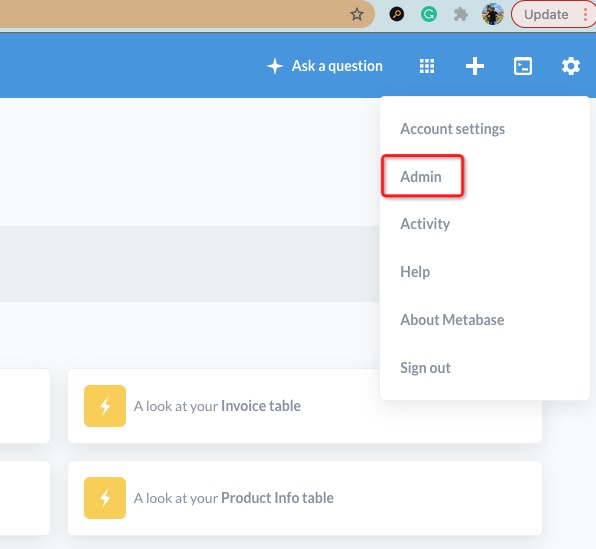

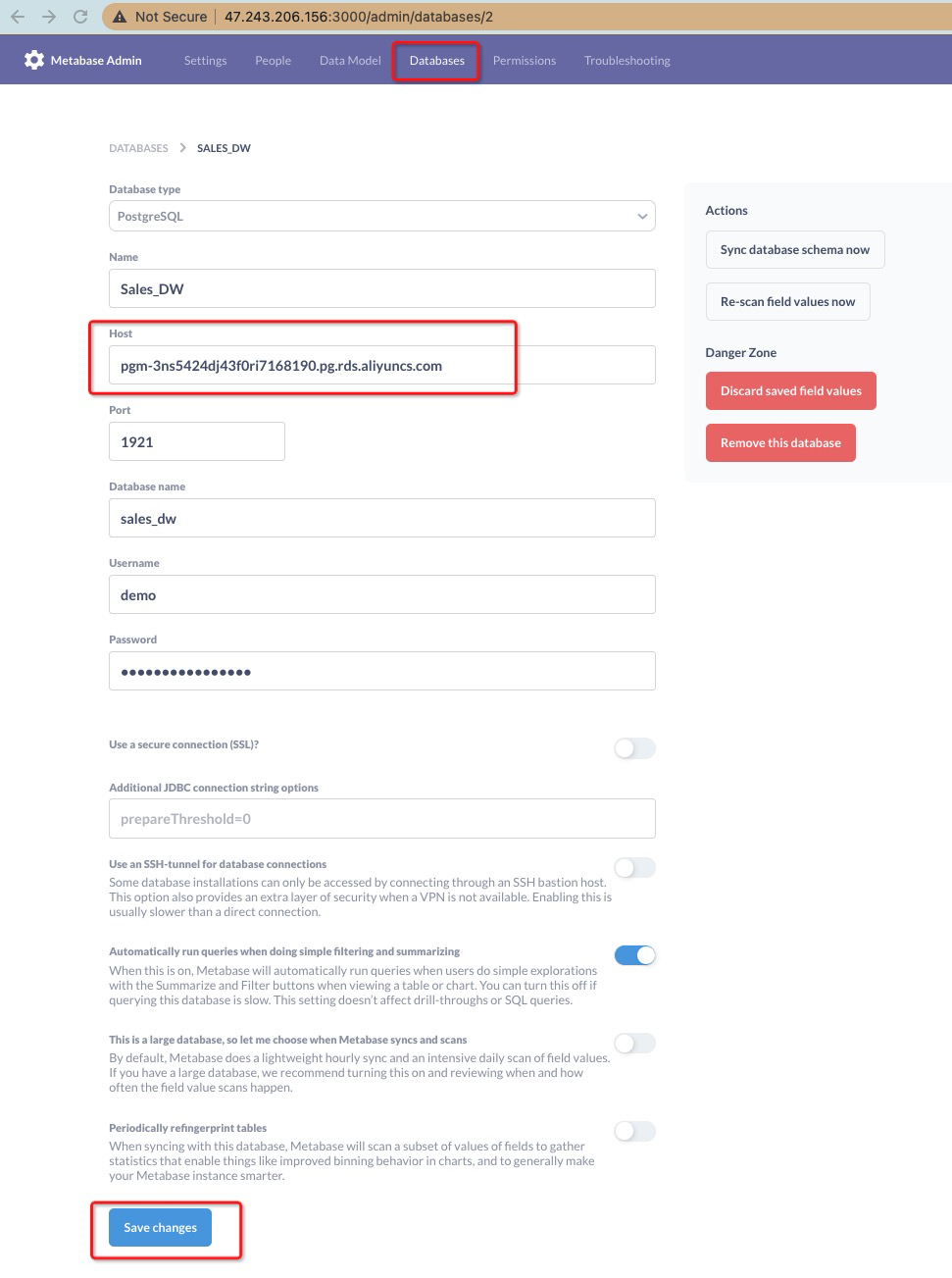

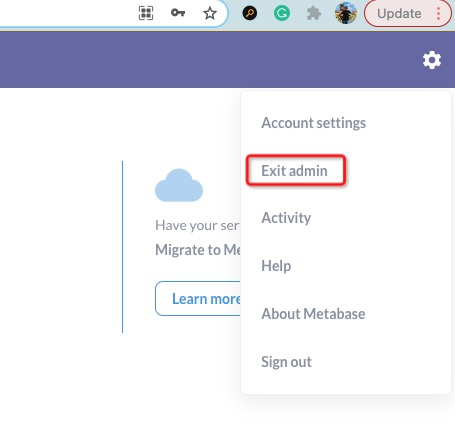
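The same row counts can be collected from a script through any DB-API 2.0 cursor, such as one from `psycopg2.connect(...)`. This makes it easy to flag tables the pipeline left empty. This is a minimal sketch with hypothetical helper names. Table names are embedded directly in the SQL because identifiers cannot be passed as query parameters, so the list is fixed and never taken from user input.

```python
# Tables checked in this step (fixed list; identifiers cannot be query parameters).
TABLES = ("association_rules", "product_info", "invoice",
          "customer_info", "invoice_time", "invoice_outliers")


def row_counts(cursor, tables=TABLES):
    """Run select count(*) on each table with a DB-API cursor; return {table: count}."""
    counts = {}
    for table in tables:
        cursor.execute(f"select count(*) from {table}")
        counts[table] = cursor.fetchone()[0]
    return counts


def empty_tables(counts):
    """Return the tables that have no rows after the pipeline run."""
    return [table for table, n in counts.items() if n == 0]
```

With psycopg2, for example: `with psycopg2.connect(host="<rds_pg_url_demo_database>", port=1921, user="demo", dbname="sales_dw", password=password) as conn, conn.cursor() as cur: print(empty_tables(row_counts(cur)))`.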

Then, go to the database settings in the Metabase Admin panel and update the target database to the demo RDS PostgreSQL database <rds_pg_url_demo_database>.

This tutorial is adapted from a data warehouse project that uses Luigi, PostgreSQL, and Metabase, modified to run on Alibaba Cloud. The original source code contained some errors, which I have fixed so that it works on Alibaba Cloud.
