By Sunny Jovita, Solution Architect, Alibaba Cloud Indonesia

Artificial Intelligence (AI) has become a global phenomenon, reshaping industries and redefining what’s possible. As AI continues to dominate headlines, it’s crucial to understand what sets different types of AI apart and how they are transforming the way we work and live. The evolution of AI from rule-based systems to models capable of generating human-like content has been nothing short of revolutionary. Let’s dive deeper into this fascinating journey.

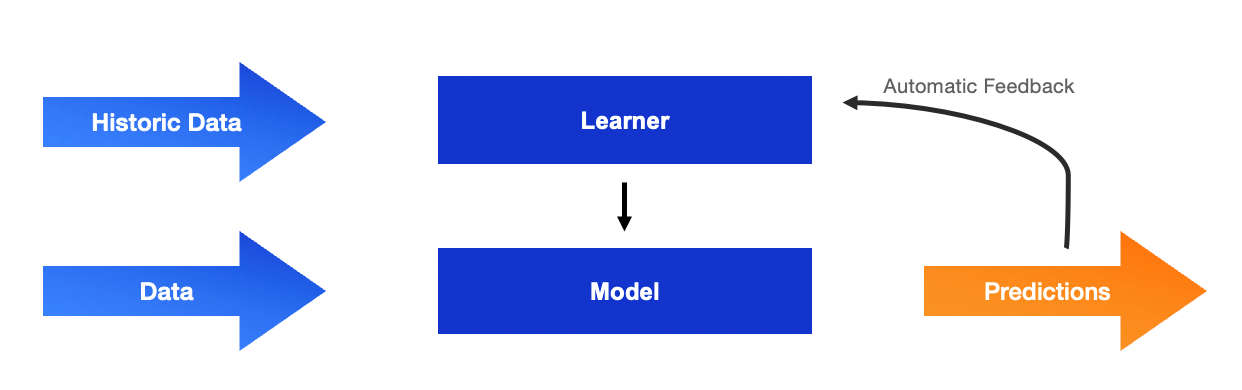

At its core, traditional AI refers to systems designed to perform specific tasks intelligently by responding to a particular set of inputs. These systems are capable of learning from data and making decisions or predictions based on that information. However, their scope is limited: they excel within predefined boundaries but lack the ability to think creatively or go beyond their programmed rules.

To better understand traditional AI, imagine playing a game of chess against a computer. The computer knows all the rules of the game and can predict your moves while selecting its own strategies from a predefined set of options. It doesn’t invent new ways to play chess; instead, it operates within the constraints of the game, applying logic and strategy to outsmart you. This is the essence of traditional AI: it’s like a master strategist that is highly intelligent within a specific domain but unable to step outside its defined parameters.
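To make the idea of "selecting from a predefined set of options" concrete, here is a minimal sketch of a rule-based move chooser. It is not how a real chess engine works; the piece values, the move format, and the `choose_move` helper are all hypothetical and exist only for illustration.

```python
# Hypothetical, hand-written piece values. Real chess engines are far more elaborate.
PIECE_VALUES = {"pawn": 1, "knight": 3, "bishop": 3, "rook": 5, "queen": 9}


def choose_move(legal_moves):
    """Pick the move that captures the most valuable piece.

    The rule is fixed in advance: the function can only rank the moves it is
    given and never invents a new kind of move or a new rule.
    """
    def score(move):
        # Moves that capture nothing score 0.
        return PIECE_VALUES.get(move.get("captures"), 0)

    return max(legal_moves, key=score)


# Illustrative move list (an assumed format, not a real chess library's API).
moves = [
    {"name": "e4", "captures": None},
    {"name": "Nxd5", "captures": "pawn"},
    {"name": "Bxf7", "captures": "rook"},
]
best = choose_move(moves)
print(best["name"])
```

The behavior is entirely determined by the scoring rule. Changing what the system does means editing that rule by hand, which is the rigidity the paragraph describes.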

Other examples of traditional AI include:

These systems have been meticulously trained to execute particular tasks efficiently and accurately. While they don’t create anything new, they do what they’re designed to do exceptionally well, making them indispensable in industries ranging from healthcare to finance.

However, traditional AI has its limitations. It struggles with unstructured data (like free-form text, images, or videos) and requires extensive manual effort to adapt to new scenarios. This rigidity highlights the need for more flexible and creative approaches, which is something Generative AI aims to address.

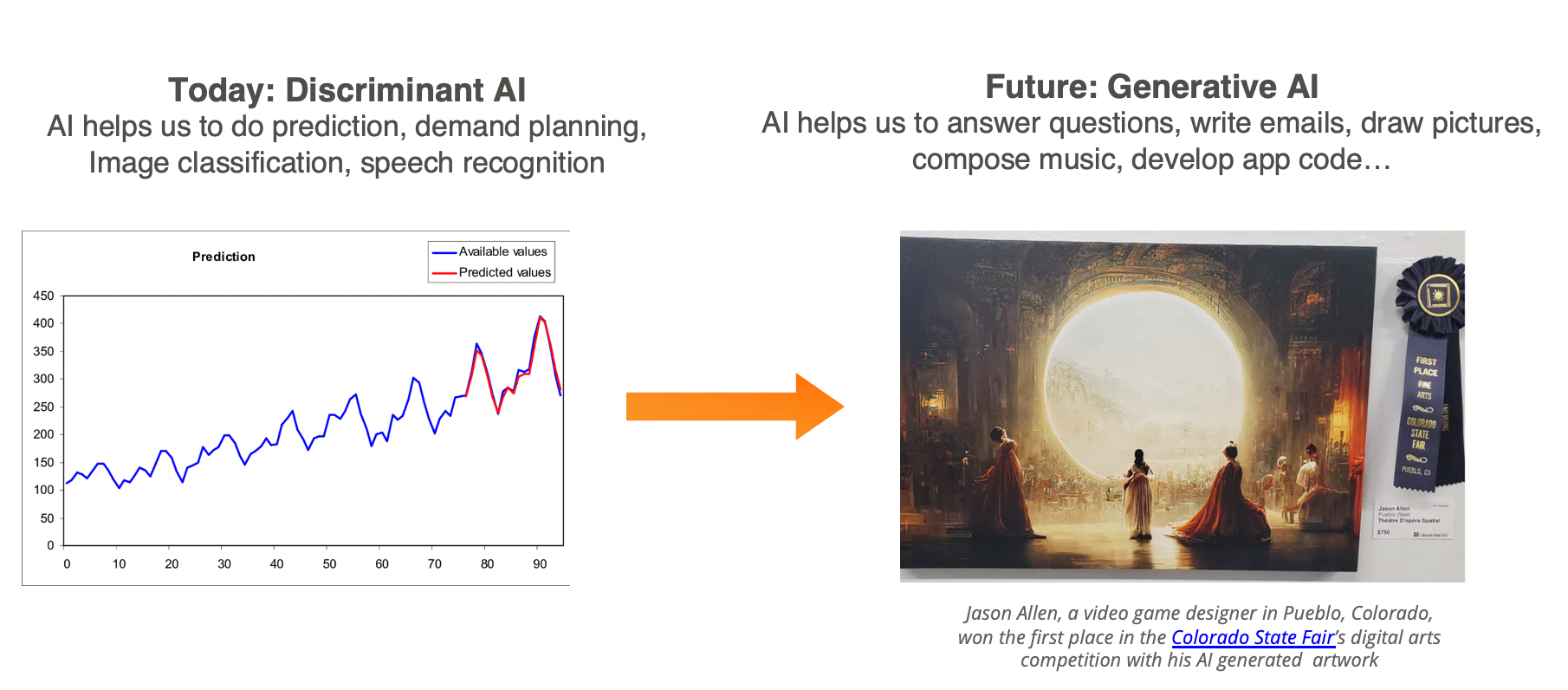

Generative AI represents the next frontier in artificial intelligence, where machines are capable of creating something entirely new based on the information they’ve been given. Unlike traditional AI, which operates within predefined rules, Generative AI is like an imaginative friend who can generate original, creative content. Today’s generative AI models can produce not only text but also images, music, and even computer code.

In essence, Generative AI breaks free from the constraints of traditional AI by introducing creativity and innovation into the realm of machine learning. This breakthrough has been made possible through advancements in deep learning, particularly Large Language Models (LLMs) like Qwen, GPT, LLaMA, Gemini, and others.

As AI becomes a ubiquitous topic of discussion worldwide, from tech conferences to policy debates, it’s clear that we’re witnessing a transformative era.

But amidst all the hype, it’s essential to differentiate between various forms of AI and their respective strengths. Not every problem requires the sophistication of Generative AI; sometimes, traditional AI suffices. Conversely, relying solely on older methods may stifle progress in fields demanding greater flexibility and creativity.

Understanding these distinctions empowers organizations to adopt the right tools for the job. For instance:

By combining the best of both worlds, businesses can achieve optimal results without overcomplicating their workflows.

Despite their remarkable capabilities, Large Language Models (LLMs) have inherent limitations that can impact their performance in real-world applications. These pain points include:

1. Knowledge Cutoff Dates:

LLMs are trained on data up to a certain point in time. After training, they lack awareness of new information or updates. For example, if an LLM was trained before a major event or discovery, it won’t know about it unless explicitly retrained.

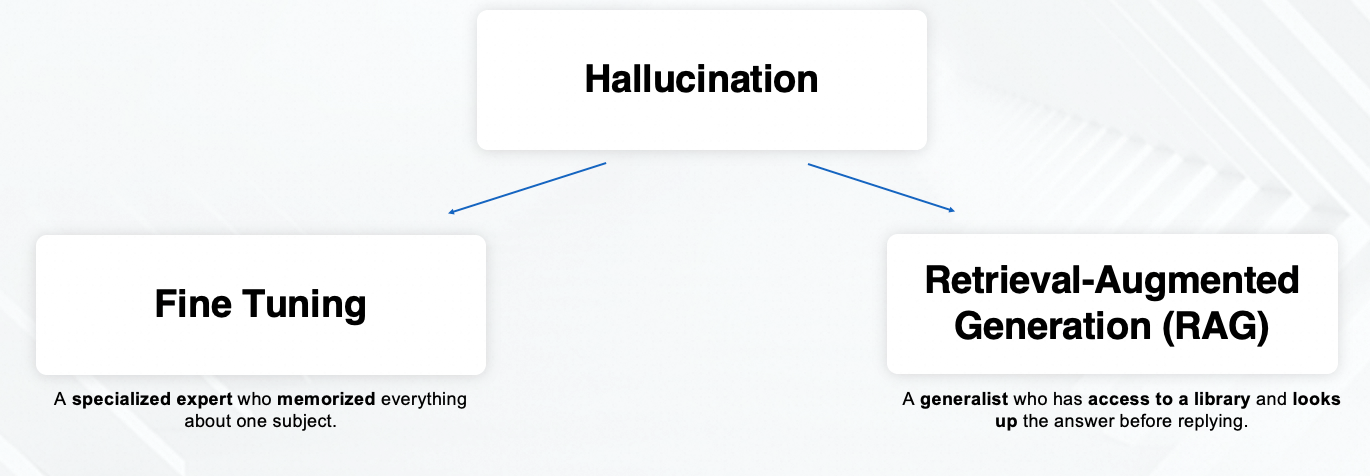

2. Hallucination:

LLMs sometimes generate incorrect or fabricated information, even when presented with valid inputs. This phenomenon, known as “hallucination”, occurs because the model may not have enough context or accurate data to produce reliable outputs.

3. Hard to Update:

Retraining an LLM is computationally expensive and time-consuming. It’s impractical to retrain the model every time new information becomes available or when domain-specific knowledge changes.

These limitations highlight the need for solutions that allow LLMs to access external knowledge dynamically, ensuring they remain accurate, up-to-date, and reliable.

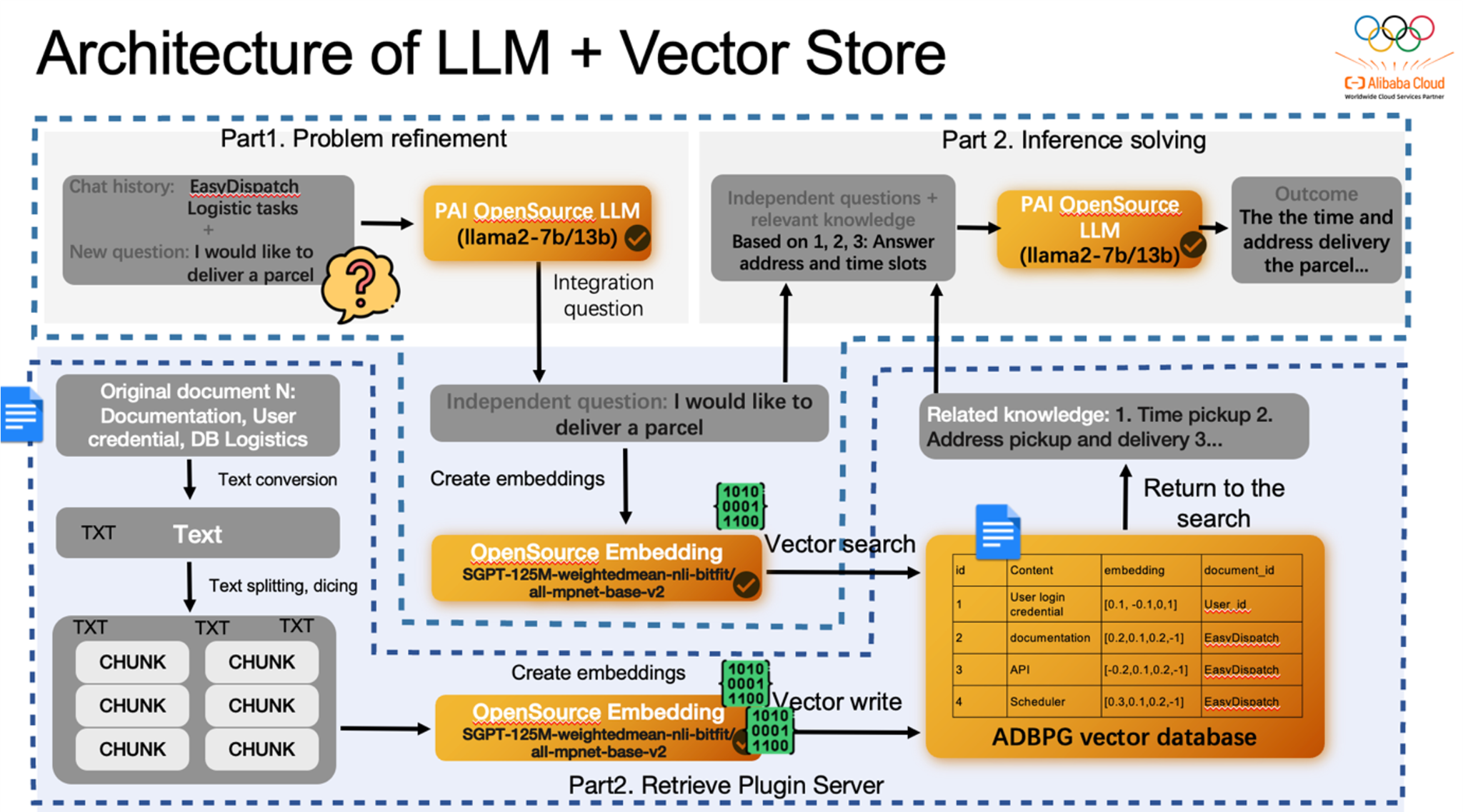

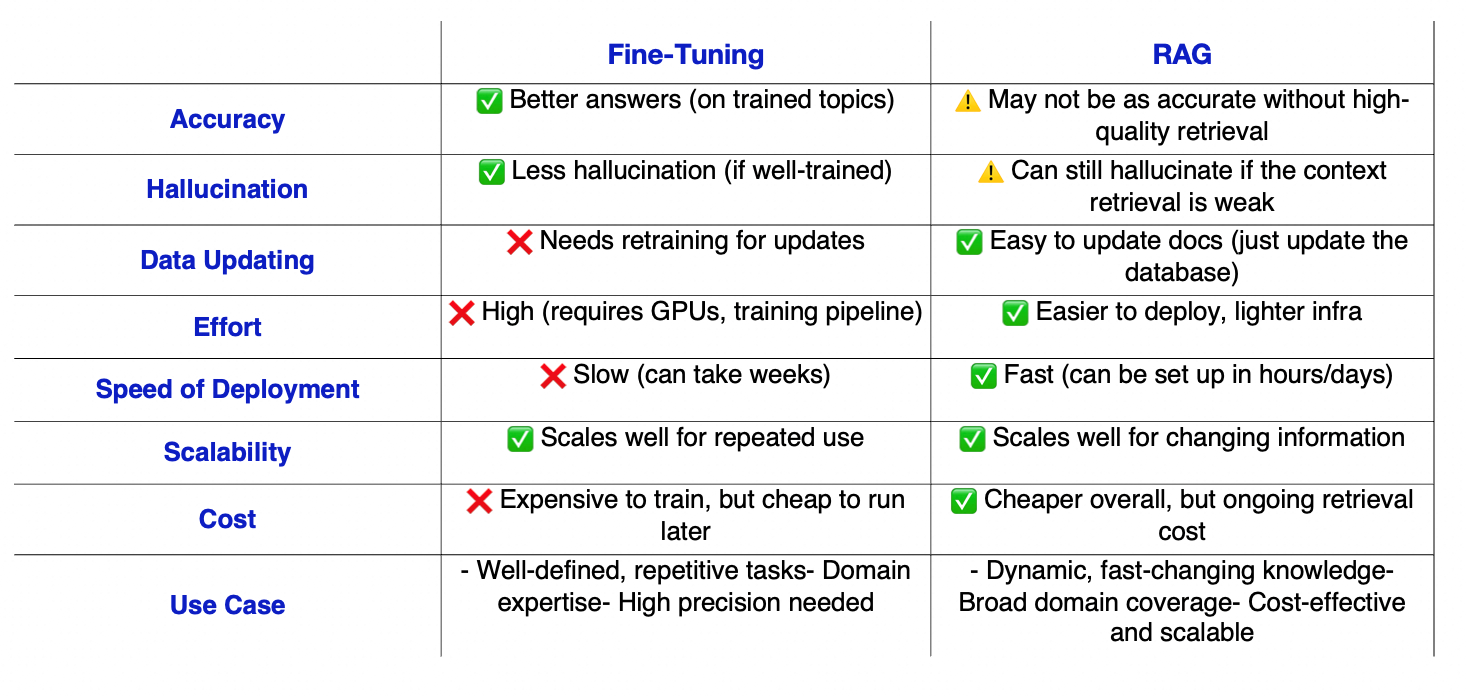

To tackle these challenges, two key approaches have emerged: Fine-Tuning and Retrieval-Augmented Generation (RAG). Each solution addresses specific pain points, and they can also be combined for optimal results.

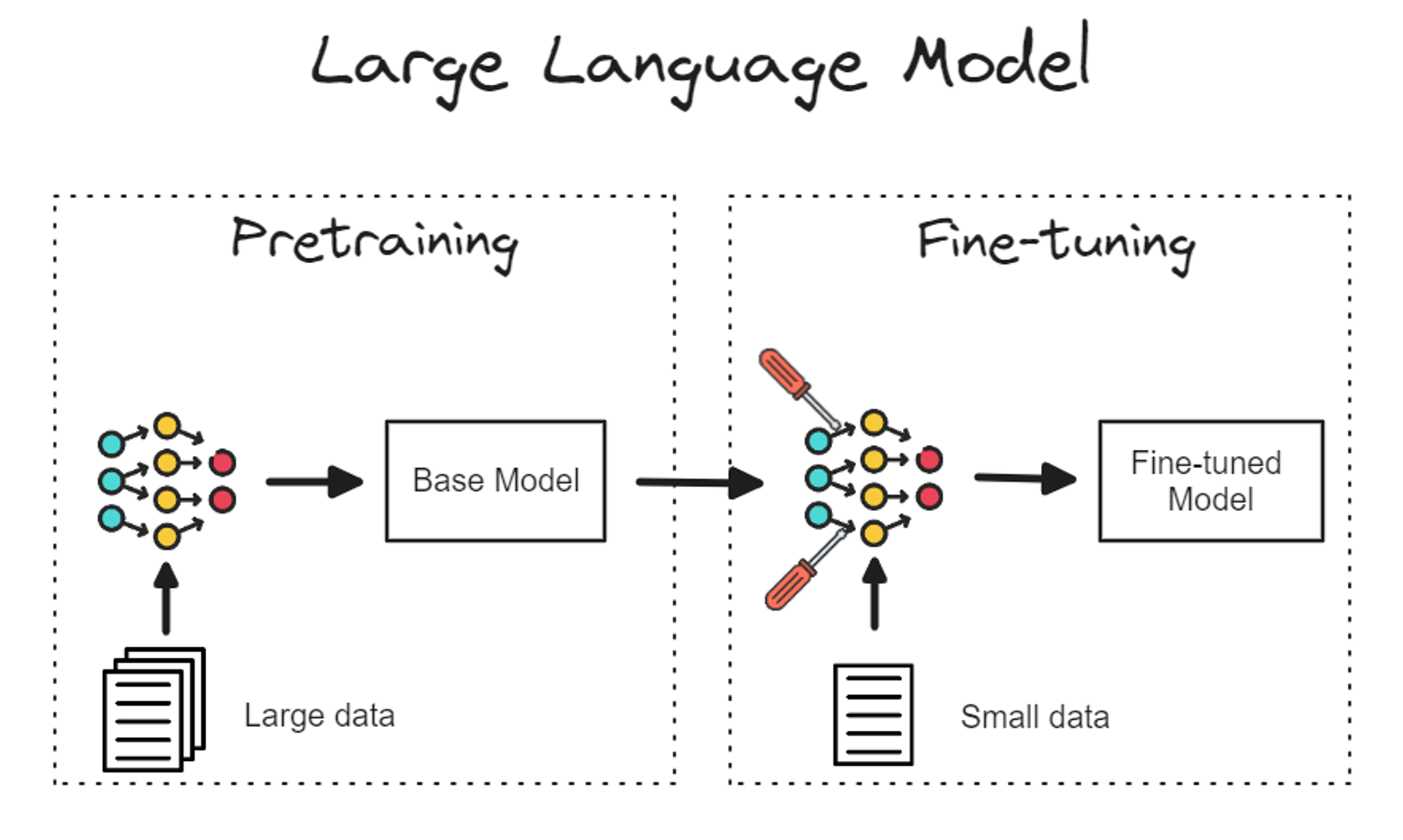

Fine-Tuning involves further training the base model on domain-specific data to improve its understanding and reduce hallucinations. Specializing the model for a particular task or domain makes it better at generating accurate responses in that domain.
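Much of the practical work in fine-tuning is preparing the domain-specific data. The sketch below converts question/answer pairs into chat-style JSONL records. This "messages" layout is a common convention for supervised fine-tuning, but the exact schema depends on the training tool, so treat the field names as an assumption. The sample data and helper names are hypothetical.

```python
import json

# Hypothetical domain Q&A pairs; in practice these come from curated internal data.
examples = [
    {"question": "What is the refund window for annual plans?",
     "answer": "Annual plans can be refunded within 30 days of purchase."},
    {"question": "How often is customer data backed up?",
     "answer": "Customer data is backed up once per day."},
]


def to_chat_record(example, system_prompt="You are a helpful support assistant."):
    """Wrap one Q&A pair as a system/user/assistant conversation."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": example["question"]},
            {"role": "assistant", "content": example["answer"]},
        ]
    }


def to_jsonl(items):
    """Serialize the records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_record(e), ensure_ascii=False) for e in items)


jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

The resulting text can be written to a file and handed to whichever fine-tuning tool is in use, after checking the format that tool expects.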

RAG reduces hallucinations by grounding the model’s responses in factual information retrieved from external sources, such as a knowledge base. Instead of relying solely on its internal knowledge, the model consults up-to-date data to ensure accuracy.
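The retrieval step can be illustrated with a small, self-contained sketch. It ranks knowledge-base entries by bag-of-words cosine similarity and places the best match into the prompt. Production systems typically use learned embeddings and a vector database instead of word counts; the knowledge-base content and the function names here are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would be an external document store.
KNOWLEDGE_BASE = [
    "The refund window for annual plans is 30 days from purchase.",
    "Support is available by email on weekdays from 9:00 to 17:00.",
    "Data is backed up daily and retained for 14 days.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, docs, top_k=1):
    """Return the top_k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:top_k]


def build_prompt(query, docs):
    """Ground the question in retrieved context before it is sent to the model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return (
        "Answer using only the context below. If the context is insufficient, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


prompt = build_prompt("How long is the refund window?", KNOWLEDGE_BASE)
print(prompt)
```

Updating the system's knowledge then means editing `KNOWLEDGE_BASE`, not retraining the model.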

Benefits:

Challenges:

Benefits:

Use Cases:

While Fine-Tuning and RAG address different aspects of Generative AI’s limitations, they can be used together to achieve the best of both worlds. Together, they:

1. Fine-Tune the Base Model:

2. Add RAG for Real-Time Knowledge:

3. Inference:
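The three steps above can be sketched as a single inference function that composes a retriever with a fine-tuned model. Both components below are toy stand-ins, labeled as such. A real pipeline would call a vector store and a deployed model endpoint, and none of the names here come from a specific library.

```python
from typing import Callable, List


def answer(question: str,
           retriever: Callable[[str], List[str]],
           generator: Callable[[str], str]) -> str:
    """Retrieve fresh context, then let the (fine-tuned) model generate the answer."""
    passages = retriever(question)
    context = "\n".join(passages) if passages else "(no relevant passages found)"
    prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    return generator(prompt)


def toy_retriever(question: str) -> List[str]:
    """Stand-in for a knowledge-base lookup (hypothetical data)."""
    facts = {"price": "The Pro plan costs 20 USD per month as of this week."}
    return [text for key, text in facts.items() if key in question.lower()]


def toy_fine_tuned_model(prompt: str) -> str:
    """Stand-in for a deployed fine-tuned model; it just echoes the first context line."""
    return "Stub answer based on: " + prompt.splitlines()[1]


result = answer("What is the price of the Pro plan?", toy_retriever, toy_fine_tuned_model)
print(result)
```

Keeping the retriever and the generator as separate callables means either one can be swapped or updated without touching the other.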

Alibaba Cloud provides a comprehensive suite of tools and platforms to help businesses implement Fine-Tuning and Retrieval-Augmented Generation (RAG) effectively. These solutions are designed to streamline the development, deployment, and optimization of Generative AI pipelines, ensuring scalability, flexibility, and high performance.

Alibaba Cloud’s Platform for AI (PAI) offers a robust ecosystem for fine-tuning Large Language Models (LLMs) and other AI models. Key components include:

1. Model Development

PAI-Designer:

PAI-DSW:

LangStudio:

2. Model Training

3. Model Deployment

PAI-EAS:

For implementing Retrieval-Augmented Generation (RAG), Alibaba Cloud offers Model Studio, a powerful platform that streamlines the creation and deployment of RAG-based systems. Key features include:

Vector Search Integration:

End-to-End RAG Workflow Support:

Scalability and Flexibility:
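Before documents can be indexed for vector search, a RAG workflow usually splits them into smaller, overlapping chunks. The sketch below shows that general preprocessing step. It does not describe how Model Studio implements it, and the function name and default sizes are assumptions chosen for illustration.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into word-based chunks, each sharing `overlap` words with the previous one."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("chunk_size must be positive and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(12))
chunks = chunk_words(sample, chunk_size=5, overlap=2)
for chunk in chunks:
    print(chunk)
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of storing some words twice.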

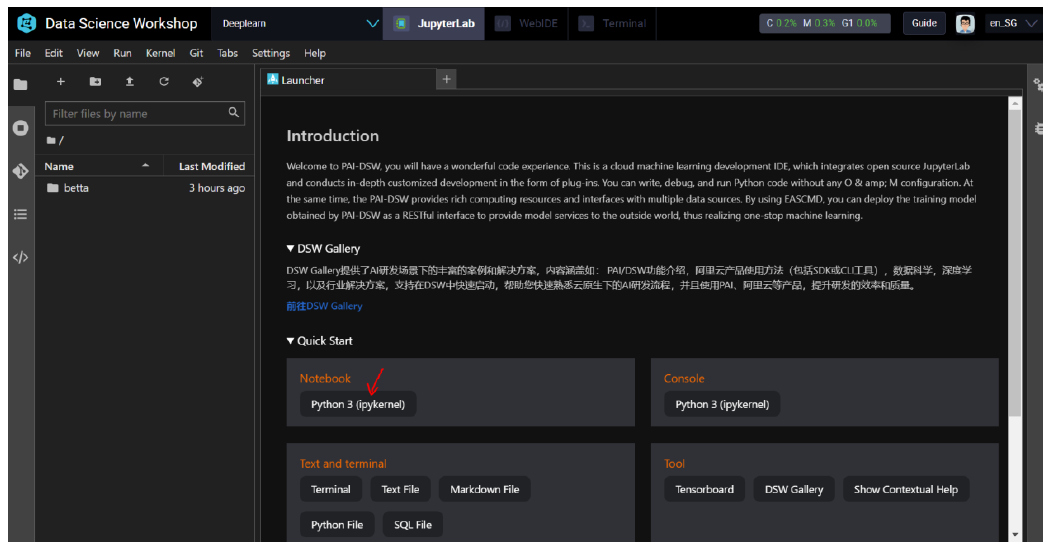

PAI-DSW stands out as a versatile tool for developers and researchers working on Generative AI pipelines. Its Jupyter-style interface makes it easy to:

Whether you’re fine-tuning a model or designing a RAG pipeline, PAI-DSW provides the flexibility and control needed to accelerate development.

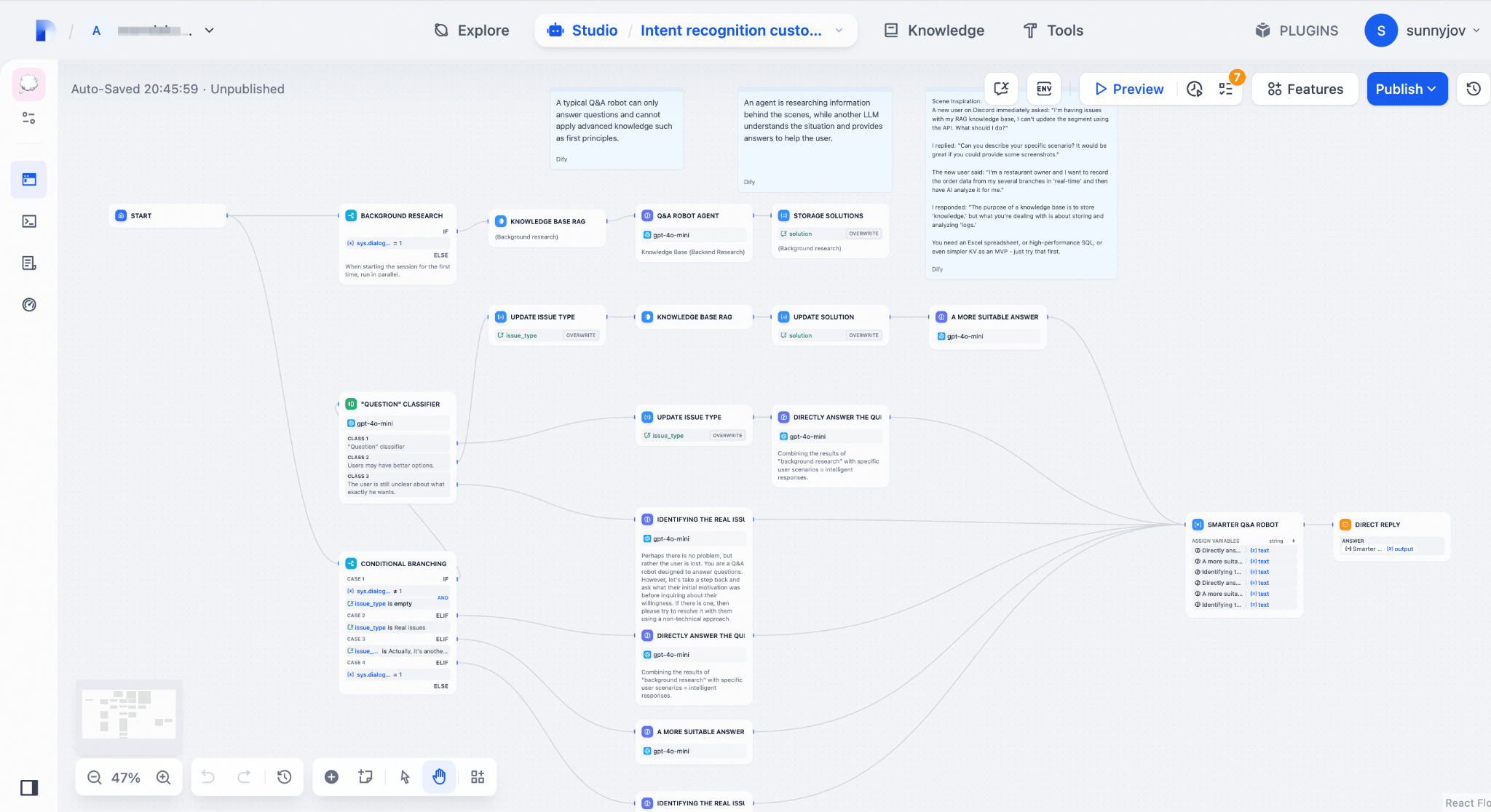

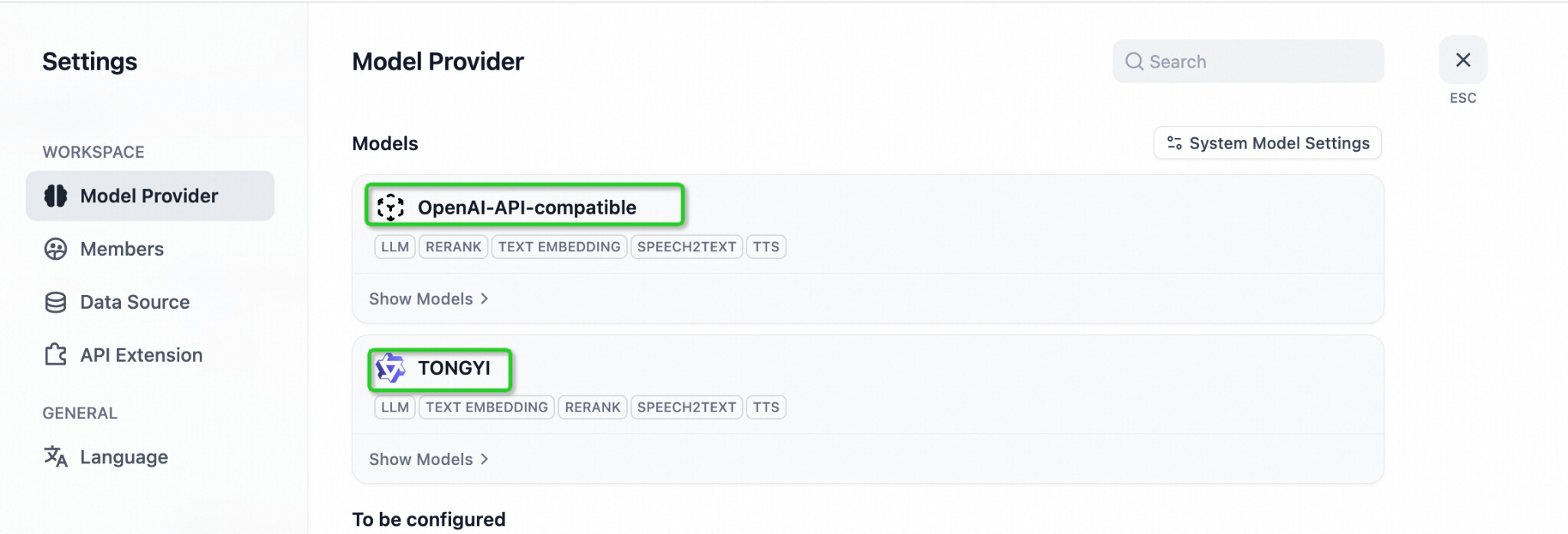

To further enhance and accelerate model capabilities, Dify now supports popular open-source large language models like Qwen. Users can easily access these cutting-edge Qwen models by entering API keys (from Model Studio) on Dify, and build high-performance AI applications in minutes.
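For readers who want to see what a Model Studio API key is used for outside Dify, here is a hedged sketch that builds (but does not send) a chat request to a Qwen model. The endpoint URL, the `qwen-plus` model name, and the `DASHSCOPE_API_KEY` variable name are assumptions based on Model Studio’s OpenAI-compatible mode and should be checked against the current Model Studio documentation.

```python
import json
import os
import urllib.request

# Assumptions to verify against the Model Studio documentation:
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"  # assumed endpoint
MODEL_NAME = "qwen-plus"  # assumed model identifier


def build_chat_request(question, api_key):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# The environment variable name is an assumption; the fallback is a placeholder, not a real key.
api_key = os.environ.get("DASHSCOPE_API_KEY", "sk-placeholder")
request = build_chat_request("Summarize what RAG is in one sentence.", api_key)
print(request.full_url)
# Sending is left out on purpose; urllib.request.urlopen(request) would perform the call.
```

Dify handles this kind of request internally once the key is entered, so the sketch is only meant to show what the key authorizes.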

Generative AI is powerful, but real-world adoption needs reliability. Fine-tuning and RAG offer complementary solutions to address hallucination, data freshness, and domain expertise.

Alibaba Cloud provides a full-stack ecosystem, from model training to deployment to retrieval, so you can build GenAI applications that actually work in production.
