Container-based virtualization is a type of virtualization technology. Compared with a virtual machine (VM), a container is lighter and easier to deploy. Docker is currently the mainstream container engine. It supports platforms such as Linux and Windows and works with mainstream orchestration systems such as Kubernetes (K8S) and Swarm; Rocket (rkt) is an alternative container engine. Common container networks support several models, such as Bridge, Overlay, Host, and user-defined networks. Systems such as K8S rely on Container Network Interface (CNI) plug-ins for network management; commonly used CNI plug-ins include Calico and Flannel.

This article introduces the basics of container networks and then describes an ECS container network built on Alibaba Cloud's Elastic Network Interface (ENI) technology, which offers high performance, easy deployment and maintenance, strong isolation, and high security.

This section introduces the working principle of traditional container networks.

CNI is an open source project managed by the Cloud Native Computing Foundation (CNCF). It defines a specification and provides libraries that vendors use to develop plug-ins for Linux container network management. Well-known CNI plug-ins include Calico and Flannel. Calico uses Felix and BIRD to implement protocols such as BGP, stores routing information in a distributed datastore, and builds a large Layer 3 network, so that containers on different hosts and different subnets can communicate without sending ARP requests.
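To make the CNI contract concrete, the sketch below shows what a container runtime hands to a plug-in: a JSON network configuration on stdin and its parameters in `CNI_*` environment variables. It assumes the CNI reference plug-ins `bridge` and `host-local` are installed in /opt/cni/bin; the network name, bridge name, and subnet are illustrative and do not come from the article. The functions only print text, so they are safe to run anywhere.

```shell
#!/bin/sh
# Sketch of the CNI calling convention. Assumptions (not from the article):
# the reference "bridge" and "host-local" plug-ins are in /opt/cni/bin,
# and the network name, bridge name and subnet are illustrative.

# cni_bridge_conf NAME SUBNET: print a single-plug-in CNI network config.
cni_bridge_conf() {
  cat <<EOF
{
  "cniVersion": "1.0.0",
  "name": "$1",
  "type": "bridge",
  "bridge": "cni0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "subnet": "$2",
    "routes": [ { "dst": "0.0.0.0/0" } ]
  }
}
EOF
}

# cni_add_cmd CONTAINER_ID NETNS_PATH IFNAME CONF_FILE: print the command a
# runtime would run for ADD. Parameters travel in CNI_* environment
# variables; the network config is fed to the plug-in on stdin.
cni_add_cmd() {
  printf 'CNI_COMMAND=ADD CNI_CONTAINERID=%s CNI_NETNS=%s CNI_IFNAME=%s CNI_PATH=/opt/cni/bin /opt/cni/bin/bridge < %s\n' \
    "$1" "$2" "$3" "$4"
}
```

For example, `cni_bridge_conf mynet 10.22.0.0/16 > net.conf` followed by `cni_add_cmd <container-id> /var/run/netns/2017 eth0 net.conf | sudo sh` would ask the bridge plug-in to wire the container into the network; the plug-in reports its result as JSON on stdout.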

Flannel implements a container overlay network based on tunneling technologies such as VXLAN. CNI plug-ins such as Calico and Flannel use VETH pairs to configure the container network: a pair of VETH devices is created, with one end placed in the container and the other end left in the VM. The VM then forwards container traffic using the kernel network stack (overlay networks), iptables (the Calico plug-in), or a Linux bridge. (When the container network reaches the vSwitch through a bridge inside the ECS instance, the VPC can only see the ECS instance, and the container network is a private network behind the bridge.)
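The VETH-plus-bridge wiring described above can be sketched as the `ip` commands below. This is a minimal illustration, assuming the container's namespace is visible to `ip netns` under a name (here 2017) and that the bridge already exists in the VM (for example, created with `ip link add name br0 type bridge`); all interface names are hypothetical. The function prints the commands instead of running them, so they can be reviewed and then piped to `sudo sh`. IP address assignment, normally done by a CNI IPAM plug-in, is left out.

```shell
#!/bin/sh
# veth_bridge_plan NETNS BRIDGE HOST_IF PEER_IF
# Prints (does not run) the commands that create a VETH pair, move one end
# into the container's network namespace as eth0, and attach the other end
# to a Linux bridge in the VM. Assumes NETNS is a name known to "ip netns"
# and BRIDGE already exists; all names are hypothetical.
veth_bridge_plan() {
  echo "ip link add $3 type veth peer name $4"
  echo "ip link set $4 netns $1"
  echo "ip netns exec $1 ip link set $4 name eth0"
  echo "ip netns exec $1 ip link set eth0 up"
  echo "ip link set $3 master $2"
  echo "ip link set $3 up"
}
```

For example, `veth_bridge_plan 2017 br0 veth-h veth-c | sudo sh -x` would create the pair and wire it up.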

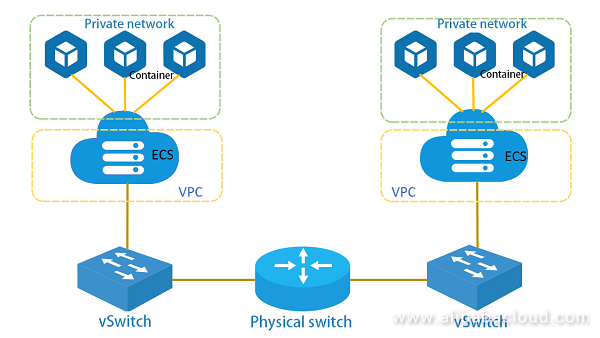

The figure above shows the workflow of the current mainstream container network, which differs from the multi-NIC container network in the following aspects:

In the network system as a whole, the VM needs the CNI plug-ins of an orchestration system such as K8S to configure the network inside it. The vSwitch supports protocols such as OpenFlow and NETCONF and is managed and configured by a Software Defined Networking (SDN) controller. Mainstream ToR switches use NETCONF for remote configuration, and SDN physical switches that support OpenFlow are also available on the market.

Two different network control systems are therefore needed to manage the entire network: the CNI plug-ins inside the VM and the SDN controller outside it. The configuration is relatively complicated, and factors such as the implementation mechanism cause performance bottlenecks. In addition, security policies applied on the host cannot be applied to container applications.

When a VM has multiple network interface cards (NICs) that can be hot-plugged dynamically, these NICs can be used by the container network, so that it no longer needs Linux technologies such as VETH and Bridge. Packets are forwarded directly to the virtual switch (vSwitch) on the host, and this simplified path improves network performance.

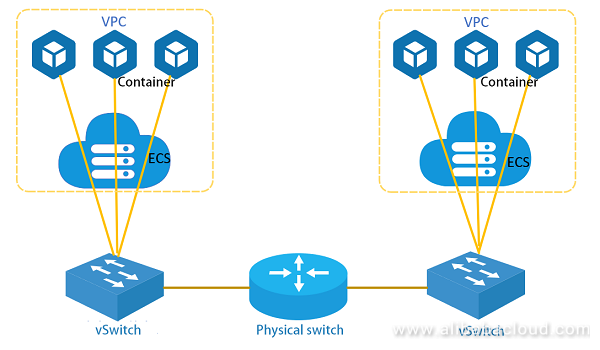

As shown in the figure, a vSwitch runs on the host and forwards traffic for both VMs and containers, and multiple virtual NICs are connected to it. When a container starts on a VM, a virtual NIC is dynamically attached on the host to the VM where the container runs. Inside the VM, the NIC is then moved into the container's network namespace, so the container's traffic goes through this NIC directly to the vSwitch on the host; that is, the container network connects to the vSwitch directly.

The vSwitch applies rules such as ACL, QoS, and session rules when it forwards traffic. When a container on a VM on host 1 accesses a container on a VM on host 2, the traffic generally goes through the following process:

Compared with traditional solutions where containers are running on VMs, this solution is characterized by high performance, easy management, and strong isolation.

The multi-NIC solution lets containers access the VPC network plane directly, so each container can use the full set of VPC network functions, including EIP, SLB, Anti-DDoS Pro, security groups, HAVIP, NAT, and custom routes.

A container that accesses the VPC network plane directly through the multi-NIC solution can also use advanced VPC functions such as VPC peering. Cross-VPC ENIs can be used to access cloud products, and NICs from different VPCs can be assigned to the same container, so the container can work across multiple VPCs.

In the multi-NIC solution, container traffic does not need to be forwarded through iptables or a bridge in the VM; it goes directly to the vSwitch on the host. This removes the packet forwarding logic from the VM and shortens the data copy path, which greatly improves container network performance. The following table lists basic performance data for the two solutions in a single test.

| Solution | Single-thread (Mbps) | Single-thread (pps) | Multi-thread (pps) | TBase test 1 KB (QPS) |
| --- | --- | --- | --- | --- |
| Linux Bridge | 32.867 | 295,980 | 2,341,669 | 363,300 |
| Multi-NIC solution | 51.389 | 469,865 | 3,851,922 | 470,900 |
| Performance improvement | 56.35% | 58.7% | 64.49% | 29.6% |
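The improvement row is the relative gain of the multi-NIC solution over Linux Bridge, (new - old) / old x 100. A small awk helper reproduces it as a quick arithmetic check; it is an illustration, not part of the original test tooling, and values must be passed without thousands separators.

```shell
#!/bin/sh
# pct_gain OLD NEW: relative improvement of NEW over OLD, in percent,
# i.e. (NEW - OLD) / OLD * 100, printed with two decimals.
# Pass plain numbers without thousands separators (2341669, not 2,341,669).
pct_gain() {
  awk -v old="$1" -v new="$2" 'BEGIN { printf "%.2f\n", (new - old) / old * 100 }'
}

# The four columns of the table: Linux Bridge vs. multi-NIC.
pct_gain 32.867 51.389       # single-thread Mbps, prints 56.35
pct_gain 295980 469865       # single-thread pps,  prints 58.75
pct_gain 2341669 3851922     # multi-thread pps,   prints 64.49
pct_gain 363300 470900       # TBase QPS,          prints 29.62
```

The table rounds the second and fourth values to one decimal place (58.7% and 29.6%).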

In the traditional Bridge solution, all container instances are on the same large Layer 2 network, which leads to flooding of broadcast, multicast, and unknown unicast traffic. The direct connection provided by the multi-NIC solution, together with the ACL and security group functions of the ECS network, ensures effective security isolation. Container traffic cannot be seen even from the container's management plane, and security rules are applied at the container level instead of the VM level.

When the management system dispatches a container to a VM, the control system creates a NIC on the vSwitch of the host where the VM runs, hot-plugs the NIC into the VM, and moves it into the container's network namespace inside the VM. After the vSwitch's traffic forwarding rules are configured and an HAVIP is configured on the xGW, external applications and clients can access the services provided by the container.

The multi-NIC solution also makes container migration easier. Take migrating a container to another VM on the same host as an example. Traditionally, the K8S kubelet migrates the application, reconfigures the network through CNI plug-ins, manages the container IP and VIP, and reconfigures how the container application is accessed. This process is complicated, but the multi-NIC solution simplifies it: after the container is dispatched to the new VM, the NIC bound to the old container is unplugged from the old VM, plugged into the new VM, and bound to the container's network namespace there. The new container can communicate normally without any network reconfiguration.
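A host-side sketch of this migration step follows. It assumes the VMs are managed by libvirt and that the host vSwitch is exposed to libvirt as a bridge; the article does not describe its production control system at this level, so the bridge name, VM names, and MAC address are hypothetical. Re-attaching with the same MAC address keeps the NIC's identity unchanged in this sketch, and `ifname_by_mac` then finds the interface name inside the new VM by reading sysfs, after which the NIC can be moved into the container's namespace with `ip link set dev IFNAME netns PID`.

```shell
#!/bin/sh
# Hypothetical sketch: move a container's NIC from one libvirt VM to another.
# Assumes the host vSwitch is exposed to libvirt as the bridge BRIDGE.

# nic_migrate_plan OLD_VM NEW_VM MAC BRIDGE: print the host-side commands.
# Re-using the MAC address keeps the NIC's identity unchanged.
nic_migrate_plan() {
  echo "virsh detach-interface --domain $1 --type bridge --mac $3 --live"
  echo "virsh attach-interface --domain $2 --type bridge --source $4 --model virtio --mac $3 --live"
}

# ifname_by_mac MAC [SYSFS_NET_DIR]: run inside the new VM to find which
# interface carries MAC. sysfs stores MAC addresses in lowercase.
ifname_by_mac() {
  want=$(printf '%s' "$1" | tr 'A-F' 'a-f')
  for f in "${2:-/sys/class/net}"/*/address; do
    [ -r "$f" ] || continue
    if [ "$(cat "$f")" = "$want" ]; then
      basename "$(dirname "$f")"
      return 0
    fi
  done
  return 1
}
```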

Because of its performance advantages, DPDK has become popular, and more and more applications are built on it. Traditional container networks use VETH devices, which currently cannot be driven directly by a DPDK poll mode driver (PMD), so DPDK-based applications cannot run directly in the container. In the multi-NIC solution, the container uses the ECS network device, a standard E1000 or virtio_net device. DPDK provides PMDs for both, so DPDK-based applications can run directly in the container on this device, which improves the network performance of applications in the container.
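Handing a virtio_net NIC to a DPDK application typically means taking it away from the kernel driver and binding it to vfio-pci with DPDK's `dpdk-devbind.py` tool. The sketch prints those steps; the interface name and PCI address are hypothetical (the PCI address of a NIC appears in the `bus-info` field of `ethtool -i IFNAME`). A VM without a virtual IOMMU may additionally need vfio's no-IOMMU mode, and the container needs access to the /dev/vfio devices and hugepages; those details depend on the environment and are not covered here.

```shell
#!/bin/sh
# dpdk_bind_plan IFNAME PCI_ADDR: print the steps that detach a NIC from
# its kernel driver and bind it to vfio-pci so a DPDK PMD can drive it.
# IFNAME and PCI_ADDR are hypothetical; read PCI_ADDR from the "bus-info"
# line of "ethtool -i IFNAME". The NIC must be down before rebinding.
dpdk_bind_plan() {
  echo "ip link set dev $1 down"
  echo "modprobe vfio-pci"
  echo "dpdk-devbind.py --bind=vfio-pci $2"
  echo "dpdk-devbind.py --status-dev net"
}
```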

For a physical host to span multiple network domains, multiple NICs must be installed in it. Because of the limited number of PCI slots and the cost, physical machines rarely have more than two NICs. Powering hardware devices on or off introduces electrical pulses into the whole system, which can affect the machine's stability and limits device hot swapping. USB devices are the most common hot-swappable devices; hot swapping of PCI devices has only become well supported in recent years, because the bus must be enumerated a second time and power effects must be handled.

In a virtual environment, the low cost and flexibility of virtual NICs greatly improve the availability of the VM. Users can allocate or release NICs dynamically as required and plug them into or unplug them from the VM without affecting its normal operation. The way libvirt/qemu emulates virtual devices has the following advantages that physical hosts cannot match:

As long as the system has enough resources, such as memory, it can emulate many NICs and assign them to the same VM; 64 or even 128 NICs can be installed on one VM. Software-emulated NICs cost far less than physical hardware. They also support multi-queue and some of the offload functions of mainstream hardware, which makes the system more flexible.

Because a VM's NIC is emulated in software, adding a NIC only requires allocating some basic resources for the emulation. The hot-plug framework lets libvirt/qemu easily attach the NIC to a running VM, and the VM can immediately use it to send network packets. When the NIC is no longer needed, it can be "unplugged" through a libvirt/qemu interface call without stopping the VM: the resources allocated to the NIC are destroyed, its memory is reclaimed, and its interrupts are released.
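At the QEMU level, hot-plugging a NIC into a running VM is a pair of monitor commands: `netdev_add` creates the host-side backend and `device_add` inserts the guest-visible device, while `device_del` and `netdev_del` reverse it. The sketch below prints these HMP commands, assuming a tap backend whose tap device already exists on the host; the IDs, tap name, and MAC address are hypothetical. For libvirt-managed VMs, `virsh attach-interface` and `virsh detach-interface` wrap the same mechanism and are the safer choice.

```shell
#!/bin/sh
# QEMU monitor (HMP) commands for NIC hot-plug. IDs, tap name and MAC are
# hypothetical, and the tap device is assumed to exist on the host already.

# qemu_nic_plug ID TAP MAC: backend first, then the guest-visible device.
qemu_nic_plug() {
  echo "netdev_add tap,id=hostnet-$1,ifname=$2,script=no,downscript=no"
  echo "device_add virtio-net-pci,netdev=hostnet-$1,id=net-$1,mac=$3"
}

# qemu_nic_unplug ID: reverse order. device_del only requests removal; the
# guest must acknowledge it before netdev_del releases the backend.
qemu_nic_unplug() {
  echo "device_del net-$1"
  echo "netdev_del hostnet-$1"
}
```

Each printed line is entered at the QEMU monitor prompt, for example `(qemu) device_add virtio-net-pci,netdev=hostnet-1,id=net-1,mac=52:54:00:12:34:56`.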

This section walks through implementing container network communication step by step with multiple NICs on a VM.

Start the container with its network type set to none, so that Docker creates no network interface for it:

```shell
~# docker run -itd --net none ubuntu:16.04
```

Find the container's PID (2017 in this example) and expose its network namespace to `ip netns` by creating a symbolic link under /var/run/netns. Depending on the release version, you may not need to create this link manually:

```shell
~# docker inspect -f '{{.State.Pid}}' <container-id>
~# mkdir -p /var/run/netns
~# ln -sf /proc/2017/ns/net /var/run/netns/2017
```

Move the hot-plugged NIC eth2 into the container's network namespace, rename it to eth0, bring it up, and obtain an IP address over DHCP:

```shell
~# ip link set dev eth2 netns 2017
~# ip netns exec 2017 ip link set eth2 name eth0
~# ip netns exec 2017 ip link set eth0 up
~# ip netns exec 2017 dhclient eth0
```

Check the NICs on the VM and then inside the container (the `/#` prompt is a shell in the container). eth2 has been removed from the VM and is now used by the container:

```shell
~# ifconfig -a
/# ifconfig -a
```
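The manual steps above can be wrapped into a reusable helper. This is a sketch, assuming the container's PID has already been read with `docker inspect -f '{{.State.Pid}}' <container-id>` and that the hot-plugged NIC's name in the VM is known; the helper name is hypothetical, and it prints the commands for review instead of running them.

```shell
#!/bin/sh
# netns_attach_plan PID IFACE: print the walkthrough's commands for a given
# container PID and hot-plugged NIC (the helper name is hypothetical).
# PID comes from: docker inspect -f '{{.State.Pid}}' <container-id>
netns_attach_plan() {
  echo "mkdir -p /var/run/netns"
  echo "ln -sf /proc/$1/ns/net /var/run/netns/$1"
  echo "ip link set dev $2 netns $1"
  echo "ip netns exec $1 ip link set dev $2 name eth0"
  echo "ip netns exec $1 ip link set dev eth0 up"
  echo "ip netns exec $1 dhclient eth0"
}
```

For example, `netns_attach_plan 2017 eth2 | sudo sh -x` would repeat the walkthrough for PID 2017 and NIC eth2.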

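The throughput test uses sockperf. server.sh starts the requested number of sockperf servers on consecutive ports beginning at 12301, and client.sh starts one throughput client per port against the server at 192.168.2.35:

```shell
$ cat server.sh
#!/bin/bash
# Start $1 sockperf servers in the background on ports 12301, 12302, ...
for i in $(seq 1 "$1")
do
    sockperf server --port "123$(printf '%02d' "$i")" &
done

$ sh server.sh 10

$ cat client.sh
#!/bin/bash
# Start $1 sockperf throughput clients, one per server port, each for 300 s
for i in $(seq 1 "$1")
do
    sockperf tp -i 192.168.2.35 --pps max --port "123$(printf '%02d' "$i")" -t 300 &
done
# Wait for all clients so their reports are printed before the script exits
wait

$ sh client.sh 10
```

Tier-Base (TBase) is a distributed KV database similar to Redis. It is written in C++, supports almost all Redis data structures, and can also use RocksDB as a backend. TBase is widely used in Ant Financial. This section describes the TBase business test of this solution.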

Test environment (Linux Bridge solution)

Server: 16C60G x 1 (half A8)

Client: 4C8G x 8

TBase server deployment: 7G x 7 instances

TBase client deployment: 8 x (16 threads + 1 client) => 128 threads + 8 clients

Testing report

| Operation | Packet size | Clients | NIC throughput | load1 | CPU | QPS | Avg RT | 99th percentile RT |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| set | 1 KB | 8 | 424 MB/s | 7.15 | 44% | 363,300 | 0.39 ms | < 1 ms |
| get | 1 KB | 8 | 421 MB/s | 7.06 | 45% | 357,000 | 0.39 ms | < 1 ms |
| set | 64 KB | 1 | 1,884 MB/s | 2.3 | 17% | 29,000 | 0.55 ms | < 5 ms |
| set | 128 KB | 1 | 2,252 MB/s | 2.53 | 18% | 18,200 | 0.87 ms | < 6 ms |
| set | 256 KB | 1 | 2,804 MB/s | 2.36 | 20% | 11,100 | 1.43 ms | < 5 ms |
| set | 512 KB | 1 | 3,104 MB/s | 2.61 | 20% | 6,000 | 2.62 ms | < 10 ms |

Test environment (multi-NIC solution)

Server: 16C60G x 1 (half A8)

Client: 4C8G x 8

TBase server deployment: 7G x 7 instances

TBase client deployment: 16 x (16 threads + 1 client) => 256 threads + 16 clients

Testing report

| Operation | Packet size | Clients | NIC throughput | load1 | CPU | QPS | Avg RT | 99th percentile RT |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| set/get | 1 KB | 16 | 570 MB/s | 6.97 | 45% | 470,900 | 0.30 ms | < 1 ms |

Compared with the Bridge solution, the ENI-based multi-NIC solution improves overall performance and significantly shortens latency: QPS increases by about 30% and average latency drops by about 23%. On a 16C60G server at about 470,900 QPS, the average RT is 0.30 ms and the 99th percentile RT is below 1 ms. User, sys, si (software interrupts), and st (steal time) account for 45%, 29%, 18%, and 2% of the CPU, respectively. Compared with Linux Bridge, the multi-NIC solution consumes significantly less CPU in si. Through kernel queue dispersion, CPU consumption by st is distributed over multiple cores, so processing resources are used in a more balanced way.
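The user, sys, si, and st figures correspond to columns of the `cpu` line in /proc/stat (user, system, softirq, and steal), which is where tools such as top read them. A minimal sketch of computing such a breakdown from two samples follows; it is an illustration of the metric, not the tool behind the article's measurements, which the article does not name.

```shell
#!/bin/sh
# cpu_split BEFORE AFTER: each argument is the "cpu" line of /proc/stat.
# Fields after "cpu": user nice system idle iowait irq softirq steal ...
# Prints the share of elapsed CPU time spent in user, system (sys),
# softirq (si) and steal (st), measured over the user..steal fields.
cpu_split() {
  printf '%s\n%s\n' "$1" "$2" | awk '
    NR == 1 { for (i = 2; i <= 9; i++) a[i] = $i }
    NR == 2 {
      for (i = 2; i <= 9; i++) { d[i] = $i - a[i]; total += d[i] }
      if (total <= 0) exit 1
      printf "user=%.1f%% sys=%.1f%% si=%.1f%% st=%.1f%%\n",
        100 * d[2] / total, 100 * d[4] / total,
        100 * d[8] / total, 100 * d[9] / total
    }'
}
```

Usage: `a=$(grep '^cpu ' /proc/stat); sleep 10; b=$(grep '^cpu ' /proc/stat); cpu_split "$a" "$b"` reports the breakdown for that 10-second window.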

For the VPC route table solution (Flannel/Canal), there is no substantial loss in bandwidth or throughput, and latency is about 0.1 ms higher than on the host. In an Nginx QPS test with small pages, the loss is about 10%. For the ENI solution, there is no substantial loss in bandwidth or throughput relative to the host, and latency is slightly lower than on the host. In the application test, performance is about 10% better than on the host network, because pod traffic does not pass through iptables. For the default Flannel VXLAN mode, bandwidth and throughput loss is about 5%, and the maximum QPS in the Nginx small-page test is about 30% lower than on the host.
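The Flannel mode compared here is selected by the backend `Type` in flannel's net-conf.json (in Kubernetes deployments it usually lives in the flannel ConfigMap). The sketch prints such a file; the pod CIDR is an assumption. `vxlan` is the overlay backend measured above; backends that program cloud VPC route tables use other `Type` values and cloud-specific fields, so check flannel's backend documentation for the exact names in your version.

```shell
#!/bin/sh
# flannel_net_conf POD_CIDR BACKEND_TYPE: print a flannel net-conf.json.
# The CIDR is an assumption; "vxlan" selects the VXLAN overlay backend.
flannel_net_conf() {
  cat <<EOF
{
  "Network": "$1",
  "Backend": {
    "Type": "$2"
  }
}
EOF
}
```

For example, `flannel_net_conf 10.244.0.0/16 vxlan > net-conf.json` produces a VXLAN configuration for a 10.244.0.0/16 pod network.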

This article introduced a container network solution based on hot-plugging multiple NICs into VMs. A NIC is hot-plugged into the VM dynamically and handed to the container to send and receive its network packets, and the packets are forwarded by a virtual software switch running on the host. This greatly reduces the complexity of the container network's management and control system, improves network performance, and strengthens container network security.

Alibaba Cloud Elastic Network Interface (ENI) is a virtual network interface that can be attached to an ECS instance in a VPC. By using ENIs, you can build high-availability clusters, implement failover at a lower cost, and achieve fine-grained network management. The ENI feature is available in all regions.
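As a sketch of the public API side, the aliyun CLI can create an ENI in a given vSwitch and security group and attach it to an ECS instance through the ECS `CreateNetworkInterface` and `AttachNetworkInterface` actions. The region, vSwitch, security group, instance, and ENI IDs are placeholders; check the ECS API reference for optional parameters and for which instance types support attaching ENIs while running. The helpers print the commands rather than calling the API.

```shell
#!/bin/sh
# Print aliyun CLI calls for the ECS ENI API. All IDs are placeholders.

# eni_create_cmd REGION VSWITCH_ID SECURITY_GROUP_ID
# The API response includes the new ENI's NetworkInterfaceId.
eni_create_cmd() {
  echo "aliyun ecs CreateNetworkInterface --RegionId $1 --VSwitchId $2 --SecurityGroupId $3"
}

# eni_attach_cmd REGION ENI_ID INSTANCE_ID: attach the ENI to the instance.
eni_attach_cmd() {
  echo "aliyun ecs AttachNetworkInterface --RegionId $1 --NetworkInterfaceId $2 --InstanceId $3"
}
```

After the attach call succeeds, the ENI appears inside the instance as a new NIC that can be moved into a container's network namespace as shown in the walkthrough.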

More Posts by AlibabaCloud_Network