By Chang Shuai

Thanks to its simple APIs and excellent scalability, Object Storage Service (OSS) allows applications in different scenarios to easily store several billion objects every day. The simple key-value data access structure greatly simplifies uploading and reading data. Beyond uploading and reading, new application scenarios are emerging around OSS. This article covers three of them: batch backup of files, high-concurrency restoration of archived files, and event-triggered decompression of ultra-large zip packages.

The preceding three scenarios share some common challenges:

This article will introduce a serverless best practice based on Serverless Workflow and Function Compute (FC) to address the preceding three scenarios.

It may seem that a simple list-and-copy main program is enough to back up OSS files, but this involves many considerations. If the machine stops or the process exits unexpectedly while the main program is running, how is the operation restored automatically (high availability)? After recovery, how does the program quickly find out which files were already processed, short of maintaining that status manually in a database? How are the active and standby processes coordinated, short of implementing service discovery manually? How can the replication time be reduced, short of implementing and managing parallel calls manually? How should infrastructure maintenance cost, economic cost, and reliability be balanced? With hundreds of millions of OSS objects, a simple single-threaded list-and-copy main program cannot meet these requirements.

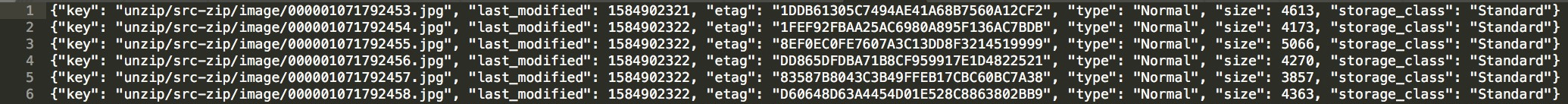

For example, suppose you need to copy hundreds of millions of OSS files from one bucket to another bucket in the same region to convert standard storage into archive storage. In the oss-batch-copy example, we provide a workflow application template that backs up all the files listed in your index file by calling the OSS CopyObject operation on them in sequence. The index file contains the metadata of the OSS objects to be processed. For example:

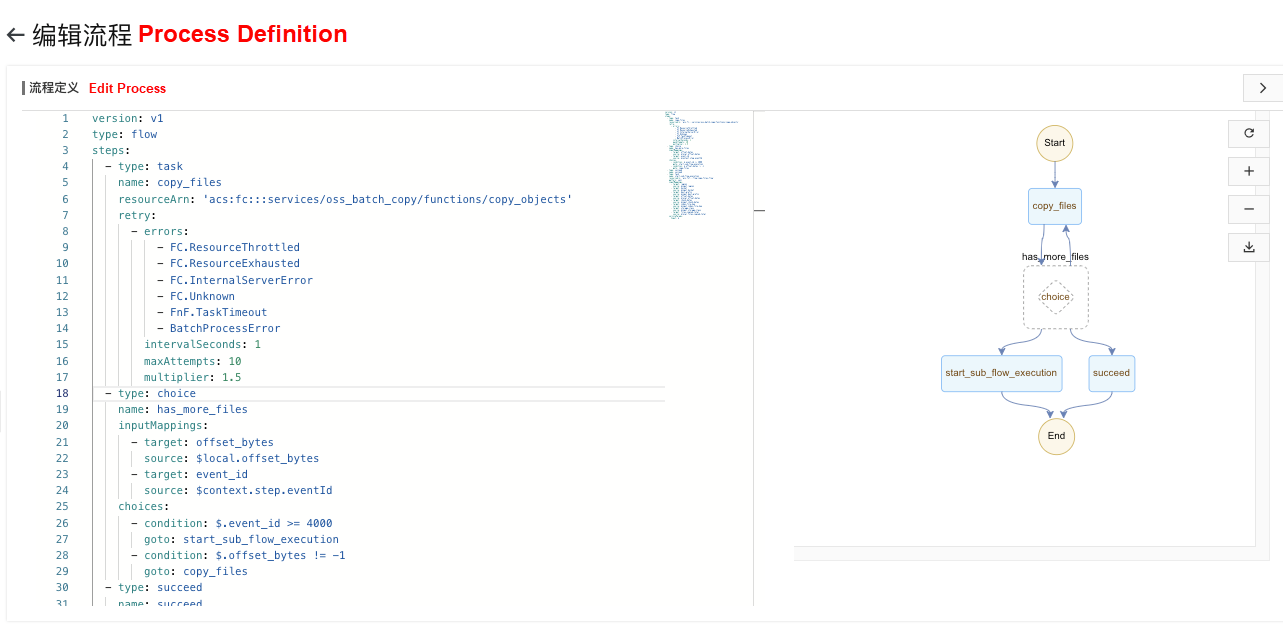

The index for hundreds of millions of OSS files can be hundreds of GB in size. Therefore, we need to use range reads on the index file and process only part of the OSS files at a time. This requires control logic similar to while hasMore {} to ensure that the index file is fully processed. Serverless Workflow adopts the following implementation logic:

- copy_files task step: Read size bytes starting at the offset position of the input index file, extract the files to be processed, and call the OSS CopyObject operation through FC.
- has_more_files selection step: After a batch of files is processed, check whether the current index file is fully processed by running a conditional comparison. If yes, proceed to the success step. If no, pass the (offset, size) value of the next page to copy_files to continue the loop.
- start_sub_flow_execution task step: The execution of a single workflow is limited by the number of history events, so this step checks the event ID of the current execution. If the number of events exceeds a threshold, a new identical flow is started as a sub-flow, and the current flow continues after the sub-flow ends. A sub-flow can also start its own sub-flow, which ensures that the entire process can be completed regardless of the number of OSS files.
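The three steps above can be sketched in plain Python. This is only an illustration under stated assumptions, not the workflow template itself: the index is assumed to hold one object key per line, an in-memory bytes value stands in for the OSS range read, `copy_object` is a hypothetical callback standing in for the CopyObject call made through FC, and a page counter stands in for the history event count.

```python
from typing import Callable, Tuple


def copy_files(index: bytes, offset: int, size: int,
               copy_object: Callable[[str], None]) -> Tuple[int, bool]:
    """Process one page of the index and return (next_offset, has_more)."""
    chunk = index[offset:offset + size]  # stands in for an OSS range read
    if offset + len(chunk) >= len(index):
        consumed = len(chunk)  # last page: take everything that is left
    else:
        # A range read can cut a line in half, so stop at the last newline.
        last_newline = chunk.rfind(b"\n")
        if last_newline < 0:
            raise ValueError("size is smaller than one index line")
        consumed = last_newline + 1
    for line in chunk[:consumed].splitlines():
        key = line.decode("utf-8").strip()
        if key:
            copy_object(key)
    next_offset = offset + consumed
    return next_offset, next_offset < len(index)


def run_flow(index: bytes, size: int, copy_object: Callable[[str], None],
             offset: int = 0, max_pages: int = 1000) -> int:
    """Loop over the index; hand off to a sub-flow when the page budget is used.

    Returns the number of flow executions, including sub-flows.
    """
    pages = 0
    has_more = offset < len(index)
    while has_more:  # has_more_files selection step
        if pages >= max_pages:  # start_sub_flow_execution step (assumed threshold)
            return 1 + run_flow(index, size, copy_object, offset, max_pages)
        offset, has_more = copy_files(index, offset, size, copy_object)
        pages += 1
    return 1
```

Because each page ends on a line boundary, the only state the loop carries between iterations is the next offset, which is what lets a sub-flow resume exactly where its parent stopped.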

Using the workflow for batch processing can guarantee the following expectations:

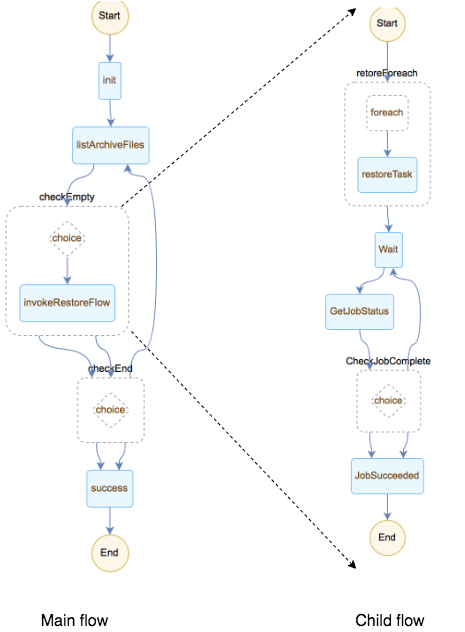

This article introduces an efficient and reliable solution to restore large numbers of archived OSS files by using Serverless Workflow. Although this scenario shares challenges with copying files, it also has its own characteristics:

With logic similar to oss-batch-copy, this example restores OSS files in batches listed through ListObjects. Restoring a batch of files is a sub-flow. Each sub-flow uses a foreach parallel loop step to restore OSS objects at high concurrency. A maximum of 100 OSS objects can be restored concurrently. Restore is an asynchronous operation: after the Restore operation is called for an object, you must poll the object status until the object is restored. Restoring and polling are done in the same concurrent branch.
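The restore-then-poll pattern within one concurrent branch can be sketched as follows. This is a minimal illustration, not the actual workflow definition: a thread pool stands in for the parallel loop step, and `FakeArchiveStore` with its `restore` and `is_restored` methods is a hypothetical stand-in for the OSS Restore call and status check.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, List

MAX_CONCURRENCY = 100  # concurrency cap stated in the text


class FakeArchiveStore:
    """Hypothetical stand-in for OSS: an object is readable after a few polls."""

    def __init__(self, polls_needed: int = 3) -> None:
        self._polls_needed = polls_needed
        self._remaining: Dict[str, int] = {}
        self._lock = threading.Lock()

    def restore(self, key: str) -> None:
        # Asynchronous in spirit: this only starts the restoration.
        with self._lock:
            self._remaining[key] = self._polls_needed

    def is_restored(self, key: str) -> bool:
        with self._lock:
            self._remaining[key] -= 1
            return self._remaining[key] <= 0


def restore_and_wait(store: FakeArchiveStore, key: str,
                     poll_interval: float = 0.01, max_polls: int = 100) -> str:
    """One concurrent branch: start the restore, then poll until it is done."""
    store.restore(key)
    for _ in range(max_polls):
        if store.is_restored(key):
            return key
        time.sleep(poll_interval)
    raise TimeoutError(f"{key} was not restored after {max_polls} polls")


def restore_batch(store: FakeArchiveStore, keys: Iterable[str]) -> List[str]:
    """Restore one batch with at most MAX_CONCURRENCY branches in flight."""
    key_list = list(keys)
    workers = max(1, min(MAX_CONCURRENCY, len(key_list)))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda k: restore_and_wait(store, k), key_list))
```

Keeping the poll inside the same branch as the restore call means a branch finishes only when its object is actually readable, so the batch as a whole completes only when every object in it is restored.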

Restoring files in batches by using Serverless Workflow and FC has the following features:

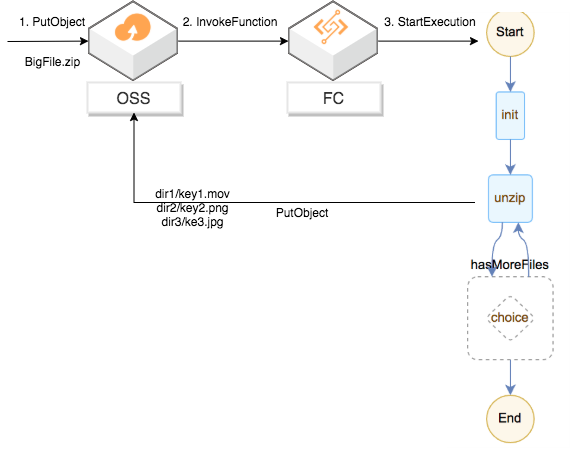

Shared storage of files is a major highlight of OSS. For example, processed content uploaded by one party can be used by downstream applications. Uploading multiple files requires calling the PutObject operation many times, which increases the probability of errors and upload failures. Therefore, many upstream services upload their files as a single package in one call. Although this simplifies the operation for the uploader, downstream users want to see the uploaded files in their original structure. The requirement here is to automatically decompress a package and store its contents in another OSS path in response to the OSS file upload event. Today, an event-triggered FC function that decompresses a package is available in the console. However, a solution based solely on FC has some problems:

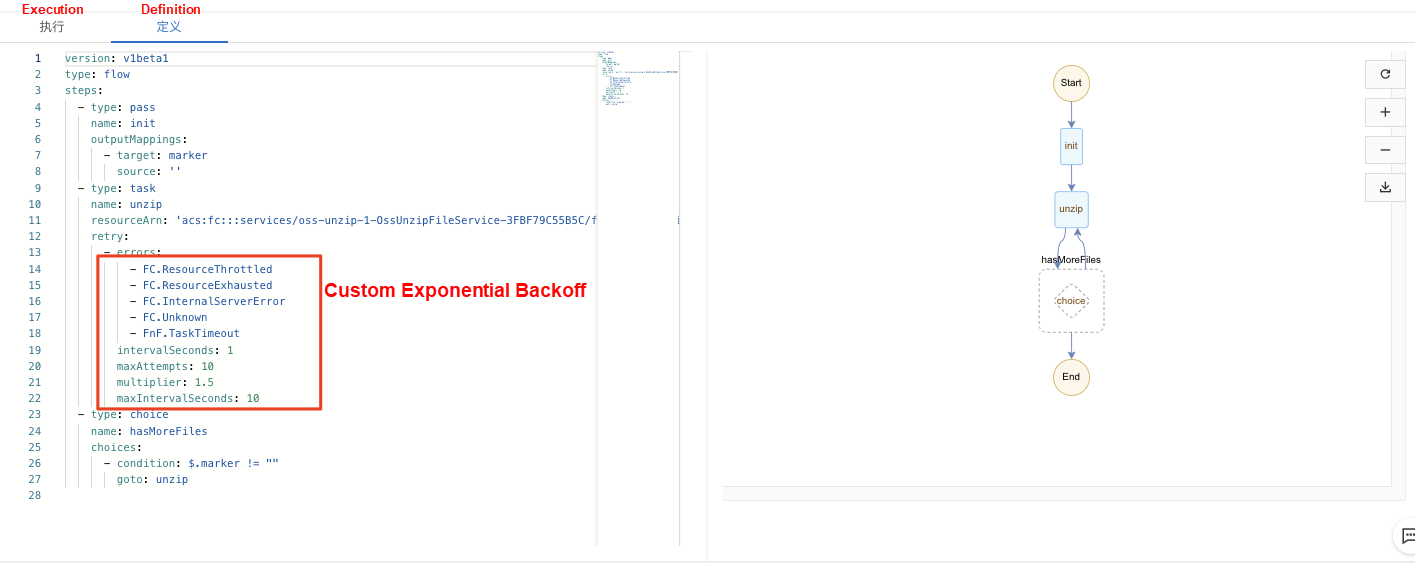

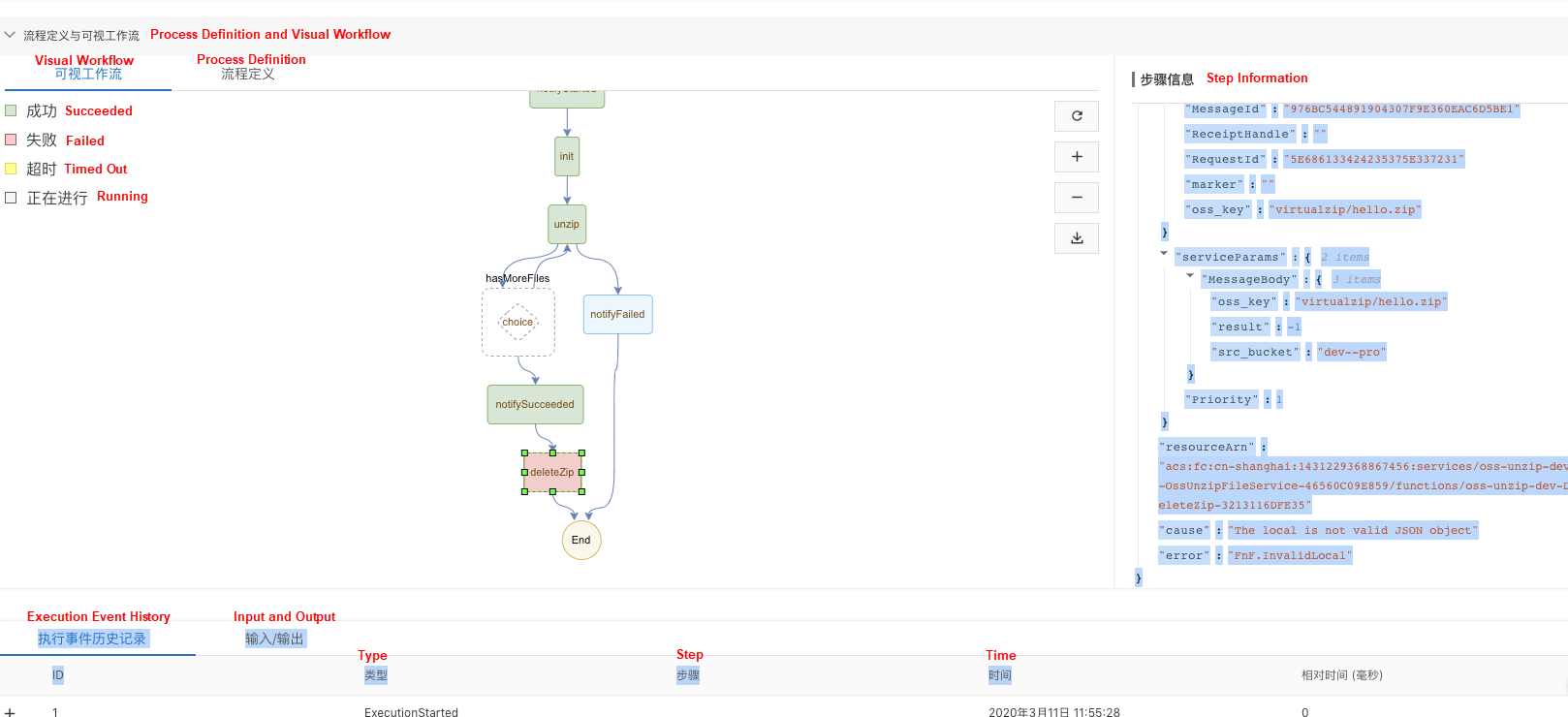

To address the long execution time and the need for custom retries, this example introduces Serverless Workflow to schedule FC tasks. An OSS event triggers FC, which starts a Serverless Workflow execution. The workflow uses the metadata of the zip package to read, unzip, and upload its contents to the OSS target path in a streaming manner. When the execution time of a function call exceeds a threshold, the function returns the current marker. Serverless Workflow then determines whether the current marker indicates that all files are processed. If yes, the process ends. If no, the streaming decompression continues from the current marker until the end.
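The marker-based loop described above can be sketched as follows. This is a simplified illustration under several assumptions: the zip package is held in memory rather than read from OSS by range, a dict stands in for the target OSS path, the marker is assumed to be the index of the next zip entry, and the injectable `clock` parameter exists only to make the time budget testable.

```python
import io
import time
import zipfile
from typing import Callable, Dict, Optional


def unzip_step(zip_bytes: bytes, target: Dict[str, bytes], marker: int,
               budget_seconds: float,
               clock: Callable[[], float] = time.monotonic) -> Optional[int]:
    """One function call: extract entries from marker until the budget is spent.

    Returns the next marker, or None when every entry has been processed.
    """
    deadline = clock() + budget_seconds
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        entries = archive.infolist()  # the central directory acts as the metadata
        while marker < len(entries):
            info = entries[marker]
            if not info.is_dir():
                with archive.open(info) as source:
                    target[info.filename] = source.read()  # stands in for an upload
            marker += 1
            if clock() >= deadline and marker < len(entries):
                return marker  # out of time: hand the marker back to the workflow
    return None


def unzip_flow(zip_bytes: bytes, target: Dict[str, bytes], budget_seconds: float,
               clock: Callable[[], float] = time.monotonic) -> int:
    """Workflow loop: call unzip_step until no marker is returned.

    Returns the number of function calls that were needed.
    """
    marker: Optional[int] = 0
    calls = 0
    while marker is not None:
        marker = unzip_step(zip_bytes, target, marker, budget_seconds, clock)
        calls += 1
    return calls
```

Each call always extracts at least one entry before checking the deadline, so the loop makes progress even when the budget is very small.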

The addition of Serverless Workflow removes the 10-minute limit on function calls. Moreover, built-in state management and custom retries ensure that GB-level packages and packages with more than 100,000 files can be decompressed reliably. Serverless Workflow supports a maximum execution time of one year, so zip packages of almost any size can be decompressed in a streaming manner.

The decompression process can be customized flexibly thanks to Serverless Workflow. The following figure shows a flow that notifies an MNS queue after decompression and then deletes the original package in the next step.

As you can see, the mass adoption of OSS brings a series of problems, but the ways to solve them are tedious and error-prone. In this article, we introduce a simple and lightweight serverless solution based on Serverless Workflow and Function Compute for three common scenarios: batch backup of files, high-concurrency recovery, and event-triggered decompression of ultra-large zip files. This solution can efficiently and reliably support the following purposes:

The batch processing of large amounts of OSS files involves more than the three scenarios mentioned in this article. We look forward to discussing more scenarios and requirements with you at a later date.
