By Xiliu, Alibaba Cloud Technical Expert

Alibaba Cloud's serverless products support a usage pattern that is not obvious the first time you use them. Compared with the traditional server-based approach, a serverless platform lets your apps scale out rapidly and process work in parallel more efficiently. You do not pay for idle resources, and you do not need to worry that reserved resources will be insufficient. In the traditional paradigm, by contrast, you must reserve hundreds or thousands of servers for highly parallel, short-lived tasks, and you pay for every reserved server even when some of them are no longer in use.

Alibaba Cloud's serverless product, Function Compute, can address all the preceding concerns:

In these scenarios, your only concerns may be whether a task can be divided and conquered and whether its subtasks can run in parallel. A long task that takes one hour to complete can be divided into 360 independent subtasks that each take ten seconds and run in parallel. This division shortens the processing time from one hour to only ten seconds. Moreover, with pay-as-you-go billing, the cost matches the computing you actually consume. With traditional billing, waste is inevitable because you always pay for all the reserved resources.
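The arithmetic behind this example can be checked with a few lines of Python. This is only a sketch: it assumes perfectly independent subtasks and ignores scheduling overhead and cold starts, and the unit price is a made-up placeholder rather than a real tariff.

```python
# Numbers taken from the paragraph above; the unit price is hypothetical.
TOTAL_SECONDS = 3600                      # the one-hour sequential task
SUBTASK_SECONDS = 10                      # duration of each independent subtask
HYPOTHETICAL_PRICE_PER_SECOND = 0.0001    # assumed price, for illustration only

subtasks = TOTAL_SECONDS // SUBTASK_SECONDS      # number of parallel subtasks
wall_clock_parallel = SUBTASK_SECONDS            # all subtasks run at the same time
billed_seconds = subtasks * SUBTASK_SECONDS      # total compute consumed
speedup = TOTAL_SECONDS / wall_clock_parallel
cost = billed_seconds * HYPOTHETICAL_PRICE_PER_SECOND

print(subtasks, wall_clock_parallel, billed_seconds, speedup)
```

The billed compute time is the same 3,600 seconds in both cases; only the wall-clock time changes, which is why parallelism does not raise the pay-as-you-go cost under these assumptions.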

Next, let's elaborate on serverless practices for large-scale data processing.

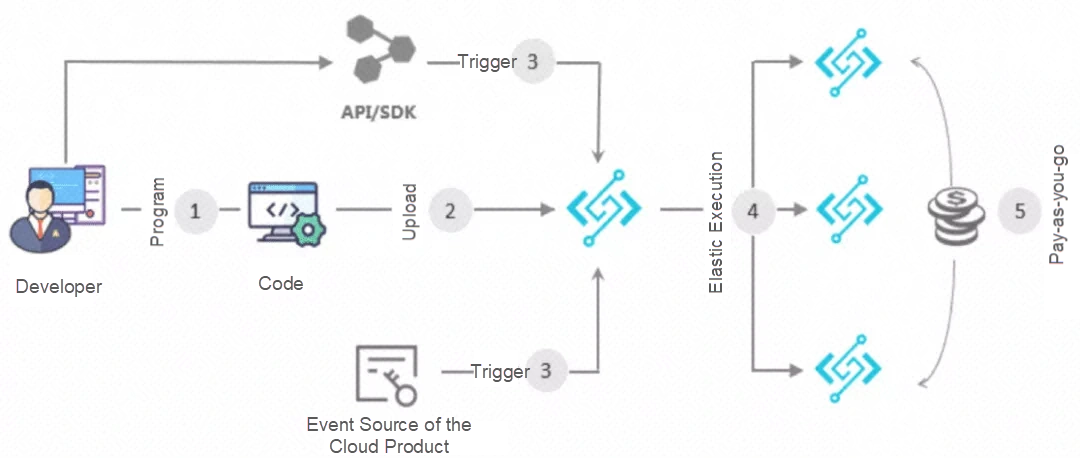

Before we move on to large-scale data processing examples, let's take a brief look at Function Compute.

For more information, please visit the Function Compute product page.

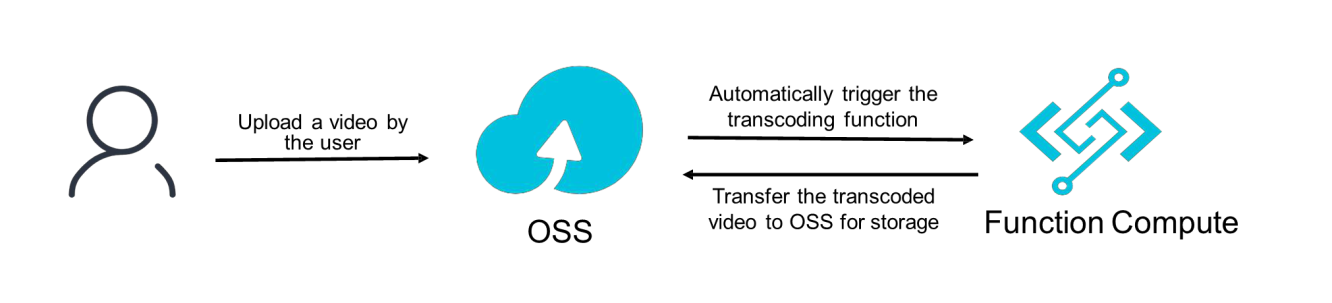

Thus far, you have seen how Function Compute works. Next, let's explain its operation with a case that involves highly parallel video transcoding. Let’s assume a homeschooling or entertainment enterprise produces teaching videos or other new videos in a centralized manner and wants to transcode them quickly so that clients can play them back soon after upload. Amid the current pandemic, the number of online education courses has surged, and classes generally peak at 10:00, 12:00, 16:00, and 18:00. In this case, it is common to require that all newly uploaded videos be processed within a specific period, such as half an hour.

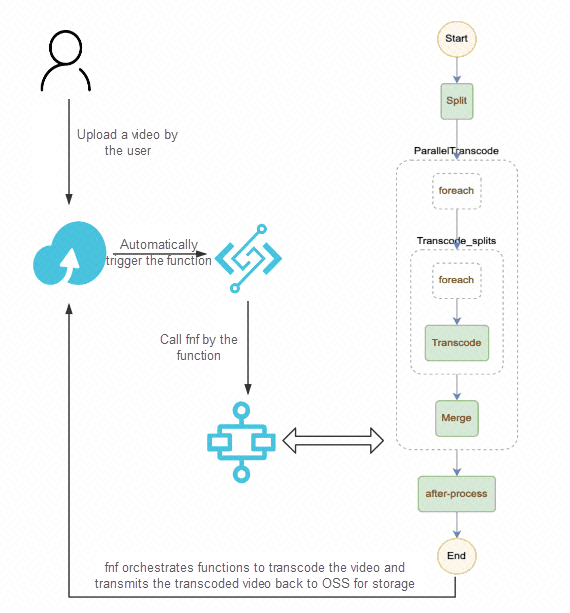

As shown in the preceding figure, when a user uploads a video to OSS, the OSS trigger automatically triggers function execution, and Function Compute automatically scales out. The function logic in the execution environment calls FFmpeg to transcode the video and transmits the transcoded video back to OSS for storage.
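The flow described above might look roughly like the following handler. This is a minimal sketch, not the code behind the figure: the helper names, the `/tmp` working directory, and the fixed `mp4` target are assumptions, and the download, FFmpeg execution, and upload steps are only indicated in a comment.

```python
import json
import os


def parse_oss_event(event):
    """Return (bucket, key) from the first record of an OSS trigger event."""
    record = json.loads(event)["events"][0]["oss"]
    return record["bucket"]["name"], record["object"]["key"]


def build_ffmpeg_command(input_path, dst_format):
    """Build the FFmpeg argument list and the output path for one target format."""
    base, _ = os.path.splitext(input_path)
    output_path = f"{base}.{dst_format}"
    return ["ffmpeg", "-y", "-i", input_path, output_path], output_path


def handler(event, context):
    bucket, key = parse_oss_event(event)
    local_input = os.path.join("/tmp", os.path.basename(key))  # assumed scratch location
    cmd, local_output = build_ffmpeg_command(local_input, "mp4")
    # A real function would download the object to local_input, run
    # subprocess.run(cmd, check=True), and upload local_output back to OSS.
    return json.dumps({"bucket": bucket, "key": key,
                       "command": cmd, "output": local_output})
```

Keeping the event parsing and command construction in small pure functions makes them easy to test locally before the function is deployed.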

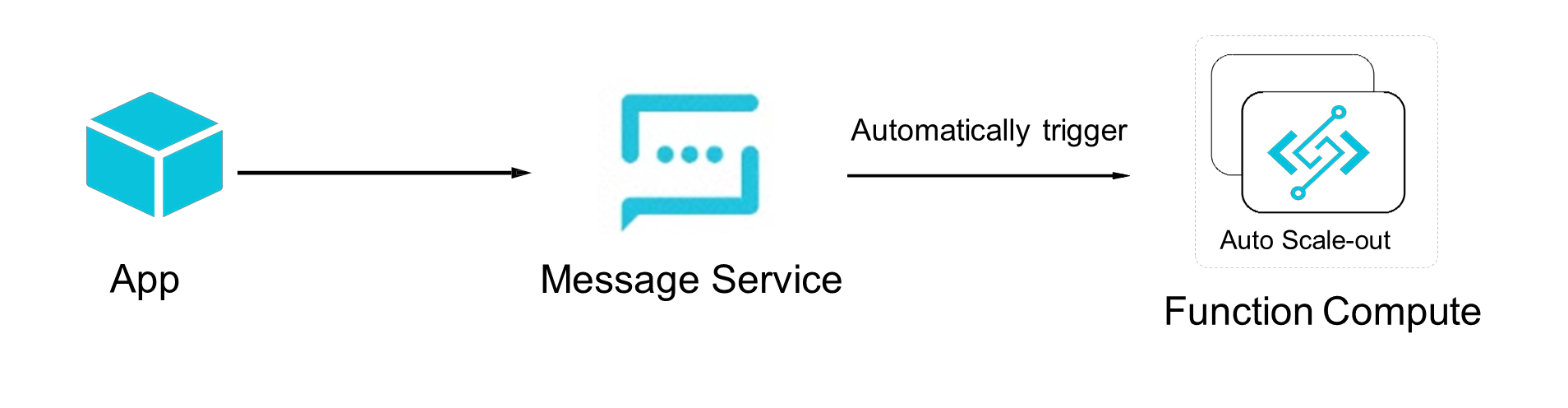

As shown in the preceding figure, an app only needs to send a message to automatically trigger a function for executing audio and video processing tasks. Accordingly, Function Compute automatically scales out. The function logic in the execution environment calls FFmpeg to transcode the video and transmits the transcoded video back to OSS for storage.

Take Python as an example. The procedure is shown below:

python

# -*- coding: utf-8 -*-

import fc2

import json

client = fc2.Client(endpoint="http://123456.cn-hangzhou.fc.aliyuncs.com",accessKeyID="xxxxxxxx",accessKeySecret="yyyyyy")

# Select synchronous or asynchronous calling

resp = client.invoke_function("FcOssFFmpeg", "transcode", payload=json.dumps(

{

"bucket_name" : "test-bucket",

"object_key" : "video/inputs/a.flv",

"output_dir" : "video/output/a_out.mp4"

})).data

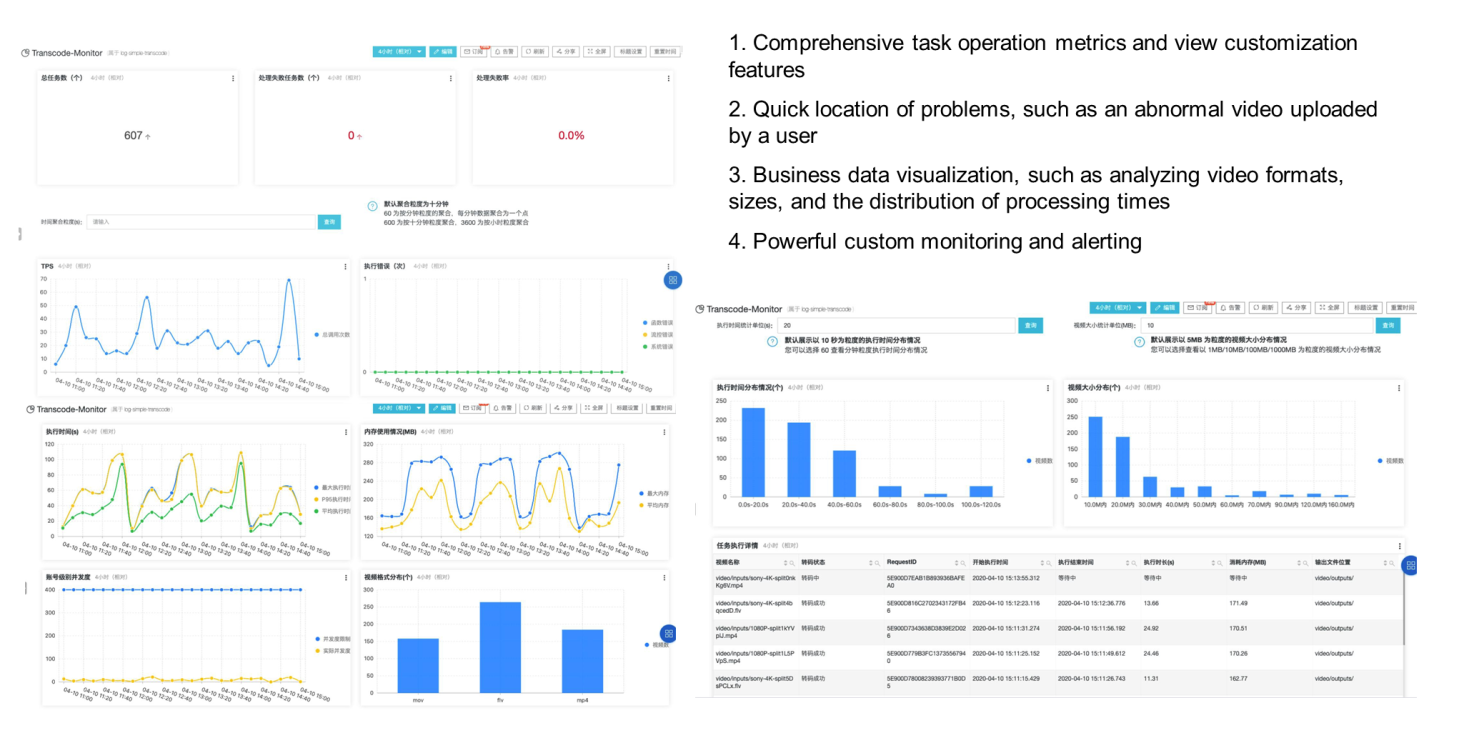

print(resp)As indicated in the preceding section, there are many methods to trigger function execution. With easy configuration on SLS logs, you can quickly implement an elastic, highly available, and pay-as-you-go audio and video processing system, with a dashboard that supports zero O&M, the visualization of specific business data, and powerful custom monitoring and alerting.

Currently, customers that have adopted this audio and video solution include UC, Yuque, Tangping Shejijia, Hupu, and several leading online education clients. During peak hours, some of them use more than 10,000 CPUs and process more than 1,700 videos in parallel, with very high cost-effectiveness.

For more information, check the links below:

It is interesting to apply the divide-and-conquer idea to tasks on Function Compute. For example, if you have a 20 GB 1080p HD video to transcode, it may take hours to complete the transcoding on a single computer. Even worse, if the transcoding is interrupted midway, you must restart from the beginning. If you adopt the divide-and-conquer idea together with Function Compute, the transcoding process turns into a sequence of sharding > parallel transcoding of the shards > shard merging, which resolves the pain points mentioned above.

By properly dividing the large task and using Function Compute, coupled with a small amount of coding effort, you can quickly build a large data processing system that features high elasticity and availability, parallel acceleration, and pay-as-you-go billing.
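The sharding, parallel transcoding, and merging sequence can be sketched in plain Python. This is an illustration under assumptions: a thread pool stands in for parallel function invocations, `transcode_segment` is a hypothetical placeholder that only returns a file name, and the 95-second duration and 30-second shard length are arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor


def split(duration_s, segment_s):
    """Return (start, end) second ranges that cover the whole video."""
    return [(start, min(start + segment_s, duration_s))
            for start in range(0, duration_s, segment_s)]


def transcode_segment(segment):
    """Stand-in for one function invocation that transcodes a single shard."""
    start, end = segment
    return f"part_{start:05d}_{end:05d}.mp4"


def merge(parts):
    """Stand-in for the final concatenation; keeps the shards in time order."""
    return sorted(parts)


segments = split(duration_s=95, segment_s=30)
with ThreadPoolExecutor(max_workers=len(segments)) as pool:
    parts = list(pool.map(transcode_segment, segments))
merged = merge(parts)
print(merged)
```

Because each shard is processed independently, a failed shard can be retried on its own instead of restarting the whole transcoding job.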

Before we introduce this solution, let's take a brief look at Serverless Workflow. Serverless Workflow can orchestrate functions in an organized manner with other cloud services and your independently developed services.

Serverless Workflow is a fully managed cloud service that coordinates multiple distributed tasks. In Serverless Workflow, you can orchestrate distributed tasks in sequence, in branches, or in parallel. Serverless Workflow coordinates task execution based on the steps you specify. It tracks the status change of each task and executes user-defined retry logic as necessary to ensure smooth completion of the workflow. Serverless Workflow streamlines complex task coordination, status management, error handling, and other efforts required for business workflow development and operation, allowing you to focus on business logic development.

For more information, please visit the Serverless Workflow product page.

Next, let's use a quick transcoding case for a large video to describe Serverless Workflow's orchestration function, which breaks down large computing tasks into smaller subtasks for parallel processing to quickly complete a single large task.

As shown in the preceding figure, let’s assume that you upload a .mov video file to OSS. After the upload is completed, the OSS trigger automatically triggers function execution, and the triggered function starts a Serverless Workflow (FnF) execution. FnF then transcodes the video into one or more formats, depending on the DST_FORMATS parameter in the template.yml file. In this example, we want to transcode the video into the MP4 and FLV formats simultaneously.
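The fan-out by target format can be pictured with the sketch below. It is not taken from the fc-fnf-video-processing project: it assumes DST_FORMATS is a comma-separated string, and the field names `bucket`, `key`, `dst_formats`, and `output_key` are hypothetical. The call that actually starts the workflow execution is omitted.

```python
import json


def build_execution_input(bucket, key, dst_formats):
    """Build the JSON input that the triggered function would hand to the workflow."""
    formats = [f.strip().lower() for f in dst_formats.split(",") if f.strip()]
    return json.dumps({"bucket": bucket, "key": key, "dst_formats": formats})


def plan_branches(execution_input):
    """List one parallel branch per target format, as the workflow would run them."""
    data = json.loads(execution_input)
    stem = data["key"].rsplit(".", 1)[0]
    return [{"format": f, "output_key": f"{stem}.{f}"} for f in data["dst_formats"]]


branches = plan_branches(
    build_execution_input("test-bucket", "video/inputs/a.mov", "mp4, flv"))
print(branches)
```

Each entry corresponds to one branch that can run in parallel, so adding a format to the parameter adds a branch without changing the function code.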

For more information, see fc-fnf-video-processing.

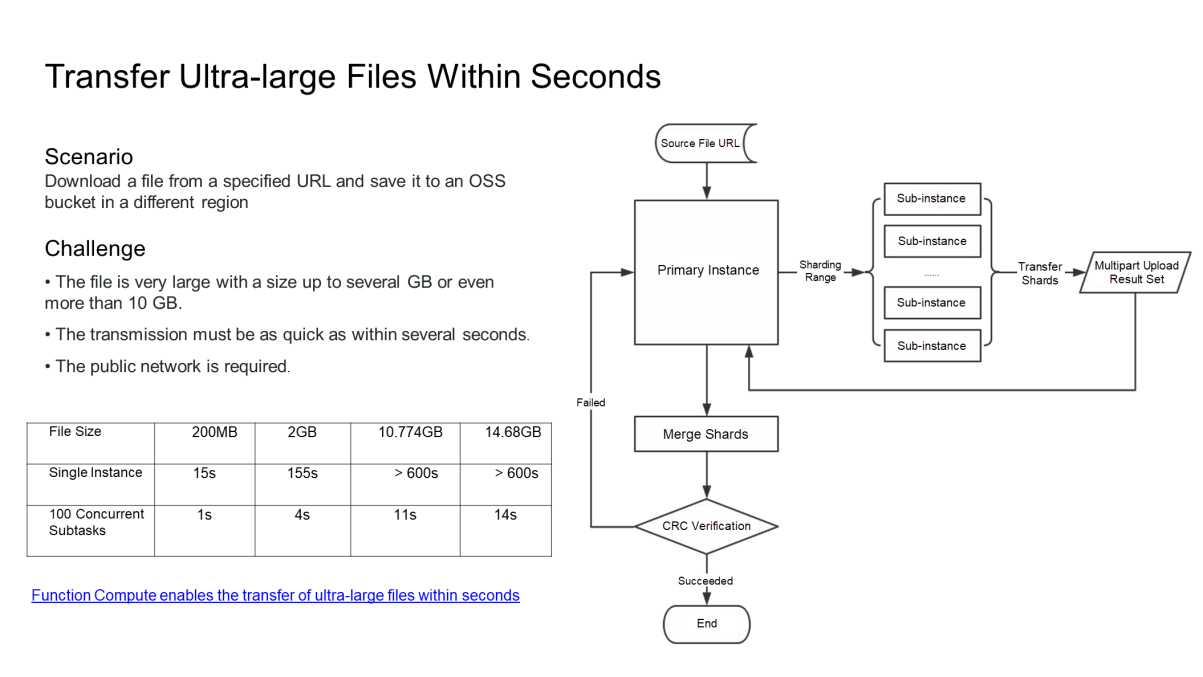

The previous section used task division and parallel acceleration to break down a CPU-intensive task. However, you can also break down I/O-intensive tasks. For example, a large 20 GB object is saved in an OSS bucket in the China (Shanghai) region and needs to be transmitted to an OSS bucket in the China (Hangzhou) region within seconds. In this case, the divide-and-conquer idea also works. After the Master function receives the transfer task, it allocates a shard range of the ultra-large file to each Worker sub-function. The Worker sub-functions then transfer and store the shards they are responsible for in parallel. After all Worker sub-functions finish execution, the Master function sends a request to merge the shards to complete the entire transmission task.
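The Master and Worker roles can be simulated in memory as follows. This is a sketch under assumptions: a `bytes` object stands in for the OSS object, a thread pool stands in for the Worker sub-functions, and the function names and shard size are hypothetical. A real implementation would read and write the shards through OSS rather than slicing a local buffer.

```python
from concurrent.futures import ThreadPoolExecutor


def shard_ranges(total_size, shard_size):
    """Master step: inclusive (start, end) byte ranges that cover the object."""
    return [(start, min(start + shard_size, total_size) - 1)
            for start in range(0, total_size, shard_size)]


def copy_shard(source, byte_range):
    """Worker step: copy one byte range and report its starting offset."""
    start, end = byte_range
    return start, source[start:end + 1]


def transfer(source, shard_size, workers=4):
    ranges = shard_ranges(len(source), shard_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        shards = list(pool.map(lambda r: copy_shard(source, r), ranges))
    # Merge step: reassemble the shards in offset order.
    return b"".join(data for _, data in sorted(shards))


source = bytes(range(256)) * 10   # 2,560-byte stand-in for the 20 GB object
copied = transfer(source, shard_size=1000)
print(len(copied), copied == source)
```

The ranges are inclusive on both ends, so the last shard is simply shorter when the object size is not a multiple of the shard size.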

This article used practical cases to explain how to scale your apps in or out on the serverless service platform to process tasks in parallel. Whether you are handling a CPU-intensive scenario or an I/O-intensive one, the combination of Function Compute and Serverless Workflow can alleviate the following concerns.

The serverless audio and video processing case described in this article is only an example. It showcases the capabilities and unique advantages of Function Compute in conjunction with Serverless Workflow in offline processing scenarios. The same approach can be extended to other large-scale data processing fields, such as artificial intelligence (AI), gene computing, and scientific simulation. We hope you find this article interesting and that it inspires you to embark on a journey with Serverless Workflow.
