By Sha Shengyang

Flink is an open-source big data project developed in Java and Scala. Its source code is hosted on GitHub, and the project is compiled and built with Maven. Java, Maven, and Git are essential tools for most Flink users. In addition, a powerful integrated development environment (IDE) helps you read code, develop new features, and fix bugs faster. Although this article does not cover the installation details of each tool, it provides the necessary installation suggestions.

The article includes the following:

Use macOS, Linux, or Windows for the development and testing environment. If you are using Windows 10, we recommend using the Windows Subsystem for Linux to compile and run Flink.

| Tools | Description |
|---|---|
| Java | The Java version must be Java 8 or later. It is recommended that you use Java 8u51 or later. |
| Maven | Maven 3 is required, and Maven 3.2.5 is recommended. If you are using Maven 3.3.x, the compilation can be successful, but some problems may occur in the process of shading some dependencies. |
| Git | The Flink code repository is https://github.com/apache/flink. |

We recommend using a stable branch released by the community, such as Release-1.6 or Release-1.7.

After configuring the tools listed above, run either of the following commands to compile Flink.

mvn clean install -DskipTests

# Or

mvn clean package -DskipTests

Below are common compilation parameters.

- -Dfast: mainly skips the QA plugins and JavaDocs during compilation.
- -Dhadoop.version=2.6.1: specifies the Hadoop version.
- --settings=${maven_file_path}: explicitly specifies the Maven settings.xml configuration file.
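As a rough illustration of how these parameters combine with the basic build command, the following Python sketch assembles the argument list. The function name `build_maven_command` and its keyword arguments are hypothetical helpers introduced only for this example. The flags themselves are the ones listed above.

```python
def build_maven_command(goal="install", fast=False, hadoop_version=None, settings_path=None):
    """Assemble the mvn argument list from the options described above."""
    cmd = ["mvn", "clean", goal, "-DskipTests"]
    if fast:
        # Skip QA plugins and JavaDocs
        cmd.append("-Dfast")
    if hadoop_version:
        cmd.append(f"-Dhadoop.version={hadoop_version}")
    if settings_path:
        # Explicit Maven settings.xml file
        cmd.append(f"--settings={settings_path}")
    return cmd


# Print the command so it can be copied into a shell
print(" ".join(build_maven_command(fast=True, hadoop_version="2.6.1")))
```

The list form can be passed to `subprocess.run` directly if you prefer to launch the build from a script.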

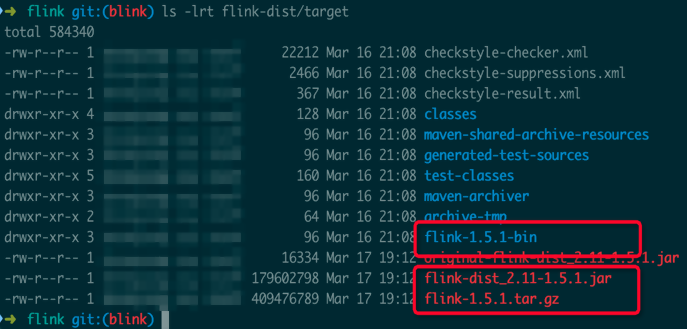

Once the compilation is complete, the following files appear in the flink-dist/target/ subdirectory of the current Flink code directory. The version number depends on the Flink code branch that you compile. The version number here is Flink 1.5.1.

Note the following three types of files.

| File | Description |
|---|---|
| flink-1.5.1.tar.gz | Binary package |
| flink-1.5.1-bin/flink-1.5.1 | Decompressed Flink binary directory |
| flink-dist_2.11-1.5.1.jar | Jar package that contains the core features of Flink |

Note: Users in China may encounter "Build Failure" (MapR-related errors) during compilation. This is usually caused by download failures of MapR-related dependencies. Even if the recommended settings.xml configuration is used (in which the Aliyun Maven source acts as a proxy for MapR-related dependencies), the download may still fail.

The problem mainly relates to the large size of the MapR Jar package. If you encounter this problem, try again. Before retrying, delete the corresponding directory in the local Maven repository as indicated by the failure message. Otherwise, Maven waits for the download to time out before it downloads the dependency to the local device again.

The IntelliJ IDEA IDE is the recommended IDE tool for Flink. The Eclipse IDE is not officially recommended, mainly because Scala IDE in Eclipse is incompatible with Scala in Flink.

If you need to develop Flink code, configure Checkstyle according to the configuration file in the tools/maven/ directory of the Flink code. Flink enforces code style checks during compilation, and compilation may fail if the code does not conform to the specification.

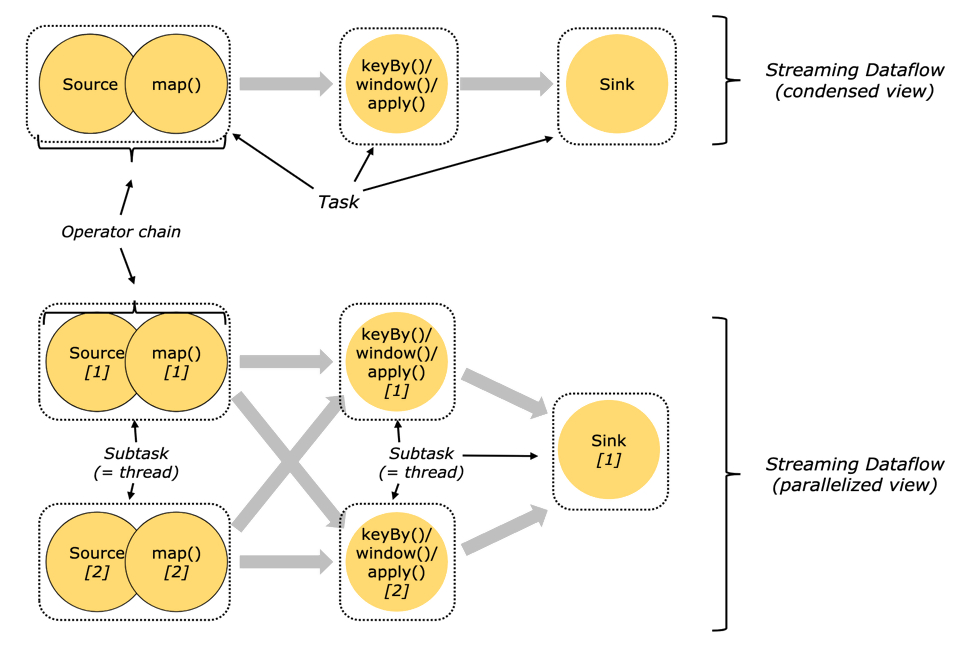

Running a Flink application is simple. However, before running one, you need to understand the components of the Flink runtime, because they affect how the application is configured. Figure 1 shows a data processing program written with the DataStream API. The operators that cannot be chained in the DAG are separated into different tasks. Tasks are the smallest unit of resource dispatching in Flink.

Figure 1. Parallel Dataflows

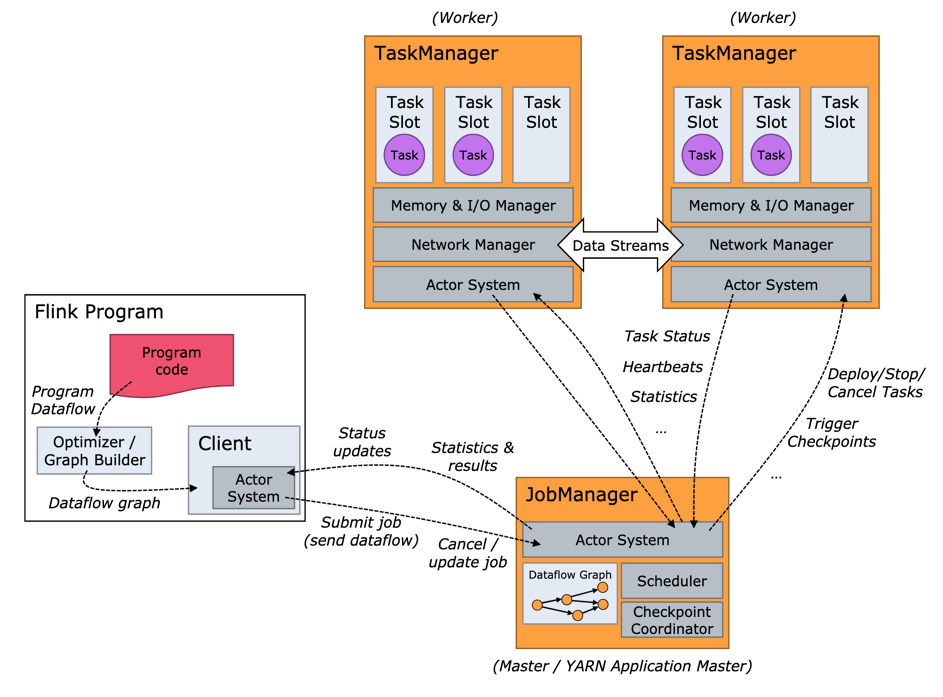

Next, Figure 2 shows that the Flink runtime environment consists of two types of processes: the JobManager and the TaskManager.

Figure 2. Flink Runtime Architecture Diagram

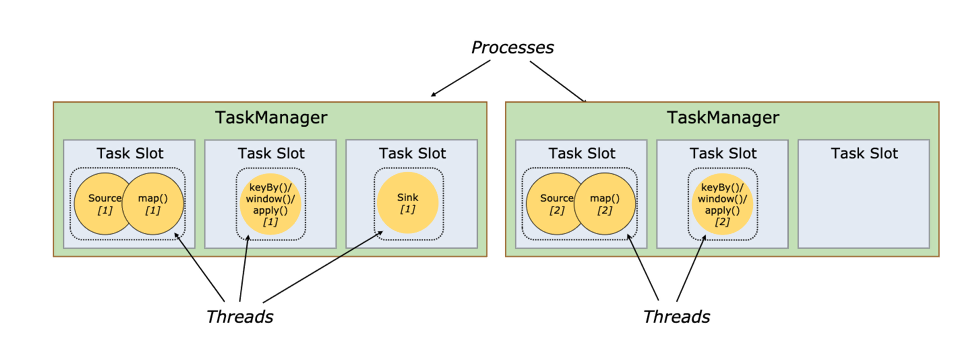

Figure 3 shows that a task slot is the smallest resource allocation unit in a TaskManager. The number of task slots in a TaskManager indicates how many tasks it can process concurrently. Note that multiple operators may execute in one task slot. Generally, these operators are chained and processed together.

Figure 3. Process
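The relationship between TaskManagers, task slots, and job parallelism can be sketched with a little arithmetic. The example below is a simplified model, not Flink's scheduler. It assumes the default slot sharing behavior, in which a job needs as many slots as its highest operator parallelism. The function names are hypothetical.

```python
def total_task_slots(num_taskmanagers, slots_per_taskmanager):
    """Total slots offered by the cluster."""
    return num_taskmanagers * slots_per_taskmanager


def can_schedule(parallelism, num_taskmanagers, slots_per_taskmanager):
    """Assumption: with slot sharing, a job needs `parallelism` slots in total."""
    return parallelism <= total_task_slots(num_taskmanagers, slots_per_taskmanager)


# One TaskManager with 4 slots can host a job with parallelism 4, but not 20
print(can_schedule(4, 1, 4))
print(can_schedule(20, 1, 4))
```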

Preparing a runtime environment includes the following:

The simplest way to run a Flink application is to run it in the local Flink cluster mode.

First, start the cluster using the following command.

./bin/start-cluster.sh

Visit http://127.0.0.1:8081/ to see the Web interface of Flink and try to submit a WordCount task.

./bin/flink run examples/streaming/WordCount.jar

Also, explore the information displayed on the Web interface. For example, view the stdout log of the TaskManager to see the computation result of the WordCount job.

Use the --input parameter to specify your own local file as the input, and then execute the following command.

./bin/flink run examples/streaming/WordCount.jar --input ${your_source_file}

Stop the cluster by executing the command below.

./bin/stop-cluster.sh

Use the conf/slaves file to configure the TaskManager deployment. By default, only one TaskManager process starts. To add a TaskManager process, add a "localhost" line to the file.

Run the ./bin/taskmanager.sh start command to add a new TaskManager process.

./bin/taskmanager.sh start|start-foreground|stop|stop-all

- conf/flink-conf.yaml

Use conf/flink-conf.yaml to configure the operation parameters of the JobManager (JM) and the TaskManager (TM). Common configurations include:

jobmanager.heap.mb: 1024

taskmanager.heap.mb: 1024

taskmanager.numberOfTaskSlots: 4

taskmanager.managed.memory.size: 256

Once the standalone cluster starts, analyze the operation of the two Flink related processes: the JobManager process and the TaskManager process. Run the jps command. Further, use the ps command to see the configuration of "-Xmx" and "-Xms" in the startup parameters of the process. Then try to modify several configurations in flink-conf.yaml and restart the standalone cluster to see what has changed.

Note that in the open-source branch of Blink, the memory calculation of the TaskManager is more refined than that of the current community version. The general method for calculating the heap memory limit (-Xmx) of the TaskManager process is shown below.

TotalHeapMemory = taskmanager.heap.mb + taskmanager.managed.memory.size + taskmanager.process.heap.memory.mb (the default value is 128 MB)
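To make the formula concrete, here is a small Python sketch that applies it to the sample values from the configuration shown earlier (taskmanager.heap.mb: 1024 and taskmanager.managed.memory.size: 256). The function name is hypothetical. The 128 MB default comes from the formula above.

```python
def blink_total_heap_mb(heap_mb, managed_memory_mb, process_heap_mb=128):
    """TotalHeapMemory as described for the Blink branch, in MB."""
    return heap_mb + managed_memory_mb + process_heap_mb


# 1024 + 256 + 128
print(blink_total_heap_mb(1024, 256))  # 1408
```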

In the latest Flink Community Release-1.7, the default memory configuration for JobManager and TaskManager is as follows.

The heap size for the JobManager JVM

jobmanager.heap.size: 1024m

The heap size for the TaskManager JVM

taskmanager.heap.size: 1024m

The taskmanager.heap.size configuration in Flink Community Release-1.7 actually refers to the total memory limit of the TaskManager process, instead of the memory limit of the Java heap. Use the above method to view the -Xmx configuration of the TaskManager process started by the Flink binary in Release-1.7.

Observe that the -Xmx value of the actual process is smaller than the configured taskmanager.heap.size, because the memory reserved for network buffers is deducted from it.

The memory used by the network buffers is direct memory, so it is not included in the heap memory limit.
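The deduction can be approximated with the sketch below. It is an estimate under stated assumptions, not the logic of the Flink startup scripts. It assumes that the network buffer memory is a fraction of the total, clamped between a minimum and a maximum, and it assumes defaults of 0.1, 64 MB, and 1024 MB. Check the values in your own flink-conf.yaml before relying on the result.

```python
def estimate_taskmanager_xmx_mb(total_mb, fraction=0.1, min_mb=64, max_mb=1024):
    """Estimate -Xmx by deducting network buffer memory from the total.

    fraction, min_mb and max_mb are assumed defaults, not values from this article.
    """
    # Clamp the network buffer memory between the minimum and the maximum
    network_mb = min(max(total_mb * fraction, min_mb), max_mb)
    return round(total_mb - network_mb)


print(estimate_taskmanager_xmx_mb(1024))
```

With taskmanager.heap.size set to 1024m, this estimate comes out at roughly 922 MB, which you can compare against the -Xmx value reported by the ps command.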

The startup logs of JobManager and TaskManager are available in the log subdirectory under the Flink binary directory. The files prefixed with flink-${user}-standalonesession-${id}-${hostname} in the log directory correspond to the output of JobManager. These include the following three files:

- flink-${user}-standalonesession-${id}-${hostname}.log: the log output of the code
- flink-${user}-standalonesession-${id}-${hostname}.out: the stdout output during process execution
- flink-${user}-standalonesession-${id}-${hostname}-gc.log: the GC log of the JVM

The files prefixed with flink-${user}-taskexecutor-${id}-${hostname} in the log directory correspond to the output of TaskManager. As with the JobManager, the output consists of the same three types of files.

The log configuration file is in the conf subdirectory of the Flink binary directory.

- log4j-cli.properties: The log configuration used by the Flink command-line client (such as executing the flink run command).
- log4j-yarn-session.properties: The log configuration used by the Flink command-line client while starting a YARN session (yarn-session.sh).
- log4j.properties: The log configuration used on JobManager and TaskManager, whether in Standalone or Yarn mode.

Three logback XML files correspond to these three log4j properties files respectively.

If you want to use logback, delete the corresponding log4j*.properties files. The correspondence is as follows.

- log4j-cli.properties -> logback-console.xml
- log4j-yarn-session.properties -> logback-yarn.xml
- log4j.properties -> logback.xml

Note that both flink-${user}-standalonesession-${id}-${hostname} and flink-${user}-taskexecutor-${id}-${hostname} contain ${id}. ${id} indicates the start order of all processes of this role (JobManager or TaskManager) on the local machine, and its default value is 0.
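The naming scheme of the log files can be summarized in a few lines of Python. This is only an illustration of the pattern described above. The function name and the sample user and host values are hypothetical.

```python
def flink_log_files(user, role, proc_id, hostname):
    """Return the three log file names for one process.

    role is "standalonesession" (JobManager) or "taskexecutor" (TaskManager);
    proc_id is the start order of the process of that role on the host.
    """
    prefix = f"flink-{user}-{role}-{proc_id}-{hostname}"
    return [f"{prefix}.log", f"{prefix}.out", f"{prefix}-gc.log"]


# The first TaskManager started by user "alice" on host "host1"
for name in flink_log_files("alice", "taskexecutor", 0, "host1"):
    print(name)
```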

Repeat the ./bin/start-cluster.sh command and check the Web page (or execute the jps command) to see what happens.

Check the startup script and analyze the cause. Further, repeat the ./bin/stop-cluster.sh command, and see what happens after each execution.

Note the following key points before deployment.

- The JAVA_HOME environment variable is configured on each host.
- The HADOOP_CONF_DIR environment variable is configured.
- Modify the conf/masters and conf/slaves configurations according to your cluster information.

Modify the conf/flink-conf.yaml configuration, and make sure that the address is the same as in the conf/masters file.

jobmanager.rpc.address: z05f06378.sqa.zth.tbsite.net

Make sure that the configuration files in the conf subdirectory of the Flink binary directory are the same on all hosts, especially the following three files.

conf/masters

conf/slaves

conf/flink-conf.yaml

Start the Flink cluster.

./bin/start-cluster.sh

Submit a WordCount job.

./bin/flink run examples/streaming/WordCount.jar

Upload the input file for the WordCount job.

hdfs dfs -copyFromLocal story /test_dir/input_dir/story

Submit a WordCount job to read and write HDFS.

./bin/flink run examples/streaming/WordCount.jar --input hdfs:///test_dir/input_dir/story --output hdfs:///test_dir/output_dir/output

Increase the parallelism of the WordCount job (note that the submission fails if an output file with the same name already exists).

./bin/flink run examples/streaming/WordCount.jar --input hdfs:///test_dir/input_dir/story --output hdfs:///test_dir/output_dir/output --parallelism 20

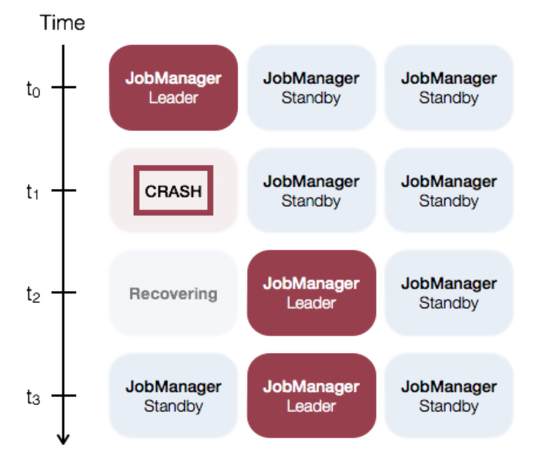

The Flink runtime architecture in Figure 2 shows that the JobManager is the role most likely to make the entire system unavailable. If a TaskManager fails and there are enough resources, you only need to dispatch the related tasks to other idle task slots and then recover the job from the checkpoint.

However, if only one JobManager is configured in the current cluster, once the JobManager fails, you must wait for the JobManager to recover. If the recovery time is too long, the entire job may fail.

Therefore, if the Standalone mode is used for a production business, you need to deploy and configure High Availability, so that multiple JobManagers can be on standby to ensure continuous service of the JobManager.

Figure 4. Flink JobManager HA diagram

Note

Flink currently supports ZooKeeper-based HA. If ZK is not deployed in your cluster, Flink provides a script to start the ZooKeeper cluster. First, modify the configuration file conf/zoo.cfg, and configure the server.X=addressX:peerPort:leaderPort based on the number of ZooKeeper server hosts that you want to deploy. "X" is the unique ID of the ZooKeeper server and must be a number.

# The port at which the clients will connect

clientPort=3181

server.1=z05f06378.sqa.zth.tbsite.net:4888:5888

server.2=z05c19426.sqa.zth.tbsite.net:4888:5888

server.3=z05f10219.sqa.zth.tbsite.net:4888:5888

Then, start ZooKeeper.

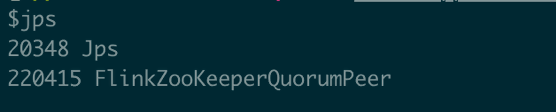

./bin/start-zookeeper-quorum.sh

Execute the jps command to verify that the ZK process has started.

Execute the command to stop the ZooKeeper cluster.

./bin/stop-zookeeper-quorum.sh

Modify the conf/masters file and add a JobManager.

$ cat conf/masters

z05f06378.sqa.zth.tbsite.net:8081

z05c19426.sqa.zth.tbsite.net:8081

The previously modified conf/slaves file remains unchanged.

$ cat conf/slaves

z05f06378.sqa.zth.tbsite.net

z05c19426.sqa.zth.tbsite.net

z05f10219.sqa.zth.tbsite.net

Modify the conf/flink-conf.yaml file.

Configure the high-availability mode

high-availability: zookeeper

Configure the ZooKeeper Quorum (the hostname and port must be configured based on the actual ZK configuration)

high-availability.zookeeper.quorum: z05f02321.sqa.zth.tbsite.net:2181,z05f10215.sqa.zth.tbsite.net:2181

Set the ZooKeeper root directory (optional)

high-availability.zookeeper.path.root: /test_dir/test_standalone2_root

It is equivalent to the namespace of the ZK node created in the standalone cluster (optional)

high-availability.cluster-id: /test_dir/test_standalone2

The metadata of the JobManager is stored in DFS. A pointer pointing to the DFS path is saved on ZK

high-availability.storageDir: hdfs:///test_dir/recovery2/

Note that in the HA mode, the following two configurations in conf/flink-conf.yaml no longer take effect.

jobmanager.rpc.address

jobmanager.rpc.port
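The HA settings above can also be generated from a host list, which helps keep the key: value syntax consistent across hosts. The sketch below is a hypothetical helper, not part of Flink. The host names in the example are placeholders, and the configuration keys are the ones shown above.

```python
def render_ha_config(quorum_hosts, zk_port, root_path, cluster_id, storage_dir):
    """Return flink-conf.yaml lines for ZooKeeper-based HA."""
    # The quorum is a comma-separated list of host:port pairs
    quorum = ",".join(f"{host}:{zk_port}" for host in quorum_hosts)
    return [
        "high-availability: zookeeper",
        f"high-availability.zookeeper.quorum: {quorum}",
        f"high-availability.zookeeper.path.root: {root_path}",
        f"high-availability.cluster-id: {cluster_id}",
        f"high-availability.storageDir: {storage_dir}",
    ]


lines = render_ha_config(
    ["zk1.example.com", "zk2.example.com"],  # placeholder hosts
    2181,
    "/test_dir/test_standalone2_root",
    "/test_dir/test_standalone2",
    "hdfs:///test_dir/recovery2/",
)
print("\n".join(lines))
```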

After the modification, make sure that the configuration is synchronized to other hosts.

Start the ZooKeeper cluster.

./bin/start-zookeeper-quorum.sh

Start the standalone cluster (make sure that the previous standalone cluster has been stopped).

./bin/start-cluster.sh

Open the JobManager Web pages on the two master nodes respectively.

Observe that both pages eventually redirect to the same address, which is the host where the current leading JobManager is located. The other host is where the standby JobManager is located. The HA configuration in the Standalone mode is now complete.

Next, test and verify the effectiveness of HA. After determining the host of the leading JobManager, kill the leading JobManager process. For example, if the current leading JobManager is running on the host z05c19426.sqa.zth.tbsite.net, you can kill the process.

Then, open the two links again.

Note that the latter link can no longer be displayed, while the former link can, indicating that a switch between the leading and standby JobManagers has occurred.

Then, restart the previous leading JobManager.

./bin/jobmanager.sh start z05c19426.sqa.zth.tbsite.net 8081

If you open the link http://z05c19426.sqa.zth.tbsite.net:8081 again, you should see that this link will open the page http://z05f06378.sqa.zth.tbsite.net:8081.

This indicates that the JobManager has completed a failover recovery.

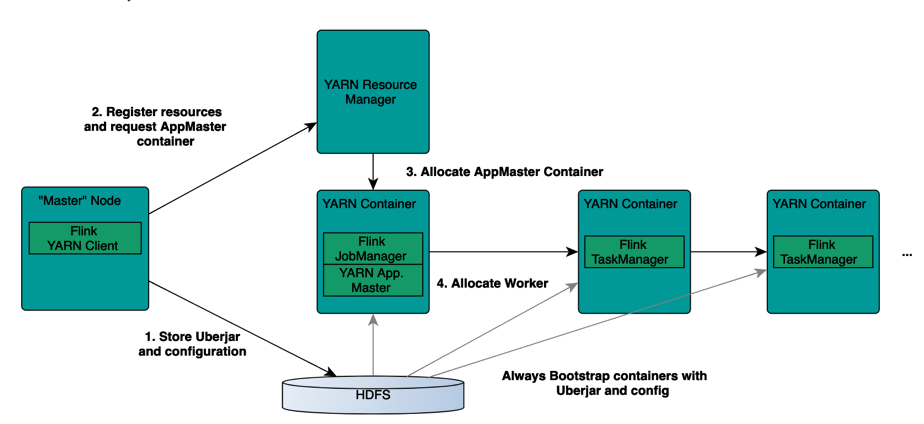

Figure 5. Flink Yarn Deployment Flowchart

Compared to Standalone mode, a Flink job has the following advantages in Yarn mode:

View command parameters.

./bin/yarn-session.sh -h

Create a Flink cluster in the Yarn mode.

./bin/yarn-session.sh -n 4 -jm 1024m -tm 4096m

The parameters used include the following:

- -n,--container: Number of TaskManagers
- -jm,--jobManagerMemory: Memory for JobManager Container with an optional unit (default: MB)
- -tm,--taskManagerMemory: Memory per TaskManager Container with optional unit (default: MB)
- -qu,--queue: Specify YARN queue.
- -s,--slots: Number of slots per TaskManager
- -t,--ship: Ship files in the specified directory (t for transfer)

Submit a Flink job to the Flink cluster.

./bin/flink run examples/streaming/WordCount.jar --input hdfs:///test_dir/input_dir/story --output hdfs:///test_dir/output_dir/output

Although the command does not specify the corresponding Yarn application, the Flink job is submitted to the corresponding Flink cluster, because the file /tmp/.yarn-properties-${user} holds the cluster information of the most recently created Yarn session.

Therefore, if the same user creates another Yarn session on the same host, this file will be overwritten.

If /tmp/.yarn-properties-${user} is deleted or the job is submitted from another host, the job can still be submitted to the expected Yarn session, provided that the high-availability.cluster-id parameter is configured. In that case, the client obtains the address and port of the JobManager from ZooKeeper and submits the job.

If the Yarn session is not configured with HA, you must specify the application ID on Yarn in the Flink job submission command and pass it through the -yid parameter.

./bin/flink run -yid application_1548056325049_0048 examples/streaming/WordCount.jar --input hdfs:///test_dir/input_dir/story --output hdfs:///test_dir/output_dir/output

Note that the TaskManager is released soon after a task is completed, and it is started again the next time a task is submitted. To extend the timeout period of idle TaskManagers, configure the following parameter in the conf/flink-conf.yaml file. The value is in milliseconds.

slotmanager.taskmanager-timeout: 30000L # deprecated, used in release-1.5

resourcemanager.taskmanager-timeout: 30000L

Run the following command if you only want to run a single Flink job and then exit.

./bin/flink run -m yarn-cluster -yn 2 examples/streaming/WordCount.jar --input hdfs:///test_dir/input_dir/story --output hdfs:///test_dir/output_dir/output

Common configurations include:

- -yn,--yarncontainer: Number of TaskManagers
- -yqu,--yarnqueue: Specify YARN queue.
- -ys,--yarnslots: Number of slots per TaskManager

View the parameters available for the run command through the help option.

./bin/flink run -h

The parameters prefixed with -y and --yarn in the "Options for yarn-cluster mode" section of the ./bin/flink run -h output correspond one-to-one to those of the ./bin/yarn-session.sh -h command, and their semantics are basically the same.

The relationship between the -n (in Yarn Session mode), -yn (in Yarn Single Job mode), and -p parameters is as follows.

./bin/flink run -yd -m yarn-cluster xxx

First, make sure that the Yarn cluster is started with the following configuration in the yarn-site.xml file. This configuration is the YARN cluster-level upper limit on the number of times an ApplicationMaster (AM) can be restarted.

<property>

<name>yarn.resourcemanager.am.max-attempts</name>

<value>100</value>

</property>

Then, in the conf/flink-conf.yaml file, configure the number of times the JobManager for this Flink job can be restarted.

yarn.application-attempts: 10 # 1+ 9 retries
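The interaction between the two settings can be sketched as follows. The example assumes that the YARN-side limit (yarn.resourcemanager.am.max-attempts) caps whatever Flink requests through yarn.application-attempts. The function name is hypothetical.

```python
def effective_am_attempts(flink_application_attempts, yarn_am_max_attempts):
    """Attempts allowed in practice: the YARN limit acts as an upper bound."""
    return min(flink_application_attempts, yarn_am_max_attempts)


# Values from the configuration above: 10 requested by Flink, 100 allowed by YARN
attempts = effective_am_attempts(10, 100)
retries = attempts - 1  # the first attempt is the initial start
print(attempts, retries)  # 10 9
```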

Finally, configure the ZK-related configuration in the conf/flink-conf.yaml file. The configuration methods are basically the same as those of Standalone HA, as shown below.

Configure the high-availability mode

high-availability: zookeeper

Configure the ZooKeeper Quorum (the hostname and port must be configured based on the actual ZK configuration)

high-availability.zookeeper.quorum: z05f02321.sqa.zth.tbsite.net:2181,z05f10215.sqa.zth.tbsite.net:2181

Set the ZooKeeper root directory (optional)

high-availability.zookeeper.path.root: /test_dir/test_standalone2_root

Delete the configuration

high-availability.cluster-id: /test_dir/test_standalone2

The metadata of the JobManager is stored in DFS. A pointer pointing to the DFS path is saved on ZK

high-availability.storageDir: hdfs:///test_dir/recovery2/

It is better to remove the high-availability.cluster-id configuration, because in Yarn (and Mesos) mode, if the cluster-id is not configured, it will be configured as the Application ID on Yarn to ensure uniqueness.
