By Gongqi

The eighth article of this series (Submission and Playback of Transaction Logs) introduced the design concept of the log module and the life cycle of a log. This ninth article turns to the storage layer and explains the storage format of macro blocks.

A macro block sits between an SSTable and a micro block: an SSTable is made up of macro blocks, and each macro block holds multiple micro blocks. In OceanBase, a macro block is a fixed-length data block of 2MB.

As is well known, the micro block is the minimum unit of read I/O in OceanBase. Micro-block reads are on the critical path of user requests, so a micro block cannot be too large if requests are to be answered quickly. Its default size is therefore generally no more than 16KB. The macro block, by contrast, is the minimum unit of write I/O, and macro-block writes are not on the critical path of user requests, so a macro block can be as large as 2MB. The larger size maximizes disk throughput and speeds up operations such as compaction, migration, replication, and bad-block checking.
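The following sketch puts the two sizes side by side using the defaults quoted above. It assumes a 2MB macro block and 16KB micro blocks. It ignores the header, trailer, padding, and compression, so the result is only a rough estimate. The helper name is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Defaults quoted in the text (assumed here, not read from OceanBase).
constexpr int64_t kMacroBlockSize = 2 * 1024 * 1024;  // fixed size; minimum unit of write I/O
constexpr int64_t kMicroBlockSize = 16 * 1024;        // typical upper bound; minimum unit of read I/O

// Hypothetical helper: how many full-size micro blocks fit into one macro block,
// ignoring the header, trailer, padding, and compression.
int64_t micro_blocks_per_macro_block(int64_t macro_size, int64_t micro_size) {
  assert(micro_size > 0);
  return macro_size / micro_size;
}
```

With these numbers, a point read fetches 16KB, roughly 1/128 of a macro block. A compaction, in contrast, writes each 2MB macro block as one large I/O.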

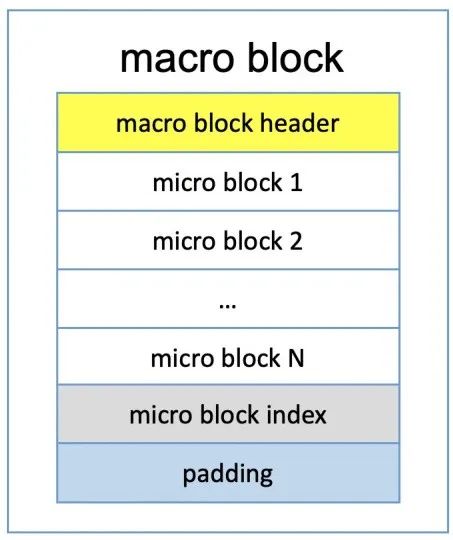

Refer to the figure for the simple structure of a macro block. The detailed format is described below.

Note: All the instructions and code in this article are based on the OceanBase open-source code of the v3.1.0_CE_BP1 version.

Currently, OceanBase supports many types of macro blocks, defined in enum MacroBlockType. There are more than a dozen types in total. However, only three types of data macro blocks are commonly used: regular data macro blocks (SSTableData), LobData, and BloomFilterData.

This article mainly introduces the first type, the regular data macro block. LobData and BloomFilterData macro blocks will be explained in later articles.

Generally speaking, a macro block follows a classic storage layout: header + payload + trailer + padding.

The following sections describe the storage format of each part in turn.
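A rough mental model of this layout is to compute where each part starts. The struct and field names below are hypothetical. Only the ordering comes from the text: header, then payload, then trailer, then implicit padding.

```cpp
#include <cassert>
#include <cstdint>

constexpr int64_t kMacroBlockSize = 2 * 1024 * 1024;

// Hypothetical summary of the parts of one macro block, in order:
// header | payload (micro blocks) | trailer (micro block index) | padding
struct MacroBlockLayout {
  int64_t header_size;   // common header + SSTable macro block header + per-column arrays
  int64_t payload_size;  // micro blocks stored back to back
  int64_t trailer_size;  // micro block index

  int64_t payload_offset() const { return header_size; }
  int64_t trailer_offset() const { return header_size + payload_size; }

  // Bytes that carry data; the rest of the fixed 2MB block is padding.
  int64_t used_size() const {
    const int64_t used = header_size + payload_size + trailer_size;
    assert(used <= kMacroBlockSize);
    return used;
  }
};
```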

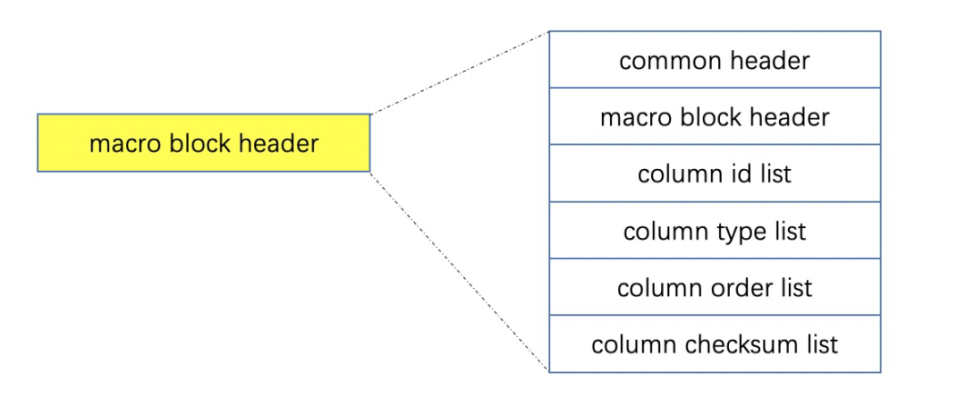

The metadata of a macro block is recorded in its header. It consists of multiple parts, as shown in the following figure:

Each part of the header of a macro block stores different metadata. The specific meaning is listed below:

Please refer to the following code for the storage format of the macro block header:

```cpp
// src/storage/blocksstable/ob_macro_block.cpp

// This function points the macro block header structures at different offsets of the buffer in advance,
// so no serialization is required later.
// It is called when the macro block is initialized. The header member values are finalized
// after subsequent data is written.
int ObMacroBlock::reserve_header(const ObDataStoreDesc& spec)
{
  int ret = OB_SUCCESS;
  common_header_.reset();
  common_header_.set_attr(ObMacroBlockCommonHeader::SSTableData);
  common_header_.set_data_version(spec.data_version_);
  common_header_.set_reserved(0);
  const int64_t common_header_size = common_header_.get_serialize_size();
  // data_ is of type ObSelfBufferWriter, a memory buffer that grows automatically.
  // For the ObSelfBufferWriter implementation, see src/storage/blocksstable/ob_data_buffer.h.
  MEMSET(data_.data(), 0, data_.capacity());
  // 1. The first part of data_ is the ObMacroBlockCommonHeader.
  if (OB_FAIL(data_.advance(common_header_size))) {
    STORAGE_LOG(WARN, "data buffer is not enough for common header.", K(ret), K(common_header_size));
  }
  if (OB_SUCC(ret)) {
    int64_t column_count = spec.row_column_count_;
    int64_t rowkey_column_count = spec.rowkey_column_count_;
    int64_t column_checksum_size = sizeof(int64_t) * column_count;
    int64_t column_id_size = sizeof(uint16_t) * column_count;
    int64_t column_type_size = sizeof(ObObjMeta) * column_count;
    int64_t column_order_size = sizeof(ObOrderType) * column_count;
    int64_t macro_block_header_size = sizeof(ObSSTableMacroBlockHeader);
    // 2. The second part of data_ is the ObSSTableMacroBlockHeader.
    header_ = reinterpret_cast<ObSSTableMacroBlockHeader*>(data_.current());
    // 3. The third part of data_ is column_ids_.
    column_ids_ = reinterpret_cast<uint16_t*>(data_.current() + macro_block_header_size);
    // 4. The fourth part of data_ is column_types_.
    column_types_ = reinterpret_cast<ObObjMeta*>(data_.current() + macro_block_header_size + column_id_size);
    // 5. The fifth part of data_ is column_orders_.
    column_orders_ =
        reinterpret_cast<ObOrderType*>(data_.current() + macro_block_header_size + column_id_size + column_type_size);
    // 6. The sixth part of data_ is column_checksum_.
    column_checksum_ = reinterpret_cast<int64_t*>(
        data_.current() + macro_block_header_size + column_id_size + column_type_size + column_order_size);
    macro_block_header_size += column_checksum_size + column_id_size + column_type_size + column_order_size;
    // for compatibility, fill 0 to checksum and this will be serialized to disk
    for (int i = 0; i < column_count; i++) {
      column_checksum_[i] = 0;
    }
    // 7. The rest of data_ is reserved for micro blocks.
    if (OB_FAIL(data_.advance(macro_block_header_size))) {
      STORAGE_LOG(WARN, "macro_block_header_size out of data buffer.", K(ret));
    } else {
      // Initialize the member variables in the header.
      memset(header_, 0, macro_block_header_size);
      header_->header_size_ = static_cast<int32_t>(macro_block_header_size);
      header_->version_ = SSTABLE_MACRO_BLOCK_HEADER_VERSION_v3;
      header_->magic_ = SSTABLE_DATA_HEADER_MAGIC;
      header_->attr_ = 0;
      header_->table_id_ = spec.table_id_;
      header_->data_version_ = spec.data_version_;
      header_->column_count_ = static_cast<int32_t>(column_count);
      header_->rowkey_column_count_ = static_cast<int32_t>(rowkey_column_count);
      header_->column_index_scale_ = static_cast<int32_t>(spec.column_index_scale_);
      header_->row_store_type_ = static_cast<int32_t>(spec.row_store_type_);
      header_->micro_block_size_ = static_cast<int32_t>(spec.micro_block_size_);
      header_->micro_block_data_offset_ = header_->header_size_ + static_cast<int32_t>(common_header_size);
      memset(header_->compressor_name_, 0, OB_MAX_HEADER_COMPRESSOR_NAME_LENGTH);
      MEMCPY(header_->compressor_name_, spec.compressor_name_, strlen(spec.compressor_name_));
      header_->data_seq_ = 0;
      header_->partition_id_ = spec.partition_id_;
      // copy column id & type array;
      for (int64_t i = 0; i < header_->column_count_; ++i) {
        column_ids_[i] = static_cast<int16_t>(spec.column_ids_[i]);
        column_types_[i] = spec.column_types_[i];
        column_orders_[i] = spec.column_orders_[i];
      }
    }
  }
  if (OB_SUCC(ret)) {
    // Offset at which the micro block data starts in data_.
    data_base_offset_ = header_->header_size_ + common_header_size;
  }
  return ret;
}
```

As the code shows, the macro block header has these characteristics. Its structures are mapped directly onto fixed offsets of the write buffer, so no separate serialization step is needed. Its size also grows with the column count, because a column ID, type, order, and checksum are stored for every column.
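The offset arithmetic in reserve_header() can be replayed outside OceanBase. The sketch below is a hypothetical helper that lays out the same regions in the same order. The element sizes are parameters because the real values (for example, sizeof(ObObjMeta)) come from the actual types. The numbers in the test are made up.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical element sizes; in reserve_header() these come from sizeof(...) on the real types.
struct HeaderElementSizes {
  int64_t fixed_header;  // sizeof(ObSSTableMacroBlockHeader)
  int64_t column_id;     // sizeof(uint16_t)
  int64_t column_type;   // sizeof(ObObjMeta)
  int64_t column_order;  // sizeof(ObOrderType)
  int64_t checksum;      // sizeof(int64_t)
};

// Offsets relative to the start of the SSTable macro block header (just after the common header).
struct HeaderOffsets {
  int64_t column_ids;
  int64_t column_types;
  int64_t column_orders;
  int64_t column_checksums;
  int64_t header_size;  // corresponds to header_size_
};

// Same order as reserve_header(): fixed header, column IDs, types, orders, checksums.
HeaderOffsets compute_header_offsets(const HeaderElementSizes& s, int64_t column_count) {
  assert(column_count >= 0);
  HeaderOffsets o{};
  o.column_ids = s.fixed_header;
  o.column_types = o.column_ids + s.column_id * column_count;
  o.column_orders = o.column_types + s.column_type * column_count;
  o.column_checksums = o.column_orders + s.column_order * column_count;
  o.header_size = o.column_checksums + s.checksum * column_count;
  return o;
}

// Mirrors micro_block_data_offset_ = header_size_ + common_header_size.
int64_t micro_block_data_offset(int64_t common_header_size, const HeaderOffsets& o) {
  return common_header_size + o.header_size;
}
```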

The payload of a macro block consists of the data of multiple micro blocks. This article does not describe the micro-block format in detail; the following code gives an outline of it.

```cpp
// src/storage/blocksstable/ob_micro_block_writer.h

// Layout of a micro block in memory and of the built (persisted) output:
// memory
// |- row data buffer
// |- ObMicroBlockHeader
// |- row data
// |- row index buffer
// |- ObRowIndex
//
// build output
// |- compressed data
// |- ObMicroBlockHeader
// |- row data
// |- RowIndex
class ObMicroBlockWriter : public ObIMicroBlockWriter {
public:
  virtual int append_row(const storage::ObStoreRow& row) override;
  virtual int build_block(char*& buf, int64_t& size) override;
  virtual void reuse() override;
  virtual int64_t get_block_size() const override;
  virtual int64_t get_row_count() const override;
  virtual int64_t get_data_size() const override;
  virtual int64_t get_column_count() const override;
  virtual common::ObString get_last_rowkey() const override;
  void reset();
};
```

The trailer of a macro block mainly records the index information of each micro block, along with the following information:

In addition, if it is a multi-version macro block, the trailer includes two pieces of multi-version-related information.

The index information (offset, length, end key) of each micro block is recorded so that the micro block containing a given row key can be located quickly. That micro block can then be read on its own, without reading the entire macro block.
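Below is a minimal sketch of such a lookup. It assumes the trailer has already been decoded into a sorted in-memory array. Micro blocks are written in row key order, so a binary search over the end keys finds the only micro block that may contain a key. Row keys are modeled as std::string here, which is a simplification. The type and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>
#include <vector>

// Hypothetical decoded form of one trailer entry.
struct MicroBlockIndexEntry {
  int32_t data_offset;  // where the micro block starts inside the macro block
  int32_t data_size;    // length of the micro block
  std::string end_key;  // largest row key in the micro block (simplified to a string)
};

// Returns the position of the only micro block that may contain `rowkey`:
// the first one whose end key is >= rowkey. Entries must be sorted by end key,
// which holds because micro blocks are written in row key order.
std::optional<std::size_t> find_micro_block(const std::vector<MicroBlockIndexEntry>& index,
                                            const std::string& rowkey) {
  assert(std::is_sorted(index.begin(), index.end(),
                        [](const MicroBlockIndexEntry& a, const MicroBlockIndexEntry& b) {
                          return a.end_key < b.end_key;
                        }));
  auto it = std::lower_bound(index.begin(), index.end(), rowkey,
                             [](const MicroBlockIndexEntry& e, const std::string& key) {
                               return e.end_key < key;
                             });
  if (it == index.end()) {
    return std::nullopt;  // rowkey is beyond the last key in this macro block
  }
  return static_cast<std::size_t>(it - index.begin());
}
```

With the entry found, a reader only needs to issue one read of data_size bytes at data_offset instead of reading the whole 2MB block.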

The code that writes the trailer's index entries is listed below:

```cpp
// src/storage/blocksstable/ob_micro_block_index_writer.cpp
int ObMicroBlockIndexWriter::add_entry(
    const ObString& rowkey, const int64_t data_offset, bool can_mark_deletion, const int32_t delta)
{
  int ret = OB_SUCCESS;
  int32_t endkey_offset = static_cast<int32_t>(buffer_[ENDKEY_BUFFER_IDX].length());
  // Some parameter-checking code has been removed.
  if (OB_FAIL(buffer_[INDEX_BUFFER_IDX].write(static_cast<int32_t>(data_offset)))) {
    STORAGE_LOG(WARN, "index buffer fail to write data_offset.", K(ret), K(data_offset));
  } else if (OB_FAIL(buffer_[INDEX_BUFFER_IDX].write(endkey_offset))) {
    STORAGE_LOG(WARN, "index buffer fail to write endkey_offset.", K(ret), K(endkey_offset));
  } else if (OB_FAIL(buffer_[ENDKEY_BUFFER_IDX].write(rowkey.ptr(), rowkey.length()))) {
    STORAGE_LOG(WARN, "data buffer fail to writer rowkey.", K(ret), K(rowkey));
  } else if (is_multi_version_minor_merge_ &&
             OB_FAIL(buffer_[MARK_DELETE_BUFFER_IDX].write(static_cast<uint8_t>(can_mark_deletion)))) {
    STORAGE_LOG(WARN, "fail to write mark deletion", K(ret), K(can_mark_deletion));
  } else if (is_multi_version_minor_merge_ && OB_FAIL(buffer_[DELTA_BUFFER_IDX].write(delta))) {
    STORAGE_LOG(WARN, "failed to write delta", K(ret));
  } else {
    ++micro_block_cnt_;
  }
  return ret;
}
```

The trailer is serialized when the macro block is finally flushed, in ObMacroBlock::flush. See ObMacroBlock::build_index for the serialization details.
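The two buffers filled by add_entry() can be modeled in a few lines. The sketch below is a simplified, hypothetical writer that drops the multi-version buffers and stores integers in host byte order. It assumes that the end key of entry i ends where the end key of entry i + 1 begins. This is one natural way to read the layout back, not logic taken from ObMacroBlock::build_index.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical, simplified model of the buffers filled by ObMicroBlockIndexWriter::add_entry():
//   index_   : (data_offset, endkey_offset) pairs, one per micro block
//   endkeys_ : the end row keys stored back to back
// The multi-version buffers (mark deletion, delta) are left out.
class SimpleIndexWriter {
 public:
  void add_entry(const std::string& rowkey, int64_t data_offset) {
    const int32_t endkey_offset = static_cast<int32_t>(endkeys_.size());
    write_int32(static_cast<int32_t>(data_offset));
    write_int32(endkey_offset);
    endkeys_.insert(endkeys_.end(), rowkey.begin(), rowkey.end());
    ++count_;
  }

  int64_t count() const { return count_; }

  int32_t data_offset(int64_t i) const {
    assert(i >= 0 && i < count_);
    return read_int32(i * kEntrySize);
  }

  // Assumption: entry i's end key ends where entry i + 1's end key begins,
  // and the last key ends at the end of the end key buffer.
  std::string end_key(int64_t i) const {
    assert(i >= 0 && i < count_);
    const int32_t key_begin = read_int32(i * kEntrySize + kEndkeyField);
    const int32_t key_end = (i + 1 < count_) ? read_int32((i + 1) * kEntrySize + kEndkeyField)
                                             : static_cast<int32_t>(endkeys_.size());
    return std::string(endkeys_.begin() + key_begin, endkeys_.begin() + key_end);
  }

 private:
  static constexpr int64_t kEntrySize = 2 * sizeof(int32_t);  // data_offset + endkey_offset
  static constexpr int64_t kEndkeyField = sizeof(int32_t);    // endkey_offset follows data_offset

  void write_int32(int32_t value) {
    char bytes[sizeof(value)];
    std::memcpy(bytes, &value, sizeof(value));
    index_.insert(index_.end(), bytes, bytes + sizeof(value));
  }

  int32_t read_int32(int64_t pos) const {
    int32_t value = 0;
    std::memcpy(&value, index_.data() + pos, sizeof(value));
    return value;
  }

  std::vector<char> index_;
  std::vector<char> endkeys_;
  int64_t count_ = 0;
};
```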

With the index of the micro block in the trailer, there are two ways to read the data of each micro block in the macro block:

A 2MB macro block in OceanBase is often not completely filled, for various reasons. For example, there may not be enough data, or about 10% of the space may be deliberately reserved for subsequent inserts to avoid excessive macro-block splitting. In such cases, padding is needed to make up the 2MB. Padding is essentially wasted space, but it is worth it for good performance and a simpler design.

How is padding implemented for a macro block?

OceanBase does not implement padding explicitly. Every macro block has a fixed size of 2MB, and the header records the actual size of the data in it. OceanBase does not write zeros or any other filler into the padding area.
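The sketch below illustrates implicit padding together with the 10% reservation mentioned above. The reservation check is a hypothetical simplification, because this article does not show the exact rule OceanBase uses to decide that a macro block is full. The point is that nothing is written for padding: it is simply the unused tail of the fixed 2MB block.

```cpp
#include <cassert>
#include <cstdint>

constexpr int64_t kMacroBlockSize = 2 * 1024 * 1024;

// Hypothetical check: may another micro block of next_size bytes be appended
// when reserve_percent of the block is kept free for later inserts?
bool can_append(int64_t used_size, int64_t next_size, int64_t reserve_percent) {
  assert(reserve_percent >= 0 && reserve_percent < 100);
  const int64_t limit = kMacroBlockSize * (100 - reserve_percent) / 100;
  return used_size + next_size <= limit;
}

// Padding is implicit: the header records how many bytes are really used,
// and the rest of the fixed 2MB block is never written or read.
int64_t implicit_padding(int64_t used_size) {
  assert(used_size >= 0 && used_size <= kMacroBlockSize);
  return kMacroBlockSize - used_size;
}
```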

The low-level reads and writes of data blocks are implemented by classes that inherit from ObStorageFile. The external interface of this class is shown below:

```cpp
// src/storage/blocksstable/ob_store_file_system.h
class ObStorageFile {
public:
  ...
  // Asynchronous read interface for both macro blocks and micro blocks.
  // Whether a micro block or a whole macro block is read is determined by offset_ and size_ in read_info.
  // The call is asynchronous; once the data has been read, the caller is notified through macro_handle.
  virtual int async_read_block(const ObMacroBlockReadInfo& read_info, ObMacroBlockHandle& macro_handle) = 0;
  // Asynchronous write interface for macro blocks.
  virtual int async_write_block(const ObMacroBlockWriteInfo& write_info, ObMacroBlockHandle& macro_handle) = 0;
  // The synchronous read/write interfaces are generally implemented on top of the two asynchronous ones above.
  virtual int write_block(const ObMacroBlockWriteInfo& write_info, ObMacroBlockHandle& macro_handle) = 0;
  virtual int read_block(const ObMacroBlockReadInfo& read_info, ObMacroBlockHandle& macro_handle) = 0;
  ...
};
```
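The pattern of building synchronous calls on asynchronous ones can be sketched with the C++ standard library. In the toy class below, std::future plays the role of ObMacroBlockHandle, and an in-memory string stands in for the block file. The class and its methods are hypothetical and only mirror the shape of async_read_block() and read_block().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <future>
#include <string>
#include <utility>

class ToyStorageFile {
 public:
  explicit ToyStorageFile(std::string data) : data_(std::move(data)) {}

  // Asynchronous read: the returned future is fulfilled by a background task,
  // similar in spirit to waiting on a macro block handle.
  std::future<std::string> async_read(int64_t offset, int64_t size) const {
    assert(offset >= 0 && size >= 0 && offset + size <= static_cast<int64_t>(data_.size()));
    return std::async(std::launch::async, [this, offset, size] {
      return data_.substr(static_cast<std::size_t>(offset), static_cast<std::size_t>(size));
    });
  }

  // Synchronous read built on the asynchronous one: issue the read, then wait for it.
  std::string read_block(int64_t offset, int64_t size) const {
    return async_read(offset, size).get();
  }

 private:
  std::string data_;
};
```

Keeping only the asynchronous path as the primitive means there is a single I/O code path. Callers that need synchronous semantics simply wait on the result.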

The concrete read/write interfaces are implemented in ObLocalStorageFile, a derived class of ObStorageFile. See src/storage/blocksstable/ob_local_file_system.h for its implementation.

OceanBase writes macro blocks mainly during compaction, data migration, and data replication. Writes initiated by users go directly to the WAL and do not directly trigger macro-block writes. The basic write operations on macro blocks are implemented in class ObMacroBlock, whose main external interfaces are listed below:

```cpp
// src/storage/blocksstable/ob_macro_block.h
class ObMacroBlock {
public:
  // Initializes the macro block structure:
  // 1. Calls reserve_header to map the macro block header onto the buffer (see the reserve_header code above).
  // 2. Calls init_row_reader to initialize the row reader according to ObRowStoreType.
  int init(ObDataStoreDesc& spec);
  // Appends a micro block to the macro block: copies the serialized micro block data
  // into the macro block buffer and updates the metadata in the macro block header.
  int write_micro_block(const ObMicroBlockDesc& micro_block_desc, int64_t& data_offset);
  // Flushes a macro block that is full (or will receive no more data) to disk:
  // 1. Serializes the common header;
  // 2. Builds the metadata in the header and the trailer;
  // 3. Calls the underlying ObStorageFile::async_write_block to write the data to disk asynchronously;
  // 4. After the macro block data has been written, notifies the upper layer through macro_handle.
  int flush(const int64_t cur_macro_seq, ObMacroBlockHandle& macro_handle, ObMacroBlocksWriteCtx& block_write_ctx);
  // Merges two consecutive macro blocks. At the end of compaction, checks whether the last,
  // partly filled macro block can be merged into the previous one, and merges it if the
  // previous macro block has enough space. The data of the two macro blocks is already ordered.
  // This interface is called only by ObMacroBlockWriter::close. The merge proceeds as follows:
  // 1. Check again whether the current macro block has enough space; if not, return an error.
  // 2. Append the micro block index of the last macro block to the current micro block index;
  // 3. Append the micro block data of the last macro block to the buffer of the current macro block;
  // 4. Update the metadata in the current macro block header.
  int merge(const ObMacroBlock& macro_block);
  // Used together with merge: checks whether the current macro block can hold the data of the given macro block.
  bool can_merge(const ObMacroBlock& macro_block);
  // Resets the macro block so that the object can be reused.
  void reset();
  ...
};
```

ObMacroBlock implements only the basic write interfaces for a single macro block. Writing the multiple macro blocks of an SSTable sequentially is implemented by class ObMacroBlockWriter, described in the following code:

```cpp
// src/storage/blocksstable/ob_macro_block_writer.cpp
class ObMacroBlockWriter {
public:
  // Opens a macro block writer based on the table_id and partition_id in data_store_desc.
  int open(ObDataStoreDesc& data_store_desc, const ObMacroDataSeq& start_seq,
      const ObIArray<ObMacroBlockInfoPair>* lob_blocks = NULL, ObMacroBlockWriter* index_writer = NULL);
  // Appends a whole macro block. This is mainly used in two scenarios:
  // 1. During a merge, an unmodified macro block of the original SSTable is reused directly in the new SSTable;
  // 2. After a parallel merge, multiple macro blocks whose data does not overlap can be appended through this interface.
  int append_macro_block(const ObMacroBlockCtx& macro_block_ctx);
  // Appends a micro block. Unlike append_macro_block, this must consider whether the data overlaps:
  // 1. If the data does not overlap, the micro block is appended to the current macro block as a whole;
  // 2. If the data overlaps, a reader is built for the micro block and its rows are written to the current macro block one by one.
  int append_micro_block(const ObMicroBlock& micro_block);
  // Appends a row of data; calls ObMicroBlockWriter::append_row.
  int append_row(const storage::ObStoreRow& row, const bool virtual_append = false);
  // Closes the writer. Before closing, it tries to merge the last two macro blocks to save space,
  // then flushes the last macro block to disk and waits for the I/O to complete (wait_io_finish).
  int close(storage::ObStoreRow* root = NULL, char* root_buf = NULL);
};
```

On top of ObMacroBlockWriter, OceanBase adds another layer, class ObMacroBlockBuilder, which is used specifically for merging. This article does not go into its implementation; it will be described in detail in a later article on merging.
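A toy model that tracks only sizes can illustrate how ObMacroBlockWriter::close() interacts with ObMacroBlock::can_merge() and merge(). The types below are hypothetical. One assumption is worth calling out. Normal appends stop at a soft limit below the full capacity (compare the 10% reservation mentioned earlier), while the close-time merge may use the full capacity. In this toy, without such a difference, the merge could never succeed.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical macro block that only tracks the sizes of its micro blocks.
struct ToyMacroBlock {
  int64_t capacity;    // stands in for the usable size of a 2MB macro block
  int64_t soft_limit;  // appends stop here (assumed reservation)
  std::vector<int64_t> micro_sizes;

  int64_t used() const {
    int64_t sum = 0;
    for (int64_t s : micro_sizes) sum += s;
    return sum;
  }
  bool can_append(int64_t size) const { return used() + size <= soft_limit; }
  // Like can_merge(): does all of `other` fit into this block?
  bool can_merge(const ToyMacroBlock& other) const { return used() + other.used() <= capacity; }
  // Like merge(): append the other block's micro blocks after ours; key order is
  // preserved because `other` holds only larger keys.
  void merge(const ToyMacroBlock& other) {
    assert(can_merge(other));
    micro_sizes.insert(micro_sizes.end(), other.micro_sizes.begin(), other.micro_sizes.end());
  }
};

class ToyMacroBlockWriter {
 public:
  ToyMacroBlockWriter(int64_t capacity, int64_t soft_limit)
      : capacity_(capacity), soft_limit_(soft_limit), current_(new_block()) {}

  void append_micro_block(int64_t size) {
    assert(size <= soft_limit_);
    if (!current_.can_append(size)) {
      // Finished blocks stay in memory in this toy so that close() can merge into the last one.
      finished_.push_back(current_);
      current_ = new_block();
    }
    current_.micro_sizes.push_back(size);
  }

  // Like close(): try to fold the last, partly filled block into the previous one.
  std::vector<ToyMacroBlock> close() {
    if (!current_.micro_sizes.empty()) {
      if (!finished_.empty() && finished_.back().can_merge(current_)) {
        finished_.back().merge(current_);
      } else {
        finished_.push_back(current_);
      }
    }
    current_ = new_block();
    return finished_;
  }

 private:
  ToyMacroBlock new_block() const { return ToyMacroBlock{capacity_, soft_limit_, {}}; }

  int64_t capacity_;
  int64_t soft_limit_;
  ToyMacroBlock current_;
  std::vector<ToyMacroBlock> finished_;
};
```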

For user requests, the system generally does not read an entire macro block. Instead, it reads the macro block's index and then reads into memory only the micro block that matches the request's filter conditions. To follow the precise micro-block read path, see struct ObMicroBlockDataHandle and trace its callers and callees. Complete macro blocks are read in a variety of scenarios, including the scheduled bad-block check task shown below:

```cpp
// src/storage/blocksstable/ob_store_file_system.h
// Scheduled task for bad-block checking.
class ObFileSystemInspectBadBlockTask : public common::ObTimerTask {
public:
  // Task body required by the scheduled-task base class; calls inspect_bad_block.
  virtual void runTimerTask();
private:
  // Checks all valid macro blocks for bad blocks. The main process is as follows:
  // 1. Initialize a macro block iterator through ObPartitionService.
  // 2. Traverse all macro blocks with macro_iter,
  //    calling check_macro_block on each one.
  void inspect_bad_block();
  // After validating some parameters, checks the macro block data for corruption
  // by calling check_data_block below.
  int check_macro_block(const ObMacroBlockInfoPair& pair, const storage::ObTenantFileKey& file_key);
  // Reads the whole macro block from disk and
  // uses ObSSTableMacroBlockChecker::check_data_block to perform the actual checks.
  int check_data_block(const MacroBlockId& macro_id, const blocksstable::ObFullMacroBlockMeta& full_meta,
      const storage::ObTenantFileKey& file_key);
  bool has_inited();
private:
  // Each run of the task checks only part of the data; the following members record the break point.
  int64_t last_partition_idx_;
  int64_t last_sstable_idx_;
  int64_t last_macro_idx_;
  // Data-checking tool that verifies macro-block, micro-block, and column checksums.
  ObSSTableMacroBlockChecker macro_checker_;
};
```
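The break-point fields suggest a resumable scan. The sketch below is a hypothetical and much simplified version. Each run checks at most `budget` blocks, records where it stopped, and wraps around at the end. FNV-1a stands in for the real checksum, which is an assumption for illustration only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical block record: payload plus the checksum stored when it was written.
struct ToyBlock {
  std::string data;
  uint64_t stored_checksum;
};

// FNV-1a, used here only as a stand-in checksum.
uint64_t toy_checksum(const std::string& data) {
  uint64_t h = 14695981039346656037ULL;
  for (unsigned char c : data) {
    h ^= c;
    h *= 1099511628211ULL;
  }
  return h;
}

// Toy resumable inspection: each run checks at most `budget` blocks and remembers
// where it stopped, in the spirit of last_partition_idx_/last_sstable_idx_/last_macro_idx_.
class ToyBadBlockInspector {
 public:
  // Returns the indexes of blocks whose recomputed checksum does not match.
  std::vector<std::size_t> run_once(const std::vector<ToyBlock>& blocks, std::size_t budget) {
    assert(budget > 0);
    std::vector<std::size_t> bad;
    if (blocks.empty()) return bad;
    for (std::size_t n = 0; n < budget && n < blocks.size(); ++n) {
      const std::size_t idx = last_idx_ % blocks.size();  // wrap around at the end
      if (toy_checksum(blocks[idx].data) != blocks[idx].stored_checksum) bad.push_back(idx);
      last_idx_ = idx + 1;  // break point for the next run
    }
    return bad;
  }

  std::size_t last_idx() const { return last_idx_; }

 private:
  std::size_t last_idx_ = 0;
};
```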

In summary, complete macro blocks are read mainly by asynchronous background tasks, because reading a 2MB macro block costs far more than reading a 16KB micro block. User requests usually read only the specific micro blocks they need.

The baseline data in OceanBase is stored in a pre-allocated large file (ob_dir/store/sstable/block_file), most of which is divided into 2MB macro blocks. Metadata distinguishes the array of valid (in-use) macro blocks from the array of unused ones, and macro blocks are allocated and released based on these two arrays.

Please see ObStoreFile::alloc_block and ObStoreFile::free_block for more information. This part will be described in detail in an article entitled Macro Block GC Principles.
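Below is a toy version of the valid/unused bookkeeping. It is hypothetical and much simpler than ObStoreFile::alloc_block and ObStoreFile::free_block. It assumes the whole file is divided into 2MB slots, ignoring any area that does not hold macro blocks, and computes a block's byte offset as its index times 2MB.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <vector>

constexpr int64_t kMacroBlockSize = 2 * 1024 * 1024;

// Toy allocator over a pre-allocated file of block_count 2MB slots.
// used_ plays the role of the "valid" array and free_ the "unused" array.
class ToyBlockAllocator {
 public:
  explicit ToyBlockAllocator(int64_t block_count) : used_(block_count, false) {
    // Push in reverse so that low indexes are handed out first.
    for (int64_t i = block_count - 1; i >= 0; --i) free_.push_back(i);
  }

  std::optional<int64_t> alloc_block() {
    if (free_.empty()) return std::nullopt;  // the file is full
    const int64_t idx = free_.back();
    free_.pop_back();
    used_[idx] = true;
    return idx;
  }

  void free_block(int64_t idx) {
    assert(idx >= 0 && idx < static_cast<int64_t>(used_.size()) && used_[idx]);
    used_[idx] = false;
    free_.push_back(idx);
  }

  // Byte offset of a macro block inside the block file.
  static int64_t offset_of(int64_t idx) { return idx * kMacroBlockSize; }

 private:
  std::vector<bool> used_;
  std::vector<int64_t> free_;
};
```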

After reading this article, you should have a deeper understanding of the original design intention of OceanBase. Generally speaking, micro blocks are for lower latency, macro blocks are for greater throughput, and both are applied to different scenarios.

In the future, we will continue to interpret the relevant codes of the OceanBase storage layer and learn and exchange storage technology with everyone.

An Interpretation of the Source Code of OceanBase (8): Submission and Playback of Transaction Logs

An Interpretation of the Source Code of OceanBase (10): Table One and Its Service Addressing
