By Zhennan

The tenth article of this series (Table One and Its Service Addressing) introduced the creation of the system tenant's Table 1 and explained the service addressing process related to Table 1. This article analyzes the location cache module.

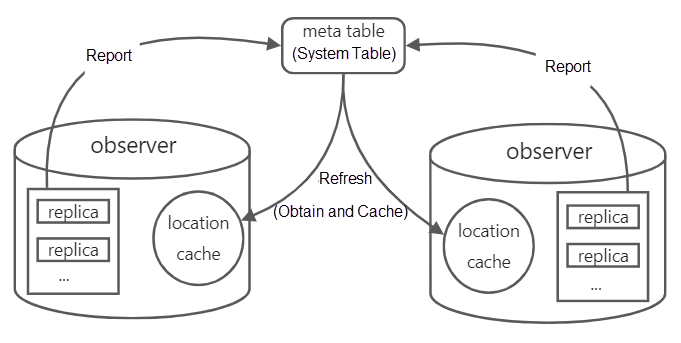

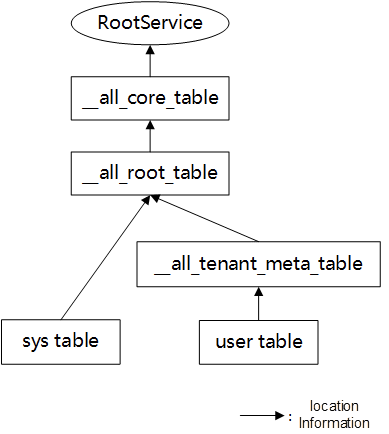

Location cache is a basic module on the observer. It obtains and caches replica location information for multiple other modules, such as SQL, transaction, and CLOG. To obtain this information, location cache relies on the meta tables at all levels, the underlying partition_service, and log_service. The cache is updated passively: it is refreshed only when a calling module requests it. All modules on the same observer share a single location cache.

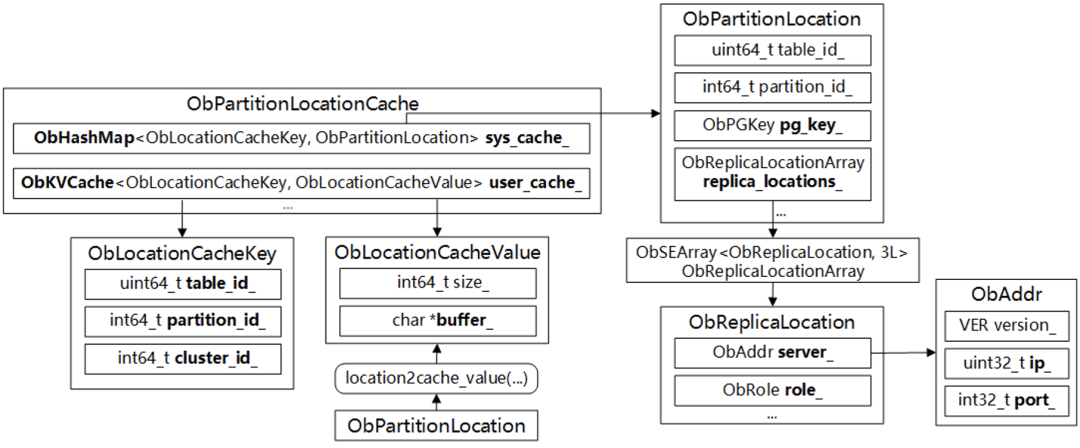

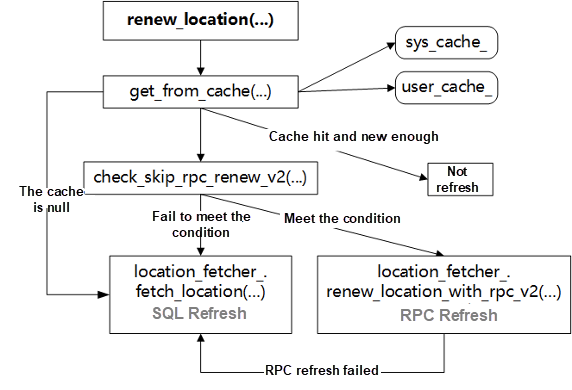

In an OceanBase cluster, the location of each replica is recorded in the meta table. Sending an SQL query to the meta table every time a replica is accessed would be far too inefficient. Therefore, each observer caches the location information of entity tables. This cache is managed by the location cache module and implemented in ObPartitionLocationCache. Its main contents are as follows:

sys_cache_ caches the location information of system tables, and user_cache_ caches the location information of user tables.

The two are kept in separate data structures so that a large number of user tables cannot crowd out the cached locations of system tables.
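To see why the split matters, here is a minimal sketch that models each cache as a fixed-capacity LRU map from partition to leader address. Everything in it is an assumption for illustration: `PartitionKey`, `LruLocationCache`, `SplitLocationCache`, and the simplified rule that table IDs below a hypothetical `kMinUserTableId` are system tables. The real sys_cache_ and user_cache_ use OceanBase's own data structures.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <list>
#include <optional>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical cache key: one partition of one table.
struct PartitionKey {
  uint64_t table_id;
  int64_t partition_id;
  bool operator==(const PartitionKey &o) const {
    return table_id == o.table_id && partition_id == o.partition_id;
  }
};

struct PartitionKeyHash {
  std::size_t operator()(const PartitionKey &k) const {
    return std::hash<uint64_t>()(k.table_id) ^ (std::hash<int64_t>()(k.partition_id) << 1);
  }
};

// Fixed-capacity LRU map from partition to leader address.
class LruLocationCache {
 public:
  explicit LruLocationCache(std::size_t capacity) : capacity_(capacity) {}

  void put(const PartitionKey &key, const std::string &leader) {
    auto it = index_.find(key);
    if (it != index_.end()) {
      it->second->second = leader;
      order_.splice(order_.begin(), order_, it->second);  // mark as most recently used
      return;
    }
    order_.emplace_front(key, leader);
    index_[key] = order_.begin();
    if (order_.size() > capacity_) {  // evict the least recently used entry
      index_.erase(order_.back().first);
      order_.pop_back();
    }
  }

  std::optional<std::string> get(const PartitionKey &key) {
    auto it = index_.find(key);
    if (it == index_.end()) return std::nullopt;
    order_.splice(order_.begin(), order_, it->second);  // mark as most recently used
    return it->second->second;
  }

 private:
  using Entry = std::pair<PartitionKey, std::string>;
  std::size_t capacity_;
  std::list<Entry> order_;  // front = most recently used
  std::unordered_map<PartitionKey, std::list<Entry>::iterator, PartitionKeyHash> index_;
};

// Hypothetical, simplified rule: table IDs below this value belong to system tables.
constexpr uint64_t kMinUserTableId = 50000;

class SplitLocationCache {
 public:
  SplitLocationCache(std::size_t sys_capacity, std::size_t user_capacity)
      : sys_cache_(sys_capacity), user_cache_(user_capacity) {}

  void put(const PartitionKey &key, const std::string &leader) { pick(key).put(key, leader); }
  std::optional<std::string> get(const PartitionKey &key) { return pick(key).get(key); }

 private:
  LruLocationCache &pick(const PartitionKey &key) {
    return key.table_id < kMinUserTableId ? sys_cache_ : user_cache_;
  }
  LruLocationCache sys_cache_;   // system tables only
  LruLocationCache user_cache_;  // user tables only; its evictions never touch sys_cache_
};
```

Flooding the user side with many partitions evicts only older user entries, while system-table locations stay cached.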

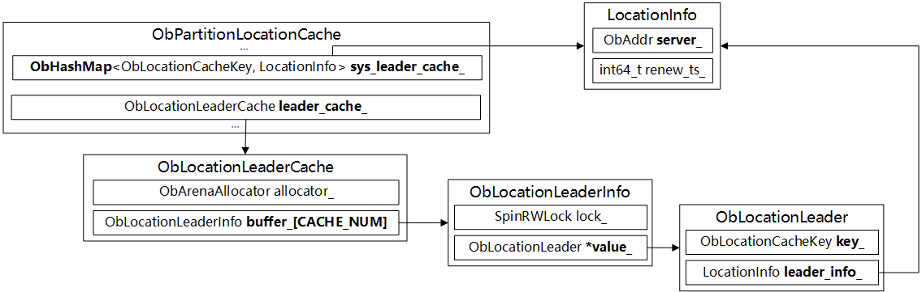

This cache was introduced so that, in certain limited scenarios, the leader of a system tenant table can be obtained without relying on internal tables. First, it avoids a deadlock that occurs when a distributed transaction cannot make progress because it cannot obtain the leader of a system table. Second, it slightly speeds up obtaining the leader of system tenant tables.

leader_cache_ was added to optimize the lookup path of the nonblock_get_leader() method. Because fetching a location from the KVCache structure is relatively costly, leader_cache_ caches leader location information directly.
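A minimal sketch of this lookup order, assuming the leader cache is a plain hash map from partition to leader address and that the slower KVCache-based lookup can be modeled as a callback. `LeaderCachedLookup`, `FullLookupFn`, `PartitionId`, and the string address type are hypothetical and not OceanBase names.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <optional>
#include <string>
#include <unordered_map>
#include <utility>

using PartitionId = uint64_t;  // hypothetical flattened partition key
using Addr = std::string;      // hypothetical "ip:port" address

// Slow path: a lookup in the full location cache, modeled as a callback.
using FullLookupFn = std::function<std::optional<Addr>(PartitionId)>;

class LeaderCachedLookup {
 public:
  explicit LeaderCachedLookup(FullLookupFn full) : full_lookup_(std::move(full)) {}

  // Checks the leader cache first; on a miss, falls back to the full lookup
  // and remembers the leader it found for later calls.
  std::optional<Addr> get_leader(PartitionId key) {
    auto it = leader_cache_.find(key);
    if (it != leader_cache_.end()) {
      ++fast_hits_;
      return it->second;
    }
    std::optional<Addr> leader = full_lookup_(key);
    if (leader) leader_cache_[key] = *leader;
    return leader;
  }

  int fast_hits() const { return fast_hits_; }

 private:
  FullLookupFn full_lookup_;
  std::unordered_map<PartitionId, Addr> leader_cache_;
  int fast_hits_ = 0;
};
```

After the first miss, repeated leader lookups for the same partition are served from the small map and never reach the slower path.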

The location cache module on each observer caches, locally, the location information of the entity tables that observer has accessed. Location cache uses a passive refresh mechanism: when another internal module finds that a cached location is invalid, that module must call the refresh interface to refresh the cache.
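A minimal sketch of the passive pattern: the cache never refreshes on its own, and a caller that fails with a cached location asks for a refresh and retries. It borrows the idea behind the expire_renew_time parameter of ObPartitionLocationCache::get(), assuming an entry is re-fetched when its renew time is not later than the given value (so INT64_MAX always forces a refresh). That comparison rule, `Location`, `PassiveLocationCache`, `access_with_passive_refresh()`, and the fetch callback standing in for a meta-table query are all hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>

struct Location {
  std::string leader;
  int64_t renew_time = 0;  // when this entry was last fetched
};

class PassiveLocationCache {
 public:
  // Stand-in for querying the meta table; hypothetical.
  using FetchFn = std::function<std::string(uint64_t)>;
  explicit PassiveLocationCache(FetchFn fetch) : fetch_(std::move(fetch)) {}

  // Returns the cached entry unless it is missing or renew_time <= expire_renew_time,
  // in which case it is re-fetched.
  Location get(uint64_t key, int64_t expire_renew_time) {
    auto it = cache_.find(key);
    if (it != cache_.end() && it->second.renew_time > expire_renew_time) return it->second;
    Location fresh{fetch_(key), ++clock_};
    cache_[key] = fresh;
    return fresh;
  }

 private:
  FetchFn fetch_;
  std::unordered_map<uint64_t, Location> cache_;
  int64_t clock_ = 0;  // logical clock standing in for wall-clock renew times
};

// Caller side: use the cached leader; if the access fails, request a refresh of
// exactly the entry that was used (its renew_time) and retry once.
template <typename AccessFn>
bool access_with_passive_refresh(PassiveLocationCache &cache, uint64_t key, AccessFn access) {
  Location loc = cache.get(key, /*expire_renew_time=*/0);
  if (access(loc.leader)) return true;
  loc = cache.get(key, loc.renew_time);  // the stale entry is re-fetched
  return access(loc.leader);
}
```

Passing the renew time of the entry that actually failed, rather than always forcing a refresh, means that if another caller has already refreshed the entry, the newer copy is reused instead of querying again.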

Corresponding to the cached content, the location cache module provides interfaces to obtain the location information of the partition identified by a pkey (pgkey) and the location of its leader. These interfaces are mainly used by modules such as SQL, Proxy, storage, transaction, and clog (the last two focus on leader information). The interfaces are listed below:

// Synchronous interface:

int ObPartitionLocationCache::get(const uint64_t table_id,

const int64_t partition_id,

ObPartitionLocation &location,

const int64_t expire_renew_time, // If this parameter is set to INT64_MAX, it indicates a forced refresh.

bool &is_cache_hit,

const bool auto_update /*= true*/) // The get function has the refresh function.

int ObPartitionLocationCache::get_strong_leader(const common::ObPartitionKey &partition,

common::ObAddr &leader,

const bool force_renew) // The leader is essentially obtained through the get function, which has refresh capability.

// Asynchronous interfaces:

int ObPartitionLocationCache::nonblock_get(const uint64_t table_id,

const int64_t partition_id,

ObPartitionLocation &location,

const int64_t cluster_id) // Query from the location cache in nonblock mode.

int ObPartitionLocationCache::nonblock_get_strong_leader(const ObPartitionKey &partition, ObAddr &leader) // Check the leader cache first, if not, go to nonblock_get.

int ObPartitionLocationCache::nonblock_renew(const ObPartitionKey &partition,

const int64_t expire_renew_time,

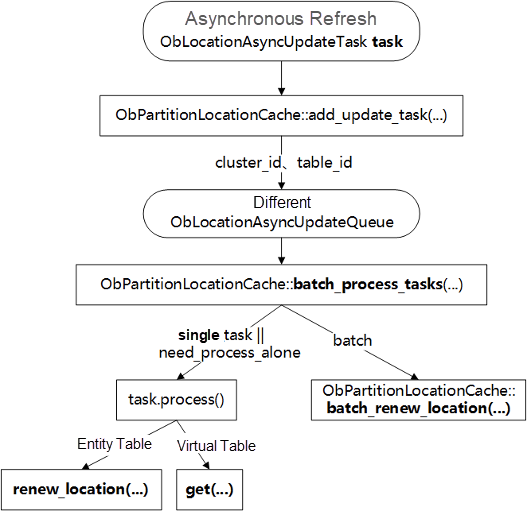

const int64_t specific_cluster_id) // With the above two functions, the location cache is refreshed if the access fails. Implemented by ObLocationAsyncUpdateTask.Nonblock_renew() is implemented in the form of a task queue:

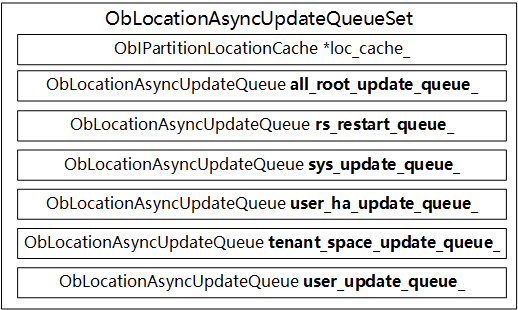

Refresh tasks are distributed across multiple queues with different priorities to speed up recovery in abnormal scenarios:

• pall_root_update_queue_; // __all_core_table, __all_root_table, __all_tenant_gts, __all_gts

• prs_restart_queue_; // system tables related to RS restart

• psys_update_queue_; // other system tables in the sys tenant

• puser_ha_update_queue_; // __all_dummy, __all_tenant_meta_table

• ptenant_space_update_queue_; // system tables in tenant space

• puser_update_queue_; // user tables
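A minimal sketch of multi-queue scheduling, assuming a worker always drains the highest-priority non-empty queue first and that a partition already waiting in any queue is not enqueued twice. The enum values mirror the queues listed above, but `QueueKind`, `RenewScheduler`, and the deduplication rule are assumptions, not the actual logic of ObLocationAsyncUpdateTask.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <deque>
#include <optional>
#include <set>

// Ordered from highest to lowest priority, mirroring the queues listed above.
enum QueueKind : int {
  kAllRootUpdate = 0,  // __all_core_table, __all_root_table, __all_tenant_gts, __all_gts
  kRsRestart,          // system tables related to RS restart
  kSysUpdate,          // other system tables in the sys tenant
  kUserHaUpdate,       // __all_dummy, __all_tenant_meta_table
  kTenantSpaceUpdate,  // system tables in tenant space
  kUserUpdate,         // user tables
  kQueueCount
};

class RenewScheduler {
 public:
  // Enqueues a refresh task unless the same partition is already waiting.
  bool push(QueueKind kind, uint64_t partition) {
    if (!pending_.insert(partition).second) return false;  // duplicate, skip
    queues_[kind].push_back(partition);
    return true;
  }

  // Pops from the highest-priority non-empty queue.
  std::optional<uint64_t> pop() {
    for (auto &q : queues_) {
      if (!q.empty()) {
        uint64_t partition = q.front();
        q.pop_front();
        pending_.erase(partition);
        return partition;
      }
    }
    return std::nullopt;
  }

 private:
  std::array<std::deque<uint64_t>, kQueueCount> queues_;
  std::set<uint64_t> pending_;  // partitions currently waiting in some queue
};
```

With this ordering, a burst of user-table refreshes cannot delay the refresh of core tables such as __all_core_table, which other refreshes depend on.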

Main refresh processes:

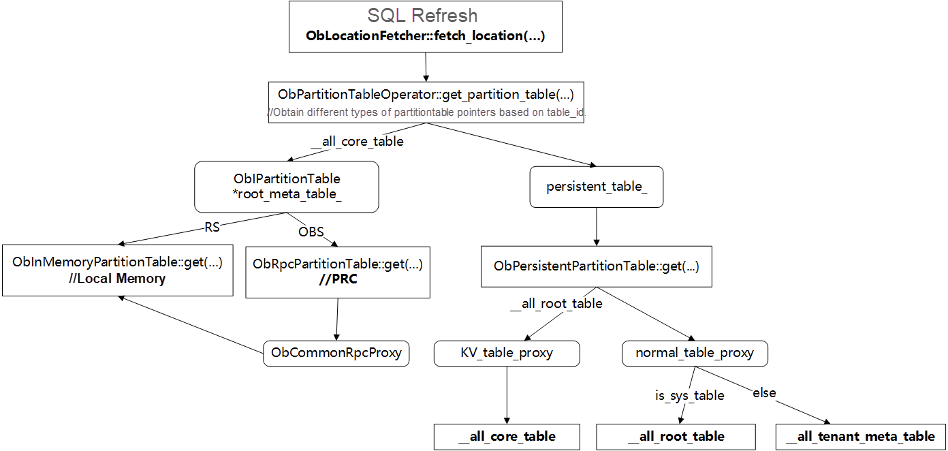

SQL refresh queries the location information in the meta table with SQL statements and uses the result to refresh the local cache. It is the counterpart of the reporting process, which writes replica locations into the meta table.

SQL refresh depends on the meta table being readable and on the reporting process working properly, and its results can lag behind. For example, if the leader has changed while the new location is still being reported, SQL refresh returns the old leader. Refreshing again resolves this.

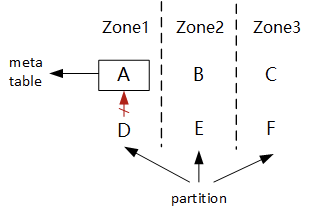

Because SQL refresh depends on the meta tables, the SQL module, and the underlying reporting, it cannot refresh the location cache when the network fails. In the scenario shown in the figure, server A (which hosts the meta table) is disconnected from servers D, E, and F, and D issues a refresh request. SQL refresh is impossible here, but D can still determine the location from the replicas of the same partition on E and F. RPC refresh therefore reduces the dependence on the meta table and the cost of SQL queries, which speeds up cache refresh.

The central idea is to use the old cache to find all replicas of the partition in the local region. The location cache then sends RPCs to the partition service on each of those servers to obtain member_info (including the leader, member_list, lower_list, and so on), and compares member_info with the old location information to detect changes of the leader and of replica types (for example, F->L).

An RPC refresh succeeds only if both member_list and the non-Paxos members are unchanged.
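A minimal sketch of this success check, assuming member_info is reduced to a leader, a map of Paxos members to replica types, and a set of non-Paxos (read-only) members, and that the old location is a list of replicas tagged with a type and a leader flag. `ReplicaType`, `Replica`, `MemberInfo`, and `try_rpc_refresh()` are hypothetical; the real member_info carries more fields (such as lower_list), and this sketch checks only the two conditions stated above.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <vector>

enum class ReplicaType { kFull, kLogOnly, kReadOnly };  // F, L, R

struct Replica {
  std::string server;
  ReplicaType type;
  bool is_leader = false;
};

// Hypothetical reduced member_info returned by the partition service over RPC.
struct MemberInfo {
  std::string leader;
  std::map<std::string, ReplicaType> paxos_members;  // member_list with replica types
  std::set<std::string> non_paxos_members;           // read-only replicas
};

// Returns false (refresh failed) if member_list or the non-Paxos members changed;
// otherwise updates the leader flag and replica types in place.
bool try_rpc_refresh(std::vector<Replica> &location, const MemberInfo &info) {
  std::set<std::string> old_paxos, old_non_paxos, new_paxos;
  for (const Replica &r : location) {
    (r.type == ReplicaType::kReadOnly ? old_non_paxos : old_paxos).insert(r.server);
  }
  for (const auto &member : info.paxos_members) new_paxos.insert(member.first);
  if (old_paxos != new_paxos || old_non_paxos != info.non_paxos_members) return false;

  for (Replica &r : location) {
    r.is_leader = (r.server == info.leader);  // detects a leader change
    auto it = info.paxos_members.find(r.server);
    if (it != info.paxos_members.end()) r.type = it->second;  // detects e.g. F -> L
  }
  return true;
}
```

A leader move or an F -> L type change updates the cached location in place, while any change to the membership itself makes the RPC refresh report failure.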

Advantages: RPC refresh costs very little, so changes of the leader and of the member list are detected more quickly. This helps particularly in leaderless elections and leader re-election, which is why RPC refresh is tried first.

Disadvantages: to stay efficient, RPC refresh guarantees accuracy only for the leader, the Paxos member list, and the read-only replicas cascaded directly under Paxos members in the local region. It does not detect changes to read-only replicas cascaded at the second level or deeper, nor changes to read-only replicas cascaded under other regions.
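Because RPC refresh is cheap but can fail or miss some read-only replicas, a natural arrangement is to try it first and fall back to SQL refresh. The sketch below assumes exactly that fallback; `RefreshPath`, `refresh_location()`, and both callbacks are hypothetical stand-ins, not OceanBase functions.

```cpp
#include <cassert>
#include <functional>

enum class RefreshPath { kRpc, kSql, kFailed };

// try_rpc: cheap refresh via the partition service; returns false if member_list
//          or the non-Paxos members changed, or if the RPC failed.
// try_sql: full refresh from the meta table; returns false if the meta table is unreadable.
RefreshPath refresh_location(const std::function<bool()> &try_rpc,
                             const std::function<bool()> &try_sql) {
  if (try_rpc()) return RefreshPath::kRpc;  // preferred: low cost, fast leader detection
  if (try_sql()) return RefreshPath::kSql;  // complete, but depends on the meta table and reporting
  return RefreshPath::kFailed;
}
```

The order encodes the trade-off described above: the cheaper path runs first, and the slower but more complete path runs only when the cheap one cannot vouch for the result.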


A virtual table has no storage entity; its contents are generated according to specific rules at query time. To keep the query logic of the SQL layer uniform, the location cache module supplies special location information for queries on virtual tables.

From the perspective of distribution, virtual tables can be divided into the following three categories:

```cpp
// Key functions:

int ObSqlPartitionLocationCache::virtual_get(const uint64_t table_id, const int64_t partition_id, share::ObPartitionLocation &location, const int64_t expire_renew_time, bool &is_cache_hit)

// LOC_DIST_MODE_ONLY_LOCAL: only the local server's own address is needed.
int ObSqlPartitionLocationCache::build_local_location(uint64_t table_id, ObPartitionLocation &location)
|-replica_location.server_ = self_addr_;

// LOC_DIST_MODE_DISTRIBUTED: essentially the server_list of the cluster.
int ObSqlPartitionLocationCache::build_distribute_location(uint64_t table_id, const int64_t partition_id, ObPartitionLocation &location)
|-int ObTaskExecutorCtx::get_addr_by_virtual_partition_id(int64_t partition_id, ObAddr &addr)

// LOC_DIST_MODE_ONLY_RS: essentially the location of RS.
int ObSqlPartitionLocationCache::get(const uint64_t table_id, ObIArray<ObPartitionLocation> &locations, const int64_t expire_renew_time, bool &is_cache_hit, const bool auto_update /*=true*/)
|-int ObPartitionLocationCache::get(const uint64_t table_id, ObIArray<ObPartitionLocation> &locations, const int64_t expire_renew_time, bool &is_cache_hit, const bool auto_update /*=true*/)
| |-int ObPartitionLocationCache::vtable_get(const uint64_t table_id, ObIArray<ObPartitionLocation> &locations, const int64_t expire_renew_time, bool &is_cache_hit)
| | |-int renew_vtable_location(const uint64_t table_id, common::ObSArray<ObPartitionLocation> &locations);
```

In short, the location of a virtual table depends on its distribution mode: for LOC_DIST_MODE_ONLY_LOCAL it is the local server itself, for LOC_DIST_MODE_DISTRIBUTED it comes from the cluster's server list, and for LOC_DIST_MODE_ONLY_RS it is the location of RS.
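A minimal sketch of the dispatch behind virtual_get(), assuming the distribution mode of each table is known and that a location is a single address string. `LocDistMode` mirrors the three modes named in the comments above; `VirtualLocationBuilder`, `self_addr`, `server_list`, `rs_addr`, and the rule that a virtual partition ID indexes the server list are hypothetical simplifications of what build_distribute_location() and get_addr_by_virtual_partition_id() do.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>
#include <vector>

enum class LocDistMode { kOnlyLocal, kDistributed, kOnlyRs };

struct VirtualLocationBuilder {
  std::string self_addr;                 // this observer
  std::vector<std::string> server_list;  // all servers in the cluster
  std::string rs_addr;                   // current RootService

  // Returns the server that should answer a query on the given virtual partition.
  std::string build(LocDistMode mode, int64_t partition_id) const {
    switch (mode) {
      case LocDistMode::kOnlyLocal:
        return self_addr;  // only the local address is needed
      case LocDistMode::kDistributed:
        // Assumption: each virtual partition maps to one server in the list.
        if (partition_id < 0 || partition_id >= static_cast<int64_t>(server_list.size()))
          throw std::out_of_range("no server for this virtual partition");
        return server_list[static_cast<std::size_t>(partition_id)];
      case LocDistMode::kOnlyRs:
        return rs_addr;  // served by RS
    }
    throw std::logic_error("unknown distribution mode");
  }
};
```

Because every mode still yields an ordinary address, the SQL layer can route a virtual-table query the same way it routes a query on a real partition.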

This is the end of the source code interpretation. Thank you.
