By Zhennan

The ninth article of this series (Macro Block Storage Format of Storage Layer Code Interpretation) introduced the storage format of macro blocks. This article explains how Table 1 of the system tenant is created and how the service addressing process that depends on Table 1 works.

OceanBase has its own approach to metadata management. One of its design goals is that all information, including configuration items, is stored in tables. System table schemas are not supposed to be hard-coded, and everything is self-contained without external dependencies. An obvious benefit is that this greatly simplifies large-scale cluster management for O&M personnel. However, it also creates circular dependencies in the metadata that must be resolved. The metadata of user tables is stored in system tables, the metadata of system tables is stored in core tables, and the metadata of core tables is stored in Table 1 of the system tenant. Table 1, the first table, is called THE ONE.

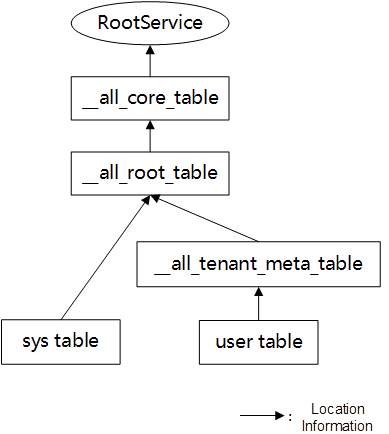
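To make the chain concrete, here is a minimal Python sketch of how metadata resolution could walk up the levels until it reaches the hard-coded Table 1. The level names and the METADATA_HOME mapping are hypothetical simplifications for illustration, not OceanBase code.

```python
from typing import List

# Hypothetical model: each kind of table keeps its metadata one level up.
# Only Table 1 (__all_core_table) is hard-coded, which breaks the cycle.
METADATA_HOME = {
    "user_table": "system_table",
    "system_table": "core_table",
    "core_table": "__all_core_table",
}

HARD_CODED = "__all_core_table"  # Table 1, "THE ONE"


def metadata_chain(kind: str) -> List[str]:
    """Return the tables consulted to resolve metadata for `kind`."""
    chain = [kind]
    while chain[-1] != HARD_CODED:
        parent = METADATA_HOME.get(chain[-1])
        if parent is None:
            raise KeyError(f"no metadata home for {chain[-1]!r}")
        chain.append(parent)
    return chain


print(" -> ".join(metadata_chain("user_table")))
# user_table -> system_table -> core_table -> __all_core_table
```

Because the walk always ends at a table whose schema is compiled in, resolution terminates even though every other level is described by data.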

RootService (RS) is the master control service. It is responsible for resource scheduling, resource allocation, data distribution information management, and schema management for the entire OceanBase cluster. RS is not an independent process. It is a set of services started on the server that hosts the __all_core_table leader. To study the RS code, you first need to understand __all_core_table.

What Is __all_core_table?

From the most basic point of view, __all_core_table is a key-value table whose table ID is 1. It is the first table created when the cluster starts, and it stores the information required to start RS. Both the startup of RS and the services RS provides depend on __all_core_table. This makes it the foundation of all RS services and the starting point of RS.

__all_core_table

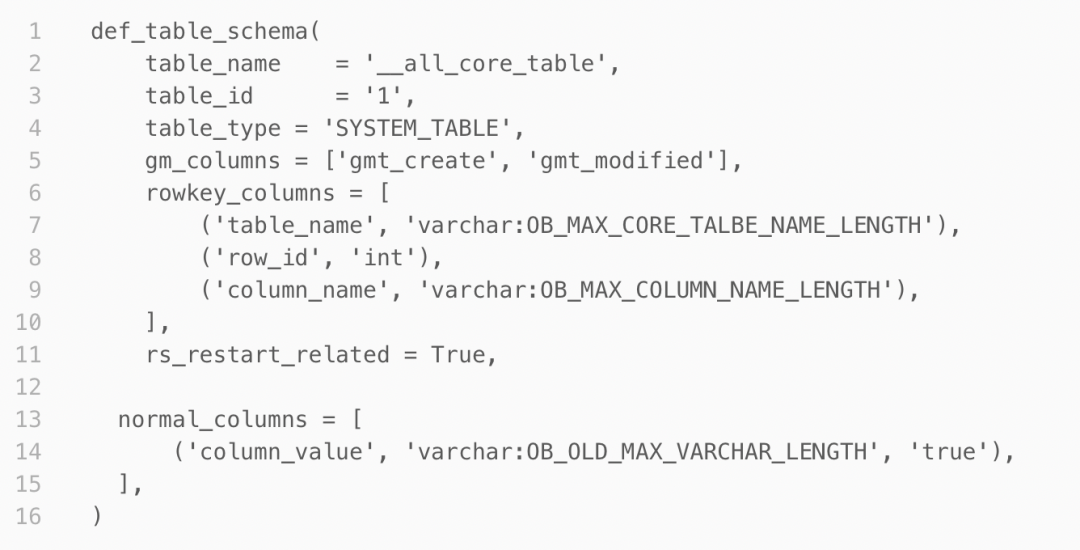

__all_core_table has a key-value structure. Its schema is hard-coded in the function int ObInnerTableSchema::all_core_table_schema(ObTableSchema &table_schema), and the specific schema definition can be viewed in ob_inner_table_schema_def.py. (gm_columns refers to hidden timestamp columns that are generated automatically and can be ignored.)

The row key of __all_core_table consists of three columns: table_name, row_id, and column_name. Each key maps to one column_value. In effect, a normal two-dimensional relational table is flattened into a one-dimensional key-value table for storage.
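The following minimal Python sketch illustrates this flattening and its inverse. It assumes that row_id is simply the row's position and uses made-up column names. The actual row encoding is defined in the OceanBase source.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Key = Tuple[str, int, str]  # (table_name, row_id, column_name)


def flatten(table_name: str, rows: List[dict]) -> Dict[Key, object]:
    """Split a 2-D table into one key-value entry per cell.

    Assumption: row_id is the row's index in `rows`.
    """
    kv: Dict[Key, object] = {}
    for row_id, row in enumerate(rows):
        for column_name, value in row.items():
            kv[(table_name, row_id, column_name)] = value
    return kv


def unflatten(kv: Dict[Key, object], table_name: str) -> List[dict]:
    """Rebuild the rows of one logical table from its key-value entries."""
    rows: Dict[int, dict] = defaultdict(dict)
    for (tname, row_id, column_name), value in kv.items():
        if tname == table_name:
            rows[row_id][column_name] = value
    return [rows[i] for i in sorted(rows)]


demo_rows = [{"col_a": 1, "col_b": "x"}, {"col_a": 2, "col_b": "y"}]
kv = flatten("demo_table", demo_rows)
print(kv[("demo_table", 1, "col_b")])  # y
```

Because several logical tables share one physical key space, unflatten filters by table_name before regrouping cells by row_id.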

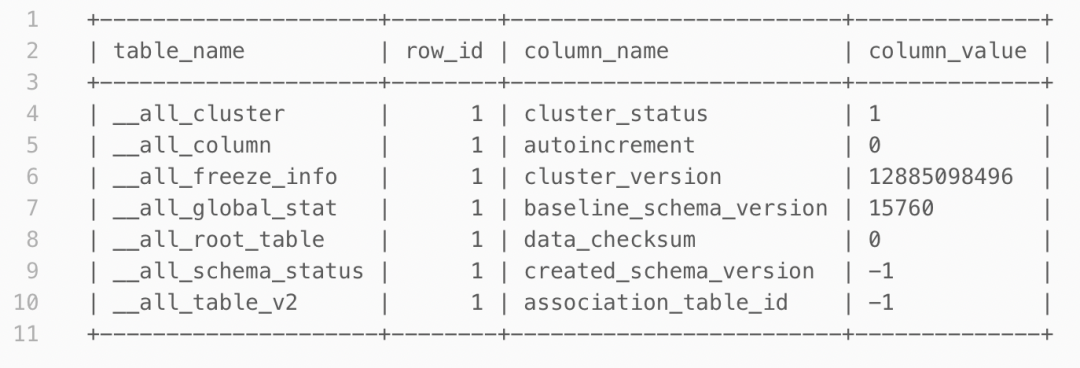

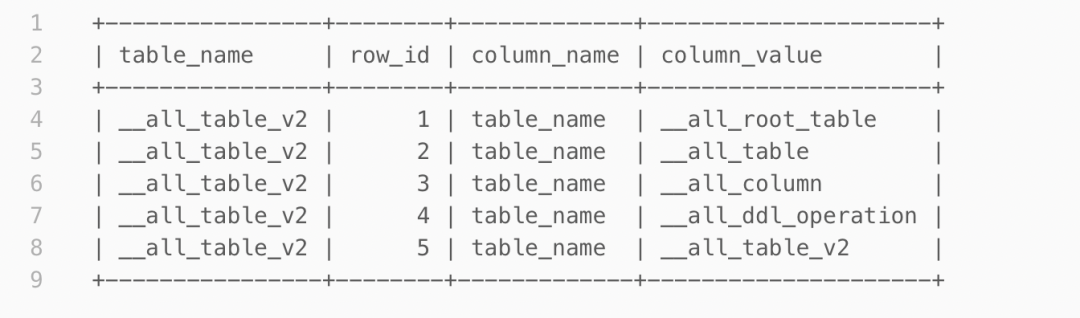

__all_core_table

(The figures above show the result of GROUP BY table_name and the rows where table_name='__all_table_v2'.)
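The two views in the figures correspond to simple SQL queries. As a rough illustration, the sketch below builds a toy table named core_kv in an in-memory SQLite database and runs comparable queries against it. The core_kv table stands in for __all_core_table, and its column names and values are made-up placeholders.

```python
import sqlite3

# Toy stand-in for __all_core_table: same key-value layout, placeholder data.
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE core_kv (
           table_name   TEXT,
           row_id       INTEGER,
           column_name  TEXT,
           column_value TEXT,
           PRIMARY KEY (table_name, row_id, column_name))"""
)
conn.executemany(
    "INSERT INTO core_kv VALUES (?, ?, ?, ?)",
    [
        ("__all_table_v2", 0, "col_a", "a0"),
        ("__all_table_v2", 0, "col_b", "b0"),
        ("__all_table_v2", 1, "col_a", "a1"),
        ("__all_table_v2", 1, "col_b", "b1"),
        ("__all_root_table", 0, "col_a", "r0"),
        ("__all_root_table", 0, "col_b", "s0"),
    ],
)

# View 1: how many key-value entries each logical table contributes.
grouped = conn.execute(
    "SELECT table_name, COUNT(*) FROM core_kv GROUP BY table_name ORDER BY table_name"
).fetchall()

# View 2: all entries that belong to one logical table.
v2_rows = conn.execute(
    "SELECT row_id, column_name, column_value FROM core_kv "
    "WHERE table_name = '__all_table_v2' ORDER BY row_id, column_name"
).fetchall()

print(grouped)  # [('__all_root_table', 2), ('__all_table_v2', 4)]
```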

__all_core_table holds the information required to start RS. The important core tables include:

- __all_root_table: the core table of the location cache module. It records the locations of system tables and of __all_tenant_meta_table (a tenant-level core table that records the locations of user tables). The location of __all_root_table itself is recorded in __all_core_table, and its schema is recorded in __all_table_v2 and __all_column.
- __all_table_v2 (__all_table is obsolete), __all_column, and __all_ddl_operation: the core tables of the schema module. The schema information of all tables is built up hierarchically from these three tables. (The schemas of these three tables are themselves recorded in __all_column and __all_table_v2.)

With Table 1 covered, the next topic is the Location Discovery Service.

In a standalone database, all tables are stored locally. In a distributed database such as OceanBase, however, an entity table may have multiple replicas scattered across many servers in the cluster. When a user queries an entity table, how does the database find out which of these servers hold it?

OceanBase uses an internal set of system tables to discover the location of entity tables. This set of system tables is generally referred to as meta tables.

Three internal tables serve as meta tables for location: __all_core_table, __all_root_table, and __all_tenant_meta_table. Together they form a hierarchy that records the location of every entity table in the OB cluster, at the granularity of partition replicas.

From the bottom up:

- The locations of user tables are recorded in the tenant's __all_tenant_meta_table.
- The locations of __all_tenant_meta_table and of the system tables (for both user tenants and the system tenant) are recorded in __all_root_table.
- The location of __all_root_table is recorded in __all_core_table.
- The location of __all_core_table is stored in the memory of the machine where RS runs.

The hierarchical design is easy to maintain and can hold location information for a large number of entity tables. When the cluster starts, and whenever a partition replica of an entity table changes, the replica's location information is actively reported to the meta table. By maintaining and querying this hierarchy, the database can determine the location of every entity table.
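The top-down resolution implied by this hierarchy can be sketched as a recursive lookup. To read a user table's location, a server must first know where __all_tenant_meta_table lives. That in turn requires __all_root_table, and so on up to RS memory. In this Python sketch, user_t1 and sys_t1 are hypothetical tables, and all addresses are placeholders.

```python
from typing import Dict, List, Optional

# Which meta table records each table's location (None = kept in RS memory).
RECORDED_IN: Dict[str, Optional[str]] = {
    "user_t1": "__all_tenant_meta_table",
    "sys_t1": "__all_root_table",
    "__all_tenant_meta_table": "__all_root_table",
    "__all_root_table": "__all_core_table",
    "__all_core_table": None,
}

# Toy meta-table contents: table -> server address (placeholder values).
META: Dict[str, Dict[str, str]] = {
    "RS memory": {"__all_core_table": "10.0.0.1:2882"},
    "__all_core_table": {"__all_root_table": "10.0.0.2:2882"},
    "__all_root_table": {
        "__all_tenant_meta_table": "10.0.0.3:2882",
        "sys_t1": "10.0.0.4:2882",
    },
    "__all_tenant_meta_table": {"user_t1": "10.0.0.5:2882"},
}


def locate(table: str, trace: List[str]) -> str:
    """Resolve the server holding `table`, recording each meta-table read."""
    meta = RECORDED_IN[table]
    if meta is None:
        trace.append("RS memory")
        return META["RS memory"][table]
    # Resolve the meta table's own location first (the address is unused
    # in this toy; a real server would send its query there).
    locate(meta, trace)
    trace.append(meta)
    return META[meta][table]


trace: List[str] = []
print(locate("user_t1", trace))  # 10.0.0.5:2882
print(trace)
# ['RS memory', '__all_core_table', '__all_root_table', '__all_tenant_meta_table']
```

A system table needs one fewer hop than a user table, because its location lives directly in __all_root_table.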

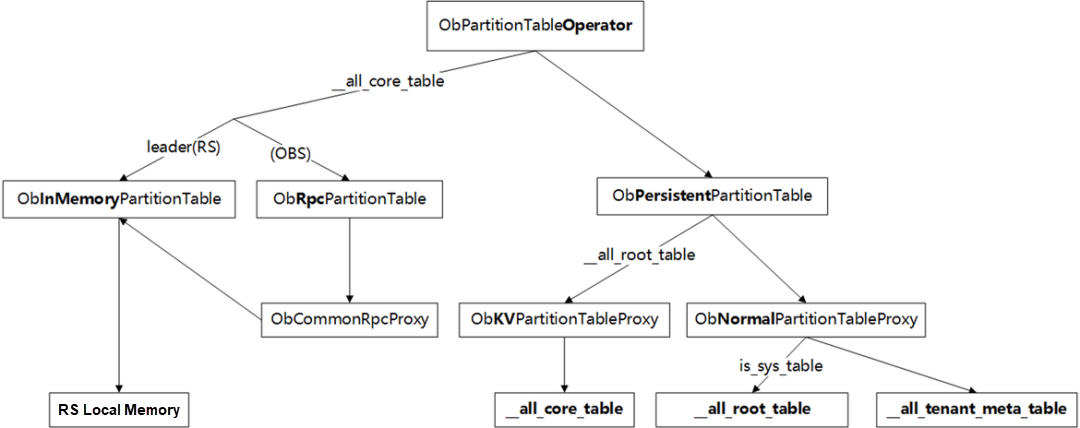

As shown in the preceding figure, updates and queries of the meta tables go through ObPartitionTableOperator. Based on the table_id of the entity table, ObPartitionTableOperator selects a different ObPartitionTable implementation. That implementation then hands the request to ObPartitionTableProxy, which performs the actual update or query.
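A simplified Python sketch of this dispatch follows. It assumes three backend kinds and a single routing rule drawn from this article. Table 1 is served from memory on the RS server and over RPC on other servers, and every other table is served from persistent meta tables. The class names, backend kinds, and table IDs other than 1 are hypothetical and do not reflect the actual OceanBase class hierarchy.

```python
from typing import Dict, List

CORE_TABLE_ID = 1  # __all_core_table is Table 1


class Proxy:
    """Stand-in for a partition table proxy that does the real update/query.

    A dict replaces actual storage (or, for the RPC backend, a remote call).
    """

    def __init__(self) -> None:
        self.rows: Dict[int, List[str]] = {}

    def update(self, table_id: int, replica: str) -> None:
        self.rows.setdefault(table_id, []).append(replica)

    def query(self, table_id: int) -> List[str]:
        return list(self.rows.get(table_id, []))


class PartitionTable:
    """One kind of meta-table backend, delegating work to its proxy."""

    def __init__(self, kind: str) -> None:
        self.kind = kind
        self.proxy = Proxy()


class PartitionTableOperator:
    """Routes each request to a backend chosen by table_id."""

    def __init__(self, is_rs: bool) -> None:
        self.is_rs = is_rs
        self.backends = {k: PartitionTable(k) for k in ("in_memory", "rpc", "persistent")}

    def pick(self, table_id: int) -> PartitionTable:
        if table_id == CORE_TABLE_ID:
            # Table 1 lives in RS memory; other servers must ask RS via RPC.
            return self.backends["in_memory" if self.is_rs else "rpc"]
        # Locations of all other tables are kept in persistent meta tables.
        return self.backends["persistent"]

    def update(self, table_id: int, replica: str) -> None:
        self.pick(table_id).proxy.update(table_id, replica)

    def get(self, table_id: int) -> List[str]:
        return self.pick(table_id).proxy.query(table_id)


rs_op = PartitionTableOperator(is_rs=True)
rs_op.update(50001, "replica@10.0.0.5")
print(rs_op.pick(CORE_TABLE_ID).kind, rs_op.get(50001))  # in_memory ['replica@10.0.0.5']
```

Keeping the routing decision in one operator means callers never need to know which level of the hierarchy a table belongs to.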

As Table 1, __all_core_table is key to both bootstrapping and service discovery in an OB cluster. Its handling is relatively complex, so it is described separately below.

How Is the __all_core_table Location Determined?

Table 1, __all_core_table, is also the starting point of the meta table hierarchy. So when the cluster starts, how is the location of __all_core_table determined?

__all_core_table leader

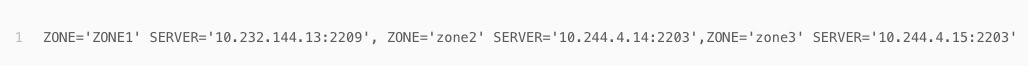

In the bootstrap process of RS, you must specify an rs_list, which includes one server address (IP:port) for each zone. For example:

During pre-bootstrap, partitions of __all_core_table are created on the servers in rs_list, and an appropriate server is selected as the __all_core_table leader. The process then waits for the election to complete by polling the replicas' to_leader_time in a loop, and obtains the leader. Finally, it sends an RPC to that server to execute bootstrap and start RS.
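The wait-for-election step can be sketched as a polling loop. In this Python sketch, rs_list is modeled as (zone, address) pairs. The callbacks get_to_leader_time and send_bootstrap_rpc are hypothetical, and a positive to_leader_time is assumed to mean the replica has taken leadership. The sketch does not model how the preferred leader is chosen, and its timeout values are arbitrary.

```python
import time
from typing import Callable, List, Tuple


def check_one_server_per_zone(rs_list: List[Tuple[str, str]]) -> None:
    zones = [zone for zone, _ in rs_list]
    if not zones or len(zones) != len(set(zones)):
        raise ValueError("rs_list needs exactly one server address per zone")


def bootstrap(
    rs_list: List[Tuple[str, str]],
    get_to_leader_time: Callable[[str], int],
    send_bootstrap_rpc: Callable[[str], str],
    timeout_s: float = 10.0,  # arbitrary illustrative default
    poll_s: float = 0.1,
) -> str:
    """Wait until a __all_core_table replica becomes leader, then bootstrap it."""
    check_one_server_per_zone(rs_list)
    deadline = time.monotonic() + timeout_s
    while True:
        # Assumption: a positive to_leader_time means this replica is leader.
        leader = next((addr for _, addr in rs_list if get_to_leader_time(addr) > 0), None)
        if leader is not None:
            return send_bootstrap_rpc(leader)  # ask the leader to start RS
        if time.monotonic() >= deadline:
            raise TimeoutError("__all_core_table leader election did not finish")
        time.sleep(poll_s)


rs_list = [("zone1", "10.0.0.1:2882"), ("zone2", "10.0.0.2:2882"), ("zone3", "10.0.0.3:2882")]
to_leader_time = {"10.0.0.1:2882": 0, "10.0.0.2:2882": 1700000000, "10.0.0.3:2882": 0}
print(bootstrap(rs_list, to_leader_time.get, lambda a: f"bootstrap RPC sent to {a}"))
# bootstrap RPC sent to 10.0.0.2:2882
```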

How Is the __all_core_table Leader Found?

Each observer has an RsMgr that maintains the location of the master RS in memory. The replica information of __all_core_table is stored in RS memory, and other servers obtain partition_info from master_rs_ through RPC. If this fails, an additional multi-level addressing process is triggered:

(Core function: int ObRpcPartitionTable::fetch_root_partition_v2(ObPartitionInfo &partition_info))

1. Obtain rootservice_list from the local configuration items through ObInnerConfigRootAddr and look for master_rs in it (rs_list is kept up to date by heartbeat refreshes).
2. Look for master_rs in all_server_list in config.

In addition to the methods above, RS uses a heartbeat mechanism to check the status of each observer. If an observer sends no heartbeat for more than ten seconds, RS considers that server's lease expired and actively broadcasts its own location (broadcast_rs_list).

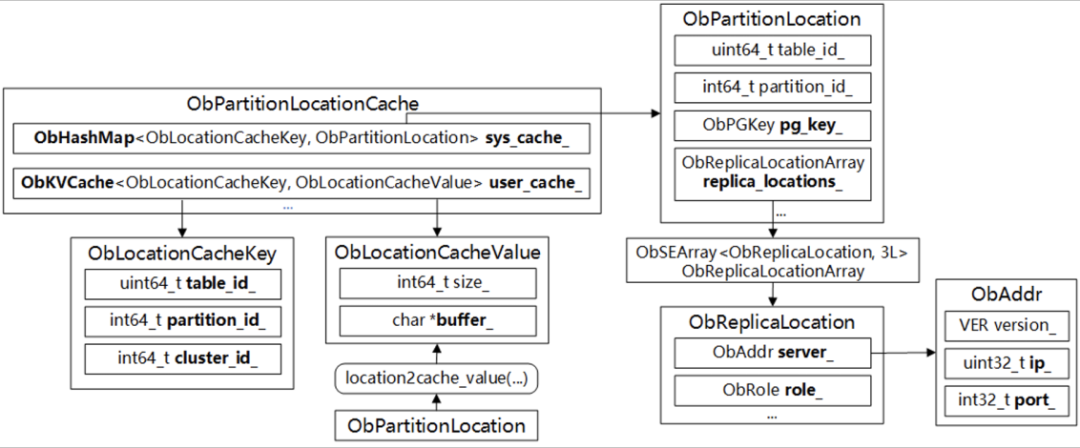
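The fallback order and the lease check can be sketched as follows. This is a simplified model, not fetch_root_partition_v2 itself. The rpc_fetch argument is a hypothetical callback that returns partition info when the address is the master RS and None otherwise. The ten-second lease check is reduced to a timestamp comparison.

```python
from typing import Callable, Dict, List, Optional, Tuple

LEASE_TIMEOUT_S = 10.0  # "more than ten seconds" without a heartbeat


def find_master_rs(
    master_rs: Optional[str],
    rootservice_list: List[str],
    all_server_list: List[str],
    rpc_fetch: Callable[[str], Optional[dict]],
) -> Tuple[str, dict]:
    """Try the cached master RS, then rootservice_list, then all_server_list."""
    candidates = ([master_rs] if master_rs else []) + rootservice_list + all_server_list
    tried = set()
    for addr in candidates:
        if addr in tried:
            continue  # the same server may appear in several lists
        tried.add(addr)
        info = rpc_fetch(addr)
        if info is not None:
            return addr, info
    raise LookupError("no reachable master RS")


def expired_leases(last_heartbeat: Dict[str, float], now: float) -> List[str]:
    """Servers whose last heartbeat is more than LEASE_TIMEOUT_S seconds old."""
    return sorted(s for s, t in last_heartbeat.items() if now - t > LEASE_TIMEOUT_S)


true_master = "10.0.0.9"
addr, info = find_master_rs(
    "10.0.0.1", ["10.0.0.2"], ["10.0.0.2", true_master],
    lambda a: {"leader": a} if a == true_master else None,
)
print(addr)  # 10.0.0.9
print(expired_leases({"s1": 0.0, "s2": 5.0}, now=12.0))  # ['s1']
```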

Looking up the meta tables every time the same table is accessed would be too inefficient. Therefore, each ObServer caches the location information of entity tables. The cache is managed by the location cache module and implemented in ObPartitionLocationCache. Its main cache content is shown in the following figure:

The location cache uses a passive refresh mechanism. Each observer caches the location information of the entity tables it has accessed and serves repeated accesses directly from the cache. When another internal module finds that a cache entry is invalid, it calls an interface such as ObPartitionLocationCache::nonblock_renew to refresh the entry. In this way, every server can obtain the location of any entity table in the cluster.
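Passive refresh can be sketched in a few lines of Python. The cache fills an entry on the first access and changes it only when a caller explicitly asks for a renewal. LocationCache, renew, and the fetch callback are hypothetical names. Unlike nonblock_renew, this sketch's renewal is synchronous.

```python
from typing import Callable, Dict


class LocationCache:
    """Passive-refresh location cache: fill on first access, renew on demand."""

    def __init__(self, fetch_from_meta_table: Callable[[int], str]) -> None:
        self._fetch = fetch_from_meta_table
        self._entries: Dict[int, str] = {}
        self.meta_table_reads = 0  # how often the meta table was queried

    def get(self, table_id: int) -> str:
        if table_id not in self._entries:  # miss: query the meta table once
            self._entries[table_id] = self._load(table_id)
        return self._entries[table_id]  # hit: no meta-table access

    def renew(self, table_id: int) -> None:
        """Called by a module that found the cached entry invalid (synchronous here)."""
        self._entries[table_id] = self._load(table_id)

    def _load(self, table_id: int) -> str:
        self.meta_table_reads += 1
        return self._fetch(table_id)


meta_table = {50001: "10.0.0.5"}
cache = LocationCache(meta_table.__getitem__)
cache.get(50001)
cache.get(50001)                 # served from the cache
meta_table[50001] = "10.0.0.6"   # replica moved; the cached entry is now stale
print(cache.get(50001))          # 10.0.0.5 (still cached)
cache.renew(50001)
print(cache.get(50001), cache.meta_table_reads)  # 10.0.0.6 2
```

The trade-off is that a stale entry survives until some module notices the failure and renews it. In exchange, the meta tables see no traffic for tables whose locations have not changed.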

The next article in this series will explain the design and code of ObTableScan. Stay tuned!

