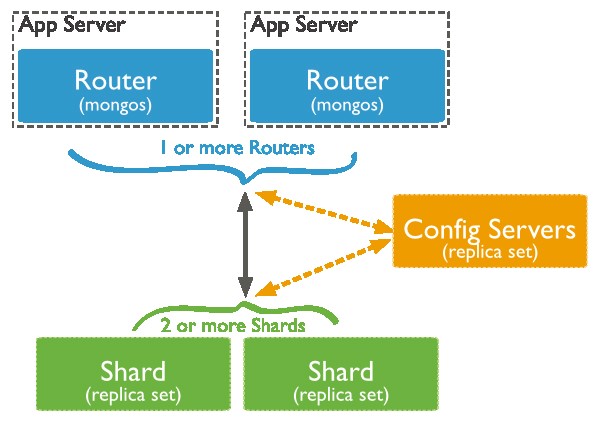

Sharding is a method of data distribution across multiple machines. MongoDB uses sharding to support deployments with very large data sets and high throughput operations. This article comprehensively discusses various methods of MongoDB sharding in relation to chunk splitting and migration.

Note: Content in this article is based on MongoDB 3.2.

Let us understand a primary shard in detail.

Once sharding is enabled, MongoDB distributes data across one or more shards as chunks (64 MB by default) based on the shard key.

Each database has a primary shard allocated at database creation. Let us now discuss the procedures that a shard follows to migrate and write data.

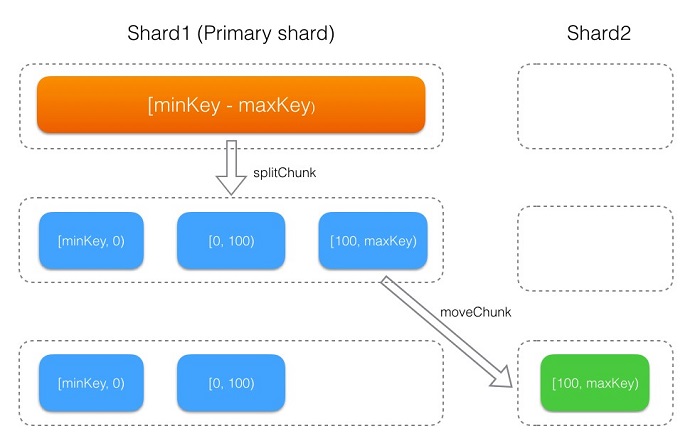

● A collection with sharding enabled (that is, one for which the shardCollection command has been invoked) first generates a single [minKey, maxKey] chunk stored on the primary shard. The chunk then splits and migrates continually as data is written. The accompanying figure depicts this process.

● For a collection in the database that does not have sharding enabled, all data is stored on the primary shard.

Mongos has a sharding.autoSplit configuration item that controls automatic chunk splitting. It is generally enabled by default. We strongly recommend that you do not disable autoSplit without professional guidance. A safer approach is to use "pre-sharding" to split chunks in advance.

MongoDB splits chunks automatically only when Mongos writes data. When the amount of data written to a chunk exceeds a certain threshold, a chunk split is triggered. The splitting process follows these rules:

```cpp
int ChunkManager::getCurrentDesiredChunkSize() const {
    // split faster in early chunks helps spread out an initial load better
    const int minChunkSize = 1 << 20;  // 1 MBytes

    int splitThreshold = Chunk::MaxChunkSize;  // default 64MB

    int nc = numChunks();

    if (nc <= 1) {
        return 1024;
    } else if (nc < 3) {
        return minChunkSize / 2;
    } else if (nc < 10) {
        splitThreshold = max(splitThreshold / 4, minChunkSize);
    } else if (nc < 20) {
        splitThreshold = max(splitThreshold / 2, minChunkSize);
    }

    return splitThreshold;
}
```

```cpp
bool Chunk::splitIfShould(OperationContext* txn, long dataWritten) const {
    dassert(ShouldAutoSplit);
    LastError::Disabled d(&LastError::get(cc()));

    try {
        _dataWritten += dataWritten;
        int splitThreshold = getManager()->getCurrentDesiredChunkSize();
        if (_minIsInf() || _maxIsInf()) {
            splitThreshold = (int)((double)splitThreshold * .9);
        }

        if (_dataWritten < splitThreshold / ChunkManager::SplitHeuristics::splitTestFactor)
            return false;

        if (!getManager()->_splitHeuristics._splitTickets.tryAcquire()) {
            LOG(1) << "won't auto split because not enough tickets: " << getManager()->getns();
            return false;
        }
        ......
}
```

The chunkSize is 64 MB by default, and the split threshold is as follows:

| Number of chunks in a collection | Split threshold value |
|---|---|
| 1 | 1024 B |
| 2 | 0.5 MB |
| [3, 10) | 16 MB |
| [10, 20) | 32 MB |
| [20, max) | 64 MB |

The migration threshold also depends on the number of chunks in a collection:

```cpp
int threshold = 8;
if (balancedLastTime || distribution.totalChunks() < 20)
    threshold = 2;
else if (distribution.totalChunks() < 80)
    threshold = 4;
```

| Number of chunks in a collection | Migration threshold value |
|---|---|
| [1, 20) | 2 |
| [20, 80) | 4 |
| [80, max) | 8 |
