By Yang Yi (Xiweng), Nacos PMC

This article is based on a speech delivered at the Spring Cloud Alibaba Meetup in Hangzhou.

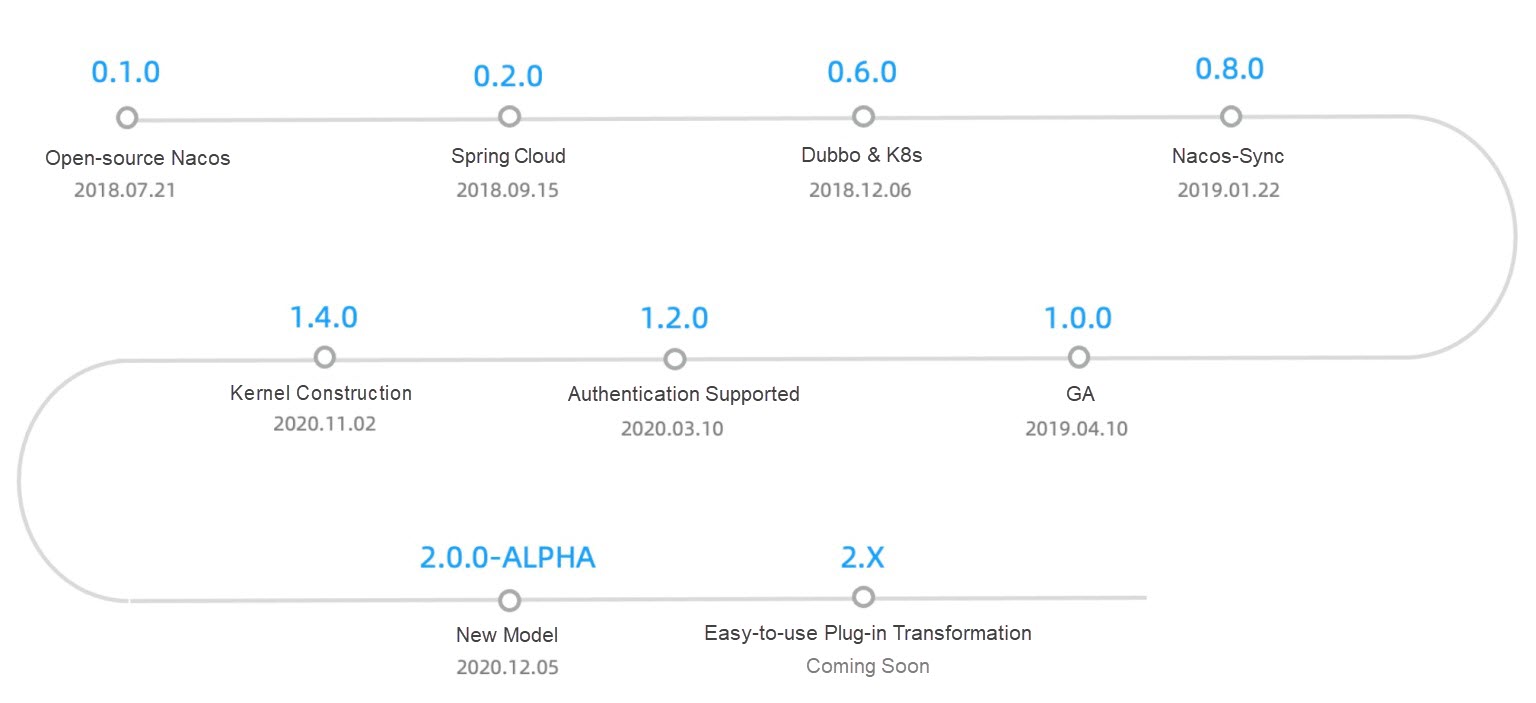

Nacos originated from Alibaba's Colorful Stone project (a distributed system architecture practice) in 2008, which completed the splitting of microservices and the construction of business mid-ends. In 2018, with the rise of cloud computing and under the strong influence of the open-source software industry, we open-sourced Nacos to share Alibaba's 10 years of experience in service discovery and configuration management. By doing so, we aim to promote the development of the microservice industry and accelerate the digital transformation of enterprises.

Currently, Nacos supports mainstream microservice development languages, service frameworks, and configuration management frameworks. It supports Dubbo and Spring Cloud Alibaba (SCA) and also connects to some cloud-native components, such as CoreDNS and Sentinel.

In terms of client languages, Nacos supports mainstream languages, such as Java, Go, and Python. C# and C++ clients were also officially released recently. We would like to thank all the community contributors for their support.

In the two years since Nacos was open-sourced, a total of 34 versions have been released, including several important milestone versions. Nacos is still very young and has plenty of room for improvement. Contributors from all backgrounds are welcome to join us.

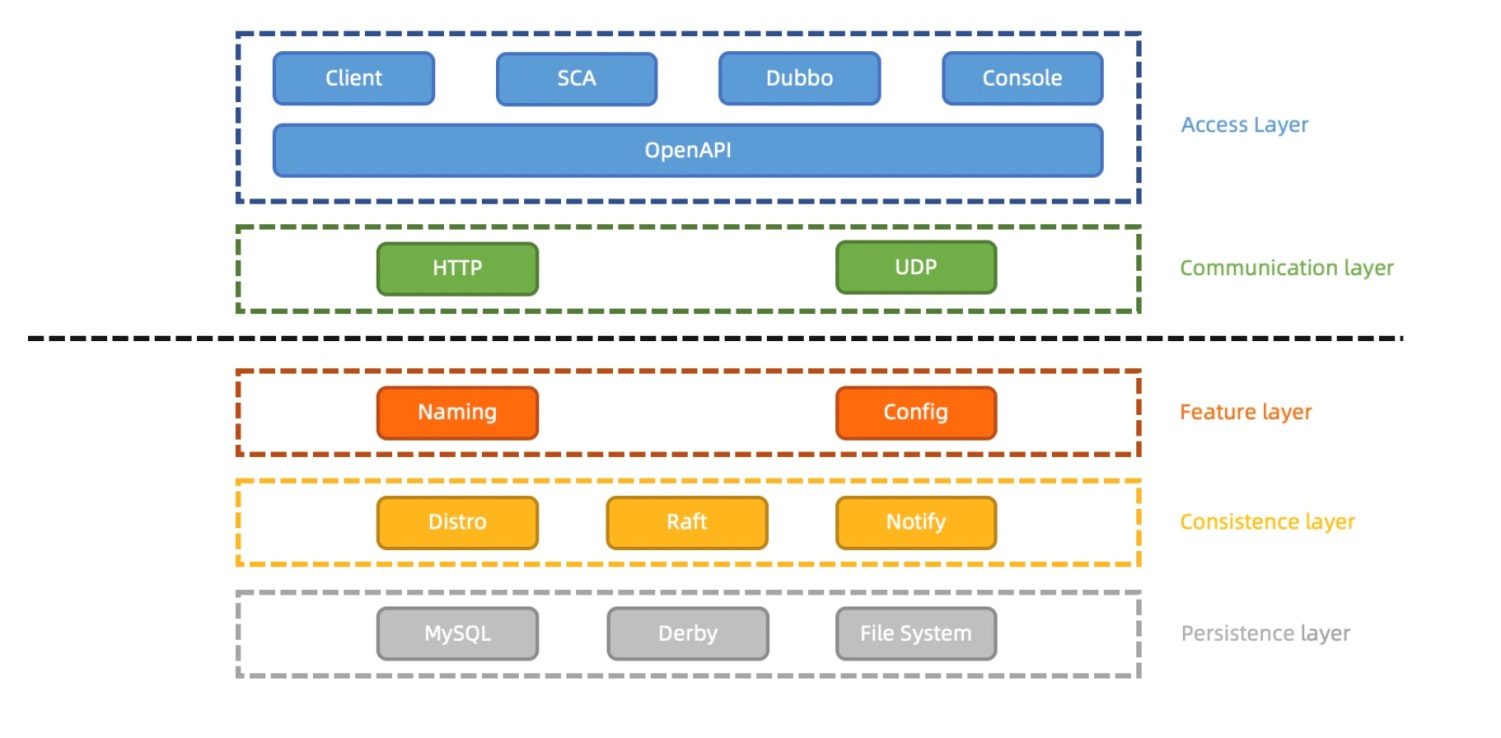

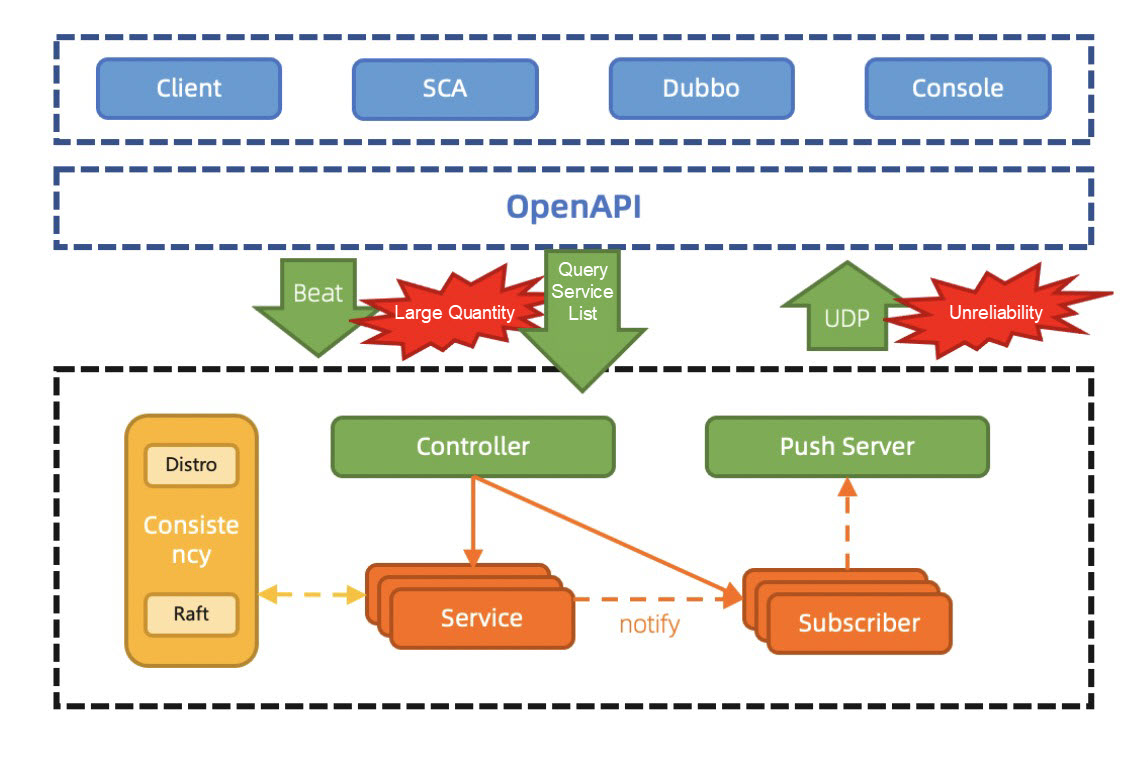

Now, let's take a look at the Nacos 1.X architecture and analyze some of its important problems. First, here is a brief diagram of the architecture.

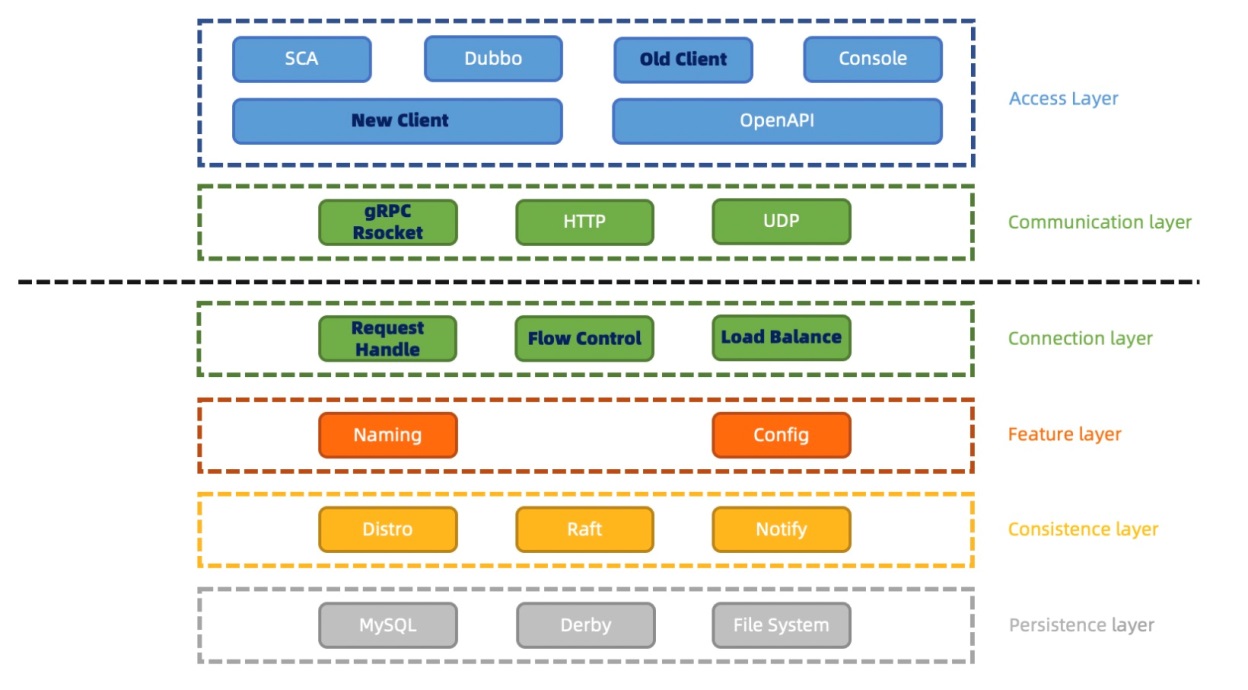

Nacos 1.X can be roughly divided into five layers: access, communication, functionality, synchronization, and persistence:

1. The access layer is the most direct interface for user interaction. It is mainly composed of the Nacos client, the frameworks that depend on the client (Dubbo and SCA), and the console operated by users. The client and console perform service and configuration operations and initiate all communication requests through the HTTP-based OpenAPI.

2. The communication layer is based on HTTP short connection requests. Some push features use UDP for communication.

3. The functionality layer currently provides service discovery and configuration management. This is the business layer that manages services and configurations.

4. The synchronization layer uses Distro in AP mode and Raft in CP mode for data synchronization. There is also a simple notification mechanism called Notify. Each of them works differently.

5. At the persistence layer, Nacos uses MySQL, Derby, and the local file system to persist data. Configuration, user, and permission information is stored in the MySQL or Derby database. Persistent service information and the metadata of services and instances are stored in the local file system.

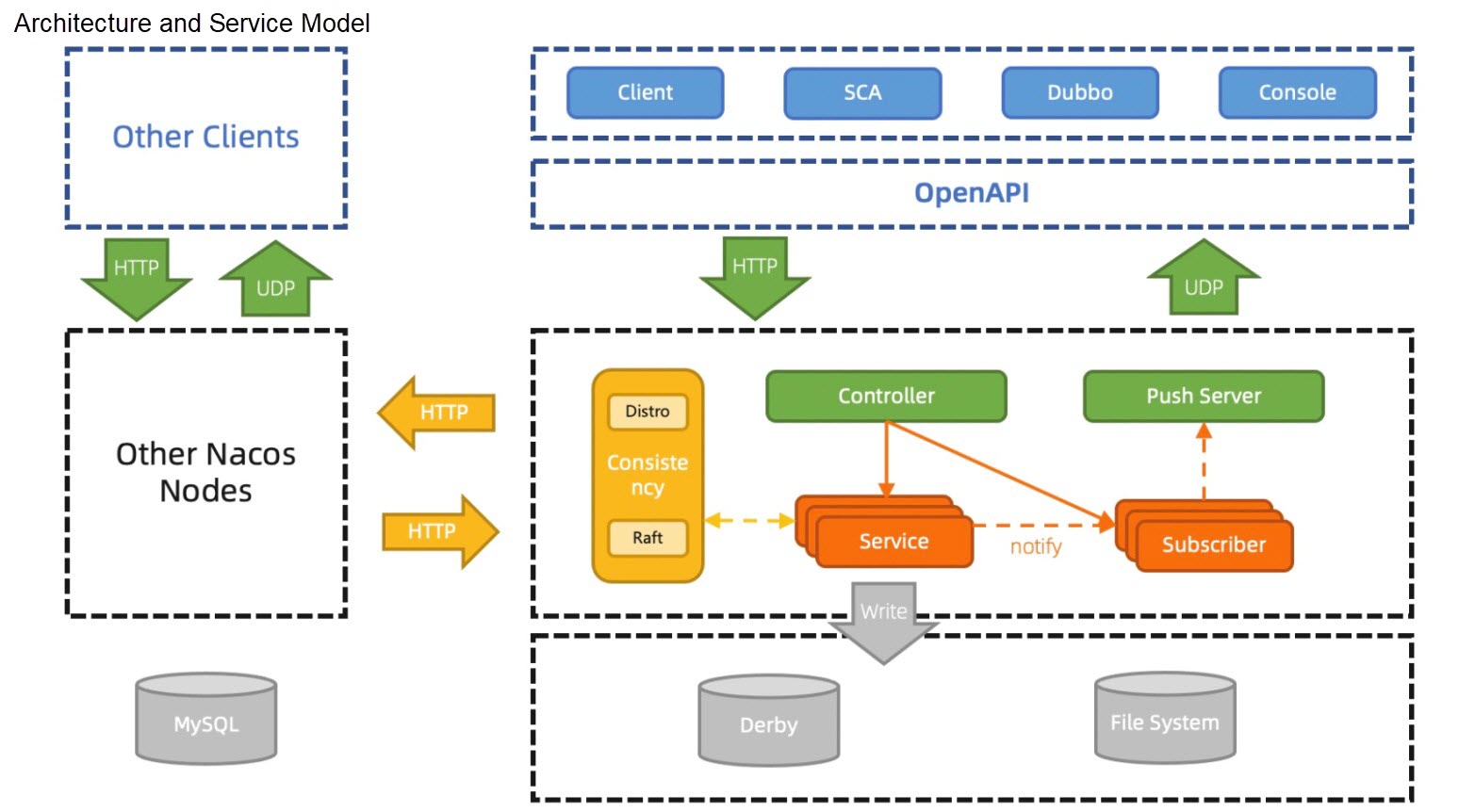

Let's take a closer look at the Nacos 1.X architecture through a service discovery process. The following describes the Nacos service discovery model under this architecture.

To register a service, the Nacos client sends an HTTP registration request through OpenAPI. The request includes service information and instance information. This step is usually completed by microservice frameworks such as SCA and Dubbo.

After receiving the request, the server reads and verifies the data in the Controller, for example, whether the IP address is valid and whether the service name is correct. If a Service is registered for the first time, Nacos creates a Service object on the server and stores the newly registered instance in it. If the Nacos server already has this Service object, the new instance is stored directly in the existing object. A Service object is uniquely identified by the combination of namespace, group, and service name.
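
The registration step can be illustrated with a minimal sketch. It is not the Nacos implementation: the class names `ServiceKey`, `Service`, and `Registry`, the validation rules, and the sample namespace and group values are all assumptions made for illustration. It only shows a Service being created on first registration and reused afterwards, keyed by namespace, group, and service name.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ServiceKey:
    """Hypothetical identity of a Service: namespace + group + service name."""
    namespace: str
    group: str
    name: str


@dataclass
class Service:
    key: ServiceKey
    # Instances keyed by "ip:port" so re-registering the same address overwrites.
    instances: dict = field(default_factory=dict)


class Registry:
    def __init__(self):
        self.services = {}  # ServiceKey -> Service

    def register(self, namespace, group, name, ip, port):
        # Basic validation, standing in for the checks done in the Controller.
        if not name:
            raise ValueError("service name must not be empty")
        if not (0 < port < 65536):
            raise ValueError("port out of range")
        key = ServiceKey(namespace, group, name)
        # Create the Service on first registration, reuse it afterwards.
        service = self.services.setdefault(key, Service(key))
        service.instances[f"{ip}:{port}"] = {"ip": ip, "port": port}
        return service


registry = Registry()
registry.register("public", "DEFAULT_GROUP", "orders", "10.0.0.1", 8080)
svc = registry.register("public", "DEFAULT_GROUP", "orders", "10.0.0.2", 8080)
other = registry.register("public", "OTHER_GROUP", "orders", "10.0.0.3", 8080)
```

In this sketch, the two registrations in the same group share one Service object, while the same service name in a different group produces a separate one.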

When the instance is saved to the Service, two events are triggered at the same time. One event is for data synchronization. Depending on whether the Service is temporary, the Nacos server uses the Distro or Raft protocol to notify other Nacos nodes of the service change. The other event notifies the subscribers that subscribed to the Service through this Nacos node. Based on the subscriber information, the server uses UDP to push the latest service list to the subscriber clients. At this point, the service registration process is complete.

All information about services that are defined as persistent is written to the file system through the Raft protocol.

Finally, when other Nacos nodes apply Service changes received through synchronization, they also trigger events to notify subscribers. Therefore, subscribers that subscribed to the Service through other Nacos nodes also receive the push.
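
The two events described above can be sketched as follows. This is an illustrative model only: `EventBus`, `choose_protocol`, the event name `service_changed`, and the subscriber addresses are hypothetical, and the "push" is just recorded in a list instead of being sent over UDP.

```python
from collections import defaultdict


class EventBus:
    """Tiny synchronous publish/subscribe helper (illustrative only)."""

    def __init__(self):
        self.handlers = defaultdict(list)

    def on(self, event_type, handler):
        self.handlers[event_type].append(handler)

    def emit(self, event_type, payload):
        for handler in self.handlers[event_type]:
            handler(payload)


def choose_protocol(ephemeral):
    # Temporary (ephemeral) services are synchronized with Distro (AP);
    # persistent services are synchronized with Raft (CP).
    return "distro" if ephemeral else "raft"


bus = EventBus()
sync_log = []   # (protocol, service) pairs handed to the synchronization layer
push_log = []   # (subscriber, instances) pairs "pushed" to subscriber clients
subscribers = {"orders": ["10.0.1.5:50000", "10.0.1.6:50000"]}

# Event 1: synchronize the change to other nodes.
bus.on("service_changed", lambda e: sync_log.append(
    (choose_protocol(e["ephemeral"]), e["service"])))
# Event 2: push the latest service list to subscribers of this node.
bus.on("service_changed", lambda e: push_log.extend(
    (sub, list(e["instances"])) for sub in subscribers.get(e["service"], [])))

bus.emit("service_changed", {
    "service": "orders", "ephemeral": True, "instances": ["10.0.0.1:8080"],
})
```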

After a brief introduction to the Nacos 1.X architecture and service discovery model, let's analyze several important issues faced by Nacos 1.X.

In short: too many heartbeats, too many invalid queries, slow detection of changes through heartbeat renewal, high connection consumption, and severe idle resource waste.

Because of heartbeat renewal, when the service scale increases, especially when there are a large number of interface-level services such as those of Dubbo, heartbeats and configuration metadata polling become very frequent. This results in high cluster TPS and high consumption of idle system resources.

With heartbeat renewal, an instance is removed and subscribers are notified only after its heartbeat times out. The default timeout is 15s, which means long latency and poor timeliness. If the timeout is shortened, change pushes are triggered frequently during network jitter, which puts a greater burden on both the server and the client.

Since UDP is unreliable, clients need to perform reconciliation queries at regular intervals to ensure that the service lists they cache are correct. When the number of subscribers increases, the cluster QPS grows. However, most service lists do not change frequently, so most of these queries are invalid and waste resources.

The HTTP short connection model produces a large number of connections in the TIME_WAIT state. In this model, a TCP connection is created and destroyed for each client request. When a TCP connection is destroyed, it enters the TIME_WAIT state, and it takes a certain amount of time before the connection is completely released. When the TPS and QPS are high, a large number of TIME_WAIT connections may exist on the server and the client. This results in errors such as "connect time out" or "cannot assign requested address".

The configuration module uses an HTTP short connection blocking model to simulate communication over persistent connections. However, since this is not a real persistent connection model, the request and data context are switched every 30 seconds. Each switch wastes memory and causes frequent GC on the server.
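
The latency of heartbeat-based detection can be seen in a small sketch. The `HeartbeatTable` class and its method names are hypothetical, and time is passed in explicitly so that the example is deterministic. The only value taken from the text is the 15s default timeout.

```python
class HeartbeatTable:
    def __init__(self, timeout_seconds=15.0):
        self.timeout = timeout_seconds
        self.last_beat = {}  # instance id -> time of last heartbeat

    def beat(self, instance_id, now):
        self.last_beat[instance_id] = now

    def expire(self, now):
        """Remove and return instances whose last heartbeat is older than the timeout."""
        expired = [i for i, t in self.last_beat.items() if now - t > self.timeout]
        for instance_id in expired:
            del self.last_beat[instance_id]
        return expired


table = HeartbeatTable(timeout_seconds=15.0)
table.beat("10.0.0.1:8080", now=0.0)
table.beat("10.0.0.2:8080", now=0.0)
table.beat("10.0.0.2:8080", now=10.0)   # only the second instance renews

early = table.expire(now=14.0)   # nothing has timed out yet
late = table.expire(now=16.0)    # the first instance is now stale
```

In this sketch, an instance that stops sending heartbeats at time 0 is still considered healthy at 14s and is only removed once more than 15s have passed. Subscribers cannot learn about the failure any earlier.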
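
A rough sketch of the blocking (long-polling) idea is shown below. It is an in-process analogy, not the Nacos configuration protocol: `ConfigWatcher` and `long_poll` are hypothetical names, and the hold time is shortened in the usage so that the example finishes quickly. The point is that every timeout ends the request, and the client has to start a new one.

```python
import threading


class ConfigWatcher:
    """Holds a request open until the config changes or the hold time elapses."""

    def __init__(self, value):
        self.value = value
        self.changed = threading.Event()

    def update(self, value):
        self.value = value
        self.changed.set()

    def long_poll(self, hold_seconds=30.0):
        # Returns (changed, value). On timeout the caller must issue a new
        # request, which is the repeated context switch described above.
        if self.changed.wait(timeout=hold_seconds):
            self.changed.clear()
            return True, self.value
        return False, self.value


watcher = ConfigWatcher("v1")
timed_out = watcher.long_poll(hold_seconds=0.05)        # no change: returns empty-handed
threading.Timer(0.05, watcher.update, args=("v2",)).start()
notified = watcher.long_poll(hold_seconds=2.0)          # returns as soon as the change lands
```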

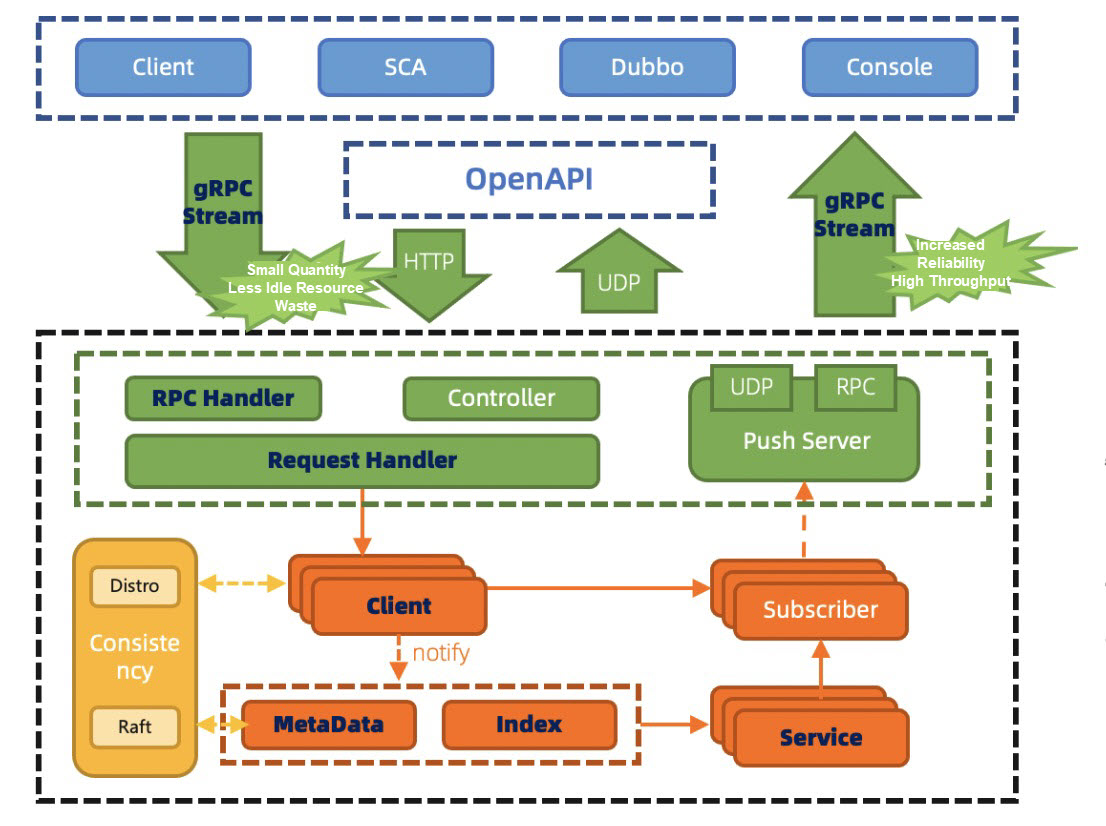

Based on the architecture of Nacos 1.X, Nacos 2.X adds the support for the persistent connection model while retaining the support for core functions of the old client and OpenAPI.

Currently, the communication layer implements persistent connection RPC call and push capabilities through gRPC and RSocket.

A new link layer is added on the server side to convert different types of requests from different clients into functional data structures with the same semantics, so that the business processing logic can be reused. Future functions, such as traffic control and load balancing, will also be handled in the link layer.
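
The role of the link layer can be sketched as a pair of adapters in front of shared business logic. Everything here is an assumption for illustration: the `RegisterInstance` structure, the function names, and the request shapes and default values are hypothetical and do not describe the actual Nacos request formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegisterInstance:
    """Protocol-neutral request handed to the business logic."""
    namespace: str
    group: str
    service: str
    ip: str
    port: int


def from_http(params):
    # HTTP-style request: flat string parameters (names are hypothetical).
    return RegisterInstance(
        namespace=params.get("namespaceId", "public"),
        group=params.get("groupName", "DEFAULT_GROUP"),
        service=params["serviceName"],
        ip=params["ip"],
        port=int(params["port"]),
    )


def from_rpc(message):
    # Persistent-connection RPC-style request: nested payload (shape is hypothetical).
    inst = message["instance"]
    return RegisterInstance(
        namespace=message["namespace"],
        group=message["group"],
        service=message["service"],
        ip=inst["ip"],
        port=inst["port"],
    )


def handle_register(request, store):
    # Shared business logic: unaware of which protocol produced the request.
    key = (request.namespace, request.group, request.service)
    store.setdefault(key, set()).add((request.ip, request.port))


store = {}
handle_register(from_http(
    {"serviceName": "orders", "ip": "10.0.0.1", "port": "8080"}), store)
handle_register(from_rpc(
    {"namespace": "public", "group": "DEFAULT_GROUP", "service": "orders",
     "instance": {"ip": "10.0.0.2", "port": 8080}}), store)
```

Because both adapters produce the same structure, an old HTTP client and a new RPC client end up in the same Service entry without duplicating the registration logic.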

Other architectural layers remain substantially unchanged.

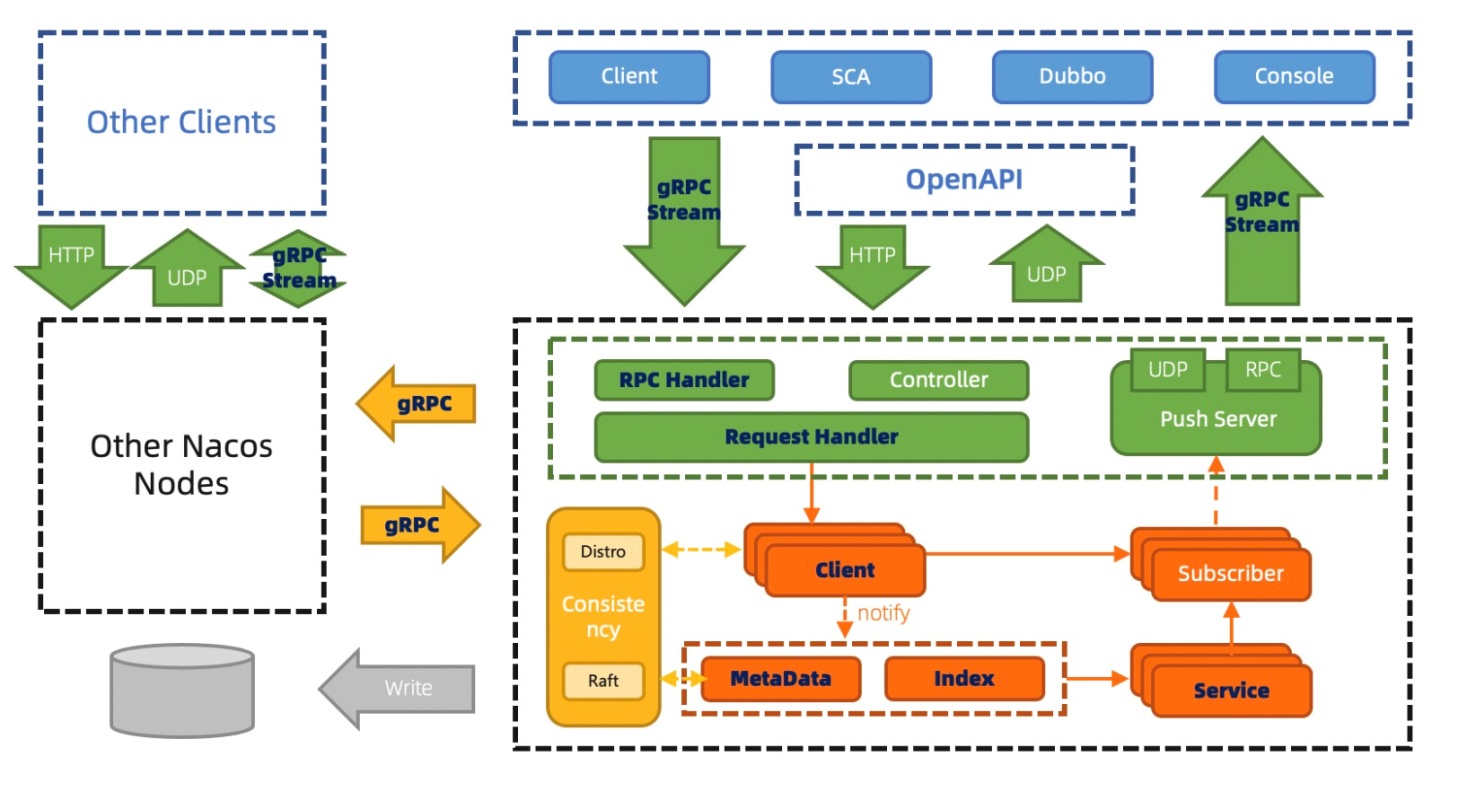

Although Nacos 2.0 has not changed much in terms of the architecture layers, its model details have changed a lot. Let's use the service registration process again to look at the changes in the Nacos 2.0 service model.

Since RPC is used for communication, all requests from a client, whether registrations or subscriptions, go through the same link to the same service node. Previously, with HTTP, each request could land on a different Nacos node. As a result, service discovery data changes from stateless data to stateful data bound to the connection state, and the data model must be modified to adapt to this change. Therefore, a new data structure is abstracted to associate the contents published and subscribed through a link with the client behind it. This structure is temporarily named Client. It does not literally mean the client, but the data related to the client. One link corresponds to one Client.

When a client publishes a service, the services it has published and the subscriptions it holds are updated in the Client object that corresponds to the client link. Then, the event mechanism triggers an update of the index information. The index maps services to client links, which makes it convenient and quick to aggregate the service data that needs to be pushed.

After the index information is updated, the push event is triggered. All Client objects related to the service are aggregated based on the index information. Then, the links of subscribers are filtered from all client links, and the data is pushed back through these links. This completes the main path for publishing a change.

Now look back at data synchronization. The object that is updated when a client publishes a service changes from the original Service object to the Client object, so the content to be synchronized also changes to the Client object. In addition, the communication mode between services is changed to RPC. Only Client objects that are updated directly by a client trigger synchronization.
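
The Client model, the index, and the push step can be sketched together. This is a simplified single-node model under stated assumptions: `Client`, `ClientManager`, the connection ids, and the `pushed` list (which stands in for writing to a persistent connection) are all hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Client:
    """Data bound to one connection: what it published and what it subscribed to."""
    client_id: str
    published: dict = field(default_factory=dict)   # service -> instance address
    subscribed: set = field(default_factory=set)    # service names


class ClientManager:
    def __init__(self):
        self.clients = {}
        self.publishers = defaultdict(set)    # index: service -> client ids
        self.subscribers = defaultdict(set)   # index: service -> client ids
        self.pushed = []                      # (client id, service, instances)

    def client(self, client_id):
        return self.clients.setdefault(client_id, Client(client_id))

    def subscribe(self, client_id, service):
        self.client(client_id).subscribed.add(service)
        self.subscribers[service].add(client_id)

    def publish(self, client_id, service, address):
        self.client(client_id).published[service] = address   # 1. update the Client
        self.publishers[service].add(client_id)                # 2. update the index
        self._push(service)                                    # 3. trigger the push

    def instances(self, service):
        # Aggregate the service view from every Client that published it.
        return sorted(self.clients[c].published[service]
                      for c in self.publishers[service])

    def _push(self, service):
        snapshot = self.instances(service)
        for client_id in sorted(self.subscribers[service]):
            self.pushed.append((client_id, service, snapshot))


manager = ClientManager()
manager.subscribe("conn-c", "orders")
manager.publish("conn-a", "orders", "10.0.0.1:8080")
manager.publish("conn-b", "orders", "10.0.0.2:8080")
```

Note that no Service object holds the instances in this sketch. The service view is assembled on demand from the Client objects found through the index.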

Metadata includes some attributes separated from the Service object and Instance object in Nacos 1.X, such as the metadata labels of the Service and the online and offline status, weight, and metadata labels of the instance. The metadata can be modified separately through the API and takes effect when the data is aggregated. The metadata is separated from the basic data because the basic data, such as the IP address, port, and service name, should not be modified once published and should stay consistent with the information provided at publication time. However, other data, such as the online and offline status and weights, is usually adjusted dynamically at runtime. Therefore, it is more reasonable to split the two kinds of data into two different workflows.

The previous section briefly introduced the architecture of Nacos 2.0 and how the new model works. Next, let's analyze the advantages and disadvantages of the changes.
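
The split between basic data and metadata can be sketched as two stores that are merged only at read time. The store layout, the `DEFAULTS` values, and the function names are hypothetical and are only meant to show the idea of separate workflows.

```python
published = {  # basic data: fixed once published
    "10.0.0.1:8080": {"ip": "10.0.0.1", "port": 8080},
    "10.0.0.2:8080": {"ip": "10.0.0.2", "port": 8080},
}
metadata = {}  # instance address -> dynamically adjusted attributes

# Assumed defaults for instances without explicit metadata.
DEFAULTS = {"enabled": True, "weight": 1.0}


def update_metadata(address, **changes):
    # Changes go to the metadata store only; the published data is untouched.
    metadata.setdefault(address, {}).update(changes)


def aggregate():
    # Merge defaults, then basic data, then metadata overrides, at read time.
    return [{**DEFAULTS, **base, **metadata.get(addr, {})}
            for addr, base in sorted(published.items())]


update_metadata("10.0.0.2:8080", enabled=False, weight=0.5)
view = aggregate()
```

Taking an instance offline or changing its weight never rewrites what the client originally published. The change only shows up in the aggregated view.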

The new architecture addresses issues such as the TIME_WAIT problem. However, there is no silver bullet, and the new architecture also introduces some new problems.

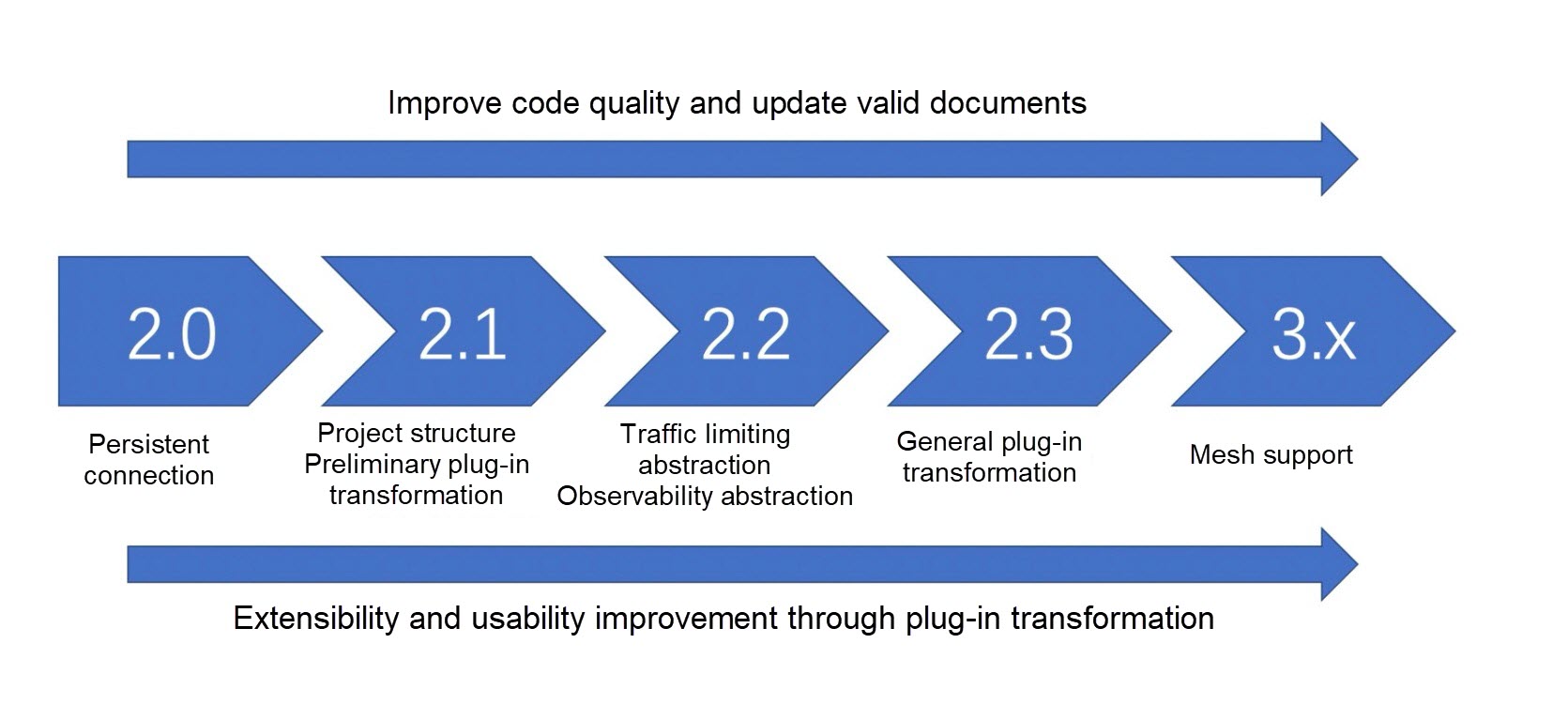

Now, let's talk about the future plans of Nacos 2.X, including documentation, quality improvement, and the feature roadmap.

Nacos 1.X does not do very well in terms of documentation and quality. The documentation is thin and covers a limited range of topics. It is somewhat out of step with the released versions and is not updated in time. There is no description of the more difficult technical content, which makes it hard to contribute. Code quality and test quality are not very high either. Although code style is verified through checkstyle and collaborative community review has been enabled, this is far from enough. Nacos 2.X will update and refine the usage documentation on the official website step by step. It will also analyze the technical details through e-books and present technical solutions on GitHub to facilitate discussions and contributions. In addition, a lot of code refactoring and unit test (UT) and integration test (IT) governance work will be carried out. In the future, the benchmark will be open-sourced to facilitate stress testing for open-source users.

In terms of the feature roadmap, Nacos 2.X will first restructure the project to support plug-ins. It will also improve the Nacos 2.0 architecture in some aspects, such as load balancing and observability.

You are welcome to submit issues on the Nacos GitHub repository, join discussions on PRs, and make contributions. You can also join the Nacos community to participate in community discussions.

Yang Yi (Xiweng) is a PMC member of Nacos, mainly involved in the service discovery module as well as kernel refactoring and improvement. As an Apache ShardingSphere PMC member, Yang is mainly responsible for the following modules: route module, distributed transaction, data synchronization, and elastic scaling.
